We can now move on to building the DLL that we will import into Unity, and to writing the wrapper code that handles the interface between OpenCV and Unity. With that in place, we can create the scripts for our project:

- Create a folder. I will call mine ConfigureOpenCV:

- Create a new empty C++ project in Visual Studio. I will call mine ConfigureOpenCV and set its location to the ConfigureOpenCV folder:

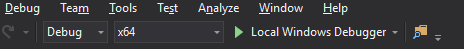

- Set the platform to be x64 in Visual Studio:

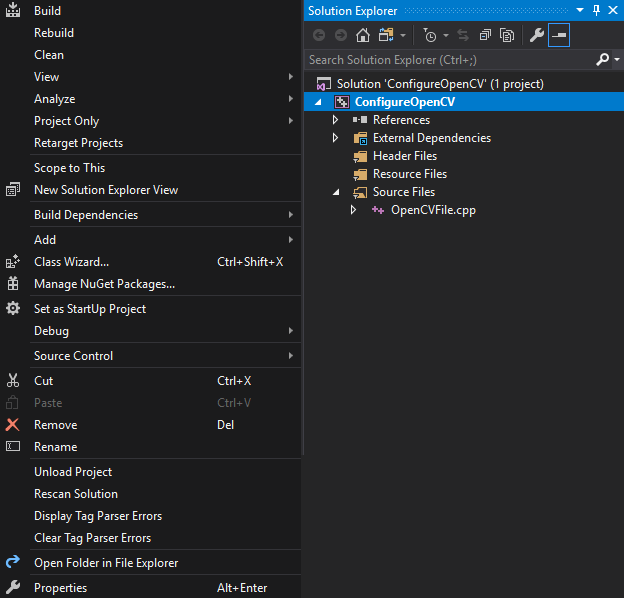

- Right-click on the project in Solution Explorer and select Properties:

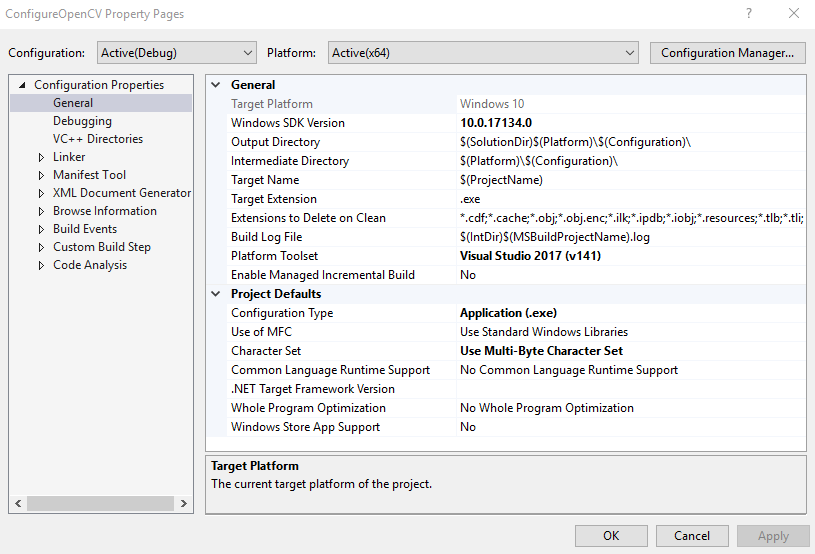

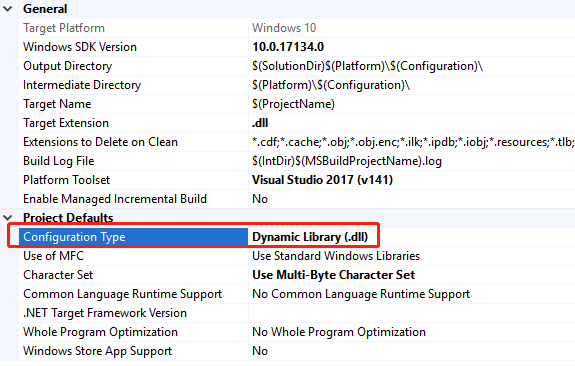

- This will open our properties window:

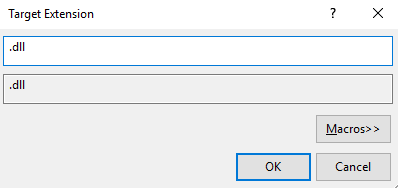

- The first thing we need to do is change Target Extension in the General tab from .exe to .dll:

- We need to change the Configuration Type from Application (.exe) to Dynamic Library (.dll):

- Over in VC++ Directories, add the OpenCV include folder, located through our OPENCV_DIR environment variable, to Include Directories:

- Over in Linker’s General Tab, add $(OPENCV_DIR)\lib\Debug to the Additional Library Directories option:

- Finally, in the Linker’s Input tab, we need to add a few items to the Additional Dependencies option. Those items will be the following:

  - opencv_core310.lib (or opencv_world330.lib, depending on your OpenCV version)
  - opencv_highgui310.lib
  - opencv_objdetect310.lib
  - opencv_videoio310.lib
  - opencv_imgproc310.lib

Figure shows the location of Additional Dependencies in the Linker's Input tab with opencv_core added.

- Now, we can create a new CPP file:

We will now incorporate the headers and namespaces we absolutely need here:

#include "opencv2/objdetect.hpp"

#include "opencv2/highgui.hpp"

#include "opencv2/imgproc.hpp"

#include <iostream>

#include <stdio.h>

using namespace std;

using namespace cv;

- Declare a struct that will be used to pass data from C++ to Mono:

struct Circle

{

Circle(int x, int y, int radius) : X(x), Y(y), Radius(radius) {}

int X, Y, Radius;

};
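Because Circle crosses the native/managed boundary, its memory layout matters. The following is a minimal sketch of compile-time checks, assuming that the managed side will declare a matching struct of three 32-bit integers in the same order (that declaration is not shown in this section, so treat it as an assumption):

```cpp
#include <type_traits>

// Same struct as above, repeated so that the checks compile on their own.
struct Circle
{
    Circle(int x, int y, int radius) : X(x), Y(y), Radius(radius) {}
    int X, Y, Radius;
};

// Standard layout: members are stored in declaration order, as in a C struct.
static_assert(std::is_standard_layout<Circle>::value,
              "Circle must have a C-compatible layout");

// Trivially copyable: the raw bytes can be copied across the boundary as-is.
static_assert(std::is_trivially_copyable<Circle>::value,
              "Circle must be safe to copy byte-for-byte");

// No padding: the struct holds three ints and nothing else.
static_assert(sizeof(Circle) == 3 * sizeof(int),
              "Circle must not contain padding");
```

If a later change adds a virtual function or a member of a different size, the build fails instead of silently passing corrupted data to the managed side.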

- CascadeClassifier is a class used for object detection:

CascadeClassifier _faceCascade;

- Create a string that will serve as the name of the window:

String _windowName = "OpenCV";

- VideoCapture is a class used to open a video file, a capture device, or an IP video stream:

VideoCapture _capture;

- Create an integer value to store the scale:

int _scale = 1;

- As a refresher, extern "C" prevents C++ name mangling, so the function is exported under its plain name. Our first method is Init, which handles initialization:

extern "C" int __declspec(dllexport) __stdcall Init(int& outCameraWidth, int& outCameraHeight)

{

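- We will use an if statement to load the LBP face cascade file, lbpcascade_frontalface.xml, which ships with OpenCV. Because the path is relative, the file must be in the working directory of the process that loads the DLL. If it cannot be loaded, the method exits with a return code of -1: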

if (!_faceCascade.load("lbpcascade_frontalface.xml"))

return -1;

- Now, we will open the video capture stream:

_capture.open(0);

- If the video stream is not opened, then we will exit with a return code of -2:

if (!_capture.isOpened())

return -2;

- We will read the camera's frame width into the first output parameter:

outCameraWidth = _capture.get(CAP_PROP_FRAME_WIDTH);

- And we also need to read the camera's frame height into the second output parameter:

outCameraHeight = _capture.get(CAP_PROP_FRAME_HEIGHT);

return 0;

}
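Init reports its outcome through an integer return code, so the caller has to know what each value means. As a minimal sketch, a helper like the following could turn those codes into log messages. The function name DescribeInitResult and the message strings are hypothetical and are not part of the plugin; only the codes 0, -1, and -2 come from the method above:

```cpp
#include <string>

// Hypothetical helper: maps the return codes used by Init to readable text.
std::string DescribeInitResult(int code)
{
    switch (code)
    {
    case 0:
        return "Init succeeded";
    case -1:
        return "Could not load lbpcascade_frontalface.xml";
    case -2:
        return "Could not open the video capture stream";
    default:
        // Init as written above never returns anything else.
        return "Unknown result code";
    }
}
```

Keeping the mapping in one place means the codes only need to be documented once if more failure cases are added later.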

- Now, we need a method that closes the capture stream and releases the video capture device:

extern "C" void __declspec(dllexport) __stdcall Close()

{

_capture.release();

}
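The exported functions repeat the same combination of extern "C", __declspec(dllexport), and __stdcall, the last two of which are Microsoft-specific keywords. One optional way to keep those declarations short is to hide them behind macros. This is a sketch only: the macro names PLUGIN_EXPORT and PLUGIN_CALL and the stub functions are hypothetical, and a global flag stands in for the real VideoCapture object so that the example builds without OpenCV:

```cpp
// Hypothetical macros that expand to the keywords used in this section on
// Windows, and to the closest equivalent on other compilers.
#if defined(_WIN32)
    #define PLUGIN_EXPORT __declspec(dllexport)
    #define PLUGIN_CALL __stdcall
#else
    #define PLUGIN_EXPORT __attribute__((visibility("default")))
    #define PLUGIN_CALL
#endif

// Stand-in for the state of the capture stream.
static bool g_isOpen = false;

// Stub with the same shape as Init: opens the "stream" and returns 0.
extern "C" PLUGIN_EXPORT int PLUGIN_CALL OpenStub()
{
    g_isOpen = true;
    return 0;
}

// Stub with the same shape as Close: releases the "stream".
extern "C" PLUGIN_EXPORT void PLUGIN_CALL CloseStub()
{
    g_isOpen = false;
}

// Reports whether the "stream" is currently open (1) or closed (0).
extern "C" PLUGIN_EXPORT int PLUGIN_CALL IsOpenStub()
{
    return g_isOpen ? 1 : 0;
}
```

The walkthrough itself keeps the keywords written out in full, which works just as well for a Windows-only build.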

- The next step is to create a method that sets the video scale:

extern "C" void __declspec(dllexport) __stdcall SetScale(int scale)

{

_scale = scale;

}
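The scale is later used as a divisor when the frame is resized, so a value of zero would cause a division by zero and a negative value would produce an invalid size. A minimal sketch of a guarded setter follows. The names SetScaleChecked, GetScale, and g_scale are hypothetical, and ignoring bad values (rather than clamping them or returning an error) is just one possible policy:

```cpp
// Stand-in for the plugin's global scale factor.
static int g_scale = 1;

// Hypothetical variant of SetScale that ignores values below 1,
// so the stored scale is always safe to divide by.
void SetScaleChecked(int scale)
{
    if (scale < 1)
        return; // keep the previous, valid scale

    g_scale = scale;
}

// Returns the scale currently in effect.
int GetScale()
{
    return g_scale;
}
```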

- Next up, we will create a method that allows us to detect an object:

extern "C" void __declspec(dllexport) __stdcall Detect(Circle* outFaces, int maxOutFacesCount, int& outDetectedFacesCount)

{

Mat frame;

_capture >> frame;

- If the frame is empty, we exit from the method to guard against errors in the steps that follow:

if (frame.empty())

return;

- Create a vector called faces to hold the rectangles of the detected faces:

std::vector<Rect> faces;

- We will create a Mat, which is OpenCV's matrix class, named grayscaleFrame to hold the grayscale image:

Mat grayscaleFrame;

- We then need to convert the frame to grayscale from the BGR colorspace, which is the channel order OpenCV uses for captured frames, for proper cascade detection:

cvtColor(frame, grayscaleFrame, COLOR_BGR2GRAY);

Mat resizedGray;

- The next step is to scale the image down for better performance, and then equalize its histogram to improve contrast:

resize(grayscaleFrame, resizedGray, Size(frame.cols / _scale, frame.rows / _scale));

equalizeHist(resizedGray, resizedGray);

- Next up, we will detect the faces:

_faceCascade.detectMultiScale(resizedGray, faces);

- We will now create a for loop that draws an ellipse around each detected face on the full-size frame:

for (size_t i = 0; i < faces.size(); i++)

{

Point center(_scale * (faces[i].x + faces[i].width / 2), _scale * (faces[i].y + faces[i].height / 2));

ellipse(frame, center, Size(_scale * faces[i].width / 2, _scale * faces[i].height / 2), 0, 0, 360, Scalar(0, 0, 255), 4, 8, 0);

- Now, we will send this information to the application. Note that these values are in the coordinates of the downscaled image:

outFaces[i] = Circle(faces[i].x, faces[i].y, faces[i].width / 2);

outDetectedFacesCount++;

- Since outFaces is a fixed-size array, we need to make sure that we don't write past its end. To do this, we break out of the loop as soon as the detected faces count equals the maximum faces count we have allocated:

if (outDetectedFacesCount == maxOutFacesCount)

break;

}

- The last thing we need to do is display the debug output:

imshow(_windowName, frame);

}
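Two details of the detection loop are easy to get wrong: it increments outDetectedFacesCount without resetting it, so it relies on the caller passing in zero, and it maps coordinates from the downscaled image back to the full frame only for drawing. The following sketch isolates both pieces of logic so they can be checked without a camera. Box is a hypothetical stand-in for cv::Rect, and CopyFaces, FullFrameCenterX, and FullFrameCenterY are illustrative names that are not part of the plugin:

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Rect, so the sketch builds without OpenCV.
struct Box
{
    int x, y, width, height;
};

// Same struct that is passed to the application.
struct Circle
{
    Circle(int x, int y, int radius) : X(x), Y(y), Radius(radius) {}
    int X, Y, Radius;
};

// Copies at most maxOut detections into outFaces and returns how many were
// written. The capacity is checked before each write, and the count always
// starts at zero, so the result does not depend on what the caller passed in.
int CopyFaces(const std::vector<Box>& faces, Circle* outFaces, int maxOut)
{
    int written = 0;
    for (std::size_t i = 0; i < faces.size() && written < maxOut; i++)
    {
        outFaces[written] = Circle(faces[i].x, faces[i].y, faces[i].width / 2);
        written++;
    }
    return written;
}

// Maps the horizontal center of a box found in the downscaled image back to
// full-frame pixels, using the same arithmetic as the ellipse center above.
int FullFrameCenterX(const Box& face, int scale)
{
    return scale * (face.x + face.width / 2);
}

// Vertical counterpart of FullFrameCenterX.
int FullFrameCenterY(const Box& face, int scale)
{
    return scale * (face.y + face.height / 2);
}
```

For example, a box at (10, 20) with a size of 40 by 40 in an image that was downscaled by a factor of 2 has its center at (60, 80) in the full frame.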

- Now, build the DLL file, and we can begin to work in Unity.