THE IDOL WITH FEET OF CLAY

In 1962, Thomas Kuhn of the University of Chicago published The Structure of Scientific Revolutions. This book has had a profound influence on the way in which science is viewed by both philosophers and practitioners. Kuhn noted that reality is exceedingly complicated and can never be completely described by an organized scientific model. He proposed that science tends to produce a model of reality that appears to fit the data available and is useful for predicting the results of new experiments. Since no model can be completely true, the accumulation of data begins to require modifications of the model to correct it for new discoveries. The model becomes more and more complicated, with special exceptions and intuitively implausible extensions. Eventually, the model can no longer serve a useful purpose. At that point, original thinkers will emerge with an entirely different model, creating a revolution in science.

The statistical revolution was an example of this exchange of models. In the deterministic view of nineteenth-century science, Newtonian physics had effectively described the motion of planets, moons, asteroids, and comets—all of it based on a few well-defined laws of motion and gravity. Some success had been achieved in finding laws of chemistry, and Darwin’s law of natural selection appeared to provide a useful start to understanding evolution. Attempts were even made to extend the search for scientific laws into the realms of sociology, political science, and psychology. It was believed at the time that the major problem with finding these laws lay in the imprecision of the measurements.

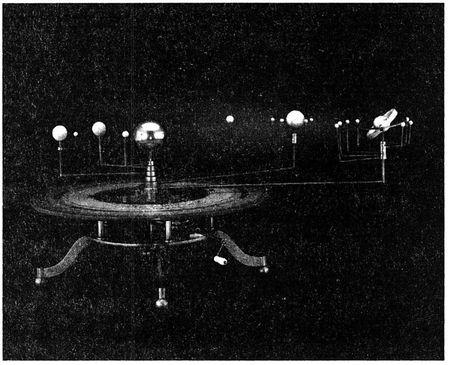

An orrery, a clockwork mechanism designed to show the movement of the planets about the Sun

The early nineteenth-century mathematician Pierre Simon Laplace developed the idea that astronomical measurements involve slight errors, possibly due to atmospheric conditions or to the human fallibility of the observers. He opened the door to the statistical revolution by proposing that these errors would have a probability distribution. To use Thomas Kuhn’s view, this was a modification of the clockwork universe made necessary by new data. The nineteenth-century Belgian polymath Lambert Adolphe Jacques Quételet anticipated the statistical revolution by proposing that the laws of human behavior were probabilistic in nature. He did not have Karl Pearson’s multiple parameter approach and was unaware of the need for optimum estimation methods, and his models were exceedingly naive.

Eventually, the deterministic approach to science collapsed because the differences between what the models predicted and what was actually observed grew greater with more precise measurements. Instead of eliminating the errors that Laplace thought were interfering with the ability to observe the true motion of the planets, more precise measurements showed more and more variation. At this point, science was ready for Karl Pearson and his distributions with parameters.

The preceding chapters of this book have shown how Pearson’s statistical revolution has come to dominate all of modern science. In spite of the apparent determinism of molecular biology, where genes are found that cause cells to generate specific proteins, the actual data from this science are filled with randomness and the genes are, in fact, parameters of the distribution of those results. The effects of modern drugs on bodily functions, where doses of 1 or 2 milligrams cause profound changes in blood pressure or psychic neuroses, seem to be exact. But the pharmacological studies that prove these effects are designed and analyzed in terms of probability distributions, and the effects are parameters of those distributions.

Similarly, the statistical methods of econometrics are used to model the economic activity of a nation or of a firm. The subatomic particles we confidently think of as electrons and protons are described in quantum mechanics as probability distributions. Sociologists derive weighted sums of averages taken across populations to describe the interactions of individuals—but only in terms of probability distributions. In many of these sciences, the use of statistical models is so much a part of their methodology that the parameters of the distributions are spoken of as if they were real, measurable things. The uncertain conglomeration of shifting and changing measurements that are the starting point of these sciences is submerged in the calculations, and the conclusions are stated in terms of parameters that can never be directly observed.

So ingrained in modern science is the statistical revolution that the statisticians have lost control of the process. The probability calculations of the molecular geneticists have been developed independently of the mathematical statistical literature. The new discipline of information science has emerged from the ability of the computer to accumulate large amounts of data and the need to make sense out of those huge libraries of information. Articles in the new journals of information science seldom refer to the work of the mathematical statisticians, and many of the techniques of analysis that were examined years ago in Biometrika or the Annals of Mathematical Statistics are being rediscovered. The applications of statistical models to questions of public policy have spawned a new discipline called “risk analysis,” and the new journals of risk analysis also tend to ignore the work of the mathematical statisticians.

Scientific journals in almost all disciplines now require that the tables of results contain some measure of the statistical uncertainty associated with the conclusions, and standard methods of statistical analysis are taught in universities as part of the graduate courses in these sciences, usually without involving the statistics departments that may exist at these same universities.

In the more than a hundred years since Karl Pearson’s discovery of the skew distributions, the statistical revolution has not only been extended to most of science, but many of its ideas have spread into the general culture. When the television news anchor announces that a medical study has shown that passive smoking “doubles the risk of death” among nonsmokers, almost everyone who listens thinks he or she knows what that means. When a public opinion poll is used to declare that 65 percent of the public think the president is doing a good job, plus or minus 3 percent, most of us think we understand both the 65 percent and the 3 percent. When the weatherman predicts a 95 percent chance of rain tomorrow, most of us will take along an umbrella.

The statistical revolution has had an even more subtle influence on popular thought and culture than just on the way we bandy probabilities and proportions about as if we knew what they meant. We accept the conclusions of scientific investigations based upon estimates of parameters, even if none of the actual measurements agrees exactly with those conclusions. We are willing to make public policy and organize our personal plans using averages of masses of data. We take for granted that assembling data on deaths and births is not only a proper procedure but a necessary one, and we have no fear of angering the gods by counting people. On the level of language, we use the words correlation and correlated as if they mean something, and as if we think we know their meaning.

This book has been an attempt to explain to the nonmathematician something about this revolution. I have tried to describe the essential ideas behind the revolution, how it came to be adopted in a number of different areas of science, how it eventually came to dominate almost all of science. I have tried to interpret some of the mathematical models with words and examples that can be understood without having to climb the heights of abstract mathematical symbolism.

The world “out there” is an exceedingly complicated mass of sensations, events, and turmoil. With Thomas Kuhn, I do not believe that the human mind is capable of organizing a structure of ideas that can come even close to describing what is really out there. Any attempt to do so contains fundamental faults. Eventually, those faults will become so obvious that the scientific model must be continuously modified and eventually discarded in favor of a more subtle one. We can expect the statistical revolution will eventually run its course and be replaced by something else.

It is only fair that I end this book with some discussion of the philosophical problems that have emerged as statistical methods have been extended into more and more areas of human endeavor. What follows will be an adventure in philosophy. The reader may wonder what philosophy has to do with science and real life. My answer is that philosophy is not some arcane academic exercise done by strange people called philosophers. Philosophy looks at the underlying assumptions behind our day-to-day cultural ideas and activities. Our worldview, which we learn from our culture, is governed by subtle assumptions. Few of us are even aware of them. The study of philosophy allows us to uncover these assumptions and examine their validity.

I once taught a course in the mathematics department of Connecticut College. The course had a formal name, but the department members referred to it as “math for poets.” It was designed as a one-semester course to acquaint liberal arts majors with the essential ideas of mathematics. Early in the semester, I introduced the students to the Ars Magna of Girolamo Cardano, a sixteenth-century Italian mathematician. The Ars Magna contains the first published description of the emerging methods of algebra. Echoing his times, Cardano writes in his introduction that this algebra is not new. He is no ignorant fool, he implies. He is aware that ever since the fall of man, knowledge has been decreasing and that Aristotle knew far more than anyone living at Cardano’s time. He is aware that there can be no new knowledge. In his ignorance, however, he has been unable to find reference to a particular idea in Aristotle, and so he presents his readers with this idea, which appears to be new. He is sure that some more knowledgeable reader will locate where, among the writings of the ancients, this idea that appears to be new can actually be found.

The students in my class, raised in a cultural milieu that not only believes new things can be found but that actually encourages innovation, were shocked. What a stupid thing to write! I pointed out to them that in the sixteenth century, the worldview of Europeans was constrained by fundamental philosophical assumptions. An important part of their worldview was the idea of the fall of man and the subsequent continual deterioration of the world—of morals, of knowledge, of industry, of all things. This was known to be true, so true that it was seldom even articulated.

I asked the students what underlying assumptions of their worldview might possibly seem ridiculous to students 500 years in the future. They could think of none.

As the surface ideas of the statistical revolution spread through modern culture, as more and more people believe its truths without thinking about its underlying assumptions, let us consider three philosophical problems with the statistical view of the universe:

1. Can statistical models be used to make decisions?

2. What is the meaning of probability when applied to real life?

3. Do people really understand probability?

L. Jonathan Cohen of Oxford University has been a trenchant critic of what he calls the “Pascalian” view, by which he means the use of statistical distributions to describe reality. In his 1989 book, An Introduction to the Philosophy of Induction and Probability, he proposes the paradox of the lottery, which he attributes to Henry Kyburg of Wesleyan University in Middletown, Connecticut.

Suppose we accept the ideas of hypothesis or significance testing. We agree that we can decide to reject a hypothesis about reality if the probability associated with that hypothesis is very small. To be specific, let’s set 0.0001 as a very small probability. Let’s now organize a fair lottery with 10,000 numbered tickets. Consider the hypothesis that ticket number 1 will win the lottery. The probability of that is 0.0001. We reject that hypothesis. Consider the hypothesis that ticket number 2 will win the lottery. We can also reject that hypothesis. We can reject similar hypotheses for any specific numbered ticket. Under the rules of logic, if A is not true, and B is not true, and C is not true, then (A or B or C) is not true. That is, under the rules of logic, if every specific ticket will not win the lottery, then no ticket will win the lottery.
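The arithmetic behind the paradox can be checked directly. The short Python sketch below assumes the rejection rule is “reject any hypothesis whose probability is at most 0.0001” (the text only says “very small”; the “at most” is our reading, since each ticket’s probability is exactly 0.0001). It rejects every single-ticket hypothesis one at a time, then computes the probability of their disjunction.

```python
from fractions import Fraction

# Assumed rejection rule: reject a hypothesis whose probability is at most 0.0001.
THRESHOLD = Fraction(1, 10_000)
N_TICKETS = 10_000

def prob_ticket_wins(ticket: int) -> Fraction:
    """In a fair lottery every ticket has the same chance of winning."""
    return Fraction(1, N_TICKETS)

# Test each hypothesis "ticket k wins" on its own.
rejected = [k for k in range(1, N_TICKETS + 1)
            if prob_ticket_wins(k) <= THRESHOLD]

# "Ticket 1 wins or ticket 2 wins or ..." covers mutually exclusive,
# exhaustive outcomes, so its probability is the sum of the parts.
prob_some_ticket_wins = sum(prob_ticket_wins(k) for k in range(1, N_TICKETS + 1))

print(len(rejected))          # every one of the 10,000 hypotheses is rejected
print(prob_some_ticket_wins)  # yet the disjunction has probability 1
```

Each test passes individually, but logic would then demand rejecting a disjunction that is certain to be true.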

In an earlier book, The Probable and the Provable, L. J. Cohen proposed a variant of this paradox based on common legal practice. In the common law, a plaintiff in a civil suit wins if his claim seems true on the basis of the “preponderance” of the evidence. This has been accepted by the courts to mean that the probability of the plaintiff’s claim is greater than 50 percent. Cohen proposes the paradox of the gate-crashers. Suppose there is a rock concert in a hall with 1,000 seats. The promoter sells tickets for 499 of the seats, but when the concert starts, all 1,000 seats are filled. Under English common law, the promoter has the right to collect from each of the 1,000 persons at the concert, since the probability that any one of them is a gate-crasher is 50.1 percent. Thus, the promoter will collect money from 1,499 patrons for a hall that holds only 1,000.
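The gate-crasher arithmetic can be written out with exact fractions. This is a minimal sketch: the variable names are ours, and the decision rule is the text’s reading of “preponderance of the evidence” as a probability strictly greater than one half.

```python
from fractions import Fraction

SEATS = 1_000
TICKETS_SOLD = 499

# Every seat is filled, so whoever lacks a ticket is a gate-crasher.
gate_crashers = SEATS - TICKETS_SOLD
p_gate_crasher = Fraction(gate_crashers, SEATS)

# Preponderance of the evidence: the claim wins if its probability exceeds 1/2.
claim_wins_against_each = p_gate_crasher > Fraction(1, 2)

# The promoter was already paid for the tickets sold, and then wins a
# separate claim against every one of the people in the hall.
payments_collected = TICKETS_SOLD + (SEATS if claim_wins_against_each else 0)

print(float(p_gate_crasher))  # probability that a given attendee is a gate-crasher
print(payments_collected)     # payments for a hall that holds only 1,000
```

Each individual judgment satisfies the legal standard; only the total exposes the absurdity.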

What both paradoxes show is that decisions based on probabilistic arguments are not logical decisions. Logic and probabilistic arguments are incompatible. R. A. Fisher justified inductive reasoning in science by appealing to significance tests based on well-designed experiments. Cohen’s paradoxes suggest that such inductive reasoning is illogical. Jerry Cornfield justified the finding that smoking causes lung cancer by appealing to a piling up of evidence, where study after study shows results that are highly improbable unless you assume that smoking is the cause of the cancer. Is it illogical to believe that smoking causes cancer?

This lack of fit between logic and statistically based decisions is not something that can be accounted for by finding a faulty assumption in Cohen’s paradoxes. It lies at the heart of what is meant by logic. (Cohen proposes that probabilistic models be replaced by a sophisticated version of mathematical logic known as “modal logic,” but I think this solution introduces more problems than it solves.) In logic, there is a clear difference between a proposition that is true and one that is false. But probability introduces the idea that some propositions are probably or almost true. That little bit of resulting unsureness blocks our ability to apply the cold exactness of material implication in dealing with cause and effect. One of the solutions proposed for this problem in clinical research is to look upon each clinical study as providing some information about the effect of a given treatment. The value of that information can be determined by a statistical analysis of the study but also by the quality of the study. This extra measure, the quality of the study, is used to determine which studies will dominate in the conclusions. The concept of quality of a study is a vague one and not easily calculated. The paradox remains, eating at the heart of statistical methods. Will this worm of inconsistency require a new revolution in the twenty-first century?

Andrei Kolmogorov established the mathematical meaning of probability: Probability is a measure of sets in an abstract space of events. All the mathematical properties of probability can be derived from this definition. When we wish to apply probability to real life, we need to identify that abstract space of events for the particular problem at hand. When the weather forecaster says that the probability of rain tomorrow is 95 percent, what is the set of abstract events being measured? Is it the set of all people who will go outside tomorrow, 95 percent of whom will get wet? Is it the set of all possible moments in time, 95 percent of which will find me getting wet? Is it the set of all one-inch-square pieces of land in a given region, 95 percent of which will get wet? Of course, it is none of those. What is it, then?
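For reference, Kolmogorov’s definition can be stated compactly. In the usual modern notation (the symbols are standard textbook ones, not taken from this book), a probability P assigns a number to each event A, where events are subsets of a space Ω, subject to three axioms:

```latex
P(A) \ge 0 \quad \text{for every event } A, \qquad
P(\Omega) = 1, \qquad
P\Big(\bigcup_{i=1}^{\infty} A_i\Big) = \sum_{i=1}^{\infty} P(A_i)
\ \text{ for pairwise disjoint events } A_1, A_2, \dots
```

The forecaster’s 95 percent is a value of P; the axioms say nothing about what Ω and its events are for tomorrow’s weather, which is exactly the question posed above.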

Karl Pearson, coming before Kolmogorov, believed that probability distributions were observable by just collecting a lot of data. We have seen the problems with that approach.

William S. Gosset attempted to describe the space of events for a designed experiment. He said it was the set of all possible outcomes of that experiment. This may be intellectually satisfying, but it is useless. It is necessary to describe the probability distribution of outcomes of the experiment in sufficient exactitude so we can calculate the probabilities needed to do statistical analysis. How does one derive a particular probability distribution from the vague idea of the set of all possible outcomes?

R. A. Fisher first agreed with Gosset, but then he developed a much better definition. In his experimental designs, treatments are assigned to units of experimentation at random. If we wish to compare two treatments for hardening of the arteries in obese rats, we randomly assign treatment A to some rats and treatment B to the rest. The study is run, and we observe the results. Suppose that both treatments have the same underlying effect. Since the animals were assigned to treatment at random, any other assignment would have produced similar results. The random labels of treatment are irrelevant tags that can be switched among animals—as long as the treatments have the same effect. Thus, for Fisher, the space of events is the set of all possible random assignments that could have been made. This is a finite set of events, all of them equally probable. It is possible to compute the probability distribution of the outcome under the null hypothesis that the treatments have the same effect. This is called the “permutation test.” When Fisher proposed it, the counting of all possible random assignments was impossible. Fisher proved that his formulas for analysis of variance provided good approximations to the correct permutation test.

That was before the day of the high-speed computer. Now it is possible to run permutation tests (the computer is tireless when it comes to doing simple arithmetic), and Fisher’s formulas for analysis of variance are not needed. Nor are many of the clever theorems of mathematical statistics that were proved over the years. All significance tests can be run with permutation tests on the computer, as long as the data result from a randomized controlled experiment.
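Fisher’s space of events can be enumerated in a few lines of Python. The sketch below is an illustration, not Fisher’s own procedure: it assumes the absolute difference in group means as the test statistic (a two-sided test) and uses made-up artery-hardening scores for eight hypothetical rats. It lists every way the four “treatment A” labels could have been assigned and asks how often a difference at least as large as the observed one arises.

```python
from itertools import combinations
from statistics import mean

def permutation_test(group_a, group_b):
    """Exact two-sided permutation test for a difference in means.

    Under the null hypothesis the treatment labels are interchangeable,
    so every possible random assignment is equally likely.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))

    count_extreme = 0
    total = 0
    # Enumerate every assignment of n_a of the units to treatment A.
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in idx]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        if abs(mean(a) - mean(b)) >= observed - 1e-12:  # float tolerance
            count_extreme += 1
        total += 1
    return count_extreme / total, total

# Hypothetical scores (higher = more hardening); not real data.
treatment_a = [3.1, 2.8, 3.5, 3.0]
treatment_b = [2.2, 2.5, 2.0, 2.6]

p_value, n_assignments = permutation_test(treatment_a, treatment_b)
print(n_assignments, p_value)
```

With eight rats there are only 70 possible assignments, and here only the observed split and its mirror image are as extreme, so the p-value is 2/70. For large experiments one typically samples many random reassignments instead of listing them all.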

When a significance test is applied to observational data, a permutation test is not possible, because there was no random assignment whose alternatives could be enumerated. This is a major reason Fisher objected to the studies of smoking and health. The authors were using statistical significance tests to prove their case. To Fisher, statistical significance tests are inappropriate unless they are run in conjunction with randomized experiments. Discrimination cases in American courts are routinely decided on the basis of statistical significance tests. The U.S. Supreme Court has ruled that this is an acceptable way of determining if there was a disparate impact due to sexual or racial discrimination. Fisher would have objected loudly. In the late 1980s, the U.S. National Academy of Sciences sponsored a study of the use of statistical methods as evidence in courts. Chaired by Stephen Fienberg of Carnegie Mellon University and Samuel Krislov of the University of Minnesota, the study committee issued its report in 1988. Many of the papers included in that report criticized the use of hypothesis tests in discrimination cases, with arguments similar to those used by Fisher when he objected to the proof that smoking caused cancer. If the Supreme Court wants to approve significance tests in litigation, it should identify the space of events that generate the probabilities.

A second solution to the problem of finding Kolmogorov’s space of events occurs in sample survey theory. When we wish to take a random sample of a population to determine something about it, we identify exactly the population of people to be examined, establish a method of selection, and sample at random according to that method. There is uncertainty in the conclusions, and we can apply statistical methods to quantify that uncertainty.

The uncertainty is due to the fact that we are dealing with a sample of the populace. The true values of the universe being examined, such as the true percentage of American voters who approve of the president’s policies, are fixed. They are just not known. The space of events that enables us to use statistical methods is the set of all possible random samples that might have been chosen. Again, this is a finite set, and its probability distribution can be calculated. The real-life meaning of probability is well established for sample surveys.
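The finite space of samples can be listed outright for a toy case. The sketch below assumes a hypothetical population of ten voters, six of whom approve, and simple random sampling of four voters without replacement, so that every subset of four is equally likely. Enumerating all of them gives the exact distribution of the sample proportion.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations

# Hypothetical population: 1 = approves, 0 = does not (true proportion 6/10).
population = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
n = 4

# The space of events: every possible simple random sample of size n.
samples = list(combinations(range(len(population)), n))
estimates = [Fraction(sum(population[i] for i in s), n) for s in samples]

# Each sample is equally likely, so this distribution is exact.
distribution = Counter(estimates)
for value in sorted(distribution):
    print(value, Fraction(distribution[value], len(samples)))

# The average over all possible samples recovers the true proportion.
mean_estimate = sum(estimates) / len(samples)
print("mean of estimates:", mean_estimate)
```

Nothing here depends on a model of the voters themselves: the only randomness is the sampler’s choice, which is why the probabilities are unambiguous.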

It is not well established when statistical methods are used for observational studies in astronomy, sociology, epidemiology, law, or weather forecasting. The disputes that arise in these areas are often based on the fact that different mathematical models will give rise to different conclusions. If we cannot identify the space of events that generate the probabilities being calculated, then one model is no more valid than another. As has been shown in many court cases, two expert statisticians working with the same data can disagree about the analyses of those data. As statistical models are used more and more for observational studies to assist in social decisions by government and advocacy groups, this fundamental failure to be able to derive probabilities without ambiguity will cast doubt on the usefulness of these methods.

One solution to the question of the real-life meaning of probability has been the concept of “personal probability.” L. J. (“Jimmie”) Savage of the United States and Bruno de Finetti of Italy were the foremost proponents of this view. This position was best presented in Savage’s 1954 book, The Foundations of Statistics. In this view, probability is a widely held concept. People naturally govern their lives using probability. Before entering on a venture, people intuitively decide on the probability of possible outcomes. If the probability of danger, for instance, is thought to be too great, a person will avoid that action. To Savage and de Finetti, probability is a common concept. It does not need to be connected with Kolmogorov’s mathematical probability. All we need do is establish general rules for making personal probability coherent. To do this, we need only to assume that people will not be inconsistent when judging the probability of events. Savage derived rules for internal coherence based upon that assumption.

Under the Savage-de Finetti approach, personal probability is unique to each person. It is perfectly possible that one person will decide that the probability of rain is 95 percent and that another will decide that it is 72 percent—based on their both observing the same data. Using Bayes’s theorem, Savage and de Finetti were able to show that two people with coherent personal probabilities will converge to the same final estimates of probability if faced with a sequence of the same data. This is a satisfying conclusion. People are different but reasonable, they seemed to say. Given enough data, such reasonable people will agree in the end, even if they disagreed to begin with.
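The convergence result is general, but its flavor can be shown with the simplest conjugate model. The sketch below assumes, for illustration only, that each of two forecasters expresses an opinion about the long-run frequency of rain as a beta distribution and that each day is an independent rain/no-rain observation. The priors, the “true” rate of 0.7, and the random seed are all hypothetical.

```python
import random

def posterior_mean(alpha, beta, successes, trials):
    """Posterior mean of a Beta(alpha, beta) prior after Bernoulli data."""
    return (alpha + successes) / (alpha + beta + trials)

# Two hypothetical forecasters who start far apart.
optimist_prior = (9.0, 1.0)   # prior mean 0.9
pessimist_prior = (1.0, 9.0)  # prior mean 0.1

rng = random.Random(2024)     # fixed seed so the run is repeatable
true_rate = 0.7               # hypothetical; unknown to both forecasters
days = [rng.random() < true_rate for _ in range(2000)]

rainy = 0
gaps = {}
for n, rained in enumerate(days, start=1):
    rainy += rained
    if n in (10, 100, 1000, 2000):
        a = posterior_mean(*optimist_prior, rainy, n)
        b = posterior_mean(*pessimist_prior, rainy, n)
        gaps[n] = abs(a - b)
        print(f"after {n:4d} days: {a:.3f} vs {b:.3f}")
```

Because both priors carry the same total weight (ten pseudo-days), the gap between the two posterior means is exactly 8 / (10 + n) after n days, whatever the data: the initial disagreement is washed out at a predictable rate.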

John Maynard Keynes in his doctoral thesis, published in 1921 as A Treatise on Probability, thought of personal probability as something else. To Keynes, probability was the measure of uncertainty that all people with a given cultural education would apply to a given situation. Probability was the result of one’s culture, not just of one’s inner, gut feeling. This approach is difficult to support if we are trying to decide between a probability of 72 percent and one of 68 percent. General cultural agreement could never reach such a degree of precision. Keynes pointed out that for decision making we seldom, if ever, need to know the exact numerical probability of some event. It is usually sufficient to be able to order events. According to Keynes, decisions can be made from knowing that it is more probable that it will rain tomorrow than that it will hail, or that it is twice as probable that it will rain as that it will hail. Keynes points out that probability can be a partial ordering. One does not have to compare everything with everything else. One can ignore the probability relationships between whether the Yankees will win the pennant and whether it will rain tomorrow.

In this way, two of the solutions to the problem of the meaning of probability rest on the general human desire to quantify uncertainty, or at least to do so in a rough fashion. In his Treatise, Keynes works out a formal mathematical structure for his partial ordering of personal probability. He did this work before Kolmogorov laid the foundations for mathematical probability, and there is no attempt to link his formulas to Kolmogorov’s work. Keynes claimed that his definition of probability was different from the set of mathematical counting formulas that represented the mathematics of probability in 1921. For Keynes’s probabilities to be usable, the person who invokes them would still have to meet Savage’s criteria for coherence.

This makes for a view of probability that might provide the foundations for decision making with statistical models. This is the view that probability is not based on a space of events but that probabilities as numbers are generated out of the personal feelings of the people involved. Then psychologists Daniel Kahneman and Amos Tversky of Hebrew University in Jerusalem began their investigations of the psychology of personal probability.

Through the 1970s and 1980s, Kahneman and Tversky investigated the way in which individuals interpret probability. Their work was summed up in their book (coedited by P. Slovic) Judgment Under Uncertainty: Heuristics and Biases. They presented a series of probabilistic scenarios to college students, college faculty, and ordinary citizens. They found no one who met Savage’s criteria for coherence. They found, instead, that most people did not have the ability to keep even a consistent view of what different numerical probabilities meant. The best they could find was that people could keep a consistent sense of the meaning of 50:50 and the meaning of “almost certain.” From Kahneman and Tversky’s work, we have to conclude that the weather forecaster who tries to distinguish between a 90 percent probability of rain and a 75 percent probability of rain cannot really tell the difference. Nor do any of the listeners to their forecast have a consistent view of what that difference means.

In 1974, Tversky presented these results at a meeting of the Royal Statistical Society. In the discussion afterward, Patrick Suppes of Stanford University proposed a simple probability model that met Kolmogorov’s axioms and that also mimicked what Kahneman and Tversky had found. This means that people who used this model would be coherent in their personal probabilities. In Suppes’s model, there are only five probabilities:

surely true

more probable than not

as probable as not

less probable than not

surely false

This leads to an uninteresting mathematical theory. Only about a half-dozen theorems can be derived from this model, and their proofs are almost self-evident. If Kahneman and Tversky are right, the only useful version of personal probability provides no grist at all for the wonderful abstractions of mathematics, and it generates the most limited versions of statistical models. If Suppes’s model is, in fact, the only one that fits personal probability, many of the techniques of statistical analysis that are standard practice are useless, since they only serve to produce distinctions below the level of human perception.

The basic idea behind the statistical revolution is that the real things of science are distributions of numbers, which can be described by parameters. It is mathematically convenient to embed that concept into probability theory and deal with probability distributions. By considering the distributions of numbers as elements from the mathematical theory of probability, it is possible to establish optimum criteria for estimators of those parameters and to deal with the mathematical problems that arise when data are used to describe the distributions. Because probability seems inherent in the concept of a distribution, much effort has been spent on getting people to understand probability, trying to link the mathematical idea of probability to real life, and using the tools of conditional probability to interpret the results of scientific experiments and observations.

The idea of distribution can exist outside of probability theory. In fact, improper distributions (which are improper because they do not meet all the requirements of a probability distribution) are already being used in quantum mechanics and in some Bayesian techniques. In queuing theory, the situation in which the average time between arrivals at the queue equals the average service time leads to an improper distribution for the amount of time someone entering the queue will have to wait. Here is a case where applying the mathematics of probability theory to a real-life situation leads us out of the set of probability distributions.
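The text does not say which queue model it has in mind. Assuming the textbook M/M/1 queue (Poisson arrivals, exponentially distributed service times, a single server), the steady-state mean wait before service is ρ / (μ − λ), where λ is the arrival rate, μ the service rate, and ρ = λ / μ. The sketch below evaluates that formula as the arrival rate approaches the service rate, which is the case described above, where the average time between arrivals equals the average service time.

```python
def mm1_mean_wait_in_queue(arrival_rate: float, service_rate: float) -> float:
    """Steady-state mean waiting time before service in an M/M/1 queue.

    Uses Wq = rho / (service_rate - arrival_rate) with
    rho = arrival_rate / service_rate. When arrival_rate >= service_rate
    no steady-state probability distribution exists; infinity is returned
    here only as a conventional marker for that case.
    """
    if arrival_rate >= service_rate:
        return float("inf")
    rho = arrival_rate / service_rate
    return rho / (service_rate - arrival_rate)

service_rate = 1.0  # on average, one customer served per unit of time
for arrival_rate in (0.5, 0.9, 0.99, 0.999, 1.0):
    print(arrival_rate, mm1_mean_wait_in_queue(arrival_rate, service_rate))
```

The expected wait grows without bound as the two rates approach each other; at equality there is no proper waiting-time distribution left to describe.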

Kolmogorov’s final insight was to describe probability in terms of the properties of finite sequences of symbols, where information theory is not the outcome of probabilistic calculations but the progenitor of probability itself. Perhaps someone will pick up the torch where he left it and develop a new theory of distributions where the very nature of the digital computer is brought into the philosophical foundations.

Who knows where there may be another R. A. Fisher, working on the fringes of established science, who will soon burst upon the scene with insights and ideas that have never been thought before? Perhaps, somewhere in the center of China, another Lucien Le Cam has been born to an illiterate farm family; or in North Africa, another George Box who stopped his formal education after secondary school may now be working as a mechanic, exploring and learning on his own. Perhaps another Gertrude Cox will soon give up her hopes of being a missionary and become intrigued with the puzzles of science and mathematics; or another William S. Gosset is trying to find a way to solve a problem in the brewing of beer; or another Neyman or Pitman is teaching in some obscure provincial college in India and thinking deep thoughts. Who knows from where the next great discovery will come?

As we enter the twenty-first century, the statistical revolution in science stands triumphant. It has vanquished determinism from all but a few obscure corners of science. It has become so widely used that its underlying assumptions have become part of the unspoken popular culture of the Western world. It stands triumphant on feet of clay. Somewhere, in the hidden corners of the future, another scientific revolution is waiting to overthrow it, and the men and women who will create that revolution may already be living among us.