From the perspective of artificial intelligence, evolutionary computation belongs to computation intelligence. The origins of evolutionary computation can be traced back to the late 1950s (see, e.g., the influencing works [12, 15, 46, 47]), and has started to receive significant attention during the 1970s (see, e.g., [41, 68, 133]).

The first issue of the Evolutionary Computation in 1993 and the first issue of the IEEE Transactions on Evolutionary Computation in 1997 mark two important milestones in the history of the rapidly growing field of evolutionary computation.

This chapter is focused primarily on basic theory and methods of evolutionary computation, including multiobjective optimization, multiobjective simulated annealing, multiobjective genetic algorithms, multiobjective evolutionary algorithms, evolutionary programming, differential evolution together with ant colony optimization, artificial bee colony algorithms, and particle swarm optimization. In particular, this chapter also highlights selected topics and advances in evolutionary computation: Pareto optimization theory, noisy multiobjective optimization, and opposition-based evolutionary computation.

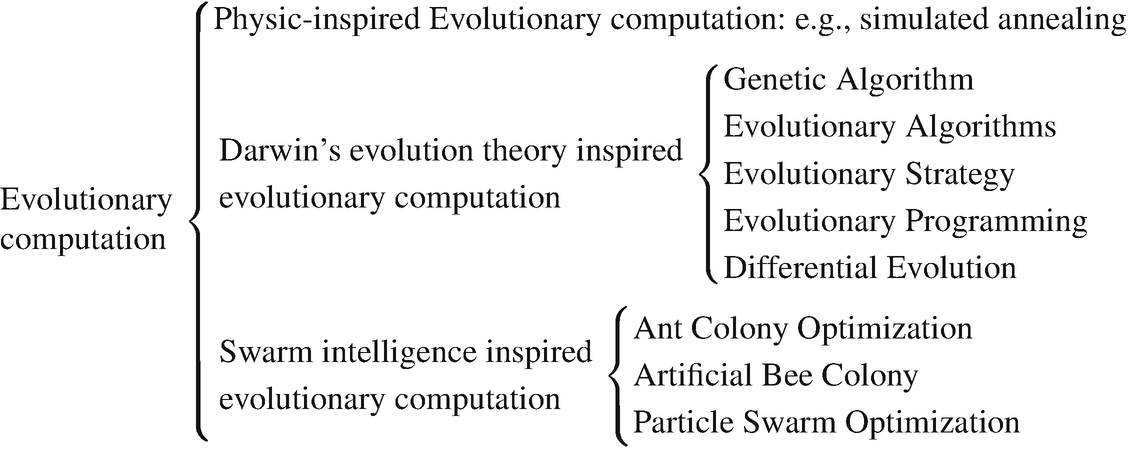

9.1 Evolutionary Computation Tree

- 1.

Physic-inspired evolutionary computation: Its typical representative is simulated annealing [100] inspired by heating and cooling of materials.

- 2.

Darwin’s evolution theory inspired evolutionary computation

Genetic Algorithm (GA) is inspired by the process of natural selections. A GA is commonly used to generate high-quality solutions to optimization and search problems by relying on bio-inspired operators such as mutation, crossover, and selection.

Evolutionary Algorithms (EAs) are inspired by Darwin’s evolution theory which is based on survival of fittest candidate for a given environment. These algorithms begin with a population (set of solutions) which tries to survive in an environment (defined with fitness evaluation). The parent population shares their properties of adaptation to the environment to the children with various mechanisms of evolution such as genetic crossover and mutation.

Evolutionary Strategy (ES) was developed by Schwefel in 1981 [143]. Similar to the GA, the ES uses evolutionary theory to optimize, that is to say, genetic information is used to inherit and mutate the survival of the fittest generation by generation, so as to get the optimal solution. The difference lies in: (a) the DNA sequence in ES is encoded by real number instead of 0-1 binary code in GA; (b) In the ES the mutation intensity is added for each real number value on the DNA sequence in order to make a mutation.

Evolutionary Programming (EP) [43]: The EP and the ES adopt the same coding (digital string) to the optimization problem and the same mutation operation mode, but they adopt the different mutation expressions and survival selections. Moreover, the crossover operator in ES is optional, while there is no crossover operator in EP. In the aspect of parent selection, the ES adopts the method of probability selection to form the parent, and each individual of the parent can be selected with the same probability, while the EP adopts a deterministic approach, that is, each parent in the current population must undergo mutation to generate offspring.

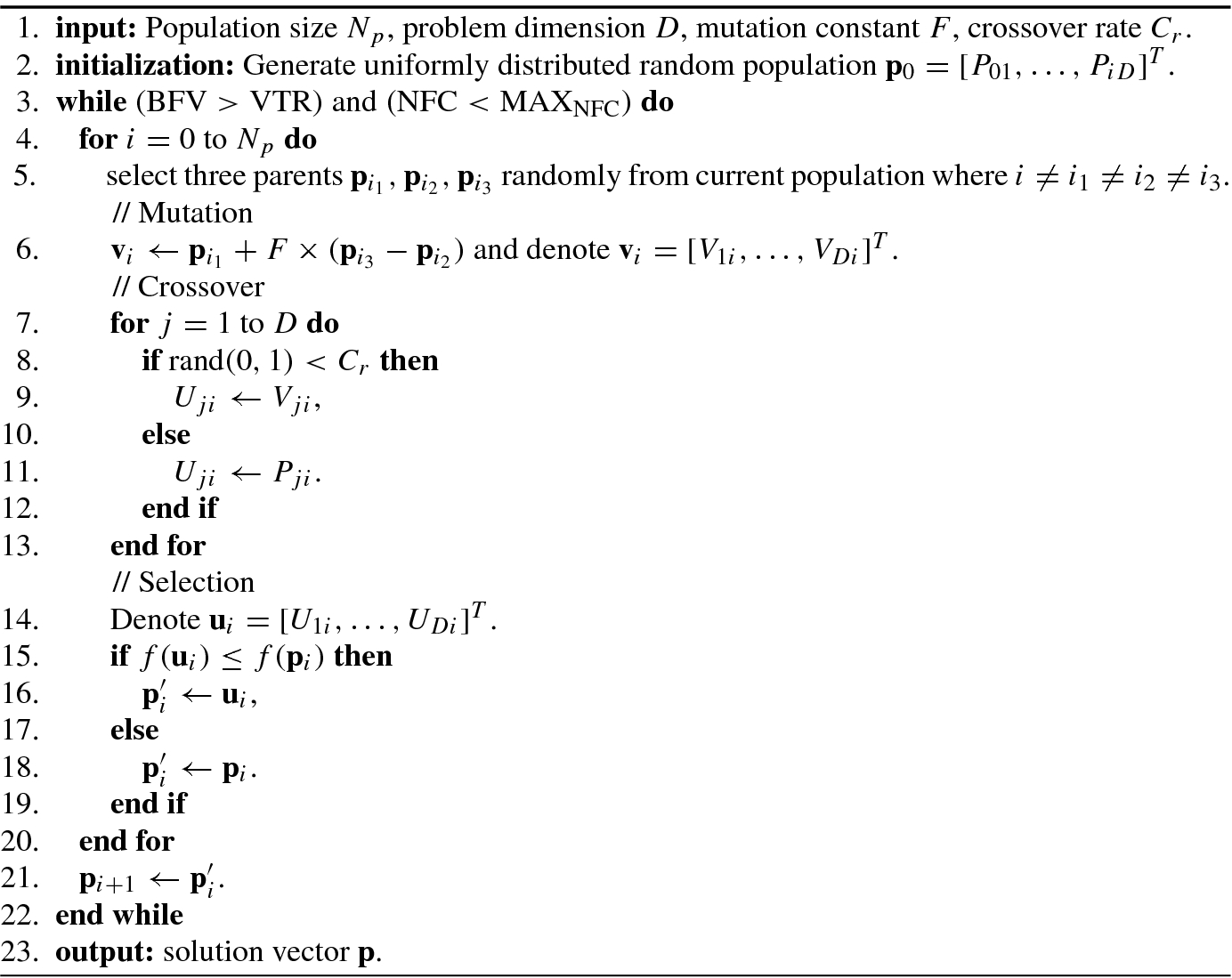

Differential Evolution (DE) was developed by Storn and Price in 1997 [147]. Similar to the GA, the main process includes three steps: mutation, crossover, and selection. The difference is that the GA controls the parent’s crossover according to the fitness value, while the mutation vector of the DE is generated by the difference vector of the parent generation, and crossover with the individual vector of the parent generation to generate a new individual vector, which directly selects with the individual of the parent generation. Therefore, the approximation effect of DE is more significant than that of the GA.

- 3.Swarm intelligence inspired evolutionary computation: Swarm intelligence includes two important concepts: (a) The concept of a swarm means multiplicity, stochasticity, randomness, and messiness. (b) The concept of intelligence implies a kind of problem-solving ability through the interactions of simple information-processing units. This “collective intelligence” is built up through a population of homogeneous agents interacting with each other and with their environment. The major swarm intelligence inspired evolutionary computation includes:

Ant Colony Optimization (ACO) by Dorigo in 1992 [28]; a swarm of ants can solve very complex problems such as finding the shortest path between their nest and the food source. If finding the shortest path is looked upon as an optimization problem, then each path between the starting point (their nest) and the terminal (the food source) can be viewed as a feasible solution.

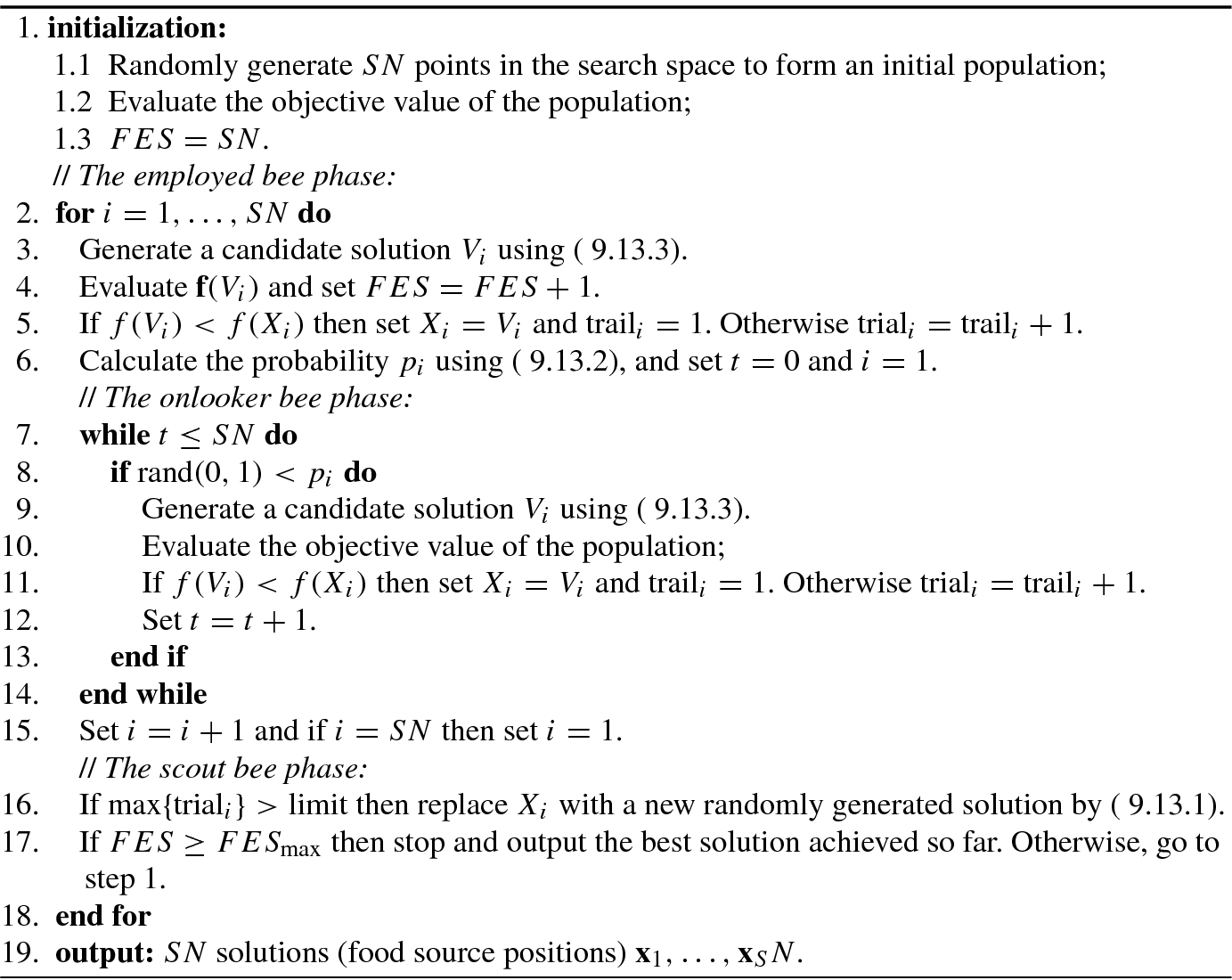

Artificial Bee Colony (ABC) algorithm, proposed by Karaboga in 2005 [81], is inspired by the intelligent foraging behavior of the honey bee swarm. In the ABC algorithm, the position of a food source represents a possible solution of the optimization problem and the nectar amount of a food source corresponds to the quality (fitness) of the associated solution.

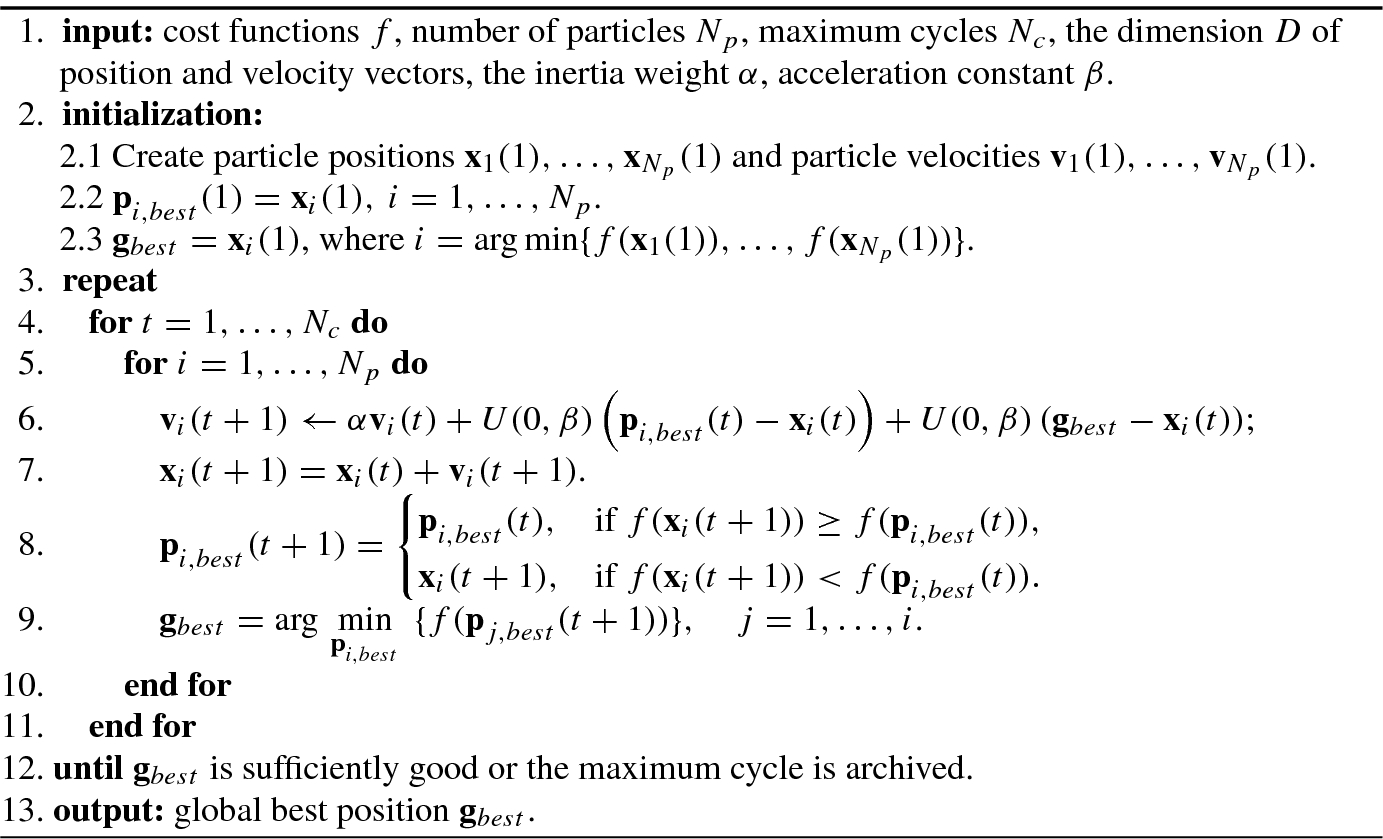

Particle Swarm Optimization (PSO), developed by Kennedy and Eberhart in 1995 [86], is essentially an optimization technique based on swarm intelligence, and adopts a population-based stochastic algorithm for finding optimal regions of complex search spaces through the interaction of individuals in a population of particles. Different from evolutionary algorithms, the particle swarm does not use selection operation; while interactions of all population members result in iterative improvement of the quality of problem solutions from the beginning of a trial until the end.

Evolutionary computation tree

9.2 Multiobjective Optimization

In science and engineering applications, a lot of optimization problems involve with multiple objectives. Different from single-objective problems, objectives in multiobjective problems are usually conflict. For example, in the design of a complex hardware/software system, an optimal design might be an architecture that minimizes cost and power consumption but maximizing the overall performance. This structural contradiction leads to multiobjective optimization theories and methods different from single-objective optimization. The need for solving multiobjective optimization problems has spawned various evolutionary computation methods.

This section discusses two multiobjective optimizations: multiobjective combinatorial optimization problems and multiobjective optimization problems.

9.2.1 Multiobjective Combinatorial Optimization

Combinatorial optimization is a topic that consists of finding an optimal object from a finite set of objects.

, the solution

, the solution ![$$\mathbf {x}=[x_1,\ldots ,x_n]^T\in \mathbb {R}^n$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq2.png) is a vector of discrete decision variables,

is a vector of discrete decision variables,  and B = {0, 1}, and Ax ≤b denotes the elementwise inequality (Ax)i ≤ b

i for all i = 1, …, m.

and B = {0, 1}, and Ax ≤b denotes the elementwise inequality (Ax)i ≤ b

i for all i = 1, …, m.- 1.

- 2.

(9.2.8)

(9.2.8) (9.2.9)

(9.2.9) (9.2.10)

(9.2.10)Here, it is not restrictive to suppose equality constraints with

.

. - 3.Multiobjective network flow or transhipment problems [ 152]: Let G(N, A) be a network flow with a node set N and an arc set A; the model can be stated as

(9.2.11)

(9.2.11) (9.2.12)

(9.2.12) (9.2.13)

(9.2.13) (9.2.14)

(9.2.14)where x ij is the flow through arc (i, j),

is the linear transhipment “cost” for arc (i, j) in objective k, and l

ij and u

ij are lower and upper bounds on x

ij, respectively.

is the linear transhipment “cost” for arc (i, j) in objective k, and l

ij and u

ij are lower and upper bounds on x

ij, respectively. - 4.

(9.2.16)

(9.2.16) (9.2.17)

(9.2.17) (9.2.18)

(9.2.18) (9.2.19)

(9.2.19)where z i = 1 if a facility is established at site i; x ii = 1 if customer j is assigned to the facility at site i; d ij is a certain usage of the facility at site i by customer j if he/she is assigned to that facility; a i and b i are possible limitations on the total customer usage permitted at the facility i;

is, for objective k, a variable cost (or distance, etc.) if customer j is assigned to facility i;

is, for objective k, a variable cost (or distance, etc.) if customer j is assigned to facility i;  is, for objective k, a fixed cost associated with the facility at site i.

is, for objective k, a fixed cost associated with the facility at site i.

An unconstrained combinatorial optimization problem P = (S, f) is an optimization problem [120], where S is a finite set of solutions (called search space), and  is an objective function that assigns a positive cost value to each solution vector s ∈ S. The goal is either to find a solution of minimum cost value or a good enough solution in a reasonable amount of time in the case of approximate solution techniques.

is an objective function that assigns a positive cost value to each solution vector s ∈ S. The goal is either to find a solution of minimum cost value or a good enough solution in a reasonable amount of time in the case of approximate solution techniques.

It requires intensive co-operation with the decision maker for solving a multiobjective combinatorial optimization problem; this results in especially high requirements for effective tools used to generate efficient solutions.

Many combinatorial problems are hard even in single-objective versions; their multiple objective versions are frequently more difficult.

An multiobjective decision making (MODM) problem is ill-posed from the mathematical point of view because, except in trivial cases, it has no optimal solution. The goal of MODM methods is to find a solution most consistent with the decision maker’s preferences, i.e., the best compromise. Under very weak assumptions about decision maker’s preferences the best compromise solution belongs to the set of efficient solutions [136].

9.2.2 Multiobjective Optimization Problems

Consider optimization problems with multiple objectives that may usually be incompatible.

![$$\displaystyle \begin{aligned} \mathrm{minimize}/\mathrm{maximize}\quad &\left \{\mathbf{y}=\mathbf{f}(\mathbf{x})=\left[f_{1}(\mathbf{x}),\ldots ,f_{m}(\mathbf{x})\right ]^T\right \},{} \end{aligned} $$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_Equ20.png)

are the objective functions, and

are the objective functions, and  are p inequality constraints and q equality constraints of the problem, respectively; while

are p inequality constraints and q equality constraints of the problem, respectively; while  is called the decision space, and

is called the decision space, and  is known as the objective space.

is known as the objective space.

The n-dimensional decision space

in which each coordinate axis corresponds to a component (called decision variable) of decision vector x.

in which each coordinate axis corresponds to a component (called decision variable) of decision vector x.The m-dimensional objective space

in which each coordinate axis corresponds to a component (i.e., a scalar objective function) of objective function vector y = f(x) = [f

1(x), …, f

m(x)]T.

in which each coordinate axis corresponds to a component (i.e., a scalar objective function) of objective function vector y = f(x) = [f

1(x), …, f

m(x)]T.

The MOP’s evaluation function f : x →y maps decision vector x = [x 1, …, x n]T to objective vector y = [y 1, …, y m]T = [f 1(x), …, f m(x)]T.

If some objective function f i is to be maximized (or minimized), the original problem is written as the minimization (or maximization) of the negative objective − f i. Thereafter, we consider only the multiobjective minimization (or maximization) problems, rather than optimization problems mixing both minimization and maximization.

The constraints g(x) ≤0 and h(x) = 0 determine the set of feasible solutions.

- 1.Objectives:

- Cost function: The fuel cost function of each thermal generator, considering the valve-point effect, is expressed as the sum of a quadratic and a sinusoidal function. The total fuel cost in terms of real power can be expressed as

(9.2.24)

(9.2.24)where N is number of generating units, M is number of hours in the time horizon; a i, b i, c i, d i, e i are cost coefficients of the ith unit; P im is power output of ith unit at time m, and

is lower generation limits for ith unit.

is lower generation limits for ith unit. - Emission function: The atmospheric pollutants such as sulfur oxides (SOx) and nitrogen oxides (NOx) caused by fossil-fueled generating units can be modeled separately. However, for comparison purpose, the total emission of these pollutants which is the sum of a quadratic and an exponential function can be expressed as

(9.2.25)

(9.2.25)where α i, β i, γ i, η i, δ i are emission coefficients of the ith unit.

- 2.Constraints:

- Real power balance constraints: The total real power generation must balance the predicted power demand plus the real power losses in the transmission lines, at each time interval over the scheduling horizon, i.e.,

(9.2.26)

(9.2.26)where P Dm is load demand at time m, P Lm is transmission line losses at time m.

- Real power operating limits:

(9.2.27)

(9.2.27)where

is upper generation limits for the ith unit.

is upper generation limits for the ith unit. - Generating unit ramp rate limits:

(9.2.28)

(9.2.28) (9.2.29)

(9.2.29)where UR i and DR i are ramp-up and ramp-down rate limits of the ith unit, respectively.

![$${\mathbf {x}}^{\mathrm {opt},k}=[x_1^{\mathrm {opt},k},\ldots ,x_n^{\mathrm {opt},k}]^T$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq17.png) be a vector of variables which optimizes (either minimizes or maximizes) the kth objective function f

k(x). In other words, if the vector x

opt, k ∈ X

f is such that

be a vector of variables which optimizes (either minimizes or maximizes) the kth objective function f

k(x). In other words, if the vector x

opt, k ∈ X

f is such that

![$${\mathbf {f}}^{\mathrm {opt}}=[f_1^{\mathrm {opt}},\ldots ,f_m^{\mathrm {opt}}]^T$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq18.png) (where

(where  denotes the optimum of the kth objective function) is ideal for an MOP, and the point in

denotes the optimum of the kth objective function) is ideal for an MOP, and the point in  which determined this vector is the ideal solution, and is consequently called the ideal vector.

which determined this vector is the ideal solution, and is consequently called the ideal vector.

However, due to objective conflict and/or interdependence among the n decision variables of x, such an ideal solution vector is impossible for MOPs: none of the feasible solutions allows simultaneous optimal solutions for all objectives [63].

In other words, individual optimal solutions for each objective are usually different. Thus, a mathematically most favorable solution should offer the least objective conflict.

Many real-life problems can be described as multiobjective optimization problems. The most typical multiobjective optimization problem is traveling salesman problem.

Let  be a set of cities, where c

i is the ith city and N

c is the number of all cities; A = {(r, s) : r, s ∈ C} be the edge set, and d(r, s) be a cost measure associated with edge (r, s) ∈ A.

be a set of cities, where c

i is the ith city and N

c is the number of all cities; A = {(r, s) : r, s ∈ C} be the edge set, and d(r, s) be a cost measure associated with edge (r, s) ∈ A.

For a set of N c cities, the TSP problem involves finding the shortest length closed tour visiting each city only once. In other words, the TSP problem is to find the shortest-route to visit each city once, ending up back at the starting city.

Euclidean TSP: If cities r ∈ C are given by their coordinates (x r, y r) and d(r, s) is the Euclidean distance between city r and s, then TSP is an Euclidean TSP.

Symmetric TSP: If d(r, s) = d(s, r) for all (r, s), then the TSP becomes a symmetric TSP (STSP).

Asymmetric TSP: If d(r, s) ≠ d(s, r) for at least some (r, s), then the TSP is an asymmetric TSP (ATSP).

Dynamic TSP: The dynamic TSP (DTSP) is a TSP in which cities can be added or removed at run time.

The goal of TSP is to find the shortest closed tour which visits all the cities in a given set as early as possible after each and every iteration.

Another typical example of multiobjective optimizations is multiobjective data mining.

Predictive or supervised techniques learn from the current data in order to make predictions about the behavior of new data sets.

Descriptive or unsupervised techniques provide a summary of the data.

- 1.Feature Selection: It deals with selection of an optimum relevant set of features or attributes that are necessary for the recognition process (classification or clustering). In general, the feature selection problem ( Ω, P) can formally be defined as an optimization problem: determine the feature set F ∗ for which

(9.2.32)

(9.2.32)where Ω is the set of possible feature subsets, F refers to a feature subset, and

denotes a criterion to measure the quality of a feature subset with respect to its utility in classifying/clustering the set of points X ∈ Ψ.

denotes a criterion to measure the quality of a feature subset with respect to its utility in classifying/clustering the set of points X ∈ Ψ. - 2.

Classification: The problem of supervised classification can formally be stated as follows. Given an unknown function g : X → Y (the ground truth) that maps input instances x ∈ X to output class labels y ∈ Y , and a training data set D = {(x 1, y 1), …, (x n, y n)} which is assumed to represent accurate examples of the mapping g, produce a function h : X → Y that approximates the correct mapping g as closely as possible.

- 3.Clustering: Clustering is an important unsupervised classification technique where a set of n patterns

are grouped into clusters {C

1, …, C

K} in such a way that patterns in the same cluster are similar in some sense and patterns in different clusters are dissimilar in the same sense. The main objective of any clustering technique is to produce a K × n partition matrix U = [u

kj], k = 1, …, K;j = 1, …, n, where u

kj is the membership of pattern x

j to cluster C

k:

are grouped into clusters {C

1, …, C

K} in such a way that patterns in the same cluster are similar in some sense and patterns in different clusters are dissimilar in the same sense. The main objective of any clustering technique is to produce a K × n partition matrix U = [u

kj], k = 1, …, K;j = 1, …, n, where u

kj is the membership of pattern x

j to cluster C

k:

(9.2.33)for hard or crisp partitioning of the data, and

(9.2.33)for hard or crisp partitioning of the data, and (9.2.34)

(9.2.34) (9.2.35)

(9.2.35)with

for probabilistic fuzzy partitioning of the data.

for probabilistic fuzzy partitioning of the data. - 4.

Association Rule Mining: The principle of association rule mining (ARM) [1] lies in the market basket or transaction data analysis. Association analysis is the discovery of rules showing attribute-value associations that occur frequently. The objective of ARM is to find all rules of the form X ⇒ Y, X ∩ Y = ∅ with probability c%, indicating that if itemset X occurs in a transaction, the itemset Y also occurs with probability c%.

Most of the data mining problems can be thought of as optimization problems, while the majority of data mining problems have multiple criteria to be optimized. For example, a feature selection problem may try to maximize the classification accuracy while minimizing the size of the feature subset. Similarly, a rule mining problem may optimize several rule interestingness measures such as support, confidence, comprehensibility, and lift at the same time [110, 148].

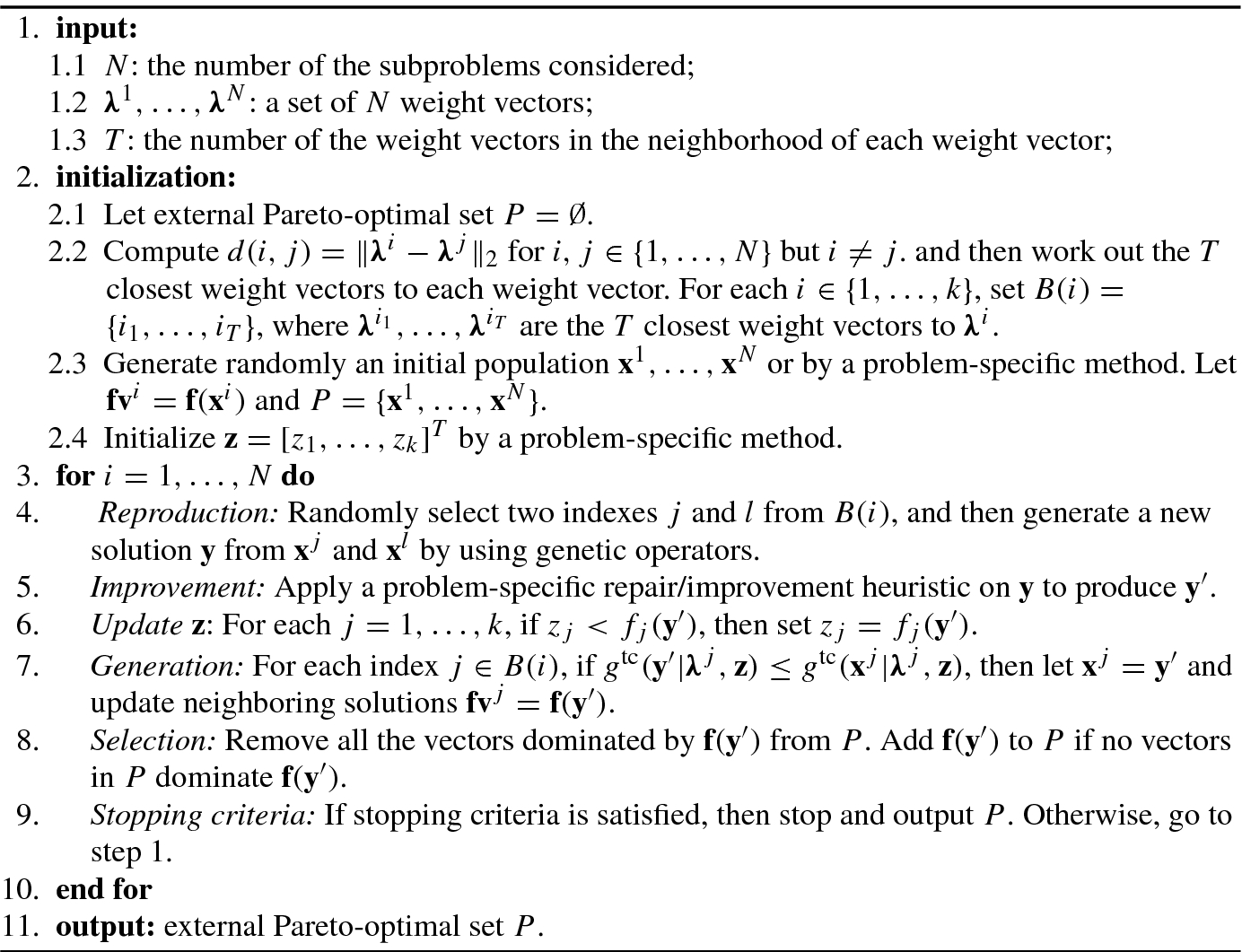

9.3 Pareto Optimization Theory

Many real-world problems in artificial intelligence involve simultaneous optimization of several incommensurable and often competing objectives. In these cases, there may exist solutions in which the performance on one objective cannot be improved without reducing performance on at least one other. In other words, there is often no single optimal solution with respect to all objectives. In practice, there could be a number of optimal solutions in multiobjective optimization problems (MOPs) and the suitability of one solution depends on a number of factors including designer’s choice and problem environment. These solutions are optimal in the wider sense that no other solutions in the search space are superior to them when all objectives are considered. In these problems, the Pareto optimization approach is a natural choice. Solutions given by the Pareto optimization approach are called Pareto-optimal solutions that are closely related to Pareto concepts.

9.3.1 Pareto Concepts

Pareto concepts are named after Vilfredo Pareto (1848–1923). These concepts constitute the Pareto optimization theory for multiple objectives.

![$$\displaystyle \begin{aligned} {\mathrm{max/min}}_{\mathbf{x}\in S}\left \{\mathbf{f}(\mathbf{x})=[f_1(\mathbf{x}),\ldots ,f_m (\mathbf{x})]^T\right \} \end{aligned} $$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_Equ36.png)

Reformulation approach: This approach entails reformulating the problem as a single objective problem. To do so, additional information is required from the decision makers, such as the relative importance or weights of the objectives, goal levels for the objectives, value functions, etc.

Decision making approach: This approach requires that the decision makers interact with the optimization procedure typically by specifying preferences between pairs of presented solutions.

Pareto optimization approach: It finds a representative set of nondominated solutions approximating the Pareto front. Pareto optimization methods, such as evolutionary multiobjective optimization algorithms, allow decision makers to investigate the potential solutions without a priori judgments regarding to the relative importance of objective functions. Post-Pareto analysis is necessary to select a single solution for implementation.

On the other hand, the most distinguishing feature of single objective evolutionary algorithms (EAs) compared to other heuristics is that EAs work with a population of solutions, and thus are able to search for a set of solutions in a single run. Due to this feature, single objective evolutionary algorithms are easily extended to multiobjective optimization problems. Multiobjective evolutionary algorithms (MOEAs) have become one of the most active research areas in evolutionary computation.

To describe the Pareto optimality of MOP solutions in which we are interested, it needs the following key Pareto concepts: Pareto dominance, Pareto optimality, the Pareto-optimal set, and the Pareto front.

For the convenience of narration, denote x = [x

1, …, x

n]T ∈ F ⊆ S and ![$${\mathbf {x}}^{\prime }=[x_1^{\prime },\ldots ,x_n^{\prime }]^T \in F\subseteq S$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq25.png) , where F is the feasible region, in which the constraints are satisfied.

, where F is the feasible region, in which the constraints are satisfied.

It goes without saying that the above relations are available for f(x) = x and f(x′) = x′ as well. However, for two decision vectors x and x′, these relations are meaningless. In multiobjective optimization, the relation between decision vectors x and x′ are called the Pareto dominance which is based on the relations between vector objective functions.

- 1.

x is no worse than x′ in all objective functions, i.e., f i(x) ≤ f i(x′) for all i ∈{1, …, m},

- 2.

x is strictly better than x′ for at least one objective function, i.e., f j(x) < f j(x′) for at least one j ∈{1, …, m};

The Pareto dominance can be either weak or strong.

Two incomparable solutions x and x′ are also known as mutually nondominating.

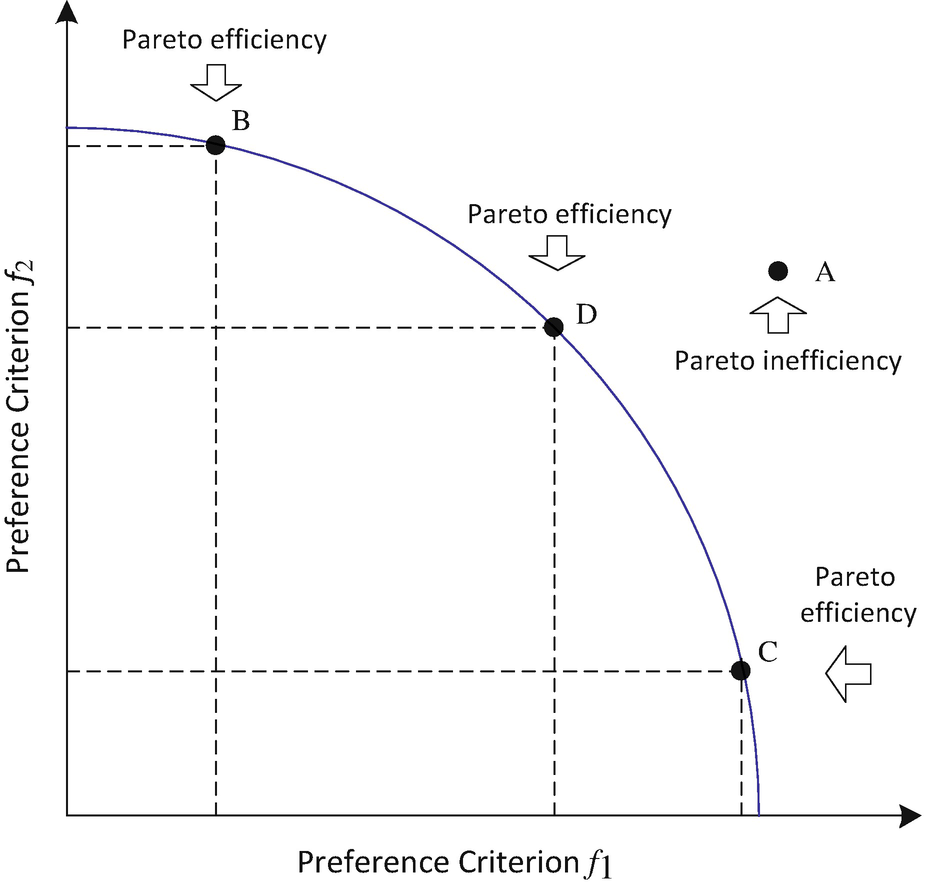

A Pareto improvement is a change to a different allocation that makes at least one objective better off without making any other objective worse off. An trial solution is known as “Pareto efficient” or “Pareto-optimal” or “globally nondominated” when no further Pareto improvements can be made, in which case we are assumed to have reached Pareto optimality.

In other words, all decision vectors which are not dominated by any other decision vector are known as “nondominated” or Pareto optimal. The phrase “Pareto optimal” means the solution is optimal with respect to the entire decision variable space F unless otherwise specified.

Pareto efficiency and Pareto improvement. Point A is an inefficient allocation between preference criterions f 1 and f 2 because it does not satisfy the constraint curve of f 1 and f 2. Two decisions to move from Point A to Points C and D would be a Pareto improvement, respectively. They improve both f 1 and f 2, without making anyone else worse off. Hence, these two moves would be a Pareto improvement and be Pareto optimal, respectively. A move from Point A to Point B would not be a Pareto improvement because it decreases the cost f 1 by increasing another cost f 2, thus making one side better off by making another worse off. The move from any point that lies under the curve to any point on the curve cannot be a Pareto improvement due to making one of two criterions f 1 and f 2 worse

A nondominated decision vector in A ⊆ X f is only Pareto-optimal in a local decision space A, while a Pareto-optimal decision vector is Pareto-optimal in entire feature decision space X f. Therefore, a Pareto-optimal decision vector is definitely nondominated, but a nondominated decision vector is not necessarily Pareto-optimal.

There may be a large number of Pareto-optimal solutions. To gain the deeper insight into the multiobjective optimization problem and knowledge about alternate solutions, there is often a special interest in finding or approximating the Pareto-optimal set.

Due to conflicting objectives it is only possible to obtain a set of trade-off solutions (referred to as Pareto-optimal set in decision space or Pareto-optimal front in objective space), instead of a single optimal solution.

Therefore our aim is to find the decision vectors that are nondominated within the entire search space. These decision vectors constitute the so-called Pareto-optimal set, as defined below.

and

and  are the Pareto-optimal sets for minimization and maximization, respectively.

are the Pareto-optimal sets for minimization and maximization, respectively.Pareto-optimal decision vectors cannot be improved in any objective without causing a degradation in at least one other objective; they represent globally optimal solutions. Analogous to single-objective optimization problems, Pareto-optimal sets are divided into local and global Pareto-optimal sets.

The set of all objectives given by Pareto-optimal set of decision vectors is known as the Pareto-optimal front (or simply called Pareto front).

Solutions in the Pareto front represent the possible optimal trade-offs between competing objectives.

For a given system, the Pareto frontier is the set of parameterizations (allocations) that are all Pareto efficient or Pareto optimal. Finding Pareto frontiers is particularly useful in engineering. This is because that when yielding all of the potentially optimal solutions, a designer needs only to focus on trade-offs within this constrained set of parameters without considering the full range of parameters.

It should be noted that a global Pareto-optimal set does not necessarily contain all Pareto-optimal solutions.

Pareto-optimal solutions are those solutions within the genotype search space (i.e., decision space) whose corresponding phenotype objective vector components cannot be all simultaneously improved. These solutions are also called non-inferior, admissible, or efficient solutions [73] in the sense that they are nondominated with respect to all other comparison solution vector and may have no clearly apparent relationship besides their membership in the Pareto-optimal set.

A “current” set of Pareto-optimal solutions is denoted by P current(t), where t represents the generation number. A secondary population storing nondominated solutions found through the generations is denoted by P known, while the “true” Pareto-optimal set, denoted by P true, is not explicitly known for MOP problems of any difficulty. The associated Pareto front for each of P current(t), P known, and P true are termed as PF current(t), PF known, and PF true, respectively.

The decision maker is often selecting solutions via choice of acceptable objective performance, represented by the (known) Pareto front. Choosing an MOP solution that optimizes only one objective may well ignore “better” solutions for other objectives.

We wish to determine the Pareto-optimal set from the set X of all the decision variable vectors that satisfy the inequality constraints (9.2.21) and (9.2.22). But, not all solutions in the Pareto-optimal set are normally desirable or achievable in practice. For example, we may not wish to have different solutions that map to the same values in objective function space.

Lower and upper bounds on objective values of all Pareto-optimal solutions are given by the ideal objective vector f ideal and the nadir objective vectors f nad, respectively. In other words, the Pareto front of a multiobjective optimization problem is bounded by a nadir objective vector z nad and an ideal objective vector z ideal, if these are finite.

Nondominated set produced by heuristic algorithms is referred to as the archive (denoted by A) of the estimated Pareto front.

The archive of estimated Pareto fronts will only be an approximation to the true Pareto front.

Fitness selection approach,

Nondominated sorting approach,

Crowding distance assignment approach, and

Hierarchical clustering approach.

9.3.2 Fitness Selection Approach

To fairly evaluate the quality of each solution and make the solution set search in the direction of Pareto-optimal solution, how to implement fitness assignment is important.

There are benchmark metrics or indicators that play an important role in evaluating the Pareto fronts. These metrics or indicators are: hypervolume, spacing, maximum spread, and coverage [139].

, its hypervolume (HV) is defined as

, its hypervolume (HV) is defined as

is the area in the objective search space covered by the obtained Pareto front, and a

i is the hypervolume determined by the components of x

i and the origin.

is the area in the objective search space covered by the obtained Pareto front, and a

i is the hypervolume determined by the components of x

i and the origin.

is the mean distance between all the adjacent solutions and m is the number of nondominated solutions in the Pareto front, and

is the mean distance between all the adjacent solutions and m is the number of nondominated solutions in the Pareto front, and

A value of spacing equal to zero means that all the solutions are equidistantly spaced in the Pareto front.

The value C(A, B) = 1 means that all solutions in B are weakly dominated by ones in A. On the other hand, C(A, B) = 0 means that none of the solutions in B is weakly dominated by A. It should be noted that both C(A, B) and C(B, A) have to be evaluated, respectively, since C(A, B) is not necessarily equal to 1 − C(B, A).

If 0 < C(A, B) < 1 and 0 < C(B, A) < 1, then neither A weakly dominates B nor B weakly dominates A. Thus, the sets A and B are incomparable, which means that A is not worse than B and vice versa.

- 1.

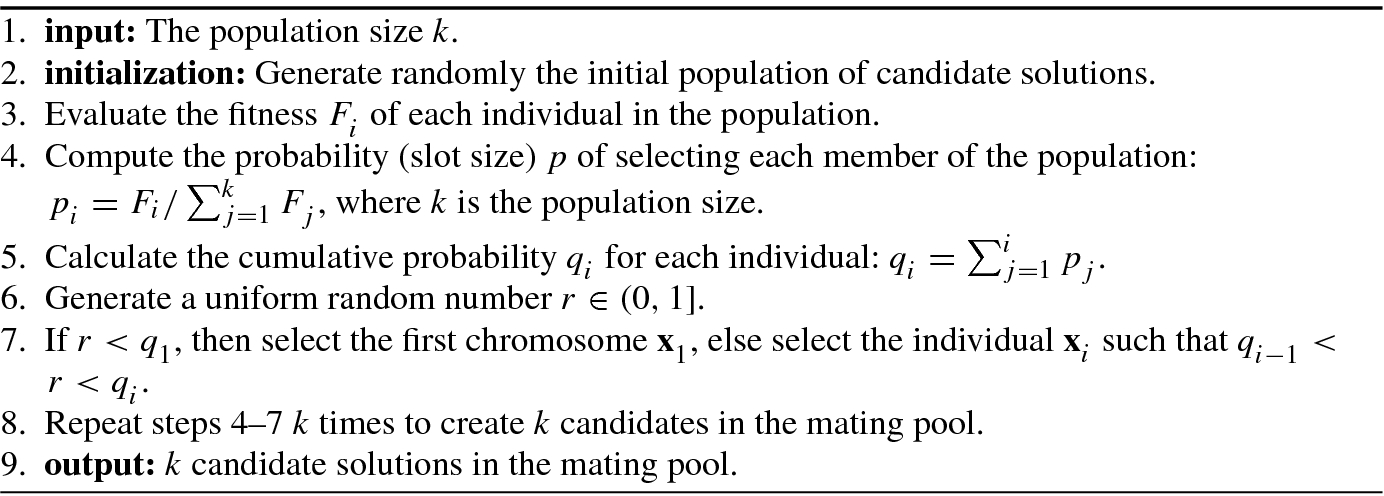

Binary tournament selection: k individuals are drawn from the entire population, allowing them to compete (tournament) and extract the best individual among them. The number k is the tournament size, and often takes 2. In this special case, we can call it binary tournament selection. Tournament selection is just the broader term where k can be any number ≥ 2.

- 2.Roulette wheel selection: It is also known as fitness proportionate selection which is a genetic operator for selecting potentially useful solutions for recombination. In roulette wheel selection, as in all selection methods, the fitness function assigns a fitness to possible solutions or chromosomes. This fitness level is used to associate a probability of selection with each individual chromosome. If F i is the fitness of individual i in the population, its probability of being selected is

(9.3.36)

(9.3.36)where N is the number of individuals in the population.

Algorithm 9.1 gives a roulette wheel fitness selection algorithm.

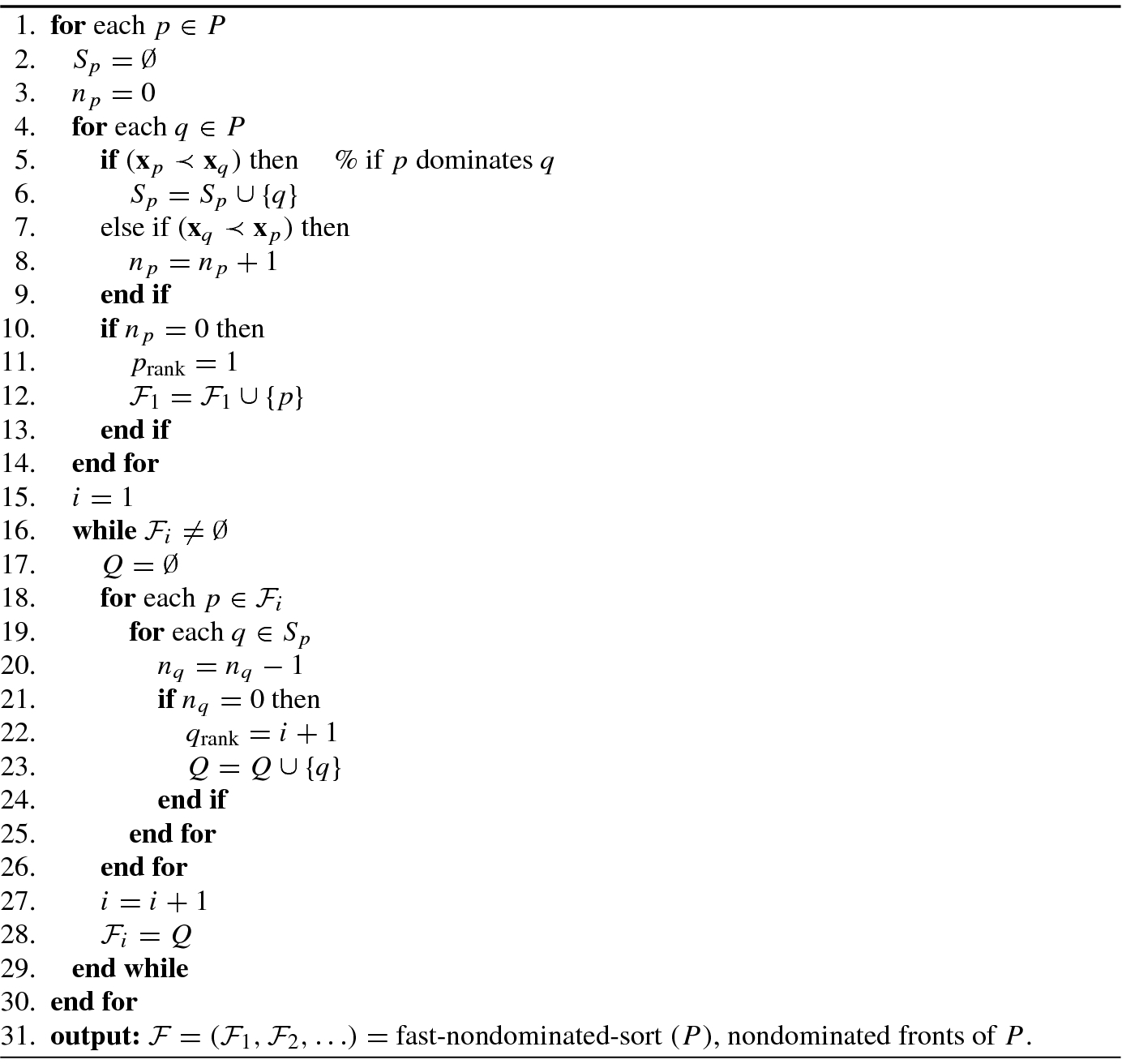

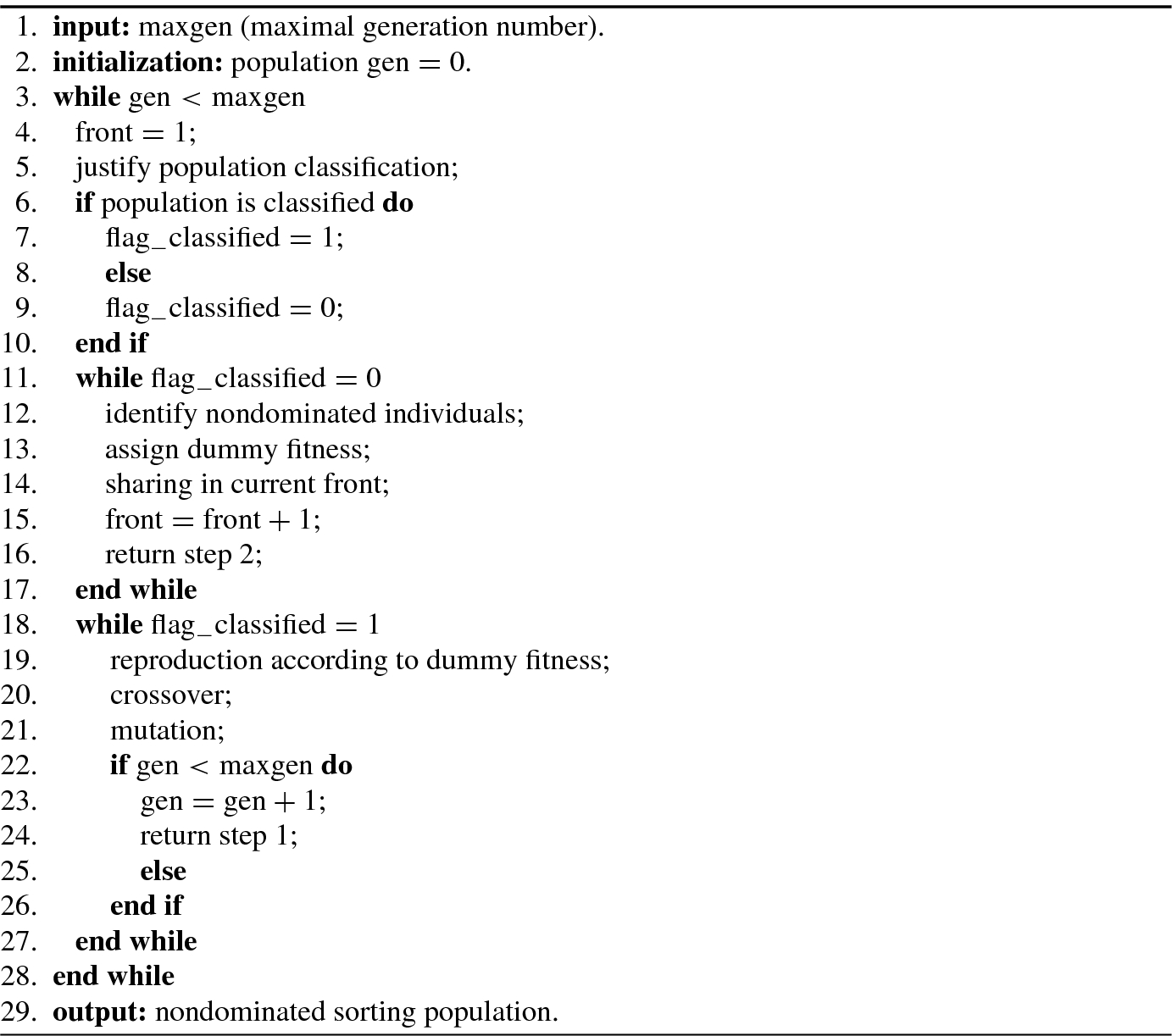

9.3.3 Nondominated Sorting Approach

The nondominated selection is based on nondominated rank: dominated individuals are penalized by subtracting a small fixed penalty term from their expected number of copies during selection. But this algorithm failed when the population had very few nondominated individuals, which may result in a large fitness value for those few nondominated points, eventually leading to a high selection pressure.

Use a ranking selection method to emphasize good points.

Use a niche method to maintain stable subpopulations of good points.

If an individual (or solution) is not dominated by all other individuals, then it is assigned as the first nondominated level (or rank). An individual is said to have the second nondominated level or rank, if it is dominated only by the individual(s) in the first nondominated level. Similarly, one can define individuals in the third or higher nondominated level or rank. It is noted that more individuals maybe have the same nondominated level or rank. Let i rank represent the nondominated rank of the ith individual. For two solutions with different nondomination ranks, if i rank < j rank then the ith solution with the lower (better) rank is preferred.

Algorithm 9.2 shows a fast nondominated sorting approach.

In order to identify solutions of the first nondominated front in a population of size N P, each solution can be compared with every other solution in the population to find if it is dominated. At this stage, all individuals in the first nondominated level in the population are found. In order to find the individuals in the second nondominated level, the solutions of the first level are discounted temporarily and the above procedure is repeated for finding third and higher levels or ranks of nondomination.

9.3.4 Crowding Distance Assignment Approach

If the two solutions have the same nondominated rank (say i rank = j rank), which one should we choose? In this case, we need another quality for selecting the better solution. A natural choice is to estimate the density of solutions surrounding a particular solution in the population. For this end, the average distance of two points on either side of this point along each of the objectives needs to be calculated.

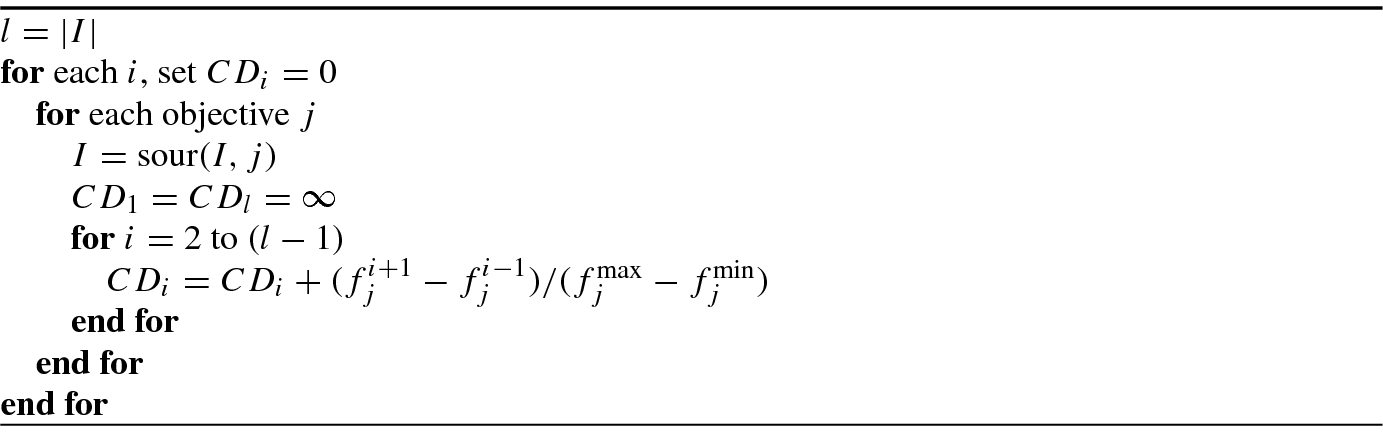

Let f 1 and f 2 be two objective functions in a multiobjective optimization problem, and let x, x i and x j be the members of a nondominated list of solutions. Furthermore, x i and x j are the nearest neighbors of x in the objective spaces. The crowding distance (CD) of a trial solution x in a nondominated set depicts the perimeter of a hypercube formed by its nearest neighbors (i.e., x i and x j) at the vertices in the fitness landscapes.

denote the kth objective function of the ith individual, where i = 1, …, |I| and k = 1, …, K. Except for boundary individuals 1 and |I|, the ith individual in all other intermediate population set {2, …, |I|− 1} is assigned a finite crowded distance (CD) value given by Luo et al. [98]:

denote the kth objective function of the ith individual, where i = 1, …, |I| and k = 1, …, K. Except for boundary individuals 1 and |I|, the ith individual in all other intermediate population set {2, …, |I|− 1} is assigned a finite crowded distance (CD) value given by Luo et al. [98]:

and

and  are the maximum and minimum values of the kth objective of all individuals, respectively.

are the maximum and minimum values of the kth objective of all individuals, respectively.

Algorithm 9.3 shows the crowding-distance-assignment (I).

Between two populations with differing nondomination ranks, the population with the lower (better) rank is preferred. If both populations belong to the same front, then the population with larger crowding distance is preferred.

9.3.5 Hierarchical Clustering Approach

Cluster analysis can be applied to the results of a multiobjective optimization algorithm to organize or partition solutions based on their objective function values.

- 1.

Define decision variables, feasible set, and objective functions.

- 2.

Choose and apply a Pareto optimization algorithm.

- 3.Clustering analysis:

Clustering tendency: By visual inspection or data projections verify that a hierarchical cluster structure is a reasonable model for the data.

Data scaling: Remove implicit variable weightings due to relative scales using range scaling.

Proximity: Select and apply an appropriate similarity measure for the data, here, Euclidean distance.

Choice of algorithm(s): Consider the assumptions and characteristics of clustering algorithms and select the most suitable algorithm for the application, here, group average linkage.

Application of algorithm: Apply the selected algorithm to obtain a dendrogram.

Validation: Examine the results based on application subject matter knowledge, assess the fit to the input data and stability of the cluster structure, and compare the results of multiple algorithms, if used.

- 4.

Represent and use the clusters and structure: If the clustering is reasonable and valid, examine the divisions in the hierarchy for trade-offs and other information to aid decision making.

9.3.6 Benchmark Functions for Multiobjective Optimization

![$$\displaystyle \begin{aligned} \min~{\mathbf{f}}_2(x)=[g(x),h(x)]\quad \text{with }~g(x)=x^2,~h(x)=(x-2)^2, \end{aligned} $$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_Equ75.png)

- 1.

- 2.

- 3.Constrained benchmark functions

- where x = [x 1, …, x n]T, the function f 1 is a function of the first decision variable x 1 only, g is a function of the remaining n − 1 variables x 2, …, x n, and the parameters of h are the function values of f 1 and g. For example,

(9.3.47)

(9.3.47)where n = 30 and x i ∈ [0, 1]. The Pareto-optimal front is formed with g(x) = 1.

In this test, x is a 500-dimensional binary vector, a ij is the profit of item j according to knapsack i, b ij is the weight of item j according to knapsack i, and c i is the capacity of knapsack i (i = 1, 2 and j = 1, 2, …, 500).

- 4.

where x i ∈ [−103, 103].

- 5.Multiobjective benchmark functions for

![$$\min \mathbf {f}(\mathbf {x})=[f_1(\mathbf {x}),f_2 (\mathbf {x}),g_3 (\mathbf {x}),\ldots , g_m (\mathbf {x})]$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq37.png)

where the value of α ∈ [0, 1] can be viewed as the correlation strength. α = 0 corresponds to the minimum correlation strength 0, where g i(x) is the same as the randomly generated objective f i(x). α = 1 corresponds to the maximum correlation strength 1, where g i(x) is the same as f 1(x) or f 2(x).

- where α ik is specified as follows:

(9.3.52)For example, the four-objective problem is specified as

(9.3.52)For example, the four-objective problem is specified as (9.3.53)

(9.3.53)

More benchmark test functions can be found in [149, 165, 172]. Yao et al. [165] summarized 23 benchmark test functions.

9.4 Noisy Multiobjective Optimization

Optimization problems in various real-world applications are often characterized by multiple conflicting objectives and a wide range of uncertainty. Optimization problems containing uncertainty and multiobjectives are termed as uncertain multiobjective optimization problems. In evolutionary optimization community, uncertainty in the objective functions is generally stochastic noise, and the corresponding multiobjective optimization problems are termed as noisy (or imprecise) multiobjective optimization problems.

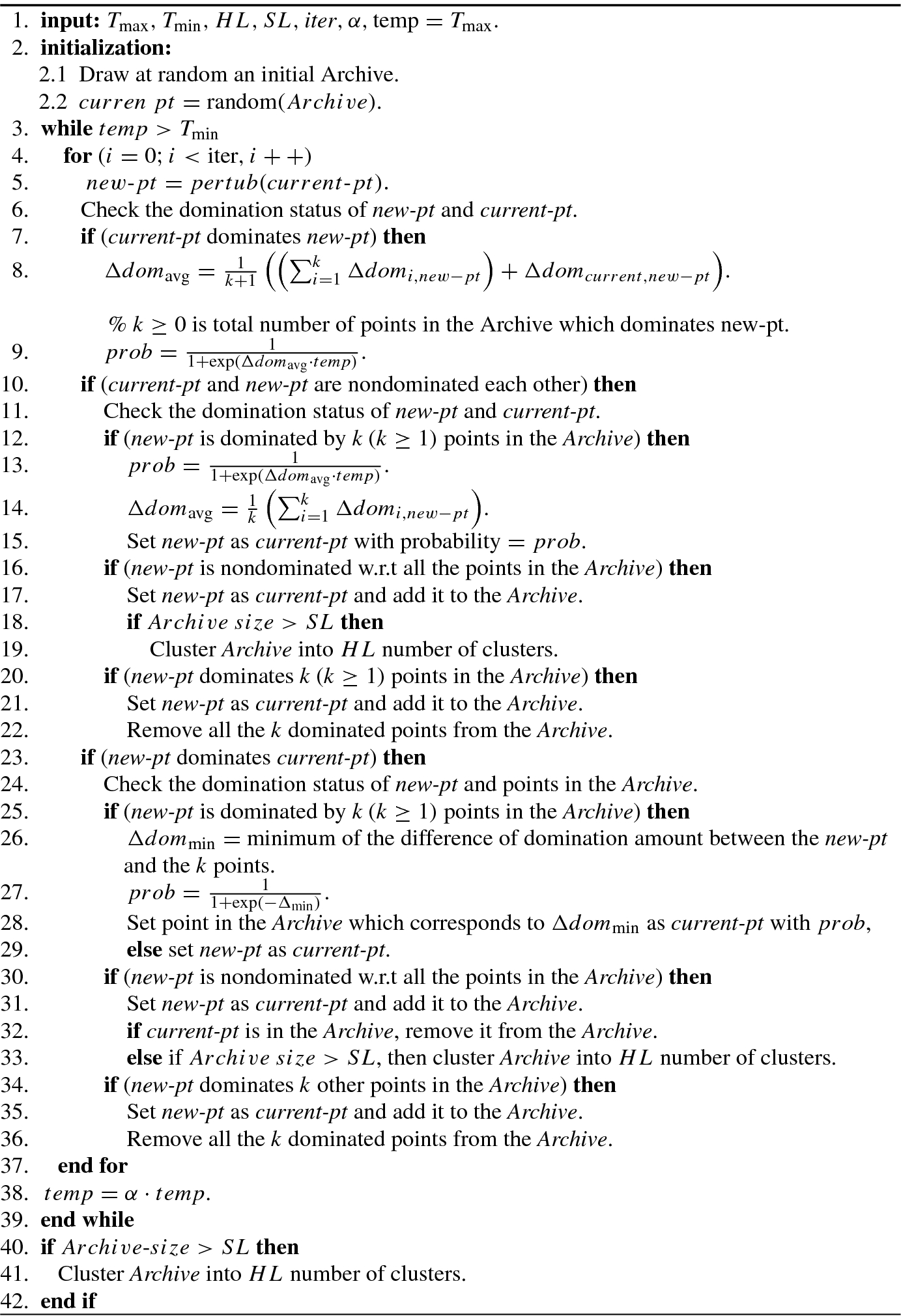

Noisy multiobjective optimization problems are also known as interval multiobjective optimization problems, since objectives f

i(x, c

i) contaminated by noise vector c

i can be reformulated, in interval-value form, as ![$$f_i(\mathbf {x},{\mathbf {c}}_i)=[ \underline {f}_i (\mathbf {x},{\mathbf {c}}_i),\overline {f}_i(\mathbf {x},{\mathbf {c}}_i)]$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq38.png) .

.

9.4.1 Pareto Concepts for Noisy Multiobjective Optimization

- 1.

Noise, also referred to as aleatory uncertainty. Noise is an inherent property of the system modeled (or is introduced into the model to simulate this behavior) and therefore cannot be reduced. By Oberkampf et al. [117], aleatory uncertainty is defined as the “inherent variation associated with the physical system or the environment under consideration.”

- 2.

Imprecision, also known as epistemic uncertainty, describes not uncertainty due to system variance but the uncertainty of the outcome due to “any lack of knowledge or information in any phase or activity of the modeling process” [117].

Decision vector

corresponding to different objectives is no longer a fixed point, but is characterized by (x, c) with c = [c

1, …, c

d]T that is a local neighborhood of x, where

corresponding to different objectives is no longer a fixed point, but is characterized by (x, c) with c = [c

1, …, c

d]T that is a local neighborhood of x, where ![$$c_i=[ \underline {c}_i,\overline {c}_i]$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq40.png) is an interval with lower limits

is an interval with lower limits  and upper limit

and upper limit  for i = 1, …, d.

for i = 1, …, d.Objectives f i is no longer a fixed value f i(x), but is an interval of objective, denoted by

![$$f_i(\mathbf {x},\mathbf {c})=[ \underline {f}_i(\mathbf { x}, \mathbf {c}),\overline {f}_i(\mathbf {x},\mathbf {c})]$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq43.png) .

.

![$$\displaystyle \begin{aligned} &{\mathrm{max/min}}\ \big\{\mathbf{f}=[f_1 (\mathbf{x},{\mathbf{c}}_1),\ldots ,f_m (\mathbf{x},{\mathbf{c}}_m)]^T\big\} ,{} \end{aligned} $$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_Equ89.png)

![$$\displaystyle \begin{aligned} &\text{subject to}~\,{\mathbf{c}}_i=[c_{i1},\ldots ,c_{il}]^T,\ c_{ij}=[\underline{c}_{ij},\overline{c}_{ij}],\quad \Big\{ \begin{aligned} &i=1,\ldots ,m,\\ &j=1,\ldots ,l, \end{aligned} \end{aligned} $$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_Equ90.png)

![$$f_i(\mathbf {x},{\mathbf {c}}_i)=[ \underline {f}_i(\mathbf {x},{\mathbf {c}}_i), \overline {f}_i(\mathbf {x},{\mathbf {c}}_i)]$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq44.png) is the ith objective function with interval

is the ith objective function with interval ![$$[ \underline {f}_i,\overline {f}_i],\, i=1,\ldots ,m$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq45.png) (m is the number of objectives, and m ≥ 3); c

i is a fixed interval vector independent of the decision vector x (namely, c

i remains unchanged along with x), while

(m is the number of objectives, and m ≥ 3); c

i is a fixed interval vector independent of the decision vector x (namely, c

i remains unchanged along with x), while ![$$c_{ij}=[ \underline {c}_{ij}, \overline {c}_{ij}]$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq46.png) is the jth interval parameter component of c

i.

is the jth interval parameter component of c

i.

For any two solutions x 1 and x 2 ∈ X of problem (9.4.1), the corresponding ith objectives are f i(x 1, c i) and f i(x 2, c i), i = 1, …, m, respectively.

![$$\mathbf {f}({\mathbf {x}}_1)=[ \underline {\mathbf {f}}({\mathbf {x}}_1),\overline {\mathbf {f}}({\mathbf {x}}_1)]$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq47.png) and

and ![$$\mathbf {f}({\mathbf {x}}_2)=[ \underline {\mathbf {f}}({\mathbf {x}}_2),\overline {\mathbf {f}}({\mathbf {x}}_2)]$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq48.png) . Their interval order relations are defined as

. Their interval order relations are defined as

In the absence of other factors (e.g., preference for certain objectives, or for a particular region of the trade-off surface), the task of an evolutionary multiobjective optimization (EMO) algorithm is to provide as good an approximation as the true Pareto front. To compare two evolutionary multiobjective optimization algorithms, we need to compare the nondominated sets they produce.

Since an interval is also a set consisting of the components larger than its lower limit and smaller than its upper limit, x 1 = (x, c 1) and x 2 = (x, c 2) can be regarded as two components in sets A and B, respectively. Hence, the decision solutions x 1 and x 2 are not two fixed points but two approximation sets, denoted as A and B, respectively.

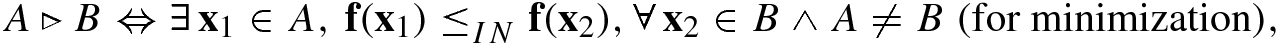

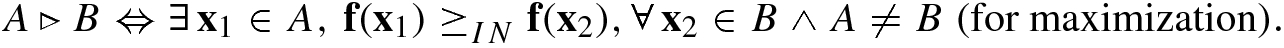

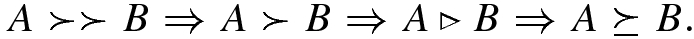

Different from Pareto concepts defined by decision vectors in (precise) multiobjective optimization, the Pareto concepts in noisy (or imprecise) multiobjective optimization are called the Pareto concepts for approximation sets due to concept definitions for approximation sets.

To evaluate approximations to the true Pareto front, Hansen and Jaszkiewicz [64] define a number of outperformance relations that express the relationship between two sets of internally nondominated objective vectors, A and B. The outperformance is habitually called the dominance.

From the above definition of the relation ⊲, one can conclude that A ≽ B ⇒ A⊲B ∨ A = B. In other words, if A weakly dominates B, then either A is better than B or they are equal.

Relation comparison between objective vectors and approximation sets

Relation | Objective vectors | Approximation sets | ||

|---|---|---|---|---|

Weakly dominates | x ≽x ′ | x is not worse than x ′ | A ≽ B | Every f(x 2) ∈ B is weakly dominated by at least one f(x 1) in A |

Dominates | x ≻x ′ | x is not worse than x ′ in all objectives and better in at least one objective | A ≻ B | Every f(x 2) ∈ B is dominated by at least one f(x 1) in A |

Strictly dominates | x ≻≻x ′ | x is better than x ′ in all objectives | A ≻≻ B | Every f(x 2) ∈ B is strictly dominated by at least one f(x 1) |

Better | A⊲B | Every f(x 2) ∈ B is weakly dominated by at least one f(x 1) and A ≠ B | ||

Incomparable | x∥x ′ | x⋡x ′ ∧x ′ ⋡x | A∥B | A⋡B ∧ B⋡A |

A dominates B: the corresponding probabilities are P(A ≻ B) = 1, P(A ≺ B) = 0, and P(A ≡ B) = 0.

A is dominated by B: the corresponding probabilities are P(A ≺ B) = 1, P(A ≻ B) = 0, and P(A ≡ B) = 0.

A and B are nondominated each other: the corresponding probabilities are P(A ≻ B) = 0, P(A ≺ B) = 0, and P(A ≡ B) = 1.

The fitness of an individual in one population refers to as the direct competition (capability) with some individual(s) from another population. Hence, the fitness plays a crucial role in evaluating individuals in evolutionary process. When considering two fitness values A and B with multiple objectives, under the case without noise, there are three possible outcomes from comparing the two fitness values.

In noisy multiobjective optimization, in addition to the convergence, diversity, and spread of a Pareto front, there are two indicators: hypervolume and imprecision [96]. For convenience, we focus on the multiobjective maximization hereafter.

- 1.

The distance of the obtained nondominated front to the Pareto-optimal front should be minimized.

- 2.

A good (in most cases uniform) distribution of the solutions found—in objective space—is desirable.

- 3.

The extent of the obtained nondominated front should be maximized, i.e., for each objective, a wide range of values should be present.

The performance metrics or indicators play an important role in evaluating noisy multiobjective optimization algorithms [53, 89, 173].

and

and  are the worst-case and the best-case hypervolume, respectively.

are the worst-case and the best-case hypervolume, respectively.The smaller the value of the imprecision, the smaller the uncertainty of the front.

9.4.2 Performance Metrics for Approximation Sets

The performance metrics or indicators play an important role in noisy multiobjective optimization.

and f

i denotes the ith objective functions with and without the additive noise, respectively. σ

2 is represented as a percentage of

and f

i denotes the ith objective functions with and without the additive noise, respectively. σ

2 is represented as a percentage of  , where

, where  is the maximum of the ith objective in true Pareto front.

is the maximum of the ith objective in true Pareto front.- 1.Proximity indicator: The metric of generational distance (GD) gives a good indication of the gap between the evolved Pareto front PF known and the true Pareto front PF true, and is defined as

(9.4.24)

(9.4.24)where

is the number of members in PF

known, d

i is the Euclidean distance (in objective space) between the member i of PF

known and its nearest member of PF

true. Intuitively, a low value of GD is desirable because it reflects a small deviation between the evolved and the true Pareto front. However, the metric of GD gives no information about the diversity of the algorithm under evaluation.

is the number of members in PF

known, d

i is the Euclidean distance (in objective space) between the member i of PF

known and its nearest member of PF

true. Intuitively, a low value of GD is desirable because it reflects a small deviation between the evolved and the true Pareto front. However, the metric of GD gives no information about the diversity of the algorithm under evaluation. - 2.Diversity indicator: To evaluate the diversity of an algorithm, the following modified maximum spread is used as the diversity indicator:

![$$\displaystyle \begin{aligned} \mathrm{MS}=\sqrt{\frac 1m\sum_{i=1}^m =\left [\big (\min \{f_i^{\max},F_i^{\max}\}-\min\{f_i^{\min},F_i^{\min}\}\big )/(F_i^{\max}-F_i^{\min})\right ]^2}, \end{aligned} $$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_Equ113.png) (9.4.25)

(9.4.25)where m is the number of objectives,

and

and  are, respectively, the maximum and minimum of the ith objective in PF

known; and

are, respectively, the maximum and minimum of the ith objective in PF

known; and  and

and  are the maximum and minimum of the ith objective in PF

true, respectively. This modified metric takes into account the proximity to FP

true.

are the maximum and minimum of the ith objective in PF

true, respectively. This modified metric takes into account the proximity to FP

true. - 3.Distribution indicator: To evaluate how evenly the nondominated solutions are distributed along the discovered Pareto front, the modified metric of spacing is defined as

(9.4.26)

(9.4.26)where

is the average Euclidean distance between all members of PF

known and their nearest members of PF

true.

is the average Euclidean distance between all members of PF

known and their nearest members of PF

true.

9.5 Multiobjective Simulated Annealing

Metropolis algorithm, proposed by Metropolis et al. in 1953 [100], is a simple algorithm that can be used to provide an efficient simulation of a collection of atoms in equilibrium at a given temperature. This algorithm was extended by Hastings in 1970 [65] to the more general case, and hence is also called Metropolis-Hastings algorithm. The Metropolis algorithm is the earliest simulated annealing algorithm that is widely used for solving multiobjective combinatorial optimization problems.

9.5.1 Principle of Simulated Annealing

The idea of simulated annealing originates from thermodynamics and metallurgy [153]: when molten iron is cooled slowly enough it tends to solidify in a structure of minimal energy. This annealing process is mimicked by a local search strategy; at the start, almost any move is accepted, which allows one to explore the solution space. Then, the temperature is gradually decreased such that one becomes more and more selective in accepting new solutions. By the end, only improving moves are accepted in practice.

- 1.

Exact procedures;

- 2.

Specialized heuristic procedures;

- 3.

Metaheuristic procedures.

The main disadvantage of exact algorithms is their high computational complexity and inflexibility.

A metaheuristic can be defined as an iterative generation process which guides a subordinate heuristic by combining intelligently different concepts for exploring and exploiting the search space [118]. Metaheuristic is divided into two categories: single-solution metaheuristic and population metaheuristic [51].

Single-solution metaheuristic considers a single solution (and search trajectory) at a time. Its typical examples include simulated annealing (SA), tabu search (TS), etc. Population metaheuristic evolves concurrently a population of solutions rather than a single solution. Most evolutionary algorithms are based on population metaheuristic.

The divide-and-conquer strategy divides the problem into subproblems of manageable size, then solves the subproblems. The solutions to the subproblems must be patched back together, and the subproblems must be naturally disjoint, while the division made must be appropriate, so that errors made in patching do not offset the gains obtained in applying more powerful methods to the subproblems.

Iterative improvement starts with the system in a known configuration, then a standard rearrangement operation is applied to all parts of the system in turn, until discovering a rearranged configuration that improves the cost function. The rearranged configuration then becomes a new configuration of the system, and the process is continued until no further improvements can be found. Iterative improvement makes a search in this coordinate space for rearrangement steps which lead to downhill. Since this search usually gets stuck in a local but not a global optimum, it is customary to carry out the process several times, starting from different randomly generated configurations, and save the best result.

By metaheuristic procedures, it means that they define only a “skeleton” of the optimization procedure that has to be customized for particular applications. The earliest metaheuristic method is simulated annealing [100]. Other metaheuristic methods include tabu search [52], genetic algorithms [55], and so on.

The goal of multiple-objective metaheuristic procedures is to find a sample of feasible solutions that is a good approximation to the efficient solutions set.

In physically appealing, particles are free to move around at high temperatures, while as the temperature is lowered they are increasingly confined due to the high energy cost of movement. From the point of view of optimization, the energy E(x) of the state x in physically appealing is regarded as the function to be minimized, and by introducing a parameter T, the computational temperature is lowered throughout the simulation according to an annealing schedule.

Any move leading to a negative value δE(x ′, x i) < 0 is an improving one and always be accepted (p = 1).

If δE(x ′, x i) > 0 is an positive value, then the probability to accept x ′ as a new current solution x i+1 is given by

. Clearly, the higher the difference δE, the lower the probability to accept x

′ instead of x

i.

. Clearly, the higher the difference δE, the lower the probability to accept x

′ instead of x

i.δE(x ′, x i) = 0 implies that the new state x ′ is the same level of value as the current state x, there may exist two schemes—move to the new state x ′ or stay in the current state x i. The analysis of this problem shows that the move scheme is better than the stay one. In the stay scheme, search will end on both edges of the Pareto frontier not entering the middle of the frontier. However, if the move scheme is used, then search will be continue into the middle part of the frontier, move freely between nondominated states like a random walk when the temperature is low and eventually will be distributed uniformly over the Pareto frontier as time goes to infinity [114]. This result is in accordance with p = 1 given by (9.5.1).

The Boltzmann probability factor is defined as  , where E(x) is the energy of the solution x, and k

B is Boltzmann’s constant.

, where E(x) is the energy of the solution x, and k

B is Boltzmann’s constant.

At each T the simulated appealing algorithm aims at drawing samples from the equilibrium distribution  . As T → 0, any sample from P(E(x)) will almost surely lie at the minimum of E.

. As T → 0, any sample from P(E(x)) will almost surely lie at the minimum of E.

The simulated annealing consists of two processes: first “melting” the system being optimized at a high effective temperature, then lowering the temperature by slow stages until the system “freezes” and no further changes occur. At each temperature, the simulation must proceed long enough so that the system reaches a steady state. The sequence of temperatures and the number of rearrangements of {x i} attempted to reach equilibrium at each temperature can be thought of as an annealing schedule.

Intuitively, in addition to perturbations which decrease the energy, when T is high, perturbations from x to x ′ which increase the energy are likely to be accepted and the samples can explore the state space. However, as T is reduced, only perturbations leading to small increases in E are accepted, so that only limited exploration is possible as the system settles on (hopefully) the global minimum.

- 1.Decision Rule: In this rule, when moving from a current solution (or state) x i to the new solution x ′, the cost criterion is defined as

(9.5.2)

(9.5.2) - 2.

Neighborhood V (x) is defined as a set of feasible solution close to x such that any solution satisfying the constraints of MOCO problems, D = {x : x ∈ LD, x ∈ B n} and LD = {x : Ax = b}, can be obtained after a finite number of moves.

- 3.Typical parameters: Some simulated annealing-typical parameters must be fixed as follows:

The initial temperature parameter T 0 or alternatively an initial acceptance probability p 0;

The cooling factor α (α < 1) and the temperature length step N step in the cooling schedule.

The stopping rule(s): final temperature T stop and/or the maximum number of iterations without improvement N stop.

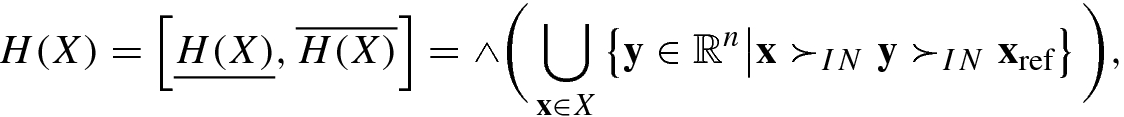

Algorithm 9.4 shows a single-objective simulated annealing algorithm.

- 1.

Annealing differs from iterative improvement in that the procedure need not get stuck since transitions out of a local optimum are always possible at nonzero temperature.

- 2.

Annealing is an adaptive form of the divide-and-conquer approach. Gross features of the eventual state of the system appear at higher temperatures, while fine details develop at lower temperatures.

9.5.2 Multiobjective Simulated Annealing Algorithm

the concept of neighborhood;

acceptance of new solutions with some probability;

dependence of the probability on a parameter called the temperature; and

the scheme of the temperature changes.

As comparison with single-objective simulated annealing, population-based simulated annealing (PSA) uses also the following ideas:

- 1.

Apply the concept of a sample (population) of interacting solutions from genetic algorithms [55] at each iteration in simulated annealing. The solutions are called generate solutions.

- 2.

In order to assure dispersion of the generated solutions over the whole set of efficient solutions, one must control the objective weights used in the multiple objective rules for acceptance probability in order to increase or decrease the probability of improving values of the particular objectives.

If z k(x ′) ≤ z k(x n), ∀ k = 1, …, K then the move from x i to x ′ is an improvement with respect to all the objective δz k(x ′, x n) = z k(x ′) − z k(x n) ≤ 0, ∀k = 1, …, K. Therefore, x ′ is always accepted (p = 1).

An improvement and a deterioration can be simultaneously observed on different cost criteria δz k < 0 and

. The first crucial point is how to define the acceptance probability p.

. The first crucial point is how to define the acceptance probability p.- When all cost criteria are deteriorated: ∀k, δz k ≥ 0 at least one strict inequality, a probability p to accept x ′ instead of x i must be calculated. LetFor the computation of the probability p, the second crucial point is how to compute the “distance” between z(x ′) and z(x i).

![$$\displaystyle \begin{aligned} \mathbf{z}({\mathbf{x}}^{\prime})=[z_1({\mathbf{x}}^{\prime}),\ldots ,z_K({\mathbf{x}}^{\prime})]^T\quad \text{and}\quad \mathbf{z}({\mathbf{x}}_i)=[z_1({\mathbf{x}}_i),\ldots ,z_K({\mathbf{x}}_i)]^T. \end{aligned} $$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_Equ117.png) (9.5.3)

(9.5.3)

To overcome the above two difficulties, Ulungu et al. [153] proposed a criterion scalarizing approach in 1999.

- Weighed Chebyshev norm L ∞:where

(9.5.12)

(9.5.12) are the cost (criterion) values of the ideal point

are the cost (criterion) values of the ideal point  .

.

It is easily shown [153] that the global acceptance probability p in (9.5.8) is just a most intuitive scalarizing function, i.e., p = t(Π, λ).

Searching precision: Because of problem complexity of multiobjective optimization, the algorithm finds hardly the Pareto-optimal solutions, and hence it must find the possible near solutions to the optimal solutions set.

Searching-time: The algorithm must find the optimal set efficiently during searching-time.

Uniform probability distribution over the optimal set: The found solutions must be widely spread, or uniformly distributed over the real Pareto-optimal set rather than converging to one point because every solution is important in multiobjective optimization.

Information about Pareto frontier: The algorithm must give as much information as possible about the Pareto frontier.

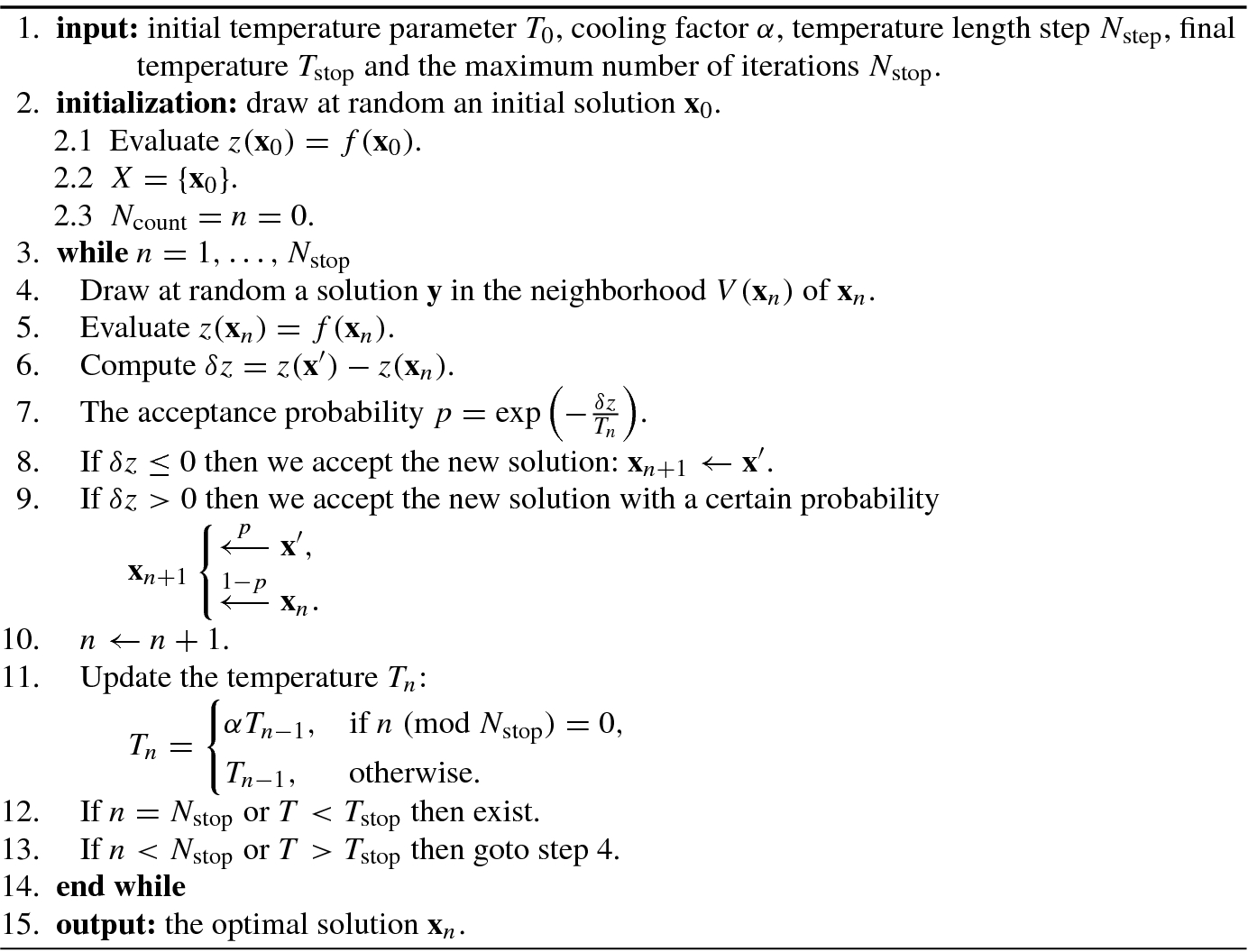

A multiobjective simulated annealing algorithm is shown in Algorithm 9.5.

- 1.

By using the concepts of Pareto optimality and domination, high searching precision can be achieved by simulated annealing.

- 2.

The main drawback of simulated annealing is searching-time, as it is generally known that simulated annealing takes long time to find the optimum.

- 3.

An interesting advantage of simulated annealing is its uniform probability distribution property as it is mathematically proved [50, 104] that it can find each of the global optima with the same probability in a scalar finite-state problem.

- 4.

For multiobjective optimization, as all the Pareto solutions have different cost vectors that have a trade-off relationship, a decision maker must select a proper solution from the found Pareto solution set or sometimes by interpolating the found solutions.

Therefore, in order to apply simulated annealing in multiobjective optimization, one must reduce searching-time and effectively search Pareto optimal solutions. It should be noted that any solution in the set PE of Pareto-optimal solutions should not be dominated by other(s), otherwise any dominated solution should be removed from PE. In this sense, Pareto solution set should be the best nondominated set.

9.5.3 Archived Multiobjective Simulated Annealing

In multiobjective simulated annealing (MOSA) algorithm developed by Smith et al. [145], the acceptance of a new solution x is determined by its energy function. If the true Pareto front is available, then the energy of a particular solution x is calculated as the total energy of all solutions that dominates x. In practical applications, as the true Pareto front is not available all the time, one must estimate first the Pareto front F ′ which is the set of mutually nondominating solutions found thus far in the process. Then, the energy of the current solution x is the total energy of nondominating solutions. These nondominating solutions are called the archival nondominating solutions or nondominating solutions in Archive.

In order to estimate the energy of the Pareto front F ′, the number of archival nondominated solutions in the Pareto front should be taken into consideration in MOSA. But, this is not done in MOSA.

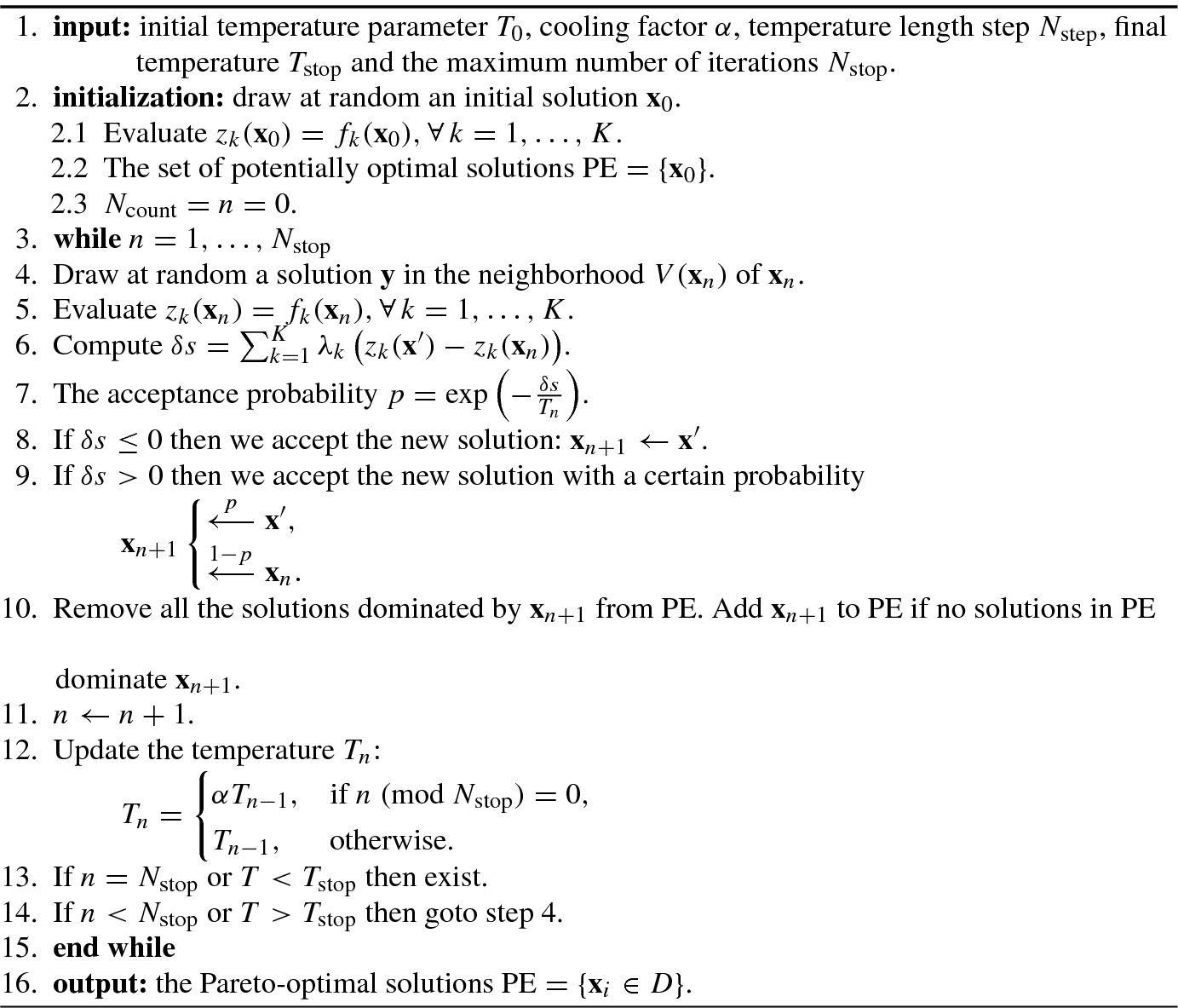

By incorporating the nondominating solutions in the Archive, one can determine the acceptance of a new solution. Such an MOSA is known as archived multiobjective simulated annealing (AMOSA), which was proposed by Bandyopadhyay et al. in 2008 [7], see Algorithm 9.6.

HL: The maximum size of the Archive on termination. This set is equal to the maximum number of nondominated solutions required by the user;

SL: The maximum size to which the Archive may be filled before clustering is used to reduce its size to HL;

: Maximum (initial) temperature,

: Maximum (initial) temperature,  : Minimal (final) temperature;

: Minimal (final) temperature;iter: Number of iterations at each temperature;

α: The cooling rate in SA.

![$$\bar {\mathbf {x}}^*=[\bar x_1^*,\ldots ,\bar x_n^*]^T$$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_IEq70.png) denote the decision variable vector that simultaneously maximizes the objective values

denote the decision variable vector that simultaneously maximizes the objective values  , while satisfying the constraints, if any. In maximization problems, a solution

, while satisfying the constraints, if any. In maximization problems, a solution  is said to dominate

is said to dominate  if and only if

if and only if

One of the points, called current-pt, is randomly selected from Archive as the initial solution at temperature  . The current-pt is perturbed to generate a new solution called new-pt. The domination status of new-pt is checked with respect to the current-pt and solutions in Archive.

. The current-pt is perturbed to generate a new solution called new-pt. The domination status of new-pt is checked with respect to the current-pt and solutions in Archive.

Depending on the domination status between current-pt and new-pt, the new-pt is selected as the current-pt with different probabilities. This process constitutes the core of Algorithm 9.6, see Step 4 to Step 37.

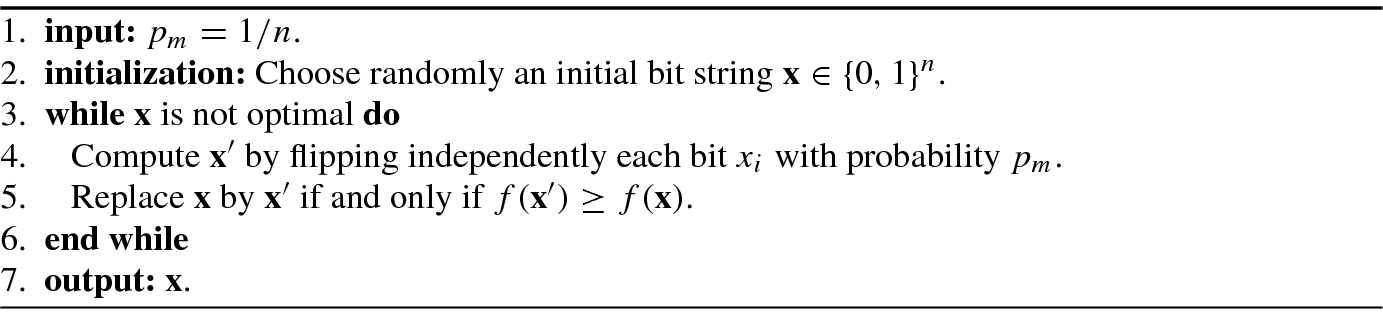

9.6 Genetic Algorithm

A genetic algorithm (GA) is a metaheuristic algorithm inspired by the process of natural selection. Genetic algorithms are commonly used to generate high-quality solutions to optimization and search problems by relying on bio-inspired operators such as mutation, crossover, and selection.

In a genetic algorithm, a population of candidate solutions (called individuals, creatures, or phenotypes) to an optimization problem is evolved toward better solutions. Each candidate solution has a set of properties (its chromosomes or genotype) which can be mutated and altered; traditionally, solutions are represented in binary as strings of 0s and 1s, but other encodings are also possible.

The evolution is an iterative process: (a) It usually starts from a population of randomly generated individuals. The population in each iteration is known as a generation. (b) In each generation, the fitness of every individual in the population is evaluated. The fitness is usually the value of the objective function in the optimization problem being solved. (c) The fitter individuals are stochastically selected from the current population, and each individual’s genome is modified (recombined and possibly randomly mutated) to form a new generation. (d) This new generation of candidate solutions is then used in the next iteration of the algorithm. (e) The algorithm terminates when either a maximum number of generations has been produced, or a satisfactory fitness level has been reached for the population.

9.6.1 Basic Genetic Algorithm Operations

The genetic algorithm consists of encoded chromosome, fitness function, reproduction, crossover, and mutation operations generally.

A typical genetic algorithm requires three important concepts:

a genetic representation of the solution domain,

a fitness function to evaluate the solution domain, and

a notion of population. Unlike traditional search methods, genetic algorithms rely on a population of candidate solutions.

A genetic algorithm (GA) is a search technique used for finding true or approximate solutions to optimization and search problems. GAs are a particular class of evolutionary algorithms (EAs) that use inheritance, mutation, selection, and crossover (also called recombination) inspired by evolutionary biology.

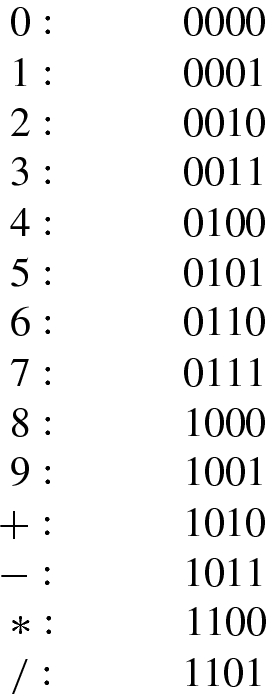

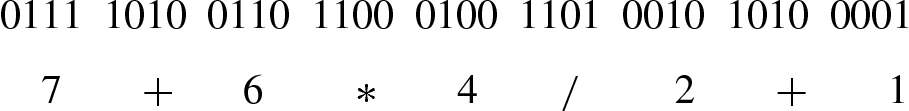

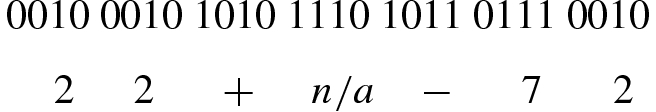

GAs encode the solutions (or decision variables) of a search problem into finite-length strings of alphabets of certain cardinality. Candidate solutions are called individuals, creatures, or phenotypes. An abstract representation of individuals is called chromosomes, the genotype or the genome, the alphabets are known as genes and the values of genes are referred to as alleles. In contrast to traditional optimization techniques, GAs work with coding of parameters, rather than the parameters themselves.

One can think of a population of individuals as one “searcher” sent into the optimization phase space. Each searcher is defined by his genes, namely his position inside the phase space is coded in his genes. Every searcher has the duty to find a value of the quality of his position in the phase space.

To evolve good solutions and to implement natural selection, a measure is necessary for distinguishing good solutions from bad solutions. This measure is called fitness.

Once the genetic representation and the fitness function are defined, at the beginning of a run of a genetic algorithm a large population of random chromosomes is created. Each decoded chromosome will represent a different solution to the problem at hand.

When two organisms mate they share their genes. The resultant offspring may end up having half the genes from one parent and half from the other. This process is called recombination. Very occasionally a gene may be mutated.

Genetic algorithms are a way of solving problems by mimicking the same processes mother nature uses. They use the same combination of selection, recombination (i.e., crossover), and mutation to evolve a solution to a problem.

Each chromosome is made up of a sequence of genes from certain alphabet which can consist of binary digits (0 and 1), floating-point numbers, integers, symbols (i.e., A, B, C, D), etc.

This shows all the different genes required to encode the problem as described. The possible genes 1110, 1111 will remain unused and will be ignored by the algorithm if encountered.

To evolve good solutions and to implement natural selection, we need a measure for distinguishing good solutions from bad solutions. In essence, the fitness measure must determine a candidate solution’s relative fitness, which will subsequently be used by the GA to guide the evolution of good solutions. This is one of the characteristics of the GA: it only uses the fitness of individuals to get the relevant information for the next search step.

To assign fitness, Hajela and Lin [61] proposed a weighed-sum method: Each objective is assigned a weight w i ∈ (0, 1) such that ∑iw i = 1, and the scalar fitness value is then calculated by summing up the weighted objective values w if i(x). To search multiple solutions in parallel, the weights are not fixed but coded in the genotype so that the diversity of the weight combinations is promoted by phenotypic fitness sharing [61, 169]. As a consequence, the fitness assignment evolves the solutions and weight combinations simultaneously.

Individuals are selected through a fitness-based process, where fitter individuals with lower inferior value are typically more likely to be selected. On the one hand, excellent individuals must be reserved to avoid too random search and low efficiency. On the other hand, these high-fitness individuals are not expected to over breed, avoiding the premature convergence of the algorithm. For simplicity and efficiency, an elitist selection strategy is employed. First, the individuals in the population p(t) are sorted from small to large according to the inferior value. Then the former 1∕10 of the sorted individuals are directly selected into the crossover pool. The remaining 9∕10 of individuals are gained by random competitions of all individuals in the population.

Crossover is a unique feature of the originality of GA in evolutionary algorithms. Genetic crossover is a process of genetic recombination that mimics sexual reproduction in nature. Its role is to inherit the original good genes to the next generation of individuals and to generate new individuals with more complex genetic structures and higher fitness value.

is the maximum inferior value, and r

avg is the average inferior value of the population.

is the maximum inferior value, and r

avg is the average inferior value of the population.

It is seen from Eq. (9.6.1) for crossover probability that when the individual fitness value of the participating crossover is lower than the average fitness value of the population, i.e., the individual is a poor performing individual, a large crossover probability is adopted for it. When the individual fitness value of the crossover is higher than the average fitness value, i.e., the individual has excellent performance, then a small crossover probability is used to reduce the damage of the crossover operator to the better performing individual.

When the fitness values of the offspring generated by the crossover operation are no longer better than their parents, but the global optimal solution is not reached, the GA algorithm will occur premature convergence. At this time, the introduction of the mutation operator in the GA tends to produce good results.

Mutation in the GA simulates the mutation of a certain gene on the chromosome in the evolution of natural organisms in order to change the structure and physical properties of the chromosome.

On one hand, the mutation operator can restore the lost genetic information during population evolution to maintain individual differences in the population and prevent premature convergence. On the other hand, when the population size is large, for one to introduce moderate mutation after crossover operation, one can also improve the local search efficiency of the GA algorithm, thereby increasing the diversity of the population to reach the global domain of the search.

![$$\displaystyle \begin{aligned} P_m = P_{m0}[1-2(r_{\max}-r_{\min})/3(\mathrm{PopSize}-1)], \end{aligned} $$](../images/492994_1_En_9_Chapter/492994_1_En_9_Chapter_TeX_Equ132.png)

and

and  are, respectively, the maximum and minimum inferior value of the individual, PopSize is the number of individuals, and P

m0 is the mutation probability of the program.

are, respectively, the maximum and minimum inferior value of the individual, PopSize is the number of individuals, and P

m0 is the mutation probability of the program.

When the individual fitness value is lower than the average fitness value of the population, namely the individual has a poor performance, one adopts a large crossover probability and mutation probability.

If the individual fitness value is higher than the average fitness value, i.e., the individual’s performance is excellent, then the corresponding crossover probability and mutation probability are adaptively taken according to the fitness value.

When the individual fitness value is closer to the maximum fitness value, the crossover probability and the mutation probability are smaller.

If the individual fitness is equal to the maximum fitness value, then the crossover probability and the mutation probability are zero.

Therefore, individuals with optimal fitness values will remain intact in the next generation. However, this will allow individuals with the greatest fitness to rapidly multiply in the population, leading to premature convergence of the algorithm. Hence, this adjustment method is applicable to the population in the late stage of evolution, but it is not conducive to the early stage of evolution, because the dominant individuals in the early stage of evolution are almost in a state of no change, and the dominant individuals at this time are not necessarily optimized. The global optimal solution to the problem increases the likelihood of evolution to a locally optimal solution. To overcome this problem, we can use the default crossover probability p c = 0.8 and the default mutation probability p m = 0.001 for each individual, so that the crossover probability and mutation probability of individuals with good performance in the population are no longer zero.

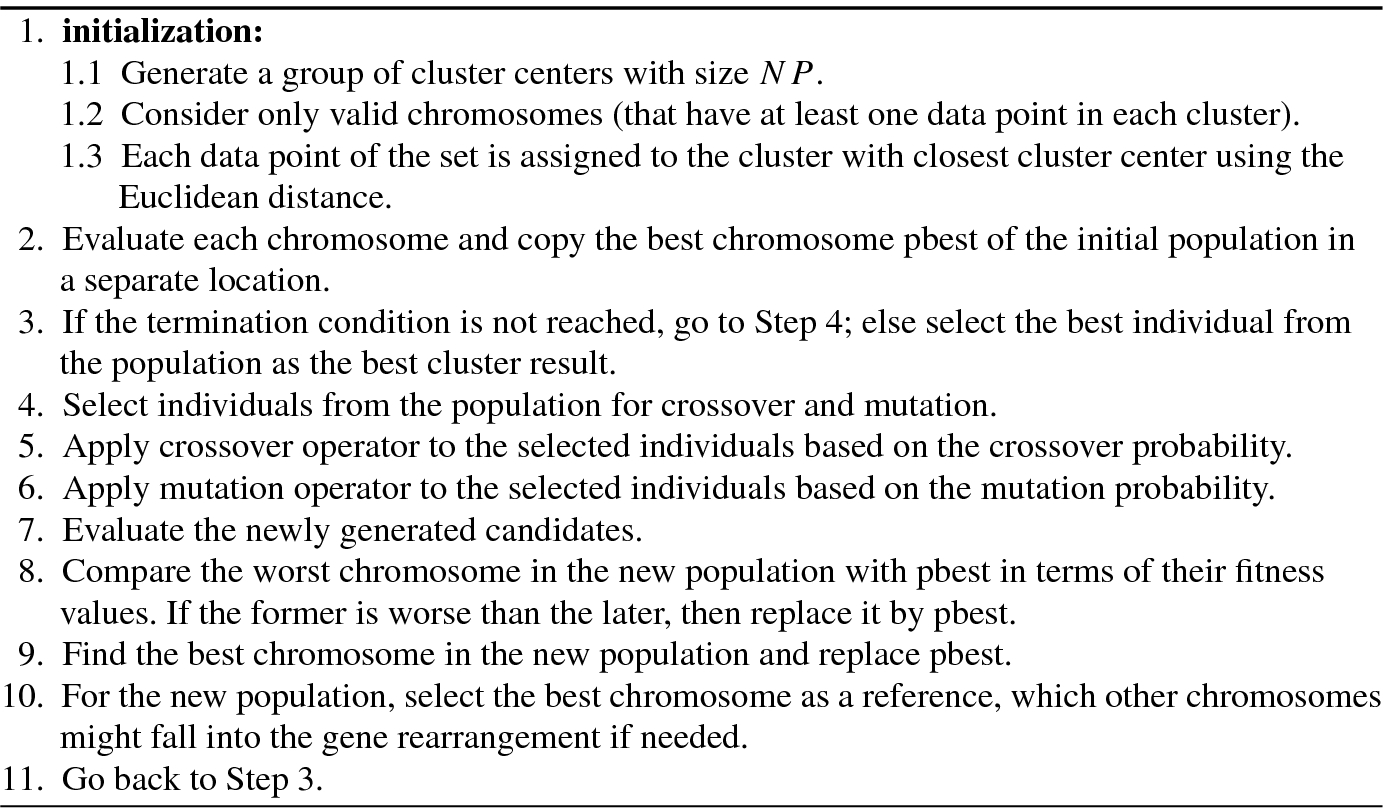

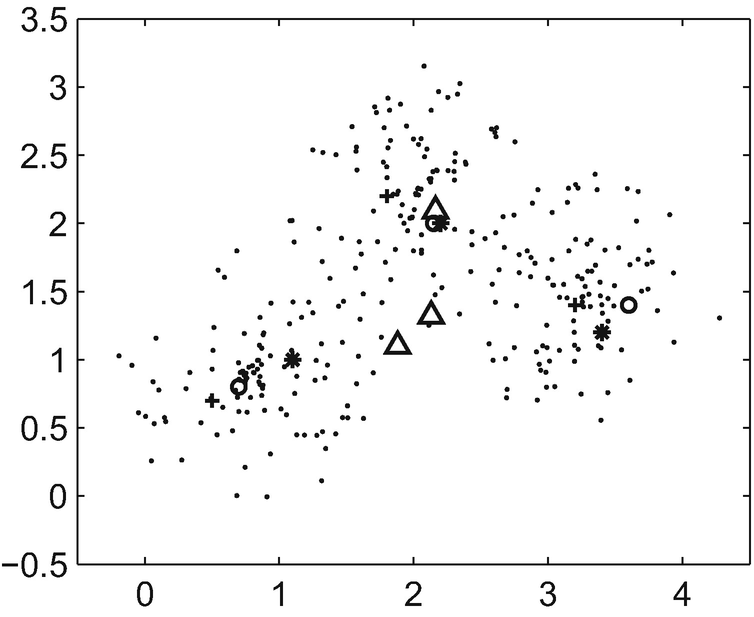

9.6.2 Genetic Algorithm with Gene Rearrangement Clustering

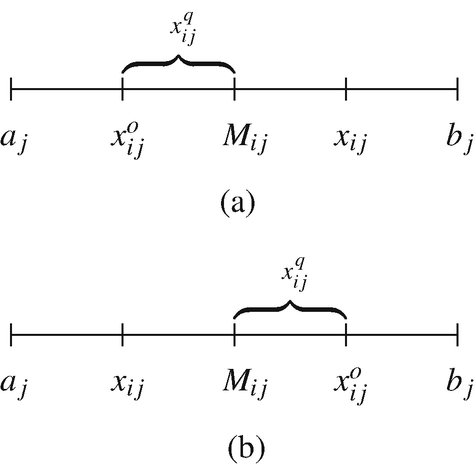

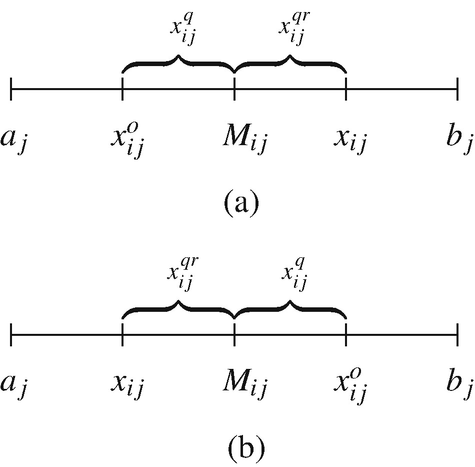

Genetic clustering algorithms have a certain degree of degeneracy in the evolution process. The degeneracy problem is mainly caused by the non-one-to-one correspondence between the solution space of the clustering problem and the genetic individual coding in the evolution process [127].