4.1 Introduction

- 1.

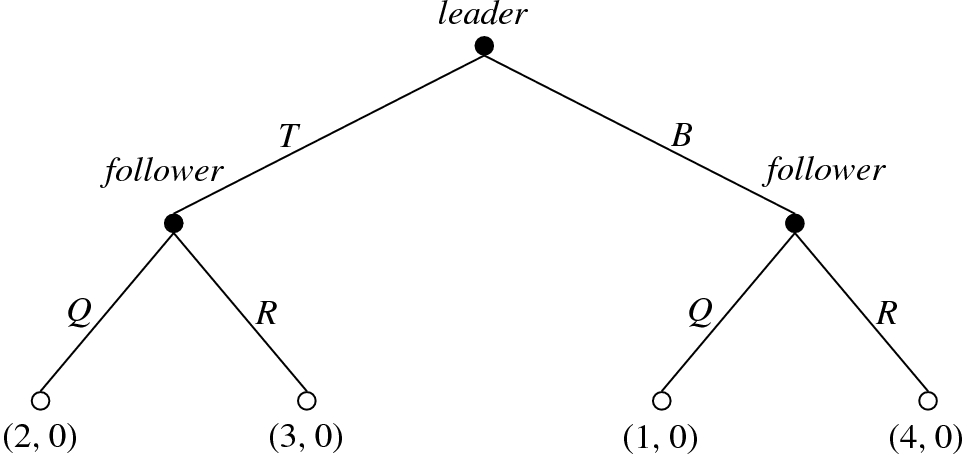

in the first stage: one player, henceforth called the leader, chooses an action x in his action set X;

- 2.

in the second stage: one player, henceforth called the follower, observes the action x chosen by the leader in the first stage and then chooses an action y in his action set Y ;

- 3.

after the two-stage interaction: the leader receives L(x, y) where

is the leader’s payoff function, and the follower receives F(x, y) where

is the leader’s payoff function, and the follower receives F(x, y) where  is the follower’s payoff function.

is the follower’s payoff function.

Assume that players aim to minimize their payoff functions, as is traditional in the optimization literature, where the payoff functions embed players’ costs. However, since $\max f(\cdot) = -\min\{-f(\cdot)\}$, we could equivalently assume that players maximize their payoff functions (as is usual in economic contexts, where payoff functions represent players’ profits). At the moment, we set no assumptions on the structure of the action sets X and Y: they could be of finite or infinite cardinality, subsets of finite or infinite dimensional spaces, strict subsets or whole spaces, etc. Their nature will be specified each time in the sequel of the chapter.

The set-valued map $M\colon X\rightrightarrows Y$ that assigns to any action x of the leader the set $M(x)=\operatorname*{Arg\,min}_{y\in Y}F(x,y)$ of the follower’s optimal responses is the follower’s best reply correspondence. For the sake of brevity, it will also be called the argmin map.

The leader, if he can foresee the optimal response $y(x)\in M(x)$ chosen for any action x by the follower, will pick an action in the set $\operatorname*{Arg\,min}_{x\in X}L(x,y(x))$ according to such a forecast.

Given the informative structure of the game, predicting the follower’s optimal responses (or, equivalently, the response function y(⋅) of the follower) is typically a hard task for the leader, unless some additional information is available. Therefore, depending on the additional information of the leader about the single-valuedness of M or, when M is not single-valued, about how the follower chooses an optimal response in the set M(x) for any x ∈ X, one can associate to the Stackelberg game Γ different kinds of mathematical problems.

In Sect. 4.2 such mathematical problems and the related solution concepts are presented, together with illustrative examples and their possible roles in bilevel optimization.

In Sect. 4.3 we describe and discuss the main crucial issues arising in the just mentioned problems, focusing on the following ones: existence of solutions, “variational” stability, well-posedness, approximation, numerical approximation and selection of solutions. The crucial issues negatively answered are illustrated by counterexamples.

Two regularization approaches are then considered:

- regularizing the follower’s optimal reaction sets (Sect. 4.4);
- regularizing the follower’s payoff function (Sect. 4.5).

In Sect. 4.5 we investigate the second approach, which makes it possible both to overcome the non-uniqueness of the follower’s optimal response and to select among the solutions. To achieve these goals, we construct sequences of Stackelberg games with a unique second-stage solution which approximate in some sense the original game, by exploiting first the Tikhonov regularization and then the proximal regularization, two well-known regularization techniques in convex optimization.

A concluding section concerns various extensions of the Stackelberg game notion considered in this chapter.

4.2 Models and Solution Concepts

We will illustrate five problems and solution concepts connected to the Stackelberg game defined in the previous section. The first one appears if the follower’s best reply correspondence M is assumed to be single-valued (and the leader knows it), whereas the others concern situations where M is not single-valued. Their connections will be emphasized and illustrated by examples. In this section, we only refer to seminal papers and to the first ones on regularization and approximation methods.

4.2.1 Stackelberg Problems

Let us recall the solution concepts associated to (SP).

- The infimum value $\inf_{x\in X}L(x,m(x))$ is the Stackelberg value of (SP).
- A leader’s action $\bar x\in X$ is a Stackelberg solution to (SP) if $\bar x\in\operatorname*{Arg\,min}_{x\in X}L(x,m(x))$.
- An action profile $(\bar x,\bar y)\in X\times Y$ is a Stackelberg equilibrium of (SP) if $\bar x$ is a Stackelberg solution and $\bar y=m(\bar x)$. △

with a, b ∈ ]0, +∞[ and linear cost functions $C_i(q_i)=cq_i$ for any i ∈ {1, 2} with c ∈ ]0, a[. Hence, the optimal response of firm 2 to any quantity $q_1$ chosen by firm 1 is unique and given by $m(q_1)=\max\{0,(a-c-bq_1)/(2b)\}$, and the quantities produced at equilibrium are $\bar q_1=(a-c)/(2b)$ and $\bar q_2=(a-c)/(4b)$.
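The duopoly computation can be checked numerically. The sketch below is only illustrative: it assumes a linear inverse demand $P(Q)=\max\{0,a-bQ\}$ with $Q=q_1+q_2$ (a modelling assumption made here for the example), picks the hypothetical values a = 10, b = 1, c = 2, and writes payoffs as negative profits so that both firms minimize, in line with the convention of this chapter. Exact rational arithmetic on a grid avoids spurious ties.

```python
from fractions import Fraction

# Hypothetical parameters chosen for illustration; they satisfy 0 < c < a.
A, B, C = Fraction(10), Fraction(1), Fraction(2)


def price(total):
    # Assumed linear inverse demand, truncated at zero.
    return max(Fraction(0), A - B * total)


def leader_cost(q1, q2):
    # Negative profit of firm 1 (the leader minimizes).
    return -(q1 * price(q1 + q2) - C * q1)


def follower_cost(q1, q2):
    # Negative profit of firm 2 (the follower minimizes).
    return -(q2 * price(q1 + q2) - C * q2)


# The follower grid is twice as fine, so that the exact best reply
# (a - c - b*q1) / (2b) of every leader grid point lies on it.
Q1_GRID = [Fraction(i, 10) for i in range(0, 101)]
Q2_GRID = [Fraction(j, 20) for j in range(0, 201)]


def best_reply(q1):
    # Second stage: the follower's optimal quantity given q1.
    return min(Q2_GRID, key=lambda q2: follower_cost(q1, q2))


def stackelberg_equilibrium():
    # First stage: the leader anticipates the follower's best reply.
    q1 = min(Q1_GRID, key=lambda q: leader_cost(q, best_reply(q)))
    return q1, best_reply(q1)


equilibrium = stackelberg_equilibrium()
closed_form = ((A - C) / (2 * B), (A - C) / (4 * B))
```

With these parameters the grid search and the closed-form expressions (a − c)/(2b) and (a − c)/(4b) agree.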

In problem (GSP) the leader’s constraints depend also on the follower’s best reply function m, which makes it harder to manage than (SP) from the mathematical point of view. In the sequel of the chapter we will consider only constraints for the leader which do not depend on the actions of the follower.

From now on, in the next subsections of this section we assume that M is not single-valued.

4.2.2 Pessimistic Leader: Weak Stackelberg Problem

- The infimum value $w=\inf_{x\in X}\sup_{y\in M(x)}L(x,y)$ is the security (or pessimistic) value of (PB).
- A leader’s action $\bar x\in X$ is a weak Stackelberg (or pessimistic) solution to (PB) if $\bar x\in\operatorname*{Arg\,min}_{x\in X}\sup_{y\in M(x)}L(x,y)$, that is, $\sup_{y\in M(\bar x)}L(\bar x,y)=\inf_{x\in X}\sup_{y\in M(x)}L(x,y)$.
- An action profile $(\bar x,\bar y)\in X\times Y$ is a weak Stackelberg (or pessimistic) equilibrium of (PB) if $\bar x$ is a weak Stackelberg solution and $\bar y\in M(\bar x)$. △

For first investigations about regularization and approximation of problem (PB) see [51, 69, 71, 89].

4.2.3 Optimistic Leader: Strong Stackelberg Problem

- The infimum value $s=\inf_{x\in X}\inf_{y\in M(x)}L(x,y)$ is the optimistic value of (OB).
- A leader’s action $\bar x\in X$ is a strong Stackelberg (or optimistic) solution to (OB) if $\bar x\in\operatorname*{Arg\,min}_{x\in X}\inf_{y\in M(x)}L(x,y)$, that is, $\inf_{y\in M(\bar x)}L(\bar x,y)=\inf_{x\in X}\inf_{y\in M(x)}L(x,y)$.
- An action profile $(\bar x,\bar y)\in X\times Y$ is a strong Stackelberg (or optimistic) equilibrium of (OB) if $\bar x$ is a strong Stackelberg solution and $\bar y\in\operatorname*{Arg\,min}_{y\in M(\bar x)}L(\bar x,y)$. △

The use of terms “weak Stackelberg” and “strong Stackelberg” goes back to [20] where a concept of sequential Stackelberg equilibrium in a general dynamic games framework is introduced. For a first investigation on regularization and approximation of problem (OB) see [52].

An action profile $(\bar x,\bar y)\in X\times Y$ is a strong Stackelberg equilibrium of (OB) if and only if it is a (global) solution of the following problem: $\min_{x\in X,\,y\in M(x)}L(x,y)$, that is, $\bar x\in X$, $\bar y\in M(\bar x)$ and $L(\bar x,\bar y)=\inf_{x\in X,\,y\in M(x)}L(x,y)$. Moreover, the value $s'=\inf_{x\in X,\,y\in M(x)}L(x,y)$ coincides with s, the optimistic value of (OB), and the problem is frequently called “bilevel optimization problem” (for first results, see for example [14, 26, 85, 96, 108, 111]).

The following examples show that the pessimistic and the optimistic behaviours of the leader can lead to different solutions, values and equilibria.

![$$\displaystyle \begin{aligned} L(x,y)=-x-y\quad \text{and}\quad F(x,y)=\begin{cases} (x+7/4)y, & \mbox{if } x\in[-2,-7/4[ \\ 0, & \mbox{if } x\in [-7/4,7/4[ \\ (x-7/4)y, & \mbox{if } x\in[7/4,2]. \end{cases} \end{aligned}$$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equq.png)

![$$\displaystyle \begin{aligned} M(x)=\begin{cases} \{1\}, & \mbox{if } x\in [-2,-7/4[ \\ {} [-1,1], & \mbox{if } x\in [-7/4,7/4] \\ \{-1\}, & \mbox{if } x\in ]7/4,2]. \end{cases} \end{aligned}$$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equr.png)

![$$\displaystyle \begin{aligned} \sup_{y\in M(x)}L(x,y)=\begin{cases} -x-1, & \mbox{if } x\in[-2,-7/4[ \\ -x+1, & \mbox{if } x\in[-7/4,2], \end{cases} \end{aligned}$$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equs.png)

![$$\displaystyle \begin{aligned} \inf_{y\in M(x)}L(x,y)=\begin{cases} -x-1, & \mbox{if } x\in[-2,7/4] \\ -x+1, & \mbox{if } x\in]7/4,2], \end{cases} \end{aligned}$$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equt.png)
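The four displayed formulas can be cross-checked numerically. The following sketch is a plain grid search, not a method taken from the text; it assumes X = [−2, 2] and Y = [−1, 1], as the domains in the displays suggest, and uses exact fractions so that the thresholds ±7/4 fall on the grid.

```python
from fractions import Fraction as Fr

T = Fr(7, 4)
X_GRID = [Fr(i, 8) for i in range(-16, 17)]  # grid on the assumed X = [-2, 2]
Y_GRID = [Fr(j, 8) for j in range(-8, 9)]    # grid on the assumed Y = [-1, 1]


def L(x, y):
    # Leader's payoff from the display above.
    return -x - y


def F(x, y):
    # Follower's piecewise payoff from the display above.
    if x < -T:
        return (x + T) * y
    if x < T:
        return Fr(0)
    return (x - T) * y


def M(x):
    # Follower's best replies to x, restricted to the grid.
    best = min(F(x, y) for y in Y_GRID)
    return [y for y in Y_GRID if F(x, y) == best]


def pessimistic():
    # (security value, weak Stackelberg solution) on the grid.
    return min((max(L(x, y) for y in M(x)), x) for x in X_GRID)


def optimistic():
    # (optimistic value, strong Stackelberg solution) on the grid.
    return min((min(L(x, y) for y in M(x)), x) for x in X_GRID)
```

On this grid the pessimistic leader obtains the value −1 at x = 2, whereas the optimistic leader obtains −11/4 at x = 7/4, so the two behaviours indeed give different solutions and values.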

4.2.4 Intermediate Stackelberg Problem

Let us recall the solution concepts related to (IS$_D$).

- The infimum value $v_D=\inf_{x\in X}\int_{M(x)}L(x,y)\,dD(x)(y)$ is the intermediate value of (IS$_D$).
- A leader’s action $\bar x\in X$ is an intermediate Stackelberg solution with respect to D if $\int_{M(\bar x)}L(\bar x,y)\,dD(\bar x)(y)=v_D$.
- An action profile $(\bar x,\bar y)\in X\times Y$ is an intermediate Stackelberg equilibrium with respect to D if $\bar x$ is an intermediate Stackelberg solution with respect to D and $\bar y\in M(\bar x)$. △

Depending on the probability distribution D(x) on M(x) for any x ∈ X, problem (IS$_D$) could become (PB) or (OB). Examples on the connections among weak, strong and intermediate Stackelberg solutions are illustrated in [83], one of which is presented below for the sake of completeness.

![$$\displaystyle \begin{aligned} \operatorname*{\mbox{Arg min}}_{x\in X}\left(\alpha L(x,x^2)+(1-\alpha)L(x,-x^2)\right)=\begin{cases} \{-1\}, & \mbox{if } \alpha\in[0,{3}/{4}] \\ \{-{1}/{2(2\alpha-1)}\}, & \mbox{if }\alpha\in]{3}/{4},1]. \end{cases} \end{aligned}$$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equx.png)

![$$\displaystyle \begin{aligned} v_D=\inf_{x\in X}\left(\alpha L(x,x^2)+(1-\alpha)L(x,-x^2)\right)=\begin{cases} 2\alpha-2, & \mbox{if } \alpha\in[0,{3}/{4}] \\ -{1}/{4(2\alpha-1)}, & \mbox{if }\alpha\in]{3}/{4},1], \end{cases} \end{aligned}$$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equy.png)

4.2.5 Subgame Perfect Nash Equilibrium Problem

The equilibrium concepts presented until now consist of action profiles, that is, pairs composed of one action of the leader and one action of the follower; moreover, they are focused primarily on the leader’s perspective. Such concepts are broadly used in an engineering setting or by optimization practitioners, see for example [10, 28, 41, 81]. However, as is traditional in a game-theoretical framework, we are now interested in an equilibrium concept which takes more into account the strategic aspects of the game. The equilibrium concept naturally fitting this goal is the subgame perfect Nash equilibrium (henceforth SPNE), which represents the most widely employed solution concept in an economic setting. The SPNE solution concept was introduced by the Nobel laureate Selten in [101], who suggested that players should act according to the so-called principle of sequential rationality: “the equilibria to select are those whereby the players behave optimally from any point of the game onwards”. For the notions of strategy, subgame and SPNE in a general game-theoretical framework and for further discussion on the behavioural implications and procedures to find SPNEs see, for example, [39, Chapter 3] and [87, Chapter 7].

We first recall the notion of player’s strategy only for a two-player Stackelberg game Γ = (X, Y, L, F). In such a game, the set of leader’s strategies coincides with the set of leader’s actions X, whereas the set of follower’s strategies is the set of all the functions from X to Y, i.e. $Y^X=\{\varphi\colon X\to Y\}$.

The set of strategy profiles is $X\times Y^X$ and the definition of subgame perfect Nash equilibrium is characterized in the following way.

A strategy profile $(\bar x,\bar\varphi)\in X\times Y^X$ is an SPNE of Γ if the following conditions are satisfied:

- (SG1) for each choice x of the leader, the follower minimizes his payoff function, i.e. $\bar\varphi(x)\in M(x)$ for any $x\in X$;
- (SG2) the leader minimizes his payoff function taking into account his hierarchical advantage, i.e. $\bar x\in\operatorname*{Arg\,min}_{x\in X}L(x,\bar\varphi(x))$.

Note that the denomination “subgame perfect Nash equilibrium” is due to the following key features: first the SPNE notion is a refinement of the Nash equilibrium solution concept (introduced by the Nobel laureate Nash in [95]), secondly the restriction of an SPNE to any subgame constitutes a Nash equilibrium.

We emphasize that an SPNE consists of a strategy profile, that is, a pair composed of one strategy of the leader (or equivalently one action of the leader) and one strategy of the follower (which is a function from the action set of the leader to the action set of the follower), as illustrated in Definition 4.2.5 and differently from all the equilibrium concepts defined before, where only action profiles are involved. However, we can connect SPNEs and the equilibrium notions described above in this section, as pointed out in the following examples.

The set of SPNEs of a Stackelberg game Γ = (X, Y, L, F) where the follower’s optimal response to any choice of the leader is unique can be fully characterized in terms of the solutions to the associate problem (SP) defined in Sect. 4.2.1.

For instance, in the duopoly example of Sect. 4.2.1 the unique SPNE is $(\bar q_1,\bar m)$, where $\bar q_1$ and $\bar m$ are given by $\bar q_1=(a-c)/(2b)$ and $\bar m(q_1)=\max\{0,(a-c-bq_1)/(2b)\}$. Here $\bar q_1$ is the unique Stackelberg solution and, moreover, $(\bar q_1,\bar m(\bar q_1))$ is the unique Stackelberg equilibrium.

In the case where the follower’s best reply correspondence is not single-valued, the solutions to the associate problem (PB) as well as to the associate problem (OB) defined in Sects. 4.2.2 and 4.2.3, respectively, can be part of an SPNE.

[…] and […], where […] and […] are the functions defined by […]; […] and […], where […] and […] are the functions defined by […]; and […], since […].

Therefore, also with a view to reducing the number of SPNEs, we will take into account these latter types of SPNEs “induced” by weak or strong Stackelberg solutions.

- A strategy profile $(\bar x,\bar\varphi)\in X\times Y^X$ is an SPNE of Γ induced by a weak Stackelberg solution if $(\bar x,\bar\varphi)$ is an SPNE of Γ which satisfies: $\bar x$ is a solution to (PB) and $\bar\varphi(x)\in\operatorname*{Arg\,max}_{y\in M(x)}L(x,y)$ for any $x\in X$.
- A strategy profile $(\bar x,\bar\varphi)\in X\times Y^X$ is an SPNE of Γ induced by a strong Stackelberg solution if $(\bar x,\bar\varphi)$ is an SPNE of Γ which satisfies: $\bar x$ is a solution to (OB) and $\bar\varphi(x)\in\operatorname*{Arg\,min}_{y\in M(x)}L(x,y)$ for any $x\in X$.

△

Coming back to the second example on page 13, […] is an SPNE induced by a weak Stackelberg solution, […] is an SPNE induced by a strong Stackelberg solution, and […] and […] are SPNEs that are induced neither by a weak nor by a strong Stackelberg solution; the game has no further SPNEs.

Let us provide below an example where the SPNEs induced by weak or strong Stackelberg solutions are derived in a continuous setting, differently from the above example.

In the game with $L(x,y)=-x-y$ considered in Sect. 4.2.3, the SPNEs induced by a weak and by a strong Stackelberg solution are, respectively, $(2,\bar\varphi)$ and $(7/4,\bar\psi)$, where $\bar\varphi\colon[-2,2]\to[-1,1]$ and $\bar\psi\colon[-2,2]\to[-1,1]$ are defined respectively by

![$$\displaystyle \begin{aligned} \bar\varphi(x)=\begin{cases} 1, & \mbox{if } x\in [-2,-7/4[ \\ -1, & \mbox{if } x\in [-7/4,2] \end{cases}\quad \text{and}\quad \bar\psi(x)=\begin{cases} 1, & \mbox{if } x\in [-2,7/4] \\ -1, & \mbox{if } x\in ]7/4,2], \end{cases} \end{aligned}$$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equak.png)

Moreover, $(2,\bar\vartheta)$ is an SPNE induced neither by a weak nor by a strong Stackelberg solution, where $\bar\vartheta\colon[-2,2]\to[-1,1]$ is defined by

![$$\displaystyle \begin{aligned} \bar\vartheta(x)=\begin{cases} 1, & \mbox{if } x\in[-2,-7/4[ \\ -4x/7, & \mbox{if } x\in[-7/4,7/4[ \\ -1, & \mbox{if } x\in [7/4,2]. \end{cases} \end{aligned}$$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equal.png)
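Conditions (SG1) and (SG2) can be tested directly on the three follower strategies displayed above. The sketch below is a grid-based check under the assumption that the underlying game is the one of Sect. 4.2.3, with X = [−2, 2], Y = [−1, 1], L(x, y) = −x − y and the piecewise follower payoff F given there; the helper names (`is_spne`, `phi_bar`, `psi_bar`, `theta_bar`) are hypothetical.

```python
from fractions import Fraction as Fr

T = Fr(7, 4)
X_GRID = [Fr(i, 8) for i in range(-16, 17)]  # grid on [-2, 2]
Y_GRID = [Fr(j, 8) for j in range(-8, 9)]    # grid on [-1, 1]


def L(x, y):
    return -x - y


def F(x, y):
    if x < -T:
        return (x + T) * y
    if x < T:
        return Fr(0)
    return (x - T) * y


def phi_bar(x):
    return Fr(1) if x < -T else Fr(-1)


def psi_bar(x):
    return Fr(1) if x <= T else Fr(-1)


def theta_bar(x):
    if x < -T:
        return Fr(1)
    if x < T:
        return -4 * x / 7
    return Fr(-1)


def is_spne(x_bar, strategy):
    # (SG1): strategy(x) is feasible and optimal for the follower at every grid x.
    # F is linear in y, so its minimum over [-1, 1] is attained on the grid.
    sg1 = all(
        -1 <= strategy(x) <= 1
        and F(x, strategy(x)) == min(F(x, y) for y in Y_GRID)
        for x in X_GRID
    )
    # (SG2): x_bar minimizes the leader's payoff along the follower's strategy.
    sg2 = all(
        L(x_bar, strategy(x_bar)) <= L(x, strategy(x)) for x in X_GRID
    )
    return sg1 and sg2
```

On the grid, `is_spne(Fr(2), phi_bar)`, `is_spne(Fr(7, 4), psi_bar)` and `is_spne(Fr(2), theta_bar)` all hold, while pairing `phi_bar` with the leader action 7/4 violates (SG2).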

4.3 Crucial Issues in Stackelberg Games

- Existence: do the solutions of the problem exist under not too restrictive assumptions on the action sets and on the payoff functions (possibly discontinuous, as is not unusual in economic frameworks)?
- “Variational” stability: the data of the problem could be affected by uncertainty; hence, given a perturbation of the original problem acting on the action sets and/or on the payoff functions, does a sequence of solutions of the perturbed problems converge to a solution of the original problem? And what about the values? Such analysis, which helps to predict the “behaviour” of the game when one is not able to deal with exact equilibria, and similar analyses that compare outcomes before and after a change in exogenous parameters are known in economics as comparative statics.
- Well-posedness: does the problem have a unique solution? And does any method which constructs an “approximating sequence” automatically allow one to approach a solution?
- Approximation: how can one construct appropriate concepts of approximate solutions in order both to obviate the lack of solutions of a problem and to manage problems where infinite dimensional spaces are involved?
- Approximation via numerical methods: how can one transform the original problem into a better-behaved equivalent problem? And how can one overcome the numerical difficulties which derive from the possible non-single-valuedness of the follower’s best reply correspondence?
- Selection: if the problem has more than one solution, is it possible to construct a procedure which reduces the number of solutions or, better yet, picks just one solution? What motivations would induce the players to act according to such a procedure in order to reach the designed solution?

Now, we discuss which of the issues displayed above can be positively or negatively answered.

4.3.1 Stackelberg Problems

Problem (SP) is well-behaved regarding all the topics just summarized. In particular, by applying the maximum theorem in [6, 16], the existence of Stackelberg solutions and Stackelberg equilibria is guaranteed provided that the action sets X and Y are compact subsets of two Euclidean spaces and that the payoff functions L and F are continuous over X × Y .

- L is lower semicontinuous over X × Y,
- F is lower semicontinuous over X × Y,
- for any (x, y) ∈ X × Y and any sequence $(x_n)_n$ converging to x in X, there exists a sequence $(\tilde y_n)_n$ in Y such that $\limsup_{n\to+\infty}F(x_n,\tilde y_n)\le F(x,y)$,
- for any sequence […] such that […] and […] converges to […] in X for a selection of integers $(n_k)_k$, we have […].

If $(\bar x_n,\bar y_n)$ is a Stackelberg equilibrium of (SP)$_n$ for any $n\in\mathbb{N}$, then any convergent subsequence of the sequence $(\bar x_n,\bar y_n)_n$ in X × Y has a limit $(\bar x,\bar y)$ which is a Stackelberg equilibrium of (SP) and satisfies […].

△

- the continuous convergence of $(L_n)_n$ to L can be substituted by the following two conditions:
  - (a) for any (x, y) ∈ X × Y and any sequence $(x_n,y_n)_n$ converging to (x, y) in X × Y, we have $L(x,y)\le\liminf_{n\to+\infty}L_n(x_n,y_n)$;
  - (b) for any x ∈ X there exists a sequence $(\tilde x_n)_n$ converging to x in X such that, for any y ∈ Y and any sequence $(y_n)_n$ converging to y in Y, we have $\limsup_{n\to+\infty}L_n(\tilde x_n,y_n)\le L(x,y)$;
- whereas the continuous convergence of $(F_n)_n$ to F can be substituted by the following two conditions:
  - (c) for any (x, y) ∈ X × Y and any sequence $(x_n,y_n)_n$ converging to (x, y) in X × Y, we have $F(x,y)\le\liminf_{n\to+\infty}F_n(x_n,y_n)$;
  - (d) for any (x, y) ∈ X × Y and any sequence $(x_n)_n$ converging to x in X, there exists a sequence $(\tilde y_n)_n$ in Y such that $\limsup_{n\to+\infty}F_n(x_n,\tilde y_n)\le F(x,y)$.

△

Results on well-posedness of (SP) can be found in [68, 91], whereas in [28, Chapter 6] numerical approximation and algorithmic issues are widely investigated.

Concerning problem (GSP) defined in Sect. 4.2.1, we mention that in [103] a first approximation technique based on a barrier method has been proposed, then in [67] a general approximation scheme involving conditions of minimal character has been introduced together with applications to barrier methods, whereas in [72] also external penalty methods have been considered.

4.3.2 Weak Stackelberg (or Pessimistic Bilevel Optimization) Problem

Problem (PB) is the worst-behaved among the problems illustrated in Sect. 4.2. In fact, the compactness of the action sets and the continuity of the payoff functions do not guarantee, in general, the existence of weak Stackelberg solutions and weak Stackelberg equilibria even in a finite dimensional setting, as shown by many well-known examples in the literature (see [15, 80] and [10, Remark 4.3]) and by the following one.

![$$\displaystyle \begin{aligned} M(x)=\begin{cases} \{-1\}, & \mbox{if } x\in[-1,0[ \\ {} [-1,1], & \mbox{if } x=0 \\ \{1\}, & \mbox{if } x\in ]0,1]. \end{cases} \end{aligned} $$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equ1.png)

![$$\displaystyle \begin{aligned} \sup_{y\in M(x)}L(x,y)=\begin{cases} -x-1, & \mbox{if } x\in[-1,0[ \\ -x+1, & \mbox{if } x\in [0,1], \end{cases} \end{aligned}$$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equaw.png)

Hence such a (PB) has no solution and no weak Stackelberg equilibrium.

Nevertheless, existence results for very special classes of problem (PB) can be found in [2, 3, 80].
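The lack of solutions in the example above can be read directly off the displayed expression of $\sup_{y\in M(x)}L(x,y)$. The following short computation assumes X = [−1, 1], as the domains in the two displays suggest:

```latex
\begin{aligned}
\inf_{x\in[-1,0[}\,(-x-1) &= -1
  &&\text{(approached as } x\to 0^{-}\text{, never attained, since } -x-1>-1 \text{ for } x<0\text{)},\\
\min_{x\in[0,1]}\,(-x+1) &= 0
  &&\text{(attained at } x=1\text{)},\\
w=\inf_{x\in X}\ \sup_{y\in M(x)}L(x,y) &= -1,
  &&\operatorname*{Arg\,min}_{x\in X}\ \sup_{y\in M(x)}L(x,y)=\emptyset .
\end{aligned}
```

The pessimistic value is therefore finite, but no leader action achieves it: the jump of M at x = 0 pushes the worst-case payoff from values close to −1 up to 1.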

In a more general framework, to overcome the lack of solutions, for any 𝜖 > 0 the concept of 𝜖-approximate solution of (PB) has been investigated in a sequential setting by Loridan and Morgan in [71], where the existence of such solutions is analyzed under mild convexity assumptions on the follower’s payoff function, and in [74] where quasi-convexity assumptions are considered.

Then, in [76] the notion of strict 𝜖-approximate solution has been introduced and related existence results have been provided without requiring convexity assumptions. A crucial property of these two concepts is the convergence of the approximate pessimistic values towards the pessimistic value of (PB) as 𝜖 tends to zero. We point out that all the results in the papers just mentioned have been obtained in the case of non-parametric constraints.

Afterwards, Lignola and Morgan in [51] extensively investigated all the possible kinds of constraints (including those defined by parametric inequalities) in a topological setting. More recently, new notions of approximate solutions have been introduced in [63, 64] in an easier-to-manage sequential setting, which allow one to construct a surrogate for the solution of (PB) called viscosity solution. Such concepts will be presented in Sect. 4.4 together with their properties.

Consider X = Y = [−1, 1], L(x, y) = x + y, F(x, y) = 0 and let $L_n(x,y)=x+y+1/n$ and $F_n(x,y)=y/n$ for any $n\in\mathbb{N}$.

Although the sequence of functions $(L_n)_n$ continuously converges to L and the sequence $(F_n)_n$ continuously converges to F, the sequence of pessimistic values of (PB)$_n$ does not converge to the pessimistic value of (PB).
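The gap between the perturbed and the limit pessimistic values in this example can be observed with a small grid computation. The sketch below is illustrative only (the helper `pessimistic_value` and the grid are choices made here, not part of the cited results): for every n ≥ 1 the follower’s unique best reply under $F_n$ is y = −1, whereas under F every y is optimal.

```python
from fractions import Fraction as Fr

GRID = [Fr(i, 10) for i in range(-10, 11)]  # grid on X = Y = [-1, 1]


def pessimistic_value(leader_payoff, follower_payoff):
    # inf over x of the sup of the leader's payoff over the follower's best replies.
    worst_cases = []
    for x in GRID:
        best = min(follower_payoff(x, y) for y in GRID)
        replies = [y for y in GRID if follower_payoff(x, y) == best]
        worst_cases.append(max(leader_payoff(x, y) for y in replies))
    return min(worst_cases)


def perturbed_value(n):
    # Pessimistic value of (PB)_n with L_n = x + y + 1/n and F_n = y/n.
    return pessimistic_value(
        lambda x, y: x + y + Fr(1, n),
        lambda x, y: y / n,
    )


# Pessimistic value of the limit problem (PB) with L = x + y and F = 0.
limit_value = pessimistic_value(lambda x, y: x + y, lambda x, y: Fr(0))
```

Here `perturbed_value(n)` equals −2 + 1/n, which tends to −2, while `limit_value` is 0.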

However, convergence results of the exact solutions to (PB)n towards a solution to (PB) have been obtained in [69] for a special class of problems.

In a more general framework, a first attempt to manage the stability issue of (PB) (intended in the Hausdorff sense) can be found in [89] by means of approximate solutions. Afterwards, “variational” stability (in the sense of the above example) for such approximate solutions has been investigated under conditions of minimal character and assuming non-parametric constraints in [71] (under mild convexity assumptions on the follower’s payoff function) and in [74] (under quasi-convexity assumptions). For applications to interior penalty methods see [70, Section 5] and to exterior penalty methods see [74, Section 5].

Then, a comprehensive analysis on all the types of follower’s constraints has been provided in [51] for a general topological framework. Such results will be presented in Sect. 4.4.

Furthermore, (PB) is hard to manage even regarding well-posedness properties, investigated in [91].

Concerning the numerical approximation issue of (PB), various regularization methods involving both the follower’s optimal reaction set and the follower’s payoff function have been proposed in order to approach problem (PB) via a sequence of Stackelberg problems; for first results, see [29, 75, 77, 78, 88]. These methods, their properties and the results achieved will be presented in Sect. 4.5.

4.3.3 Strong Stackelberg (or Optimistic Bilevel Optimization) Problem

Problem (OB) is guaranteed to have strong Stackelberg solutions under the same assumptions stated for the existence of solutions of (SP), i.e. by assuming that the action sets X and Y are compact subsets of two Euclidean spaces and that the payoff functions L and F are continuous over X × Y.

The continuity assumptions can be weakened as in Remark 4.3.1, by applying Proposition 4.1.1 in [49] about the lower semicontinuity of the marginal function. △

We recall that results concerning the existence of strong Stackelberg solutions were first obtained in [53].

We point out that problem (OB), as well as (PB), can exhibit under perturbation a lack of convergence of the strong Stackelberg solutions and of the optimistic values, as illustrated in Example 4.1 in [52], rewritten below for the sake of completeness.

Consider X = Y = [0, 1], L(x, y) = x − y + 1, F(x, y) = x and let $L_n(x,y)=L(x,y)$ and $F_n(x,y)=y/n+x$ for any $n\in\mathbb{N}$.

Though the sequences of functions $(L_n)_n$ and $(F_n)_n$ continuously converge to L and F, respectively, the sequence of optimistic values of (OB)$_n$ does not converge to the optimistic value of (OB).
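The two optimistic values in this example can be computed by hand. A worked version, using $M_n$ and $s_n$ as shorthand (introduced here) for the best reply correspondence and the optimistic value of (OB)$_n$, with n ≥ 1:

```latex
\begin{aligned}
&\text{(OB):}   && M(x)=[0,1] \ \text{ since } F(x,\cdot) \text{ is constant},
   && \inf_{y\in M(x)}(x-y+1)=x,
   && s=\inf_{x\in[0,1]}x=0;\\
&\text{(OB)}_n\text{:} && M_n(x)=\{0\} \ \text{ since } y\mapsto y/n+x \text{ is increasing},
   && \inf_{y\in M_n(x)}(x-y+1)=x+1,
   && s_n=\inf_{x\in[0,1]}(x+1)=1.
\end{aligned}
```

Hence $s_n=1$ for every n, whereas $s=0$: the arbitrarily small perturbation y/n removes all the follower’s replies that were favourable to the leader.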

However, notions of approximate solutions have been introduced and investigated in [52] in order to face the lack of stability in (OB), whereas achievements on well-posedness can be derived by applying results obtained in [54, 55].

Obviously, as well as in (PB), the non-single-valuedness of the follower’s best reply correspondence M gives rise to difficulties from the numerical point of view in (OB).

4.3.4 Intermediate Stackelberg Problem

Sufficient conditions for the existence of intermediate Stackelberg solutions of (IS$_D$) are investigated in [83, Section 3] in the general case where M(x) is not a discrete set for at least one x ∈ X. However, we point out that problem (IS$_D$) inherits all the difficulties illustrated for (PB) about existence, stability, well-posedness and approximation issues.

4.3.5 Subgame Perfect Nash Equilibrium Problem

When the follower’s best reply correspondence M is not a single-valued map, infinitely many SPNEs could come up in Stackelberg games, as shown in the following example.

![$$\displaystyle \begin{aligned} \varphi^\sigma(x)=\begin{cases} -1, & \mbox{if } x\in[-1,0[ \\ \sigma, & \mbox{if } x=0 \\ 1, & \mbox{if } x\in ]0,1], \end{cases} \end{aligned}$$](../images/480569_1_En_4_Chapter/480569_1_En_4_Chapter_TeX_Equbd.png)

Therefore, the strategy profile $(-1,\varphi^{\sigma})$ is an SPNE of Γ for any σ ∈ [−1, 1], so Γ has infinitely many SPNEs.

Hence, restricting the number of SPNEs becomes essential and the selection issue arises. For further discussion on the theory of equilibrium selection in games, see [40].

We showed via the second example on page 13 and the example on page 14 that, starting from a solution to the associated (PB) or to the associated (OB), one can induce an SPNE motivated by each of the two different behaviours of the leader. Finding such SPNEs induced by weak or strong Stackelberg solutions, as defined in Definition 4.2.6, provides two methods to select an SPNE in Stackelberg games. Nevertheless, such methods can be exploited only in the two extreme situations illustrated before. Moreover, they require the leader to know the follower’s best reply correspondence and, to the best of our knowledge, they do not provide a manageable constructive approach to achieve an SPNE. △

Furthermore, the numerical approximation issue also matters for the SPNEs in Stackelberg games, since M may fail to be single-valued. Therefore, it would be desirable to design constructive methods for selecting an SPNE with the following features: relieving the leader of knowing M, overcoming the difficulties deriving from the possible non-single-valuedness of M, and providing some behavioural motivations of the players (different from the extreme situations described above). Results in this direction have been first obtained in [93], where an SPNE selection method based on Tikhonov regularization is proposed, and more recently in [22], where proximal regularization is employed.
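To give a feeling for how a Tikhonov-type term restores uniqueness of the follower’s reply, the sketch below adds a quadratic term $y^2/(2n)$ to the follower’s payoff of the game of Sect. 4.2.3 (X = [−2, 2], Y = [−1, 1], piecewise F). This is a generic illustration only: the regularizing term, its weight 1/(2n) and the helper names are assumptions made here, and the scheme is not claimed to coincide with the selection method of [93].

```python
from fractions import Fraction as Fr

T = Fr(7, 4)
X_GRID = [Fr(i, 8) for i in range(-16, 17)]  # grid on [-2, 2]
Y_GRID = [Fr(j, 8) for j in range(-8, 9)]    # grid on [-1, 1]


def F(x, y):
    # Follower's payoff of the example in Sect. 4.2.3.
    if x < -T:
        return (x + T) * y
    if x < T:
        return Fr(0)
    return (x - T) * y


def F_reg(x, y, n):
    # Assumed Tikhonov-type regularization: strictly convex in y for every n.
    return F(x, y) + y * y / (2 * n)


def reply_set(x, n):
    # Best replies of the regularized follower, restricted to the grid.
    best = min(F_reg(x, y, n) for y in Y_GRID)
    return [y for y in Y_GRID if F_reg(x, y, n) == best]


# Every regularized reply set on the grid is a singleton.
all_unique = all(
    len(reply_set(x, n)) == 1 for x in X_GRID for n in (1, 4, 16)
)
```

Where the original reply set is the whole interval [−1, 1], the regularized follower picks y = 0, its element of minimum norm; where the original reply is already unique (y = 1 or y = −1), the regularized reply reaches it once n is large enough.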

In the rest of the chapter we will again present results under easy-to-manage continuity or convergence assumptions, but we point out that the papers quoted in the statements involve conditions of minimal character, as in Remarks 4.3.1 and 4.3.3.

4.4 Regularizing the Follower’s Optimal Reaction Set in Stackelberg Games

The first attempts at approximating a Stackelberg game Γ = (X, Y, L, F) by a general sequence of perturbed or regularized games have been carried out by Loridan and Morgan. They started in [67, 68, 73] with the case where the second-stage problem $(P_x)$ has a unique solution for any x and there is no difference between the optimistic and the pessimistic bilevel optimization problem; then, they extensively investigated in [69–71, 74–77] pessimistic bilevel problems in which the follower’s constraints do not depend on the leader’s actions.

for i = 1, …, k, and we denote by:

- […] the set of solutions to the optimistic bilevel problem (OB), i.e. the set of strong Stackelberg solutions of (OB);
- […] the set of solutions to the pessimistic bilevel problem (PB), i.e. the set of weak Stackelberg solutions of (PB).

4.4.1 Regularization of Pessimistic Bilevel Optimization Problems

Let X and Y be nonempty subsets of two Euclidean spaces.

A set-valued map $T\colon X\rightrightarrows Y$ is lower semicontinuous over X if for any x ∈ X, for any sequence $(x_n)_n$ converging to x in X and any y ∈ T(x) there exists a sequence $(y_n)_n$ converging to y in Y such that $y_n\in T(x_n)$ for n sufficiently large, that is, $T(x)\subseteq\operatorname*{Lim\,inf}_n T(x_n)$. The map T is closed over X if for any x ∈ X, for any sequence $(x_n)_n$ converging to x in X and any sequence $(y_n)_n$ converging to y in Y such that $y_n\in T(x_n)$ for any n, one has y ∈ T(x), equivalent to $\operatorname*{Lim\,sup}_n T(x_n)\subseteq T(x)$. Here $\operatorname*{Lim\,inf}$ and $\operatorname*{Lim\,sup}$ denote, respectively, the Painlevé-Kuratowski lower and upper limit of a sequence of sets. △

Then, let us recall the well-known maximum theorem [6, 16].

the function L is lower semicontinuous over X × Y ;

the set-valued map

is lower semicontinuous over X;

is lower semicontinuous over X.△

4.4.1.1 Approximate and Strict Approximate Solutions

An attempt to present a complete discussion, which aimed to unify all previous results in a general scheme, was given in [51] in the setting of topological vector spaces and by analyzing the second-stage problem in the presence and in the absence of general or inequality constraints. Unfortunately, using Γ-limits and working in a topological setting have restricted the readability and the applicability of that paper, so, here we recall the main idea and the principal results in a simplified form but under more restrictive assumptions.

the map

is lower semicontinuous even if the maps M and M^ε are not.

and

for every x ∈ ]0, 1];

;

;

,

solve the equation

is lower semicontinuous on the whole of X.

In order to prove the lower semicontinuity of $\widetilde{M}^{\varepsilon}$, a crucial role is played both by the lower semicontinuity and closedness of the constraints map K and by the continuity of the follower’s payoff function F, as shown by the next lemmas, which concern, respectively, the continuity and convexity properties of inequality constraints maps and the lower semicontinuity of $\widetilde{M}^{\varepsilon}$ and $M^{\varepsilon}$. The first lemma can be found in [13, Theorems 3.1.1 and 3.1.6].

- (C1)

for every i = 1, …, k, the function g_i is lower semicontinuous over X × Y;

then, the set-valued map K is closed on X.

If the following assumptions are satisfied:

- (C2)

for every i = 1, …, k and y ∈ Y, the function g_i(⋅, y) is upper semicontinuous over X;

- (C3)

for every i = 1, …, k and x ∈ X, the function g_i(x, ⋅) is strictly quasiconvex (see [13]) and upper semicontinuous on Y, which is assumed to be convex;

- (C4)

for every x ∈ X there exists y ∈ Y such that

;

then, the set-valued map K is lower semicontinuous and convex-valued over X. △

Under the assumptions (C1)–(C4), if the set X is closed, the set Y is convex and compact and the function F is continuous over X × Y, then the map

is lower semicontinuous over X. △

In order to get the lower semicontinuity of M^ε, a crucial role is also played by suitable convexity properties of the data which guarantee that  for any x ∈ X.

- (H1)

the function F(x, ⋅) is strictly quasiconvex on K(x), for every x ∈ X;

then, the map M^ε is lower semicontinuous over X. △

and

, defined by

Assume that the set X is compact and the function L is lower semicontinuous over X × Y .

If the assumptions of Lemma 4.4.5 hold, then the approximate solutions set

is nonempty for any ε > 0.

If the assumptions of Lemma 4.4.6 hold, then the approximate solutions set

is nonempty for any ε > 0. △

- do the approximate security values w^ε and

, defined respectively by

converge towards the security value w of the original problem when ε tends to zero?

are such approximate solutions stable with respect to perturbations of the data, for any fixed ε > 0?

. Let, for any n,

is the ε-argmin map of F_n with constraints K_n. More specifically, given k sequences of real-valued functions (g_{i,n})_n defined on X × Y, we set

the map

the set

of approximate solutions for (PB)_n converges to

and one has

△

under the assumptions of Proposition 4.4.8, the map M ε may not be lower semicontinuous over X, so this property is not necessary for the approximate security values convergence;

no convexity assumption is made on g i, F and L.

:

- (C3,n)

for every i = 1, …, k and x ∈ X, the function g_{i,n}(x, ⋅) is strictly quasiconvex on Y;

- (C4,n)

for every x ∈ X there exists y ∈ Y such that

;

- (H2,n)

for any (x, y) ∈ X × Y and any sequence (x_n, y_n)_n converging to (x, y) in X × Y one has

the ε-solutions are stable, i.e. the inclusion in (4.4.3) holds,

the ε-values are stable, i.e.

. △

We stress that, in general, one cannot prove a result analogous to (4.4.3) for the strict approximate solutions as well, owing to their open nature. A natural extension of the previous investigations was the search for possible candidates to replace the missing weak Stackelberg solutions to (PB), which will be presented in the next subsection.

We mention that the approximate solutions presented above were subsequently employed in [1, 4] (on the strong-weak Stackelberg problem, which generalizes problems (PB) and (OB)) and in [45].

4.4.1.2 Inner Regularizations and Viscosity Solutions

defining regularizing problems which have solutions under not too restrictive conditions;

utilizing sequences of solutions to the regularizing problems admitting convergent subsequences;

considering a limit point of one of such subsequences as a surrogate solution to (PB) provided that the supremum values of the objective functions calculated in such approximating solutions converge to the security value w.

To accomplish the first step, we identify the set-valued map properties that are helpful in this regularization process.

, where

if the conditions below are satisfied:

- (R1)

for every x ∈ X and 0 < ε < η;

- (R2)

for any x ∈ X, any sequence (x_n)_n converging to x in X and any sequence of positive numbers (ε_n)_n decreasing to zero, one has

- (R3)

is a lower semicontinuous set-valued map on X, for every ε > 0. △

The above definition is related to the pessimistic nature of the problem (PB) and we are aware that different problems at the upper level would require different definitions of inner regularizations for the family  .

The strict and large ε-argmin maps, $\widetilde{M}^{\varepsilon}$ and $M^{\varepsilon}$, constitute inner regularization classes under appropriate conditions.

The family

is an inner regularization whenever the assumptions of Lemma 4.4.5 are satisfied.

The family

is an inner regularization whenever the assumptions of Lemma 4.4.6 are satisfied. △

and

could be the maps:

- the family

is an inner regularization whenever assumptions (C1)–(C3) and the following hold:

- (C5)

for every ε > 0 and x ∈ X there exists y ∈ Y such that

;

- the family

is an inner regularization whenever the assumptions (C1)–(C3), (C5) and (H1) are satisfied. △

The next steps consist in introducing the concept of viscosity solution for pessimistic bilevel optimization problems related to an inner regularization class and in stating a related existence result.

be an inner regularization for the family

. A point

is a

-viscosity solution for (PB) if for every sequence (ε_n)_n decreasing to zero there exists

,

for any

, such that:

- (V1)

a subsequence of

converges to

;

- (V2)

- (V3)

△

If

is an inner regularization for the family

, the sets X and Y are compact and the function L is continuous over X × Y, then there exists a

-viscosity solution for the pessimistic problem (PB). △

Suitable existence results of viscosity solutions with respect to each of the families considered above can be derived from Theorem 4.4.15 and Propositions 4.4.11, 4.4.12, and 4.4.13.

The next example illustrates the above procedure in a simple case.

the argmin map M of the minima to (P_x) is not lower semicontinuous at x = 0, since M(0) = Y and

for every x ∈ ]0, 1];

the marginal function e(⋅) is not lower semicontinuous at x = 0, since e(0) = 1 and e(x) = x − 1 for every x ∈ ]0, 1];

the problem (PB) does not have a solution since $\inf_{x\in[0,1]} e(x) = -1$ but e(x) > −1 for every x ∈ [0, 1];

the map

is lower semicontinuous over X since $\widetilde{M}^{\varepsilon}_d(x) = [-1,1]$ if x ∈ [0, ε∕2[ and

if x ∈ [ε∕2, 1];

the minimum point of the marginal function

is

and

the family

is an inner regularization even if the function g_1(x, ⋅) is not strictly quasiconvex (see Remark 4.4.4);

the data satisfy the conditions in Theorem 4.4.15;

the

-viscosity solution for (PB) is x = 0.
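The non-existence claim in this example can be checked numerically. The following is only a minimal sketch that uses the marginal function values stated in the example (e(0) = 1 and e(x) = x − 1 on ]0, 1]); the helper names `e` and `best_on_grid` are hypothetical and introduced purely for illustration.

```python
def e(x):
    """Marginal function of the example: e(0) = 1 and e(x) = x - 1 on ]0, 1]."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")
    return 1.0 if x == 0.0 else x - 1.0


def best_on_grid(num_points):
    """Return (x, e(x)) minimizing e over a uniform grid of [0, 1]."""
    grid = [i / (num_points - 1) for i in range(num_points)]
    x_best = min(grid, key=e)
    return x_best, e(x_best)


if __name__ == "__main__":
    # Refining the grid pushes the best value towards -1, which is never attained:
    # the best grid point is always the smallest positive one, never x = 0.
    for m in (11, 101, 1001, 10001):
        x_best, value = best_on_grid(m)
        print(f"grid size {m:>6}: x = {x_best:.4f}, e(x) = {value:.4f}")
```

On every grid the best point is the smallest positive node, so the computed values decrease towards −1 while the jump of e at x = 0 prevents the infimum from being attained.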

4.4.2 Regularization of Optimistic Bilevel Optimization Problems

As we observed in Sect. 4.3.3, a strong Stackelberg equilibrium exists under not too restrictive conditions. However, when such a problem has to be approached by a sequence of perturbed problems (e.g. in the discretization of (OB) in an infinite dimensional setting), the perturbed solutions may not converge towards a solution of the original problem; that is, such problems lack stability in the sense specified in Sect. 4.4.1.1.

and, for any n, K_n is defined as in (4.4.2) and M_n is the argmin map of F_n with constraints K_n. Then, denoting by

the set of solutions to (OB)_n, for any

, one asks if one has

(F_n)_n and (L_n)_n uniformly converge, and therefore also continuously converge, to the functions defined by F(x, y) = 0 and L(x, y) = −xy respectively;

M(x) = [0, 1] and, for any

,

for every x ∈ [0, 1];

and, for any

, $\mathcal{S}_n = [0,1]$.

Nevertheless, also in the optimistic case, regularizing the follower’s reaction set turns out to be useful to obtain satisfactory approximation results for the strong Stackelberg values and solutions.

△

:

- (H3,n)

for any (x, y) ∈ X × Y and any sequence (x_n, y_n)_n converging to (x, y) in X × Y one has

△

(F_n)_n and (L_n)_n continuously converge on X × Y to the functions defined by F(x, y) = 0 and L(x, y) = x(x − y) respectively;

M(x) = M^ε(x) = [0, 1] for every x ∈ X;

for any

, $M^{\varepsilon}_n(x) = [0, n\varepsilon]$ if nε ≤ 1 and $M^{\varepsilon}_n(x) = [0,1]$ if nε > 1;

and, for any

,

if nε ≤ 1 and

if nε > 1.

if the sequence (ε_n)_n is infinitesimal of the first order.

It would be interesting to define suitable viscosity solutions for optimistic bilevel optimization problems as well, with the aim of obtaining an inclusion analogous to (4.4.4).

This topic is still unexplored but, given an optimistic bilevel problem approached by a sequence of approximating optimistic bilevel problems (OB)_n, it seems reasonable to investigate the limit points of sequences of solutions to (OB)_n that are not too far from the solution set of (OB).

We mention that algorithms to approach approximate solutions to (OB) have been developed in [66], where the reformulation of (OB) via the optimal value function is exploited, and in [44], where a reformulation of (OB) as a generalized Nash equilibrium problem is employed.

4.4.3 Regularization of Bilevel Problems with Equilibrium Constraints

Stackelberg games are models that can be naturally extended to the case where there are one leader and one or more followers who solve a second-stage problem described by a parametric variational inequality, a Nash equilibrium problem, a quasi-variational inequality or, more generally, a quasi-equilibrium problem. The critical issues of the pessimistic and optimistic approaches are still present, and regularizing the problem solved by the follower(s) is useful in this case too. First we present the case where a variational inequality is solved in the second stage. The set of solutions of such a parametric variational inequality can be considered as the follower’s constraints in the first stage, so traditionally the associated bilevel problem is called a bilevel problem with variational inequality constraints, and analogously for the other problems considered in the second stage.

4.4.3.1 Variational Inequality Constraints

, where any problem (V_x) consists in finding, see [12],

is the set of solutions to the variational inequality (V_x).

follows from using the Minty type variational inequality, which consists in finding

The above regularizations have been employed for investigating, in a theoretical setting, well-posedness in [54] and stability properties in [50] of (OBVI) in Banach spaces; moreover, we mention that an exact penalization scheme has been proposed in [112] for deriving necessary optimality conditions of (OBVI). Convergence properties of the approximate weak Stackelberg values of (PBVI) can be found in [57] in finite dimensional spaces. They have also been considered in applied frameworks, such as truss topology optimization in [36] and climate regulation policy in [34].

4.4.3.2 Nash Equilibrium Constraints

and l real-valued functions F_1, …, F_l defined on X × Y. Then, for any x ∈ X, the Nash equilibrium problem

consists in finding

is the set of solutions to the Nash equilibrium problem

.

4.4.3.3 Quasi-Variational Inequality Constraints

, where any (Q_x) consists in finding, see [12],

is the set of solutions to the quasi-variational inequality (Q_x).

Requiring that an approximate solution to (Q x) may violate the constraints, provided that it remains in a neighborhood of them, is quite necessary in quasi-variational inequality setting where a fixed-point problem is involved, as shown in [48, Ex. 2.1]. The above regularizations have been used in [59] for establishing convergence results of the weak Stackelberg values of (PBQI) in finite dimensional spaces, and in [61] for a complete investigation in infinite dimensional spaces of convergence properties of approximate solutions and values of (OBQI) problems, there called semi-quasivariational optimistic bilevel problems.

4.4.3.4 Quasi-Equilibrium Constraints

(also called quasi-variational problem in [58]) consists in finding y ∈ Y such that

was introduced and it was proved that the map

defined by

may fail to be an inner regularization under the same hypotheses. The results were established in infinite dimensional Banach spaces, so it was necessary to balance the use of weak and strong convergence in the assumptions; in finite dimensional spaces, however, the statements can be simplified and made more readable.

4.5 Regularizing the Follower’s Payoff Function in Stackelberg Games

The general non-single-valuedness of the follower’s best reply correspondence M brings out the difficulties in the numerical approximation of problems (PB) and (OB), as mentioned in Sect. 4.3.3, as well as the need to define constructive methods for selecting an SPNE in Stackelberg games, as emphasized in Sect. 4.3.5. For these reasons, and possibly also for behavioural motivations (see, for example, [22]), regularization methods involving the follower’s payoff function have been introduced. Such methods allow one to construct sequences of perturbed problems in which the solution of the second-stage problem is unique, by exploiting well-known regularization techniques from convex optimization. For the sake of brevity, we will present only two approaches for regularizing the follower’s payoff function: the first is based on Tikhonov regularization, the second on proximal regularization.

In this section we assume that the leader’s and follower’s action sets X and Y are subsets of the Euclidean spaces  and  , respectively, and we denote by  and  the norm of  and  , respectively. Furthermore, for the sake of brevity, both leader’s and follower’s constraints are assumed to be constant.

4.5.1 Regularizing the Follower’s Payoff Function via Tikhonov Method

Let us recall preliminarily the Tikhonov regularization method for the approximation of solutions to convex optimization problems. Then, we illustrate how it has been employed to regularize bilevel optimization problems (PB) or (OB) and to select SPNEs.

4.5.1.1 Tikhonov Regularization in Convex Optimization

with norm  . In [107] Tikhonov introduced, in an optimal control framework, the following perturbed minimization problems

and λ_n > 0, and he proved the connections between the solutions to problem

and the solutions to problem (P), which are recalled in the next well-known result.

Assume that the set A is compact and convex, the function J is lower semicontinuous and convex over A and lim_{n→+∞} λ_n = +∞.

has a unique solution

, for any

, and the sequence

is convergent to the minimum norm element of the set of the minimizers of J over A, so

where

. △

Therefore, the Tikhonov regularization method allows one to approach a solution of a convex minimization problem by constructing a sequence of perturbed problems, in general better-behaved than the original problem, each of which has a unique solution. Furthermore, the limit of the sequence generated in this way is uniquely characterized within the set of solutions to the original problem.
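The selection effect of Tikhonov regularization can be illustrated on a toy problem. The sketch below assumes that the perturbed objective has the customary form J(a) + ‖a‖²/(2λ_n), which is an assumption made here for illustration; the test function J(a) = (a₁ + a₂ − 1)² on A = [0, 1]² and the helper name `tikhonov_minimizer` are likewise hypothetical. The minimizers of J over A form the segment a₁ + a₂ = 1, whose minimum norm element is (1/2, 1/2), and a short computation gives the regularized minimizer a₁ = a₂ = 2λ/(4λ + 1).

```python
def tikhonov_minimizer(lam, iterations=None):
    """Minimize (a1 + a2 - 1)**2 + (a1**2 + a2**2) / (2 * lam) over [0, 1]**2.

    Projected gradient descent with step 1 / L, where L = 4 + 1 / lam is the
    largest eigenvalue of the (constant) Hessian of the regularized objective.
    """
    if iterations is None:
        # The contraction factor is about 1 - 1 / (4 * lam + 1) per step,
        # so this many steps reduce the initial error by roughly exp(-40).
        iterations = int(40 * (4 * lam + 1))
    step = 1.0 / (4.0 + 1.0 / lam)
    a1, a2 = 1.0, 0.0  # a minimizer of J that is not the minimum norm one
    for _ in range(iterations):
        residual = 2.0 * (a1 + a2 - 1.0)
        g1 = residual + a1 / lam
        g2 = residual + a2 / lam
        # Gradient step followed by projection onto the box [0, 1]**2.
        a1 = min(1.0, max(0.0, a1 - step * g1))
        a2 = min(1.0, max(0.0, a2 - step * g2))
    return a1, a2


if __name__ == "__main__":
    for lam in (1.0, 10.0, 100.0, 1000.0):
        a1, a2 = tikhonov_minimizer(lam)
        print(f"lambda = {lam:>7}: a = ({a1:.6f}, {a2:.6f})")
```

Although the iteration starts from the non-minimum-norm minimizer (1, 0), the computed points approach (1/2, 1/2) as λ grows, in line with the convergence to the minimum norm minimizer recalled above.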

We mention that, afterwards, Tikhonov regularization has been broadly exploited for finding Nash equilibria in one-stage games where players move simultaneously: see [7, 47] for zero-sum games (with applications also to differential games) and [93, Section 4], [25, 43] for general N-players games.

4.5.1.2 Tikhonov Regularization of the Follower’s Payoff Function in Stackelberg Games

and λ_n > 0. The next result states preliminary properties of the connections between the perturbed problems

and problem (P_x) as n goes to +∞.

Assume that the set Y is compact and convex, the function F is continuous over X × Y and lim_{n→+∞} λ_n = +∞.

;

. △

We highlight that the sequence of functions generated by Tikhonov-regularizing the follower’s payoff function satisfies assumptions (F1), (F2) and  in [71, Section 4], therefore additional results involving ε-solutions and strict ε-solutions hold by applying Propositions 4.2, 4.4 and 6.1 in [71]. For instance, the inclusion stated in Proposition 4.5.2 above is still valid even when considering ε-argmin maps. △

More specific results concerning problems  and the connections with (P_x) are achieved by adding a convexity assumption, usual in a Tikhonov regularization framework.

Assume that the function F(x, ⋅) is convex over Y for any x ∈ X and that the hypotheses of Proposition 4.5.2 are satisfied.

- problem

has a unique solution

for any

, that is

(4.5.1)

, where

is the minimum norm element of the set M(x), that is

(4.5.2)

and any sequence (x_k)_k converging to x in X, we have

△

Under the assumptions of Proposition 4.5.4 additional results regarding ε-solutions have been proved in [77, Proposition 3.3] for the Tikhonov-regularized problem  . △

We point out that, in general, the sequence $(\bar\varphi^{\mathcal{T}}_n)_n$ is not continuously convergent to $\hat\varphi$ (for the definition of continuous convergence see Proposition 4.3.2), as shown in [77, Remark 3.1] and in the example below.

$(\bar\varphi^{\mathcal{T}}_n)_n$ May Not Be Continuously Convergent

$$\bar\varphi^{\mathcal{T}}_n(x)=\begin{cases} -1, & \text{if } x\in[-1,-1/n[ \\ nx, & \text{if } x\in[-1/n,1/n] \\ 1, & \text{if } x\in ]1/n,1] \end{cases}\quad \text{and}\quad \hat\varphi(x)=\begin{cases} -1, & \text{if } x\in[-1,0[ \\ 0, & \text{if } x=0 \\ 1, & \text{if } x\in ]0,1]. \end{cases}$$

The sequence $(\bar\varphi^{\mathcal{T}}_n)_n$ is not continuously convergent to $\hat\varphi$, as the function $\hat\varphi$ is not continuous at x = 0.
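The failure of continuous convergence in this example can be made concrete with a short computation. The sketch below evaluates the two piecewise functions displayed in the example; the function names `phi_n` and `phi_hat` and the test sequence x_n = 1/(2n) are choices made here for illustration only.

```python
def phi_n(x, n):
    """Regularized reply from the example: -1, n*x or 1 according to the sign and size of x."""
    return max(-1.0, min(1.0, n * x))


def phi_hat(x):
    """Pointwise limit from the example: the sign of x, with value 0 at x = 0."""
    if x > 0:
        return 1.0
    if x < 0:
        return -1.0
    return 0.0


if __name__ == "__main__":
    # Pointwise convergence: for a fixed x, phi_n(x, n) equals phi_hat(x) once n >= 1/|x|.
    for x in (-0.3, 0.0, 0.3):
        print(x, [phi_n(x, n) for n in (1, 2, 5, 10)], "limit:", phi_hat(x))

    # Along x_n = 1/(2n), which tends to 0, the values stay at 1/2 while phi_hat(0) = 0.
    for n in (1, 10, 100, 1000):
        print(n, phi_n(1.0 / (2 * n), n))
```

Continuous convergence would require the values along every sequence x_n → 0 to tend to the limit function's value at 0, which is 0; the sequence x_n = 1/(2n) gives the constant value 1/2 instead.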

is a Stackelberg problem for any

since the solution to the second-stage problem is unique. Two questions arise:

- 1.

Are there solutions to

?

- 2.

What happens when n → +∞?

Assume that the set X is compact, Y is compact and convex, the functions L and F are continuous over X × Y and the function F(x, ⋅) is convex over Y for any x ∈ X.

Then, the Tikhonov-regularized Stackelberg problem

has at least one solution, for any

. △

As regards the connections between  and (PB) as n goes to +∞, the second question is addressed in the following result.

Assume that lim_{n→+∞} λ_n = +∞ and that the hypotheses of Proposition 4.5.6 are satisfied. Denote with

a solution to

for any

.

in X × Y has a limit

which satisfies

where w is the security value of (PB) defined in Definition 4.2.2. △

An action profile  satisfying (4.5.3) is a lower Stackelberg equilibrium pair for problem (PB). △

The lower Stackelberg equilibrium pair solution concept was introduced in [69, Remark 5.3] in the context of approximation of (PB) via ε-solutions. Note that a result analogous to Proposition 4.5.7 has been obtained in [75] for the least-norm regularization of the second-stage problem of (PB). In fact such a regularization, which is an adaptation of the method introduced in [104] and which involves the regularization both of the follower’s optimal reaction set and of the follower’s payoff function, generates sequences of action profiles whose limit points are lower Stackelberg equilibrium pairs, as proved in propositions 2.6 and 3.5 in [75].

We observe that if  is a weak Stackelberg equilibrium of (PB), then  is a lower Stackelberg equilibrium pair. The converse is not true, in general, as illustrated in the following example.

$$L(x,y)=-x-y\quad \text{and}\quad F(x,y)=\begin{cases} 0, & \text{if } x\in[1/2,1[ \\ (x-1)y, & \text{if } x\in [1,2]. \end{cases}$$

It is worth emphasizing that, in general, the sequence  , where  is a solution to  , converges neither to a weak Stackelberg (or pessimistic) solution of (PB) nor to a strong Stackelberg (or optimistic) solution of (OB); analogously, the sequence  converges, in general, neither to a weak Stackelberg equilibrium nor to a strong Stackelberg equilibrium, as illustrated in the next example, also used in [93]. △

May Converge Neither to a Weak Stackelberg Equilibrium Nor to a Strong Stackelberg Equilibrium

$$L(x,y)=-x-y\quad \text{and}\quad F(x,y)=\begin{cases} (x+1/4)y, & \text{if } x\in[-1/2,-1/4[ \\ 0, & \text{if } x\in[-1/4,1/4] \\ (x-1/4)y, & \text{if } x\in ]1/4,1/2]. \end{cases}$$

and

converge to −1∕4 and (−1∕4, 1), respectively. Instead, the strong Stackelberg solution is 1∕4 and the strong Stackelberg equilibrium is (1∕4, 1), whereas the weak Stackelberg solution and the weak Stackelberg equilibrium do not exist.

Afterwards, the Tikhonov regularization approach illustrated up to now has been employed in [27] where, by requiring stronger assumptions on the payoff functions, further regularity properties for the Tikhonov-regularized second-stage problem  have been shown. Moreover, an algorithm has been designed for the solutions of the Tikhonov-regularized Stackelberg problem  as n goes to +∞.
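The limits stated in the example with L(x, y) = −x − y and the three-piece follower payoff can be reproduced numerically. The following is a rough sketch under assumptions that are not confirmed by the surviving text: the action sets are taken to be X = [−1/2, 1/2] and Y = [−1, 1], the regularized follower payoff is taken to be F(x, y) + y²/(2λ_n), and the leader's problem is solved by a plain grid search. All function names are hypothetical.

```python
def coefficient(x):
    """Slope c(x) of the follower payoff F(x, y) = c(x) * y from the example."""
    if x < -0.25:
        return x + 0.25
    if x > 0.25:
        return x - 0.25
    return 0.0


def follower_reply(x, lam):
    """Unique minimizer over Y = [-1, 1] (assumed) of c(x) * y + y**2 / (2 * lam).

    The objective is a convex quadratic in y, so the constrained minimizer is
    the unconstrained one, -lam * c(x), clipped to the interval.
    """
    return max(-1.0, min(1.0, -lam * coefficient(x)))


def leader_payoff(x, y):
    """Leader payoff L(x, y) = -x - y, to be minimized."""
    return -x - y


def regularized_solution(lam, num_points=20001):
    """Grid search over X = [-1/2, 1/2] (assumed) for the regularized Stackelberg problem."""
    grid = [-0.5 + i / (num_points - 1) for i in range(num_points)]
    x_best = min(grid, key=lambda x: leader_payoff(x, follower_reply(x, lam)))
    return x_best, follower_reply(x_best, lam)


if __name__ == "__main__":
    for lam in (10.0, 100.0, 1000.0):
        x_bar, y_bar = regularized_solution(lam)
        print(f"lambda = {lam:>7}: x = {x_bar:.4f}, y = {y_bar:.4f}")
```

Under these assumptions the computed leader actions sit near −1/4 − 1/λ and the follower replies near 1, so the pairs approach (−1/4, 1) rather than the strong Stackelberg equilibrium (1/4, 1), in agreement with the limits stated in the example.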

consists in considering the following perturbed second-stage problems

, provided that the function L(x, ⋅) is strongly convex on Y for any x ∈ X. In [29] such a Tikhonov-like approach has been investigated for problem (OB), in order to circumvent the non-uniqueness of the solutions to the second-stage problem, and an algorithm has been proposed. Further discussions on this kind of regularization can be found in [28, Subsection 7.3.2].

We highlight that the idea of using the leader’s payoff function in the regularization of the second-stage problem goes back to Molodtsov in [88]: he introduced a “mixed” regularization method, combining the regularization of the follower’s reaction set with the regularization of the follower’s payoff function, which allows one to approximate problem (PB) via a sequence of strong Stackelberg problems. Then, more general properties of the Molodtsov regularization have been obtained in [78], where perturbations of the data of problem (PB) have also been considered.

4.5.1.3 Selection of SPNEs via Tikhonov Regularization

A Stackelberg game where the follower’s best reply correspondence M is not single-valued could have infinitely many SPNEs, as illustrated in the example on page 23. Therefore, let us focus on how to restrict the number of SPNEs or, better yet, how to pick just one. The first attempt to select an SPNE via a constructive approach that allows one to overcome the non-single-valuedness of M is due to Morgan and Patrone in [93], where the Tikhonov regularization approach presented in Sect. 4.5.1.2 was exploited.

is defined on X × Y by

, i.e.  is the game obtained from Γ by replacing the follower’s payoff function F with the objective function of the Tikhonov-regularized problem  . Propositions 4.5.4 and 4.5.6 imply the following properties for  .

, the follower’s best reply correspondence in  is single-valued;

the game  has at least one SPNE: the strategy profile  , where  is defined in (4.5.1) and  is a solution to  , is an SPNE of  .

Moreover, the sequence of strategies  is pointwise convergent to the function  defined in (4.5.2). △
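The two properties just stated (single-valued best reply in each perturbed game, pointwise convergence of the follower's strategies) can be illustrated on a small game. The following is a minimal sketch under stated assumptions, not the construction of [93]: the game X = Y = [-1, 1], F(x, y) = -xy is hypothetical, the Tikhonov term is assumed to have the form y²/(2λ), and the function names are illustrative only.

```python
def clip(v, lo=-1.0, hi=1.0):
    """Project v onto the interval [lo, hi]."""
    return max(lo, min(hi, v))


def tikhonov_best_reply(x, lam):
    """Unique minimizer over Y = [-1, 1] of the assumed regularized payoff
    -x*y + y**2 / (2*lam).

    The objective is strictly convex in y with stationary point y = lam*x,
    so the constrained minimizer is the projection of lam*x onto [-1, 1].
    """
    return clip(lam * x)


# In the unregularized hypothetical game the best reply at x = 0 is the whole
# interval [-1, 1]; the regularized best reply is a single point for every x.
for lam in (1.0, 10.0, 1000.0):
    replies = [tikhonov_best_reply(x, lam) for x in (-0.5, -0.01, 0.0, 0.01, 0.5)]
    print(lam, replies)
```

In this sketch, as λ grows the regularized replies approach -1 for x < 0 and 1 for x > 0, while at x = 0 they stay at 0, which is the minimum norm element of the unregularized reply set [-1, 1].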

It is natural to ask whether the limit of the sequence of SPNEs  , associated with the sequence of perturbed Stackelberg games  , is an SPNE of the original game Γ. The answer is negative, in general, as displayed in the example below.

May Not Converge to an SPNE of the Original Game

is  where  and the function  is derived in the example on page 43; moreover

In order to obtain an SPNE of Γ, we need to take into account the limit of the sequence of action profiles  .

Assume that $\lim_{n\to+\infty}\lambda_n=+\infty$ and the hypotheses of Proposition 4.5.6 are satisfied. Denote by  an SPNE of  , as defined in Corollary 4.5.10, for any  .

If the sequence  converges to  in X × Y , then the strategy profile  where  with  defined in (4.5.2), is an SPNE of Γ. △

for any  and  . Therefore, the SPNE selected according to Theorem 4.5.11 is  where the function  is defined on X by

$$\widetilde\varphi^{\mathcal{T}}(x)=\begin{cases} -1, & \mbox{if } x\in [-1,0] \\ 1, & \mbox{if } x\in ]0,1]. \end{cases}$$

for any x ∈ X and  . △

The following example shows that the SPNE selected according to Theorem 4.5.11 does not coincide, in general, with the SPNEs induced by a weak or a strong Stackelberg solution, as defined in Definition 4.2.6.

converges to (7∕4, 0), so the SPNE selected according to Theorem 4.5.11 is the strategy profile  where

$$\widetilde\varphi^{\mathcal{T}}(x)=\begin{cases} 1, & \mbox{if } x\in[-2,-7/4[ \\ 0, & \mbox{if } x\in[-7/4,7/4] \\ -1, & \mbox{if } x\in]7/4,2]. \end{cases}$$

The Tikhonov approach to selecting SPNEs presented in this subsection has also been extended to the class of one-leader two-follower Stackelberg games in [93, Section 4]. In this case the two followers, after having observed the action x chosen by the leader, face a parametric two-player Nash equilibrium problem  (see Sect. 4.4.3.2). By Tikhonov-regularizing the followers’ payoff functions in problem  , a sequence of perturbed one-leader two-follower Stackelberg games has been defined where the solution to the Nash equilibrium problem in the second stage is unique. This allows an SPNE to be selected similarly to Theorem 4.5.11, as proved in Theorems 4.1 and 4.2 in [93].

4.5.2 Regularizing the Follower’s Payoff Function via Proximal Method

Let us first recall the proximal regularization method for approximating the solutions of a convex optimization problem. Then, we show how it can be employed to regularize the bilevel optimization problems (PB) or (OB) and to select SPNEs involving behavioural motivations.

4.5.2.1 Proximal-Point Algorithm in Convex Optimization

with norm  . The algorithm defined below has been introduced by Martinet in [86] for regularizing variational inequalities and by Rockafellar in [99] for finding zeros of maximal monotone operators.

The well-definedness of algorithm (PA) and its convergence properties are recalled in the following well-known result stated in [99].

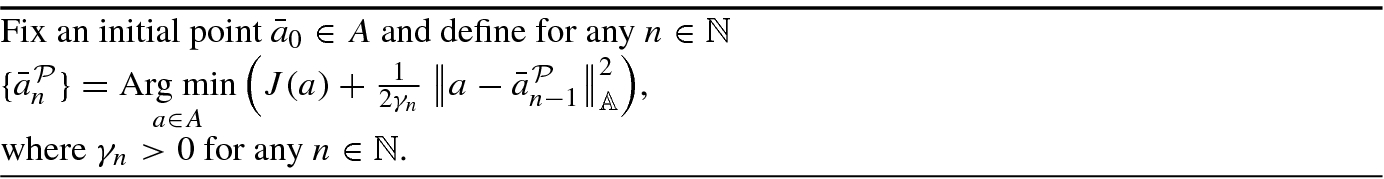

Assume that the set A is compact and convex, the function J is lower semicontinuous and convex over A and  .

is convergent to a solution of problem (P), that is

△

$J_{\gamma}^{\mathcal{P}}\colon A\to[-\infty,+\infty]$ defined on A by

the proximal-regularized problems  are recursively defined, unlike the Tikhonov-regularized problems  in Sect. 4.5.1.1;

the limit of the sequence generated by (PA) is “just” a minimizer of J over A, while in the Tikhonov approach the limit point is characterized as the minimum norm element in the set of minimizers of J over A;

as regards the assumptions ensuring the convergence of algorithm (PA) and of the Tikhonov method, the hypothesis on the sequence of parameters $(\gamma_n)_n$ in Theorem 4.5.13 is weaker than the corresponding hypothesis on $(\lambda_n)_n$ in Theorem 4.5.1.
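The difference between the two limits can be seen numerically. The sketch below is only an illustration under assumptions: the set A = [-1, 2] and the objective J are hypothetical, the proximal step is assumed to minimize J(a) + (a - a_{n-1})²/(2γ_n), the Tikhonov problem is assumed to minimize J(a) + a²/(2λ), and a plain ternary search stands in for a proper convex solver.

```python
def argmin_strictly_convex(f, lo, hi, iters=200):
    """Ternary search for the minimizer of a strictly convex f on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if f(m1) <= f(m2):
            hi = m2
        else:
            lo = m1
    return 0.5 * (lo + hi)


A_LO, A_HI = -1.0, 2.0  # hypothetical feasible set A = [-1, 2]


def J(a):
    """Hypothetical convex objective; its minimizers over A are [0.5, 1.5]."""
    return max(0.0, abs(a - 1.0) - 0.5)


def tikhonov_point(lam):
    """Minimizer over A of the assumed Tikhonov objective J(a) + a**2/(2*lam)."""
    return argmin_strictly_convex(lambda a: J(a) + a * a / (2.0 * lam), A_LO, A_HI)


def proximal_sequence(a0, gammas):
    """Iterates a_n minimizing the assumed J(a) + (a - a_{n-1})**2/(2*gamma_n)."""
    a = a0
    history = [a]
    for g in gammas:
        prev = a
        a = argmin_strictly_convex(
            lambda t: J(t) + (t - prev) ** 2 / (2.0 * g), A_LO, A_HI
        )
        history.append(a)
    return history


print("Tikhonov, lam = 1000:", tikhonov_point(1000.0))
print("proximal from  2.0:", proximal_sequence(2.0, range(1, 21))[-1])
print("proximal from -1.0:", proximal_sequence(-1.0, range(1, 21))[-1])
```

In this toy problem the proximal iterates stop at whichever minimizer they reach first, so the limit depends on the starting point, whereas the Tikhonov point sits at the minimizer closest to the origin.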

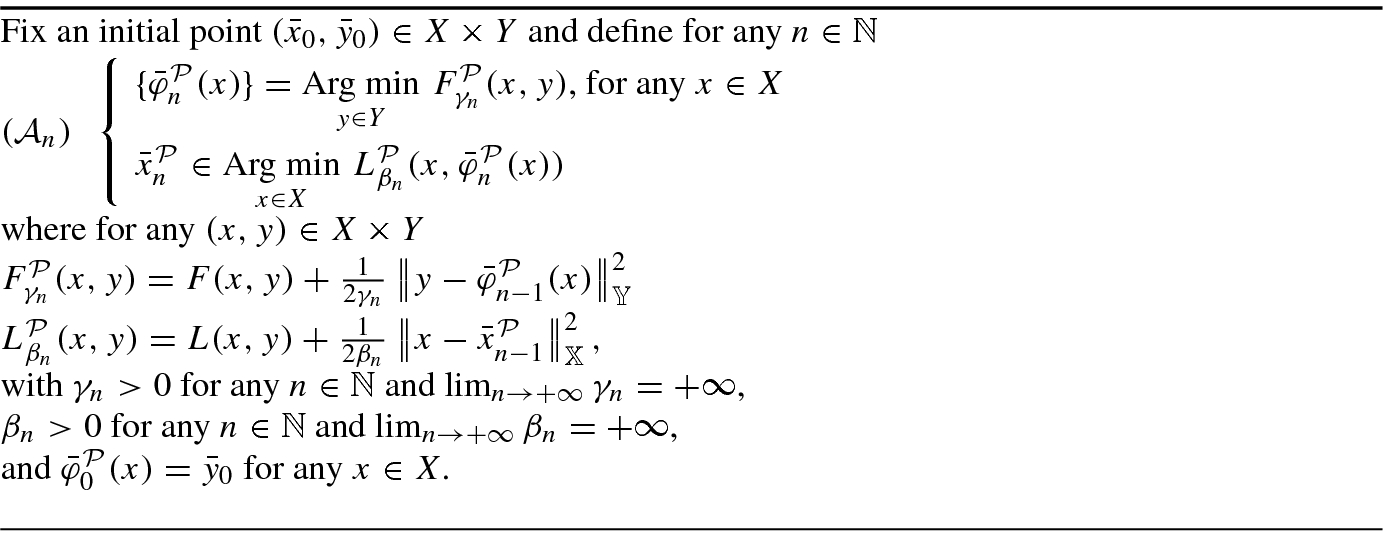

4.5.2.2 Proximal Regularization of the Follower’s Payoff Function in Stackelberg Games

, define for any

and  for any x ∈ X.

Assume that Y is compact and convex, the function F is continuous over X × Y , the function F(x, ⋅) is convex over Y for any x ∈ X and  . From Theorem 4.5.13 it straightforwardly follows that, for any x ∈ X, the sequence  is well-defined and  . △

is unique for any  . Analogously to Sect. 4.5.1.2, we are interested in two questions.

- 1.

Are there solutions to  ?

- 2.

What happens when n → +∞?

defined in (4.5.4) is continuous over X, so the next proposition provides the answer to the first question.

Assume that the set X is compact, Y is compact and convex, the functions L and F are continuous over X × Y and the function F(x, ⋅) is convex over Y for any x ∈ X.

Then, the proximal-regularized Stackelberg problem  has at least one solution, for any  . △

Concerning the second question, the limit of the sequence generated by the solutions to  for any  has no connections, in general, either with weak Stackelberg (or pessimistic) solutions to (PB) or with strong Stackelberg (or optimistic) solutions to (OB), as illustrated in the following example also used in [22].

May Not Converge to Weak or to Strong Stackelberg Solutions

$$L(x,y)=x+y\quad \text{and}\quad F(x,y)=\begin{cases} 0, & \mbox{if } x\in[1/2,1[ \\ (x-1)y, & \mbox{if } x\in [1,2]. \end{cases}$$

and $\gamma_n = n$, we obtain

$$\bar\varphi^{\mathcal{P}}_n(x)=\begin{cases} 1, & \mbox{if } x\in [{1}/{2},1] \\ c_n-c_nx+1, & \mbox{if } x\in ]1,1+{2}/{c_n}] \\ -1, & \mbox{if } x\in ]1+{2}/{c_n},2], \end{cases}$$

is

converges to 1, which is neither a weak nor a strong Stackelberg solution. In fact, a solution to (PB) does not exist, whereas the solution to (OB) is 1∕2. Consequently, even the sequence  does not converge either to a weak or to a strong Stackelberg equilibrium.
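The behaviour in this example can be checked numerically. The sketch below relies on assumptions inferred from the displayed formulas rather than stated in the surviving text: X = [1/2, 2], Y = [-1, 1], the follower's proximal iteration starts from y = 1 for every x, the proximal step minimizes F(x, y) + (y - y_{n-1})²/(2γ_n), and c_n = γ_1 + … + γ_n. The function names are illustrative.

```python
def clip(v, lo=-1.0, hi=1.0):
    """Project v onto Y = [-1, 1] (assumed follower action set)."""
    return max(lo, min(hi, v))


def proximal_reply(x, n, y0=1.0):
    """n-th proximal iterate of the follower for leader action x, gamma_k = k."""
    y = y0
    for k in range(1, n + 1):
        gamma = float(k)
        if x >= 1.0:
            # F(x, y) = (x - 1) * y is linear in y, so the step that minimizes
            # (x - 1) * y + (y - y_prev)**2 / (2 * gamma) over [-1, 1] is a
            # projected shift of the previous iterate.
            y = clip(y - gamma * (x - 1.0))
        # For x < 1, F(x, .) = 0 and the proximal step leaves y unchanged.
    return y


def leader_solution(n, grid_size=15001):
    """Grid approximation of the minimizer of L(x, y_n(x)) = x + y_n(x) on X."""
    xs = [0.5 + 1.5 * i / (grid_size - 1) for i in range(grid_size)]
    return min(xs, key=lambda x: x + proximal_reply(x, n))


for n in (2, 5, 20):
    c_n = n * (n + 1) / 2  # gamma_1 + ... + gamma_n with gamma_k = k
    print(n, leader_solution(n), 1 + 2 / c_n)
```

Under these assumptions the grid minimizer tracks 1 + 2/c_n, which decreases towards 1 as n grows, in line with the limit stated above.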

is not continuously convergent. In fact, coming back to such an example, it is sufficient to note that the pointwise limit of  defined by

$$\lim_{n\to+\infty}\bar\varphi^{\mathcal{P}}_n(x)=\begin{cases} 1, & \mbox{if } x\in[1/2,1] \\ -1, & \mbox{if } x\in ]1,2], \end{cases}$$

and l and f real-valued convex functions defined on  . Such a problem, first studied in [106] and labelled as “simple” in [30], involves a non-parametric second-stage problem, unlike the general definitions of (PB) and (OB), and its solutions clearly coincide with the weak and strong Stackelberg solutions. Consequently, problem (SBP) does not entail the same kind of inherent difficulties that the bilevel optimization problems (PB) and (OB) exhibit regarding the regularization issue, as illustrated in this subsection and in Sect. 4.5.1.2.

4.5.2.3 Selection of SPNEs via Proximal Regularization