4.3. The Modality Effect

What I used to think

I did not use to think a great deal about the difference between presenting an image or animation alongside text and presenting it alongside an oral description. Indeed, if forced to choose between the two, I would always opt for the image alongside text, as I perceived text to be more likely to stick in students’ minds than narration.

Sources of inspiration

- Baddeley, A. (2012) ‘Working memory: theories, models, and controversies’, Annual Review of Psychology 63, pp. 1-29.

- Brzezinski, T. (no date) Polygons: Exterior Angles – REVAMPED. Available at: https://www.geogebra.org/m/mKzJCf5p

- Mayer, R. E. (2008) ‘Applying the science of learning: evidence-based principles for the design of multimedia instruction’, American Psychologist 63 (8), pp. 760-769.

- Mayer, R. E. and Anderson, R. B. (1992) ‘The instructive animation: helping students build connections between words and pictures in multimedia learning’, Journal of Educational Psychology 84 (4), pp. 444-452.

- Mousavi, S. Y., Low, R. and Sweller, J. (1995) ‘Reducing cognitive load by mixing auditory and visual presentation modes’, Journal of Educational Psychology 87 (2), pp. 319-334.

- Yorgey, B. (2017) ‘Post without words 18’, The Math Less Traveled blog. Available at: https://mathlesstraveled.com/2017/05/31/post-without-words-18/

My takeaway

The presentation of information in different modalities – referred to as the Modality Effect – is a feature of both Cognitive Load Theory (Mousavi et al., 1995) and the Cognitive Theory of Multimedia Learning (Mayer and Anderson, 1992).

To appreciate the importance of the Modality Effect we need to develop the basic model of working memory introduced in Section 1.1. Baddeley and Hitch (e.g. Baddeley, 2012) propose a multicomponent model of working memory, where working memory is not viewed as a single workhouse, but rather one divided up into separate components, each with its own specific role. The two most significant components for our discussion here are:

- The Phonological Loop – deals with speech and sometimes other kinds of auditory information

- The Visuospatial Sketchpad – holds visual information and the spatial relationships between objects

The key point here is that working memory gets overloaded if too much information flows into one of these components, but we can use the different components to aid processing. Hence, the capacity of working memory may be determined by the modality (auditory or visual) of presentation, and we can make more efficient use of the limited capacity of working memory by presenting information in a mixed mode (auditory and visual) rather than in a single mode.

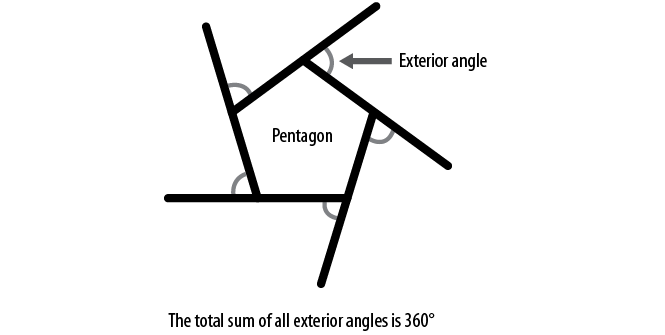

Consider two slides to convey the message that exterior angles in a polygon add up to 360°:

Slide 1:

Figure 4.2 – Source: Craig Barton

Slide 2:

Figure 4.3 – Source: Tim Brzezinski, created using GeoGebra, available at https://www.geogebra.org/m/mKzJCf5p

Slide 1 looks friendly enough, especially to experts such as ourselves. It is an image surrounded by a small amount of text. But we must consider how working memory processes this. The image is held inside the Visuospatial Sketchpad. The text, however, is also initially held by the Visuospatial Sketchpad before effectively being read aloud inside students’ minds and thus held inside the Phonological Loop. It is this initial processing of the text as an image that could lead to cognitive overload for our students as the Visuospatial Sketchpad reaches capacity, especially as students also try to process the meaning of the slide.

Now, Slide 2 is in fact a dynamic animation built in GeoGebra by Tim Brzezinski. It can be presented on the board without text, together with an oral narration from me along the lines of ‘Consider each of the exterior angles. We can see that together they form a full turn…’. In terms of working memory, we have freed up the Visuospatial Sketchpad to focus solely on the image, while making use of the Phonological Loop to deal with the narration.

Directly relevant to this is the Temporal Contiguity Principle from the Cognitive Theory of Multimedia Learning (Mayer, 2008). This explains that ‘people learn more deeply when corresponding graphics and narration are presented simultaneously rather than successively’. This is interesting, as my intuition would be to present the image/animation, give students a chance to process it, and then begin the narration. This intuition is faulty due to my lack of understanding of how working memory functions. The image and the narration are processed in separate channels, and hence there is no advantage to be gained from separating them in time.

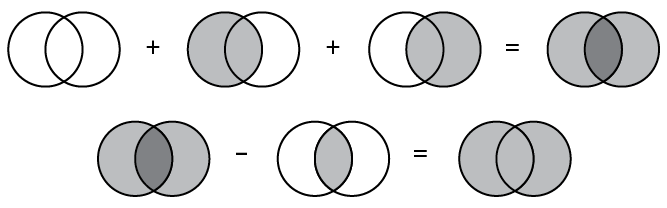

However, there are of course times when we may want to present an image and challenge the students to think about what it represents. In such circumstances it clearly makes sense to separate the narration from the image. One of my favourite examples of this is the ‘Post without Words’ series from Brent Yorgey, which I would insist my students look at and ponder in silence before we discuss it:

Figure 4.4 – Source: Brent Yorgey, available at https://mathlesstraveled.com/2017/05/31/post-without-words-18/

The presentation of images with or without narration and text may not seem like much. However, we need to remember that we are dealing with novice learners in the early knowledge acquisition phase of learning. A phrase I like is ‘the cusp of understanding’. Imagine you have a student who is close to making the key breakthrough needed to understand a concept – they are so close, but things could still go either way. Students on such a cusp of understanding need as much of their working memory resources as possible dedicated to the matter in hand. Hence, such small changes to the presentation of information could make all the difference, allowing for successful processing of information to instigate that all-important change to long-term memory. Indeed, when considering all the tweaks suggested in this chapter, it may be useful to think in terms of students on the cusp of understanding.

What I do now

Where possible – and when appropriate – I present images or animations without on-screen text, and narrate over the top instead. Obviously there are times when I do need to use text, and for those occasions I follow the guidelines suggested by the Split-Attention and Redundancy Effects discussed later in this chapter.