Now that we know how to create our images, we need a way to maintain the desired state of our applications. Here's where container orchestrators come in. Container orchestrators answer questions such as the following:

- How do I maintain my applications so that they are highly available?

- How do I scale each microservice on demand?

- How do I load balance my application across multiple hosts?

- How do I limit my application's resource consumption on my hosts?

- How do I easily deploy multiple services?

Container orchestrators make administering your containers considerably easier and more efficient. There are several orchestrators available, but the most widely used are Docker Swarm and Kubernetes. We will discuss Kubernetes later in this chapter and take a more in-depth look at it in Chapter 7, Understanding the Core Components of a Kubernetes Cluster.

What all orchestrators have in common is their basic architecture: a cluster composed of master nodes that watch over your desired state, which is saved in a database. The masters start or stop your containers depending on the state of the worker nodes, which are in charge of running the container workloads. Each master node is also in charge of deciding which container has to run on which node, based on your predefined requirements, and of scaling your containers or restarting any failed instances.

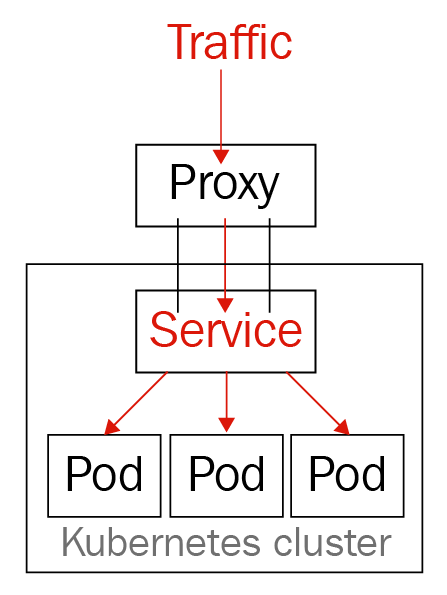
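To make the idea of maintaining a desired state more concrete, here is a minimal Python sketch of a single reconciliation pass. It is not how Kubernetes or Docker Swarm are implemented; the `Worker` class, the `reconcile` function, and the "least-loaded node" placement rule are hypothetical names and simplifications chosen purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    """Hypothetical worker node that runs containers (identified by service name)."""
    name: str
    healthy: bool = True
    containers: list = field(default_factory=list)


def reconcile(desired, workers):
    """Run one pass that moves the cluster toward the desired replica counts.

    `desired` maps a service name to the number of replicas we want.
    Returns a list of human-readable actions taken during this pass.
    """
    actions = []

    # Containers on failed workers are treated as lost.
    for worker in workers:
        if not worker.healthy and worker.containers:
            actions.append(f"lost {len(worker.containers)} container(s) on {worker.name}")
            worker.containers.clear()

    healthy = [w for w in workers if w.healthy]

    for service, replicas in desired.items():
        running = sum(w.containers.count(service) for w in healthy)

        # Scale up: place each missing replica on the least-loaded healthy worker.
        while running < replicas and healthy:
            target = min(healthy, key=lambda w: len(w.containers))
            target.containers.append(service)
            actions.append(f"start {service} on {target.name}")
            running += 1

        # Scale down: remove surplus replicas from the busiest worker running the service.
        while running > replicas:
            candidates = [w for w in healthy if service in w.containers]
            target = max(candidates, key=lambda w: len(w.containers))
            target.containers.remove(service)
            actions.append(f"stop {service} on {target.name}")
            running -= 1

    return actions
```

Calling `reconcile({"web": 3}, workers)` repeatedly, for example after marking one worker as unhealthy, mimics how the masters keep comparing the saved desired state with what the worker nodes are actually running and then correct any difference.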

However, orchestrators do more than provide high availability by restarting containers and bringing them up on demand. Both Kubernetes and Docker Swarm also have mechanisms to control traffic to the backend containers, in order to provide load balancing for incoming requests to your application services.
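As a rough illustration of what load balancing incoming requests means, the following Python sketch distributes requests across backend containers in round-robin order. Round robin is only one simple strategy, assumed here for illustration; the `RoundRobinBalancer` class and the backend addresses are hypothetical, and the actual mechanisms in Kubernetes and Docker Swarm work differently and depend on configuration.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical balancer that hands each request to the next backend in turn."""

    def __init__(self, backends):
        self._backends = list(backends)
        if not self._backends:
            raise ValueError("at least one backend is required")
        # cycle() repeats the backends endlessly in their original order.
        self._next_backend = cycle(self._backends)

    def route(self, request):
        """Return the (backend, request) pair chosen for this request."""
        return next(self._next_backend), request


# Example backend addresses are made up for this sketch.
balancer = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"])
for path in ["/", "/login", "/cart", "/checkout"]:
    backend, request = balancer.route(path)
    print(f"{request} -> {backend}")
```

With three backends, the fourth request wraps around to the first backend again, so no single container receives all of the traffic.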

The following diagram demonstrates the traffic going to an orchestrated cluster:

Let's explore Kubernetes a little bit more.