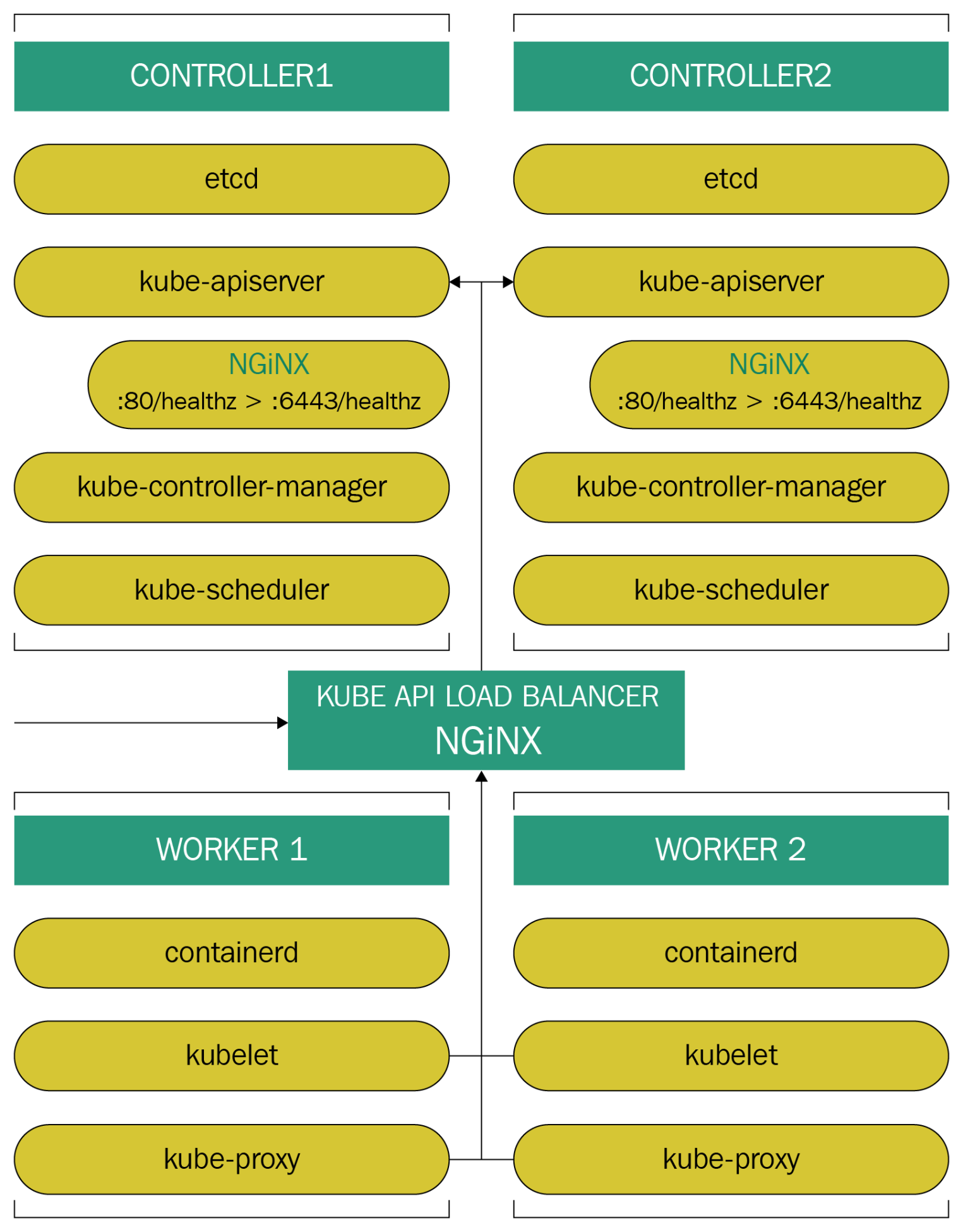

Our nodes still need to talk to the API server and, as we mentioned before, running several master nodes requires a load balancer in front of them. When it comes to load balancing requests from the nodes to the masters, there are several options to pick from, depending on where you are running your cluster. If you are running Kubernetes in a public cloud, you can go with your cloud provider's load balancer offering. These are usually elastic, meaning that they autoscale as needed, and they offer more features than you actually require, since load balancing requests to the API server is the only task that your load balancer will perform. This leads us to the on-premises scenario. As we are sticking to open source solutions here, you can configure a Linux box running either HAProxy or NGINX to satisfy your load balancing needs. There is no wrong answer in choosing between the two, as both provide exactly what you need.
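
To make the on-premises option more concrete, the following is a minimal sketch of what the HAProxy side could look like. It is a small Python helper that renders only the `frontend` and `backend` sections of an HAProxy configuration, balancing TCP connections across the masters. The master names, the IP addresses, the section names (`kube-apiserver`, `kube-masters`), and port `6443` are assumptions made for illustration. A real `haproxy.cfg` would also need `global` and `defaults` sections with timeouts.

```python
def render_haproxy_config(masters, port=6443):
    """Return the frontend/backend part of an HAProxy config.

    `masters` is a list of (name, ip_address) tuples. The port defaults
    to 6443, which is assumed here to be the API server's secure port.
    """
    lines = [
        "frontend kube-apiserver",
        f"    bind *:{port}",
        "    mode tcp",  # pass TLS through untouched; the API server terminates it
        "    default_backend kube-masters",
        "",
        "backend kube-masters",
        "    mode tcp",
        "    balance roundrobin",
    ]
    for name, address in masters:
        # `check` enables health checks so a failed master is taken out of rotation
        lines.append(f"    server {name} {address}:{port} check")
    return "\n".join(lines) + "\n"


# Hypothetical master nodes; replace with your own names and addresses.
MASTERS = [
    ("master-1", "10.0.0.11"),
    ("master-2", "10.0.0.12"),
    ("master-3", "10.0.0.13"),
]

if __name__ == "__main__":
    print(render_haproxy_config(MASTERS))
```

The sketch uses TCP mode rather than HTTP mode so that the load balancer simply forwards encrypted traffic to whichever master is healthy. An equivalent setup is possible with NGINX.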

So far, the basic architecture will look like the following screenshot: