1 Introduction

Musicians introduce deviations from the score when performing a musical piece in order to achieve a particular expressive intention. Computational expressive music performance modelling (CEMPM) aims to characterise such deviations using computational techniques (e.g. machine learning). In this context, CEMPM aims to formulate hypotheses on the expressive devices musicians use when performing (consciously or unconsciously), which can be empirically verified on measured performance data. Empirical models are often obtained from the quantitative analysis of musical performances, based on measurements of timing, dynamics, and articulation (e.g. Shaffer et al. 1985; Clarke 1985; Gabrielsson 1987; Palmer 1996a; Repp 1999; Goebl 2001, to name a few). A state-of-the-art review is presented in Gabrielsson (2003). Computational models have been implemented as rule-based models (Friberg et al. 2000; the KTH model), mathematical models (Todd 1992), and structure-level models (Mazzola 2002).

Machine learning techniques have been used to predict performance variations in timing, articulation and energy (e.g. Widmer 2002), and to model concrete expressive intentions (e.g. mood, musical style, performer, etc.). Most of the literature focuses on classical piano music (e.g. Widmer 2002). Piano keys work as ON/OFF switching devices (e.g. MIDI pianos), which simplifies data acquisition, where performance data has to be converted into machine-readable data. Some exceptions can be found in jazz saxophone music, where case-based reasoning (Arcos et al. 1998) and inductive logic programming (Ramirez et al. 2011) have been used. Jazz guitar expressive performance modelling has been studied by Giraldo and Ramirez (2016a, b), with special emphasis on melodic ornamentation.

However, few studies have been conducted in the context of classical guitar that examine the intrinsic variations performers introduce when no specific expressive intentions are provided. In this study, we present a machine learning approach in which CEMPM techniques are applied to study the expressive variations that nine different guitarists introduce when performing the same musical piece, for which no performance indications are provided. We study the correlations among the performers' variations in timing and energy. We extract features from the score to obtain a predictive model for each musician, and then cross-validate the models among performers.

2 Materials and Methods

For this study we obtained recordings of nine professional guitarists performing the same musical piece. The piece was written for classical guitar and was composed specifically for this study. The musicians did not know the piece beforehand, and no particular expressive or performance indications were provided (neither written nor verbal). The performers were allowed to freely introduce expressive variations according to their own taste and criteria. They were also allowed to practise the piece for as long as they wanted, until they were satisfied with their interpretation, before recording. The recordings took place at different studios/institutions and were collected by the Department of Music from the Faculty of Arts of the University of Quebec in Montreal (UQAM), Canada.

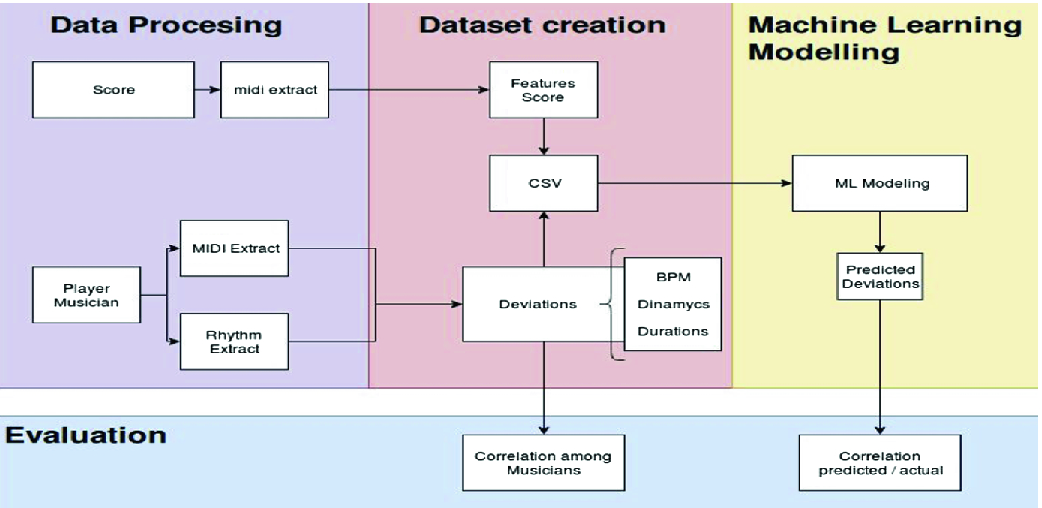

2.1 Framework

Framework and data processing flow.

Data Processing. The musical score was created in MusicXML format, from which we obtained machine-readable (MIDI-type) information for each note, i.e. its onset (in seconds), duration (in seconds), pitch, and velocity (which refers to volume). We used the score as the dead-pan performance (i.e. a robotic or inexpressive performance).

The audio spectrogram $V$, with $K$ frequency bins and $N$ frames, was factorised as:

$$V \approx WH$$

where $W \in \mathbb{R}^{K \times R}$ contains the spectral bases for each of the $R$ pitches and $H \in \mathbb{R}^{R \times N}$ is the pitch activity matrix across time. The number of pitches $R$ and the initial weights of the $W$ and $H$ matrices were initialised, informed by the score (for an overview see Clarke 1985). Later, manual correction was performed over the spectrum. Finally, energy information (i.e. velocity) was obtained from the RMS value, calculated over the audio wave, between the obtained note boundaries. Similarly, we performed automatic beat extraction (Zapata et al. 2014), followed by manual correction, to obtain the beat information (in seconds) over the audio signal.
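As a rough illustration of the factorisation of a spectrogram into spectral bases and a pitch activity matrix, the sketch below uses the standard multiplicative-update rules for non-negative matrix factorisation with a Euclidean cost. This is an assumption: the text does not state which variant, cost function, or settings were used. The matrix names `V`, `W` and `H`, the helper functions, and the toy data are all hypothetical. The sketch relies on one property of multiplicative updates: an entry initialised to zero stays zero, so a score-derived mask in the initial matrices constrains where each pitch may be active.

```python
# Illustrative sketch only; not the authors' implementation.
EPS = 1e-9  # avoids division by zero in the update rules


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    """Plain-Python product of two matrices stored as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def scale(M, num, den):
    """Element-wise M * num / (den + EPS)."""
    return [[m * n / (d + EPS) for m, n, d in zip(mr, nr, dr)]
            for mr, nr, dr in zip(M, num, den)]


def nmf(V, W, H, n_iter=200):
    """Refine initial W (K x R) and H (R x N) so that W*H approximates V (K x N)."""
    for _ in range(n_iter):
        Wt = transpose(W)
        H = scale(H, matmul(Wt, V), matmul(matmul(Wt, W), H))
        Ht = transpose(H)
        W = scale(W, matmul(V, Ht), matmul(W, matmul(H, Ht)))
    return W, H


def frobenius_error(V, W, H):
    WH = matmul(W, H)
    return sum((v - a) ** 2 for vr, ar in zip(V, WH) for v, a in zip(vr, ar)) ** 0.5


# Toy spectrogram: K=4 bins, N=6 frames, R=2 pitches (made-up numbers).
V = [[1.0, 1.0, 1.0, 0.0, 0.0, 0.0],
     [0.5, 0.5, 0.5, 0.0, 0.0, 0.0],
     [0.0, 0.0, 0.0, 2.0, 2.0, 2.0],
     [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]]

# Score-informed initialisation: which bins belong to each pitch (W_init)
# and in which frames the score says each pitch sounds (H_init).
W_init = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
H_init = [[1.0, 1.0, 1.0, 0.0, 0.0, 0.0],
          [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]]

err_before = frobenius_error(V, W_init, H_init)
W_fit, H_fit = nmf(V, W_init, H_init)
err_after = frobenius_error(V, W_fit, H_fit)
```

In this toy case the rows of `H_fit` are non-zero only in the frames allowed by the score mask, and note boundaries could then be read from where each row rises and falls.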

Data-set Creation. Feature extraction from the score was performed by extracting local information of the notes in the score (e.g. pitch, duration, etc.) as well as contextual information in which the note occurs (e.g. previous/next interval, metrical strength, harmonic/melodic analysis, etc.). For an overview see Giraldo and Ramirez (2016a, b). A total of 27 descriptors were extracted for each note. Later, deviations in tempo, measured in Beats Per Minute (BPM), and in Inter Onset Interval (IOI) were calculated for each note and performer as the difference between the theoretical BPM/IOI values in the score and the corresponding values in the performance. Finally, we obtained data-sets for each of the nine performers, as well as for each of the three performance deviations considered (i.e. energy, BPM, and IOI deviations). A total of 27 data-sets were obtained, where each instance is composed of the feature set extracted for a note, and the corresponding deviation is the value to be predicted.
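The deviation computation can be sketched as follows. The function names and the toy onset and beat times are hypothetical, and the sign convention (performance minus score) is an assumption, since the text only states that a difference is taken.

```python
def inter_onset_intervals(onsets):
    """IOI of each note: time from its onset to the next onset (seconds)."""
    return [nxt - cur for cur, nxt in zip(onsets, onsets[1:])]


def local_bpm(beat_times):
    """Local tempo in BPM from consecutive beat positions (seconds)."""
    return [60.0 / (nxt - cur) for cur, nxt in zip(beat_times, beat_times[1:])]


def deviation(score_values, performed_values):
    """Element-wise difference, performance minus score (assumed convention)."""
    return [p - s for s, p in zip(score_values, performed_values)]


# Made-up example: four notes, score at 120 BPM.
score_onsets = [0.0, 0.5, 1.0, 2.0]
performed_onsets = [0.0, 0.55, 1.0, 2.2]
ioi_dev = deviation(inter_onset_intervals(score_onsets),
                    inter_onset_intervals(performed_onsets))

score_beats = [0.0, 0.5, 1.0, 1.5]
performed_beats = [0.0, 0.5, 1.1, 1.6]
bpm_dev = deviation(local_bpm(score_beats), local_bpm(performed_beats))
```

Here the second performed beat interval lasts 0.6 s instead of 0.5 s, so the local tempo drops from 120 to about 100 BPM and the corresponding BPM deviation is roughly -20.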

Machine Learning Modelling. Each of the nine performer data-sets was used as both train and test set in an all-vs-all cross-validation fashion. This consisted of obtaining a predictive model for each performer (i.e. every performer data-set was used as a train set), applying each model to all the performers (i.e. every performer data-set was used as a test set), and finally obtaining a model evaluation for each train/test pair.
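The all-vs-all scheme can be sketched as below. A 1-nearest-neighbour regressor is used purely as a stand-in for the learning algorithms compared in this study, and the two score features, the performer labels, and the deviation values are made up for illustration. Each pair is evaluated with the Pearson correlation coefficient between predicted and measured deviations.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def nearest_neighbour_predict(train_X, train_y, x):
    """Stand-in model: return the target of the closest training instance."""
    distances = [sum((a - b) ** 2 for a, b in zip(row, x)) for row in train_X]
    return train_y[distances.index(min(distances))]


def all_vs_all(features, targets):
    """Train on each performer, test on every performer, return CC per pair."""
    scores = {}
    for train, train_y in targets.items():
        for test, test_y in targets.items():
            predicted = [nearest_neighbour_predict(features, train_y, x)
                         for x in features]
            scores[(train, test)] = pearson(predicted, test_y)
    return scores


# Score features are shared by all performers because they play the same piece.
# Hypothetical columns: (duration in beats, metrical strength).
features = [(0.5, 1.0), (0.5, 0.5), (1.0, 1.0), (0.25, 0.25), (1.0, 0.5)]

# Hypothetical per-note IOI deviations (seconds) for three performers.
targets = {
    "A": [0.05, -0.02, 0.10, -0.04, 0.08],
    "B": [0.04, -0.01, 0.12, -0.05, 0.06],
    "C": [-0.03, 0.02, -0.08, 0.05, -0.01],
}

cc = all_vs_all(features, targets)
```

The resulting dictionary has one entry per train/test pair and can be arranged as a square matrix with train performers on one axis and test performers on the other. In this toy data, performers A and B deviate in similar ways and correlate strongly, while C deviates in the opposite direction and correlates negatively with A.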

Mean Correlation Coefficient (CC) comparison among models.

| Deviations | SVR (CC) | RT (CC) | ANN (CC) |
|---|---|---|---|
| Energy | 0.45 | 0.42 | 0.61 |
| IOI | 0.58 | 0.60 | 0.82 |
| BPM | 0.68 | 0.69 | 0.87 |

BPM percentage of deviation among nine performers for each consecutive note

3 Results

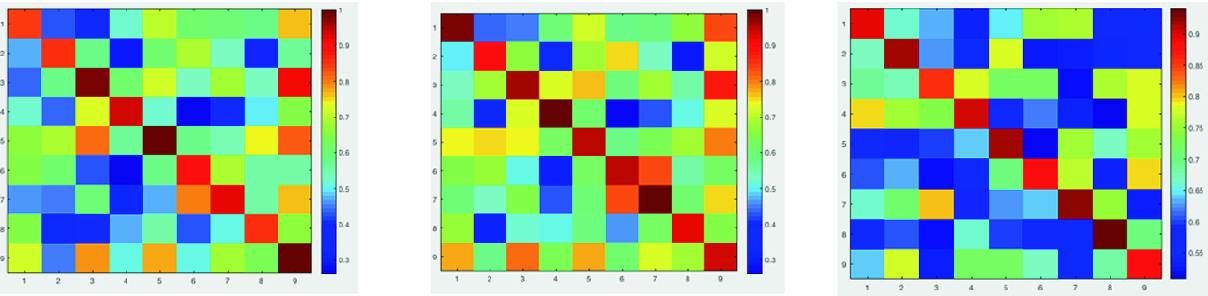

Scaled graph of the correlations obtained for each performer model, for each of the three expressive deviations considered (from left to right: BPM, IOI and Energy). The vertical axis corresponds to the performer data used as train set (from 1 to 9), and the horizontal axis corresponds to the performer data used as test set (from 1 to 9).

4 Conclusion

In this paper we have presented a machine learning approach based on computational modelling of expressive music performance to study the correlations among the intrinsic expressive deviations that musicians introduce when performing a musical piece. We obtained recordings of the same musical piece by nine professional guitarists, for which no expressive indications were provided and in which the performers freely chose the expressive actions performed. We extracted descriptors from the score and measured the deviations introduced in each performance in terms of BPM, IOI and Energy. We obtained machine learning models using ANNs for each performer, and cross-validated the performance among the interpreters' models based on CC. Preliminary results indicate that performers take similar actions in terms of timing deviations, whereas less correlation was obtained for energy deviations.