GRAND MASTER: Oh, if you knew what our astrologers say of the coming age, and of our age, that has in it more history within one hundred years than all the world had in four thousand years before!

—TOMMASO CAMPANELLA (1568–1639), The City of the Sun1

“We must make use of physics for warfare.”

—Nazi slogan, to be reworded by the German scientist Werner Heisenberg as “We must make use of warfare for physics.”2

THE SUN DOES NOT STAND STILL, OR EVEN MOVE IN A SIMPLE PATTERN. At different times, and under different examinations, it may appear a solid orb, a ball of fire, or a constantly discharging source of winds, flares, spirals, and radioactive particles. Its latitudes rotate at different speeds, and its whole surface surges up and down about two and a half miles every 160 minutes—although even to talk about the Sun’s “surface” is misleading since, being largely a collection of gases, it has none. Seen from other planets, it is larger or smaller than when viewed from Earth; and its atmospheric effects are different on Mercury, say, than on the torrid mists of Venus, and different again on our planet. By the end of the eighteenth century, astronomers had recognized that it was simply one star among many—just the one that happened to be nearest to us—and had estimated its distance, size, mass, rate of rotation, and movements in space to within 10 percent of today’s values. But they continued to gnaw away at other problems.

What was going on inside it? What caused it to shine? How old was it, and how did it relate to other heavenly bodies? Only now were they finding many of the answers. Between 1800 and 1950, aided and abetted by advances in technology, the Industrial Revolution, and a new enthusiasm for scientific inquiry, hardly a year went by without astronomers, physicists, chemists, and geologists finding something that added to our understanding.

In just the first half of the nineteenth century, for example, absorption lines in the solar spectrum were mapped, and electromagnetic induction and electromagnetic balance were being discovered, both of which increased our knowledge of how the Sun draws us to it. During the same period, the Sun’s energy output—the “solar constant”—was measured, leading to a greater appreciation of both its temperature and its influence on climate. In 1860, the Vatican’s chief astronomer photographed the eclipse of July 18, showing that the corona and prominences were real features, not optical illusions or deceivingly illuminated mountains on the eclipsing Moon.

The list of specific discoveries runs close to two hundred. In just the single decade 1871-80, for instance, came electromagnetic radiation, the solar distillation of water (the Sun as purifier), and the first radical reestimate of the Sun’s age at 20 million years and of the length of time it would have taken to contract to its current size—both of which were to touch off a major debate between scientists and literal interpreters of the Bible. Other discoveries were less contentious: The Sun’s surface temperature was estimated at 9,806°F (5,430°C), while its core was reckoned to be gaseous, with temperatures steadily decreasing from its center to the surface. Several new instruments (the heliospectroscope, star spectroscope, telespectroscope) were invented, and all fixed stars were found to display only a few combinations of spectra, depending on their physical-chemical nature, “an achievement of as great significance as Newton’s law of gravitation.”3

In recent years writers such as Dava Sobel, Timothy Ferris, and Bill Bryson, as well as popularizing scientists including Carl Sagan and Stephen Hawking, have demystified such esoteric subjects as dark matter (invisible but still exercising attraction, whose presence we infer from its gravitational pull, though we don’t know what it is) and black holes (familiar, but still difficult fully to imagine). But what about deuterium, the ionosphere, even the Coriolis effect? Such words and phrases are part of a discourse that can seem closed to most of us. But two or three of the main strands of research can be braided together to show how, between 1800 and 1953, our knowledge of—and our feelings about—the Sun were transformed.

TOWARD THE MIDDLE of the nineteenth century, the French philosopher Auguste Comte was asked what would be forever impossible. He looked up at the stars: “We may determine their forms, their distances, their bulk, their motions—but we can never know anything of their chemical or mineralogical structure; and, much less, that of organized beings living on their surface.” Within a surprisingly few years mankind would have grasped those very truths. To put into context how quickly research expanded and focus shifted: in 1800 only one stellar “catalogue of precision” was available; in 1801, J. J. Lalande published one describing 47,390 stars; and in 1814 Giuseppe Piazzi added another 7,600. Others followed; between 1852 and 1859 alone some 324,000 were registered. Photographic charting began in 1885; and by 1900 the third and concluding volume of a work appeared that located a massive 450,000 stars, the fruit of collaboration between scientists in Groningen and David Gill in Cape Town. It became abundantly clear that the Sun did not hang there as the supreme object but was just one more star, an unspectacular one at that. Scientists could now concern themselves with this multitude of other suns, many far larger and heavier than our own.*

Even this piece of knowledge was to be massively augmented: in the predawn hours of October 6, 1923, at the Mount Wilson Observatory, Edwin Hubble was examining a photograph of a fuzzy, spiral-shaped swarm of stars known as M31,† or Andromeda, which was assumed to be part of the Milky Way, when he noticed a star that waxed and waned with clockwork regularity, and the longer it took to vary, the greater its intrinsic brightness. Hubble sat down and calculated that the star was over nine hundred thousand light-years away—three times the then estimated diameter of the entire universe! As National Geographic reported the story: “Clearly this clump of stars resided far beyond the confines of the Milky Way. But if Andromeda were a separate galaxy, then maybe many of the other nebulae in the sky were galaxies as well. The known universe suddenly ballooned in size.”6

Today we know that there are at least 100 billion galaxies, each one harboring a similarly enormous number of stars. The Earth had already been demoted by the realization that it circled the Sun, and not the other way around; William Herschel and his son, John, with their revolutionary telescopes, had revealed the riches beyond our solar system, and unwittingly demoted the Sun as well. Here was a third or even a fourth diminution: that not only were we orbiting what was just a minor star among a multitude in the Milky Way, but that the Milky Way itself was but one galaxy of an untold number. For scientists, stellar astronomy was the future; minor stars need not apply. As A. E. Housman, poet and classicist and also a keen student of astronomy, tartly observed, “We find ourselves in smaller patrimony.”7

THIS DISPLACEMENT WAS occurring alongside the centuries-long and frequently vehement argument over the age of the Earth—and thus the age of both the Sun and the universe, an issue that provoked intense interest among astronomers, theologians, biologists, and geologists. The date of Creation had been made particularly exact by the elderly Irish Protestant archbishop James Ussher (1581–1656), who took all the “begats” in Genesis and the floating chronology of the Old Testament and—with a little helpful tweaking from Middle Eastern and Mediterranean histories, not least the Jewish calendar, which lays it down that Creation fell on Sunday, October 23, 3760 B.C.—arrived at the conveniently round figure of four thousand years between the Creation and the (most likely) birth date of Christ in 4 B.C.

Were it not for the London bookseller Thomas Guy, Ussher’s dating might have sunk into obscurity, as had those of the hundred or so biblical chronologers before him. By law, only certain publishers, such as the Cambridge and Oxford university presses, were allowed to print the Bible, but Guy acquired a sublicense to do so, and in a moment of marketing inspiration printed Ussher’s chronology in the margins of his books, along with engravings of bare-breasted women loosely linked to Bible stories. The edition earned Guy a fortune, enough to endow the great London hospital that bears his name. If this were not enough, in 1701 the Church of England authorized Ussher’s chronology to appear in all official editions of the King James Version. The “received chronology” soon became such an automatic presence that it was printed well into the twentieth century.

As the historian Martin Gorst puts it, “The influence of his date was enormous. For nearly two hundred years, it was widely accepted as the true age of the world. It was printed in Bibles, copied into various almanacs and spread by missionaries to the four corners of the world. For generations it formed the cornerstone of the Bible-centered view of the universe that dominated Western thought until the time of Darwin. And even then, it lingered on.”8 Gorst recalls coming across his grandmother’s 1901 Bible and finding, set opposite the opening verse of Genesis, the date and time for when the world began: 6:00 P.M. on Saturday, October 22, 4004 B.C. *

Even in Ussher’s time, however, freethinkers had begun to question the accepted chronology. Travelers returned from far-off lands with reports of histories that went back much further than 4004 B.C., and with the advent of new forms of inquiry based on scientific principles—Nullius in Verba (Take Nobody’s Word for It) runs the motto of Britain’s Royal Society—it became the turn of natural philosophers to debate the age of the globe.

In 1681, a fellow don of Newton’s at Cambridge, Thomas Burnet, published his bestselling Sacred Theory of the Earth, which argued that the world’s great mountain ranges and vast oceans had been shaped by the Flood of Genesis; he got around the Old Testament statement that Creation had taken only six days by quoting Saint Peter’s pronouncement that “one day is with the Lord as a thousand years.” A shelf of books appeared offering similar explanations. Then, later that same decade, two British naturalists, John Ray and Edward Lhuyd, after examining shells in a Welsh valley and ammonites on the northeastern English coast, concluded that both groups of fossils came from species that would have needed far more than 5,680-odd years in which to live and die out in such numbers. Their researches aroused a short-lived flurry of interest, followed by a period of neglect until the London physician John Woodward (1665–1728) posited that fossils were the “spoils of once-living animals” that had perished in the Flood. But this theory too was met with ridicule: faith in Ussher’s chronology endured.

It was Edmond Halley who, in 1715, became the first person to suggest that careful observation of the natural world (such as measuring the salinity of the oceans) would provide clues to the Earth’s age. His ideas were eagerly adopted by Georges-Louis de Buffon (1707–1788), who in his Histoire naturelle (1749) argued that the Earth was tens of thousands of years old, an idea that “dazzled the French public with a timescale so vast they could barely comprehend its enormity.”10 While Buffon did not sample the oceans, he did believe the Earth began not in the biblical single moment but as the result of a comet colliding with the Sun, the debris from this explosion coalescing to form the planets.†

Theologians at the Sorbonne erupted in fury, and Buffon was forced to publish a retraction—privately declaring, “Better to be humble than hanged.” But he would not be kept quiet for long. When another Frenchman, the mathematician Jean-Jacques Dortous de Mairan, demonstrated that the Earth did not get its heat from the Sun alone but contained an inner source, Buffon was galvanized into writing a history of the world from its inception. If the Earth were still cooling, then by finding the rate at which it lost heat one could calculate its age. Over the next six years he conducted a series of experiments, ultimately concluding that the Earth was 74,832 years old (a figure he believed to be highly conservative—his unofficial estimate was a hundred thousand). His book was met by a chorus of disbelief. In April 1788 he died, and the following year, the Revolution erupted. Buffon’s crypt was broken into and the lead from his coffin requisitioned to make bullets.

Undaunted, the intellectually curious continued to search for explanations for all they found on Earth, and by the nineteenth century the division between the Church and science was greater than ever. Between 1800 and 1840 the words “geology,” “biology,” and “scientist” were either coined or acquired specialized senses in the vernacular. Geology in particular was popular, and soon scientists and others were identifying and naming the successive layers of strata—in Byron’s phrase, “thrown topsy-turvy, twisted, crisped, and curled”—that formed the Earth’s surface. A shy young British barrister named Charles Lyell (1797–1875), building on John Woodward’s example, used fossils to measure Earth’s age, finding them in lava remains in Sicily that he estimated to be a hundred thousand years old.

By 1840, the evidence that the Earth was exceedingly “ancient” was overwhelming. Even if man had been around for only six thousand years, prehistoric time had expanded beyond comprehension. Then in 1859 came The Origin of Species, and the great argument that linked man’s evolution to that of the apes, undermining a literal reading of the Bible story. Dr. Ussher’s reckonings were at last no longer feasible. Cambridge University Press removed his chronology from its Bibles in 1900; Oxford followed suit in 1910. By then Mark Twain had already imagined the Eiffel Tower as representing the age of the world, and likened our share of that age to the skin of paint on the knob at its pinnacle.

Mighty doctrines, when overthrown, open up amazing perspectives. Once science had extended the age of the Earth to over a hundred thousand years, an array of new ideas emerged. What age to give now to the Sun? After Darwin, scientists had to consider that it had been releasing its energy over millions of years. Natural selection required that the solar system be of a previously inconceivable age. It is only because our Sun is not particularly powerful and burns relatively slowly, being tolerant of the sensitive carbon-based compounds that are the basis of earthly life, that evolution (not that Darwin ever liked that word) could occur.

Although the argument about Earth’s age rumbled on through the latter decades of the nineteenth century, questions about its star were drawing more attention from scientists. As Timothy Ferris puts it, “The titans of physics chose to focus less on the Earth than on that suitably grander and more luminous body.”11 In the summer of 1899, a professor of geology at the University of Chicago, Thomas Chamberlin (1843–1928), published a paper on how the Sun was fueled that challenged one of the then-basic assumptions of astrophysics:

Is present knowledge relative to the behavior of matter under such extraordinary conditions as obtain in the interior of the Sun sufficiently exhaustive to warrant the assertion that no unrecognized sources of heat reside there? What the internal constitution of the atoms may be is as yet open to question. It is not improbable that they are complex organizations and seats of enormous energies.12

Within five years the central principles of physics, and consequently the basic assumptions about the functioning of the Sun, had been remade.

IN THE SPRING of 1896, Henri Becquerel, a Paris physicist, inadvertently stored some unexposed photographic plates wrapped in black paper underneath a lump of uranium ore he had been using in an experiment. (Uranium, named after the planet in 1789, is the most complex atom regularly found in nature, composed primarily of uranium-238—as would be discovered, composed of 92 protons and 146 neutrons—plus a small percentage of the less stable uranium-235, three neutrons the fewer.) When after a few weeks he developed the plates, he found that it was as if they had been exposed to light, for they had been imprinted with an image of the silvery-white mineral, which, he thought, must be emitting a “type of invisible phosphorescence.” Marie and Pierre Curie, following up on this lucky discovery, would later name the phosphorescence “radioactivity.” But uranium was not easy to obtain, nor was the radiation it produced particularly impressive, so the Curies’ findings were generally ignored. Then in 1898 they discovered that pitchblende, another uranium ore, emitted much greater amounts of radioactivity than other sources of the metal. Was it possible that there were elements on Earth that were releasing tremendous amounts of unseen energy, waiting to be tapped?

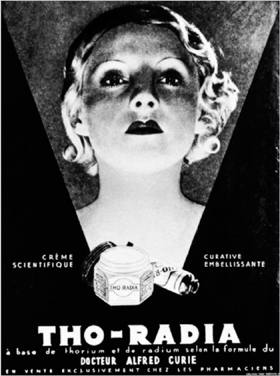

Soon the further, rarer element (termed “radium”) that the Curies had extracted from pitchblende was being written up in mainstream papers as the miracle metal, the most valuable element known to man, capable of curing blindness, revealing the sex of a fetus, even turning the skin of a black man white. “A single gram would lift five hundred tons a mile in height, and an ounce could drive a car around the world.”13 Advertisements promoted its use in “radioactive drinking water” for the treatment of gout, rheumatism, arthritis, diabetes, and a range of other afflictions. By 1904, scientists were also claiming radium to be a source of energy for the Sun.

An advertisement for Tho-Radia, a cream containing radium and thorium, marketed in the 1930s as a breakthrough in beauty preparations. People demonstrated radium at parties and went to radium dances, while the word became a fashionable brand name: Radium beer, Radium butter, Radium chocolate, Radium condoms, Radium suppositories, and even Radium contraceptive jelly.

The question was whether the energy was coming from within the atoms of radium or from outside. The great physicist Ernest Rutherford (1871–1937), a New Zealander who had worked on the nature of atoms in both England and Canada, began to explore atomic nuclei, combining forces with a Canada-based English chemist, Frederick Soddy (1877–1956). Radium, Rutherford had established, generates enough heat to melt its weight in ice every hour, and will continue to do so for a millennium or more: the Earth stays warm not least because it is heated by radioactive decay in the rocks and the molten core that lies at its center.*

The two men were soon experimenting on thorium, a radioactive element not unlike radium, and found that it independently yielded a radioactive gas—one element transmuting into another. This result was so startling that when Soddy told Rutherford about his finding, the New Zealander shouted back across the lab floor, “Don’t call it transmutation—they’ll have our heads off as alchemists!” They proceeded to prove that the heavy atoms of thorium, radium, and other elements they discovered to be radioactive broke down into atoms of lighter elements (in the form of gases), and as they did so they threw off minute particles—which, dubbed alpha and beta rays, were actually the elements’ main output of energy.

Further experiments by Rutherford showed that most of an atom’s mass lay at its core, surrounded by a crackling web of electrons. He and Soddy speculated that radioactivity of the same kind could be powering the Sun, but while their work was considered worthy of further investigation, it was not seen as astrophysically revolutionary. Some forty years later, Robert Jungk would write in his groundbreaking account, Brighter Than a Thousand Suns, “Professor Rutherford’s alpha particles ought really, at that time, to have upset not only atoms of nitrogen but also the peace of mind of humanity. They ought to have revived the dread of an end of the world, forgotten for many centuries. But in those days all such discoveries seemed to have little to do with the realities of everyday life.”*

Soddy did his best to explain the discovery, first in The Interpretation of Radium (1912), then in The Interpretation of the Atom (1932), arguing that, before atomic decay was known, the only explanations for the Sun’s energy output had been chemical, and thus short-term and puny; but once we had learned about radioactivity, the storm of subatomic reactions that revealed themselves was on something like the right scale to power the Sun.

Nearly a decade passed before anyone built on Soddy and Rutherford’s discoveries. Eddington, fresh from his successes off the coast of West Africa, now conducted a wide-ranging study of the energy and pressure equilibrium of stars and went so far as to construct mathematical models of their temperature and density (“What is possible in the Cavendish laboratory,” he famously commented, “may not be too difficult in the Sun”). He estimated that the Sun had a central temperature of 40,000,000°F, and argued that there must be a simple relationship between the total rate of energy loss from a star (its “luminosity”) and its mass. Once he knew the Sun’s mass, he reckoned, he could predict its luminosity.

To the ancients it had seemed obvious that the Sun was on fire, but to late-nineteenth- and early-twentieth-century physicists, that was unacceptable: it was just too hot to be chemically burning. So the question remained. As John Herschel put it, the “great mystery” was

to conceive how so enormous a conflagration (if such it be) can be kept up. Every discovery in chemical science here leaves us completely at a loss, or rather, seems to remove farther the prospect of probable explanation. If conjecture might be hazarded, we should look rather to the known possibility of an indefinite generation of heat by friction, or to its excitement by the electric discharge … for the origin of the solar radiation.14

Since research into the Earth’s age was now suggesting it was more than two billion years old, the Sun must have been shining for at least that length of time. What process could possibly account for such a startling output of energy? As the Ukrainian-born American physicist George Gamow (1904–1968) put it, “If the Sun were made of pure coal and had been set afire at the time of the first Pharaohs of Egypt, it would by now have completely burned to ashes. The same inadequacy applies to any other kind of chemical transformation that might be offered in explanation … none of them could account for even a hundred-thousandth part of the Sun’s life.”15
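Gamow's comparison can be checked with rough arithmetic. The sketch below is an illustration only, not Gamow's own calculation: it assumes modern round values for the Sun's mass and luminosity and a typical heat of combustion for coal of about 30 megajoules per kilogram, and it ignores the oxygen that burning would require. The function name is hypothetical.

```python
# Rough check of the "Sun made of coal" comparison.
# All constants are assumed modern round values, not figures from the text.
SOLAR_MASS_KG = 1.989e30        # mass of the Sun
SOLAR_LUMINOSITY_W = 3.828e26   # energy radiated per second, in watts
COAL_ENERGY_J_PER_KG = 3.0e7    # assumed heat of combustion of coal
SECONDS_PER_YEAR = 3.156e7


def burn_time_years(mass_kg, energy_per_kg, luminosity_w):
    """Years a body could shine at a fixed luminosity by burning all its mass."""
    total_energy_j = mass_kg * energy_per_kg
    return total_energy_j / luminosity_w / SECONDS_PER_YEAR


coal_sun_years = burn_time_years(
    SOLAR_MASS_KG, COAL_ENERGY_J_PER_KG, SOLAR_LUMINOSITY_W
)
print(f"A coal Sun would burn out in about {coal_sun_years:,.0f} years")
```

Under these assumptions the answer comes out at a few thousand years, roughly the span since the early Egyptian dynasties and far short of the ages geologists were proposing.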

Cosmologists looked to Gamow’s fellow astrophysicists for an answer, and Eddington offered two: the first was that electrons and protons, having opposite electric charges, annihilated each other within the Sun’s core, with the concomitant conversion of matter to energy. About a year later he advanced his second, and correct, solution: the Sun, by fusing protons, created heavier atoms, in the process converting mass to energy. But how could such fusion take place in the Sun’s consuming heat?

A feature of these years was that many vital discoveries were made by outsiders—scientists whom no one had regarded as solar physicists before their contribution to the field.16 Eddington had a young disciple: Cecilia Payne (1900–1979) had been five years old when she saw a meteor and decided to become an astronomer. After college, she was introduced to Eddington, who advised her to continue her studies in America; and she became the first student, male or female, to earn a doctorate from the Harvard College Observatory. The examiners judged her 1925 thesis, a study of stellar atmospheres, the best ever written on astronomy.

Her solution to the temperature problem was to use Rutherford’s discoveries of atomic structure to show that all stars had the same chemical makeup: where their spectra differed, it was from physical conditions, not from their innate composition. Hydrogen and helium were by far the most abundant of the Sun’s fifty-seven known elements, as they were in other stars.17 Despite this conclusion, in her final list of chemical elements in the Sun she omitted hydrogen and helium, branding her own argument as “spurious.”

It later transpired that her supervisor, the renowned Princeton astronomer Henry Norris Russell, had tried to talk her out of her theory. “It is clearly impossible that hydrogen should be a million times more abundant than the metals,” he wrote to her, reiterating the conventional wisdom.18 But Payne’s arguments nagged at him; he reanalyzed the Sun’s absorption spectrum and finally accepted that she was right: giant stars indeed had an outer atmosphere of nearly pure hydrogen, “with hardly more than a smell of metallic vapors” in them. Stars fuse hydrogen into helium, releasing a continuous blast of energy. And when the Sun’s great energy was generated by the transformation of chemical elements taking place in its interior—as Gamow impishly noted—what was happening was precisely that “transformation of elements” that had been so unsuccessfully pursued by the alchemists of old.19

The next step was to understand nuclear fusion. The late 1920s and early 1930s witnessed a shift to research on the atomic nucleus,20 one particular center being the Institute for Theoretical Physics at Copenhagen University, directed by the estimable Niels Bohr (1885–1962), “who dressed like a banker and mumbled like an oracle.”21 By the 1920s Bohr had attained international stature and was able to attract many of the greatest physicists of the day, among them George Gamow. This remarkable Ukrainian had a reputation both for scientific innovation and for playfulness (he would illustrate his scientific papers with drawings of a skull and crossbones to indicate the danger of accepting hypotheses about fundamental particles at face value). In 1928 he showed how a positively charged helium nucleus (an “alpha particle,” of the kind emitted in such great numbers by the Sun) could escape from a nucleus of a particular metal found on Earth—uranium—despite the binding electrical forces within the metal.*

Gamow not only showed how alpha particles broke out of nuclei, he went on to show how they could break in. At Cambridge, two of his colleagues, John Cockcroft and Ernest Walton, set about applying Gamow’s theory, testing whether very high voltages would propel the particles through the outer walls of the nuclei. In 1932 they succeeded: for the first time a nucleus from one element had been broken down into another element by artificial means, a feat that became known as “splitting the atom.” In that same “year of miracles,” another Cambridge man, James Chadwick, discovered the neutron, the most common form of particle lurking inside virtually all nuclei. Suddenly, a whole variety of powerful reactions could be detected and even induced in the subatomic world. What was now obvious was that such discoveries bore out Payne’s view that these were the reactions powering the Sun.23

The high tide of crucial new work roared on. In 1934, the French physicist Frédéric Joliot and his wife, Irène Curie (daughter of Pierre and Marie), proved that by bombarding stable elements with alpha particles, “a new kind of radioactivity” could be effected. A few weeks later, the Italian physicist Enrico Fermi bombarded uranium with neutrons and reported similar results.

In 1938-39, Hans Bethe (1906–2005), the great German American atomic physicist, originally from Strasbourg but by this time at Cornell, wrote a series of articles culminating in “Energy Production in Stars,” which explained how stars—including the Sun—managed to burn for billions of years. He had been cataloguing the subatomic reactions that had been identified to date, but up until 1932 these had been only a fistful. Suddenly there came a landslide, and he could now see which particular ones explained the Sun’s workings. He posited that its abundant energy was the result of a sequence of six nuclear reactions, and it was this process that lit all the stars. Simply put: the Sun was what would become known as a nuclear reactor.24

That same year, the German physicists Otto Hahn and Fritz Strassmann demonstrated that what Fermi had witnessed in 1934 was actually the bursting of the uranium nucleus. Two of their colleagues, Lise Meitner and Otto Robert Frisch, went even further, showing that when a uranium atom is split, enormous amounts of energy are released. (Frisch asked a biologist colleague for the word that described one bacterium dividing into two, and was told “fission,” and thus the splitting of atoms acquired its name.)

The Hungarian Leo Szilard was the next to make his mark. Although even Einstein believed that an atomic bomb was “impossible,” as the energy released by a single nucleus was so insignificant, Szilard, spurred on by memories of reading H. G. Wells’s 1914 novel The World Set Free, which predicted just such a weapon, confirmed that neutrons were also given off in each “splitting,” creating the possibility of chain reactions whereby each fission set off further fissions (an idea Szilard conceived while waiting at a stoplight one dull September morning in 1933, in London), so that the energy of splitting a single uranium nucleus could be multiplied by many trillions, releasing energy exponentially.25 And not only was fission possible; it could be produced at will. Opined the Cambridge scientist C. P. Snow, “With the discovery of fission … physicists became, almost overnight, the most important military resource a nation-state could call upon.”26
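The arithmetic behind a chain reaction is simple compounding. The sketch below is an idealized illustration, assuming every fission triggers exactly k further fissions with no neutrons lost; the function name and the choice of k = 2 are hypothetical, not figures from the text.

```python
def generations_to_reach(target_fissions, k=2.0):
    """Count generations until a single generation contains at least
    `target_fissions` fissions, starting from one fission and
    multiplying by k each generation (idealized: no neutron losses)."""
    if k <= 1:
        raise ValueError("k must exceed 1 for the reaction to grow")
    fissions = 1.0
    generations = 0
    while fissions < target_fissions:
        fissions *= k
        generations += 1
    return generations


# How many doublings until one generation holds a trillion fissions?
print(generations_to_reach(1e12))
```

With k = 2, forty generations are enough to pass a trillion, which is why a reaction that sustains itself at all can grow so quickly.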

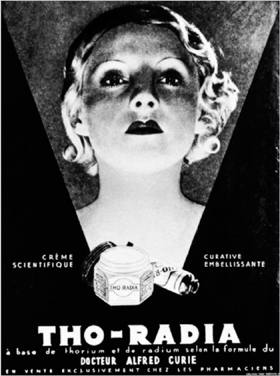

By the advent of the Second World War, both Allied and Axis scientists were keenly aware that nuclear fission might be converted into some kind of weapon, although no one was sure how.* Still highly skeptical, Einstein declared that the challenge of inducing a chain reaction and making a fission bomb was akin to going out to shoot birds at night in a land where there were very few birds; but secretly, in a letter to President Roosevelt dated August 2, 1939 (drafted along with his friend Leo Szilard), he urged that funds be devoted to developing fission-based weapons: “A single bomb of this type, carried by boat and exploded in a port, might very well destroy the whole port together with some of the surrounding territory.”28

Albert Einstein (1879–1955) with the American theoretical physicist J. Robert Oppenheimer (1904–1967) during the time they worked together before the Manhattan Project.

Much alarmed by this letter, that autumn Roosevelt earmarked a small amount of money for the study of fission: small because it was assumed that any bomb would require so many tons of uranium that it was theoretically possible but probably not a practical option. However, in early 1940 two German refugees in Britain, Otto Frisch and Rudolf Peierls, calculated that only a few pounds of the 235 isotope were required. Other scientists in Britain conceived a gaseous diffusion technique, and put together, these two advances galvanized the government to lobby for the U.S.-based research to be shifted into more professional hands and be more effectively financed.

The Americans listened. In 1942, Brig. Gen. Leslie R. Groves, Jr., took over what became known as the Manhattan Project. Guided by the theoretical physicist J. Robert Oppenheimer (1904–1967), Groves brought together the top nuclear scientists of the day, supported by unprecedented funding and manpower.29 The project took over some thirty sites across the United States and Canada—Oak Ridge, Tennessee; several in central Manhattan; Chalk River, Ontario; Richland, Washington; and its headquarters at Los Alamos (“The Cottonwoods”), a small school on a ranch near Santa Fe, New Mexico. At its peak the project employed more than 130,000 people (most of whom had no idea what they were working on). But it was still far from inevitable that the United States would become the first country to build the bomb. On the eve of World War II, Germany was at least as far advanced in nuclear physics, and it had plentiful supplies of uranium.30

When a nucleus of uranium-235 absorbs a neutron, it splits into atoms of strontium and xenon, releasing energy plus twenty-five neutrons for every ten atoms. Uranium-238, on the other hand, absorbs neutrons and does not divide, so there is no such reaction. A bomb needs to be 80 percent pure uranium-235; otherwise the uranium-238 blocks the chain reaction. For the scientists on the Manhattan Project, the problem was how to separate the two. The breakthrough came at Oak Ridge: a series of “racetracks”—really huge belts of silver magnets—were devised to pull gaseous uranium through vacuum chambers, separating the weapons-grade U-235 from its heavier, benign sibling. “You used to walk on the wooden walkways and feel the magnets pulling at the nails in your boots,” a scientist there recalled. On one occasion, a man carrying a sheet of metal walked too close to the racetrack and found himself stuck to the wall. Everyone yelled for the machines to be shut down, but since it would have taken a day or more to restart everything, the engineer in charge refused. Instead, the unfortunate had to be pried off with two-by-fours.*
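The arithmetic behind that chain reaction can be sketched in a few lines. What follows is a toy model only, not a description of how the Manhattan Project scientists calculated anything: it takes the figure given above (twenty-five neutrons for every ten atoms split, or 2.5 per fission) and introduces a hypothetical parameter, fission_fraction, for the share of neutrons that go on to split another uranium-235 nucleus rather than being absorbed by uranium-238 or escaping. That fraction is an assumption made for illustration and is not the same thing as the 80 percent purity figure.

```python
# Toy sketch: neutron numbers from one generation of fissions to the next.
# NEUTRONS_PER_FISSION comes from the text (25 neutrons per 10 atoms split).
# fission_fraction is a hypothetical, illustrative parameter.
NEUTRONS_PER_FISSION = 25 / 10


def neutron_population(generations, fission_fraction, start=1.0):
    """Return the neutron count at generation 0, 1, ..., generations."""
    # Each neutron that causes a fission yields 2.5 new neutrons on average,
    # so the population is multiplied by this factor every generation.
    multiplication = NEUTRONS_PER_FISSION * fission_fraction
    counts = [start]
    for _ in range(generations):
        counts.append(counts[-1] * multiplication)
    return counts


if __name__ == "__main__":
    for fraction in (0.8, 0.4, 0.3):
        final = neutron_population(10, fraction)[-1]
        print(f"fission fraction {fraction}: {final:.3g} neutrons after 10 generations")
```

In this sketch the reaction grows only when more than four neutrons in ten find another uranium-235 nucleus (2.5 × 0.4 = 1); below that share the population dwindles, which is the sense in which too much uranium-238 “blocks” the chain.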

When the Third Reich surrendered on May 8, 1945, the Manhattan Project was months away from a weapon. To hasten victory in the Pacific, Oppenheimer decided to conduct a test, and on July 16, in the desert north of Alamogordo, New Mexico, released the equivalent of nineteen kilotons of TNT, far mightier than any previous man-made explosion. The news was rushed to Truman, who tried to use it as leverage on Stalin at the Potsdam Conference, to no avail. After listening to the arguments from his scientific and military advisers, and hoping to avoid an invasion calculated to exact a million and a quarter Allied dead—nearly double the British and United States fatalities in the whole war up to that point—Truman ordered that the weapons be used against Japanese cities, and on August 6, a uranium-based bomb, “Little Boy,” was dropped on Hiroshima.† Three days later, “Fat Man” was loosed on Nagasaki. The pilot on the latter mission recalled, “This bright light hit us and the top of that mushroom cloud was the most terrifying but also the most beautiful thing you’ve ever seen in your life. Every color in the rainbow seemed to be coming out of it.”32 The bombs killed at least 100,000 people outright, another 180,000 dying later of burns, radiation sickness, and related cancers. The Atomic Age had begun. Hans Bethe, aghast, devoted the rest of his life to checking the weapon’s “own impulse,” as he put it: “Like others who had worked on the atomic bomb, I was exhilarated by our success—and terrified by the event.”33 Winston Churchill told the House of Commons that he wondered whether giving such power to man was a sign that God had wearied of his creation. “I feel we have blood on our hands,” Oppenheimer told Truman, who replied, “Never mind. It’ll all come out in the wash.”

On August 29, 1949, the Soviet Union tested its first nuclear device, also a fission bomb. The amount of energy practically releasable by fission bombs (that is, atomic bombs—or A-bombs—which produce their destructive force through fission alone) ranges from the equivalent of less than a ton of TNT to around 500,000 tons (500 kilotons). The other, vastly more powerful category of bomb obtains its energy by fusing light elements (not necessarily hydrogen) into slightly heavier ones—the same process that fires the stars. Duplicate this, and one can make weapons of almost limitless power. Known variously as the hydrogen bomb, H-bomb, thermonuclear bomb, or fusion bomb, such a weapon works by detonating a fission bomb in a specially manufactured compartment adjacent to a fusion fuel. The gamma rays and X-rays emitted by the explosion compress and heat a capsule of tritium, deuterium, or lithium deuteride, initiating a fusion reaction.
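To give these TNT equivalents a physical scale, the short sketch below converts them into joules. It relies on the standard convention that one ton of TNT corresponds to 4.184 billion joules; the yields are the ones quoted in this chapter, and the function name is simply an illustrative choice.

```python
# Sketch: convert the TNT-equivalent yields quoted in the text into joules.
# By convention, 1 ton of TNT is defined as 4.184e9 joules.
JOULES_PER_TON_TNT = 4.184e9


def tnt_tons_to_joules(tons):
    """Energy in joules for a yield given in tons of TNT."""
    return tons * JOULES_PER_TON_TNT


if __name__ == "__main__":
    yields_in_tons = {
        "Alamogordo test (19 kilotons)": 19_000,
        "George test (225 kilotons)": 225_000,
        "upper range of fission bombs (500 kilotons)": 500_000,
    }
    for label, tons in yields_in_tons.items():
        print(f"{label}: {tnt_tons_to_joules(tons):.3e} J")
```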

XX-28 George, a 225-kiloton thermonuclear bomb, exploding on May 8, 1951 Illu(12.3)

In 1952 “a blinding flash of light” from an explosion that the United States set off on a small island in the South Pacific signaled the detonation of the first hydrogen bomb, and for a split second an energy that had existed only at the center of the Sun was unleashed by man on Earth; the explosion made a crater in the ocean floor a mile wide. In the intense debate that followed, some scientists, such as the ferociously anti-Communist Edward Teller, who had originally posited such a bomb, argued that nuclear power was a good thing and that research should be continued; others, Oppenheimer, Einstein, and Bethe among them, felt that their dreams for what physics might accomplish had turned into darkness and blood. What went almost unnoticed was that splitting the atom heralded yet one more demotion for the Sun: its otherworldly energy was no longer unique. As Bohr exclaims to Werner Heisenberg in Michael Frayn’s play Copenhagen, “You see what we did? We put man back at the center of the universe.”

* In the first quarter of the nineteenth century a German astronomer formulated Olbers’ Paradox: were there to be an infinite number of stars in the universe there would be no night, the sky would be one huge glare, and we would be drowned in—indeed, could not have come into existence under—such overwhelming stellar energy.4 A good explanation of why this is not so is worked out by fifteen-year-old Christopher Boone, the autistic narrator of Mark Haddon’s 2003 novel, The Curious Incident of the Dog in the Night-time: “I thought about how, for a long time, scientists were puzzled by the fact that the sky is dark at night, even though there are billions of stars in the universe and there must be stars in every direction you look, so that the sky should be full of starlight because there is very little in the way to stop the light reaching Earth. Then they worked out that the universe was expanding, that the stars were all rushing away from one another after the Big Bang, and the further the stars were away from us the faster they were moving, some of them nearly as fast as the speed of light, which was why their light never reached us.”5 One should further add that no star shines for more than around 11 billion years.

† Certain objects have the letter M before their designated numbers in honor of Charles Messier, the French astronomer who in 1774 published a catalogue of 45 deep-sky sightings such as nebulae and star clusters. The final version of the catalogue was published in 1781, by which time the list had grown to 103.

* As recently as 1999, a Gallup poll reported that 47 percent of Americans believed God created the human race within the last ten thousand years. Stephen Hawking notes in A Brief History of Time that a date of ten thousand years fits curiously well with the end of the last Ice Age, “which is when archaeologists tell us that civilization really began.”9

† Buffon simply ignored the biblical account of Creation, possibly encouraged by the appearance, in 1770, of the first printed blunt denial of any divine purpose, Système de la nature, by the forty-seven-year-old French philosopher Paul-Henri Dietrich d’Holbach.

* Most of the planet’s internal heat is generated by four long-lived radioisotopes—potassium-40, thorium-232, and uranium-235 and -238—that release energy over billions of years as they decay into stable isotopes of other elements. The Earth’s core is a hot, dense, spinning solid sphere, composed primarily of iron, with some nickel. Its diameter is about 1,500 miles, some 19 percent of the Earth’s (7,750 miles). Surrounding it is a liquid outer layer around 1,370 miles thick, 85 percent iron, whose heat-induced roiling creates the Earth’s magnetic field. The two cores together comprise one-eighth of the Earth’s volume, but a third of its mass. Over a period of 700 to 1,200 years, the inner core will make one full spin more than the rest of the planet, intensifying its magnetic field.

* Robert Jungk, Brighter Than a Thousand Suns (Harmondsworth: Penguin, 1960), p. 19. During the last year of the First World War, Rutherford failed to attend a meeting of experts who were to advise the high command on new systems of defense against enemy submarines. When censured, he retorted: “I have been engaged in experiments which suggest that the atom can be artificially disintegrated. If it is true, it is of far greater importance than a war.” Ibid., p. 15.

* The general spirit of the Copenhagen hothouse can be gleaned from a trip several of the scientists made to the cinema in 1928. After seeing a Western, Bohr contended that he knew why the hero always won the gunfights provoked by the villain. Reaching a decision by free will always takes longer than reacting instinctively, so the villain who sought to kill in cold blood acted more slowly than the hero, who reacted spontaneously. To test this “in a scientific manner,” Bohr and his band of fellow researchers sought out the nearest toy shop and bought two guns, with which a showdown was duly enacted, Bohr taking on the role of hero and the six-foot-four Gamow that of villain. Bohr’s theory was adjudged correct.22

* With the war under way, one of the routine duties of R. V. Jones, the scientific adviser to MI6, was to read through a monthly summary of German scientific publications. Early in 1942 he perused the latest report, and the next minute was running like a lamplighter down the corridor. “The German nuclear physicists have stopped publishing!” The War Cabinet met within the hour.27

* See Sam Knight, “How We Made the Bomb,” London Times, July 8, 2004, T2, p. 14. The early labs were hardly sophisticated places: stray radiation induced radioactivity in everything from gold teeth to the zippers on trouser flies. A large proportion of those who worked on the Manhattan Project died at suspiciously early ages.

† In May 1946 The New Yorker sent the Japanese-speaking journalist John Hersey to write an extended account of what had befallen. His thirty-thousand-word report took up the whole of the August 31 issue (no cartoons, verse, or shopping notes), which sold out within hours. One haunted subscriber had preordered a thousand copies: Albert Einstein.31