In September 1958 Paul-Henri Rebut, a young graduate from France’s prestigious École Polytechnique near Paris, arrived for his first day of work as a researcher at the Commissariat à l’Énergie Atomique (CEA). He had been hired to join the CEA’s new fusion department but as he walked in he found the labs and offices largely deserted. His new colleagues were all in Geneva for the second Conference on the Peaceful Uses of Atomic Energy, the meeting that revealed to the world the secret fusion programmes of Britain, the United States and the Soviet Union. Before that meeting there had been some plasma physics research going on in Europe but, beyond Britain’s Harwell laboratory, little that was aimed at fusion energy. The Geneva meeting changed all that. Fusion programmes started up in several countries and, in the same month that Rebut started his new job, European collaboration in fusion began.

A few years earlier, European governments had been debating how to build on the success of the European Coal and Steel Community, the precursor to the European Economic Community (EEC) which later became the European Union. At the time the community consisted of only six members: France, Germany, Italy, the Netherlands, Belgium and Luxembourg. Some of these wanted the community to cover sources of energy other than coal, including the new atomic energy which, because of its high development costs, was a prime candidate for international collaboration. Others wanted to create a single market for goods across the member states. Trying to accommodate these divergent goals in one body was thought too difficult so a compromise was thrashed out. On 1st January, 1958 the coal and steel community was joined by two others, the EEC and Euratom, a body to coordinate the pursuit of atomic energy.

Fusion didn’t fit naturally into Euratom’s remit because the prospects of fusion power were some way off, so Euratom managers asked CERN, the recently-formed European particle physics laboratory in Geneva, if it would take responsibility for fusion. CERN set up a study group to investigate what fusion research was going on in Europe and what role CERN could play. But the CERN council eventually decided that as the ultimate aim of fusion is to generate energy on a commercial basis, such research was outside the limits of its statutes, which restricted it to pursuing basic science. So the ball was back in Euratom’s court.

On 1st September in the halls of the Palais des Nations at the start of the Geneva conference the vice president of Euratom, Italian physicist and politician Enrico Medi, sought out his compatriot and fellow physicist Donato Palumbo and asked him to head up Euratom’s fusion programme. Born in Sicily, Palumbo was a talented researcher who had graduated from the respected Scuola Normale Superiore in Pisa and then returned to Sicily to teach at the University of Palermo where he was later made a professor. He specialised in theoretical plasma physics so he was scientifically well qualified for the fusion programme but he was a quiet and unassuming man and so not an obvious choice for the cut and thrust of European politics. Palumbo at first refused, saying he wasn’t ready for such a job, but Medi persisted and Palumbo finally agreed.

The fission department of Euratom had a head start on fusion and by far the larger budget. Palumbo was given just $11 million for his first five-year research programme. The fission effort had set about creating a series of Joint Research Centres in various countries where Euratom work would be carried out. With his limited resources Palumbo knew that he couldn’t create anything that would rival the national fusion labs, which were then growing rapidly. He also disliked bureaucracy and hierarchies, and so decided to take a different tack. He set out to persuade each national fusion lab to sign a so-called association contract to carry out fusion research agreed collectively within Euratom. It wasn’t hard to persuade them because Euratom was offering to pay 25% of the labs’ running costs. The first to sign up was France’s CEA in 1959 and over the next few years all six Euratom members joined the fusion effort.

Palumbo may not have been a bureaucrat but he was a natural diplomat. Committee meetings at each lab always dealt with science issues first, with a bit of business discussed at the end. The central coordinating committee was delicately named the Groupe de Liaison, because France’s proud CEA and Germany’s Max Planck Society would not have liked anything that sounded too controlling. This low-key approach made him popular in the growing fusion community and reassured the national labs that Euratom was not planning to take over fusion research.

During the first decade of his tenure at Euratom, Palumbo’s fusion programme was mostly focused on understanding the plasma physics of confinement and heating. The labs built a variety of mirror machines, pinches and other toroidal devices. They experimented with heating using neutral particle beams and radio waves. The young Rebut found that the field was so new that there was no one at the CEA who could teach him about plasma theory so he found what literature he could and taught himself, eventually making important contributions to the understanding of plasma stability. But trained as an engineer as well as a physicist, he soon got involved in designing, building and operating small fusion devices. He built a so-called hard-core pinch, a linear device that relied on a copper conductor along its central axis, rather than a plasma current, to carry the current that created the pinch. He moved on to toroidal pinches, again with a copper conductor along the axis, and became convinced that only toroidal devices would work because mirrors lost too many particles at their ends. This made him somewhat marginal in the French fusion effort since the CEA’s largest device at the time was a mirror machine at its laboratory in Fontenay aux Roses, a Paris suburb.

As a whole, European researchers were suffering the same frustrations as their colleagues in the United States: their machines were plagued by Bohm diffusion, confinement was poor, and funding was slowly dwindling. It was a crisis in Euratom’s fission section, however, that put the future of the programme in doubt. From the outset the organisation’s aim had been to collaboratively develop a prototype fission reactor that member states could then develop commercially. The favoured design was a heavy water reactor with organic liquid coolant, known as ORGEL. But the commercial appeal of separately developing their own reactors was too much for the member states and in 1968 the project collapsed, prompting savage budget cuts in all parts of Euratom. Palumbo was left with just enough money to pay the staff that Euratom employed in each of the associated labs. For a couple of years the programme stumbled on with a small amount of money from the Dutch government.

Just as the programme was at its lowest ebb, Russia announced its astonishing results with tokamaks at the 1968 IAEA meeting in Novosibirsk. Palumbo was in the middle of drafting a proposal for the next five-year programme but realised that the tokamak results changed everything. He started from scratch and arrived at the June 1969 meeting of the Groupe de Liaison with a new proposal. He suggested that the programme should concentrate its efforts on tokamaks and some other toroidal devices, that an extra 20% funding should be provided by Euratom for the building of any new device, and that a group should be set up to investigate building a large device collaboratively by all the associated labs. There was a heated debate over putting so much emphasis on tokamaks, but they reached an agreement and sent Palumbo’s proposal to the Euratom council for approval. Euratom, still smarting from the collapse of ORGEL, enthusiastically endorsed the fusion plan and even gave Palumbo slightly more funding than he had asked for. The laboratories set about drawing up plans for new machines.

The CEA, with its emphasis on mirror machines, was thrown into confusion when fusion fashion switched to tokamaks. Rebut, who had focused on toroidal devices, became the man of the moment. At the time of the Novosibirsk meeting he had been in the middle of designing a large pinch device, but he immediately abandoned it and began working on a tokamak design. When the Groupe de Liaison met again in October 1971, five new machines were approved with the new additional funding. At the same time, a small group was set up to investigate building a large multinational tokamak. This would-be machine was given the name the Joint European Torus (JET) – not ‘tokamak’ because German delegates thought it sounded too Russian.

Of the machines that were given the go-ahead at that meeting, the most ambitious was Rebut’s Tokamak de Fontenay aux Roses (TFR). The design was roughly the same size as Russia’s T-3 and Princeton’s Symmetric Tokamak but the plasma tube, with a radius of 20cm, was larger so that it contained more plasma and had a lower aspect ratio. What made the TFR stand out was the huge amount of electrical power that was put into containment. Rebut designed a large flywheel that was accelerated up to a high speed over a long period and then acted as an energy store for each plasma shot. Connecting the flywheel to a generator created a huge electrical pulse which, via the tokamak’s electromagnet, drove the plasma current that pinched the plasma in place. The TFR was able to generate plasma currents of 400,000 amps for up to half a second, world records at the time.

While the TFR was still being built, the JET study group had to decide what the next generation of tokamaks would be like. They didn’t have many practical details to work with because few tokamaks had yet been built outside Russia. The Symmetric Tokamak had started operating in 1970 and Ormak in 1971; in Europe there was only Britain’s CLEO, converted from a part-finished stellarator. And they couldn’t rely on theory either; there simply was no conceptual understanding of how tokamaks worked. What they did know was that tokamaks got great results and that if you made them bigger their operating conditions would probably get better. The JET study group knew that they wanted a machine that got close to reactor conditions and produced a significant amount of fusion power – and that meant a plasma that would heat itself.

Up until this point, fusion devices were not built to be genuine reactors because they generated so few fusion reactions. Their main aim was to practise confining and heating plasma. Now that devices were getting closer to producing lots of fusion reactions, reactor designers had to take into account the copious energy and particles the reactions would produce. Fusing a deuterium nucleus and a tritium nucleus produces two things. First is a high-energy neutron which has 80% of the energy released by the reaction so it will be moving very fast. Because neutrons are electrically neutral they are not affected by the tokamak’s magnetic field and so zap straight out and bury themselves in the tokamak wall or something else nearby, converting their kinetic energy into heat. In a power reactor the idea is to get those neutrons to heat water, raise steam, and use that steam to drive a turbine, turn a generator and create electricity.

The other thing produced in the reaction, carrying the remaining 20% of the energy, is a helium nucleus, otherwise known as an alpha particle. The alpha particle is charged so it will follow a helical path around magnetic field lines in the tokamak just as the ions and electrons of the plasma do. Capturing the alpha particles in the field serves a useful purpose: as they twirl around in the plasma they knock into other particles, transfer energy and generally heat things up. The designers of fusion reactors very much wanted to exploit this effect. If you can get alpha particles to heat up your plasma, that will help to keep the fusion reaction going and may even make other heating methods, such as neutral particle beams and radio-frequency waves, unnecessary.
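The 80/20 split follows from conservation of momentum, and a short worked calculation shows why. This is a sketch under two stated assumptions that are not in the text above: the reactants’ own motion is neglected, and the total energy released per deuterium-tritium reaction is taken as the standard figure of about 17.6 MeV. The neutron and the alpha particle fly apart with equal and opposite momentum $p$, so the lighter neutron takes the larger share of the kinetic energy:

```latex
% Momentum balance in the centre-of-mass frame (reactant motion neglected)
m_n v_n = m_\alpha v_\alpha
\quad\Longrightarrow\quad
\frac{E_n}{E_\alpha} = \frac{p^2 / 2m_n}{p^2 / 2m_\alpha} = \frac{m_\alpha}{m_n} \approx 4

% Sharing the 17.6 MeV released per reaction
E_n \approx \tfrac{4}{5} \times 17.6\ \text{MeV} \approx 14.1\ \text{MeV},
\qquad
E_\alpha \approx \tfrac{1}{5} \times 17.6\ \text{MeV} \approx 3.5\ \text{MeV}
```

Because the alpha particle is roughly four times as massive as the neutron, it ends up with about one fifth of the energy, which is the share available for heating the plasma.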

The difficulty is that the newly created alpha particles are heavier than hydrogen and moving fast, so their spirals will be much wider. If the plasma vessel is too small, the spiralling alphas won’t get very far before hitting the wall. A stronger magnetic field will make the alpha particle spirals smaller, and the field is created in part by the plasma current. So reactor designers could derive a formula for successful alpha particle heating: for a given size of plasma vessel, there is a minimum plasma current that will create a strong enough field to keep the alpha particles within that vessel.
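To get a feel for the sizes involved, the spiral (gyroradius) of a freshly born alpha particle can be estimated from the textbook relation r = mv/(qB). The Python sketch below is illustrative only: the function names are invented for this example, the 3.5 MeV birth energy is the standard figure for a D-T alpha particle, and the field strengths are round numbers rather than data from any real machine. It also treats the field as a single uniform value, whereas the orbit of an alpha particle in a real tokamak depends on the combination of fields, including the part generated by the plasma current.

```python
import math

# Physical constants (SI units)
ELEMENTARY_CHARGE = 1.602176634e-19   # coulombs
ALPHA_MASS = 6.6446573e-27            # kilograms
MEV_TO_JOULE = 1.602176634e-13        # joules per MeV

# Assumed birth energy of a D-T alpha particle (about 20% of 17.6 MeV)
ALPHA_ENERGY_MEV = 3.5


def alpha_speed(energy_mev: float = ALPHA_ENERGY_MEV) -> float:
    """Non-relativistic speed (m/s) of an alpha particle with this kinetic energy."""
    return math.sqrt(2.0 * energy_mev * MEV_TO_JOULE / ALPHA_MASS)


def gyroradius(field_tesla: float, energy_mev: float = ALPHA_ENERGY_MEV) -> float:
    """Largest possible gyroradius (m): all motion taken perpendicular to the field."""
    charge = 2.0 * ELEMENTARY_CHARGE  # an alpha particle carries two positive charges
    return ALPHA_MASS * alpha_speed(energy_mev) / (charge * field_tesla)


if __name__ == "__main__":
    # Illustrative field strengths only, not measurements from any tokamak
    for field in (0.5, 1.0, 2.0, 4.0):
        radius_cm = 100.0 * gyroradius(field)
        print(f"B = {field:3.1f} T -> gyroradius of about {radius_cm:5.1f} cm")
```

Doubling the field halves the radius of the spiral, which is the sense in which a larger plasma current, by strengthening the field, keeps the alpha particles inside a vessel of a given size.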

The JET study group reported back to the Groupe de Liaison in May 1973 and recommended that Euratom should build a tokamak around 6m across which, to contain alpha particles, would need to carry a current of at least 3 million amps (MA). This was a huge leap from the tokamaks of the day. France’s TFR was just 2m across and carried 400,000 amps and it was only just being finished. Physicists had no real idea how plasma would behave with 3MA of current flowing through it. But the excitement about tokamaks was so great in the early 1970s that Palumbo acted on the study group’s suggestions straight away and started to assemble a team to work out a detailed design for such a reactor. The obvious man to lead that effort, the man who had just finished building the world’s most powerful tokamak, was Paul-Henri Rebut.

In the United Kingdom, Sebastian Pease’s Culham laboratory was struggling. Researchers there had kept on working with ZETA during the 1960s and had done some good science. But attempts to build large follow-on machines – ZETA 2 and another one called ICSE – were thwarted by the government. Researchers had to content themselves with smaller devices. As the decade wore on, however, funding to the lab was repeatedly cut. Pease realised that if his lab was ever going to get involved in operating a large device again, it would have to be through collaboration with Britain’s European neighbours. But there was a problem: at that time Britain was not a member of the EEC or Euratom. Nevertheless, Pease talked to Palumbo and he allowed Pease and his colleagues to attend meetings of the Groupe de Liaison and join in discussions about JET.

By 1973, when Palumbo was putting together a team to design JET, Britain had joined the EEC and Euratom, and hence Pease was able to offer Culham as a base for the JET design team. So it was that in September 1973, only a few months after the study group proposed that JET should be built, Rebut set sail for England to begin the work. And he did literally set sail. Reluctant to leave behind a yacht that he had designed and built himself but which was not quite finished, Rebut sailed across the English Channel to take up his new job.

Initially housed in wooden huts left over from Culham’s time as a World War II airbase, the JET team had a daunting task ahead of them, and just two years to do it in. With the understanding of tokamaks still so sketchy there were two routes they could take: the safe, conservative route of simply scaling up in size from existing, successful machines such as TFR; or the more risky path of trying out some untested ideas. With the flamboyant Rebut in charge it was always going to be the latter. But the designers were constrained by a number of factors: disruptions and other instabilities, for example, limited the plasma’s density and the current that could flow through it. There were practical considerations, such as the strength of magnets. And there was cost.

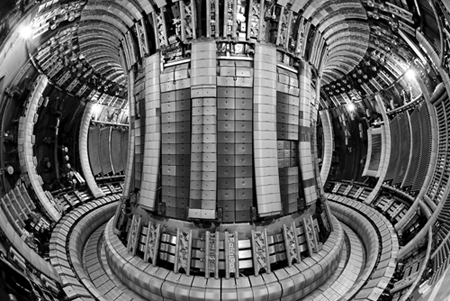

Bearing all of these issues in mind, the team came up with a design for a tokamak that was 8.5m across, with a plasma vessel whose interior was twice the height of a person. The volume of the plasma vessel was 100 cubic metres (m³), a vast space compared to TFR’s 1m³. The current flowing through JET’s plasma (3.8MA) would be nearly ten times the 400,000 amps carried by TFR.

Earlier tokamaks – designed principally to study plasma – did not use the most reactive form of fusion fuel, deuterium-tritium. JET would be different but this added a hornet’s nest of problems to the project. Tritium is a radioactive gas which, because it is chemically identical to hydrogen, is easily assimilated into the body. So extreme care has to be taken to avoid leaks and every gram of it has to be accounted for. Carrying out deuterium-tritium, or D-T, reactions also produces a lot of high-energy neutrons. When these collide with atoms in the reactor structure they can knock protons or neutrons out of the nuclei, potentially turning them radioactive. So over time, the interior of the tokamak acquires a level of radioactivity from the neutron bombardment. The levels are nothing like in a fission reactor, but they are enough to make it dangerous for engineers to go inside to carry out repairs or make alterations. JET had to be designed so that any work inside the vessel could be done from outside with a remote manipulator arm, even though such machines were only in their infancy at that time.

Everything about the design was big and ambitious, but one innovation stood out. Rebut decided to make the shape of the plasma vessel D-shaped in cross-section rather than circular. Part of the motivation for this was cost. The magnetic fields required for containment exert forces on the toroidal field coils, the vertical ones that wrap around the plasma vessel. These magnetic forces push the coils in towards the central column of the tokamak and are stronger closer to the centre. JET would require major structural reinforcement to support the toroidal field coils against these forces which would be very expensive. So, Rebut mused, why not let the coils be moulded by the magnetic forces? If left to find their own equilibrium, the coils would become squashed against the central column into a D-shape and stresses on them would be greatly reduced. So Rebut designed a tokamak with D-shaped toroidal field coils and a D-shaped plasma vessel inside them. In cross-section the vessel was 60% taller than it was wide. Overall, the tokamak now looked less like a doughnut and more like a cored apple.
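The reason the forces are stronger near the central column can be seen from a simple idealisation. As a sketch, assume a perfect torus wound with $N$ identical coils, each carrying a current $I_c$ (both symbols are introduced here for illustration and do not come from the JET design). Ampère’s law then gives a toroidal field that falls off inversely with the distance $R$ from the central axis, and the force on each stretch of coil falls off in the same way:

```latex
% Field inside an idealised torus wound with N coils, each carrying current I_c
B_\phi(R) = \frac{\mu_0 N I_c}{2\pi R}

% Approximate force per unit length on a coil segment at major radius R
% (the conductor sees roughly half the interior field)
f(R) \approx \tfrac{1}{2} I_c B_\phi(R) = \frac{\mu_0 N I_c^2}{4\pi R} \propto \frac{1}{R}
```

The inner leg of each coil, at small $R$, is therefore loaded more heavily than the outer leg, so the net force on the coil points towards the central column. A coil free to take up its own shape under this uneven loading settles into something close to a D.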

But more importantly Rebut thought a D-shape would get him better performance. In 1972 the Russian fusion chief Lev Artsimovich and his colleague Vitalii Shafranov calculated that plasma current flows best when close to the inside wall of a plasma vessel – close to the central column. So if the plasma vessel was not circular in cross-section but was squashed against the central column, more plasma current would gain from the most favourable conditions. The Russians were in the process of testing the idea but at the time there was little proof that it would work. Rebut was convinced that the key to confinement was a high plasma current, and a D-shape, he believed, could allow him to go far beyond the 3MA in JET’s specification. He guessed that the Groupe de Liaison would not be persuaded by the Russians’ theoretical prediction alone, so adding the issues of cost and engineering would help his case. He was right to be worried. When the JET team presented its design in September 1975, there was vigorous debate about the size of the proposed reactor, the D-shaped plasma vessel, the cost and more besides. Palumbo admitted that he would have preferred the tried-and-tested circular vessel but he trusted Rebut and his team and argued for their design.

Such was the persuasiveness of Rebut that the various Euratom committees eventually agreed to go ahead with JET pretty much as described in the design report. The Council of Ministers, the key panel of government representatives that oversaw the EEC and Euratom, also approved the plan. Constructing JET was predicted to cost 135 million European currency units (a forerunner of the euro) of which 80% was to come from Euratom coffers and the rest from member governments. All that remained was for the council to decide where it was to be built. Although there were technical requirements for the site, this was predominantly a political decision. The fifty-six-strong JET design team waited at Culham to hear the decision. Most of them would move straight to new jobs helping to build JET, so there was little point returning to their home countries.

Hosting such a high-profile international project was viewed as a prize by European nations and soon there were six sites vying for the honour: Culham; a lab of France’s CEA in Cadarache; Germany’s fusion lab at Garching and a second German site; one in Belgium; and the Euratom-backed fission lab at Ispra in Italy. In December 1975 the Council of Ministers debated the site issue for six hours and came away without a decision. So the politicians asked the European Commission, the EEC’s executive body, to make a recommendation. The commission opted for Ispra, but when the council met again in March, Britain, France and Germany vetoed this suggestion.

The council met again several times in 1976 but still without any resolution of the issue. Rebut and the team at Culham were getting desperate. Some were accepting jobs elsewhere while others were simply returning to their old pre-JET jobs at home. The European Parliament demanded that the matter should be settled. Politicians began to talk of the project being on its deathbed. The council discussed whether they should abandon their normal rule of making unanimous decisions and decide it by a simple majority, but they failed to decide on that too. All they did manage to do by this time was whittle the list down to two candidate sites: Culham and Garching. The frustrated scientists at Culham sent petitions to the council; their families sent petitions to the council. But by the summer of 1977 the game was up and Rebut and his Culham host Pease decided it was time to wind up the JET team.

On 13th October, while the team was still disbanding, fate intervened. A Lufthansa airliner en route from Mallorca to Frankfurt was hijacked by terrorists from the Popular Front for the Liberation of Palestine. They demanded $15 million and the release of eleven members of an allied terrorist group, the Red Army Faction, who were in prison in Germany. Over the following days, the hijackers forced the plane to move from airport to airport across the Mediterranean and Middle East before finally stopping on 17th October in Mogadishu, Somalia, where they dumped the body of the pilot – whom they had shot – out of the plane. They set a deadline that night for their demands to be met. German negotiators assured them that the Red Army Faction prisoners were being flown over from Germany but at 2 a.m. local time a team of German special forces, the GSG 9, stormed the plane. In the fight that followed, three of the four terrorists were killed and one was wounded. All the passengers were rescued with only a few minor injuries.

So what was the connection with JET? Germany created the GSG 9 in the wake of the bungled police rescue of Israeli athletes after their kidnap by Palestinian terrorists at the Munich Olympics in 1972. For training, the GSG 9 went to the world’s two best known anti-terrorist groups: Israel’s Sayeret Matkal and Britain’s Special Air Service (SAS). Mogadishu was the GSG 9’s first operation and two SAS members travelled with the group as advisers, supplying them with stun grenades to disorient the hijackers.

A home at last: the JET design team, with Paul-Henri Rebut at front centre, celebrates the decision to build JET at Culham, a week after the end of the Mogadishu hijack.

(Courtesy of EFDA JET)

The whole hijack drama had been followed with mounting horror, especially in Germany. When the news of the rescue broke on 18th October the country was awash with relief and euphoria, especially when a plane arrived back in Germany carrying the rescued passengers along with the GSG 9, who were welcomed as heroes. Into this heady atmosphere stepped the British Prime Minister, James Callaghan, who arrived in Bonn on the same day for a scheduled summit meeting and was greeted by German chancellor Helmut Schmidt with the words: ‘Thank you so much for all you have done.’ There were a number of EEC matters that divided Britain and Germany at the time and in the happy atmosphere of that summit meeting many of them were put to rest, including the question of JET’s location. A meeting of the Council of Ministers was hastily called a week later. The result was phoned through to Culham around noon and the champagne was, finally, uncorked. At last JET was ready to be built and Rebut and what remained of his team didn’t have to move anywhere.

Long before climate change became widely recognised as a threat to our future, an environmental movement grew up in the United States which drew attention to the pollution of the atmosphere by the burning of fossil fuels. This public pressure led to the 1970 Clean Air Act and the creation of the Environmental Protection Agency. At the same time, America’s electricity utilities were having trouble keeping up with the soaring demand for power, leading to often serious blackouts and brownouts. The Nixon administration responded by making the search for alternative energy sources, with less environmental impact, a national priority. The Atomic Energy Commission was pinning its hopes on the fission breeder reactor which it had been developing for some time. The AEC argued that the breeder produced less waste heat than the light-water fission reactors that power utilities were building at the time, and burned fuel more efficiently. But the increasingly vocal environmentalists didn’t buy it. As far as they could see, breeder reactors had the same safety concerns as light-water machines plus their plutonium fuel was toxic, highly radioactive and a proliferation risk.

With the government looking for alternative energy sources and the public suspicious of nuclear power, fusion scientists suddenly found themselves in demand. Fusion was seen as a ‘clean’ version of nuclear energy and the idea of generating electricity from sea water seemed almost magical. Researchers from Princeton and elsewhere were now being interviewed by newspapers, courted by members of Congress, and the new tokamak results meant that they really had something to talk about. As if on cue, Robert Hirsch, someone very well suited to make the most of this new celebrity, was put in charge of fusion at the AEC. Hirsch was in his late 30s; he wasn’t a plasma physicist, but he was a passionate advocate of fusion and he knew how to play the Washington game: he was at home in the world of Senate committees, industry lobbyists and White House staffers. In 1968 he had been working with television inventor Philo T. Farnsworth on a fusion device using electrostatic confinement and applied to the AEC for funding. Instead, Amasa Bishop hired him. He worked under Bishop and his successor Roy Gould but was always frustrated by the relaxed, collegial approach of the fusion programme. In Hirsch’s mind, fusion should be the subject of a crash development programme like the one that sent Apollo to the moon.

In 1971, Hirsch got his chance. Leadership of the AEC changed from nuclear physicist Glenn Seaborg to economist James Schlesinger, then assistant director at the Office of Management and Budget. Schlesinger wanted to counter criticism at the time that the AEC was simply a cheerleader for the nuclear industry; he wanted to diversify into other types of energy. One of his first changes was to promote the fusion section – which at that time was part of the research division – into a division in its own right. Gould, an academic from the California Institute of Technology, stuck to it for around six months and then stood down. Hirsch, like Schlesinger, was interested in planning and effective management. He was a perfect fit and took over as head of the fusion division in August 1972.

At that time, the fusion division was a tiny operation: just five technical staff and five secretaries. Its role and operations had not changed much since the 1950s. The direction of research and its timetable were pretty much decided by the heads of the labs. The fusion chief at the AEC acted as referee between the competing labs and was their champion in government. Hirsch had very different ideas. First he wanted more expertise in the divisional headquarters so that decisions about strategy could be made there. Within a year he had more than tripled the technical staff and by mid-1975 the division boasted fifty fusion experts and twenty-five support staff. He created three assistant director posts in charge of confinement systems, research, and development and technology. Now the lab heads had to report to the various assistant directors, not to Hirsch himself.

Hirsch also wanted the programme to be leaner and more focused, and that meant closing down some fusion devices which were not helping to advance towards an energy-producing reactor. Before the end of 1972 he closed down two projects at the Livermore lab: an exotic mirror machine called Astron and a toroidal pinch with a metal ring at the centre of the plasma held up by magnetic forces, hence its name, the Levitron. The following April he closed another mirror machine, IMP, at Oak Ridge. These terminations sent shock waves through the fusion laboratories. It was the first time that Washington managers, rather than laboratory directors, decided the fate of projects.

Hirsch knew that if fusion was going to be taken seriously by politicians it needed a timetable: an identifiable series of milestones towards a power-producing reactor. He set up a panel of lab directors plus other physicists and engineers to sketch out such a plan. The first milestone they defined was scientific feasibility, showing that fusion reactions could produce as much energy as was pumped into the plasma to heat it – a state known as break-even. The second would be a demonstration reactor, one that could produce significant amounts of excess energy for extended periods. After that would come commercial prototypes, probably developed in collaboration with industry. The panel suggested that the first goal could be achieved sometime around 1980-82, while a demonstration reactor might be built around 2000. There was some disquiet about this timetable at the fusion labs. They weren’t sure eight or ten years was enough to get to scientific feasibility, but at Hirsch’s urging this plan became official division policy.

Meanwhile something happened that would give Hirsch’s plan new urgency. On 6th October, 1973, Egypt and Syria launched a surprise attack against Israel. Starting on the Jewish holy day of Yom Kippur, the attackers made rapid advances into the Golan Heights and the Sinai Peninsula, although after a week Israeli forces started to push the Arab armies back. The conflict didn’t remain a Middle Eastern affair for long. On 9th October the Soviet Union started to supply both Egypt and Syria by air and sea. A few days later, the United States, in part because of the Soviet move and also fearing Israel might resort to nuclear weapons, began an airlift of supplies to Israel. What became known as the Yom Kippur War lasted little more than two weeks, but its effects reverberated around the world for much longer.

Arab members of OPEC, the Organisation of Petroleum Exporting Countries, were furious that the US aided Israel during the conflict. On 17th October, with the war still raging, they announced an oil embargo against countries they considered to be supporting Israel. The effect was dramatic: the price of oil quadrupled by the beginning of 1974, forcing the United States to fix prices and bring in fuel rationing. The prospect of fuel shortages and rising prices at the pump was a shock to the American psyche. All of a sudden the huge gas-guzzling cars of the 1960s seemed recklessly wasteful and US and Japanese carmakers rushed to get more fuel-efficient models onto the market. In response, President Richard Nixon launched Project Independence, a national commitment to energy conservation and the development of alternative sources of energy. That meant more money for fusion, lots more. In 1973 the federal budget for magnetic confinement fusion was $39.7 million; the following year it was boosted to $57.4 million, and that was more than doubled to $118.2 million in 1975. And the increases continued: by the end of the decade magnetic fusion was receiving more than $350 million annually.

Reading the political runes in 1973, Hirsch was keen to get his plan moving but wanted to make one significant change – he wanted the scientific feasibility experiment to use deuterium-tritium fuel. The fusion labs had previously assumed that they would use simple deuterium plasmas so that they wouldn’t have to deal with radioactive tritium, radioactive plasma vessels or the added complications of alpha-particle heating. They wanted a nice clean experiment in which they could get a deuterium plasma into a state in which, if it had been D-T, they would get the required energy output – a situation known as ‘equivalent break-even.’ For Hirsch, that wasn’t enough. He suspected that many of the scientists at the fusion labs were just too comfortable working on plasma physics experiments, but he wanted them to get down to the nitty-gritty of solving the engineering issues that a real fusion reactor would face. And he knew that a real burning D-T plasma would be PR gold. The White House, Congress and the public would never understand the significance of equivalent break-even but if a reactor could generate real power – light a lightbulb – using an artificial sun, that would get onto the evening news and every front page.

Not all fusion scientists were against moving quickly to a D-T reactor. Oak Ridge was not afraid of radioactivity. The lab had been set up during the wartime Manhattan Project and had pioneered the separation of fissile isotopes of uranium and plutonium to use in atomic weapons. Since then it had branched out into many fields of technology, some of which involved handling radioactive materials. Oak Ridge’s tokamak, Ormak, was performing well and researchers there saw a D-T reactor as a natural next step. In fact, they offered Hirsch more than he had asked for: they proposed a machine that would reach not just break-even but ‘ignition,’ a state where the heat from alpha particles produced in the reactions is so vigorous that it is enough to keep the reactor running without the help of external heat sources – a self-sustaining plasma. To reach ignition would require very powerful magnets made from superconductors, another area in which Oak Ridge already had expertise.

Seeing all the government money that was being thrown at new energy sources in the winter of 1973-74, Hirsch wanted to speed up his fusion development plan. Instead of building a deuterium-only feasibility experiment by 1980 followed by a D-T reactor by 1987, he proposed that they should move straight to a D-T reactor, starting construction in 1976 and finishing in 1979. Such a timetable would require a much steeper increase in funding as a D-T machine, at $100 million, would be twice the cost of a feasibility experiment.

None of the fusion labs liked this accelerated plan. Princeton didn’t want to get involved in D-T burning yet and the new plan would eliminate the deuterium-only feasibility experiment they had hoped to build next. Oak Ridge, although enthusiastic about D-T, thought the timetable was too short. And Los Alamos and Livermore, which were planning new pinches and mirror machines, feared that a big D-T tokamak would consume all the fusion budget and squeeze them out entirely.

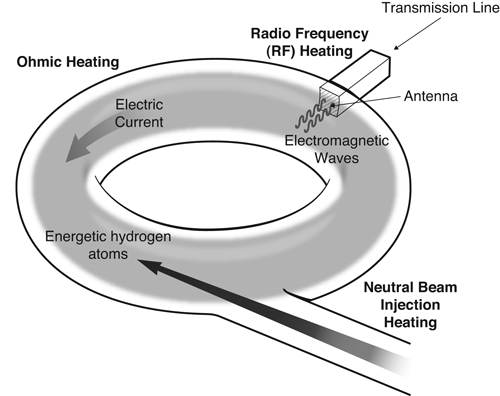

An issue that would play a key role in the move to larger tokamaks was plasma heating – how to get the temperature in the reactor up to the level necessary for fusion. Early tokamaks simply relied on ohmic heating, where the resistance of the plasma to the flow of current heats it up. Using ohmic heating alone, these machines were able to get to temperatures of tens of millions of °C, but fusion would need ten times that much. Theory predicted that as the temperature in the plasma got higher, ohmic heating would get less efficient, so another way of heating the plasma was needed. US researchers were pinning their hopes on neutral particle beams. These were being developed at Oak Ridge and the Berkeley National Laboratory as a way of injecting fuel into mirror machines, but tokamak researchers realised that they might work as plasma heating systems.

Neutral beam systems start out with a bunch of hydrogen, deuterium or tritium ions and use electric fields to accelerate them to high speed. If those ions were fired straight into a tokamak they would be deflected because its magnetic field exerts a strong force on moving charged particles. So the ion beam must first be fed through a thin gas where the ions can grab some electrons, neutralise, and move on through the magnetic field undisturbed. Once in the tokamak’s plasma, the beam gets ionised again by collisions with the plasma ions but because the beam particles are moving so fast when they do collide they send the plasma ions zinging off at high speed, thereby heating up the plasma.
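The reason a neutralised beam passes through the field untouched can be put in one line. As a sketch in standard textbook notation (the symbols are not from the original text), the force on a particle of charge $q$ moving with velocity $\mathbf{v}$ through an electric field $\mathbf{E}$ and a magnetic field $\mathbf{B}$ is

$$
\mathbf{F} = q\,(\mathbf{E} + \mathbf{v} \times \mathbf{B})
$$

For an ion $q$ is non-zero, so the tokamak's field bends its path. Once the ion has picked up an electron and become a neutral atom, $q = 0$ and the force vanishes, however fast the atom is moving.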

During 1973 a race developed to see who would be first to demonstrate neutral beam heating in a tokamak. The team running the CLEO tokamak at Culham, using a variation on Oak Ridge’s beam system, were first to inject a beam but their measurements didn’t show any temperature rise above the ohmic heating. Princeton’s ATC, using the Berkeley beam injector, came in next and managed to get a modest rise in temperature. Oak Ridge lost the race, but got the best heating results in Ormak. These first efforts had low beam power, typically 80 kilowatts, and temperature gains were small, around 15% above ohmic heating. But within a year ATC was doing better, boosting the ion temperature in its plasma from around 2 million °C to more than 3 million °C. The signs were promising that neutral beam heating would be able to take tokamaks up to reactor-level temperatures. That would really be put to the test in an upcoming machine, the Princeton Large Torus (PLT), which had begun construction in 1972. Designed to be the first tokamak to carry more than a million amps of plasma current, PLT would have a 2-megawatt beam heating system to boost the ion temperature above 50 million °C.

The sensible thing would have been to wait and see how well the PLT worked before embarking on a larger and more ambitious reactor, but Hirsch didn’t want to wait. In December 1973 he called together the laboratory heads and other leading fusion scientists to discuss plans for the D-T reactor. The Princeton researchers were highly critical of Oak Ridge’s proposal for a reactor that could reach ignition. Such a machine would require a huge leap in temperature from what was then possible and would cost, they had independently calculated, four times the allotted $100 million budget. Then the question of timetable came up. Oak Ridge’s head of fusion Herman Postma was asked if his design would be ready to begin construction in 1976, Hirsch’s preferred start date. The reactor’s ambitious design and superconducting magnets would take some time to get right, so Postma said that he didn’t know. Hirsch was furious.

After a break for lunch, the head of the Princeton lab, Harold Furth, got up and made a surprising proposal. He sketched out a machine that he and a few colleagues had first proposed nearly three years earlier. Since it was difficult to get plasma temperature up to reaction levels, they had reasoned that you could build a tokamak that was only capable of reaching a relatively modest temperature and fill it with a plasma made of just tritium. Then with a powerful neutral beam system they would fire deuterium into the plasma. While there would be no reactions in the bulk of the plasma, at the place where the deuterium beam hits the tritium plasma the energy of the collisions would be enough to cause a reasonable number of fusion reactions. Furth referred to this setup as a ‘wet wood burner’: wet wood won’t burn on its own, but it will if you fire a blowtorch at it.

Such a reactor would never work as a commercial power-producing plant because it could only achieve modest gain (energy out/energy in). Reactor designers had always assumed that beam heating systems would only be used to get the plasma up to burning temperature and then the heat from alpha particles would sustain the reaction. It was never the idea for beams to be an integral part of the reactor. But Furth suggested that this would be a quick and relatively cheap way to get to break-even.
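The gain mentioned here is conventionally written as a ratio, often labelled $Q$. As a minimal sketch, with $P_{\text{fusion}}$ and $P_{\text{heating}}$ as illustrative labels for the fusion power released and the external heating power supplied:

$$
Q = \frac{P_{\text{fusion}}}{P_{\text{heating}}}, \qquad Q = 1 \ \text{(break-even)}, \qquad Q \to \infty \ \text{as} \ P_{\text{heating}} \to 0 \ \text{(ignition)}
$$

In these terms a beam-driven machine of the kind Furth described can never switch its beams off, so $P_{\text{heating}}$ stays large and $Q$ stays modest.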

Hirsch gave the two labs six months to come up with more detailed proposals. When those plans were revealed in July 1974, Hirsch had a difficult choice. On one hand was a bold, technologically inventive machine that had the potential to get all the way to ignition in one step, although it came from a lab that was relatively new to the fusion game. The alternative was a much more conservative choice. Princeton’s wet wood burner was not dissimilar to the Princeton Large Torus that the lab was currently building. It would not go a long way towards demonstrating how a fusion power reactor would work but Princeton had nearly twenty-five years’ experience of building these things and if Hirsch simply wanted a demonstration of feasibility, this was more likely to give it to him. He was aware that European labs were working together to design a large reactor and Russia had ambitious plans too, so he could not afford to delay – as always, American prestige was at stake. So he played safe and gave Princeton the nod to begin work on the Tokamak Fusion Test Reactor (TFTR) with an estimated total cost of $228 million.

Like the JET design team across the Atlantic, the designers of TFTR didn’t have a lot of information to work with. But unlike Rebut’s daring design for JET, the Princeton team opted to keep it simple. No D-shaped plasma for them; they stuck with the tried-and-tested circular design. It was a trademark of the Princeton lab to keep their devices as simple as possible – the simpler they are the faster they can be built and the easier it is to interpret the results.

In 1975 the PLT began operation and produced some impressive results using neutral beam heating, raising the ion temperature to 60 million °C. Theorists had predicted that a beam impacting with the plasma would cause instabilities, but these failed to materialise. Altogether the Princeton researchers were happy with the results, but there was one thing that caused some concern: confinement time got worse the more beam heating was applied. Although this cast a small dark cloud over the future of TFTR, in the rush to finish its design and get construction started there was little time to consider the issue.

Ground was broken for the new machine in October 1977 and it was scheduled to start operating in the summer of 1982. Much of the work was parcelled out to commercial contractors, a significant fraction was built by other government labs, and the rest was done in-house by Princeton staff. Like almost any science project of this size, there were numerous technical headaches along the way. New buildings had to be built with thick concrete shielding to protect people from the neutron flux when D-T reactions were taking place. The power supply system, involving the usual giant flywheel, proved unreliable and had to be virtually rebuilt, which bankrupted the contractor involved. For the first time, computers were bought to help analyse results from the reactor but, being a new technology, it took some years to get them working properly. As the summer of 1982 passed and moved into autumn the diagnostics systems for monitoring the reactor were nowhere near finished. TFTR project director Don Grove was determined for TFTR to get its first plasma before the end of the year, and that meant before Christmas. In desperation Princeton staff took over the installation of diagnostics from the contractor on 12th December and worked around the clock to get it in place.

By 23rd December they had installed the very minimum set of diagnostic instruments. The cabling that connected these instruments, via a tunnel, to the nearby control room had not been installed so they set up a temporary control room in the reactor building. A thousand and one things had to be connected, checked, rechecked and tested. The Princeton researchers had never built such a large and complex machine before and everything was new and unfamiliar. The sky darkened and the team worked on into the evening. Grove decreed that whether they finished or not they would stop working at 2 a.m. The clock ticked past midnight into the early hours of Christmas Eve. They were very close, but not there yet. No one admits to knowing how it happened, but the clock on the control room wall mysteriously stopped at around 1.55 a.m. Since it was not officially 2 a.m. yet, the team kept working.

Staff celebrate first plasma in the Tokamak Fusion Test Reactor at Princeton on Christmas Eve 1982. Note the clock, stalled at 1.55 a.m.

(Courtesy of Princeton Plasma Physics Laboratory)

About an hour later they attempted their first shot. There was a flash in the machine and it was done. Grove ceremoniously handed a computer tape to Furth containing a measurement of the first plasma current and Furth handed over a crate of champagne. The giant machine was duly christened and everyone went home for Christmas. TFTR would not produce another plasma till March as the team had to finish installing all the things that had been left out in the rush to meet the deadline.

Despite the head start that the JET team originally had in designing their machine, the delay over deciding its location put them firmly in second place. The ground breaking ceremony for TFTR in October 1977 was only days after the end of the hijack drama at Mogadishu. Construction of JET didn’t begin until 1979 so the Culham team were two years behind their US rivals, but they soon made up lost ground thanks to the steely determination of Rebut. The Frenchman did not, however, get the job of JET director. Euratom passed him over and gave the job to Hans-Otto Wüster, a German nuclear physicist. Wüster had made a name for himself as deputy director general of CERN during the construction of its Super Proton Synchrotron. Although not a plasma physicist, Wüster had an easygoing style that allowed him to talk with equal ease to construction workers and theoretical physicists – very different from the blunt Rebut. But underneath the charm he was an adroit politician, something that proved very useful in keeping JET on track. Rebut, however, was knocked sideways by the decision and considered resigning. But the job of technical director still allowed him to supervise the construction of the design he fought so hard to bring to life, and Wüster gave him complete freedom in the construction.

Palumbo also did his bit to set JET off on the right track. Although 80% of JET’s funding came from Euratom – with another 10% from the UK and the remaining 10% split between the other associations – he insisted that JET be set up legally under European law as a Joint Undertaking, in other words an autonomous organisation with its own staff of physicists, theorists and engineers, and at arm’s length from interference by Euratom and the national labs. Even at Culham, where JET was sited, it remained separate from the national laboratory – it had its own buildings and its own staff. Culham researchers worried that JET would suck all the vitality out of their own lab and tended to view the JET researchers as a superior bunch who kept themselves to themselves. There was one issue that caused more than cool relations: pay. The JET undertaking instituted a system of secondment in which researchers from Euratom association labs would come to JET and work there for a while. During these sojourns they enjoyed the generous rates of pay typical of people working for international organisations. But the Culham staff seconded to JET continued to get the local salary rate which was less than half what their overseas colleagues were getting. This disparity caused huge resentment among British employees at JET, forcing them eventually to take the matter to court.

Just as in the building of TFTR, there were numerous technical hurdles to overcome in JET’s construction, but Rebut ruled with an iron hand and allowed very few changes to the design. On 25th June, 1983, almost exactly six months after TFTR produced its first plasma, JET fired up for the first time. ‘First light, a bit of current,’ the operator wrote in his log book. It was only a bit, just 17 kiloamps, but the race between the two giant machines was on. Rebut had made a bet with his opposite numbers in Princeton that, even though JET started later, it would achieve a plasma current of 1 MA first. The loser would have to pay for a dinner at the winner’s lab and bring the wine. JET duly passed the milestone first, in October, and so the two teams dined together, at Culham, drinking Californian wine.

It didn’t remain a two-horse race for very long, however, with a Japanese contender known as JT-60 joining in April 1985. Japan had noted the fusion results revealed at the Geneva conference in 1958 but decided against an all-out machine building programme. They kept their plasma physics experiments small and in university labs. When the Russian-inspired dash for tokamaks began in the late 1960s Japan decided to take the plunge and built its first tokamak, the JFT-2, which was roughly the same size as Oak Ridge’s Ormak and was completed in 1972. From there, they jumped straight to the giant tokamak class, beginning design work on JT-60 in 1975.

Unlike in Culham and Princeton, the researchers at the new fusion research establishment in Naka did not supervise the construction of the machine themselves. They drew up detailed plans and then handed them over to some of the giants of Japanese engineering, including Hitachi and Toshiba, and left them to get on with it. Although it was a more expensive way of building a fusion reactor, the researchers were spared the stresses and strains of managing a complex engineering project. At Naka there was no unruly rush to demonstrate a half-finished machine just to meet a deadline. Instead the construction companies finished the job in an orderly fashion, tested that it was working, and then handed it over to the researchers. JT-60 was roughly the same size as JET and had a similar D-shaped plasma cross-section. Because of Japanese political sensitivities, it was not equipped to use radioactive tritium so the best it would be able to achieve was an ‘equivalent break-even’ but despite that, in many ways it exceeded the achievements of its rivals in the West.

Russia too, after the success of T-3 in 1968, continued to innovate, building a string of machines from T-4 right up to T-12. T-7 was the first machine to use superconducting magnets. Superconductors, when cooled to very low temperatures, will carry electrical current with no resistance so they allow much more powerful magnets and much longer pulses. This lets researchers explore how plasma behaves in a near steady state, rather than in a short pulse. The size of T-10 was on a par with Princeton’s PLT. Other centres got involved too, such as the Ioffe Institute in Leningrad which built a series of tokamaks.

In the mid 1970s, as the US, Europe and Japan began building large tokamaks, Russia too started work on T-15. Although it was not quite as large as the other three giants of that period and was not equipped to use tritium, it was the only one of the four to use superconducting magnets. The intention was to follow T-15 with a dedicated ignition machine, T-20, bigger than TFTR, JET or JT-60. But the researchers’ ambitions were undermined by the crumbling state around them. The Soviet Union in the 1980s was already in a downward spiral. The T-15 team had trouble getting funding and materials and the situation got worse every year. By the time the machine was finally finished in 1988 it was already looking out-of-date and the institute couldn’t afford to buy the liquid helium needed to cool its superconducting magnets. Following the final collapse of the Soviet Union in 1991 the new Russia just didn’t have the resources to run an active fusion programme and T-15 was eventually mothballed.

Back in 1983, the Princeton researchers were getting used to their new machine. The huge scale of the thing compared to earlier tokamaks made it an exciting time. They ran the machine for two shifts each day. Researchers would gather for a planning meeting at 8 a.m. and the first shots would begin at 9 a.m. A roster of thirty-six shots per day was typical. There would be another meeting at 5 p.m. and shots would often run late into the evening but they had to stop at midnight to let the technicians and fire crew go home – they always had to be present for safety reasons. If the team were working late they would send one of their number out to get a carload of pizzas or hoagies – the Philadelphia term for a submarine sandwich.

The Tokamak Fusion Test Reactor at Princeton showing the neutral beam injection system on the left.

(Courtesy of Princeton Plasma Physics Laboratory)

TFTR was bristling with diagnostic instruments and the huge volumes of data these produced demanded a whole new way of working. Smaller machines had essentially been run by one small group of researchers who would plan experiments, carry them out, analyse the results and then do some more. Such an approach on TFTR would waste too much valuable machine time. So different groups were set up with a range of goals so that at any one time some would be preparing experiments, others doing shots and collecting data, and others analysing results of earlier shots. While it was all relatively informal at first, soon there were demands that groups didn’t hoard their data but made it available for everyone to study. Competition for time on the machine grew so intense that they had to set up a system of written experimental proposals, five to ten pages long, that were peer-reviewed by other researchers at the lab. Princeton had entered the realm of ‘big science’ and it took some time for its researchers to adjust.

Initially, the researchers were getting very encouraging results. Even though the neutral beam heating systems hadn’t been installed yet, TFTR was producing temperatures in the tens of millions of °C using ohmic heating alone and with respectable confinement times. JET, when it started up in June 1983, made similarly good strides using ohmic heating. The scaling laws had been right that larger machines would lead to better confinement. But later, when heating was applied in both machines, the mood changed. TFTR initially had just neutral beam heating while JET had two heating systems, neutral beams and a radiowave-based technique known as ion cyclotron resonant heating or ICRH. In a tokamak plasma the ions and electrons move in spirals around the magnetic field lines and these spirals have a characteristic frequency. If you send into the plasma a beam of radiowaves at the same frequency, the waves resonate with spiralling particles and pump their energy into the particles, boosting their speed and hence the temperature of the plasma. So JET had radiowave antennas in the walls of the vessel to heat the plasma via ICRH.
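The characteristic frequency of that spiralling motion is the cyclotron frequency. As a sketch in standard notation (none of these symbols appear in the original text), a particle of charge $q$ and mass $m$ in a magnetic field of strength $B$ circles the field line at

$$
f_c = \frac{qB}{2\pi m}
$$

Taking a deuterium ion ($q \approx 1.60 \times 10^{-19}$ C, $m \approx 3.34 \times 10^{-27}$ kg) and assuming, purely for illustration, a field of 3 tesla:

$$
f_c \approx \frac{(1.60 \times 10^{-19})(3)}{2\pi\,(3.34 \times 10^{-27})} \approx 2.3 \times 10^{7}\ \text{Hz} \approx 23\ \text{MHz}
$$

That falls in the radio band, which is why the heating can be delivered by antennas. Electrons, being thousands of times lighter, spiral at a far higher frequency, so waves tuned to the ions leave the electron spirals unaffected.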

But whatever the heating method used, the effect was the same: although heating did lead to higher temperatures, as predicted, it produced instabilities in the plasma which led to reduced confinement time. The loss in one counteracted the gain in the other so the net effect was little improvement in overall plasma properties. Projections showed that if the plasmas continued to behave in the same way as heating was increased, neither machine would get to break-even. The warnings about neutral beam heating provided by machines such as PLT had come too late: both Princeton and Culham seemed stuck with designs that would not achieve their goals.

Tokamaks need help to heat plasma to fusion temperatures, usually provided by ohmic heating (friction), radio waves and neutral particle beams.

(Courtesy of EFDA JET)

In February 1982, Fritz Wagner, a physicist at Germany’s fusion lab, the Max Planck Institute for Plasma Physics in Garching near Munich, was carrying out experiments with the lab’s ASDEX tokamak. ASDEX was a medium-sized tokamak and Wagner, who was relatively new to plasma physics, was studying the effect of neutral beam injection on the properties of the plasma – this was before TFTR and JET had started up. He started his shots just heating the plasma ohmically and then turned on the neutral beam and measured what happened. In most of his shots the arrival of the neutral beam produced a jump in temperature and the inevitable dip in density as instabilities caused by the beam made particles escape. But he noticed something strange: if he started out with a slightly higher density of particles and stayed above a certain beam power, when the beam kicked in the density suddenly jumped up instead of down and continued to rise, eventually reaching a state where temperature and density remained high right across the width of the plasma, ending in a steep decline at the plasma edge. This was entirely unlike the usual pattern which showed a maximum of temperature and density in the centre of the plasma and a gradual decline towards the edge. Wagner did more experiments with different starting densities and found that there was no in-between state: high beam power was needed and the density either jumped up or down depending on whether the starting density was above or below a critical value.

Wagner was perplexed and spent the weekend checking and rechecking his results to make sure he hadn’t misinterpreted something. His colleagues at the Garching lab were sceptical about it at first. No such effect had ever been predicted by theory or seen at another lab. But Wagner was able to demonstrate the effect reliably on demand so they were forced to take it seriously. If the effect worked on other tokamaks it could be amazingly important because the jump up in density, which was soon dubbed high-mode or H-mode, produced confinement twice as good as the low pressure state (low-mode or L-mode). The wider community of fusion scientists took more persuading. At a fusion conference in Baltimore a few months later Wagner was grilled for hours by a disbelieving audience at an evening session. Until some other tokamak could also demonstrate H-mode, it would remain a curious quirk of ASDEX.

Wagner had to wait two years for another tokamak, Princeton’s Poloidal Divertor Experiment (PDX), to prove him right. Another machine, DIII-D at General Atomics in San Diego, repeated the feat in 1986. Now everyone was interested in H-mode. TFTR and JET, which were both struggling with poor confinement brought on by neutral beam injection, could be saved by H-mode but no one knew if it would work in such big machines. And there was another problem: ASDEX, PDX and DIII-D all had something that the giant tokamaks didn’t have – a divertor – and it seemed that H-mode only worked if you had one.

A divertor is a device in the plasma vessel that aims to reduce the amount of impurities that get into the plasma. Impurities are a problem in a fusion plasma because they leak energy out and make it harder to get to high temperatures. It works like this: if the impurity is a heavy atom, like a metal that has been knocked out of the vessel wall by a stray plasma ion, it will get ionised by collisions with other ions as soon as it strays into the plasma. But while a deuterium atom is fully ionised in a plasma – it has no more orbiting electrons – a metal atom will lose some of its electrons but hold onto others in lower orbitals. It is these remaining electrons that cause the problem. When the metal ions collide with others these electrons get knocked up into higher orbitals and then drop down again emitting a photon which, immune to magnetic fields, will shoot out of the plasma, taking its energy with it.

In early tokamaks, researchers tried to reduce this effect with a device called a limiter. There were different types of limiter but a common one took the form of a flat metal ring, like a large washer, which fits inside the plasma vessel and effectively reduces its diameter at that point. During operation the plasma current has to squeeze through the slightly narrower constriction formed by the limiter. This helps to reduce the plasma diameter and so keeps it away from the walls, and it also scrapes off the outermost layer of plasma where most impurities are likely to be lurking. As the only place where the plasma deliberately touches a solid surface, limiters had to be made of very heat-resistant metals such as tungsten or molybdenum. But when external heating began to be used in tokamaks in the mid 1970s the higher temperature proved too much for metal limiters and they started to become a source of impurities rather than a solution for them. So researchers switched to limiters made of carbon which is very heat-resistant. Even if the carbon did end up as an impurity it would do less damage because, being a light atom, it would probably be fully ionised by the plasma and wouldn’t radiate heat.

Some labs tried to counter the problem of impurities by coating the inside walls of their vessels – usually made of steel – with a thin layer of carbon. The coatings helped but they didn’t last for long, so at some labs they began to cover the inside walls of their vessels with tiles of solid carbon or graphite. Russia produced the first fully carbon-lined tokamak, TM-G, in the early 1980s and after it reported encouraging results others followed suit. By 1988 the interiors of JET, DIII-D and JT-60 were half covered in tiles and total coverage only took a few more years.

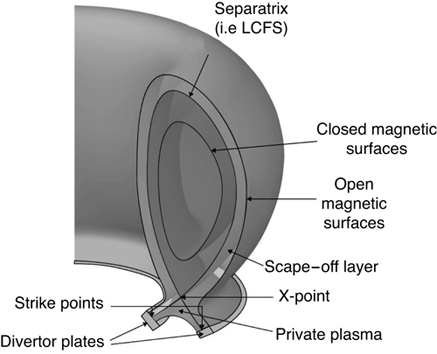

Limiters were, however, still proving to be a problem and researchers resurrected the idea of a divertor that Lyman Spitzer had first suggested in 1951 for his stellarators. A divertor takes the meeting point between the outer layer of plasma and a solid surface and puts it in a separate chamber, away from the bulk of the plasma, so that any atoms kicked out of the surface could be whisked away before they polluted the plasma. In some of Spitzer’s stellarators, at a certain point in one of the straight sections, instead of the narrow aperture of a limiter there would be a deep groove going all the way around the vessel poloidally (the short way around). Extra magnets would coax the outermost magnetic field lines – known as the ‘scrape-off layer’ – to divert from the plasma vessel and form a loop into the groove and out again. But inside the groove the field lines would pass through a solid barrier so any ions – deuterium or impurity – following those field lines would be diverted into the groove and then halted by the barrier. Unlike a limiter, this halting of the outermost ions occurs away from the main plasma, where it’s less likely to re-pollute it.

The divertor at the bottom of a tokamak’s plasma vessel removes heat and helium ‘exhaust’ from the plasma, and helps to achieve H-mode.

(Courtesy of EFDA JET)

Divertors didn’t work well in stellarators. The extra fields to divert the scrape-off layer caused such a bump in the magnetic field at that point that it worsened confinement. But in the mid 1970s people tried them again in tokamaks, first in Japan followed by Russia, the UK, the US (PDX) and Germany (ASDEX). In tokamaks it was possible to position the divertors differently: because the plasma moves by spiralling around the plasma vessel – combining toroidal and poloidal motion – a divertor could be fitted as a groove going around the torus the long way, toroidally. In this way the symmetry of the toroidal shape is not spoiled but the scrape-off layer will always pass the divertor once per poloidal circuit. And D-shaped plasma vessels had the perfect place to put a divertor: in the top or bottom corners of the D.

This small group of tokamaks that had divertors seemed to be the only ones in which H-mode worked, but nobody knew why. Both JET and TFTR were desperate to try to reach H-mode to improve their performance, but they were designed before divertors had proved themselves in tokamaks so neither had one. JET at least had the D-shape that could easily accommodate a divertor but installing one would involve an expensive refit. The JET team had a hunch, however: perhaps it was not the divertor itself that was responsible for H-mode but the unusual magnetic configuration with the outer scrape-off layer pulled out into a loop.

In the bulk of the plasma, the field lines loop right around forming closed, concentric magnetic surfaces, like the layers of an onion. The magnetic surfaces in the scrape-off layer are said to be open surfaces because they don’t close the loop around the plasma but veer off into the divertor. There is one surface that marks the boundary between the open and closed magnetic surfaces. Known as the ‘separatrix,’ this surface appears to form a cross – dubbed the x-point – close to the divertor where field lines cross over themselves.

In H-mode, the plasma edge was marked by a steep drop in density and temperature, almost as if something was blocking plasma from escaping. JET researchers wondered whether this ‘transport barrier’ was in some way related to the separatrix, the transition from closed to open magnetic surfaces. The question for JET was whether it could reproduce this magnetic shape, with a separatrix and x-point, without having a divertor. And if it could, would that produce H-mode? By adjusting the strength of certain key magnets around the tokamak, JET researchers were able to stretch out the plasma vertically and, eventually, produce the desired shape. The x-point was just inside the plasma vessel and the open magnetic field lines simply passed through the wall instead of into a divertor. It was enough to have an attempt at H-mode. They tested JET in this divertor-like mode for the first time in 1986. With a plasma current of 3 MA and heating of 5 MW, the plasma went into H-mode for 2 seconds, reaching a temperature of nearly 80 million °C and holding a high density. Researchers calculated that if they had been using a 50:50 mix of deuterium and tritium they would have produced 1 MW of fusion power. So H-mode was possible in a large tokamak. All JET needed now was a divertor.

TFTR, however, was stymied. With its circular vessel cross-section it was difficult to install a divertor and almost impossible to coax its magnetic field into an elongated shape with x-points and open field lines. So instead the Princeton researchers chipped away at the problem in any way they could think of, trying to coax longer confinement times despite the degradation caused by neutral beam heating – and eventually they did make a breakthrough, almost by accident.

A gruff experimentalist called Jim Strachan was doing some routine experiments on TFTR in 1986, trying to produce a heated plasma with very low density. The problem was the tokamak was not playing ball. Ever since they had started coating parts of the vessel interior with carbon – to stop metal from the walls from getting into the plasma – they had encountered a downside of carbon: it likes to absorb things. Carbon will absorb water, oxygen and hydrogen, along with its siblings deuterium and tritium. With all this stuff absorbed into the vessel walls, when you start heating a plasma the heat causes the absorbed atoms to emerge again and contaminate the plasma. Even if it is just deuterium in the walls – the same stuff as the plasma – it means that experimenters had no control over the plasma density because they never knew how much material would emerge from the carbon.

Earlier in 1986 a new carbon limiter had been installed in the TFTR vessel. This wasn’t a narrowing ring at one point in the torus but was instead a sort of ‘bumper’ of carbon tiles along the midline of the outer wall right around the torus. Once installed, the limiter was saturated with oxygen so researchers ran hot deuterium plasmas in the tokamak to oust the oxygen. That worked fine but it left the carbon tiles full of deuterium which would play havoc with Strachan’s attempt to produce low density plasmas. So Strachan started running shot after shot of helium plasma to get rid of the deuterium. Helium is a non-reactive noble gas, so does not get absorbed into the carbon as much. Strachan continued this for days, trying to get the tokamak as clean as possible, and then on 12th June he did a low-density heated deuterium shot – TFTR’s shot number 2204. The density stayed low, the temperature high and, astoundingly, the confinement time – 4.1 seconds – was twice what TFTR had achieved before. What’s more, it produced lots of neutrons – a sign of fusion reactions.

Strachan tried again and found he could produce these shots – which his colleagues soon dubbed ‘supershots’ – on demand. The key seemed to be the preparatory cleaning shots using helium, so the TFTR team set up a protocol to prepare the machine that way before every shot. This conditioning took anywhere between two and sixteen hours for every supershot, but it was worth it. The plasma current had been low in Strachan’s initial efforts, but with further experiments researchers managed to push the plasma current up, until at last they were working with plasmas that were a lot more like the ones needed for a fusion reactor, with temperatures above 200 million °C. Finally, the TFTR team could again see a path to D-T shots and alpha-heating.

JT-60 was the only one of the three giant reactors to have been built with a divertor, but it was positioned halfway up the outside wall. When the Naka team tried to produce H-mode, they found that they just couldn’t get the right magnetic configuration with the divertor where it was. The Japanese researchers worked with the reactor for just four years and then in 1989 made a bold decision: they gave JT-60 a complete refit, installing a new divertor at the bottom and covering the whole interior with carbon tiles. The rebooted JT-60U began operating again in 1991 and the gamble paid off because it was soon operating in H-mode with properties as good as JET’s.

By the beginning of the 1990s, TFTR and JET had refined supershots and H-mode to such an extent that they were getting fantastic results. TFTR could reach ion temperatures of 400 million °C. One measure of success is gain, the ratio of fusion power out over heating power in, denoted by Q. So break-even would be Q=1. At a conference in Washington in 1990, the two teams reported shots that, if they had been performed with D-T plasma, would have achieved Q=0.3 for TFTR and Q=0.8 for JET. Two years later the JET team announced that they had produced shots that would be Q=1.14 in D-T – more power out than in – but this record was soon bettered by JT-60U, which achieved Q=1.2. Although it had taken longer than expected for the big tokamaks to achieve this sort of performance, because of the problems with degraded confinement, they had got there. But just as the teams at Princeton and Culham were starting to think about tritium and burning plasmas something unbelievable happened: a pair of scientists declared that they had achieved fusion in a test tube.

On 23rd March, 1989, Martin Fleischmann, a prominent electrochemist from Southampton University, and Stanley Pons of the University of Utah stood up at a press conference in Utah and described an experiment they had been performing in the basement of the university’s chemistry department. They took a glass cell – little more than a glorified test tube – and filled it with heavy water – made from deuterium and oxygen. They inserted two electrodes, one of platinum and one of palladium, and then they passed an electric current through it. Nothing much would happen in the cells for hours or even days but then they would start to generate heat; much more heat, Fleischmann and Pons said, than could be explained by the current passing through the cell or any chemical reactions that might be taking place. Their best cell, Pons said, produced 4.5 watts of heat from 1 watt of electrical input: Q=4.5. They didn’t believe that a chemical reaction could be producing such heat and so it had to be a nuclear process, in other words the fusion of deuterium nuclei into helium-3 and a neutron.

Part of what was going on in the cells was an everyday process called electrolysis. When they passed a current through the cell, heavy water molecules were split apart and the oxygen ions migrated towards the positive, platinum electrode while the deuterium ions moved to the negative, palladium electrode. But the choice of electrodes was key because palladium is well known to have an affinity for hydrogen, and hence for deuterium too. It is able to absorb large quantities of hydrogen or deuterium into its crystal lattice structure. During the hours after the cells were first switched on, the palladium electrodes absorbed more and more deuterium. Pons believed that eventually there would be twice as many deuterium ions in the lattice as palladium atoms.

The next part is where it all becomes strange. Pons and Fleischmann believed that some of these deuterium ions, crushed together in the palladium lattice, somehow overcame their mutual repulsion and fused. As evidence for fusion, Fleischmann and Pons said they had detected neutrons coming from the cells – which would be expected from such a fusion reaction – as well as both helium-3 and tritium – other possible fusion products. The two scientists were cautiously optimistic about the usefulness of their discovery. ‘Our indications are that the discovery will be relatively easy to make into a usable technology for generating heat and power,’ Fleischmann told the press conference. The Utah announcement caused a sensation around the world. Newspapers, TV and radio picked up the story. The idea that you could generate as much heat and electricity as you wanted using a simple glass cell filled with deuterium from seawater fired people’s imaginations. No longer would the world be dependent on coal, oil, natural gas and uranium.

The initial reaction of fusion scientists was utter incredulity. It just didn’t make sense. As far as they had always understood it, deuterium ions are extremely reluctant to fuse because of their positive charges. Those charges make the ions strongly repel each other and it takes a huge amount of energy to force them close enough together to fuse. Inside a metal lattice, where was all the energy coming from to overcome the repulsion? But there were reasons for fusion scientists to pause for thought. The behaviour of ions inside a metal lattice is much stranger and harder to predict than in the near empty space inside a tokamak. Lattices do funny things to ions, affecting their apparent masses and how they interact with other ions. This was an environment that most plasma physicists had little knowledge of. Could it be that it was just something that they had missed? The two scientists were highly respected – Fleischmann was one of the world’s foremost electrochemists. And these two men must have been pretty sure of their results to stand up and make such bold claims in front of the world’s press, without the usual procedure of having published their results in a journal first and subjecting them to review by other experts.

In the days that followed the press conference, it also emerged that another group at a different Utah institution – Brigham Young University – had been doing similar experiments and had also detected neutrons and heat, but much less heat than Fleischmann and Pons found. Fusion researchers, meanwhile, found themselves called upon in the media to explain what fusion is all about, and to justify why they needed such huge, complex tokamaks to achieve it. All of a sudden these machines seemed a wasteful extravagance.

One of the benefits of such a simple experimental setup as the one in Utah is that it is very easy for other scientists to carry out similar tests to verify or refute it. Within days researchers the world over had current flowing through heavy water and were waiting to see the same signs of fusion taking place. It took a few weeks for the first results to come in. A team at Texas A&M University also detected excess heat in their cells, which were modelled on Pons and Fleischmann’s, but they hadn’t yet tested for neutrons. Other results came in from labs all over the world in the following weeks, but they didn’t make things any clearer: some saw neutrons but not heat, others got heat but no neutrons, and some found nothing at all. Researchers at Georgia Tech announced they had found neutrons, only to withdraw the claim three days later when they realised their neutron detector gave false positives in response to heat.

That didn’t stop Pons being greeted as a hero when he appeared at a meeting of the American Chemical Society in Dallas on 12th April. Chemists were enjoying their moment in the sun. There was a recent precedent of a miraculous discovery by a pair of researchers working on their own. Three years previously physicists had been stunned when two researchers in a lab in Switzerland announced the discovery of high-temperature superconductivity. That led to a now legendary session at the March 1987 meeting of the American Physical Society in New York City. Thousands of scientists crammed into a hastily arranged session – now referred to as the ‘Woodstock of physics’ – to hear all the latest results about these wondrous new materials. For the 7,000 chemists who gathered in Dallas to hear about cold fusion, it was their turn to make history.

The president of the Chemical Society, Clayton Callis, introduced the session by saying what a boon fusion would be to society and commiserated with physicists over what a hard time they were having achieving it. ‘Now it appears that chemists have come to the rescue,’ he said, to rapturous applause. Harold Furth, director of the Princeton fusion lab, came to make the case for conventional fusion. He didn’t think nuclear reactions were happening in the Utah pair’s cells. Certain key measurements hadn’t been done so the proof for fusion just wasn’t there. But that wasn’t what the chemists wanted to hear. During his presentation, Furth had shown a slide of his lab’s giant tokamak, TFTR – the size of a house, bristling with diagnostic instruments and sprouting pipes and wires. When Pons came on afterwards he flashed up a slide of his own setup: a glass cell the size of a beer bottle held by a rusty lab clamp in a plastic washing-up bowl. ‘This is the U-1 Utah tokamak,’ he said, and the crowd went wild.