IN THE EARLY 1970S, WHEN MOST OF THE WORLD’S FUSION researchers were rushing to build tokamaks following the success of the Russian T-3 machine, some in the US thought it wasn’t a good idea to turn their fusion programme into a one-horse race. In its twenty years of existence, the US programme had supported a range of different fusion machines – stellarators, pinches, mirror machines and other more exotic devices. But their number was decreasing. The stellarator had been largely abandoned with the arrival of the tokamak and others simply didn’t perform well enough, suffering from instabilities, leaking plasma, or too low temperatures or densities. Something else was needed if the US programme wasn’t going to become the tokamak show.

The strongest contender, though still some way behind tokamaks, was the mirror machine. These devices had been a mainstay at the Lawrence Livermore lab near San Francisco ever since it was founded and Richard Post was made head of its controlled fusion group. Post and his colleagues had built a number of small machines but instabilities were preventing them from confining a plasma for more than a fraction of a millisecond and plasma density remained low. But for reactor engineers, mirror machines have an elegant simplicity: straight field lines, plain circular magnets, predictable particle motions – a far cry from the geometrical contortions needed to make a tokamak or stellarator work. They confine plasma the same way that a stellarator does: with magnetic field lines aligned along the tube and particles pulled towards the lines so that they execute tight little spirals around them. The problems with a mirror start when the particles get to the end of the tubular vessel: how do you stop them escaping?

The simplest solution is to have an extra-powerful magnet coil around each end of the vessel. This squeezes the field lines into a tight bunch. When the spiralling particles encounter this more intense magnetic field they are repelled and head back the way they came, back along the tube. This works for the majority of particles but a lot still leak out, reducing the performance of the device. Researchers developed other more complicated designs for the end-magnet in the hope that they would produce a more leak-proof magnetic plug. These included coils in the shape carved out by the stitching on a baseball, and two interlocking versions of that shape, dubbed yin-yang coils in a nod to the Taoist symbol signifying shadow and light.
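
Why "a lot still leak out" can be made concrete with a textbook estimate. The sketch below assumes an idealised simple mirror with no collisions and particles whose directions of motion are evenly spread; under those assumptions a particle escapes if its pitch angle falls inside the "loss cone" set by the mirror ratio (the field strength at the end coil divided by the field strength in the middle of the tube). The function name and the mirror ratios tried are illustrative and are not figures from any Livermore machine.

```python
import math

def loss_cone_fraction(mirror_ratio):
    """Fraction of an isotropic particle population that escapes a simple mirror.

    mirror_ratio is B_end / B_centre (an illustrative input, not a measured value).
    A particle is reflected only if sin^2(pitch angle) > 1 / mirror_ratio.
    """
    if mirror_ratio <= 1.0:
        # No field increase at the ends means nothing is reflected.
        return 1.0
    return 1.0 - math.sqrt(1.0 - 1.0 / mirror_ratio)

# Hypothetical mirror ratios, chosen only to show the trend.
for ratio in (2.0, 4.0, 10.0):
    print(f"mirror ratio {ratio:>4}: {loss_cone_fraction(ratio):.1%} escape")
```

Even a strong end coil leaves a finite loss cone in this simple picture, and collisions keep knocking trapped particles into it.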

In 1973, researchers at Livermore were working with a mirror machine called 2XII and not getting very good results – the confinement was poor. They noticed, however, that containment improved when the plasma densities were higher, so they started looking for ways to inject more plasma and thereby boost the density. Colleagues at the nearby Berkeley Radiation Laboratory provided the solution: they were developing a system for producing neutral particle beams and to the 2XII team this seemed an ideal way to add more material to their plasma. It took two years to upgrade their machine for neutral beam injection but when they fired up their new 2XIIB – with an extra ‘B’ for ‘beams’ – in June 1975 they found that the containment was actually worse.

In desperation they tried a trick that had been suggested years before as a way of damping down instabilities: passing a stream of lukewarm plasma through the hot plasma in the vessel. The improvement was dramatic. By the following month they had doubled the ion temperature to 100 million °C – a record at the time – reached record plasma density and increased the confinement time tenfold. There was another side effect: the warm flow made the neutral beams very effective at heating the plasma, so much so that the researchers felt that they could abandon the heating method they had used previously – a rapid current pulse in the magnet coils to compress the plasma. Without the current pulse, 2XIIB was effectively a steady-state machine – the holy grail of reactor designers.

This really got the programme managers at the Energy Research and Development Administration (ERDA) interested. Here was something that could fill the role of a serious contender to the tokamak. It was little more than a year since the Middle East oil embargo had sent fuel prices through the roof and politicians were searching obsessively for anything that could become an alternative energy source – alternative to importing oil from the Arabian peninsula. Livermore hoped to jump on that gravy train and quickly drew up plans for a large-scale version of 2XIIB, to be called the Mirror Fusion Test Facility or MFTF, and the ERDA agreed to fund its construction.

While 2XIIB was undoubtedly a success, its end-magnets were still leaky and so many had doubts that MFTF would really make the grade as a power-generating reactor. Even using the most optimistic projections, a full-scale MFTF could only achieve a very modest gain – it would produce slightly more power than was used to keep it running. Hence there was considerable pressure on Livermore, during the design of MFTF, to come up with some technique to stop the leaks and improve the gain.

Livermore researchers found a solution in 1976, at the same time as researchers in the Soviet Union: a system that came to be known as a tandem mirror. In such a machine, the single magnet at each end is replaced by a pair of magnets separated by a short straight section. The two magnets and the plasma confined between them form a ‘mini-mirror’ system and this proved to be a more effective plug than a single mirror alone. To test the idea, Livermore persuaded ERDA to fund the construction of another experiment, smaller than MFTF, called the Tandem Mirror Experiment. They built TMX and sure enough they showed that the double-magnet end plugs reduced leakage. The only trouble was that now the Livermore researchers had to redesign MFTF. They chose to shorten the existing MFTF and make it into one of the end plugs, so they now needed another whole MFTF as the second end plug and a new central section. The new design, dubbed MFTF-B, was significantly larger and more expensive than the original one but, having got this far, ERDA agreed to press ahead.

While tandem mirrors reduced leakage, there was still room for improvement. In 1980, researchers at Livermore came up with another idea: put a third magnet at each end of the machine and this would produce a double plasma plug at both ends to further block escaping plasma. To test the idea they upgraded the TMX machine with the extra magnets and it did produce a more effective plug. That in turn led to another redesign of MFTF-B to add extra magnets.

By now MFTF-B had turned into a monster of a machine. The plasma tube and all the magnets at each end were enclosed in a stainless steel vacuum vessel that was 10m in diameter and 54m long. You could easily drive a double-decker bus down the middle of it. When it started operating it would need a staff of 150 to tend to it and was expected to consume $1 million of electricity every month. The elaborate end plugs were far from the simple mechanisms that had attracted many people to mirror machines in the first place. Some researchers joked that, like a tree’s rings, you could tell how old a mirror machine was by how many magnets had been added to the ends.

In all it took nine years to build MFTF-B at a cost of $372 million. On 21st February, 1986 staff and guests gathered for the official dedication ceremony. The Secretary of Energy, John Herrington, had travelled over from Washington along with other DoE staff and he commended the Livermore team for their work. But it wasn’t the joyous occasion everyone had been expecting.

The political climate in the mid 1980s was very different from a decade earlier. Ronald Reagan had come into the White House in 1981 and had aggressively cut public spending. The high oil prices and frantic search for alternative energy sources of the 1970s were now just a memory. To the Reagan-era DoE, funding a second type of fusion reactor just to provide competition for tokamaks was an expensive luxury. So the day after congratulating Livermore on its achievement, DoE shut the doors on MFTF-B without ever having turned it on. A few years later it was dismantled for scrap and to this day the scientists, engineers and technicians who spent years working on the machine do not know if it would have worked.

What is the moral of this story? Fusion energy isn’t inevitable. No fusion machine, no matter how much has been spent on it, is safe from the budget axe. The giant machines of today – the newly completed National Ignition Facility and the partially built ITER – could suffer a similar fate to MFTF-B. The search for fusion energy is expensive and it will only continue if politicians and the public want it and need it.

Twenty-three years later on 31st March, 2009 Livermore held another dedication ceremony, this time for the National Ignition Facility. NIF wasn’t about to be shut down but it was under enormous pressure to perform. NIF’s funders at the National Nuclear Security Administration (NNSA), a part of the Department of Energy, wanted payback for the huge cost of the machine, and wanted it soon. A few years earlier, while NIF was still being constructed, NNSA officials and senior researchers drew up a plan to get to ignition on NIF as quickly as possible, so as to provide a springboard for all the things they planned to do with the facility: weapons research, basic science and, of course, fusion energy.

Called the National Ignition Campaign (NIC), it began in 2006 and included designing the targets for NIF shots and simulating what was likely to happen to those targets. Other labs were also involved in the NIC, including the University of Rochester’s Laboratory for Laser Energetics and its Omega laser as well as the Z Machine at Sandia National Laboratories, which studies inertial confinement fusion with very high current pulses rather than lasers. These facilities were able to try out at lower energy some of the things that would eventually be done at NIF.

By the time of NIF’s inauguration the NIC was three years old and researchers were confident that ignition was within their reach. There was a lot of calibrating and commissioning to do on NIF so it would be well into 2010 before researchers could do shots on targets filled with deuterium-tritium fuel that would be capable of ignition, but the NIF team said they might well reach their goal before the end of that year.

NIF is a truly astounding machine. Its size alone takes your breath away: the building that contains it is the size of a football stadium and ten stories high. Inside is a laser so big and so powerful it would make a James Bond villain weep. Among all the brushed metal, white-painted steel superstructure and tidily bundled cables there is the hum of quiet efficiency. The place has a feeling of a huge power, ready to be unleashed.

The heart of the machine is a small unassuming optical fibre laser that produces an unremarkable infrared beam with an energy measured in billionths of a joule. This beam is split into forty-eight smaller beams and each is passed through a separate preamplifier in the shape of a rod of neodymium-doped glass. Just before the beam pulse arrives, the preamplifiers are pumped full of energy by xenon flashlamps and this energy is then dumped into the beam as it passes through. After four passes through the preamplifiers the energy of the forty-eight beams has been boosted ten billion times to around 6 joules. The beams are then each split into four beamlets – giving a total of 192 – and passed through to NIF’s main amplifiers. These are what take up most of the space in the facility’s cavernous hall, being made up of 3,072 slabs of neodymium glass (each nearly a metre long and weighing 42 kilograms) pumped by a total of 7,680 flashlamps. After a few passes through the amplifiers the beams, which now have a total energy of around 4 megajoules, head towards the switchyard.
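
The bookkeeping in that description can be checked with a few lines of arithmetic. The sketch below uses only the rounded figures quoted above; the variable names are illustrative, and the per-beamlet averages are simple divisions that assume the slabs and flashlamps are shared out evenly, which the text does not state.

```python
# Rounded figures quoted in the text.
SEED_BEAMS = 48            # beams entering the preamplifiers
BEAMLETS_PER_BEAM = 4      # each beam is split into four beamlets
PREAMP_GAIN = 1e10         # "boosted ten billion times"
PREAMP_OUTPUT_J = 6.0      # "around 6 joules" after the preamplifiers
AMPLIFIER_SLABS = 3072     # neodymium glass slabs in the main amplifiers
FLASHLAMPS = 7680          # flashlamps pumping those slabs

beamlets = SEED_BEAMS * BEAMLETS_PER_BEAM

# Energy the seed must have started with for the quoted gain and output to agree.
implied_seed_energy_j = PREAMP_OUTPUT_J / PREAMP_GAIN

# Averages only: assumes an even split across beamlets (an assumption).
slabs_per_beamlet = AMPLIFIER_SLABS / beamlets
flashlamps_per_beamlet = FLASHLAMPS / beamlets

print(f"beamlets: {beamlets}")
print(f"implied seed energy: {implied_seed_energy_j * 1e9:.1f} billionths of a joule")
print(f"slabs per beamlet (average): {slabs_per_beamlet:.0f}")
print(f"flashlamps per beamlet (average): {flashlamps_per_beamlet:.0f}")
```

The implied seed energy comes out below one billionth of a joule, which sits comfortably with a beam "measured in billionths of a joule" given how heavily the quoted figures are rounded.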

The switchyard is a structure of steel beams which supports ducts and turning mirrors to direct the beams all around the spherical target chamber so that they all approach from different directions. The chamber itself is 10m in diameter and made from 10cm-thick aluminium with an extra 30cm jacket of concrete on the outside to absorb neutrons from the fusion reactions. This protective sphere is punctured by dozens of holes: square ones for the laser beams and round ones to act as viewing ports for the numerous diagnostic instruments that will study the fusion reactions. Before the beams enter the chamber they must pass through one final but crucial set of optics. These are the KDP crystals which convert the infrared light produced by the Nd:glass lasers, with a wavelength of 1,053 nanometres, first to green light (527 nm) and then to ultraviolet light (351 nm) because this shorter wavelength is more efficient at imploding fusion targets.

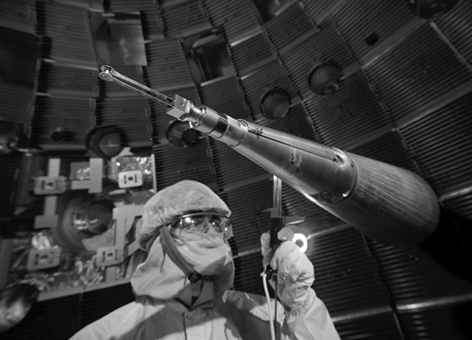

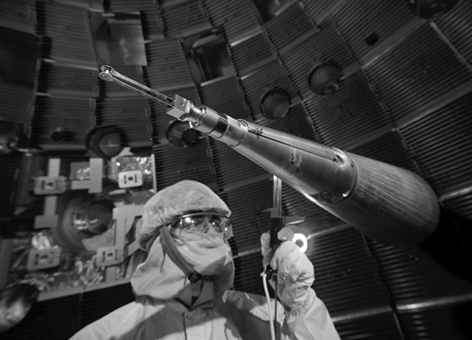

Finally the beams, which for most of their journey have filled ducts 40cm across, are focused down to a point in the middle of the target chamber where they must pass through a pair of holes in the hohlraum each 3mm across. For all its size and brute force, the laser’s end result must be needle-fine and extremely precise. The hohlraum is held in the dead centre of the target chamber by a 7m-long mechanical arm. This positioner must hold the target absolutely steady in exactly the right spot with an accuracy of less than the thickness of a piece of paper. The arm also contains a cooling system to chill the target down to -255°C so that the deuterium-tritium fuel freezes onto the inside wall of the capsule.

Preparing for a shot: Inside NIF’s reaction chamber showing part of the positioner arm and, at its tip, a hohlraum.

(Courtesy of Lawrence Livermore National Laboratory)

An NIF shot goes like this: the original fibre laser creates a short laser pulse, around 20 billionths of a second long, which then travels through the preamplifiers, amplifiers and final optics before it enters the target chamber as 192 beams of ultraviolet light with a total energy of 1.8 megajoules. This is roughly equivalent to the kinetic energy of a 2-tonne truck travelling at 160 kilometres/hour (100 miles/hour) but because the pulse is only a few billionths of a second long its power is huge, roughly 500 trillion watts which is 1,000 times the power consumption of the entire United States at any particular moment. With that sort of power converging on the hohlraum, things start happening very fast. The 192 beams are directed into the hohlraum through holes in the top and bottom and onto the inside walls. The walls are instantly heated to such a high temperature that they emit a pulse of x-rays. The hohlraum’s interior suddenly becomes a superhot oven with a temperature of, say, 4 million °C and x-rays flying all over the place. The plastic wall of the capsule starts to vaporise and flies off at high speed. This ejection of material acts like a rocket, driving the rest of the plastic and the fusion fuel inwards towards the centre of the capsule.
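
The truck comparison and the link between energy and power are easy to check. The sketch below is a rough calculation using only the rounded numbers quoted above; the function names are illustrative, and the "implied duration" treats the pulse as if all its energy arrived at the peak power, which is a simplification.

```python
# Rounded figures quoted in the text.
LASER_ENERGY_J = 1.8e6     # total ultraviolet energy entering the chamber
PEAK_POWER_W = 500e12      # "roughly 500 trillion watts"
TRUCK_MASS_KG = 2000.0     # a 2-tonne truck
TRUCK_SPEED_KMH = 160.0    # 160 kilometres/hour

def kinetic_energy_joules(mass_kg, speed_kmh):
    """Kinetic energy (1/2 m v^2) with the speed converted from km/h to m/s."""
    speed_ms = speed_kmh * 1000.0 / 3600.0
    return 0.5 * mass_kg * speed_ms ** 2

def implied_pulse_duration_s(energy_j, power_w):
    """Time needed to deliver energy_j at a constant power_w (a simplification)."""
    return energy_j / power_w

truck_energy = kinetic_energy_joules(TRUCK_MASS_KG, TRUCK_SPEED_KMH)
duration = implied_pulse_duration_s(LASER_ENERGY_J, PEAK_POWER_W)

print(f"truck kinetic energy: {truck_energy / 1e6:.2f} MJ")
print(f"implied pulse duration: {duration * 1e9:.1f} billionths of a second")
```

The truck carries just under 2 megajoules, close to the laser's 1.8, and delivering 1.8 megajoules at 500 trillion watts takes a few billionths of a second, as the text says.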

If the NIF scientists have got everything right, then this inward drive will be completely symmetrical and the deuterium-tritium fuel will be crushed into a tiny blob around 30 thousandths of a millimetre across and with a density 100 times that of lead. The blob’s core temperature will be more than 100 million °C but even this isn’t quite enough to start fusion. The laser pulse has a final trick up its sleeve to provide the spark. If the timing is right then a converging spherical shockwave from the original laser pulse should arrive at the blob’s central hot spot just as it reaches maximum compression. This shock gives the hot spot a final kick to start nuclei fusing. Once the reactions start, the high-energy alpha particles produced by each fusion heat up the slightly cooler fuel around the hot spot. That leads to more fusions and more alpha particles, the reaction gains its own momentum and – BOOM! – fusion history is made. A faultless shot might produce 18 megajoules of energy, ten times that of the incoming laser beams.

That sequence of events was the goal when Livermore researchers began their NIC experiments in 2010. The first unknown was whether the laser was up to the job. Critics of NIF had warned that laser technology wasn’t ready for such a high-energy machine. They foretold that the amount of power moving through the optics would cause them to overheat and crack; that specks of dust on glass surfaces would heat up and damage them; and that flashlamps would continually blow out and need to be replaced. During NIF’s construction there were problems with exploding capacitor banks and flashlamps, and the whole system for preparing and handling the optical glass had to be redesigned to keep it as clean as a semiconductor production plant. NIF’s designers did their work well. When they finally turned it on and ramped up the power over the first couple of years, the laser didn’t tear itself to pieces. The occasional lamp did blow and some optical surfaces got damaged, but NIF staff had worked out ways to either repair surfaces or to block out a damaged section so that the laser could keep running.

The Livermore researchers knew from earlier machines that getting the laser to work was just the start: numerous hurdles still lay ahead. The first of these was the chaotic environment inside the hohlraum once the laser pulse starts. While the high-energy beams are heating the inside walls they kick up lots of gold atoms that form a plasma inside the hohlraum. If it’s not carefully controlled this plasma can cause havoc, sapping the energy of the incoming beams, diverting them from their desired paths and even reflecting some of the beam back out of the hole in the hohlraum. Such interactions had limited the achievements of earlier laser fusion machines and the NIF team studied the problem carefully and carried out extensive simulations. In the early experiments of the NIC the team mostly managed to keep these plasma interactions under control, largely by avoiding situations that were known to aggravate them.

Another potentially difficult area was the implosion of the capsule. The implosion is an inherently unstable situation because it involves a dense material (the plastic shell) pushing on a less dense one (the fusion fuel), and Rayleigh-Taylor instabilities can lead to fuel trying to burst out of its confinement. Researchers’ first weapon against this is symmetry, hence the careful placement of beams around the hohlraum interior to ensure that the x-rays heat the capsule evenly. Their second weapon is speed: if they can make the implosion sufficiently fast, the plastic and fuel won’t have time to bulge out of shape.

The experimenters use a measure called the experimental ignition threshold factor (ITFX) to chart their progress. The ITFX is defined so that an ignited plasma has an ITFX of 1. For the first year of the NIC, the value of ITFX demonstrated the advances they made. When ignition experiments started the shots achieved an ITFX value of 0.001. A year later it had reached 0.1 – a hundred-fold increase – but there it stalled. The second year of the NIC was plagued by phenomena that the NIF team was unable to explain. Although the target chamber was bristling with diagnostic instruments – nearly sixty in total – to probe what was going on inside, measuring x-rays, neutrons, and even taking time-lapse movies of the implosions, the researchers could not figure out why the capsules were not behaving as the computer simulations said they would. For reasons unknown, a significant portion of the laser beam’s energy was getting diverted from its intended purpose of driving the implosion of the capsule. The capsule shell was also being preheated before the implosion started – perhaps by the stray laser energy – which made it less dense and less efficient at compressing the fuel. The implosion velocity was also too slow. At a fusion conference in September 2011, the DoE’s Under Secretary for Science, Steven Koonin, who oversaw NIF, said that ‘ignition is proving more elusive than hoped.’ He also said that ‘some science discovery may be required,’ which is a polite way of saying ‘we don’t know what’s going on.’

Koonin set up a panel of independent fusion experts to give him regular reports on NIF’s progress. The panel was critical of the schedule-driven approach of the NIC, which specified what shots had to be carried out and when and, if something unexpected arose, didn’t allow any time to explore what was going wrong. In a report from mid 2012 the panel pointed out that Livermore’s simulations of NIF predicted that the shots they were then carrying out should be achieving ignition, but the measured ITFX values showed they were still a long way off. What sort of a guide to progress were the simulations if their predictions were so wide of the mark? As before in laser fusion, it was simulations that led researchers to have inflated expectations.

It had been stipulated when the NIC was devised that if ignition was not achieved by the end of two years of experiments at NIF then the NNSA would have sixty days to report to Congress on why it had failed, what could be done to salvage the situation, and what impact this would have on stockpile stewardship. That deadline passed on 30th September, 2012 and on 7th December the NNSA submitted its report to Congress. The report admitted that Livermore researchers did not know why the implosions were not behaving as predicted and even conceded that it was too early to say whether or not ignition could ever be achieved with NIF. The NNSA asked for NIF’s funding – running at roughly $450 million per year – to be continued for a further three years so that researchers could investigate why there was a divergence between simulations and measured performance. Significantly, the report also called for parallel research to be carried out on other approaches to ignition as a backup in case NIF failed. These alternatives included pulsed-power fusion at Sandia’s Z Machine, direct-drive laser fusion using Rochester’s Omega and even direct drive on NIF.

At the time of writing, it is not known how Congress will react to this proposal, although President Barack Obama’s proposed budget for 2014 suggests cutting NIF funding by 20%. Also, some members of Congress have campaigned for years for NIF’s closure and this admission of weakness can only help their cause. Whatever happens, progress towards ignition looks set to slow because, while the NIC used around 80% of the shots on NIF, from the beginning of 2013 weapons scientists will be getting a bigger share, more than 50%. Many laser fusion experts still believe that NIF can get to ignition, but the question is: when?

Meanwhile, in France, the ITER project was just starting to get moving. Following the ceremony in Paris to sign the international agreement in November 2006 it took almost a year for each partner to ratify the treaty and only then could they officially create the ITER Organisation. But that didn’t hold up excavation of the site. Machinery began clearing trees from land near Saint Paul lez Durance in January 2007. Some rare plants and animals were moved elsewhere; remains of an eighteenth-century glass factory and some fifth-century tombs were preserved. Heavy earth-moving machines arrived in March 2008 and set about carving away at the side of a hill to lower the ground level then shifting the earth downhill to build it up into a level surface. Altogether the diggers shifted 2.5 million cubic metres of material to create a platform of 42 hectares, the area of sixty football pitches. And then everything came to a halt as the new team in the temporary office buildings nearby struggled to get to grips with the machine they had to build.

It hadn’t taken long after the final decision to build ITER at Cadarache for the project partners to start filling senior management positions. As expected, Japan’s choice for director general, Kaname Ikeda, the country’s ambassador to Croatia, was approved. Ikeda had held numerous government jobs relating to research and high-tech industry and had a degree in nuclear engineering – a suitably senior person to head such an international organisation, but not a fusion scientist. His deputy was Norbert Holtkamp, a German physicist who had a track record of managing big projects, having just finished building the particle accelerator for the Spallation Neutron Source at Oak Ridge National Laboratory in Tennessee – but again, not a fusion scientist.

These two couldn’t have been more different. Ikeda was every inch the Japanese diplomat: polite, deferential, immaculately presented. Holtkamp, by contrast, was laid-back, affable and liked to do things his own way. Even a few years working in the United States hadn’t squeezed him into the corporate mould – at ITER he managed to persuade the office manager to exempt him from the usual no-smoking rules so that he could puff cigars in his office. Below them were seven deputy directors, one from each project partner, and the recruitment continued in a similar vein, trying to keep a similar number of staff from each partner. It was a management structure designed by a committee of international bureaucrats and it would prove to be a millstone around the young organisation’s neck.

This new management team, whose leaders were new to fusion, included many researchers who had not been involved in drawing up the design for ITER, so the first thing they had to do was thoroughly familiarise themselves with the machine. They also had to check and recheck every detail of the design to ensure it was ready to be used as the blueprint for industrial contracts to build the various components of the reactor. Then there was the fact that the design was, by then, half a dozen years old and fusion science had moved on: now was the chance to incorporate the latest thinking into the design. So the new team appealed to the worldwide fusion community to come to their aid. They asked researchers to fill in ‘issue cards’ describing any aspect of the design that worried them or possible improvements that could be applied.

Fusion scientists weren’t shy in coming forward and by early 2007 the ITER team had received around 500 cards. They drafted in outside experts to help and set up eight expert panels to sift through all the concerns and suggestions. Many proved to be impractical and could be discounted, others required just minor modifications to the design, but a few required large – and expensive – changes. By the end of 2007 they had whittled the list down to around a dozen major issues and work still remained to figure out how these could be incorporated into the design without inflating the cost.

One of the most contentious concerned a new method for controlling edge-localised modes (ELMs), the instability that causes eruptions at the edge of the plasma that can damage the vessel wall or divertor – a side-effect of the superior confinement of H-mode. The solution already chosen for ITER was to fire pellets of frozen deuterium into the plasma at regular intervals. These cause minor ELMs, letting some energy leak out and so preventing larger, more damaging ones. But researchers working on the DIII-D tokamak at General Atomics in San Diego had come up with a simpler solution: applying an additional magnetic field to the plasma surface roughens it up, which also allows energy to leak out in a controllable way. The problem was that this additional field required a new set of magnet coils to be built on or close to the vessel wall, a potentially costly change at this late stage.

The ultimate goal of these early years was to produce a document called the project baseline. Thousands of pages long, the baseline is a complete description of the project, including its design, schedule and cost. The project partners were not going to sign off on the start of construction until they had seen and approved the baseline document. So the levelled site sat quiet and empty while the ITER team continued to wrestle with a paper version of the project.

In June 2008 the team presented the results of the design review to the ITER council, which is made up of two representatives from each partner. Despite their extensive whittling down of the many suggestions from researchers there were still numerous refinements and modifications to components including the main magnets and the heating systems, plus there were the additional magnet coils to control ELMs. These changes, the team estimated, would add around €1.5 billion to the cost. Nor did the original estimate of €5 billion for construction look that secure. The more the ITER team looked into the details of the design, the more they found that the designers of 2001 may have been overly optimistic and that the final cost could be as much as twice the original estimate.

It’s not uncommon for major scientific projects such as ITER to go over budget, but this ballooning price tag would be an especially hard sell because, since the founding of ITER in 2006, the worldwide financial crisis had swept through the economies of all the project partners. The delegations would not relish going back to their governments and asking for more money when ‘austerity’ was the new black. So the council sent the ITER team back to work with the instruction to nail down the cost completely so that there would be no more surprises. Nor did the council trust them to get on with it unsupervised. It appointed an independent panel, led by veteran Culham researcher Frank Briscoe, to investigate the project cost and how it was estimated. It also set up a second panel to study the organisation’s management structure.

A year later the team asked the council for permission to build ITER in stages. First they would fire up the machine with just a vacuum vessel, magnets to contain the plasma, and the cryogenic system needed to cool the superconductors in the magnets. The idea behind this was to make the whole system simpler so that operators could get the hang of running the machine without all the added complication of diagnostic instruments, particle and microwave heating systems, a neutron-absorbing blanket on the walls and a divertor, which would be added later. The council agreed and also approved a slip in the schedule: first plasma in 2018 not 2016, and first D-T plasma in 2026, nearly two years later than originally planned. The ITER team were still working on the project baseline, including that all-important cost estimate, but the council asked to see it at its next meeting in November 2009.

But the autumn meeting ended up being all about schedule. The European Union was concerned that finishing construction by 2018 was still too soon. Rushing the process could lead to mistakes that would be impossible to correct later. So ITER’s designers were sent back to the drawing board to do more work on the schedule. Other members of the collaboration were getting frustrated by the delays. They wanted to push ahead as quickly as possible but Europe, as the project’s biggest contributor at 45%, had the most to lose and so could throw its weight around. In the spring of 2010 a new completion date was agreed: November 2019. But still there was no baseline and the reactor’s home remained an idle building site.

Later that spring the true underestimation of ITER’s cost became apparent. The European Union was going to have to pay €7.2 billion for its 45% share of the cost. That put the total bill in the region of €16 billion. While this colossal figure would impose a severe financial strain on fusion funding for all the ITER partners, for the EU it caused a near meltdown. The problem was this: EU funding is agreed by member states in seven-year budgets; the current budget cycle ran until the end of 2013; the budget line for fusion in the years 2012 and 2013 contained €700 million; but the new inflated ITER cost required €2.1 billion from the EU in those years, so Europe had to find another €1.4 billion from somewhere.
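
The two sums behind those figures are simple enough to lay out explicitly. The sketch below uses only the rounded amounts quoted above; the function and variable names are illustrative, and the total is an extrapolation from Europe's share rather than an official figure.

```python
# Rounded figures quoted in the text, in billions of euros.
EU_SHARE_COST = 7.2        # what the EU would have to pay
EU_SHARE_FRACTION = 0.45   # the EU's 45% share of the project
BUDGETED_2012_2013 = 0.7   # fusion budget line for 2012 and 2013
REQUIRED_2012_2013 = 2.1   # what the new ITER cost needed in those years

def implied_total_cost(share_cost, share_fraction):
    """Scale one partner's contribution up to the whole project."""
    return share_cost / share_fraction

def funding_gap(required, budgeted):
    """Shortfall between what is needed and what has been set aside."""
    return required - budgeted

total = implied_total_cost(EU_SHARE_COST, EU_SHARE_FRACTION)
gap = funding_gap(REQUIRED_2012_2013, BUDGETED_2012_2013)

print(f"implied total bill: about {total:.0f} billion euros")
print(f"EU shortfall for 2012 and 2013: {gap:.1f} billion euros")
```

Scaling up from a single partner's share only gives a ballpark for the total, which is why the text says "in the region of".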

EU managers considered a number of options to fill the gap, including getting a loan from the European Investment Bank, an EU institution that lends to European development projects. But this was rejected because there was no identifiable income stream to repay the loan. They considered raiding the budgets of EU research programmes but feared a backlash from scientists across the continent. In the end, they appealed directly to EU member governments for an extra payment to get them out of a hole. In June member states declined to bail out the project, essentially saying this was the EU’s problem and it would have to find its own solution. The June ITER council meeting came and went with still no decision on the baseline.

With much persuasion and institutional arm-twisting, EU managers finally managed to cobble together the necessary funds from within the EU budget. Some €400 million was taken from other research programmes and the rest from other sources, in particular unused farming subsidies. The way was now clear for ITER to move forward.

On 28th July, 2010, the ITER council met in extraordinary session at Cadarache. Chairing the meeting was none other than Evgeniy Velikhov, again on hand to guide ITER through one of its major turning-points. With huge relief the national delegates approved the baseline, allowing ITER to move into its construction phase. But that was not their only item of business. They also bade farewell to director general Kaname Ikeda, who had asked to stand down once the baseline was approved. In his place, the council appointed Osamu Motojima, former director of Japan’s National Institute for Fusion Science. Motojima knew how to build large fusion facilities, having led the construction of Japan’s Large Helical Device, a type of stellarator. Ikeda’s was not the only departure following the baseline debacle. Norbert Holtkamp also stood down and his position of principal deputy director general was dispensed with.

Motojima’s mandate from the council was to keep costs down, keep to schedule and simplify ITER management. The last of these he set about by sweeping away the previous seven-department structure and replacing it with a more streamlined three. The first department, responsible for safety, quality and security, was headed by Spaniard Carlos Alejaldre, who had filled the same role under the old structure. To run the key ITER Project Department, responsible for construction, Motojima appointed Remmelt Haange of Germany’s Max Planck Institute for Plasma Physics. Haange, like Motojima, was a seasoned reactor builder, having been involved in the construction of JET and served as the technical director of Germany’s Wendelstein 7-X stellarator project. Finally, Richard Hawryluk, deputy director of the Princeton Plasma Physics Laboratory, was picked to head the new administration department. With these veteran fusion researchers at the helm, the project got down to the serious business of building the world’s biggest tokamak. Soon trucks and earth-moving machines were crawling over the site like worker ants.

Evgeniy Velikhov celebrates the end of his term as ITER council chair in November 2011.

(Courtesy of ITER Organisation)

Research into fusion energy is now well into its seventh decade. Thousands of men and women have worked on the problem. Billions have been spent. So it seems reasonable to ask, will it ever work? Will this amazing technology, which promises so much but is so hard to master, ever produce power plants that can efficiently and cheaply power our cities? Today’s front-rank machines, such as NIF and ITER, seem so thoroughly simulated and engineered, and the previous generation of machines got so close to break-even, that surely the long-sought goal can’t be far away? Let’s first consider the case of inertial confinement fusion and NIF.

At the time of writing it is still anyone’s guess whether NIF will ever be made to work. Many believe that it is just a matter of twiddling all the knobs until the right combination of parameters is found and suddenly everything will gel. But Livermore’s choices of neodymium glass lasers and indirect drive targets have always been controversial and critics say the whole field needs a complete change of direction, such as to krypton-fluoride gas lasers – which are naturally short wavelength – and simple and cheap direct-drive targets.

NIF cannot escape from the fact that its primary goal isn’t fusion energy but simulating nuclear explosions to help maintain the weapons stockpile. Nevertheless, when the machine was inaugurated in 2009 the press coverage focused almost exclusively on fusion energy. That was no accident. During the preceding years NIF’s managers felt which way the political wind was blowing: while maintaining the nuclear stockpile was still important, so was climate change and energy independence. If they were to maintain support for NIF from the public and Congress they had to broaden its appeal. Hence the emphasis on energy, not nukes.

NIF director Ed Moses and his team expected that when they achieved ignition it would spark a surge of interest in fusion energy and – hopefully – new money. They wanted to be ready to ride that wave of enthusiasm so, in traditional fashion, they started to plan for the reactor that would come next, one designed for energy production, not science or stockpile stewardship. Taking the achievement of ignition – which they expected soon – as their starting point, they sought to establish how fast and how cheaply they could build a prototype laser fusion power plant. They adopted a deliberately low-risk approach, sticking as closely as possible to NIF’s design in order to cut down on development time. All components had to be commercially available now or in the near future. They consulted with electricity utility companies about what sort of reactor they would like – something fusion researchers had never really done before. They called this dream machine LIFE, for laser inertial fusion energy.

The first thing to tackle was the laser. NIF’s laser, though a wonder, is totally unsuitable for an inertial fusion power plant. It’s a single monolithic device, prone to optical damage, and could only be fired a few times a day. The NIF team didn’t want to abandon neodymium glass lasers altogether – it was the technology that they knew and understood. But they could get rid of the temperamental and power-hungry xenon flashlamps that pump the laser glass full of energy. The ideal alternative would be solid-state light emitting diodes, similar to those used in LED TV screens and the latest generation of low-energy light bulbs. They are more efficient than flashlamps, power up more quickly and are less prone to damage. Electronics companies can make suitable diodes today but they are so expensive that they would make a laser power plant uneconomic. However the NIF researchers calculated that, like most electronic components, their price will go down rapidly and, by the time LIFE needs them, they will be affordable.

It also wouldn’t do to have LIFE relying on a single laser to drive the whole power plant. If any tiny thing went wrong the entire plant would have to be shut down for repairs. So instead of a single laser split into 192 beams, LIFE would have twice as many beams (384) with each produced by its own laser. The plan was for the lasers to be produced in a factory as self-contained units – essentially a box big enough to keep a torpedo in. The operators of the plant wouldn’t need to know anything about lasers; the units would be delivered by truck and the operators would just slot them into place and turn them on. The plant would have spares on site and if one laser failed it could be pulled out and be replaced without stopping energy production.

Livermore researchers also had a novel solution to one of the big questions of fusion reactor design: neutron damage. Nuclear engineers are working hard to find new structural materials for fusion reactors that can withstand a constant barrage of high-energy neutrons for years on end. But the Livermore team didn’t want to have to wait for new materials to be developed and tested. They opted for a simpler solution for LIFE: make the reaction chamber replaceable. In their design, the only thing that physically connects to the reaction chamber is the pipework for cooling fluid. After a couple of years this can be disconnected and the entire reaction chamber wheeled out on rails to an adjacent building, then a fresh chamber can be wheeled in. The old chamber would need a few months to ‘cool off’ so that its radioactivity drops to a safer level, then be dismantled and buried in shallow pits.

It was a bold plan and, because of its policy of relying on known technology and off-the-shelf components, the team calculated that, once ignition on NIF is achieved, they could build a prototype LIFE power plant in just twelve years.

Livermore wasn’t the only organisation thinking ahead to what would happen after ignition was achieved. The US Department of Energy and in particular its science chief Steven Koonin realised that when that breakthrough came the White House, Congress, other organisations and the public would start asking questions, such as what has the US been doing in inertial fusion in recent years? And what is it going to do now to progress from scientific breakthrough to commercial power plant? The answer to the first question was: not very much. During the construction of NIF and afterwards, other research on inertial confinement fusion was starved of funding. The University of Rochester lab got some money for its supporting role to NIF but research at the national laboratories and elsewhere was minimal. However that didn’t mean that those few researchers working in the field didn’t have ideas about what to do next.

Koonin was very familiar with NIF, having been drafted onto various panels over the years to assess it and other fusion projects. What Koonin needed now was a broad survey of the state of the whole field of inertial fusion research, so again the National Academy of Sciences was called on to investigate. The NAS assembled a panel of experts from universities, national labs and industry. Over the course of a year they visited many of the main facilities involved in inertial confinement fusion research and heard dozens of presentations. Their first port of call outside Washington was Livermore, where NIF researchers explained their plans for the LIFE power plant.

Next they visited the Sandia National Laboratory in Albuquerque, New Mexico. Researchers there had been working on inertial confinement fusion using, not lasers, but extremely powerful current pulses to crush a target magnetically. Their technique uses the pinch effect, the same phenomenon that Peter Thonemann stumbled upon in the 1940s and caused him to travel from Australia to Oxford to start building fusion reactors. The pinch effect causes a flowing electric current to be squeezed by its own magnetic field inwards towards its middle. Thonemann’s devices, along with all tokamaks, use the pinch effect to squeeze a flowing plasma, compressing and heating it. The researchers at Sandia use the pinch in a different way. They confine fusion fuel in a cylindrical metal can and then pass a huge current down the outer walls of the can. The pinch effect squeezes the walls of the can in towards the centre and so crushes the can. If the current pulse is big enough and fast enough then the crushed can compresses and heats the fuel inside enough to spark fusion.

To do this requires an enormous pulse of electric current and so Sandia researchers use the Z Machine which can store charge in huge banks of capacitors and then release it quickly. The 37m wide machine can create current pulses of 27 million amps lasting a ten-millionth of a second. In 2013 the Sandia team will start trying to achieve fusion using the Z Machine and simulations suggest they might be able to reach break-even. But to really put the idea to the test and produce genuine energy gain they reckon that they need a new machine – Z-IFE – able to produce current pulses up to 70 million amps.

As a potential power source, the Z Machine has the drawback that it takes longer to charge up than a laser and the metal can targets are bigger and more cumbersome than the fuel capsules of laser fusion. So the Sandia scheme would work at a slower repetition rate – 1 shot every 10 seconds. To make that rate economic, each explosion would have to be bigger to generate more power, so this scheme has the added challenge of developing a reaction chamber that can withstand a much bigger blast and be ready for the next one every 10 seconds. Nevertheless, the team believes that their sledgehammer approach of big pulses, big bangs and slow repetition is much simpler to implement than the high speed and pinpoint accuracy required for laser fusion energy.

The NAS panel’s next port of call was Rochester and the Laboratory for Laser Energetics. Here the main topic of discussion was other ways to achieve fusion with lasers that avoid some of the drawbacks of NIF’s indirect drive using neodymium-glass lasers. Researchers at Rochester and the Naval Research Laboratory in Washington, DC, argue that direct drive would be a better approach for laser fusion energy generation. Shining the laser directly onto the fuel capsule avoids the energy lost in the hohlraum, so a less powerful laser would be needed. It would be simpler too, since instead of having to construct a target with a fuel capsule carefully positioned inside a gold or uranium can for each shot, only the fuel capsule would be needed. Since future laser fusion power plants are expected to have to perform around 10 shots per second they will consume slightly less than a million targets a day, so simplicity – and just as importantly, low cost – will be a key factor.
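The target-consumption figure follows directly from the repetition rate. As a rough sketch, assuming a plant runs around the clock and uses one target per shot (the function name is illustrative), the daily count for a laser plant can be set beside that for the slower Sandia scheme of one shot every 10 seconds:

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

def targets_per_day(shots, per_seconds):
    """Targets used in a day at `shots` shots every `per_seconds` seconds,
    assuming continuous operation and one target per shot."""
    return shots * SECONDS_PER_DAY // per_seconds

laser_targets = targets_per_day(10, 1)    # laser plant: 10 shots per second
pinch_targets = targets_per_day(1, 10)    # Sandia scheme: 1 shot every 10 seconds

print(f"Laser plant: {laser_targets:,} targets a day")
print(f"Pinch plant: {pinch_targets:,} targets a day")
```

On these assumptions the laser plant needs 864,000 targets a day, a hundred times as many as the pinch scheme.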

Livermore researchers abandoned direct drive because you need laser beams of very high quality to make it work; any imperfections in the beam and the capsule will not implode symmetrically. But Rochester and the Naval Research Lab stuck at it and developed ways to smooth over beam imperfections. They have tested these techniques using Rochester’s Omega laser but it doesn’t have the power of NIF so they have not been able to test direct drive to ignition energies.

The Naval Research Lab team have also made another innovation. They have developed a laser that emits light that is already ultraviolet, so it doesn’t need to be converted to a shorter wavelength as the light in Omega and NIF does, which avoids the energy loss of conversion using KDP crystals. Instead of using neodymium-doped glass as the medium to amplify light, their laser uses krypton-fluoride gas which is pumped full of energy using electron beams. The Naval researchers have built demonstration models that have a high repetition rate but low power, and ones that have high power but are capable only of single shots. They haven’t yet won funding to develop a high-power, high-repetition version ready for fusion experiments.

The NAS panel heard about other approaches as well, such as imploding targets using beams of heavy ions. Accelerating ions is a much more energy-efficient process than making a laser beam and there is no problem with creating a high repetition rate. Focusing also uses robust electromagnets rather than delicate lenses which can get damaged by blasts or powerful beams. But creating beams with the right energy and sufficiently high intensity to implode a target is still a challenge. Researchers at the Lawrence Berkeley National Laboratory near San Francisco have built an accelerator to investigate those challenges but the project is desperately short of funds.

Then there is another laser-based technique called fast ignition. This separates out the two functions of the laser pulse – compressing the fuel and heating it to fusion temperature – and uses a different laser for each. In a conventional laser fusion facility these two jobs are achieved by carefully crafting the shape of the laser pulse during its 20-nanosecond length: the first part of the pulse steadily applies pressure to implode the capsule and compress the fuel, then an intense burst at the end sends a shockwave through the fuel which converges on the central hotspot, heating it to the tens of millions of °C required to ignite. Getting that pulse shape right is complicated and requires a very high-energy laser. By separating the two functions a fast ignition reactor can make do with a much lower-energy driver laser because it only has to do the compression part. Once the implosion has halted and the fuel has reached maximum density, a single beam with a very short but very high intensity pulse is fired at the fuel and this heats some of it to a temperature high enough to spark ignition.

Researchers at Osaka University in Japan have pioneered the fast ignition approach and have been joined recently by Rochester’s Omega laser which was upgraded with a second laser for fast ignition experiments. Although these efforts are making progress towards understanding how fast ignition works, neither is powerful enough to achieve full ignition. Researchers in Europe are keen to join this hunt and have drawn up detailed plans for a large fast ignition facility that would demonstrate its potential for power generation. Known as the High Power Laser Energy Research facility (HiPER), it would have a 200-kilojoule driver laser – one-tenth the energy of NIF’s – and a 70-kilojoule heater laser. Calculations suggest HiPER should be able to achieve a much higher energy gain than NIF but its designers are waiting to finalise the design. They want to wait for the achievement of ignition at NIF in case it provides any useful lessons.

The problem for all these possible alternatives – with the possible exception of Rochester’s Omega laser – is that they have been starved of funding. For the past two decades while the Department of Energy has been pouring money into NIF other approaches have been neglected. The fear for the proponents of these alternative approaches was that history was about to repeat itself: Livermore’s design for the LIFE reactor is very thorough and very persuasive; would it persuade the NAS panel that the bulk of any future ICF funding should be channelled straight to Livermore?

But the panel was not seduced. Its mandate had been to come up with a future research programme on the assumption that ignition at NIF had been achieved. Ignition’s stubborn refusal to cooperate at NIF had knocked some of the lustre off the plans for LIFE – it no longer seemed an obvious shoo-in for the next big inertial fusion project. The panel’s report, released in February 2013, said that many of the technologies involved in inertial fusion are at an early stage of technological maturity and that it is too early to pick which horse to back. It suggested a broad programme of research that would provide the information needed to narrow the field in the future. This was good news for the alternative approaches, but with a faltering US economy forcing cutbacks to many areas of research funding and with Livermore researchers seemingly losing their way on the road to ignition, prospects for a generous research programme do not look good.

With the construction of ITER going at full throttle and with NIF working towards ignition, is fusion closing in on the big breakthrough that researchers have been dreaming of for more than six decades? Many thousands of those researchers would like to believe so but there have been false dawns before: Ronald Richter’s Argentine fusion reactor that never was; ZETA and all the enthusiasm generated by the 1958 Geneva conference; the astonishing temperatures achieved by the first Russian tokamaks in 1968; and the heat generated by TFTR and JET in the 1990s which got close to break-even but didn’t quite get there. Each time, the press has excitedly published accounts of the promise of fusion for solving the world’s energy problems but then unexpected technical problems, lack of funding or simply the slow pace of research has meant that fusion has faded again from the public consciousness. Many have grown cynical that fusion will ever deliver on its promises. Remember the jibe: fusion is the energy of the future, and always will be.

But there are legitimate concerns about whether fusion will ever provide an economic source of energy – even if high gain is achieved – and those concerns are usually expressed by engineers. They argue that fusion scientists’ fixation on developing a reactor that will simply produce more energy than it consumes ignores the very serious hurdles such a reactor would still have to overcome before it could compete with existing sources of energy.

In 1994 the Electric Power Research Institute (EPRI) – the R&D wing of the US electric utility industry – asked a panel of industry R&D managers and senior executives to draw up a set of criteria that a fusion reactor would have to meet in order to be acceptable to the electricity industry. They came up with three. The first is economics: to compensate for the increased risk of adopting a new technology, a new fusion plant would need to have lower life-cycle costs than competing technologies at the time. The second criterion was public acceptance: it would need to be something the public wanted and had confidence in. Finally the industry panel would want fusion to have a simple regulatory approval process: if the nuclear regulator required a lengthy investigation of the design or required that the reactor be sited far from population centres or be encased in a containment building, fusion’s prospects could be seriously damaged.

One of the first to question the viability of fusion power generation was Lawrence Lidsky, a professor of nuclear engineering at the Massachusetts Institute of Technology and an associate director of its Plasma Fusion Center. By 1983 Lidsky had been working in plasma physics and reactor technology for twenty years and had formed some serious concerns about fusion’s future. Colleagues at the Plasma Fusion Center were reluctant to talk about it, so Lidsky wrote an article for MIT’s magazine Technology Review entitled ‘The Trouble with Fusion.’ Lidsky argued that, because of the inescapable physics of deuterium-tritium fusion, any fusion power plant is going to be bigger, more complex and more expensive than a comparable nuclear fission reactor – and so would fail EPRI’s economic and regulatory criteria – and that complexity would make it prone to small breakdowns – failing the public acceptance criterion.

Lidsky’s first criticism was with the choice of the fuel itself. When the fusion pioneers of the 1940s and ’50s realised how difficult it was going to be to get to temperatures high enough to cause fusion, they naturally sought the fuel that would react the most easily – a mixture of deuterium and tritium. Reacting any other combination of light nuclei, such as deuterium and deuterium or hydrogen and helium-3, was just not conceivable with the technology of the day. So D-T became the focus of fusion research and scientists chose to ignore the fact that the reaction produces copious quantities of high-energy neutrons that would be a major headache for any working power reactor. Fission reactors produce neutrons too but the ones in a fusion reactor have a higher energy and so penetrate the structure of the reactor itself where they can knock atoms in the steel out of position. Over years of operation this neutron bombardment makes the reactor radioactive and weakens it structurally, which limits its life and means that any maintenance or repair is difficult or impossible to carry out with human beings.

Key parts of the reactor could instead be made with other metals that are more resistant to neutrons, such as vanadium, but that would increase the cost. Fusion scientists have long been aware of this issue and have sought other more exotic neutron-resistant materials but such efforts have always played second fiddle to the drive towards break-even. In any event, testing the materials would require a very intense source of neutrons and today no such source exists. US researchers have proposed building fusion reactors that are optimised to produce lots of neutrons rather than energy and one of these could be used as a testbed for new materials. But with the country’s fusion budget severely squeezed it was never a high priority.

Another option is to produce neutrons using a high-intensity particle beam in a purpose-built accelerator facility. The agreement between the European Union and Japan over where to build ITER, the so-called broader approach, provided money to start work on such a test rig, dubbed IFMIF (International Fusion Materials Irradiation Facility), but at the time of writing this project was still working on a design and testing technology – far from actually bombarding any materials with neutrons. So the effort to find suitable materials for a fusion reactor lags behind the rest of the fusion enterprise and is unlikely to provide any useful data for ITER, though it could for ITER’s successors.

Lidsky also contended that a D-T fusion reactor would be unavoidably large and complex and therefore unacceptable to the electricity industry. For a start, the reactor must cope with a range of temperatures that drops over the distance of a few metres from roughly 150,000,000°C – hotter than anywhere else in our solar system – to -269°C, a few degrees above absolute zero, which is the operating temperature of the superconducting magnets. Managing these temperature gradients and heat flows will be a major challenge. And, he argued, a power-producing D-T reactor cannot avoid being large and therefore expensive. History backs up his assertion: as tokamaks have developed they have got bigger and bigger. The plasma vessel of ITER is 19m across and this is just the start: outside it are several metres more including the first wall – the initial line of defence against heat and neutrons – the ‘blanket’ through which liquid lithium will pass so that it can be bred into tritium by the neutrons, a thermal shield to protect the super-cold magnets from the heat of the reactor and finally the magnets themselves. Altogether it is a huge structure, considerably larger than the core of a fission reactor, and in power engineering, size = cost. Anyway, ITER is not designed to generate electricity; the demonstration power reactor that is proposed to come after it, known as DEMO, will by some estimates be 15% larger in linear dimensions.

If fusion research continued on its current course, Lidsky concluded in 1983, ‘the costly fusion reactor is in danger of joining the ranks of other technical “triumphs” such as the zeppelin, the supersonic transport and the fission breeder reactor that turned out to be unwanted and unused.’ His Technology Review article was followed by an adapted version in the Washington Post entitled ‘Our Energy Ace in the Hole Is a Joker: Fusion Won’t Fly.’ These public criticisms caused a furore in fusion research and led to a war of letters between Lidsky and PPPL director Harold Furth lasting many months. Shortly after the articles were published, Lidsky was stripped of his associate director title at the Plasma Fusion Center and he became a pariah in the fusion community.

But Lidsky’s message was not entirely negative. He acknowledged the attractions of limitless fuel and minimal radioactive waste from fusion, but in essence he thought that fusion had taken a wrong turn and needed to start again by focusing on a different reaction that produces no neutrons: the fusion of hydrogen and boron-11. This seems ideal, but boron has five times the positive charge of hydrogen, making fusion much harder to achieve. Although some schemes for fusing hydrogen and boron have been proposed – including using Sandia’s Z Machine – none have yet been tested.
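The effect of that extra charge can be sketched with the standard textbook expression for the electrostatic (Coulomb) barrier between two nuclei. This is a rough illustration rather than anything from Lidsky’s article: the symbols are introduced here, and the separation $r$ at which the nuclei touch is treated as roughly comparable for the two fuels, which is only approximately true.

```latex
% Coulomb barrier between nuclei of charge Z_1 e and Z_2 e at separation r
E_C \approx \frac{Z_1 Z_2 \, e^2}{4 \pi \varepsilon_0 \, r}

% Deuterium-tritium: Z_1 Z_2 = 1 \times 1 = 1
% Hydrogen-boron-11: Z_1 Z_2 = 1 \times 5 = 5
\frac{E_C(\mathrm{p}\text{-}{}^{11}\mathrm{B})}{E_C(\mathrm{D}\text{-}\mathrm{T})} \approx 5
\quad \text{(for comparable } r\text{)}
```

On this crude estimate the nuclei in a hydrogen-boron plasma must overcome a repulsion several times stronger than in deuterium-tritium, which is why far higher temperatures are needed.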

Although these concerns were expressed three decades ago, many of them hold true today. Better materials and techniques exist now, but the basic physics remains the same. Fusion enthusiasts concede that there are some major challenges ahead but it is not that they can’t be solved; they just haven’t been solved yet. Just because something is hard, it doesn’t mean we shouldn’t attempt it. But even many of the most ardent fusion enthusiasts concede that commercial fusion power is unlikely before 2050. That view is supported by another more recent report from EPRI which sought to find out if any fusion technologies existing now might be useful to the power industry in the near term. Published in 2012, it examined magnetic as well as inertial confinement fusion approaches and some of the alternative schemes but concluded that all were in an early stage of technical readiness and none would be ready for use in the next thirty years. It suggested that fusion research efforts ought to pay more attention to the problem of generating electricity instead of fixating on the scientific feasibility of producing excess energy.

So is fusion the energy dream that is destined never to be fulfilled? Fusion is often compared to fission, both having been born out of the postwar enthusiasm for all things technological. Fission proved its worth astonishingly fast: the splitting of heavy elements was discovered in 1938; the first atomic pile produced energy in 1942; and the first experimental electricity-producing plant started in 1951. Thirteen years from discovery to electricity. But the comparison with fission is misleading: it hardly takes any energy at all to cause a uranium-235 nucleus to split apart and release some of its store of energy; and as a fuel, solid uranium is easy to handle. Fusion, in contrast, requires temperatures ten times those in the core of the Sun and its fuel is unruly plasma. When fusion research began, scientists had little real knowledge of how plasmas behave and, although great strides have been taken, there is still a lot we don’t know about plasma physics.

Fission had another advantage in its early years: it was born into the breakneck wartime effort to develop an atomic bomb. The early atomic reactors were essential to that effort because they produced plutonium, so money was poured into their development. After the war, the rapid research continued as military planners saw fission reactors as a perfect power source for submarines. In fact, the light-water reactor, which has come to dominate the nuclear power industry, is little more than an adapted submarine power plant and, with the benefit of hindsight, wasn’t the best choice for land-based electricity generation. All of that development would probably have taken decades if carried out by civilian scientists in peacetime.

Fusion was also born in the military research labs of Britain, the United States and the Soviet Union and at first was kept classified for the same reason as fission: because it could be used to create plutonium. But as soon as military planners realised it would be no easier than using a fission reactor, they lost interest in controlled fusion. Since then, fusion research has struggled along, buffeted by cycles of feast and famine as government enthusiasm for alternative sources of energy has waxed and waned. It has never enjoyed clear enthusiastic support from government such as the Apollo programme received following President Kennedy’s pledge to put a man on the Moon. This is one reason for fusion research’s obsession with achieving break-even. A clear demonstration that fusion can generate an excess of energy will grab the headlines and generate a wave of excitement. Scientists and engineers will then be clamouring to join the ranks of fusion researchers and government funding will come pouring in. That’s the hope, anyway.

Some technological dreams just do take time to come to fruition. Look at the history of aviation. The Wright brothers’ flight in 1903 wasn’t the start of the process; aviation pioneers had been struggling to get aloft for decades before that. The first twelve-second flight at Kitty Hawk was just the demonstration of feasibility – analogous to break-even in fusion, perhaps? Orville and Wilbur had no idea how their invention would develop next. They couldn’t possibly have imagined the huge jet airliners of today, let alone the spacefaring pleasure craft of Virgin Galactic. But those developments didn’t happen instantly: it took more than four decades, and the accelerated development of two world wars, before jet engines and pressurised cabins became the norm. Fusion is still at the wooden struts, wire and canvas stage of development. Future fusion power plants may look nothing like a puffed up version of ITER.

The cost and time that it will take to make fusion work has to be balanced against the enormous benefits it would bring. Assuming that all the engineering hurdles described by the likes of Lidsky can be overcome, what would a world powered by fusion be like? The current partners in ITER represent more than half the world’s population, so the technological know-how to build fusion reactors will be widespread – there will be no monopoly. Nor will any nation have a stranglehold over fuel supplies. Every country has access to water. There will be no more mining for coal or digging up tar sands; no more oil rigs at sea or in fragile habitats on land; no more pipelines scything across wildernesses; and no more oil tankers or oil spills. The geopolitics of energy – with all the accompanying corruption, coups and wars of access – will disappear. Countries with booming economic growth, such as China, India, South Africa and Brazil, will no longer have to resort to helter-skelter building of coal-fired and nuclear power stations. It is highly unlikely that fusion power will be ‘too cheap to meter,’ as US Atomic Energy Commission chief Lewis Strauss claimed in 1954, but it won’t damage the climate, it won’t pollute and it won’t run out. How can we not try?

Getting there will not be easy and it won’t happen unless society at large and its governments, as well as fusion scientists, want it. Lev Artsimovich, the fusion pioneer who led the Soviet effort for more than twenty years, was once asked when fusion energy would be available. He replied: ‘Fusion will be ready when society needs it.’