We are very familiar with the idea that humans are everywhere; that wherever you go in the world you will probably find people there already. We are an unusual species in that we have a near-global distribution. And although people around the world may look quite different from each other, and speak different languages, they can nevertheless recognise each other as distant cousins.

But where and when did our species first appear? What are the essential characteristics of our species? And how did people end up being everywhere? These are rephrasings of fundamental questions. Who are we? What does it mean to be human? Where do we come from? For thousands of years, such questions have been explored through philosophy and religion, but the answers now seem to lie firmly within the grasp of an empirical approach to the world and our place within it. By peering deep into our past and dragging clues out into the light, science can now provide us with some of the answers to the questions that people have always asked.

They are questions that have always captivated me. As a medical doctor and anatomist (I lecture in anatomy on the medical course at Bristol University), I am fascinated by the structure and function of the human body, and the similarities and differences between us and other animals. We are certainly apes; our anatomy is incredibly similar to that of our nearest relations, chimpanzees. I could put a chimpanzee arm bone, or humerus, in an exam for medical students and they wouldn’t even notice that it wasn’t human.

But there are obviously things that mark us out – not as special creations, but as a species of African ape that has, quite serendipitously, evolved in ways that enabled our ancestors to survive, thrive and expand across the whole world. There are aspects of anatomy that are unique to us: unlike our arms, our spine, pelvis and legs are very different from those of our chimp cousins, and no one would mistake a human skull for that of another African ape. It’s a very distinctive shape, not least because we have such enormous brains for the size of our bodies. And we use our big brains in ways that no other species appears to.

Unlike our closest ape cousins, we make tools and manipulate our environments to an extent that no other animal does. Although our species evolved in tropical Africa, this ability to control the interface between us and our surroundings means that we are not limited to a particular environment. We can reach and survive in places that would seem quite alien to an African ape. We have very little in the way of fur, but we can create coverings for our bodies that help to keep us cool in very hot climates and warm in freezing temperatures. We make shelters and use fire for warmth and protection. Through planning and ingenuity, we create things that can carry us across rivers and even oceans. We communicate, not just through complicated spoken languages but through objects and symbols that allow us to create complex societies and pass on information from generation to generation, down the ages. When did these particular attributes appear? This is a key question for anyone seeking to define our species – and to track the presence of our ancestors through the traces of their behaviour.

The amazing thing is – it is possible to find those traces, those faint echoes of our ancestors from thousands and thousands of years ago. Sometimes it could be an ancient hearth, perhaps a stone tool, that shows us where and how our forebears lived. Occasionally we find human remains – preserved bones or fossils that have somehow avoided the processes of rot and decay and fragmentation to be found by distant descendants grubbing around in caves and holes in the ground, in search of the ancestors.

I’ve always been intrigued by this search, by the history that can be reconstructed from the few clues that have been left behind. And at this point in time, we are very lucky to have evidence emerging from several different fields of science, coming together to provide us with a compelling story, with a better understanding of our real past than any humans have ever had before. From the study of bones, stones and the genes within our living bodies comes the evidence of our ancestors, of who we are, of where we came from – and of how we ended up all over the world.

When the BBC offered me the opportunity to follow in the footsteps of the ancients, to delve into the past, to meet people, see artefacts and fossils for myself and visit the places that seem most sacred to those searching for real meanings, I couldn’t wait to get started. I took a year off from teaching anatomy and looking at medieval bones in the lab, and set off on a worldwide journey in search of our ancestors.

The Human Family Tree

My journey would take me all around the world, starting in Africa and then following in the footsteps of our ancestors into Asia and around the Indian coastline, all the way to Australia, north into Europe and Siberia, and, eventually, to the last continents to be peopled: the Americas.

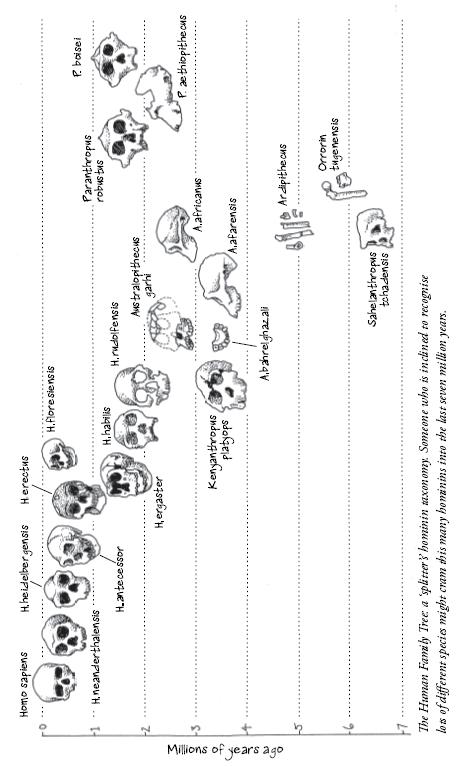

Modern humans are just the latest in a long line of two-legged apes, technically known as hominins. We’ve grown used to thinking of ourselves as rather special, and a quick glance at the human family tree shows us that we’re in a rather unusual position at the moment, being (as far as we know) the only hominin species alive on the planet. Going back into prehistory, the family tree is quite bushy, and there were often several species knocking around at the same time. By 30,000 years ago, it seems there were only two twigs left on the hominin family tree: modern humans and our close cousins, the Neanderthals. Today, only we remain.

The ancestral home of hominins is Africa, although some species, including our own, have made it out into other continents at various times. Whether we actually met up with our ‘cousins’ on our ancient wanderings is something I’ll be investigating in this book. Certainly, it seems that there was some overlap in Europe, and that for a good few thousand years modern humans and Neanderthals were sharing the continent.

It may sound strange but it’s actually quite difficult to know exactly how many different species of ancient hominins there were. It’s something that prompts a huge amount of debate. The world of palaeontology – the branch of science that peers into the past to examine extinct and fossil species – is inhabited by ‘lumpers’ and ‘splitters’. Lumpers use very wide definitions of species to group lots of fossils together under one species name. Splitters, as the term suggests, divide them up into lots of different species. But which group is right? It’s hard to know, and this is one of the debates that enlivens this science. Both lumpers and splitters are looking at the same evidence – but making different interpretations.

Trying to decide if two populations really are different enough from each other to be labelled as separate species is more difficult than it seems. Some species can even interbreed and produce fertile, hybrid offspring. But, basically, species are populations that are diagnosably different from each other, in terms of their genes or their morphology (the way their bodies are constructed) – or both.

With long-dead fossil animals, all palaeontologists have to go on is the skeleton, and sometimes only fragments of bones. So the problem of species definition becomes even more difficult. By looking at the skeletons of living animals, palaeontologists can get an idea of the range of morphological variation in a species (because, even within a species, animals come in a variety of shapes and sizes). They can also measure the level of morphological difference between species. This gives them a benchmark for how similar skeletons are within a species, and how different they need to be to be classed as separate species. Then the palaeontologist can apply that standard to sort fossil animals into species. It’s a knotty problem, and it’s not really that surprising that different palaeontologists, each of whom may have spent a lifetime studying the fossils, can reach different conclusions.

Indeed, some palaeontologists shy away from talking about ancient ‘species’ at all. The eminent physical anthropologist William White Howells suggested we call these groupings ‘palaeodemes’ (‘ancient populations’) instead. But we can recognise evolving lineages in the fossil record, and giving distinct populations a genus (e.g. Homo) and species (e.g. sapiens) name provides a useful handle and helps when we’re trying to reconstruct family trees.1

Within palaeoanthropology, the discipline that looks at fossil hominins in particular, taxonomies range from extreme lumping, with some researchers calling all hominins from the last million years Homo sapiens, to extreme splitting, with other scientists finding room for eight or more species. Chris Stringer, palaeoanthropologist at the Natural History Museum in London, has recognised four species during and since the Pleistocene (in the last 1.8 million years): Homo erectus, Homo heidelbergensis (the putative common ancestor of modern humans and Neanderthals), Homo sapiens and Homo neanderthalensis,2 although the recent discovery of the tiny ‘Hobbit’ skeletons in Indonesia requires us to make room for Homo floresiensis as well.

Throughout this book I use the word ‘human’ in an inclusive but nevertheless precise sense to mean any species in the genus Homo, whereas ‘modern human’ refers to our own species, Homo sapiens. In the same way, ‘Neanderthals’ are Homo neanderthalensis.

Each one of these human species made it out of Africa, to Eurasia. Homo erectus got all the way to Java and China by about one million years ago. About 800,000 years ago, another lineage formed and expanded: Homo heidelbergensis fossils have been found in Africa and Europe. The European branch of this population went on to give rise to Neanderthals, about 300,000 years ago. Modern humans sprang from the African population around 200,000 years ago – and their descendants spread across the globe.

This is a version of events that is now accepted by most palaeoanthropologists, and is supported by the weight of fossil evidence and genetic studies. It is known in the jargon as the ‘recent African origin’ or ‘Out of Africa’ model. But although this is now the majority view, it is not the only theory about how modern humans evolved and ended up everywhere. Some palaeoanthropologists still argue that archaic species like Homo erectus and heidelbergensis, having spread from Africa into Asia and Europe, then ‘grew up’ into modern humans in all of these continents. At the end of the twentieth century, a great debate raged over which version, the recent African origin or ‘Regional Continuity’ (also called ‘Multiregional Evolution’), was the more accurate representation of events. Since then, the evidence – genetic, fossil and climatological – has stacked up quite impressively in favour of a recent African origin,3, 4 but there is still a minority of scholars who argue for multiregionalism. There are also some palaeoanthropologists who, while accepting a recent African origin, suggest that modern humans may have interbred with other archaic species as they spread into other continents, for instance mixing with the Neanderthals in Europe.2

Reconstructing the Past

Generally speaking, someone who studies ancient human ancestry is called a palaeoanthropologist. Palaeoanthropology is a sphere of enquiry that essentially started off with fossil-hunting, but which today draws on many other disciplines (so that, for example, people who now end up contributing to palaeoanthropology may come from fields as disparate as genetics and climate science).

When Charles Darwin wrote The Descent of Man in 1871, not a single early human fossil had been discovered, but he nonetheless tentatively suggested that Africa might just be the homeland of the human species:

In each great region of the world the living animals are closely related to the extinct species of the same region. It is, therefore, probable that Africa was formerly inhabited by extinct apes closely allied to the gorilla and chimpanzee; and as these two species are now man’s nearest allies, it is somewhat more probable that our early progenitors lived on the African continent than elsewhere.

Then fossils started to appear. For a long time, the study of fossils formed the basis of palaeoanthropology, supplemented with comparisons with the anatomy of living humans and our closest relatives, the African apes: chimpanzees and gorillas. Scientists in this specific field might call themselves physical or biological anthropologists. Much of their work is focused on bones; after all, that’s normally all that is preserved in fossils.

As well as looking at the physical remains of our ancestors, palaeoanthropology also draws on the clues left behind – the traces of the material culture of past people, in other words archaeology. Palaeolithic archaeologists are, by necessity, experts in recognising and interpreting stone tool types. Some engage in experimental archaeology, testing out methods of making and using ancient tools and other cultural items. The insights from such practical work can be profound.

The earth itself contains ‘memories’ of past climate and geography held in sediments and layers of ice. Unlocking these secrets has armed palaeoanthropologists with powerful tools for reconstructing the human family tree, and for understanding the environments in which our ancestors lived. Geologists, who understand how landscapes are formed, how sediments are laid down and how caves are made, now join the fray, and dating experts often come from this field too. The study of both fossils and archaeological remains has benefited hugely from advances in dating techniques, meaning that we can now pin fairly precise ages on clues from the deep past. The study of climate change in the past is called palaeoclimatology.

As well as digging for physical remains in the ground, there are clues to our ancestry held in the DNA (deoxyribonucleic acid, the stuff of life) of everyone alive today. Geneticists involved in palaeoanthropology often come from a background of medical genetics – where genes responsible for particular diseases or conditions are tracked down. But differences in our genes can also be used to reconstruct past histories. An exciting new development is the possibility of obtaining ancient DNA from fossil bones – providing another way of approaching the species question.

Linguists have also tried to reconstruct human histories, by looking at language families. However, most linguists feel that languages cannot be reliably traced back more than 10,000 years, although, as we shall see, there are some interesting insights emerging from studies combining linguistics with genetics.

In my journey around the world I have visited many communities of indigenous people in different continents. Many of those I have met have been given different names at different times by outsiders, some of which carry racist or, at the very least, derogatory overtones. I have always tried to use terms to describe people that they themselves are happy with, which is why, for example, in the first part of ‘African Origins’, I refer to the people of the Kalahari as ‘Bushmen’, a name they use in English to refer to themselves. Similarly, people of mixed European and sub-Saharan African ancestry in South Africa refer to themselves as ‘coloureds’; the Evenki of Siberia call themselves by that name, and the same goes for the Semang and Lanoh tribes of Malaysia, the Native Americans of Canada and North America, and the Aboriginal Australians.

The Ice Age

This story of ancient human migrations across the world is set almost entirely within the later stages of what geologists call the Pleistocene period, or the Ice Age. In its entirety, the Pleistocene lasted from 1.8 million years ago to 12,000 years before the present. Although our species appears only in the late Pleistocene, by the end of that period modern humans had made their way into every continent (except Antarctica). In some chapters we will also dip our toes into the Holocene, the period that followed the Pleistocene or Ice Age, and in which we’re still living today.

As we look deep into the past, over vast stretches of time, the apparent stability of geography and climate that we perceive as individuals melts away and we see instead a picture of changing climate, with sea levels and whole ecosystems in flux. The population expansions and migrations of our ancient ancestors were governed by climate change and its effect on the ancient environment. Reconstructing past climates, or palaeoclimates, is an exciting field of science that draws on ancient clues that have been ‘frozen in time’ as well as our understanding of the relationship between the earth and the sun.

The earth’s orbit is not a perfect circle, and so there are times (spanning thousands of years) when the earth is nearer the sun, and warmer, and other times when it is further away, and consequently colder. These cycles last around 100,000 years. As well as this, the tilt of the earth’s axis varies, on a 41,000-year cycle, affecting the degree of difference between the seasons. The earth also wobbles a little around its axis, on a 23,000-year cycle. There are times when the factors affecting tilt and orbit work together to create exceptional chilliness – a glacial period. At other times, the factors come together to produce a very warm period, called an interglacial. This theory was developed by the Serbian mathematician Milutin Milankovitch in the early twentieth century.5, 6
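The idea of cycles ‘working together’ can be made concrete with a deliberately crude toy. The Python sketch below is not a model of how much sunlight the earth actually receives: it simply represents each of the three cycles mentioned above as a cosine wave with the stated period and, as an illustrative assumption, gives all three equal weight and no phase offset. Adding them produces a combined index whose peaks are tallest where the cycles happen to line up. The function names are hypothetical.

```python
import math

# Approximate periods (years) of the three cycles described in the text:
# orbital shape (~100,000), axial tilt (~41,000) and wobble (~23,000).
# ASSUMPTION: equal weights and zero phase offsets, purely for illustration.
# This is a toy index, not a calculation of real solar forcing.
PERIODS_YEARS = (100_000, 41_000, 23_000)


def toy_index(years_ago: float) -> float:
    """Sum one unit cosine per cycle; the result always lies between -3 and +3."""
    return sum(math.cos(2 * math.pi * years_ago / p) for p in PERIODS_YEARS)


def local_peaks(start: int, end: int, step: int = 1_000) -> list[tuple[int, float]]:
    """Return (time, index) for every sampled point that is a local maximum."""
    times = list(range(start, end + 1, step))
    values = [toy_index(t) for t in times]
    return [
        (times[i], values[i])
        for i in range(1, len(times) - 1)
        if values[i] >= values[i - 1] and values[i] >= values[i + 1]
    ]


def strongest_peaks(start: int, end: int, top: int = 5) -> list[tuple[int, float]]:
    """The `top` local maxima with the highest index, highest first."""
    return sorted(local_peaks(start, end), key=lambda p: p[1], reverse=True)[:top]


if __name__ == "__main__":
    # Start at 10,000 years: at t = 0 all three cosines peak by construction.
    for t, value in strongest_peaks(10_000, 400_000):
        print(f"{t:>8,} years ago   index {value:+.2f}")
```

Printing every local peak shows that they vary a great deal in height: the three cycles only occasionally reinforce one another fully, which is the toy counterpart of the exceptionally cold or warm periods described above.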

During the 1960s and 1970s, researchers were able to pin down ice ages with increasing levels of accuracy using deep-sea cores, samples drilled from the seabed. Those cores contain the shells of tiny marine animals, called foraminifera, and the carbonate in their shells contains different isotopes of oxygen. The two isotopes of relevance here are 16O, the lighter, ‘normal’ kind, and 18O, a heavier version. Both are present in the ocean, but water that evaporates from the oceans contains more of the lighter kind. This means that water precipitating from the atmosphere – as rain, hail, snow or sleet – also contains more of the lighter 16O than the seas. And it’s that water, falling on to land or ice caps, which becomes frozen into large ice sheets during an ice age. That means there’s more of the heavier 18O left behind in the seas, and more of it gets incorporated into those tiny shells, during an ice age.7 So marine cores, which can be dated using uranium series dating and by looking at the way the earth’s magnetic pole has switched in the past, hold an amazing record of past climate and ice ages.
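Isotope geochemists usually express these measurements in ‘delta’ notation: the difference, in parts per thousand (per mil), between a sample’s 18O/16O ratio and that of a reference standard. The Python sketch below shows that definition, plus a simple two-reservoir mass balance illustrating why growing ice sheets leave the sea enriched in 18O. The ocean volume (about 1.33 billion km3) and the δ18O assumed for ice-sheet water (-35 per mil) are illustrative assumptions, not figures from this book; the 60 million km3 of ice is the figure quoted below for the difference in ice volume.

```python
# ASSUMPTIONS (illustrative, not from the text):
OCEAN_VOLUME_KM3 = 1.33e9   # rough present-day ocean volume
ICE_DELTA_PERMIL = -35.0    # assumed delta-18O of ice-sheet water vs. seawater


def delta18o(ratio_sample: float, ratio_standard: float) -> float:
    """Per-mil difference of a sample's 18O/16O ratio from a reference standard."""
    return (ratio_sample / ratio_standard - 1.0) * 1000.0


def ocean_delta_after_ice_growth(
    ice_volume_km3: float,
    ocean_delta_start: float = 0.0,
    ice_delta: float = ICE_DELTA_PERMIL,
) -> float:
    """Mass balance: the isotope total is conserved when water moves into ice.

    start = (1 - f) * ocean_after + f * ice_delta, solved for ocean_after,
    where f is the fraction of ocean water locked up as ice.
    """
    f = ice_volume_km3 / OCEAN_VOLUME_KM3
    if not 0.0 <= f < 1.0:
        raise ValueError("ice volume must be a fraction of the ocean volume")
    return (ocean_delta_start - f * ice_delta) / (1.0 - f)


if __name__ == "__main__":
    shift = ocean_delta_after_ice_growth(60e6)
    print(f"Seawater delta-18O after ice growth: {shift:+.2f} per mil")
```

With these assumptions the sea ends up roughly 1.7 per mil ‘heavier’. The sign is the important point, matching the reasoning above; the exact size depends on the assumed values.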

Formations in limestone caves – in stalagmites, stalactites or flowstone, or in the useful, all-embracing jargon, ‘speleothem’ (from the Greek for cave deposit) – also contain a record of past climate, depending on the proportions of oxygen isotopes that are present in the water that forms them. At any one time, the ratio of heavy and light oxygen isotopes in that water depends on global temperatures – and how much water is locked up as ice, as well as on local air temperatures and the amount of rainfall. While deep-sea cores are useful for looking at global climate, speleothem is very useful for investigating how climate has varied in a specific locality. Another indicator of past climates is pollen: soil samples containing pollen can be analysed to show the range of plants that were living in a particular area.

The Pleistocene was a period marked by repeated glaciations and ending with the last ice age. As ice sheets grew and then shrank back, sea levels would fall and rise. With differences of up to 60 million km3 in the amount of water locked up as ice, sea levels fluctuated by up to 140m.7 The oxygen isotopes trapped in deep-sea cores and speleothem can be used to draw up a series of alternating cold and warm stages called ‘oxygen isotope stages’, often abbreviated to OIS. Just looking at the last 200,000 years, there have been three major cold periods (corresponding with oxygen isotope stages or OIS 2, 4 and 6) interspersed with four warmer periods (OIS 1, 3, 5 and 7). But the Pleistocene really was one long, cold ice age. Interglacials account for less than 10 per cent of the time.7
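A back-of-the-envelope check on the ice-volume and sea-level figures: spread 60 million km3 of water evenly over the present ocean surface (assumed here to be about 361 million km2) and see how deep a layer it makes. The Python sketch below is only an order-of-magnitude estimate; it treats the ocean as a tank with fixed, vertical sides and ignores, among other things, the way the ocean floor responds as the weight of water on it changes.

```python
OCEAN_AREA_KM2 = 3.61e8  # ASSUMPTION: approximate present-day ocean surface area


def naive_sea_level_change_m(water_volume_km3: float,
                             ocean_area_km2: float = OCEAN_AREA_KM2) -> float:
    """Depth in metres of a layer of water spread evenly over a fixed ocean area."""
    depth_km = water_volume_km3 / ocean_area_km2
    return depth_km * 1000.0


if __name__ == "__main__":
    print(f"{naive_sea_level_change_m(60e6):.0f} m")
```

This gives roughly 166m, the same order as the 140m quoted above; the gap is a reminder that real ocean basins are not rigid tanks.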

At the moment we’re enjoying a nice, warm interglacial, oxygen isotope stage 1. The last full-glacial period, OIS 2, lasted from 13,000 to 24,000 years ago. The peak of this most recent cold phase, around 18,000 to 19,000 years ago, is known as the ‘Last Glacial Maximum’ (or ‘LGM’). OIS 3, from 24,000 to 59,000 years ago, was a bit warmer and more temperate, though still much colder than the present, and is called an ‘interstadial’. OIS 4, from 59,000 to 74,000 years ago, was another full-glacial period, though not nearly as cold as the later OIS 2 glacial.4, 8 OIS 5, the last interglacial (sometimes called the ‘Eemian’ or ‘Ipswichian’), was a warm, balmy period, lasting from about 130,000 to 74,000 years ago. Before that, there was another glacial period, OIS 6, which began about 190,000 years ago, after the preceding interglacial, OIS 7.
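To make the sequence of stages above easier to consult, the Python sketch below simply encodes the dates given in this paragraph as a lookup table. The short descriptions are this sketch’s own shorthand; OIS 1 is taken to run from the present back to 13,000 years ago, and a date falling exactly on a boundary is assigned to the older stage, an arbitrary choice. The function name `ois_stage` is hypothetical.

```python
from bisect import bisect_right

# Upper (older) boundary of each stage in years before present, from the text.
# Each stage covers [previous boundary, upper boundary).
UPPER_BOUNDS = [13_000, 24_000, 59_000, 74_000, 130_000, 190_000]
STAGES = [
    ("OIS 1", "interglacial (the present)"),
    ("OIS 2", "full glacial, including the Last Glacial Maximum"),
    ("OIS 3", "interstadial"),
    ("OIS 4", "full glacial"),
    ("OIS 5", "last interglacial (Eemian/Ipswichian)"),
    ("OIS 6", "glacial"),
]


def ois_stage(years_ago: float) -> tuple[str, str]:
    """Return (stage, description) for a date in years before present."""
    if years_ago < 0:
        raise ValueError("years_ago must be zero or positive")
    i = bisect_right(UPPER_BOUNDS, years_ago)
    if i == len(STAGES):
        # The text gives no start date for OIS 7, so go no further back.
        return ("OIS 7 or earlier", "not resolved by this table")
    return STAGES[i]


if __name__ == "__main__":
    for date in (5_000, 18_500, 65_000, 100_000, 250_000):
        print(date, *ois_stage(date))
```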

This level of detail might seem a bit excessive, but our ancient ancestors were very much at the mercy of the climate (as we still are today). For instance, there was a population expansion during the wet warmth of OIS 5, and a crash, or ‘bottleneck’, during the cold dryness of OIS 4. And sea levels fluctuated according to how much water was locked up in ice: during cold, dry periods, sea levels were significantly lower – by as much as 100m – than during warm, wet periods. Between 13,000 and 74,000 years ago (i.e. during OIS 2–4), the world was a drier, colder place than it is today. Although the map of the world was generally very similar, there was more land exposed; many of today’s islands would have been joined to the mainland, and in places where the coast slopes gently the shoreline would have been much further out than it is today. This is of particular significance to archaeologists looking for traces of our ancestors along those ancient coasts – which are now submerged.

Stone Age Cultures

Archaeologists classify periods differently from geologists, depending on what humans were doing at the time. During the Stone Age, humans (including Homo sapiens and their ancestors) were making stone tools. This is before metals – copper, tin, iron – were discovered and used. In fact, in the scheme of things, metal-working is a very recent invention.

The Stone Age is traditionally divided up into the Palaeolithic (old stone age – roughly corresponding with the Pleistocene period), Mesolithic (middle stone age) and Neolithic (new stone age). These stages happened at different times in different places, so it can become quite confusing. The categories are also based on European prehistory, where much early archaeological work was carried out. But in terms of global archaeology, western Europe is a bit of a backwater, even a cul-de-sac,9 and so the terminology that has grown up there is sometimes rather unhelpful when we’re trying to understand what was happening in the rest of the world. However, the categories at least provide us with a vocabulary and some kind of framework to help us think about the deep past.

Table showing the relationship between geological periods, oxygen isotope stages and what humans were getting up to at the time.

Each stage is characterised by different styles and ways of making stone tools, but also by differences in the broader lifestyles of people. Put very simply (really too simply, as we shall see later), the Palaeolithic lifestyle was that of a nomadic hunter-gatherer, the Mesolithic saw a trend towards settling down, and the Neolithic saw the beginning of settled villages, cities, agriculture, pottery and organised religion.

Throughout the Palaeolithic, and in the Mesolithic, too, our ancestors were nomadic. They left barely a trace of their passing – no buildings, and very little in the way of possessions – and those material possessions that they did have were often made of what we now think of as biodegradable materials, so they have long since disappeared. When we find stone tools, we are often looking at something that was just a part of a more complex piece of equipment. Sometimes there are hints from polished areas of the stone tool suggesting how it might have been tied to something. Very rarely are the conditions right for organic materials – like pieces of wood or animal hide – to be preserved. When you consider the scarcity of the remains, it’s quite amazing that we can find the occasional trace and, from this, reconstruct part of our collective (pre)history.

During the Palaeolithic period, there are changes in the types of stone tools people were making, and the period is divided up into Lower, Middle and Upper Palaeolithic (or, in Africa, the Early, Middle and Later Stone Age). Stone tools start to appear in the ground, in what is grandly termed the ‘archaeological record’, around 2.5 million years ago, made by early members of our own genus, Homo. These are crude, pebble tools, and the toolkit or stone tool ‘technology’ is called Oldowan after the sites excavated by Mary Leakey in the Olduvai Gorge. These basic tools continued to be made for hundreds of thousands of years. Our early ancestors were not great innovators! But we have to grant them some skill. In the wild, chimpanzees make tools out of easily modified materials like sticks or grass stems, and use stones to crack nuts; chimpanzees in captivity can be taught to make stone tools, but their products are still not as good as those Oldowan tools.10

The next stone toolkit to come along is called the Acheulean. And this toolkit is not found only in Africa. In fact, it is named after the site of St Acheul in France, where a characteristic ‘hand axe’ was discovered in the nineteenth century. Acheulean tools are found in Africa from about 1.7 million years ago, but it’s not until 600,000 years ago that they are found in Europe. The tool from St Acheul is actually quite late: it dates to between 300,000 and 400,000 years ago. By 250,000 years ago, this technology had disappeared. Slightly strangely, this hand axe technology never reached East Asia. The fossil record suggests that people – probably Homo erectus – first made their way out of Africa around a million years ago, so it’s unlikely that the East Asian pebble-tool-makers were direct descendants of the Oldowan people in Africa; they are more likely to have been, culturally, ‘Acheuleans’ who gave up making hand axes as they moved east.10

Hand axes are pointed, teardrop-shaped tools, flaked on both sides. It seems that nobody knows much about how these tools were used: were they designed for use in the hand, or hafted on to a shaft? Many archaeologists prefer simply to call them ‘bifaces’ (a general term for tools flaked on two sides). Acheulean bifaces are much more refined (though still big, chunky things) than Oldowan tools. Some of the bifaces are quite beautifully symmetrical, and many archaeologists have suggested that their form is governed by aesthetics as well as function. It’s a tempting but ultimately conjectural idea, as there is no other evidence for any art at this time. And, once again, there seems to be extreme conservatism in tool-making throughout this period: there was very little invention. Over the huge time span of the Acheulean – from 1.7 million to 250,000 years ago – that culture hardly changed.10

But then a new sort of culture appeared. South of the equator in Africa, it’s called the Middle Stone Age (MSA). Similar tools in North Africa, Europe and western Asia are called Middle Palaeolithic, or Mousterian, the latter a name that comes from the Neanderthal site of Le Moustier in south-west France. These labels carry with them a great deal of historical baggage, and the distinction between Africa and Eurasia is not particularly helpful. What we can say is that these tools seem to have been made by the archaic species Homo heidelbergensis, as well as by its (probable) daughter species: Homo sapiens and Neanderthals.

The MSA/Middle Palaeolithic differs from the Acheulean in that bifaces disappear from the toolkits. And tools are often made from stones that have first been shaped into a tool blank, or ‘prepared core’ – although, actually, the distinction isn’t that easy as this technique was also used in the Acheulean. From studies of the wear on MSA/Middle Palaeolithic tools, it seems that the people were regularly mounting, or ‘hafting’, stone points on to shafts (although, as I mentioned, it is possible though not proven that so-called Acheulean bifaces were hafted). The new generation of tools, and ways of making them, were much more varied than the preceding stone tool technologies. There were other developments during this stage: people started to collect reddish, iron-rich rocks, perhaps for use as pigment; the first hearths appear; they had control of fire; and they started to bury their dead. From the composition of their bones, it also seems that people started to eat more meat in this period. Although people had hunted before, judging from artefacts such as the 400,000-year-old Schöningen spears from Germany, archaeologists believe that it is in the MSA/Middle Palaeolithic that hunting – not just scavenging – became routine.10

About 40,000 years ago, there was another change: to what is called the Later Stone Age (LSA) in Africa, and the Upper Palaeolithic in Eurasia. A huge and varied range of stone tools emerged, and people were also regularly making things out of bone. They were also using ‘true’ projectile weapons – spear-throwers with darts and bow and arrow (as opposed to just hand-cast spears)11 – and they were building shelters, fishing and burying their dead with a degree of ritual that had not really been seen before. They also created magnificent art – particularly in Europe. Although this was probably not the first art (as there’s much earlier evidence of pigment use in Africa), the painted caves of Spain and France are quite exceptional. From the fossils that have been discovered alongside archaeological finds, it is generally believed that the Later Stone Age and Upper Palaeolithic toolkits were made by just one species: Homo sapiens, modern humans. Us. Some palaeoanthropologists believe that the appearance of this new phase marks the relatively sudden beginning of truly ‘modern’ human behaviour,10 but others think that it is possible to see traces of fully modern behaviour much earlier, even before 100,000 years ago. They also suggest that this behaviour developed gradually, mirroring the physical, biological transition to a modern form.9, 12

The continuing debate goes to show that it’s very difficult to tease out how and when this behavioural transition, to something we can truly recognise as modern human, happened. As far as stone tools are concerned, it’s hard to find a clear signature of the earliest modern human tools. To begin with, the earliest modern humans were manufacturing exactly the same type of toolkits as their parent and sister species, heidelbergensis and Neanderthals; they all made bog-standard, Middle Stone Age tools. But there is a distinct MSA toolkit, from the northern Sahara, that has been attributed to modern humans. Similar to other MSA toolkits in many ways, the Aterian includes stemmed or ‘tanged points’ (perhaps spear- or arrowheads). At a site in Morocco, further evidence of ‘modern behaviour’ has been discovered, alongside Aterian tools, in the form of shell beads.13 Even so, before the LSA and the Upper Palaeolithic, it is difficult to discern the presence of modern humans based on stone tools alone. So fossilised skeletons become like holy grails for those seeking evidence of the earliest modern humans.

Dating Fossils and Archaeology

It is important to understand something about the techniques archaeologists can now draw on to date their discoveries. Dating is at the very centre of some of the biggest controversies and knottiest problems in palaeoanthropology.

Relative dating often involves judging the age of something by its position in the ground. So, for instance, you might judge something to be Iron Age if it lay underneath a Roman mosaic but on top of a Bronze Age burial. A more scientific approach, sometimes referred to as ‘absolute dating’, involves some means of measuring the age of the object itself, or at least the layer that it is buried in. Absolute dating techniques relevant to the period we’re considering include radiometric and luminescence dating.
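Relative and absolute dating are often combined: an undated find lying between two dated layers must be younger than the layer beneath it and older than the layer above it. The sketch below assumes the layers are undisturbed, so that deeper means older. The class, the function and the ages in the example trench are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Layer:
    name: str
    depth_m: float                      # deeper layers were laid down earlier
    age_years: Optional[float] = None   # absolute age, if the layer has been dated

def bracket_age(find_depth_m: float, layers: list[Layer]) -> tuple[Optional[float], Optional[float]]:
    """Return (minimum_age, maximum_age) for a find from the nearest dated layers
    above and below it. None means that side is unconstrained."""
    dated = [layer for layer in layers if layer.age_years is not None]
    above = [layer for layer in dated if layer.depth_m < find_depth_m]
    below = [layer for layer in dated if layer.depth_m > find_depth_m]
    # The find must be older than the closest dated layer sealing it from above...
    min_age = max(above, key=lambda layer: layer.depth_m).age_years if above else None
    # ...and younger than the closest dated layer it rests on.
    max_age = min(below, key=lambda layer: layer.depth_m).age_years if below else None
    return min_age, max_age

# Hypothetical trench: the ages are invented for illustration.
trench = [
    Layer("Roman mosaic", depth_m=0.5, age_years=1_800),
    Layer("Bronze Age burial", depth_m=1.5, age_years=3_000),
]
print(bracket_age(1.0, trench))  # (1800, 3000)
```

A find with a dated layer on only one side gets only a minimum or only a maximum age. That one-sided case is the "date range" situation that arises when a find sits above or below a single dated deposit.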

Radiometric techniques work by measuring the levels of different radioactive isotopes in materials. Radioactive isotopes decay over time, from one form to another. If the proportions of those forms can be measured, and the rate of decay is known, then the date of the object can be calculated.
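The decay described here follows the standard exponential law. The symbols are conventional and not taken from the text. N_0 is the amount of the radioactive isotope at the start, N(t) is the amount left after time t, and t_{1/2} is the half-life, the time it takes for half of it to decay. The last equation rearranges the first to give the age.

```latex
N(t) = N_0\,e^{-\lambda t}, \qquad
\lambda = \frac{\ln 2}{t_{1/2}}, \qquad
t = \frac{1}{\lambda}\,\ln\frac{N_0}{N(t)}
```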

The best-known radiometric dating technique is radiocarbon dating. Carbon has a radioactively unstable isotope, C14, which decays to nitrogen over time, while the far more common isotope, C12, is stable. As C14 is present in the atmosphere (in carbon dioxide), plants take it up when they photosynthesise, and animals eating plants also obtain it. This means that a living plant or animal contains a proportion of C14 to C12 that matches the ratio in the atmosphere. But when the plant or animal dies, it stops taking in any more C14; the C14 it already contains gradually decays, while its C12 stays the same, so the ratio of C14 to C12 steadily falls. So, by knowing the rate of decay, and then by measuring the proportions of the carbon isotopes in an organic object, whether that’s a piece of wood, some charcoal or a bone, you can work out when that organic thing was last alive.
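Here is a minimal sketch of the calculation. It assumes the commonly quoted C14 half-life of 5730 years and ignores calibration, so the result is a simple decay age rather than a calendar date. The input is the fraction of the original C14/C12 ratio still present in the sample. The function name is hypothetical.

```python
import math

# Assumed half-life of C14 in years. Conventional "radiocarbon years" use an
# older value (5568), and calendar dates need calibration, so this sketch
# reproduces neither; it is only the bare decay arithmetic.
C14_HALF_LIFE_YEARS = 5730.0

def years_since_death(fraction_remaining: float) -> float:
    """Time since an organism stopped taking in C14, given the fraction of its
    original C14/C12 ratio still present (assumes a constant atmospheric ratio)."""
    if not 0.0 < fraction_remaining <= 1.0:
        raise ValueError("fraction_remaining must be in (0, 1]")
    decay_constant = math.log(2) / C14_HALF_LIFE_YEARS
    return math.log(1.0 / fraction_remaining) / decay_constant

print(round(years_since_death(0.5)))   # 5730: one half-life
print(round(years_since_death(0.25)))  # 11460: two half-lives
```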

The precision of radiocarbon dating has recently improved with the use of accelerator mass spectrometry (AMS), which has also pushed the useful limit of radiocarbon dating back to about 45,000 years. Accuracy has also improved with pre-treatment of samples to remove contamination from modern carbon, and with calibration, to take account of the fact that the amount of C14 in the atmosphere has changed over time (dates given in this book are calibrated, calendar years, rather than ‘radiocarbon years’). Radiocarbon dates published before these advances – before 2004 – need to be treated with caution. Generally speaking, when archaeological materials have been redated using the new, improved techniques, the dates turn out to be between 2000 and 7000 years older than the previous estimates. An added advantage of AMS radiocarbon dating is that it requires only a minute sample from a precious archaeological object. AMS radiocarbon dating is the best way of dating organic things – as long as they are less than 45,000 years old.14 Beyond that limit, and for early modern humans and their forays out of Africa more than 50,000 years ago, we have to look to other methods.
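The sketch below shows why the limit sits around 45,000 years and why removing modern carbon matters. After 45,000 years less than half a percent of the original C14 is left, so even a little contamination dominates the signal. It assumes a half-life of 5730 years and ignores calibration. The contamination shares and function names are hypothetical.

```python
import math

C14_HALF_LIFE_YEARS = 5730.0  # assumed half-life; calibration is ignored here

def fraction_remaining(age_years: float) -> float:
    """Fraction of the original C14 left after age_years of decay."""
    return 0.5 ** (age_years / C14_HALF_LIFE_YEARS)

def apparent_age(fraction: float) -> float:
    """Age that would be inferred from a measured C14 fraction."""
    return C14_HALF_LIFE_YEARS * math.log2(1.0 / fraction)

def contaminated_fraction(true_age_years: float, modern_carbon_share: float) -> float:
    """Measured fraction when a share of the sample's carbon is modern
    (fraction 1.0) and the rest is genuinely old."""
    old = fraction_remaining(true_age_years)
    return (1.0 - modern_carbon_share) * old + modern_carbon_share * 1.0

true_age = 45_000
print(f"C14 left after {true_age:,} years: {fraction_remaining(true_age):.4f}")
for share in (0.0, 0.001, 0.01):
    measured = contaminated_fraction(true_age, share)
    print(f"{share:.1%} modern carbon: looks {apparent_age(measured):,.0f} years old")
```

Under these assumptions, 1% modern carbon makes a 45,000-year-old sample look roughly 35,000 years old. The same arithmetic suggests why samples redated after better pre-treatment tend to come out older.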

Two other radiometric dating techniques that can be used to date rocks are uranium series and potassium-argon dating. Uranium series dating relies on radioactive uranium decaying into thorium, one step in a long decay chain that ultimately ends in stable lead. Uranium dissolves in water but thorium does not, so minerals precipitating out of water take in uranium but almost no thorium; any thorium that later builds up in them comes from the decay of that uranium. This means the technique can be applied to speleothem (cave formations such as stalagmites and flowstone) and coral. Potassium-argon (and argon-argon) dating is used to date volcanic rocks. Radioactive potassium decays partly into argon, a gas that can escape from molten rock but is trapped once the lava solidifies, so the clock starts when the rock cools. So if archaeological finds or fossils are found between layers of speleothem (in limestone caves) or between layers of volcanic tuff from ancient volcanic eruptions, these techniques can provide a date, or at least a date range, for the discoveries.
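Both clocks can be sketched with their textbook age equations under strong simplifying assumptions, which are spelled out in the comments. For uranium series, the mineral is assumed to have formed with no thorium-230, with uranium-234 and uranium-238 in equilibrium. For potassium-argon, no argon is assumed to have been trapped at eruption, and none to have leaked out since. The half-life and decay constants are widely used published values, quoted approximately. The function names are hypothetical.

```python
import math

# --- Uranium series (230Th/U), heavily simplified ----------------------------
# Assumes no thorium-230 when the calcite or coral formed, and uranium-234 in
# equilibrium with uranium-238 (activity ratio of 1).
TH230_HALF_LIFE_YEARS = 75_600.0  # approximate

def uranium_thorium_age(th230_u238_activity_ratio: float) -> float:
    """Age from the measured 230Th/238U activity ratio, which grows from 0
    towards 1 as thorium builds up: ratio = 1 - exp(-lambda * t)."""
    if not 0.0 <= th230_u238_activity_ratio < 1.0:
        raise ValueError("ratio must be in [0, 1) under these assumptions")
    lam = math.log(2) / TH230_HALF_LIFE_YEARS
    return -math.log(1.0 - th230_u238_activity_ratio) / lam

# --- Potassium-argon ---------------------------------------------------------
# Conventional decay constants for potassium-40, per year.
LAMBDA_TOTAL = 5.543e-10  # all decay branches
LAMBDA_AR = 0.581e-10     # the branch that produces argon-40

def potassium_argon_age(radiogenic_ar40_per_k40: float) -> float:
    """Age from the ratio of radiogenic argon-40 atoms to remaining potassium-40
    atoms. Assumes all argon escaped while molten and none has leaked since."""
    return math.log(1.0 + (LAMBDA_TOTAL / LAMBDA_AR) * radiogenic_ar40_per_k40) / LAMBDA_TOTAL

print(f"{uranium_thorium_age(0.5):,.0f} years")  # one thorium-230 half-life
print(f"{potassium_argon_age(1e-4):,.0f} years")
```

With these constants, an argon-to-potassium ratio of 0.0001 corresponds to roughly 1.7 million years. The uranium-thorium ratio approaches 1 only slowly, which is one reason the method becomes imprecise for very old samples.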

A relatively new technique that is proving incredibly helpful in Palaeolithic archaeology is luminescence dating. It is used to pin a date on the last time that grains of quartz or feldspar were exposed to either heat or light. It can be used to date the layers of sediment that an object is buried in, or sometimes even to date an object itself if it was heated – for instance, a piece of pottery or a hearth stone. Luminescence dating is a very powerful tool, useful for pinning an age on objects that are just a few years old, all the way to things that have existed for a few million years.15

The way that luminescence dating works is, I think, quite mind-blowing. When grains made of natural quartz crystals (i.e. grains of sand) are exposed to ionising radiation – from naturally occurring radioactive elements like uranium as well as cosmic rays – electrons get trapped in tiny flaws inside their crystal structure. Exposure to sunlight or heat makes the crystal release these trapped electrons, resetting the clock to zero. But once a quartz grain is buried, out of the light, it starts to accumulate electrons again … until somebody comes along and digs it up. This is why samples for luminescence dating have to be kept completely in the dark when they are collected: any light would empty the traps and erase the signal.

Back in the lab, the quartz grains are sorted out from the sample, under very dim, red light. Then they are exposed either to heat (in thermoluminescence, or TL, dating) or light (in optically stimulated luminescence, or OSL, dating), which makes them release their trapped electrons and give off a faint glow. By measuring this luminescence, and knowing the level of natural radiation at the place where the quartz grains were buried (from other sediment samples and measurements of cosmic radiation), the length of time that the grains were buried can be estimated.15
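The final step is essentially a division. It is sketched here under the usual simplification. The total radiation dose the grains absorbed since burial is often called the equivalent dose and is measured in grays. It is divided by the dose the grains receive each thousand years at the site. The function name and the numbers are hypothetical.

```python
def luminescence_age_ka(equivalent_dose_gy: float, dose_rate_gy_per_ka: float) -> float:
    """Burial age in thousands of years (ka).

    equivalent_dose_gy: dose absorbed since burial, estimated by comparing the
        grains' natural glow with the glow produced by known laboratory doses.
    dose_rate_gy_per_ka: dose received per thousand years at the site, from
        radioactive elements in the sediment plus cosmic rays.
    """
    if dose_rate_gy_per_ka <= 0:
        raise ValueError("dose rate must be positive")
    return equivalent_dose_gy / dose_rate_gy_per_ka

# Hypothetical sample: 150 Gy absorbed at 2.5 Gy per thousand years.
print(luminescence_age_ka(150.0, 2.5))  # 60.0
```

In practice most of the effort goes into estimating the two inputs reliably. The division itself is the simple part.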

Another method that measures levels of trapped electrons, again resulting from bombardment with radiation within sediments, is electron spin resonance (ESR). This technique works well for ageing tooth enamel, which is also a crystalline material – so it is very useful for dating hominin fossils.16

Genetic Studies

Quite recently, another branch of science has begun to provide important clues about our ancestry, about how we are all related to each other and even about the way the world was colonised. This time, however, the evidence is not buried in the ground but inside us, because the DNA contained in each cell of each of our bodies holds a record of our ancestry. Getting samples of DNA is surprisingly simple – and painless. DNA can be collected from volunteers using just a cheek brush or saliva swab. These samples contain cells, and inside those cells is the precious DNA.

While everyone’s DNA is mostly identical, there are some differences. There have to be, otherwise we’d all look exactly the same, clones of each other. Some genes govern our appearance, while others control the machinery of life. There are differences in those genes, too. You can’t tell just by looking at someone, but they might have a different blood group from you, a slightly different enzyme for breaking something down inside their cells. The differences in these active genes and the protein products they make are constrained by natural selection. If a mutation happens in an important gene, it could make the protein product of that gene work better, worse, or perhaps have no effect at all.

If the effect of a mutated gene is deleterious, it may be that the individual carrying it won’t be able to survive at all, or, perhaps, won’t live long enough to pass their genes on. So the mutant gene will tend to disappear from the mix of genes in the population, or ‘gene pool’. If the product of a mutant gene proves advantageous, it could be that the individual with it gains a better chance of survival, and is therefore more likely to pass the new version of that gene on to their offspring. So, gradually, over many generations, a really advantageous gene can spread through a population. If the mutation is neutral, then it is pure chance whether it sticks around or disappears from the gene pool.
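The role of chance can be made concrete with a minimal simulation sketch. It uses the Wright-Fisher model, a standard textbook model of a population that is swapped in here for illustration and is not used in the book. The sketch follows a single new mutant copy in a population of gene copies. Each generation applies a selective advantage (positive, zero or negative), then lets random sampling decide the next generation. The population size, number of trials and advantage values are arbitrary assumptions.

```python
import random

def allele_fate(pop_size: int, start_freq: float, advantage: float,
                rng: random.Random, max_generations: int = 10_000) -> float:
    """Follow one gene variant through a simple Wright-Fisher population of
    pop_size gene copies. Returns its final frequency: 1.0 if it took over,
    0.0 if it was lost (or an in-between value if time ran out).
    advantage: 0.0 is neutral, 0.05 a 5% advantage, negative is deleterious."""
    freq = start_freq
    for _ in range(max_generations):
        if freq == 0.0 or freq == 1.0:
            break
        # Selection nudges the expected frequency towards the fitter variant...
        weighted = freq * (1.0 + advantage)
        expected = weighted / (weighted + (1.0 - freq))
        # ...then drift: the next generation is a random sample of pop_size copies.
        copies = sum(rng.random() < expected for _ in range(pop_size))
        freq = copies / pop_size
    return freq

def fixation_rate(advantage: float, pop_size: int = 50, trials: int = 1000) -> float:
    """Share of runs in which a single new mutant copy ends up in everyone."""
    rng = random.Random(42)  # fixed seed so the sketch is repeatable
    start = 1.0 / pop_size
    fixed = sum(allele_fate(pop_size, start, advantage, rng) == 1.0 for _ in range(trials))
    return fixed / trials

for s in (-0.05, 0.0, 0.05):
    print(f"advantage {s:+.2f}: variant took over in {fixation_rate(s):.1%} of runs")
```

In theory a neutral new variant takes over in about 1 run in pop_size, which is 2% here, purely by chance. An advantage raises that share, and a disadvantage makes it vanishingly rare.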

But there are also long stretches of our DNA that mean nothing to the cell; they are the bits between genes which are never ‘read’ to produce proteins. Sometimes they contain parts of old, disused genes, or historical bits of genetic material inserted into the chromosomes by viruses. These unused sections are not subject to natural selection like the working genes. Alterations appearing by random mutations in these regions will not be weeded out in the same way. This means that they are quite useful for tracing genetic lineages.

Most of our DNA is coiled up in chromosomes inside the nuclei of our cells, but there is also a little bit of DNA in tiny capsules inside the cell. These are mitochondria, the tiny ‘power stations’ of the cell, which take fuel – sugar – and burn it to produce energy. The genes in the mitochondrial DNA have a very specific but incredibly important job, controlling energy transformation inside the cell. To a large extent, as long as mutations don’t interfere with that job, they too escape pruning by the grim reaper of natural selection. Mutations also accumulate more rapidly in mitochondrial DNA (mtDNA) than in nuclear DNA.17 This makes mtDNA particularly useful for reconstructing genetic family trees. Geneticists can assume that there is a standard rate of mutation within mitochondrial DNA, and that, unless mutations really hamper the work of the mitochondria, they will persist.
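Here is a minimal molecular-clock sketch that assumes a constant mutation rate. Count the differences between two mtDNA sequences and divide by the number of sites compared. Then split that divergence over the two lineages that have been accumulating mutations independently since their common ancestor. The rate and difference count are hypothetical placeholders, not published values. The sketch also ignores sites that have mutated more than once.

```python
def years_to_common_ancestor(differences: int, sites_compared: int,
                             rate_per_site_per_year: float) -> float:
    """Molecular-clock estimate of the time since two sequences last shared an
    ancestor. Both lineages have gathered mutations since the split, so the
    observed divergence is divided between two branches (the factor of 2)."""
    if sites_compared <= 0 or rate_per_site_per_year <= 0:
        raise ValueError("sites_compared and rate must be positive")
    divergence_per_site = differences / sites_compared
    return divergence_per_site / (2 * rate_per_site_per_year)

# Hypothetical inputs: 40 differences across 16,569 bases (about the length of
# a human mitochondrial genome) and an assumed rate of 1.5e-8 per site per year.
estimate = years_to_common_ancestor(40, 16_569, 1.5e-8)
print(f"about {estimate:,.0f} years")
```

The answer is only as good as the assumed rate. This is why the calibration of mutation rates matters so much in genetic studies of human origins.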

The other important thing about mitochondrial DNA is that it does not get mixed up at each generation like the nuclear genes. Gametes (eggs and sperm) contain only half the number of chromosomes contained in every other cell in your body. But when the sperm and the egg are first made, it’s not simply a question of dividing the pairs of chromosomes up – before that happens, each pair of chromosomes swaps DNA with its partner, in a process called recombination. That means that each of the twenty-three chromosomes left in the gamete is a new mix of DNA, combining stretches that the parent inherited from their own father and from their own mother.
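Here is a deliberately simplified sketch of recombination. It assumes a single crossover at a uniformly random point, whereas real chromosomes can have several crossovers at non-uniform positions. Each letter stands for a stretch of DNA: ‘A’ from one of a parent's two copies of a chromosome, ‘b’ from the other. The function and variable names are hypothetical.

```python
import random

def recombine(copy_a: str, copy_b: str, rng: random.Random) -> str:
    """Return the chromosome passed into a gamete after one crossover between a
    parent's two copies of the same chromosome (equal-length strings)."""
    if len(copy_a) != len(copy_b) or len(copy_a) < 2:
        raise ValueError("copies must be of equal length, at least 2")
    cut = rng.randrange(1, len(copy_a))  # crossover point, never at either end
    # Either copy may supply the first part of the new chromosome.
    if rng.random() < 0.5:
        return copy_a[:cut] + copy_b[cut:]
    return copy_b[:cut] + copy_a[cut:]

rng = random.Random(7)
from_grandfather = "AAAAAAAAAAAA"  # the parent's copy inherited from their father
from_grandmother = "bbbbbbbbbbbb"  # the parent's copy inherited from their mother
for _ in range(3):
    print(recombine(from_grandfather, from_grandmother, rng))
```

Each gamete gets a different mosaic. After a few generations, the stretches of any one ancestor's DNA are broken up and scattered, which is what makes nuclear genes hard to trace.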

Sexual reproduction – with this shuffling of genes at each generation – means that genetically ‘new’ and different individuals keep being created. This in turn creates variability within the gene pool. This variation is incredibly important: it means that, if circumstances change, if the environment changes around us, there will be some individuals who may be able to survive better than others. Biology cannot predict what changes may be needed at some far-off point in the future, but species that have developed this way of ‘future-proofing’ themselves, through sexual reproduction, have been successful in the past – and so we still do it today. But for geneticists trying to trace genes back through generations, it is a nightmare, because the genes keep jumping about.

Mitochondrial DNA, on the other hand, does not get involved in recombination, and stays, chaste and untouched, inside the mitochondria – which we all inherit from our mothers. The sperm from our father contributes just its nucleus with twenty-three chromosomes at fertilisation. The egg also contains twenty-three chromosomes, as well as all the other cellular machinery – including mitochondria. This means that all your mitochondria, and the DNA they contain, are inherited from your mother. And she got hers from her mother, and so on. Geneticists can therefore trace back maternal lineages using mitochondrial DNA (mtDNA). Of the nuclear DNA in our chromosomes, there is actually one bit that doesn’t recombine, and that is part of the Y chromosome (which only men have). So this can be used to trace back paternal lineages.
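These inheritance rules can be expressed as a simple walk through a family tree. mtDNA follows only mother links, while the non-recombining part of the Y chromosome follows only father-to-son links. The family records and names below are entirely hypothetical.

```python
from typing import Optional

# Hypothetical family records: person -> (mother, father); None means unknown.
PARENTS: dict[str, tuple[Optional[str], Optional[str]]] = {
    "son":       ("mum", "dad"),
    "mum":       ("gran_m", "grandad_m"),
    "dad":       ("gran_p", "grandad_p"),
    "gran_m":    ("great_gran", None),
    "grandad_p": (None, "great_grandad"),
}

def maternal_line(person: str) -> list[str]:
    """Everyone who passed their mitochondrial DNA down to `person`:
    follow mother links only."""
    line = [person]
    while (mother := PARENTS.get(line[-1], (None, None))[0]) is not None:
        line.append(mother)
    return line

def paternal_line(person: str) -> list[str]:
    """Father links only: the route a Y chromosome takes to a male `person`."""
    line = [person]
    while (father := PARENTS.get(line[-1], (None, None))[1]) is not None:
        line.append(father)
    return line

print(maternal_line("son"))  # ['son', 'mum', 'gran_m', 'great_gran']
print(paternal_line("son"))  # ['son', 'dad', 'grandad_p', 'great_grandad']
```

Most ancestors, such as grandad_m or gran_p here, appear on neither line. Their contribution survives only in the shuffled nuclear DNA.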

In fact, other genes, in the nuclear DNA, can be traced back, although the history of these genes is more convoluted and much harder to track back through time than the non-recombining bit of the Y chromosome or mtDNA. Techniques for analysing DNA, for reading the sequence of nucleotide building blocks, are getting better and faster, almost by the day. Many labs are not looking at just individual genes from mtDNA or nuclear DNA but are now attempting to read all the DNA – mapping entire mitochondrial and even nuclear genomes. It’s an exciting time.

In terms of investigating our ancestry, it’s those tiny differences in our DNA, nuclear or mitochondrial, that are important. The traditional approach to studying genetic variation was ‘population genetics’, where frequencies of different gene types are compared between different populations. The problem with this approach is that it is particularly subject to distortions, as people migrate and populations mix. The approach of building ‘family trees’ of genes, from mtDNA, the Y chromosome and other nuclear DNA, makes for a much clearer picture of our inter-relatedness and our ancestry. The branch points of the trees correspond with the appearance of specific mutations.18
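How branch points correspond to mutations can be sketched for the simplest case. The sketch assumes no recombination and no repeat mutations at the same site, an idealisation that fits non-recombining DNA best. Each mutation defines a branch: the set of haplotypes that carry it. Branches must nest, and a pair that overlaps without nesting signals that no simple tree explains the data. The haplotypes and mutation labels are hypothetical.

```python
from itertools import combinations

# Hypothetical haplotypes, each listed with the mutations it carries relative
# to an ancestral sequence.
HAPLOTYPES: dict[str, frozenset[str]] = {
    "h1": frozenset({"m1"}),
    "h2": frozenset({"m1", "m2"}),
    "h3": frozenset({"m1", "m2", "m3"}),
    "h4": frozenset({"m4"}),
}

def build_gene_tree(haplotypes: dict[str, frozenset[str]]) -> dict[str, str]:
    """Map each mutation to the mutation on the branch just above it ('ROOT'
    if none). Assumes no recombination and no repeat mutations."""
    # Each mutation defines a clade: the set of haplotypes that carry it.
    clades: dict[str, set[str]] = {}
    for name, mutations in haplotypes.items():
        for m in mutations:
            clades.setdefault(m, set()).add(name)
    # In a true tree, any two clades are either nested or entirely separate.
    for a, b in combinations(clades, 2):
        shared = clades[a] & clades[b]
        if shared and not (clades[a] <= clades[b] or clades[b] <= clades[a]):
            raise ValueError(f"{a} and {b} overlap without nesting: no simple tree fits")
    parent: dict[str, str] = {}
    for m, members in clades.items():
        # The parent branch is the smallest clade that strictly contains this one.
        ancestors = [o for o, other in clades.items() if members < other]
        parent[m] = min(ancestors, key=lambda o: len(clades[o])) if ancestors else "ROOT"
    return parent

for mutation, above in sorted(build_gene_tree(HAPLOTYPES).items()):
    print(f"{mutation} branches off {above}")
```

Real data need more forgiving methods, because the same mutation sometimes arises twice. The nesting principle, however, is the same one that ties each branch point to a particular mutation.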

There are obviously ethical issues involved in the collecting of DNA: it should be done only with the consent of the individual concerned, it should be used only for the purposes initially laid out, and should not be passed on to any third parties. Some people have worried that genetic analyses of human variation might be used for a racist agenda, but these fears can be allayed as this science actually contains a strong anti-racist message. As eminent geneticist Luigi Luca Cavalli-Sforza has put it: ‘Studies of human population genetics and evolution have generated the strongest proof that there is no scientific basis for racism, with the demonstration that human genetic diversity between populations is small, and perhaps entirely the result of climatic adaptation and random [genetic] drift’.18

The Illusion of the Journey and the Folly of Hero-Worship

This book is about several different sorts of journey. There are the physical journeys made by our ancestors as they spread around the world. Then there is a more abstract, philosophical journey, with the gradual transformation of body and mind to something we can recognise as fully modern human. And then, there is my own physical and mental journey. I spent six months travelling around the world, meeting all sorts of experts and indigenous people, and experiencing the huge range of environments that humans manage to survive in today, from the frozen taiga of Siberia to the blazing aridity of the Kalahari.

When we cast our minds back and imagine our ancestors, sometimes surviving against the odds, and managing to make their way into and survive in the most extreme environments, it may inspire us with humility, awe and great admiration. And it certainly is an awe-inspiring story: from the origin of our species in Africa, to the colonisation of the globe.

But it’s all too easy to start thinking of this journey as a heroic struggle against adversity, and to imagine our ancestors setting out with the explicit intention of colonising the world. In fact, this ‘human journey’ is a metaphor: it wasn’t really a journey at all, and our ancestors had no such goal in mind. Certainly, they were nomadic and they would have moved around the landscape as the seasons changed, but most of the time they would not have been purposefully moving on from one place to another. It’s just that, as populations (of humans or other animals) expand, they spread out. I think words like ‘journey’ and ‘migration’ are still useful, as long as we take them to mean something more abstract: the movement of populations across the face of the earth over vast stretches of time, a diaspora happening over thousands of years. So there was no quest, and no heroes. We may feel humbled by the survival of our species, though set about by vicissitudes, and we may marvel at our ancestors’ ingenuity and adaptability – but we must remember, through it all, that they were just people – like you and me.