To continue reading about cosmology, go to ‘Pathways to cosmic oblivion’ on page 216.

The desire to solve mysteries or understand anomalies in the world around us is the driving force of science. Puzzles such as why apples fall downwards and why liquids get hot when you stir them enough have led us to important insights. One of the delightful or, depending on your viewpoint, frustrating things about the world’s mysteries is that dispelling one often reveals others. So here are four mysteries, unsolved or partly solved, that will hopefully lead to new understanding – and almost certainly to new riddles.

In the first chapter we discovered how the universe developed in the big bang from an energy-packed blob the size of a pea. But what happened before that point – how did that nascent cosmos emerge from nothing? Physical evidence to answer this question is non-existent and our understanding is confused by a cloud of metaphysical issues and misconceptions. To cut through the fog, here’s physicist Paul Davies.

Can science explain how the universe began? Even suggestions to that effect have provoked an angry and passionate response from many quarters. Religious people tend to see the claim as a move to finally abolish God the Creator. Atheists are equally alarmed, because the notion of the universe coming into being from nothing looks suspiciously like the creation, ex nihilo, of Christianity. The general sense of indignation was well expressed by writer Fay Weldon. ‘Who cares about half a second after the big bang?’ she railed in 1991 in a scathing newspaper attack on scientific cosmology. ‘What about the half a second before?’ What indeed. The simple answer is that, in the standard picture of the cosmic origin, there was no such moment as ‘half a second before’.

To see why, we need to examine this standard picture in more detail. The first point to address is why anyone believes the universe began at a finite moment in time. How do we know that it hasn’t simply been around for ever? Most cosmologists reject this alternative because of the severe problem of the second law of thermodynamics. Applied to the universe as a whole, this law states that the cosmos is on a one-way slide towards a state of maximum disorder, or entropy. Irreversible changes, such as the gradual consumption of fuel by the sun and stars, ensure that the universe must eventually ‘run down’ and exhaust its supplies of useful energy. It follows that the universe cannot have been drawing on this finite stock of useful energy for all eternity.

Direct evidence for a cosmic origin in a big bang comes from three observations. The first, and most direct, is that the universe is still expanding today. The second is the existence of a pervasive heat radiation that is neatly explained as the fading afterglow of the primeval fire that accompanied the big bang. The third strand of evidence is the relative abundances of the chemical elements, which can be correctly accounted for in terms of nuclear processes in the hot dense phase that followed the big bang.

But what caused the big bang to happen? Where is the centre of the explosion? Where is the edge of the universe? Why didn’t the big bang turn into a black hole? These are some of the questions that bemused members of the audience always ask whenever I lecture on this topic. Though they seem pertinent, they are in fact based on an entirely false picture of the big bang. To understand the correct picture, it is first necessary to have a clear idea of what the expansion of the universe entails. Contrary to popular belief, it is not the explosive dispersal of galaxies from a common centre into the depths of a limitless void. The best way of viewing it is to imagine the space between the galaxies expanding or swelling.

The idea that space can stretch, or be warped, is a central prediction of Einstein’s general theory of relativity, and has been well enough tested by observation for all professional cosmologists to accept it. According to general relativity, space-time is an aspect of the gravitational field. This field manifests itself as a warping or curvature of space-time, and when it comes to the large-scale structure of the universe, such warping occurs in the form of space being stretched with time.

A helpful, albeit two-dimensional, analogy for the expanding universe is a balloon with paper spots stuck to the surface. As the balloon is inflated, so the spots, which play the role of galaxies, move apart from each other. Note that it is the surface of the balloon, not the volume within, that represents the three-dimensional universe.

Now, imagine playing the cosmic movie backwards, so that the balloon shrinks rather than expands. If the balloon were perfectly spherical (and the rubber sheet infinitely thin), at a certain time in the past the entire balloon would shrivel to a speck. This is the beginning.

Translated into statements about the real universe, I am describing an origin in which space itself comes into existence at the big bang and expands from nothing to form a larger and larger volume. The matter and energy content of the universe likewise originates at or near the beginning, and populates the universe everywhere at all times. Again, I must stress that the speck from which space emerges is not located in anything. It is not an object surrounded by emptiness. It is the origin of space itself, infinitely compressed. Note that the speck does not sit there for an infinite duration. It appears instantaneously from nothing and immediately expands. This is why the question of why it does not collapse to a black hole is irrelevant. Indeed, according to the theory of relativity, there is no possibility of the speck existing through time because time itself begins at this point.

This is perhaps the most crucial and difficult aspect of the big bang theory. The notion that the physical universe came into existence with time and not in time has a long history, dating back to St Augustine in the 5th century. But it took Einstein’s theory of relativity to give the idea scientific respectability. The key feature of the theory of relativity is that space and time are part of the physical universe, and not merely an unexplained background arena in which the universe happens. Hence the origin of the physical universe must involve the origin of space and time too.

But where could we look for such an origin? Well, the theory of relativity permits space and time to possess a variety of boundaries or edges, technically known as singularities. One type of singularity exists in the centre of a black hole. Another corresponds to a past boundary of space and time at the big bang. The idea is that, as you move backwards in time, the universe becomes more and more compressed and the curvature or warping of space-time escalates without limit, until it becomes infinite at the singularity. Very roughly, it resembles the apex of a cone, where the fabric of the cone tapers to an infinitely sharp point and ceases. It is here that space and time begin.

Once this idea is accepted, it is immediately obvious that the question ‘What happened before the big bang?’ is meaningless. There was no such epoch as ‘before the big bang’, because time began with the big bang. Unfortunately, the question is often answered with the bald statement ‘There was nothing before the big bang’, and this has caused yet more misunderstandings. Many people interpret ‘nothing’ in this context to mean empty space, but as I have been at pains to point out, space simply did not exist prior to the big bang.

Perhaps ‘nothing’ here means something more subtle, like pre-space, or some abstract state from which space emerges? But again, this is not what is intended by the word. As Stephen Hawking has remarked, the question ‘What lies north of the North Pole?’ can also be answered by ‘nothing’, not because there is some mysterious Land of Nothing there, but because the region referred to simply does not exist. It is not merely physically, but also logically, non-existent. So too with the epoch before the big bang.

In my experience, people get very upset when told this. They think they have been tricked, verbally or logically. They suspect that scientists can’t explain the ultimate origin of the universe and are resorting to obscure and dubious concepts like the origin of time merely to befuddle their detractors. The mindset behind such outraged objection is understandable: our brains are hardwired for us to think in terms of cause and effect. Because normal physical causation takes place within time, with effect following cause, there is a natural tendency to envisage a chain of causation stretching back in time, either without any beginning, or else terminating in a metaphysical First Cause, or Uncaused Cause, or Prime Mover. But cosmologists now invite us to contemplate the origin of the universe as having no prior cause in the normal sense, not because it has an abnormal or supernatural prior cause, but because there is simply no prior epoch in which a preceding causative agency – natural or supernatural – can operate.

Nevertheless, cosmologists have not explained the origin of the universe by the simple expedient of abolishing any preceding epoch. After all, why should time and space have suddenly ‘switched on’? One line of reasoning is that this spontaneous origination of time and space is a natural consequence of quantum mechanics. Quantum mechanics is the branch of physics that applies to atoms and subatomic particles, and it is characterised by Werner Heisenberg’s uncertainty principle, according to which sudden and unpredictable fluctuations occur in all observable quantities. Quantum fluctuations are not caused by anything – they are genuinely spontaneous and intrinsic to nature at its deepest level.

For example, take a collection of uranium atoms undergoing radioactive decay due to quantum processes in their nuclei. There will be a definite time period, the half-life, after which half the nuclei should have decayed. But according to Heisenberg it is not possible, even in principle, to predict when a particular nucleus will decay. If you ask, having seen a particular nucleus decay, why the decay event happened at that moment rather than some other, there is no deeper reason, no underlying set of causes, that explains it. It just happens.
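The contrast between a predictable half-life and unpredictable individual decays can be illustrated with a short simulation. This is only a minimal sketch, not a physical model of uranium: the function name `simulate_decay`, the number of nuclei and the choice of ten time steps per half-life are all assumptions made for illustration. Each nucleus is given the same fixed chance of decaying in every step, with no memory and no underlying cause.

```python
import random

def simulate_decay(n_nuclei, half_life_steps, n_steps, seed=0):
    """Return the number of undecayed nuclei after each time step."""
    rng = random.Random(seed)
    # Per-step decay probability chosen so that the chance of surviving
    # one full half-life is exactly 1/2.
    p_decay = 1 - 0.5 ** (1 / half_life_steps)
    remaining = n_nuclei
    history = [remaining]
    for _ in range(n_steps):
        # Every surviving nucleus decays independently; nothing in the
        # model says which ones will go in this step.
        decayed = sum(1 for _ in range(remaining) if rng.random() < p_decay)
        remaining -= decayed
        history.append(remaining)
    return history

history = simulate_decay(n_nuclei=100_000, half_life_steps=10, n_steps=20)
print("fraction left after one half-life: ", history[10] / history[0])
print("fraction left after two half-lives:", history[20] / history[0])
```

Under these assumptions the surviving fraction should land close to one half and then one quarter, even though the fate of any single nucleus is left entirely to chance.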

The key step for cosmogenesis is to apply this same idea not just to matter, but to space and time as well. Because space-time is an aspect of gravitation, this entails applying quantum theory to the gravitational field of the universe. The application of quantum mechanics to a field is fairly routine for physicists, though it is true that there are special technical problems associated with the gravitational case that have yet to be resolved. The quantum theory of the origin of the universe therefore rests on shaky ground.

In spite of these technical obstacles, one may say quite generally that once space and time are made subject to quantum principles, the possibility immediately arises of space and time ‘switching on’, or popping into existence, without the need for prior causation, entirely in accordance with the laws of quantum physics.

If a big bang is permitted by the laws of physics to happen once, such an event should be able to happen more than once. In recent years a growing posse of cosmologists has proposed models of the universe involving many big bangs, perhaps even an infinite number of them. In the model known as eternal inflation there is no ultimate origin of the entire system, although individual ‘pocket universes’ within the total assemblage still have a distinct origin. The region we have been calling ‘the universe’ is viewed as but one ‘bubble’ of space within an infinite system of bubbles. In what follows I shall ignore this popular elaboration and confine my discussion to the simple case where only one bubble of space – a single universe – pops into existence.

Even in this simple case, the details of the cosmic birth remain subtle and contentious, and depend to some extent on the interrelationship between space and time. Einstein showed that space and time are closely interwoven, but in the theory of relativity they are still distinct. Quantum physics introduces the new feature that the separate identities of space and time can be ‘smeared’ or ‘blurred’ on an ultra-microscopic scale. In a theory proposed in 1982 by Hawking and American physicist Jim Hartle, this smearing implies that, closer and closer to the origin, time is more and more likely to adopt the properties of a space dimension, and less and less likely to have the properties of time. This transition is not sudden, but blurred by the uncertainty of quantum physics. Thus, in Hartle and Hawking’s theory, time does not switch on abruptly, but emerges continuously from space. There is no specific first moment at which time starts, but neither does time extend backwards for all eternity.

Unfortunately, the topic of the quantum origin of the universe is fraught with confusion because of the publicity given to a preliminary, and in my view wholly unsatisfactory, theory of the big bang based on an instability of the quantum vacuum. According to this alternative theory, first mooted by Edward Tryon in 1973, space and time are eternal, but matter is not. It suddenly appears in a pre-existing and unexplained void due to quantum vacuum fluctuations. In such a theory, it would indeed involve a serious misnomer to claim that the universe originated from nothing: a quantum vacuum in a background space-time is certainly not nothing!

However, if there is a finite probability of an explosive appearance of matter, it should have occurred an infinite time ago. In effect, Tryon’s theory and others like it run into the same problem of the second law of thermodynamics as most models of an infinitely old universe.

Of course, this attempt to explain the origin of the universe is based on an application of the laws of physics. This is normal in science: one takes the underlying laws of the universe as given. But when tangling with ultimate questions, it is only natural that we should also ask about the status of these laws. One must resist the temptation to imagine that the laws of physics, and the quantum state that represents the universe, somehow exist before the universe. They don’t – any more than they exist north of the North Pole. In fact, the laws of physics don’t exist in space and time at all. They describe the world, they are not ‘in’ it. However, this does not mean that the laws of physics came into existence with the universe. If they did – if the entire package of physical universe plus laws just popped into being from nothing – then we cannot appeal to the laws to explain the origin of the universe. So to have any chance of understanding scientifically how the universe came into existence, we have to assume that the laws have an abstract, eternal character. The alternative is to shroud the origin in mystery and give up.

It might be objected that we haven’t finished the job by baldly taking the laws of physics as given. Where did those laws come from? And why those laws rather than some other set? This is a valid objection. I have argued that we must eschew the traditional causal chain and focus instead on an explanatory chain, but inevitably we now confront the logical equivalent of the First Cause – the beginning of the chain of explanation.

In my view it is the job of physics to explain the world based on lawlike principles. Scientists adopt differing attitudes to the metaphysical problem of how to explain the principles themselves. Some simply shrug and say we must just accept the laws as a brute fact. Others suggest that the laws must be what they are from logical necessity. Yet others propose that there exist many worlds, each with differing laws, and that only a small subset of these universes possess the rather special laws needed if life and reflective beings like ourselves are to emerge. Some sceptics rubbish the entire discussion by claiming that the laws of physics have no real existence anyway – they are merely human inventions designed to help us make sense of the physical world. It is hard to see how the origin of the universe could ever be explained with a view like this.

In my experience, almost all physicists who work on fundamental problems accept that the laws of physics have some kind of independent reality. With that view, it is possible to argue that the laws of physics are logically prior to the universe they describe. That is, the laws of physics stand at the base of a rational explanatory chain, in the same way that the axioms of Euclid stand at the base of the logical scheme we call geometry. Of course, one cannot prove that the laws of physics have to be the starting point of an explanatory scheme, but any attempt to explain the world rationally has to have some starting point, and for most scientists the laws of physics seem a very satisfactory one. In the same way, one need not accept Euclid’s axioms as the starting point of geometry; a set of theorems like Pythagoras’s would do equally well. But the purpose of science (and mathematics) is to explain the world in as simple and economic a fashion as possible, and Euclid’s axioms and the laws of physics are attempts to do just that.

In fact, it is possible to quantify the degree of compactness and utility of these explanatory schemes using a branch of mathematics called algorithmic information theory. Obviously, a law of physics is a more compact description of the world than the phenomena that it describes. For example, compare the succinctness of Newton’s laws with the complexity of a set of astronomical tables for the positions of the planets. Although, as a consequence of Kurt Gödel’s famous incompleteness theorem of logic, one cannot prove a given set of laws, or mathematical axioms, to be the most compact set possible, one can investigate mathematically whether other logically self-consistent sets of laws exist. One can also determine whether there is anything unusual or special about the set that characterises the observed universe as opposed to other possible universes. Perhaps the observed laws are in some sense an optimal set, producing maximal richness and diversity of physical forms. It may even be that the existence of life or mind relates in some way to this specialness. These are open questions, but I believe they form a more fruitful meeting ground for science and theology than dwelling on the discredited notion of what happened before the big bang.

To continue reading about cosmology, go to ‘Pathways to cosmic oblivion’ on page 216.

The placebo effect has been called ‘the power of nothing’. It exerts a healing influence mediated by the mind. But if you think you know how it works, you may be in for a surprise: recent studies have revealed a deeply mysterious effect that threatens the credibility of modern medicine. Michael Brooks finds out why.

It seemed like a good idea until I saw the electrodes. Dr Luana Colloca’s white coat offered scant reassurance. ‘Do you mind receiving a series of electric shocks?’ she asked.

I could hardly say no – after all, this was why I was here. Colloca’s mentor, Fabrizio Benedetti of the University of Turin, had invited me to come and experience their placebo research first hand. Colloca strapped an electrode to my forearm and sat me in a reclining chair in front of a computer screen. ‘Try to relax,’ she said.

First, we established my pain scale by determining the mildest current I could feel, and the maximum amount I could bear. Then Colloca told me that, before I got another shock, a red or a green light would appear on the computer screen.

A green light meant I would receive a mild shock. A red light meant the shock would be more severe, like the jolt you get from an electric fence. All I had to do was rate the pain on a scale of 1 to 10, mild to severe.

After 15 minutes – and what seemed like hundreds of shocks – the experiment ended with a series of mild shocks. Or so I thought, until Colloca told me that these last shocks were in fact all severe.

I had felt the ‘electric fence’ jolts as a series of gentle taps on the arm because my brain had been conditioned to anticipate low pain whenever it saw a green light – an example of the placebo effect.

Benedetti watched the procedure with a smile on his face. He was not sure his team could induce a placebo response in me if I knew I was about to be deceived. As it turns out, I succumbed, hook, line and sinker.

Such is the power of placebo. This was once thought to be a simple affair, involving little more than the power of positive thinking. Make people believe they are receiving good medical care – with anything from a sugar pill to a kindly manner – and in many cases they begin to feel better without any further medical intervention.

However, Benedetti and others are now claiming that the true nature of placebo is far more complex. The placebo effect, it turns out, can lead us on a merry dance. Drug trials, Benedetti says, are particularly problematic. ‘An ineffective drug can be better than a placebo in a standard trial,’ says Benedetti.

The opposite can also be true, as Ted Kaptchuk of Harvard Medical School in Boston points out. ‘Often, an active drug is not better than placebo in a standard trial, even when we can be confident that the active drug does work,’ he says.

Some researchers are so taken aback by the results of their studies that they are calling for the very term ‘placebo’ to be scrapped. Others suggest the latest findings undermine the very foundations of evidence-based medicine. ‘Placebo is ruining the credibility of medicine,’ Benedetti says.

How did it come to this? After all, the foundation of evidence-based medicine, the clinical trial, is meant to rule out the placebo effect.

If you’re testing a drug such as a new painkiller, it’s supposed to work like this. First, you recruit the test subjects. Then you assign each person to one of two groups randomly, to ensure there is no bias in the way members of the groups are chosen. One group gets the painkiller, the other gets a dummy treatment. Then, you might think, all you have to do is compare the two groups.

It’s not that simple, though, because this is where the placebo problem kicks in. If people getting an experimental painkiller expect it to work, it will work to some extent – just as seeing a green light reduced the pain I felt when shocked. If those in the control group know they’re getting a dummy pill whereas those in the other group know they’re getting the ‘real’ drug, the experimental painkiller might appear to work better than the dummy when in fact the difference between the groups is entirely due to the placebo effect.

So it’s crucial not to tell the subjects what they are getting. Those running the trial should not know either, so they cannot give anything away, creating the gold standard of clinical trials, the double-blind randomised controlled trial. This does not eliminate the placebo effect, but should make it equal in both groups. According to conventional wisdom, in a double-blind trial any ‘extra’ effect in the group given the real drug must be entirely down to the drug’s physical effect.

Benedetti, however, has shown this is not necessarily true. His early work in this area involved an existing painkiller called a CCK-antagonist. First, he performed a standard double-blind randomised controlled trial. As you would expect, the CCK-antagonist performed better than the placebo. Standard interpretation: the CCK-antagonist is an effective painkiller.

Now comes the mind-boggling part. When Benedetti gave the same drug to volunteers without telling them what he was doing, it had no effect.1 ‘If it were a real painkiller, we should expect no difference compared to the routine overt administration,’ he says. ‘What we found is that the covert CCK-antagonist was completely ineffective in relieving pain.’

Benedetti’s team has since shown that the combination of a patient’s expectation plus the administration of the CCK-antagonist stimulates the production of natural painkilling endorphins. It has been known since 1978 that the placebo effect alone can relieve pain in this way. What Benedetti has uncovered, however, is a far more complex interaction between a drug and the placebo effect. His work suggests that the CCK-antagonist is not actually a painkiller in the conventional sense, but more of a ‘placebo amplifier’ – and the same might be true of many other drugs.

‘We can never be sure about the real action of a drug,’ says Benedetti. ‘The very act of administering a drug activates a complex cascade of biochemical events in the patient’s brain.’ A drug may interact with these expectation-activated molecules, confounding the interpretation of results.

This could be true of some rather famous – and profitable – substances. Benedetti has found that diazepam, for instance, doesn’t reduce anxiety in patients after an operation unless they know they are taking it. The placebo effect is required in order for it to be effective. It’s not yet clear if this is also true of diazepam’s other effects.

Even with drugs that do have direct effects independently of patients’ expectations, the strength of these effects can be influenced by expectation. If you don’t tell people that they are getting an injection of morphine, you have to inject at least 12 milligrams to get a painkilling effect, whereas if you tell them, far lower doses can make a difference.

Such findings suggest that we need to change the way trials are done, Benedetti says. He thinks this is true of all placebo-controlled trials, not just those involving conditions in which placebos can have a strong effect, such as pain.

The alternatives include Benedetti’s hidden treatment approach, where participants are not always told when they are getting a drug, and the ‘balanced placebo design’, in which you tell some people they got the drug when they actually got the placebo and vice versa.
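One way to see what a balanced placebo design can separate is to lay out its groups as a two-by-two table: what participants were told against what they actually received. The sketch below assumes the usual four-group form of the design and uses invented pain-relief scores; none of the numbers come from Benedetti's or anyone else's data, and the names `cells` and `main_effects` are purely illustrative.

```python
# Hypothetical mean pain-relief scores for each group.
# Keys are (what participants were told, what they actually received).
cells = {
    ("told drug", "got drug"): 5.0,
    ("told drug", "got placebo"): 3.0,
    ("told placebo", "got drug"): 2.0,
    ("told placebo", "got placebo"): 0.0,
}

def main_effects(cells):
    """Average effect of the substance and of the expectation."""
    # Effect of the substance: drug minus placebo, averaged over both
    # things participants could have been told.
    drug = (
        (cells[("told drug", "got drug")] - cells[("told drug", "got placebo")])
        + (cells[("told placebo", "got drug")] - cells[("told placebo", "got placebo")])
    ) / 2
    # Effect of expectation: "told drug" minus "told placebo", averaged
    # over both things participants could actually have received.
    expectation = (
        (cells[("told drug", "got drug")] - cells[("told placebo", "got drug")])
        + (cells[("told drug", "got placebo")] - cells[("told placebo", "got placebo")])
    ) / 2
    return drug, expectation

drug_effect, expectation_effect = main_effects(cells)
print("drug effect:", drug_effect)                # 2.0 with these invented scores
print("expectation effect:", expectation_effect)  # 3.0 with these invented scores
```

A standard double-blind trial only ever compares two of these four groups, which is why it cannot pull the two contributions apart in this way.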

These approaches are a great way of teasing apart true drug effects from placebo, says Franklin Miller of the US National Institutes of Health. The problem is the degree of deception involved. ‘There is no way we’re going to be able to do clinical trials that involve deceiving patients about what they are getting. I don’t see that as a useable method,’ he says.

Colloca disagrees. With hidden treatments, a patient might not know when the drug infusion starts and ends, but they know that a drug will be given. Therefore, she argues, there is full informed consent.

For Kaptchuk, the issue is not just teasing apart drug effects from placebo; it’s the very notion that only treatments that are better than placebo have any value. ‘It’s never enough to just test against placebo,’ he says.

In a study published in 2008, his team compared three ‘treatments’ for irritable bowel syndrome.2 One group got sham acupuncture and lots of attention. The second group also got sham acupuncture, but no attention. A third group of patients just got left on a ‘waiting list’.

Patients in both sham acupuncture groups did better than those kept on the fake waiting list. However, the group who had felt listened to and consulted about their symptoms, feelings and treatments reported an improvement that was equivalent to the ‘positive’ trial results for drugs commonly used to treat irritable bowel syndrome – drugs that are supposed to have been proved better than placebo. Does this finding mean the drugs should not have been approved, even though patients are better off with either drugs or placebo than no treatment at all?

This study shows how a placebo can be boosted by combining factors that contribute to its effect. And all sorts of factors can be involved. Even word of mouth can help, Colloca says, such as learning that a treatment has worked for others.

Conditioning through repetition, as in the process I went through, is another important factor. ‘Many trials use the repeated administration of drugs, thus triggering learning mechanisms that lead to increased placebo responsiveness,’ Benedetti says.

This is yet another reason to change clinical trials, he argues. It could explain, for instance, why the placebo effect appears to be growing stronger in clinical trials, causing problems for drug companies attempting to prove their products are better than placebo.

The issue isn’t just about disentangling the effect of a placebo from that of a drug. It’s also about harnessing its power. For instance, Colloca thinks the conditioning effect could be exploited to reduce doses of painkilling drugs with potentially dangerous side effects.3

The problem with trying to exploit the placebo effect, says Miller, is that the term means very different things to different people. Many doctors don’t believe placebo has any effect other than to placate those demanding some kind of treatment. ‘People say it’s noise, or nothing, or something just to please the patient,’ Miller says.

Those involved with clinical trials, by contrast, tend to overestimate the power of placebo. Consider the way trials are carried out. If the people in the control group – the ones who receive the placebo – get better, it’s almost always attributed to the placebo effect. But in fact, there are many other reasons why those in the control group can improve. Many conditions get better all by themselves given enough time, for instance. To distinguish between the apparent effect of a placebo and its actual effect, you have to compare a placebo treatment with no treatment at all, as in the irritable bowel study.
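The difference between the apparent and the actual effect of a placebo can be sketched with a toy simulation. Everything here is an assumption for illustration: the function `simulate_arm`, the idea that improvement is simply natural recovery plus a placebo contribution plus random noise, and the particular values chosen for each.

```python
import random
from statistics import mean

def simulate_arm(n, natural, placebo=0.0, noise=1.0, seed=0):
    """Improvement scores for n patients in one arm of a hypothetical trial."""
    rng = random.Random(seed)
    return [natural + placebo + rng.gauss(0, noise) for _ in range(n)]

NATURAL_RECOVERY = 2.0  # assumed improvement with no treatment at all
PLACEBO_EFFECT = 1.0    # assumed extra improvement caused by the placebo

no_treatment_arm = simulate_arm(5000, NATURAL_RECOVERY, seed=1)
placebo_arm = simulate_arm(5000, NATURAL_RECOVERY, placebo=PLACEBO_EFFECT, seed=2)

# Looking at the placebo arm alone credits the placebo with everything,
# including recovery that would have happened anyway.
apparent_effect = mean(placebo_arm)
# Comparing against a no-treatment arm removes the natural recovery.
actual_effect = mean(placebo_arm) - mean(no_treatment_arm)

print("apparent placebo effect:", round(apparent_effect, 2))
print("actual placebo effect:  ", round(actual_effect, 2))
```

With these assumed values the apparent effect comes out at roughly three times the actual one, purely because natural recovery has been counted as placebo.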

In an article published in 2008, Miller and Kaptchuk argue that the very notion of placebo has become so laden with baggage that it should be ditched.4 Instead, they suggest that doctors and researchers should think in terms of ‘contextual healing’ – the aspect of healing that is produced, activated or enhanced by the context of the clinical encounter, rather than by the specific treatment given.

Whatever you call it, trying to harness the placebo effect raises tricky ethical issues: can doctors exploit it without lying to patients? Maybe. If my shocking experience is anything to go by, knowing you are getting a placebo does not necessarily stop it working.

‘It’s a complicated issue, but one that deserves a lot more attention,’ Miller says. ‘Finding ethically appropriate ways to tap the use of placebo in clinical practice is where the field needs to be moving.’

Doctors, however, are not hanging around waiting for the results of rigorous studies that show whether or not placebos can be used effectively and ethically for specific conditions. Surveys suggest around half of doctors regularly prescribe a placebo and that a substantial minority do so not just to get patients out of the consulting room but because they believe placebos produce objective benefits.

Are they doing their patients a disservice? In 2001, Asbjørn Hróbjartsson of the Nordic Cochrane Centre in Copenhagen did a meta-analysis of 130 clinical trials that compared the placebo group with a no-treatment group, to reveal the ‘true’ placebo effect. The studies involved around 7,500 patients suffering from about 40 different conditions ranging from alcohol dependence to Parkinson’s disease.

The meta-analysis concluded that, overall, placebos have no significant effects. Two years later the team published a follow-up study with data from 11,737 patients. ‘The results are similar again,’ Hróbjartsson says. Placebos are overrated and largely ineffective, he concludes, and doctors should stop using them. A third review of 202 trials delivers the same message.5

However, if you consider only studies whose outcomes are measured by patients’ reports, such as how much pain they feel, placebos do appear to have a small but significant effect, he says. In other words, the placebo effect can make you feel better – even if you aren’t actually better.

Does this mean it’s not a real effect? Was I deluded when I reported feeling severe shocks as mild ones? ‘What does “real” mean in this situation?’ responds Hróbjartsson. ‘My concern is not so much whether effects of placebo are real or not, but whether there is evidence for clinically relevant effects.’

Giving patients plenty of TLC is where placebo intervention should end, he thinks. ‘Most of us working in the field think that’s just another way of saying “Be a good doctor.”’

Colloca and Benedetti think there is scope for doing much better than that. ‘We already know that placebos don’t work everywhere, therefore the small magnitude of the placebo effect in that meta-analysis is not surprising at all,’ Benedetti says. ‘It is as if you wanted to test the effects of morphine in gut disorders, pain, heart diseases, marital discord, depression and such like. If you average the effects of morphine across all these conditions, the outcome would be that overall morphine is ineffective.’
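Benedetti’s averaging argument can be made concrete with a toy calculation. The sketch below is purely illustrative: the condition names echo those mentioned in the text, but every effect size is invented, and the unweighted average is a simplification (real meta-analyses weight each trial, typically by its size or precision). It shows only that a sizeable effect in a couple of conditions shrinks towards nothing once it is pooled with many conditions in which there is no effect at all.

```python
# Illustrative only: these effect sizes are invented for the sake of the
# argument and are NOT taken from any trial or meta-analysis.
from statistics import mean

# Hypothetical placebo effect (in arbitrary standardised units) per condition.
hypothetical_effects = {
    "pain": 0.5,
    "Parkinson's disease": 0.4,
    "gut disorders": 0.0,
    "heart disease": 0.0,
    "marital discord": 0.0,
    "depression": 0.0,
    "alcohol dependence": 0.0,
    "infection": 0.0,
}


def pooled_effect(effects):
    """Unweighted average effect across all conditions (a simplification)."""
    return mean(effects.values())


def responsive_conditions(effects, threshold=0.2):
    """Names of conditions whose own effect is at least `threshold`, sorted."""
    return sorted(name for name, size in effects.items() if size >= threshold)


overall = pooled_effect(hypothetical_effects)
print(f"pooled effect across all conditions: {overall:.2f}")
print("conditions with a sizeable effect:", responsive_conditions(hypothetical_effects))
```

With these made-up numbers the pooled figure is far smaller than the effect in either responsive condition, which is the shape of Benedetti’s objection; it says nothing about which conditions actually respond in practice.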

The other reason not to take the meta-analyses too seriously is the evidence that placebo can have measurable biochemical effects. The release of painkilling endorphins, for instance, has been confirmed by showing that drugs which block endorphins also block the placebo effect on pain, and by brain scans that ‘light up’ endorphins. Placebos have also been shown to trigger the release of dopamine in people with Parkinson’s disease. In 2004, Benedetti demonstrated that, after conditioning, individual neurons in the brains of Parkinson’s patients respond to a salt solution in the same way as they do to a genuine drug designed to relieve tremors.

When it comes to the placebo effect, it seems, nothing is simple. We still have a lot to learn about this elusive phenomenon.

For more on the power of nothing, go to ‘When mind attacks body’ on page 133.

What have the appendix, wisdom teeth and the coccyx got in common? They all do nothing except occasionally cause pain, right? So why have we got them? One suggestion is that they are remnants of organs that served us well in our evolutionary past. It’s a neat idea, but evidence to support it is not always easy to find, as Laura Spinney discovers.

Vestigial organs have long been a source of perplexity and irritation for doctors and of fascination for the rest of us. In 1893, a German anatomist named Robert Wiedersheim drew up a list of 86 human ‘vestiges’, organs ‘formerly of greater physiological significance than at present’. Over the years, the list grew, then shrank again. Today, no one can remember the score. It has even been suggested that the term is obsolete, useful only as a reflection of the anatomical knowledge of the day. In fact, these days many biologists are extremely wary of talking about vestigial organs at all.

This may be because the subject has become a battlefield for those who refuse to accept that evolution exists: the creationist and intelligent design lobbies. They argue that none of the items on Wiedersheim’s original list are now considered vestigial, so there is no need to invoke evolution to explain how they lost their original functions. While they are right to question the status of some organs that were formerly considered vestiges, denying the concept altogether flies in the face of the biological facts. While most biologists prefer to steer clear of what they see as a political debate, Gerd Müller, a theoretical biologist from the University of Vienna, is fighting to bring the concept back into the scientific arena. ‘Vestigiality is an important biological phenomenon,’ he says.

Part of the problem, says Müller, is semantic: people have come to think of vestigial organs as useless, which is not what Wiedersheim said. In an attempt to clear up the confusion, Müller has come up with a more explicit definition: vestigial structures are largely or entirely functionless as far as their original roles are concerned – though they may retain lesser functions or develop minor new ones. Müller points out that it is useful to know if a given structure is vestigial, both for taxonomic purposes – understanding how different species are related to one another – and for medical reasons, as in the case of an organ that has no obvious use in adults but turns out to be crucial in development.

Nobody doubts that some human structures that were once considered vestigial have proved to be far from redundant in the light of growing medical knowledge. For example, Wiedersheim’s original list included such eminently useful structures as the three smallest toes and the valves in veins that prevent blood flowing backwards. It also contained several organs we now know to be part of the immune system, such as the adenoids and tonsils, lymphatic tissues that produce antibodies, and the thymus gland in the upper chest, which is important for the production and maturation of T-lymphocytes.

Some of Wiedersheim’s vestiges have since been identified as hormone-secreting glands – notably the pituitary at the base of the brain, which regulates homeostasis, and the pineal located deep in the brain, which secretes the hormone melatonin. Melatonin is best known for synchronising the activity of our internal organs, including the reproductive organs, with the diurnal and seasonal cycles, but it is also a potent antioxidant that protects the brain and other tissues from damage, so slowing down the ageing process.

Then there is the male nipple. The most showily useless of all human structures would seem to be a dead cert for continued inclusion on Wiedersheim’s list. However, evolutionary biologist Andrew Simons of Carleton University in Ottawa says any claim that it is vestigial is bogus. To be vestigial, an organ or something from which it is derived must have had a function in the first place. ‘There is no reason to believe that male nipples ever served any function,’ says Simons. Instead, they persist because all human foetuses share the same basic genetic blueprint and males retain a feature that is useful in females because there is no adaptive cost in doing so.

Natural selection shapes living organisms to survive, Müller points out. Once their survival is ensured, organisms may very well retain non-adaptive or non-functional features, provided they are not a burden. This is one reason why we shouldn’t expect anatomical structures to be perfectly adapted to their function (or lack of one), says Simons. It also complicates the identification of truly vestigial structures.

Another problem arises when trying to show that a modern structure has lost function with respect to its ancestral form. Take the appendix, a small worm-like pouch that protrudes from the cecum of the large intestine. It was long thought to be a shrivelled-up remnant of some larger digestive organ – primarily because it is a lot less prominent than its counterpart in rabbits, with which it had previously been compared. In 1980, G. B. D. Scott of London’s Royal Free Hospital put that assumption to the test. He compared the appendix in different primate species and found the human appendix to be among the largest and most structurally distinct from the cecum. ‘It develops progressively in the higher primates to culminate in the fully developed organ seen in the gorilla and man,’ he wrote.

Scott concluded that the appendix is far from functionless in apes and humans. Recent evidence from another quarter seems to support his finding. A study by R. Randal Bollinger and colleagues at Duke University School of Medicine at Durham, North Carolina, found that the human appendix acts as a ‘safe house’ for helpful, commensal bacteria, providing them with a place to grow and, if necessary, enabling them to re-inoculate the gut should it lose its normal microbial inhabitants – for example, as a result of illness.1 Although people who have had their appendix removed seem to suffer no ill effects, team member Bill Parker points out that the operation is mainly performed in the developed world. ‘If you lived in a traditional culture, any time before 1800, or in a developing country where they don’t have sewer systems, you are going to need your appendix,’ he says. Parker suspects that far from being vestigial, the specialised appendix evolved out of a cecum that had the more general twin functions of housing good bacteria and aiding digestion.

That would explain Scott’s finding. However, there is an alternative explanation that allows for the possibility that the appendix is a vestigial organ. In 1998, evolutionary theorists Randolph Nesse of the University of Michigan, Ann Arbor, and the late George Williams of the State University of New York at Stony Brook argued that while you might expect natural selection to eliminate the annoying human appendix if it could, we might paradoxically be stuck with it.2 They pointed out that a smaller, thinner appendix would be more likely to become blocked by inflammation and inaccessible to a cleansing blood supply, increasing the risk of life-threatening infections. Their conclusion was that ‘larger appendixes are thus actually selected for,’ even though they may no longer have a role.

The jury is still out on the human appendix, but examples from other animals leave no doubt that vestigiality is a real phenomenon. Look no further than the wings on flightless birds for an unequivocal example of a vestige, says palaeontologist Gareth Dyke of University College Dublin. The loss of flight in large, ground-living birds happened relatively recently – within the past 50 million years – and usually as a result of the birds being restricted to an island, or because of the loss of terrestrial predators. The ostrich is an extreme case, because its wings have already lost some of the bones that were present in its airborne ancestors. ‘The feathers too are modified,’ Dyke says. ‘They are not flight feathers any more. There’s no structure to them. They are just really fluffy, downy feathers.’

So, with the benefit of modern scientific knowledge, what are the most convincing examples of vestigial structures in humans? The New Scientist top-five list runs as follows: the vomeronasal organ, goose bumps, Darwin’s point, the tail bone and wisdom teeth (see ‘Top five human vestiges’ below). There are undoubtedly more: it depends how wide you cast your net, says Müller. Some blood vessels have become reduced in size and function over time, and thinking smaller still, there must be chemical messengers and genes that qualify.

As the late evolutionary biologist Stephen Jay Gould pointed out, nobody ever said evolution was perfect. The existence of something as spectacularly de trop as the ostrich wing is only a problem for those who believe in an intelligent designer. On the other hand, the list of vestigial organs should still be considered a work in progress. Anything that appears to be entirely without function is suspicious, says Müller, and probably just waiting to be assigned one. Whether we are talking about useless vestiges or anatomical structures that have taken on a new lease of life, however, it is hard to ignore the evidence that human beings are walking records of their evolutionary past.

Many animals secrete chemical signals called pheromones that carry information about their gender or reproductive state, and influence the behaviour of others. In rodents and other mammals, pheromones are detected by a specialised sensory system, the vomeronasal organ (VNO), which consists of a pair of structures that nestle in the nasal lining or the roof of the mouth. Although most adult humans have something resembling a VNO in their nose, neuroscientist Michael Meredith of Florida State University in Tallahassee has no hesitation in dismissing it as a remnant.

‘If you look at the anatomy of the structure, you don’t see any cells that look like the sensory cells in other mammalian VNOs,’ he says. ‘You don’t see any nerve fibres connecting the organ to the brain.’ He also points to genetic evidence that the human VNO is non-functional. Virtually all the genes that encode its cell-surface receptors – the molecules that bind incoming chemical signals, triggering an electrical response in the cell – are pseudo-genes, and inactive.

So what about the puzzling evidence that humans respond to some pheromones? The late Larry Katz and a team at Duke University, North Carolina, found that as well as the VNO, the main olfactory system in mice also responds to pheromones. If that is the case in humans too then it is possible that we may still secrete pheromones to influence the behaviour of others without using a VNO to detect them.

Though goose bumps are a reflex rather than a permanent anatomical structure, they are widely considered to be vestigial in humans. The pilomotor reflex, to give it one of its technical names, occurs when the tiny muscle at the base of a hair follicle contracts, pulling the hair upright. In birds or mammals with feathers, fur or spines, this creates a layer of insulating warm air in a cold snap, or a reason for a predator to think twice before attacking. But human hair is so puny that it is incapable of either of these functions.

Goose bumps in humans may, however, have taken on a minor new role. Like flushing, another thermoregulatory mechanism, they have become linked with emotional responses – notably fear, rage or the pleasure, say, of listening to beautiful music. This could serve as a signal to others. It may also heighten emotional reactions: there is some evidence, for instance, that a music-induced frisson causes changes of activity in the brain that are associated with pleasure.

Around the sixth week of gestation, six swellings of tissue called the hillocks of His arise around the area that will form the ear canal. These eventually coalesce to form the outer ear. Darwin’s point, or tubercle, is a minor malformation of the junction of the fourth and fifth hillocks of His. It is found in a substantial minority of people and takes the form of a cartilaginous node or bump on the rim of their outer ear, which is thought to be the vestige of a joint that allowed the top part of the ancestral ear to swivel or flop down over the opening to the ear.

Technically considered a congenital defect, Darwin’s point does no harm and is surgically removed for cosmetic reasons only. However, the genetics behind it tell an interesting tale, says plastic surgeon Anthony Sclafani of the New York Eye and Ear Infirmary. The trait is passed on according to an autosomal dominant pattern, meaning that a child need only inherit one copy of the gene responsible to have Darwin’s point. That suggests that at one time it was useful. However, it also has variable penetration, meaning that you won’t necessarily have the trait even if you inherit the gene. ‘The variable penetration reflects the fact that it is no longer advantageous,’ Sclafani says.

A structure that is the object of reduced evolutionary pressure can, within limits, take on different forms. As a result, one of the telltale signs of a vestige is variability. A good example is the human coccyx, a vestige of the mammalian tail, which has taken on a modified function, notably as an anchor point for the muscles that hold the anus in place. The human coccyx is normally composed of four rudimentary vertebrae fused into a single bone. ‘But it’s amazing how much variability there is at this spot,’ says Patrick Foye, director of the Coccyx Pain Center at New Jersey Medical School in Newark. Whereas babies born with six fingers or toes are rare, he says, the coccyx can and often does consist of anything from three to five bony segments. What’s more, there are more than 100 medical reports of babies born with tails. This atavism arises if the signal that normally stops the process of vertebrate elongation during embryonic development fails to activate on time.

Most primates have wisdom teeth (the third molars), but a few species, including marmosets and tamarins, have none. ‘These are probably evolutionary dwarfs,’ says anthropologist Peter Lucas of George Washington University, Washington DC. He suggests that when the body size of mammals reduces rapidly, their jaws become too small to house all their teeth, and overcrowding eventually results in selection for fewer or smaller teeth.3 This seems to be happening in Homo sapiens.

Robert Corruccini of Southern Illinois University in Carbondale says the problem of overcrowding has been exacerbated in humans in the past four centuries as our diet has become softer and more processed. With less wear on molars, jaw space is at an even higher premium, ‘so the third molars, the last teeth to erupt, run out of space to erupt’, he says. Not only are impacted wisdom teeth becoming more common, perhaps as many as 35 per cent of people have no wisdom teeth at all, suggesting that we may be on an evolutionary trajectory to losing them altogether.

If you want to read about other things that do nothing, go to ‘Busy doing nothing’ on page 93.

Anaesthetics are incredibly valuable drugs. After you’ve received one, you feel absolutely nothing, which is why they are so useful in surgery. They also show great promise in helping to answer one of humanity’s biggest questions – what is consciousness? Linda Geddes finds out more, some of it from personal experience.

I walk into the operating theatre feeling vulnerable in a draughty gown and surgical stockings. Two anaesthetists in green scrubs tell me to stash my belongings under the trolley and lie down. ‘Can we get you something to drink from the bar?’ they joke, as one deftly slides a needle into my left hand.

I smile weakly and ask for a gin and tonic. None appears, of course, but I begin to feel light-headed, as if I really had just knocked back a stiff drink. I glance at the clock, which reads 10.10 am, and notice my hand is feeling cold. Then, nothing.

I have had two operations under general anaesthetic in 12 months. On both occasions I awoke with no memory of what had passed between the feeling of mild wooziness and waking up in a different room. Both times I was told that the anaesthetic would make me feel drowsy, I would go to sleep, and when I woke up it would all be over.

What they didn’t tell me was how the drugs would send me into the realms of oblivion. They couldn’t. The truth is, no one knows.

The development of general anaesthesia has transformed surgery from a horrific ordeal into a gentle slumber. It is one of the commonest medical procedures in the world, yet we still don’t know how the drugs work. Perhaps this isn’t surprising: we still don’t understand consciousness, so how can we comprehend its disappearance?

That is starting to change, however, with the development of new techniques for imaging the brain or recording its electrical activity during anaesthesia. ‘In the past five years there has been an explosion of studies, both in terms of consciousness, but also of how anaesthetics might interrupt consciousness and what they teach us about it,’ says George Mashour, an anaesthetist at the University of Michigan in Ann Arbor. ‘We’re at the dawn of a golden era.’

Consciousness has long been one of the great mysteries of life, the universe and everything. It is something experienced by every one of us, yet we cannot even agree on how to define it. How does the small sac of jelly that is our brain take raw data about the world and transform it into the wondrous sensation of being alive? Even our increasingly sophisticated technology for peering inside the brain has, disappointingly, failed to reveal a structure that could be the seat of consciousness.

Altered consciousness doesn’t only happen under a general anaesthetic of course – it occurs whenever we drop off to sleep, or if we are unlucky enough to be whacked on the head. But anaesthetics do allow neuroscientists to manipulate our consciousness safely, reversibly and with exquisite precision.

It was a Japanese surgeon who performed the first known surgery under anaesthetic, in 1804, using a mixture of potent herbs. In the west, the first operation under general anaesthetic took place at Massachusetts General Hospital in 1846. A flask of sulphuric ether was held close to the patient’s face until he fell unconscious.

Since then, a slew of chemicals have been co-opted to serve as anaesthetics, some inhaled, like ether, and some injected. The people who gained expertise in administering these agents developed into their own medical specialty. Although long overshadowed by the surgeons who patch you up, the humble ‘gas man’ does just as important a job, holding you in the twilight between life and death.

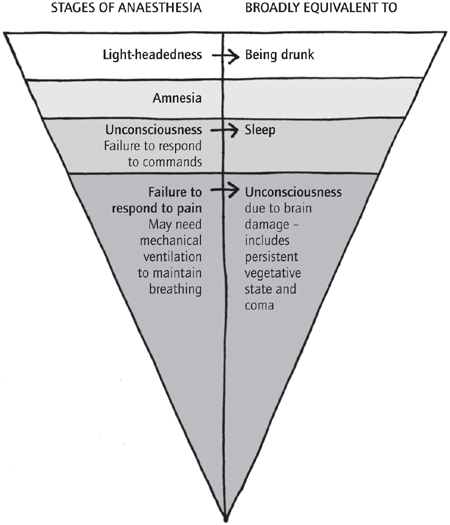

Consciousness may often be thought of as an all-or-nothing quality – either you’re awake or you’re not – but as I experienced, there are different levels of anaesthesia (see figure below). ‘The process of going into and out of general anaesthesia isn’t like flipping a light switch,’ says Mashour. ‘It’s more akin to a dimmer switch.’

A typical subject first experiences a state similar to drunkenness, which they may or may not be able to recall later, before falling unconscious, which is usually defined as failing to move in response to commands. As they progress deeper into the twilight zone, they fail to respond to even the penetration of a scalpel – which is the point of the exercise, after all – and at the deepest levels may need artificial help with breathing.

You are feeling sleepy

Losing consciousness under anaesthesia is like turning down a dimmer switch

These days anaesthesia is usually started off with an injection of a drug called propofol, which gives a rapid and smooth transition to unconsciousness, as happened with me. (This is also what Michael Jackson was allegedly using as a sleeping aid, with such unfortunate consequences.) Unless the operation is only meant to take a few minutes, an inhaled anaesthetic, such as isoflurane, is then usually added to give better minute-by-minute control of the depth of anaesthesia.

So what do we know about how anaesthetics work? Since they were first discovered, one of the big mysteries has been how the members of such a diverse group of chemicals can all result in the loss of consciousness. Many drugs work by binding to receptor molecules in the body, usually proteins, in a way that relies on the drug and receptor fitting snugly together like a key in a lock. Yet the long list of anaesthetic agents ranges from large complex molecules such as barbiturates or steroids to the inert gas xenon, which exists as mere atoms. How could they all fit the same lock?

For a long time there was great interest in the fact that the potency of anaesthetics correlates strikingly with how well they dissolve in olive oil. The popular ‘lipid theory’ said that instead of binding to specific protein receptors, the anaesthetic physically disrupted the fatty membranes of nerve cells, causing them to malfunction.

In the 1980s, though, experiments in test tubes showed that anaesthetics could bind to proteins in the absence of cell membranes. Since then, protein receptors have been found for many anaesthetics. Propofol, for instance, binds to receptors on nerve cells that normally respond to a chemical messenger called GABA. Presumably the solubility of anaesthetics in oil affects how easily they reach the receptors bound in the fatty membrane.

But that solves only a small part of the mystery. We still don’t know how this binding affects nerve cells, and which neural networks they feed into. ‘If you look at the brain under both xenon and propofol anaesthesia, there are striking similarities,’ says Nick Franks of Imperial College London, who overturned the lipid theory in the 1980s. ‘They must be triggering some common neuronal change, and that’s the big mystery.’

Many anaesthetics are thought to work by making it harder for neurons to fire, but this can have different effects on brain function, depending on which neurons are being blocked. So brain-imaging techniques such as fMRI, which tracks changes in blood flow to different areas of the brain, are being used to see which regions of the brain are affected by anaesthetics. Such studies have been successful in revealing several areas that are deactivated by most anaesthetics. Unfortunately, so many regions have been implicated that it is hard to know which, if any, are the root cause of loss of consciousness.

But is it even realistic to expect to find a discrete site or sites acting as the mind’s ‘light switch’? Not according to a leading theory of consciousness that has gained ground in the past decade, which states that consciousness is a more widely distributed phenomenon. In this ‘global workspace’ theory, incoming sensory information is first processed locally in separate brain regions without us being aware of it.1 We only become conscious of the experience if these signals are broadcast to a network of neurons spread through the brain, which then start firing in synchrony.

The idea has recently gained support from recordings of the brain’s electrical activity using electroencephalograph (EEG) sensors on the scalp, as people are given anaesthesia. This has shown that as consciousness fades there is a loss of synchrony between different areas of the cortex – the outermost layer of the brain important in attention, awareness, thought and memory.2

This process has also been visualised using fMRI scans. Steven Laureys, who leads the Coma Science Group at the University of Liège in Belgium, looked at what happens during propofol anaesthesia when patients descend from wakefulness, through mild sedation, to the point at which they fail to respond to commands. He found that while small ‘islands’ of the cortex lit up in response to external stimuli when people were unconscious, there was no spread of activity to other areas, as there was during wakefulness or mild sedation.3

A team led by Andreas Engel at the University Medical Centre in Hamburg has been investigating this process in still more detail by watching the transition to unconsciousness in slow motion. Normally it takes about 10 seconds to fall asleep after a propofol injection. Engel has slowed it down to many minutes by starting with just a small dose, then increasing it in seven stages. At each stage he gives a mild electric shock to the volunteer’s wrist and takes EEG readings.

We know that upon entering the brain, sensory stimuli first activate a region called the primary sensory cortex, which runs like a headband from ear to ear. Then further networks are activated, including frontal regions involved in controlling behaviour, and temporal regions towards the base of the brain that are important for memory storage.

Engel found that at the deepest levels of anaesthesia, the primary sensory cortex was the only region to respond to the electric shock. ‘Long-distance communication seems to be blocked, so the brain cannot build the global workspace,’ says Engel, who presented the work at the Society for Neuroscience annual meeting in 2010. ‘It’s like the message is reaching the mailbox, but no one is picking it up.’

What could be causing the blockage? Engel has EEG data suggesting that propofol interferes with communication between the primary sensory cortex and other brain regions by causing abnormally strong synchrony between them. ‘It’s not just shutting things down. The communication has changed,’ he says. ‘If too many neurons fire in a strongly synchronised rhythm, there is no room for exchange of specific messages.’

The communication between the different regions of the cortex is not just one-way; there is both forward and backward signalling between the different areas. EEG studies on anaesthetised animals suggest it is the backwards signal between these areas that is lost when they are knocked out.

In October 2011, Mashour’s group published EEG work showing this to be important in people too. Both propofol and the inhaled anaesthetic sevoflurane inhibited the transmission of feedback signals from the frontal cortex in anaesthetised surgical patients. The backwards signals recovered at the same time as consciousness returned.4 ‘The hypothesis is whether the preferential inhibition of feedback connectivity is what initially makes us unconscious,’ he says.

Similar findings are coming in from studies of people in a coma or persistent vegetative state (PVS), who may open their eyes in a sleep–wake cycle, although remaining unresponsive. Laureys, for example, has seen a similar breakdown in communication between different cortical areas in people in a coma. ‘Anaesthesia is a pharmacologically induced coma,’ he says. ‘That same breakdown in global neuronal workspace is occurring.’

Many believe that studying anaesthesia will shed light on disorders of consciousness such as coma. ‘Anaesthesia studies are probably the best tools we have for understanding consciousness in health and disease,’ says Adrian Owen of the University of Western Ontario in London, Canada.

Owen and others have previously shown that people in a PVS respond to speech with electrical activity in their brain. More recently he did the same experiment in people progressively anaesthetised with propofol. Even when heavily sedated, their brains responded to speech. But closer inspection revealed that those parts of the brain that decode the meaning of speech had indeed switched off, prompting a rethink of what was happening in people with PVS.5 ‘For years we had been looking at vegetative and coma patients whose brains were responding to speech and getting terribly seduced by these images, thinking that they were conscious,’ says Owen. ‘This told us that they are not conscious.’

As for my own journey back from the void, the first I remember is a different clock telling me that it is 10.45 am. Thirty-five minutes have elapsed since my last memory – time that I can’t remember, and probably never will.

‘Welcome back,’ says a nurse sitting by my bed. I drift in and out of awareness for a further undefined period, then another nurse wheels me back to the ward, and offers me a cup of tea. As the shroud of darkness begins to lift, I contemplate what has just happened. While I have been asleep, a team of people have rolled me over, cut me open, and rummaged about inside my body – and I don’t remember any of it. For a brief period of time ‘I’ had simply ceased to be.

My experience leaves me with a renewed sense of awe for what anaesthetists do as a matter of routine. Without really understanding how, they guide hundreds of millions of people a year as close to the brink of nothingness as it is possible to go without dying. Then they bring them safely back home again.

If you want to find out more about feeling nothing, go to ‘Ride the celestial subway’ on page 142.
