Level 7: Audio

The human brain is so easily manipulated and enchanted by sound. Great audio has played a starring role in every influential game ever. We all have fond memories of our favorite game’s theme tunes and sound effects. Audio can make or break a game. I’ll say it 100 times in this chapter, until you believe me: audio IS NOT optional. It can’t be left until the end, and it can’t be an afterthought. It must be planned and developed along with the rest of your assets, in order to achieve your artistic vision.

The good news is that, because messing around with music and sound effects has such a dramatic impact, it’s extremely rewarding to experiment with. Even some quick-and-dirty sound effects can add real “pop” to your games and prototypes—and at this stage, we need all the help we can get if we want to craft a winning IGC entry.

In this chapter, we’ll cover more about sound production than we may ever need to know—from the fundamentals of adding (and producing) music and sound effects to polishing and mastering sound for inclusion in games. Along the way, we’ll also make a generic asset loader so we can display a loading screen while we preload our large audio and image files.

Old-school Techniques

Before we explore the current state of audio in HTML5, a little history. Audio has long been a second-class citizen on the Web. During the period of mainstream adoption of the internet, the most frequent way you’d encounter any noise at all was via horrific-sounding MIDI tunes embedded in web pages.

MIDI is a simple format (still often used in music production) for storing song information—note pitches, note lengths, vibrato settings and so forth. A song can be encoded in MIDI format and then replayed. MIDI describes only the notes; it doesn’t include any actual audio. Musicians can feed the MIDI data to their high-end synthesizers and drum machines, which will faithfully play back what was recorded.

But internet users in the ’90s didn’t have high-end synthesizers and drum machines. They had cheap sound cards with painful, built-in MIDI sounds. The Web during this era was a cacophony of bad MIDI jingles and pop songs played by children’s toys. It was a dark, dark time.

It was possible to include and control audio files by dynamically including links in the head of a web page, but support across browsers was patchy. The only respite came from third-party Flash plugins. Flash included decent sound-effect and audio playback capabilities for use with animations and games that could be harnessed as a standalone audio player.

This gave rise to libraries that are still sometimes used as fallbacks when support for modern methods fails. The “playback via Flash” technique remained the de facto way to make sound and music on the Web until the uptake of HTML5 and the Web Audio API.

Audio on the Web

The Web Audio API had its first public draft in 2011. It was several years before it became widely available, though it’s now the standard way to play sounds on the Web. But it’s also oh-so-much more than a simple sound player.

While older libraries could only play back pre-recorded files (perhaps with some minor processing ability, such as affecting stereo panning or modifying the volume), the Web Audio API can also manipulate and affect existing audio files, as well as generate sounds from scratch.

The API is modular. It accepts a variety of input sources and can route them through different audio processing units before outputting them to a particular destination (usually your computer speakers). This provides a lot of power and flexibility. That power comes at the cost of complexity. The Web Audio API is unwieldy, and has a steep learning curve.

To simplify things, there’s a more “web-like” companion, the HTML5 Audio element, which is used like any other HTML element, via a tag within a web page:

<audio src="/lib/amenBeat.mp3" loop></audio>

And just like other HTML elements, we can create and control them completely via JavaScript. This is one way of getting synchronized sound in our games.

Playing Sounds

Audio in games is not optional. I’m serious! Audio is such a very powerful mechanism for conveying action and meaning that even simple sound effects will dramatically improve the feel of your game.

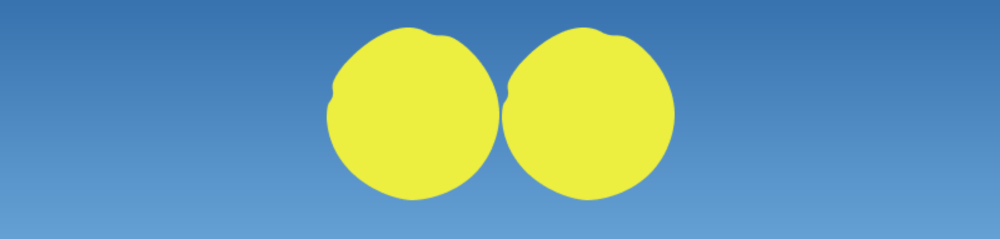

To illustrate this, consider an example. (Of course, the example works much better aurally than in print, so make sure to try the “plop” demo in the examples.) It goes like this. Imagine two identical circles, one on the left side of the screen and one on the right, both at the same height. The two circles move horizontally, crossing at the center of the screen. When they reach the other side, they move back again until they reach their start positions.

Something interesting happens when we introduce a simple sound. If, at the very moment the two circles intersect, we make a “plop” sound (like a tennis racket hitting a ball), suddenly there’s a strong illusion that the two circles actually collide and rebound! Nothing changes with the drawing code: our ears hear the sound, and our brains are tricked into thinking a collision has happened. This is exactly the kind of illusion we need to harness for developing immersive experiences.

The plop example also demonstrates the simplest way to get some sound to play via JavaScript. Create a new Audio element and assign the source, in exactly the same way we’d create an Image element:

const plop = new Audio();

plop.src = "./res/sounds/plop.mp3";

This is equivalent to embedding an <audio /> tag in the main web page … but created on demand when required throughout our game. The audio is loaded once and can be played back whenever we want. When the “tennis balls” collide (well, when their x position offsets are < 5 pixels apart) we call play on the Audio element:

if (Math.abs(x1 - x2) < 5) {

plop.play();

}
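One thing to watch for: the proximity test can be true on several consecutive frames, so play may be called more than once per crossing. Here is a minimal sketch of firing only once per crossing. The makeCrossingDetector helper is hypothetical (it isn't part of the demo), and the circle speeds are made up for the simulation.

```javascript
// Hypothetical helper: returns a function that reports true only on the
// first frame the two x positions come within `threshold` pixels.
function makeCrossingDetector(threshold = 5) {
  let wasClose = false;
  return (x1, x2) => {
    const isClose = Math.abs(x1 - x2) < threshold;
    const justCrossed = isClose && !wasClose;
    wasClose = isClose;
    return justCrossed;
  };
}

// Simulate two circles moving toward each other at 2 pixels per frame.
const crossed = makeCrossingDetector(5);
let plopCount = 0;
for (let frame = 0; frame <= 100; frame++) {
  const x1 = frame * 2;       // left circle moves right
  const x2 = 200 - frame * 2; // right circle moves left
  if (crossed(x1, x2)) {
    plopCount++; // in the demo this would be plop.play()
  }
}
console.log(plopCount); // 1
```

The circles are within 5 pixels of each other for three frames in this simulation, but the detector only reports the first one.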

Besides play, there’s a whole host of properties and operations that can be performed on the sound file. (See MDN for examples.) Because we’ll be using sound in every project (no exceptions!) we’ll integrate this into our core library, wrapping some of the most useful audio functions.

Create a new folder, sound, in the root library folder. Inside that, create a file called Sound.js (and expose it in Pop’s index.js). Sound will be a class that accepts several parameters and exposes an API for controlling audio:

class Sound {

constructor(src, options = {}) {

this.src = src;

this.options = Object.assign({ volume: 1 }, options);

// Configure Audio element

...

}

play(options) {}

stop() {}

}

The parameters are src—the URL to the audio file resource, and an optional options object that will specify the behavior of the sound element. This includes a default parameter volume—the volume level to play back the sound, from 0 (silence) to 1 (maximum loudness).

Object.assign

Object.assign takes a “target” object, followed by one or more “source” objects. The properties from the source objects are all copied to the target, in order. If there are matching keys, the value on the target is overwritten, and later sources win over earlier ones. We specify volume: 1 as a default. If the options object also has a key volume, this will take precedence.
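As a quick illustration of that merge order, here is a small sketch. The variable names are only for this example; it uses an empty object as the target so that neither input is modified.

```javascript
// Defaults first, then the user's options: later sources win on matching keys.
const defaults = { volume: 1 };
const userOptions = { volume: 0.6, loop: true };
const merged = Object.assign({}, defaults, userOptions);

console.log(merged.volume, merged.loop); // 0.6 true
console.log(defaults.volume);            // 1 (unchanged, because the target was {})
```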

For the time being, our Sound class is simply a wrapper over Audio that exposes play and stop methods. We’ll enhance it with more features soon. Each of our custom Sound instances will be backed by an HTML5 Audio element:

// Configure Audio element

const audio = new Audio();

audio.src = src;

audio.addEventListener("error", () => {

throw new Error("Error loading audio resource: " + audio.src);

}, false);

// Keep a reference so play and stop can reach the element

this.audio = audio;

As in the tennis-ball example, we create an Audio element with new Audio() and set the location of the audio file to the src attribute. We also attach an event listener that will fire if any errors occur while loading (especially useful for finding bad file paths!).

Now it’s time to fill out our play and stop API methods. play also accepts an object with options, so we can temporarily override the default settings each time the sound plays, if needed:

play(overrides) {

const { audio, options } = this;

const opts = Object.assign({ time: 0 }, options, overrides);

audio.volume = opts.volume;

audio.currentTime = opts.time;

audio.play();

}

After merging any options in the function parameter with the Sound’s default options, pull out the final volume value and set it on the audio object. By default, the time option is set to 0 (the very beginning of the audio file), and the Audio element’s currentTime is set to that value, so playback restarts from the beginning on every call.

In our tennis-ball example, we can switch in our new Sound class rather than hand-roll a new Audio object. Because both our API and the Audio element have a play method (and we’re happy with the default options), the only change we have to make is to change our creation code:

const plop = new Sound("res/sounds/plop.mp3");

Let’s make our game a whole lot noisier. Pengolfin’ is just crying out for some audio attention—starting with a catchy theme tune! Have a listen to it. I hope you find it suitable for our game, because it’s the one we’ll make ourselves a little later in this chapter. Load the Sound assets the same way we load Texture assets. In the constructor function of TitleScreen, create an object to group the sounds and load our title song, which is located at res/sounds/theme.mp3:

const sounds = {

plop: new Sound("res/sounds/plops.mp3"),

theme: new Sound("res/sounds/theme.mp3", { volume: 0.6 })

};

Keeping Things Tidy

As with texture assets, we define it at the top of the file. It isn’t necessary to store all our references together in a sounds object. We could assign it directly to a variable (const theme = new Sound("...");), but it helps to keep things organized.

By default, our sounds are played at the maximum volume. If everything plays at maximum, there’s nowhere for us to go if we end up with some sounds that have been recorded quieter than others. (For more on this, see the “Mixing” section later in this chapter. Also see the “These go to eleven” scene from the rockumentary This is Spinal Tap.)

To give us some breathing room, we play the theme song with a volume of 0.6 (60% of the maximum). Eventually, we’ll also want to give ultimate control of the volume to the player via a settings menu. After you’ve defined the sound, it can be played as soon as the game loads, in the TitleScreen constructor:

sounds.theme.play();

We now have a title screen, complete with a snazzy audio accompaniment. However, if you press the space bar to start the game, you’ll notice that the title song continues playing in the main GameScreen. Stop it with the Audio element’s pause method. We’ll wrap this in a helper method that we’ll call stop:

stop() {

this.audio.pause();

}

I’m calling it stop rather than pause because the play method restarts the audio from the beginning every time, and the net effect is the same as stopping the audio. I’m not sure this was the cleanest implementation, but it’s the most common use case in games, so I’m sticking with it. The most logical time to stop the theme song is after the player presses the space bar (before we leave the screen):

if (controls.action) {

sounds.theme.stop();

this.onStart({..});

}

Playing SFX

Our system isn’t just for playing songs. It works equally well for playing sound effects, such as when a player shoots, walks, or collects a power-up. Add the Sound in the entity’s class file, and call play as required.

While we’re here, we may as well start building on the basics of our sound library. The first addition is a property to indicate if a given sound is currently playing or not. We might not want to call play again (for example) if the sound is already playing. To implement it, we need to keep track of a few related actions and events.

The playing property is a boolean flag on the Sound object. It’s initialized to false, just after the other sound options have been defined. At the end of the play method, set the flag to true:

this.playing = true;

And at the end of the stop method, set it to false:

this.playing = false;

This takes care of user actions that start and stop a sound. But we also have to know when the sound has reached the end (when it stops playing on its own). Thankfully, the Audio element fires an ended event especially for this case. Track this in the same way we did for the error event:

audio.addEventListener("ended", () => this.playing = false, false);

Each Sound object now has an accurate playing flag that we can use in our games. For example, to only play the theme song if it’s not already playing, just ask:

if (!sounds.theme.playing) {

sounds.theme.play();

}

The last bit of core functionality we’ll add is the ability to modify the volume of the sound, even if it’s currently playing. To do this, add volume getter and setter methods to the Sound prototype to report and set the Audio element’s volume:

get volume () {

return this.audio.volume;

}

set volume (volume) {

this.options.volume = this.audio.volume = volume;

}

Notice we also store volume in the set method as the new default volume of the sound. This is so that if we set the volume with theme.volume = 0.5, any future play calls will also be at volume 0.5.
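To see how the pieces so far fit together, here is a minimal sketch of the whole class that runs outside a browser. The FakeAudio class and the createAudio constructor parameter are assumptions of this sketch, added only so a stand-in element can be injected; in a real game the element would come from new Audio().

```javascript
// Minimal stand-in for an HTML5 Audio element so the sketch runs outside
// a browser. It only mimics the members the Sound class touches.
class FakeAudio {
  constructor() {
    this.src = "";
    this.volume = 1;
    this.currentTime = 0;
    this.listeners = {};
  }
  addEventListener(type, fn) {
    (this.listeners[type] = this.listeners[type] || []).push(fn);
  }
  play() {}
  pause() {}
  // Test helper (not part of the real Audio API): simulate an event firing.
  emit(type) {
    (this.listeners[type] || []).forEach(fn => fn({ target: this }));
  }
}

class Sound {
  // `createAudio` is an assumption of this sketch, not part of the library API.
  constructor(src, options = {}, createAudio = () => new Audio()) {
    this.src = src;
    this.options = Object.assign({ volume: 1 }, options);
    this.playing = false;

    const audio = createAudio();
    audio.src = src;
    audio.addEventListener("ended", () => (this.playing = false), false);
    this.audio = audio;
  }

  play(overrides) {
    const { audio, options } = this;
    const opts = Object.assign({ time: 0 }, options, overrides);
    audio.volume = opts.volume;
    audio.currentTime = opts.time;
    audio.play();
    this.playing = true;
  }

  stop() {
    this.audio.pause();
    this.playing = false;
  }

  get volume() {
    return this.audio.volume;
  }

  set volume(volume) {
    this.options.volume = this.audio.volume = volume;
  }
}

const theme = new Sound("res/sounds/theme.mp3", { volume: 0.6 }, () => new FakeAudio());
theme.play();
theme.volume = 0.5;        // takes effect immediately and becomes the new default
theme.audio.emit("ended"); // simulate the track finishing
console.log(theme.playing, theme.options.volume); // false 0.5
```

Note that getters and setters in a class body are written without commas between them, just like ordinary methods.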

Repeating Sounds

By default, all of our sounds are one-shot. They play through from start to end, then stop. That’s perfect for most effects (like power-up twinkles and collision explosions). Other times, we’ll want sounds to loop over and over.

The obvious case is for music loops. You can have a short snippet of a song—say, 16 or 32 beats long—that repeats many times. Looping can also be employed for game sound effects that have to play for an indeterminate length of time. For example, a jetpack should be noisy whenever it’s activated. A bass-heavy wooosh should be blasting for as long as the player is holding down the jetpack button.

To facilitate this, add the loop property as one of our possible options when creating a Sound instance. If loop is set to true, we’ll apply it to the Audio element (via its corresponding loop property):

if (options.loop) {

audio.loop = true;

}

Then we’ll define it in the options object when making our game’s loopable sound element:

const sounds = {

swoosh: new Sound("./res/sounds/swoosh.m4a", {volume: 0.2, loop: true})

};

For our test, if the player is holding down the up button (controls.y is less than 0), the jetpack is applying its upward force and the swoosh sound should play:

// Player pressed up (jet pack)

if (controls.y < 0) {

sounds.swoosh.play();

...

}

Argh, what is this?! If you test out this version, you’ll notice our jetpack has become a lot noisier—but not in a good way. What’s happening is that for every frame the user accelerates, the play command is issued and the sound restarts from the beginning, creating a horrible stuttering mess. The solution is to guard against this by using the playing property:

if(!sounds.swoosh.playing) {

sounds.swoosh.play();

}

Because the “loop” option is set to true, the sound will keep playing continuously, even after the user has let go of the up key. Oh dear. To fix this, you need to stop the sound if it’s playing and the user isn’t trying to use their jetpack:

// controls.y is not less than 0

else {

sounds.swoosh.stop();

}
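Putting the guard and the stop call together, the per-frame logic might look like the following sketch. The swoosh object here is a stub with the same play, stop, and playing surface as our Sound class (it counts starts instead of making noise), and updateJetpackSound is a hypothetical helper name.

```javascript
// Stub with the same play/stop/playing surface as our Sound class,
// counting calls so we can see how often playback actually (re)starts.
const swoosh = {
  playing: false,
  starts: 0,
  play() { this.playing = true; this.starts++; },
  stop() { this.playing = false; }
};

// Hypothetical helper: call once per frame with the current vertical input.
function updateJetpackSound(sound, y) {
  if (y < 0) {
    if (!sound.playing) sound.play(); // start only once while the key is held
  } else if (sound.playing) {
    sound.stop();                     // key released: silence the loop
  }
}

// Hold up for three frames, release for two, then hold again for two.
[-1, -1, -1, 0, 0, -1, -1].forEach(y => updateJetpackSound(swoosh, y));
console.log(swoosh.starts); // 2
```

Seven frames of input produce only two starts: one for each time the key goes down.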

Polyphonic Sounds

In the last section, we witnessed the situation where accidentally hitting play on a sound every frame resulted in unpleasant, stuttered playback. One question you might ask is why it was stuttering and not creating a discordant, clamorous cacophony—a thousand jetpacks sounded at once?

The answer is that the Audio element is monophonic. It can only generate one sound at a time. Which sucks for us: we’d prefer a polyphonic system that can play several instances of the sound simultaneously. Imagine your game has shooting. If the firing rate is faster than the length of the sound file, each time the player fires, the previous sound will be cut off by the next.

Luckily, the Audio API is capable of playing multiple monophonic Audio elements at the same time. We can fake a polyphonic Sound by creating several Audio elements with the same audio source. We then play them one after the other! This is known as a sound pool, and is something we’ll want to be able to reuse easily. Let’s add it to the library as sound/SoundPool.js. The pool will be an array of identical Sound instances:

class SoundPool {

constructor(src, options = {}, poolSize = 3) {

this.count = 0;

this.sounds = [...Array(poolSize)]

.map(() => new Sound(src, options));

}

}

The structure of the SoundPool is very similar to the API provided by Sound. The only difference is an extra parameter, poolSize: the number of copies of the sound to add to the pool (3 by default). The SoundPool constructor builds an array (of length poolSize) where each element is mapped to a new Sound object. The result is a series of sounds with exactly the same source and options:

play(options) {

const { sounds } = this;

const index = this.count++ % sounds.length;

sounds[index].play(options);

}

Each time play() is called, we get the index of the next sound in the sound pool. This is done by taking the remainder of the current count divided by the number of sounds in the pool (the count is incremented afterwards), so we cycle through the array in order, over and over. We then play it with any options provided, exactly the same as if we called play on a Sound element directly.
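The index arithmetic is easy to check in isolation. This small sketch (variable names are just for the example) records which slot would be chosen over seven calls with a pool of three:

```javascript
// Post-increment: the expression uses the current count, then adds one.
let count = 0;
const poolSize = 3;
const order = [];
for (let i = 0; i < 7; i++) {
  order.push(count++ % poolSize);
}
console.log(order.join(", ")); // 0, 1, 2, 0, 1, 2, 0
```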

If we want to call stop on the sound pool, we need to iterate over each sound and stop them all:

// stop ALL audio instances of the pool

stop() {

this.sounds.forEach(sound => sound.stop());

}

And that’s our polyphonic sound pool. It’s used the same way as the regular Sound—except with extra “phonic”! To really hear the effect, we’ll play our plop sound repeatedly every 200ms:

const plops = new SoundPool("res/sounds/plop.mp3", {}, 3);

const rate = 0.2;

let next = rate;

game.run(t => {

if (t > next) {

next = t + rate;

plops.play();

}

});

It sounds fine with a poolSize of 3—but set it lower and you’ll hear the stuttering from earlier. The poolSize should be set on a case-by-case basis in a way that’s optimal for your game. If you set it too small, you’ll hear the sounds being cut off. If you set it too large, you’ll be wasting system resources. It’s best to go for the smallest value possible that still sounds good.
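One rough way to pick a starting value is to ask how many copies of the sound can overlap in time: the sound's length divided by the shortest gap between plays, rounded up. This is only a rule of thumb assumed for this sketch, and the durations below are made-up numbers rather than measurements of our plop file. Let your ears make the final call.

```javascript
// Rule of thumb (an assumption of this sketch, not a hard rule):
// enough copies to cover every instance that can overlap in time.
function estimatePoolSize(durationMs, intervalMs) {
  return Math.max(1, Math.ceil(durationMs / intervalMs));
}

console.log(estimatePoolSize(500, 200)); // 3
console.log(estimatePoolSize(150, 200)); // 1
```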

Randomly Ordered Sounds

Like game design, sound design is about intentionally sculpting an experience to meet an artistic vision. When sound design is done well, the players often won’t even notice; it’s just natural and engaging. If it’s done badly, however, it will drive them crazy! The most sure-fire way to make your players go loopy is to repeat an audio phrase over and over and over. The human brain is excellent at recognizing patterns, and also quick to tire of them.

This is usually a problem in games when a single sound is used for a core mechanic that might happen hundreds (or thousands) of times during play—such as shooting a weapon, or the sound of footsteps. In the real world, each footstep will sound ever so slightly different. Different surfaces, different room acoustics, different angles of impact … they all combine to subtly alter the pitch and tone that hits our ears.

Luckily for us, the human brain is also easy to trick. We don’t need too much variation to break the monotony. If we create a few different samples with slightly different sonic characteristics, and play them in a random order, the result will be enough to fool the player.

We’ll call this approach a “sound group”. A sound group is a manager for randomly selecting and playing one from a bunch of Sounds. It can go in the sound folder as SoundGroup.js:

class SoundGroup {

constructor(sounds) {

this.sounds = sounds;

}

}

The SoundGroup expects an array of objects that have play and stop methods (so, either Sound or SoundPool objects from our library). To add one to a game, define the SoundGroup by supplying the list of Sounds to choose from:

const squawk = new SoundGroup([

new Sound("res/sounds/squawk1.mp3"),

new Sound("res/sounds/squawk2.mp3"),

new Sound("res/sounds/squawk3.mp3")

]);

If you preview each of the audio files individually, you hear that they’re various takes on a “penguin” squawking. (Honestly, I have no idea what sound a penguin actually makes; it’s just me and a microphone. Jump ahead to the “Sound Production” section if you want to see how it’s done!) It’s the small variations that we’re looking for to create a more natural effect. The number of unique sounds you’ll need will depend on the situation. A few is enough for Pengolfin’, as the sounds don’t happen too often. But more is always better.

Next we need to add the play and stop methods. stop is easy: it’s the same as SoundPool, where we loop over the sounds and stop each of them. And play is only slightly more complicated:

play(opts) {

const { sounds } = this;

math.randOneFrom(sounds).play(opts);

}

It chooses a random element from the sound list and plays it. Now, whenever the penguin hits a surface with force, it squawks out one of the random variations by calling squawk.play().

This approach is simple, but you can build on it to suit your games. For example, you could implement a round-robin approach for selecting the next sound (rather than just randomly), or even create a strategy that combines the two: it chooses randomly, but never plays the same sound twice in a row. Whatever you can do to break up a monotonous repeating sound will be greatly appreciated by your players!
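Here is a minimal sketch of a random-but-no-immediate-repeats strategy. The makeNoRepeatPicker helper is hypothetical (it isn't part of the library), and strings stand in for Sound objects so the example runs anywhere.

```javascript
// Hypothetical helper: returns a picker that chooses randomly but never
// returns the same item twice in a row (when there are at least two items).
function makeNoRepeatPicker(items, random = Math.random) {
  let last = -1;
  return () => {
    if (items.length < 2) return items[0];
    let index;
    do {
      index = Math.floor(random() * items.length);
    } while (index === last);
    last = index;
    return items[index];
  };
}

const pick = makeNoRepeatPicker(["squawk1", "squawk2", "squawk3"]);
const picks = Array.from({ length: 50 }, () => pick());
const hasRepeat = picks.some((name, i) => i > 0 && name === picks[i - 1]);
console.log(hasRepeat); // false
```

Inside a SoundGroup, the play method could call a picker like this in place of a plain random choice.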

An Asset Manager

Assets are resource files that must be loaded to be used in a game. Because we’ve been running the games locally (serving them from our own computer to our browser) everything loads up pretty much instantly. But when we release our games to the wide world, they’ll live on a server in the sky where others can play them.

Downloading large files over the internet can take a considerable amount of time. What happens if we try to play a sound or render a sprite that hasn’t loaded yet? At the very least, we’ll have invisible sprites or silent audio! Not a good first impression. To remedy this, we should display a loading screen—a progress bar, or animation—so the player knows something is happening, and we can build up some excitement for the game to come.

An asset manager is used to facilitate this and handle the loading of assets. We no longer need to create raw HTMLImageElements (via new Image()) or HTMLAudioElements (via new Audio()) directly, but can instead let the asset manager do it for us. When we want an image, we’ll ask the asset manager for it: Assets.image("res/Images/tiles.png").

Dependencies

All of the required files can be known before the game starts, thanks to the JavaScript module system. When a module is required, any dependencies of that module are also required. This generates a tree of all the required source code files. If we define our assets at the top level of our source file, we can keep track of necessary files as the tree is being traversed.

Our asset manager will be called Assets.js, and becomes another part of the Pop library. It will manage the loading, progress reporting, and caching of all our game assets. Here’s the skeleton for our first pass at an API for loading game assets:

const cache = {};

let remaining = 0;

let total = 0;

const Assets = {

image(url) {

// Load image

},

sound(url) {

// Load sound

}

};

We define some local variables to keep track of the state of the loading files: a key/value cache, and a couple of counters to keep track of progress. The exported API contains a method to load an image (Assets.image(url)) and a method to load a sound file (Assets.sound(url)). Shortly, we’ll also add some event listeners to inform external users of the loading progress.

The asset manager does some housekeeping operations that are homogeneous regardless of the type of asset to load: checking the cache, updating counters, and so on. We’ll extract this into a function called load:

// Helper function for queuing assets

function load(url, maker) {

if (cache[url]) {

return cache[url];

}

const asset = maker(url, onAssetLoad);

remaining++;

total++;

cache[url] = asset;

return asset;

}

There’s quite a lot going on in this little function. It revolves around the cache object that’s defined at the start of the file. cache acts as a hash map that links URL strings to DOM asset objects. For example, after we’ve loaded a couple of files, the cache may look like this:

{

"res/Images/bunny.png": HTMLImageElement,

"res/sounds/coins.mp3": HTMLAudioElement,

...

}

If we ask for the same URL twice, we get the object from the cache and don’t bother loading it again. This is the if check at the start of the load function: if the cache key already exists, return the asset straight away, and we’re done.

Browser Caching

It would obviously be really bad to load an image ten times if it’s used in ten different entities, but in reality the browser itself has been acting as a cache for us already. It remembers if we recently loaded a file and retrieves it from its own cache.

If the key isn’t found, the asset needs to be loaded. The remaining and total counts are incremented. total is just for our records (so we can calculate a percentage for showing a progress bar on the loading screen) and remaining will decrement every time an asset loading completes. When it gets back to 0, everything is loaded.

The load function requires a second parameter called maker. maker is a function that will do the actual asset creation. We’ll have one for images and one for sounds (and more later, if required—for example, to load JSON as an asset). The output of load will be the reference to the asset—so we can return this directly from our Assets.image API:

// Load an image

image(url) {

return load(url, (url, onAssetLoad) => {

const img = new Image();

img.src = url;

img.addEventListener("load", onAssetLoad, false);

return img;

});

}

The image loader returns the result of calling load. The parameters we feed it are the user-supplied URL and a maker function that creates an HTMLImageElement and sets its source. The maker then attaches an event listener that will fire as soon as the image is done loading. When the load function calls our maker, it passes in onAssetLoad, the callback to invoke when loading is done. This is what enables our asset manager to keep track of what remains to be loaded.

Higher Order Functions

A function that accepts a function as a parameter, or returns a function as a result, is called a higher-order function. A normal function abstracts over values, acting as a black box operating on numbers and strings. A higher-order function abstracts over actions. They let you reuse and combine behavior which can then become the input for even more complex combinations. For our purpose, higher-order functions let us abstract the concept of monitoring the state of a loading asset.

The key step for our asset manager is that a maker function must call the onAssetLoad function (which we’ll define shortly) as soon as loading is done. For an image, we attach the function to an event listener that’s called when the image’s built-in load event fires.
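To see the caching and counting in isolation, here is a minimal sketch that runs without a browser. The fakeMaker function and pendingLoads array are assumptions of this sketch: plain objects stand in for images, and we trigger the "load" callbacks by hand. The onAssetLoad shown here is a bare-bones placeholder that only decrements the counter.

```javascript
const cache = {};
let remaining = 0;
let total = 0;

// Placeholder: just counts down as each asset finishes.
function onAssetLoad() {
  remaining--;
}

function load(url, maker) {
  if (cache[url]) {
    return cache[url]; // already requested: reuse it
  }
  const asset = maker(url, onAssetLoad);
  remaining++;
  total++;
  cache[url] = asset;
  return asset;
}

// Fake maker: returns a plain object and remembers the callback so we
// can simulate the file finishing its download later.
const pendingLoads = [];
const fakeMaker = (url, onLoad) => {
  pendingLoads.push(onLoad);
  return { url };
};

const a = load("res/Images/bunny.png", fakeMaker);
const b = load("res/Images/bunny.png", fakeMaker); // cache hit, no new load
load("res/sounds/coins.mp3", fakeMaker);

console.log(a === b, total, remaining); // true 2 2
pendingLoads.forEach(onLoad => onLoad()); // both "files" finish loading
console.log(remaining); // 0
```

Asking for the bunny twice only queues one load, and remaining returns to 0 once every maker has called back.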

Now that we can preload, how do we work this into our library? Ideally, we’d prefer not to change how the end user makes games with Pop, so we’ll take care of this under the hood, in Texture.js:

import Assets from "./Assets.js";

class Texture {

constructor(url) {

this.img = Assets.image(url);

}

}

If you compare before and after, not much has changed: the work of creating the DOM element and setting the source attribute has been moved to the asset loader, rather than directly in the library. Existing games that use Texture will still continue to work as they did before.

Now we need to do it all over again for sound files. Again, we use the load helper method, but we create a new Audio element. An audio file is loaded (enough to play some audio, at least) when the canplay event fires. Because this event can potentially fire multiple times, the event handler should be removed after the first time it’s called:

// Load an audio file

sound(url) {

return load(url, (url, onAssetLoad) => {

const audio = new Audio();

audio.src = url;

// canplay can fire more than once, so unhook after the first time

const onLoad = e => {

audio.removeEventListener("canplay", onLoad);

onAssetLoad(e);

};

audio.addEventListener("canplay", onLoad, false);

return audio;

}).cloneNode();

}

This is the same idea as our image loader, but with one slight difference: at the end, we call cloneNode() to create a clone of the Audio node. If we didn’t do this, the same reference would be returned each time the same URL was requested. If we used that shared reference in a SoundPool, every Sound in the pool would wrap the same element, so every time we restarted one sound, all of them would reset.
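The difference between sharing and cloning can be shown with plain objects. In this sketch, plain objects stand in for Audio elements and the clone helper is a stand-in for cloneNode(); both are assumptions made so the example runs outside a browser.

```javascript
// Plain object standing in for a cached Audio element.
const cached = { src: "res/sounds/plop.mp3", currentTime: 0 };
// Stand-in for cloneNode(): makes an independent copy.
const clone = element => Object.assign({}, element);

// With cloning: each "sound" gets its own playback position.
const ownA = clone(cached);
const ownB = clone(cached);
ownA.currentTime = 1.5;
console.log(ownB.currentTime); // 0 (unaffected by the change to ownA)

// Without cloning: both "sounds" are the very same object.
const sharedA = cached;
const sharedB = cached;
sharedA.currentTime = 1.5;
console.log(sharedB.currentTime); // 1.5 (B was dragged along with A)
```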

As with Texture, we can update Sound.js to use the manager to load the asset:

class Sound {

constructor(src, options) {

...

// Configure the Audio element

const audio = Assets.sound(src);

...

}

}

Asset Manager Events

So far, we’ve gained nothing from moving asset loading to an asset manager. To make it useful, we need a way to expose some events for us to listen to while the assets are loading. We won’t run our game until the asset manager gives us the go-ahead:

Assets.onProgress((done, total) => {

console.log(`${done / total * 100}% complete`);

});

Assets.onReady(() => {

// Remove loading screen & start the game

titleScreen();

Game.run();

});

The function in onProgress will get called after each individual resource loads, and the function in onReady will get called once (and only once) when all resources are loaded. The event management coordination is done inside the onAssetLoad function that gets called by the “maker” functions we created above.

const readyListeners = [];

const progressListeners = [];

let completed = false;

function onAssetLoad(e) {

if (completed) {

console.warn("Warning: asset defined after preload.", e.target);

return;

}

// Update listeners with the new state

...

}

The asset manager gets a flag, completed (which notes if everything loaded) as well as two new arrays for storing any callback functions that get registered from our game. As each asset completes, it calls onAssetLoad. We start with a sanity check: if we’ve already decided everything is loaded, it warns us if we have a new file outside our system. (This could happen if we declared a new Texture object dynamically inside an entity.) The warning will let the library user know they should move the declaration up and out of the entity class so it can be properly preloaded.

// Update listeners with the new state

remaining--;

progressListeners.forEach(cb => cb(total - remaining, total));

if (remaining === 0) {

// We're done loading

done();

}

Otherwise, we decrement the count of remaining files to load. First, we call all functions in progressListeners and keep them posted with how things are progressing. If there are 0 remaining files, we set completed to true and call any callback functions in readyListeners, via a done function:

function done() {

completed = true;

readyListeners.forEach(cb => cb());

}

The last step is to expose a way to add callbacks via the Assets API. All we have to do is add the callback function to either the readyListeners or progressListeners array. For onReady, we also check if remaining is 0. If this is the case, the game doesn’t have any assets to load at all, so it calls done itself:

onReady(cb) {

readyListeners.push(cb);

// No assets to load in this game!

if (remaining === 0) {

done();

}

},

onProgress(cb) {

progressListeners.push(cb);

},
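The whole event flow can be exercised without a browser. In this condensed sketch, trackAsset is a hypothetical stand-in for load: it registers one pending asset and hands back the callback that a maker would invoke when its file finishes. The listeners are pushed onto the arrays directly rather than through the Assets API, and the percentage is rounded so it prints cleanly.

```javascript
const readyListeners = [];
const progressListeners = [];
let remaining = 0;
let total = 0;
let completed = false;

function done() {
  completed = true;
  readyListeners.forEach(cb => cb());
}

function onAssetLoad() {
  if (completed) return; // late asset: ignored in this sketch
  remaining--;
  progressListeners.forEach(cb => cb(total - remaining, total));
  if (remaining === 0) done();
}

// Hypothetical stand-in for load(): one more asset is now pending.
function trackAsset() {
  remaining++;
  total++;
  return onAssetLoad;
}

const log = [];
const finishImage = trackAsset();
const finishSound = trackAsset();

progressListeners.push((doneCount, totalCount) =>
  log.push(`${Math.round((doneCount / totalCount) * 100)}%`)
);
readyListeners.push(() => log.push("ready"));

finishImage(); // first file arrives
finishSound(); // second file arrives, which triggers done()
console.log(log.join(" ")); // 50% 100% ready
```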

onProgress and onReady will now notify us on the state of the world. We’ll use onReady to prevent a game from running before all its assets are loaded by integrating it with our Game.js helper. Only after the “ready” event fires can we start the main loop:

import Assets from "./Assets.js";

class Game {

run(gameUpdate = () => {}) {

Assets.onReady(() => {

// Normal game loop

});

}

}

This is a simple asset loader that could be improved in several ways. We could introduce more error handling capabilities, as well as take on more responsibility for any cross-browser differences with various file formats. It could even be extended to let us load assets in batches rather than needing to load everything up front. However, it will serve us well enough for most projects, and we can be confident that everything will be loaded when serving our games to our players.
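To see how these pieces fit together, here is a minimal, self-contained sketch of the listener bookkeeping. It is not the library's actual Assets.js: createLoader and assetLoaded are hypothetical names, and the file count is passed in up front rather than discovered as assets are declared. It also adds one guard that the version above does not have: a callback registered after loading has finished is run on its own, instead of re-running every earlier ready listener.

```javascript
// Hypothetical stand-in for the chapter's asset manager.
// `total` is how many files we expect to load (an assumption of this sketch).
function createLoader(total) {
  let remaining = total;
  let completed = false;
  const readyListeners = [];
  const progressListeners = [];

  function done() {
    completed = true;
    readyListeners.forEach(cb => cb());
  }

  return {
    // Call once for each file that finishes loading
    assetLoaded() {
      if (completed) {
        console.warn("Warning: asset defined after preload.");
        return;
      }
      remaining--;
      progressListeners.forEach(cb => cb(total - remaining, total));
      if (remaining === 0) {
        done();
      }
    },
    onReady(cb) {
      if (completed) {
        // Already loaded: run only the new callback
        cb();
        return;
      }
      readyListeners.push(cb);
      // No assets to load in this game!
      if (remaining === 0) {
        done();
      }
    },
    onProgress(cb) {
      progressListeners.push(cb);
    }
  };
}

// Usage: report progress while two files load
const loader = createLoader(2);
loader.onProgress((loaded, all) => console.log(`Loaded ${loaded} of ${all}`));
loader.onReady(() => console.log("Ready to start the main loop"));
loader.assetLoaded();
loader.assetLoaded();
```

A loading screen could use the two numbers passed to the progress callback to draw a progress bar, then swap to the title screen when the ready callback fires.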

Sound Production

That’s enough messing around with housekeeping details. We came here to make some noise. But wait a minute: how do you actually make noise? I mean, where does the sound come from? How can we capture or generate it? How can we modify it? How can we make it sound good? The answer is sound production! It’s an expansive and supercool field all of its own. We’ll borrow some of the techniques and essentials for making our games sound as good as they look.

Tools and Software

There’s a long history of using computers to generate sound. Much of today’s music production is done in software, but early software synthesizers (such as Csound from 1985) could only work offline, with long programs fed in as input and (some time later) raw audio files generated as output. Since then, computers have become vastly more powerful, and your laptop can now run a recording studio’s worth of real-time software synthesizers simultaneously without breaking a sweat. This has opened up a huge market for off-the-shelf software synths that run the gamut from faithful recreations of classic audio hardware to wacky and original inventions.

Deep Note

The famous THX “Deep Note” that played at the start of movies was generated offline via a script and then recorded onto high-quality tape to be played in cinemas. However, the composer (programmer Dr James “Andy” Moorer) used random numbers as part of the score. He was never again able to reproduce the exact, well-known original.

These days, most audio software is one of two kinds: a host or a plugin. A host is the workhorse of your music creation environment. It lets you record, sequence, and cut, copy, and paste sound. You can record notes and play them back with any audio plugin. Plugins are individual synthesizers (or effects) that will work across hosts. These define what sounds you can make, and how you can process them.

Of the hosts, there are two main types (though the distinction is often murky): digital audio workstations (DAWs) and sound editors. DAWs are to music creation what IDEs are to coding. They let you compose, edit, assemble, arrange, and effect your audio creations in a single application. Sound editors generally focus on tweaking, editing, and mastering your final sound rather than generating new sounds.

The original DAWs attempted to copy the analog tools that had been used in music studios for decades. They can record and play back multiple audio tracks at the same time, which can be mixed and modified individually. Back when disk space and processing power were limited, a modest home computer would struggle to play back more than a couple of high-quality audio files, so early DAWs like Pro Tools were a combination of expensive audio hardware and software. As computers got faster and cheaper, more raw audio processing could be done purely in software.

Because early 8-bit home computers were so limited in power, they relied on dedicated sound chips to produce music and effects. To score and compose songs, the computer musician would use a music tracker. Trackers worked very differently from analog, multi-track recorders. They did have multiple “tracks” of sounds that played back simultaneously to make a song, but rather than long, continuous audio recordings, they were made of instructions for the built-in sound chips. The 16-bit era extended this to also allow sound samples (short sound files) to be sequenced.

For example, there might be a sample for a kick drum. Using an instruction like C-5 01 15 would play a C note in the 5th octave, using the 01 instrument sample, at volume 15. Because the samples were so small, long compositions took up much less space. Additionally, player software could be embedded in games, allowing songs to change dynamically in response to the state of the game. A huge music scene (the tracker scene) grew from the early trackers that continues to this day.
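As a rough illustration of how little data one of these instructions carries, the sketch below parses a cell like the one above into its parts. The layout (note, instrument, volume, all read as decimal numbers) is an assumption based on the example in the text; real tracker formats differ from one another and often use hexadecimal. parseTrackerCell and NOTE_OFFSETS are hypothetical names.

```javascript
// Semitone offsets within an octave for each note name
const NOTE_OFFSETS = { C: 0, D: 2, E: 4, F: 5, G: 7, A: 9, B: 11 };

// Parse a cell such as "C-5 01 15" or "F#3 02 08".
// Assumed layout for this sketch: note, instrument, volume (all decimal).
function parseTrackerCell(text) {
  const match = /^([A-G])([-#])(\d)\s+(\d+)\s+(\d+)$/.exec(text.trim());
  if (!match) {
    throw new Error(`Unrecognized tracker cell: ${text}`);
  }
  const [, letter, accidental, octave, instrument, volume] = match;
  const semitone = NOTE_OFFSETS[letter] + (accidental === "#" ? 1 : 0);
  return {
    note: letter + (accidental === "#" ? "#" : ""),
    octave: Number(octave),
    // Semitones above the C of octave 0, handy for working out a playback pitch
    pitch: Number(octave) * 12 + semitone,
    instrument: parseInt(instrument, 10),
    volume: parseInt(volume, 10)
  };
}

console.log(parseTrackerCell("C-5 01 15"));
```

A whole song is just a long list of cells like this plus a handful of short samples, which is why tracker music took up so little space.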

Today, most DAWs are a hybrid of traditional multi-track recorder, MIDI editor, and music tracker. Cubase, Logic Pro, and Ardour (open source) follow the traditional multi-track recording approach. Max/MSP (or the open-source equivalent Pure Data) follows in the footsteps of Csound, but in real time and with a GUI. Renoise, SunVox (open source), and FL Studio are more closely related to music trackers than multi-track recorders. Ableton Live and Bitwig Studio are good examples of DAWs that broke away from early software music packages and focused on music performance and experimentation. They’re extremely popular with DJs and electronic music producers.

Sound-effect Generators

Actually, let’s not get ahead of ourselves. DAWs are heavy, serious packages—especially if you just want a few quick sounds for your game. One far less intimidating piece of software that should be a part of every game developer’s audio toolkit is a sound-effect generator. Sound-effect generators are great fun to play with. They’re standalone synthesizers (that is, not a plugin for a DAW) designed to make it absurdly easy to create random, 8-bit sound effects such as explosions, pickup noises, power-up effects and more—no experience necessary!

There are many sound-effect generators, but the best-known one is Sfxr by Tomas Pettersson (aka DrPetter), released in 2007. Since then, there have been various clones for different platforms, such as Bfxr, a Flash port you can try on the Web. Load up the sound generator and hit “randomize” until it makes a sound you want: it can’t get any easier than that! If you find a sound you kind of like, but it’s not perfect, hit the “mutation” button to get other similar sounds. And if that’s not quite right either, get tweaking the dozens of parameters!

That Sounds Familiar

Sound-effect generators are extremely fun and practical, but you’re not going to win any awards for originality using these sounds. The ease and popularity of effects generators means the results are very recognizable. If you just don’t have time to improve them, then go for it! Otherwise, consider adding extra effects (or layering them with other sounds) in your DAW or sound editor to make them original again!

Samples and DIY Recording

The job of game creator requires you to wear many hats. One of them is a “Foley artist” hat. A Foley artist creates sound effects and incidental sounds. If an actor puts keys in their pocket, the Foley artist makes the jangly sound. Often the method of producing the sound doesn’t relate at all to what’s seen on screen. Flapping a pair of leather gloves for flying bat wings, or punching some Corn Flakes for walking on gravel: a Foley artist does whatever it takes to create the illusion of natural sounds.

Getting the perfect sound to match an action in your game will require a lot of searching and testing. But often you won’t have that kind of time: you just need to get some sounds in the game right now. The tried-and-true method for making sounds fast is … mouth sounds! Humans are pretty good at making noise, and what easier way to get roughly the sound you’re after than gurgling directly into your computer microphone?

You don’t need to be a beat-box expert to get usable effects. With even the smallest amount of tweaking, you can get some very effective results. They also act as excellent placeholders and references while you develop your game. Some sounds (such as those of the undead groaning zombies in Minecraft) stay there forever.

The first thing you’ll need is a microphone. Your computer likely has a built-in microphone, or you can use the mic in your cellphone earbuds. Anything that lets audio in. Next, we need some software for recording. You probably also have something already on your computer to take audio notes, but we’ll need to level up and use a sound editor.

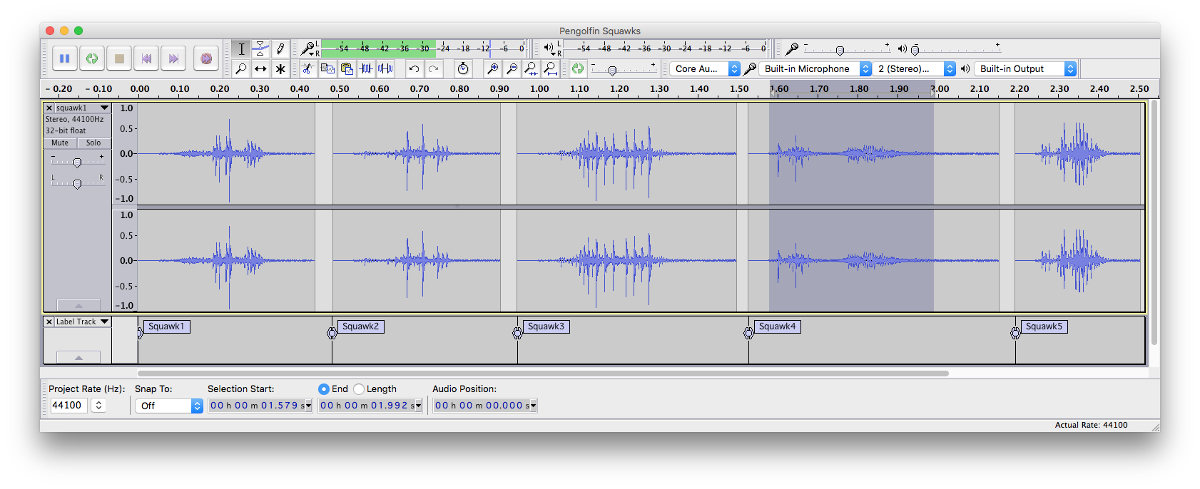

A sound editor is the Photoshop of audio—the Swiss army knife of sound. It lets you record, cut, paste, tweak, and polish your audio files. The best free, cross-platform sound editor is Audacity. It’s been around for a long time, and although it feels a bit clunky in comparison to some of its expensive rivals, it’s very capable, and more than adequate for our needs.

To prepare for recording, create a new sound file (FILE -> NEW) and select your audio input device. (It should be correct by default, but there’s a small icon of a microphone, with a dropdown box listing all the possible input methods.) You can also choose to record either stereo or mono audio, but stereo is the default. The human voice is a monophonic sound source (and many microphones are mono), but it’s best to work in stereo for when we do post-processing effects that apply individually on each channel.

Then hit the red circular record button. Audio data should be filling the screen—and if you make some noise, it should be clearly reflected in the waveform. We’re in business. Start making some noises: bloops, beeps, swooshes, squelches. Whatever you need!

If we’re going to start polishing up Pengolfin’ for the IGC, we should start with some general penguin sounds. I’m not 100% sure what “general penguin sounds” are, but we at least need some penguin grunts and squawks as it flies around, colliding with mountains and such. We’ll also want a solid thwack when propelling the projectile. We’ll record a ruler swatting a phone book to get that.

After you’ve done a bunch of squawking, grunting, and thwacking, hit the stop button and you’ll have something to work with. Play the audio back and listen for promising candidates. Also keep your ear out for possible issues with the audio—crackling or popping or background noises. These can be easy to miss if you’re not paying close attention.

Don’t Go to Eleven

For the best quality, you want to be as loud as possible without causing “clipping”. Clipping occurs when the sound level goes above the maximum capacity of the recording system. Any data outside of this range will be chopped off. If you zoom in close and notice flat areas in your audio, it has clipped and will sound distorted. If you record too quietly, you’ll have more noise (background hiss) in the final result. Record raw sounds as cleanly and loudly as possible while avoiding any clipping.
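If you have the raw samples in hand, those tell-tale flat areas can also be found in code. This is a minimal sketch, assuming the audio is an array of floating-point samples between -1 and 1; longestClippedRun and its ceiling parameter are hypothetical names.

```javascript
// Length of the longest run of consecutive samples stuck at full scale.
// Assumes floating-point samples in the range -1 to 1.
function longestClippedRun(samples, ceiling = 0.999) {
  let longest = 0;
  let current = 0;
  for (const sample of samples) {
    if (Math.abs(sample) >= ceiling) {
      current++;
      if (current > longest) longest = current;
    } else {
      current = 0;
    }
  }
  return longest;
}

const clean = [0, 0.5, 0.9, 0.5, 0];
const clipped = [0, 0.8, 1, 1, 1, 0.8, 0];
console.log(longestClippedRun(clean));   // 0
console.log(longestClippedRun(clipped)); // 3
```

A single sample at full scale is not necessarily a problem. A run of several in a row is the flat top you would see when zoomed in.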

Cropping and Editing

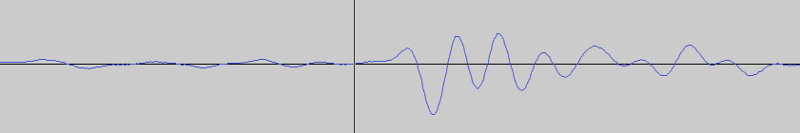

With source material in hand, we can start chopping it up and “effecting” it. Start by selecting the area that contains a sound you like, with a generous amount of space before and after. Copy it, then create a new window and paste it in. We need to find good start and end points for the sample. This can be important, because if you’re not careful you can end up with very audible “click” sounds.

There are two ways to avoid this. One is to zoom in extremely close—as far as your audio editor will allow—and cut the waveform at a zero crossing. This is the point where the waveform crosses over from positive to negative, or negative to positive. The volume level here is 0, so we don’t get a click. Removing everything before the zero crossing will give us a nice clean start to our sample.
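The zero-crossing hunt is easy to automate. The sketch below assumes the sound is a plain array of samples; findZeroCrossing and trimToZeroCrossing are hypothetical helpers, not part of any audio editor.

```javascript
// Index of the sample nearest the first zero crossing at or after `from`,
// or -1 if the waveform never changes sign.
function findZeroCrossing(samples, from = 0) {
  for (let i = Math.max(from, 1); i < samples.length; i++) {
    const prev = samples[i - 1];
    const curr = samples[i];
    if ((prev <= 0 && curr > 0) || (prev >= 0 && curr < 0)) {
      // Pick whichever neighbor is closer to zero
      return Math.abs(prev) < Math.abs(curr) ? i - 1 : i;
    }
  }
  return -1;
}

// Drop everything before the first zero crossing
function trimToZeroCrossing(samples) {
  const start = findZeroCrossing(samples);
  return start === -1 ? samples : samples.slice(start);
}

console.log(trimToZeroCrossing([0.5, 0.3, -0.1, -0.4])); // [ -0.1, -0.4 ]
```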

The other way we can achieve this is by adding a tiny fade at the beginning and end of the sample. To do this, zoom in quite close and select a very short section at the start of the sound. Then click on Effect in the main menu and select Fade In. The start of the selection is attenuated to zero (silent), and it linearly rises to the original volume. You can do the same at the end of the sample, but with Fade Out. The fade should be short enough not to be audible, but long enough to avoid any clicks or pops.
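Under the hood, a linear fade is just a multiplication by a ramp. Here is a minimal sketch, assuming an array of samples and fade lengths given in samples; applyFades is a hypothetical helper and not how Audacity itself is implemented.

```javascript
// Apply linear fades in place. Lengths are in samples: at 44,100 samples
// per second, 220 samples is roughly 5 milliseconds.
function applyFades(samples, fadeInLength, fadeOutLength) {
  const n = samples.length;
  const inLen = Math.min(fadeInLength, n);
  const outLen = Math.min(fadeOutLength, n);
  for (let i = 0; i < inLen; i++) {
    samples[i] *= i / inLen; // 0 at the first sample, rising toward 1
  }
  for (let i = 0; i < outLen; i++) {
    samples[n - 1 - i] *= i / outLen; // 0 at the last sample
  }
  return samples;
}

console.log(applyFades([1, 1, 1, 1, 1, 1, 1, 1], 4, 2));
// [ 0, 0.25, 0.5, 0.75, 1, 1, 0.5, 0 ]
```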

Often your sound will naturally have a long tail, where the sound has finished and is fading out to silence. Because of noise introduced along the recording signal path, it’s a good idea to have a fade-out that’s quite long too. Select the area where the sound has become almost silent and drag until the end. Fade out this entire section to create a noise-free transition.

Layering Sounds

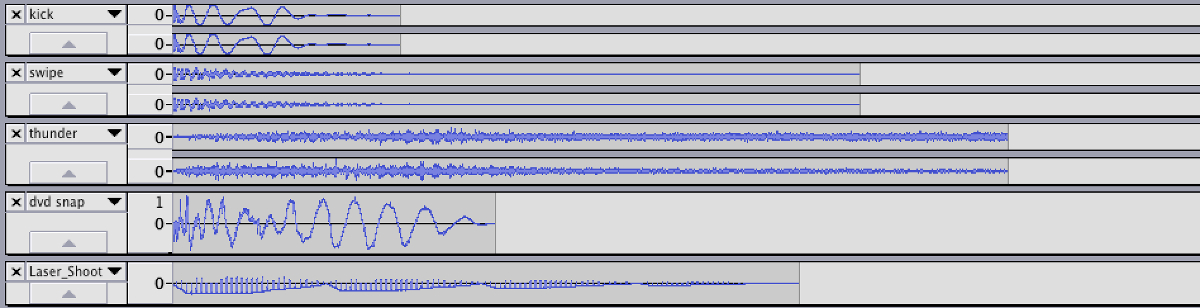

An excellent tip for making impressive audio effects is to layer multiple sounds that make up a single effect. The swoosh-thwack for launching our penguin can be beefed up with additional sounds. To make a swoosh-ier swoosh, I recorded swinging a broken car radio aerial. To make a thwack-ier thwack, I mixed in a bass drum sound for some low-end punch. I snapped a DVD-R in half to get a nice crack sound. Then I layered in a very faint sound of rolling thunder that I found copyright-free on Freesound.org. Finally, I added a wobbly sound from Sfxr. The result is the swooshiest, thwackiest sound you’ve ever heard.

Layering (combined with mixing and effecting) can turn pedestrian sounds into beasts. Experiment relentlessly, and remember to always listen to your effect in context with your graphics. Sometimes sounds that seem like they should be perfect just don’t work in a game, while other things you never dreamed would work end up bringing your game to life!

Zany Effects

Getting good source material is the best way to ensure a great end result (“garbage in, garbage out”, as they say). If you’re stuck with something less than stellar, or you just want to see how far you can push your recordings, then sometimes you can strike gold by getting wacky with audio effects.

Most audio editors will have a selection of built-in effects (usually with the option to install third-party plugins for more variety). The best thing you can do when starting out is go nuts with the built-in effects and learn what they all do! You’ll probably go massively overboard with them. Back up often, and every once in a while go back and make sure you didn’t lose anything that made the original special.

When making effects for games, some commonly useful effects are pitch-shifting and delays. Pitch-shifting means making a sound play at a different pitch than it was recorded at. It can produce great results when shifting in either direction: shift down, and our penguin-squawks sound like dinosaur growls; shift up, and our penguin becomes a lot more comical. Pitch-shifting also makes things you record not sound like you—which can help if you’re embarrassed when listening to your own voice!
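The simplest kind of pitch shift is to play the samples back faster or slower. The sketch below shows that approach under a few assumptions: the sound is an array of samples, the shift is given in semitones, and we accept that the duration changes along with the pitch (editors also offer shifts that keep the length, which take far more work). semitonesToRate and resample are hypothetical helpers.

```javascript
// Playback-rate multiplier for a shift of `semitones` (12 per octave)
function semitonesToRate(semitones) {
  return Math.pow(2, semitones / 12);
}

// Naive resampler with linear interpolation. rate > 1 raises the pitch
// and shortens the sound; rate < 1 lowers the pitch and lengthens it.
function resample(samples, rate) {
  const length = Math.floor(samples.length / rate);
  const out = new Float32Array(length);
  for (let i = 0; i < length; i++) {
    const pos = i * rate;
    const index = Math.floor(pos);
    const frac = pos - index;
    const a = samples[index];
    const b = index + 1 < samples.length ? samples[index + 1] : a;
    out[i] = a + (b - a) * frac;
  }
  return out;
}

console.log(semitonesToRate(12));  // 2 (one octave up)
console.log(semitonesToRate(-12)); // 0.5 (one octave down)
```

Shifting a squawk down an octave for a dinosaur growl would be resample(squawk, semitonesToRate(-12)), which also makes it twice as long.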

Delays are when a sound is fed back to itself to produce echoes, chorus, and phaser effects. A nice chorus or phaser effect will fatten up a dull sound or make it more futuristic. Echoes will change the feeling of the “space” in a game. Be consistent with sounds that are supposed to be playing in the same space as each other.
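A feedback delay is only a few lines of code. This sketch covers the plain echo case, assuming an array of samples and a delay time measured in samples; chorus and phaser effects need a delay time that moves over time and are not shown. addEcho and its parameters are hypothetical names.

```javascript
// Feedback delay: each echo is `feedback` times as loud as the previous one.
// Keep feedback below 1, or the echoes grow instead of dying away.
function addEcho(samples, delaySamples, feedback = 0.5, tailRepeats = 4) {
  // Leave extra room at the end for the echoes to ring out
  const out = new Float32Array(samples.length + delaySamples * tailRepeats);
  for (let i = 0; i < out.length; i++) {
    const dry = i < samples.length ? samples[i] : 0;
    const echo = i >= delaySamples ? out[i - delaySamples] * feedback : 0;
    out[i] = dry + echo;
  }
  return out;
}

// A single click followed by two fading repeats
console.log(Array.from(addEcho([1, 0, 0], 2, 0.5, 2)));
// [ 1, 0, 0.5, 0, 0.25, 0, 0.125 ]
```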

Don’t be afraid to chain effects either. Reverse your sound, pitch-shift it up a couple of semitones, overlay a fat swirling chorus … BOOM! As with any software, it’ll take a while to learn what all the tools do and when’s the best time to use them. Sometimes you’ll conjure up an effect that sounds absolutely fantastic in your editor, but doesn’t work aesthetically with your game. Toss it out and save it for another project! Sounds must contribute to the aesthetic of your game.

Mastering Effects

Before you go too crazy with effects, you should ensure your raw recordings are as usable as possible. There’s a handful of core effects and techniques that are used to make more professional-sounding effects and recordings. These subtle (less zany) post-processing effects are often used as part of a track’s mastering phase (which we’ll look at in more detail later), but can also help to clean up and improve the raw input.

Firstly, if you recorded the sound too quietly, you can use a volume amplifier (Effect -> Amplify in Audacity) to bring the overall level up. Amplifying raises the level of all sounds equally. If you do too much, the loudest part of the sound will clip and create distortion. To avoid this, you can use the normalize effect that will amplify the sound as much as possible without clipping.
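Normalizing boils down to finding the loudest sample and scaling everything so that it just reaches the limit. A minimal sketch, assuming floating-point samples between -1 and 1 (peakLevel and normalize are hypothetical helpers, not Audacity's implementation):

```javascript
// Largest absolute sample value in the recording
function peakLevel(samples) {
  let peak = 0;
  for (const sample of samples) {
    peak = Math.max(peak, Math.abs(sample));
  }
  return peak;
}

// Scale every sample by the same amount so the peak lands on `target`
function normalize(samples, target = 1) {
  const peak = peakLevel(samples);
  if (peak === 0) return Float32Array.from(samples); // pure silence: nothing to do
  const gain = target / peak;
  return Float32Array.from(samples, s => s * gain);
}

// A quiet recording peaking at 0.25 is made four times louder
console.log(peakLevel(normalize([0.1, -0.25, 0.2]))); // 1
```

Because every sample gets the same gain, one loud spike limits how much the rest of the sound can be raised.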

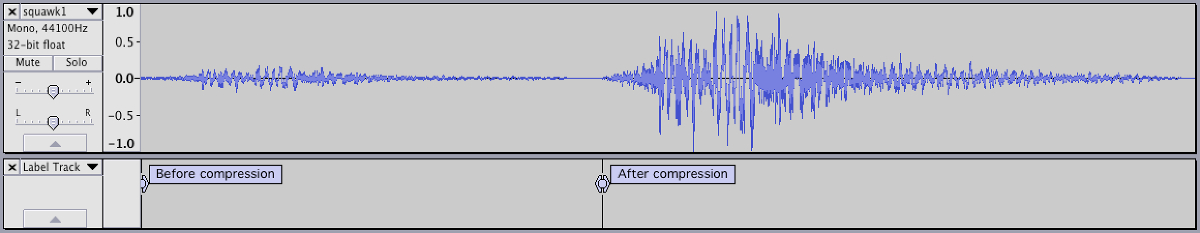

Often, this still isn’t good enough. A lot of times you won’t be able to make the quiet parts of your sound loud enough without clipping the loud parts. The solution is to apply an audio compressor. A compressor magically reduces only loud parts of your sound file (you can define what “loud” means on a sound-by-sound basis). It then applies an overall makeup gain so the entire sound becomes louder without introducing clipping.
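To take some of the magic out of it, here is a deliberately naive compressor. It is a sketch under several assumptions: samples are floats between -1 and 1, the level detector reacts instantly to peaks and relaxes slowly, and the knee is hard. Real compressors add attack times, soft knees, and usually work in decibels. compress and its options are hypothetical names.

```javascript
// Naive feed-forward compressor. Levels above `threshold` are reduced
// so that they rise only 1/ratio as fast, then `makeup` gain is applied.
function compress(samples, { threshold = 0.5, ratio = 4, release = 0.999, makeup = 1 } = {}) {
  const out = new Float32Array(samples.length);
  let envelope = 0; // running estimate of how loud the signal currently is
  for (let i = 0; i < samples.length; i++) {
    const level = Math.abs(samples[i]);
    // Jump up instantly on a peak, decay slowly afterwards
    envelope = level > envelope ? level : envelope * release;
    let gain = 1;
    if (envelope > threshold) {
      const target = threshold + (envelope - threshold) / ratio;
      gain = target / envelope;
    }
    out[i] = samples[i] * gain * makeup;
  }
  return out;
}

// The full-scale peak is pulled down to 0.625; the quiet start is untouched
console.log(Array.from(compress([0.2, 1, 0.2])));
```

With the peak tamed to 0.625, a makeup gain of up to 1.6 brings it back to full scale and lifts everything else with it, which is how the whole sound ends up louder without clipping.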

This Is Not the Same as File Size Compression!

Don’t confuse audio compression with file compression. This has nothing to do with file size, and everything to do with audio volume!

Audio compression is a black art, but is really important for making your music work in a variety of playback environments. Done well, compression can make your overall mix louder, more impressive, and more “professional”. But if you overdo it, the sound can lose clarity and punch, or introduce too much background hiss.

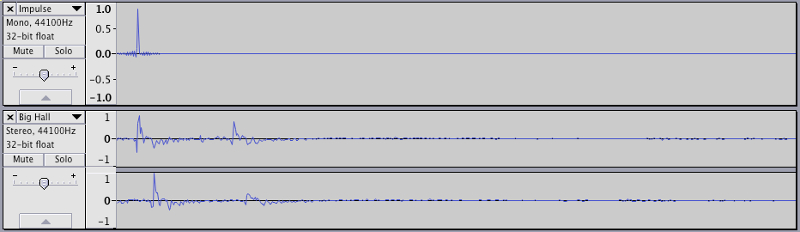

Another essential effect—probably the essential effect—is reverb. Reverb adds a slight echo to a recording, giving it a feeling of space. As humans, we rely on natural reverb for locating sound sources in our environment. If you clap your hands in a large concrete hall, it sounds very different from clapping your hands in your carpeted bedroom. Your brain uses reverb to understand direction and distances. Using this in your games can be very powerful. Using it in your music can make things sound more natural and real and (like singing in the shower) can make things sound better.

The final core effects you should be familiar with are equalizers (EQs) and filters. These might not always be necessary (and filters can also be abused as a creative effect). They’re familiar fixtures of most home stereos, and involve selectively boosting or cutting certain frequency ranges in the sound.

Filters come in a variety of types. The most common filters are the high-pass (reduces low frequencies), low-pass (reduces high frequencies), and band-pass (reduces high and low frequencies, leaving the middle area untouched). There are thousands of filter plugins available, and they’re an excellent tool for radically shaping a sound. Even our humble mouth sounds can sound like massive explosions with a chunky, low-pass filter, some heavy compression, and a wash of echoey reverb.
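The three filter types can be sketched with the simplest filter there is, a one-pole low-pass. This is only an illustration: the amount parameter (0 to 1) is a stand-in for a proper cutoff frequency, the slopes are very gentle, and real filter plugins use more sophisticated designs. All three function names are hypothetical.

```javascript
// One-pole low-pass: each output moves a fraction `amount` (0 to 1)
// of the way toward the input, smoothing out rapid changes.
function lowPass(samples, amount) {
  const out = new Float32Array(samples.length);
  let previous = 0;
  for (let i = 0; i < samples.length; i++) {
    previous += amount * (samples[i] - previous);
    out[i] = previous;
  }
  return out;
}

// High-pass: whatever the low-pass removed
function highPass(samples, amount) {
  const low = lowPass(samples, amount);
  return Float32Array.from(samples, (s, i) => s - low[i]);
}

// Band-pass: cut the lows, then cut the highs
function bandPass(samples, lowCut, highCut) {
  return lowPass(highPass(samples, lowCut), highCut);
}

// A rapidly alternating signal comes out of the low-pass much quieter
console.log(Array.from(lowPass([1, -1, 1, -1], 0.5)));
// [ 0.5, -0.25, 0.375, -0.3125 ]
```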

These are the main DSP effects for improving and sculpting good raw sounds. During this phase, you must carefully and constantly A/B test any changes you make to ensure you’re actually improving things. However, when it comes to effects for creative purposes, there are no rules and no limits—so feel free to apply as many effects as extremely as you can!

Recording Tips

For the purposes of game development, we can happily adopt the “whatever works” approach to sound design: whatever gets good noises in our game is good enough. But sound design is also a field with a long history, and there are lots of rules of thumb and general guidelines to help us achieve better results …

Use the Best Gear You Can Get Your Hands On

There’s a world of difference between the microphone in your laptop and a Neumann U87 (which will set you back several thousand dollars). You can pick up a decent microphone pretty cheaply these days. Just remember that a microphone captures analog sounds, which need to be converted to digital for use in our games. Just like the mic, the analog-to-digital converters in your laptop are generally pretty average. Some microphones include their own converters (if they plug directly in via USB, for example). Otherwise, you’ll need to get an audio interface.

Also be aware that there are different types of microphones—primarily dynamic and condenser microphones. Condensers are great for recording vocals, because they’re extremely sensitive and can pick up a wide frequency range. But they’re generally more expensive and may require phantom power—a 48-volt power source often supplied by mixing desks and preamps. If it plugs in via USB, it’ll be ready to go out of the box.

Find a Quiet Recording Environment

It’s easy to ignore a lot of the day-to-day noises that surround us. Sometimes you won’t even notice they exist—until a passing car or a sneezing roommate ruins an otherwise fantastic recording. Be mindful of the sounds around you, and try to record in the quietest part of your house—during the dead of night!

Watch Your Signal Level

When recording, keep a close eye on the input level of your sound source. If it’s too low, you’ll be capturing too much background and signal-chain noise. If it’s too loud, you’ll get clipping that, in most cases, will render the recording unusable. Most recording software has level indicators that include a red light that remains lit if any clipping occurs so you know you’ve gone too far.

Reduce the Echo

Even in the dead of night, there’s a hidden source of noise that will mess up your sounds: room reverb. Any time you record, you pick up the natural reverb of the room you’re in. A good way to test this is to stand in the center of the room and clap (once) sharply and loudly. Reverb is the sound bouncing around the room, reflecting off the walls, slowly decaying. In a room with poor sound absorption you’ll hear a flanging robotic effect. That’s bad for sound recording. (Even a room with beautiful natural reverb might not be what you need for your effects; and you can’t remove the reverb after it’s recorded.)

To fix the echo, get creative! You need to dampen the sound reflecting off of hard, parallel surfaces. Pull up your bed mattress and lean it against the wall. Hang blankets around the room. Litter the ground with sweaters. Pull books half out of the bookshelf to create uneven surfaces. Try the clap-test again after each change. The “deader” the sound, the better.

Music for Games

We’ve already put on the Foley artist hat—and we’re making this whole game from scratch by ourselves—so why not take it all the way and be the musician too? Music is a very important (and often neglected) aspect of game creation. It’s sometimes added as an afterthought, or omitted entirely—which is a grave mistake! Music is a powerful and efficient tool for establishing mood and feeling, for building tension and excitement, and for controlling the pacing of a piece. The movie industry knows it, the big game studios know it—and you should know it, too!

There are various options when you want to get music in your games. The easiest is to grab some pre-made tracks with a suitable copyright license and plonk them in. The problem with this approach is that anyone else can use those tracks for their game too, and there’s no chance of getting any variations or changes made to them. It’s often obvious and distracting when a game mixes song sources, so choose this path at your peril.

The next option is to find a game music composer and work with them. This might not be as difficult as you imagine: there are lots of aspiring game musicians out there looking for a chance to feature their work in finished games! If you look on popular game developer forums—such as TIGSource or Reddit Gamedev—you’ll find many musicians looking for projects. Collaborating with others can be very rewarding and fun. Be sure to lay out expectations clearly at the beginning (and establish milestones to track progress) to avoid wasting each other’s time.

If you want original music but don’t want to start collaborating with others, the only choice left is to do it yourself! Yes! Your first efforts might not go platinum, but the reward will be a game that’s completely your own creation! There are many music-making packages available and (like choosing a text editor or an IDE) the one for you will be a personal choice. If you don’t have a lot of experience, you can start with GarageBand or Mixcraft—which offer drag-and-drop music creation from a stack of pre-packaged loops. After that, you’ll have to try them all and see what you like. My personal favorites are Ableton Live and SunVox.

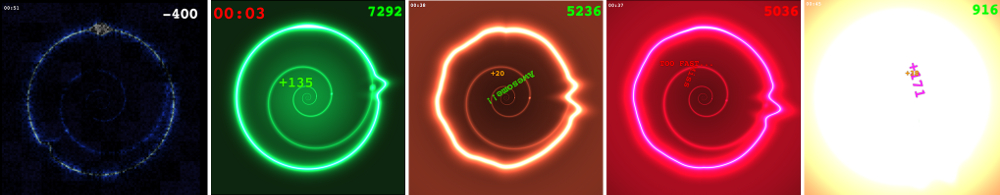

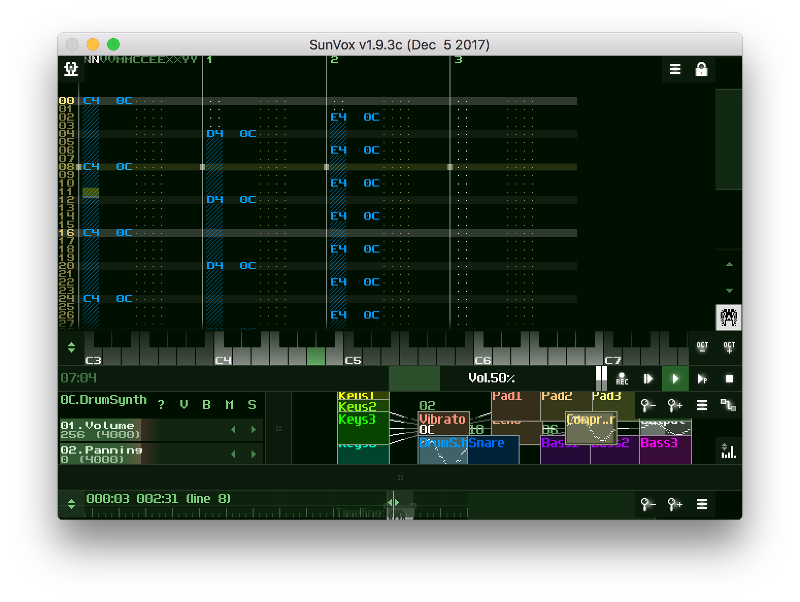

Creating a Track from Scratch

Pengolfin’ needs a theme song that’s fitting for a fun-filled carnival at the South Pole. Most likely it should be in a major key (as otherwise it would be a sorrow-filled carnival at the South Pole), with a simple melody played on some kind of quirky, squishy bell or plucky sound. Something bright and happy. I’ve chosen to make our theme song in SunVox. SunVox is an audio tracker that’s free and open source, available on many platforms (including mobile devices), and has some built-in synthesizers, enabling us to make a whole song with no extra requirements.

Trackers let you sequence sounds in a spreadsheet-like matrix. The rows play one at a time, from top to bottom, and any sounds on the same row will play simultaneously. Each cell determines which sound triggers, at what note. The main advantage of trackers (back when computer memory was measured in kilobytes) was that a small number of sound samples could be reused and arranged into a full song. It’s less important these days, but trackers are still fun to use and easy to program.

To kick things off (drum-based pun there), we’ll make a simple beat. Honestly, you can play any percussive sounds at regular intervals and they’ll sound good. But starting with a stock-standard beat will be more familiar to your listeners, and you can tweak it from there. We’ll go with the ol’ boom-ka-boom-ka beat. Play a bass drum (also called a “kick drum”, because real-life drummers use their feet for it) on every beat, a snare drum in between beats, and a closed hi-hat (chht) over the top of both of them:

Beat:   1 & 2 & 3 & 4 & 1 & 2 & 3 & 4 &

Kick:   X - - - X - - - X - - - X - - -

Snare:  - - X - - - X - - - X - - - X -

Hi hat: - X - X - X - X - X - X - X - X

When you play this back, it sounds like this (if you sing the boom-kas and a friend does the chts):

count:  1     &     2     &     3     &     4     &

you:    boom  -     ka    -     boom  -     ka    -

friend: -     cht   -     cht   -     cht   -     cht

Rock and roll! Let’s transpose this into SunVox. Because we’re never playing the bass and snare at the same time, we could put them in the same channel (a column in the matrix), but for clarity we’ll separate them. Each row represents one beat, and every four rows make a bar (the first beat of a bar is colored slightly differently). In each cell you can place one note by pressing a keyboard key. This will determine the pitch. For drum sounds, it’s best to use their original pitch (which is generally the C note).

To make a sequence, first put SunVox in “edit” mode (to toggle), then place a kick drum sound in the first cell of the first channel. Then move down to the 5th row (the second bar) and place another, then the 9th row (the third bar) … and so on. Play it back: you’ve just made the classic four-on-the-floor drum loop! In the next channel, add a snare sound and put it between the kick drums (on beats 3, 7, 11 …). Finally, add a hi-hat on every second row. You’ve got yourself a beat.
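The same pattern can be written down as data, which is roughly how a tracker stores it. This sketch is not SunVox's file format: buildBeat and channelToString are hypothetical helpers, and rows are numbered from 0, so the 1st, 5th, and 9th rows described above become rows 0, 4, and 8.

```javascript
// Build the beat as a list of rows, one object per row.
function buildBeat(rows = 16) {
  const pattern = [];
  for (let row = 0; row < rows; row++) {
    pattern.push({
      kick: row % 4 === 0,  // 1st, 5th, 9th, 13th rows
      snare: row % 4 === 2, // 3rd, 7th, 11th, 15th rows
      hihat: row % 2 === 1  // every second row
    });
  }
  return pattern;
}

// Print one channel as a line of X and - marks
function channelToString(pattern, name) {
  return pattern.map(row => (row[name] ? "X" : "-")).join(" ");
}

const beat = buildBeat();
console.log("Kick:   " + channelToString(beat, "kick"));
console.log("Snare:  " + channelToString(beat, "snare"));
console.log("Hi hat: " + channelToString(beat, "hihat"));
```

Changing the modulo tests is a quick way to experiment with other rhythms before committing them to the tracker.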

It’s time for the most important ingredient in our theme song—the melody. This is what we want to get stuck in people’s heads and have them associate with our game. This is the time for magic. Select a synthesizer sound you like and start pressing keys on your keyboard until you make something catchy. Alternatively, just sequence some random things until you get something useful. (The safest approach is to space these evenly apart, on the drum beat.) Easy!

Making Music

This sounds like a pretty hand-wavy description of writing music. But seriously, plopping any old thing down will often sound better than you expect. It will take years of musical training to understand why it sounds good (or how to make it sound like you want) but you’ve been listening to music your whole life and have picked up the basics by osmosis. I promise.

Once you have a melody, it’s time for a bass line. The bass line tends to follow the rhythm of the drum beat: it’s the glue between the beat and the melody. It needs to sit well with both. It’s easiest to start with the same note as the melody, and play one note per bar. We’ll add a tuba-like oom-pah on each bar to make a bass line that feels fun.

That’s all we really need! Each page of notes in a tracker is called a pattern. The patterns can be arranged into a complete song. Our song arrangement will start with only the melody pattern, followed by the full song repeated four times. Humans like their music in multiples of four: four beats to a bar, played a multiple of four times. When you’re a pro, you can make some 5/4-time polyrhythmic jazz … but to begin with, keep it simple.

What Else?

This is a very simple song, and maybe you’re wondering what else you could add? After a melody and bass line, the next most common addition to a song is a “pad”. A pad is a rich sound like orchestra strings. It sits in the background and fills up the sonic space without making things sound cluttered.

The theme song is done; it’s ready to be exported for final mastering in our audio editor. As it stands, the song ends very abruptly (there’s no nice fade out, or ending). That’s what we want, because the final song will be imported in our game as new Sound("res/sound/theme.mp3", { loop: true }) and will loop around nicely when it gets to the end.

Music is about sequencing and layering sounds. The ideas behind making a song in any music package (or even in real life, with real instruments) will be similar to what we’ve done with SunVox, even if the user interface is completely different!

The Web Audio API

After using synthesizers in music packages like SunVox, you might start wondering, “But where does the sound come from?”

It’s kind of wizardry: summoning a sonic soundscape out of thin air. The original hardware analog synthesizers worked by combining electronic oscillator circuits that produced periodic signals: if you cycle the circuit a few hundred times a second, you’re producing an audible tone! These raw waveforms can then be mixed, re-routed, and filtered (removing certain frequencies, boosting others) to shape individual notes and create interesting timbres and effects.
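The digital version of an oscillator is just arithmetic. The sketch below fills a buffer with a sine wave and mixes two of them together, assuming 44,100 samples per second (a common rate for audio files). sineWave and mix are hypothetical helpers and are unrelated to the Web Audio API.

```javascript
// Fill a buffer with a sine wave of the given frequency (in cycles per second)
function sineWave(frequency, seconds, sampleRate = 44100) {
  const length = Math.round(seconds * sampleRate);
  const out = new Float32Array(length);
  for (let i = 0; i < length; i++) {
    out[i] = Math.sin(2 * Math.PI * frequency * i / sampleRate);
  }
  return out;
}

// Mix two waveforms by averaging them, sample by sample
function mix(a, b) {
  const length = Math.min(a.length, b.length);
  const out = new Float32Array(length);
  for (let i = 0; i < length; i++) {
    out[i] = (a[i] + b[i]) / 2;
  }
  return out;
}

// One second of a 440-cycles-per-second tone mixed with the octave above it
const tone = mix(sineWave(440, 1), sineWave(880, 1));
console.log(tone.length); // 44100
```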

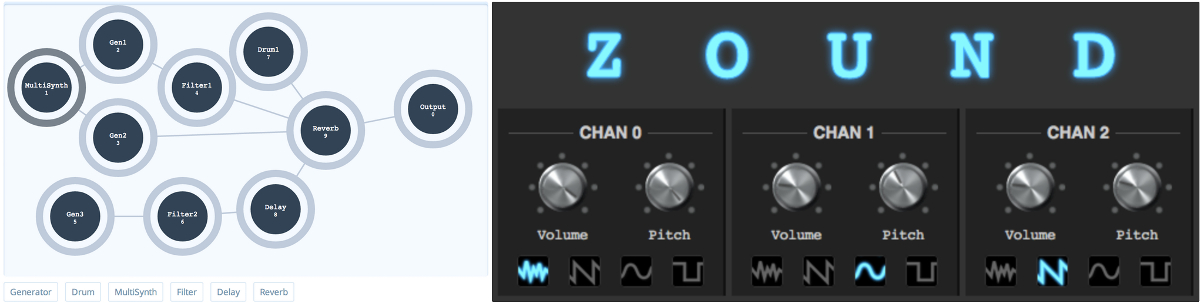

Software synthesizers emulate this process in code. Modular synthesizers have basic components (oscillators and filters) that can be networked in a myriad of ways to create complex musical systems. The system consists of a series of black-box modules that perform different audio tasks. Each module has one or more inputs and one or more outputs. They can be connected together to make more complex effects (which also can be packaged to form new black-box modules).

The Web Audio API provides a collection of these digital signal processing (DSP) components for generating and manipulating audio. The components (called audio nodes) are networked to form an audio routing graph capable of all manner of sound processing magic. The primary audio sources for the graph are either Audio elements (sound files), or oscillators that generate pure tones. The primary output will usually be the master destination—the user’s speakers.

Web Audio API

I won’t sugar-coat it: the Web Audio API is daunting and complex and a bit weird. As a result, there are many hoops to jump through to get things working the way you might expect. It is powerful, though, and it has some really nifty features. If you like the idea of wrangling audio in weird and wonderful ways, then it’s worth reading the spec and diving in further.

To work with the Web Audio API, we require an audio context, which is similar to the Canvas graphics context. This is available in the browser as window.AudioContext:

const ctx = new AudioContext();

Once you have an instance of the AudioContext, you can start to make the graph connections, which is the only way to get work done. The Web Audio API is all about connect-ing modular boxes together:

const master = ctx.createGain();

master.gain.value = 0.5;

master.connect(ctx.destination);

Our first audio node is a GainNode. A GainNode is created with the helper method ctx.createGain(). Gain is a module that attenuates or boosts its input source. It’s a volume knob. All audio nodes have a connect method for joining the audio routing graph (as well as a disconnect for leaving it). The parameter for connect should be another node. In our example, the GainNode is connected to a special node ctx.destination—which is the main audio output (your speakers!).

Audio Nodes

There are many basic audio nodes, and in this chapter we’ll instantiate them all via helper methods off the audio context (such as ctx.createGain()). A node can only exist with a reference to an audio context, so even the constructor form that modern browsers also support, new GainNode(ctx), takes the context as its first argument.

Audio nodes are controlled by tweaking their AudioParam parameters. Each node has zero or more parameters that are relevant to its functionality. A GainNode has only one AudioParam: gain. With the node’s gain value (gain.value) set to 0.5, any input comes out at half the amplitude it went in with. AudioParams can be set directly, or can be scheduled and automated over time (see the “Timing and Scheduling” section).
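Audio tools usually display volume in decibels rather than as a raw gain multiplier, so it can help to convert between the two. Here’s a minimal sketch, assuming the standard amplitude formula of 20 × log10(gain). The helper names gainToDb and dbToGain are made up for this example; they aren’t part of the Web Audio API or our library:

```javascript
// Hypothetical helpers: convert between a linear gain multiplier and decibels.
function gainToDb(gain) {
  return 20 * Math.log10(gain);
}

function dbToGain(db) {
  return Math.pow(10, db / 20);
}

console.log(gainToDb(0.5).toFixed(2)); // "-6.02"
console.log(dbToGain(-12).toFixed(3)); // "0.251"
```

So a gain.value of 0.5 is a cut of roughly 6 dB, and a gain.value of 1 leaves the signal untouched at 0 dB.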

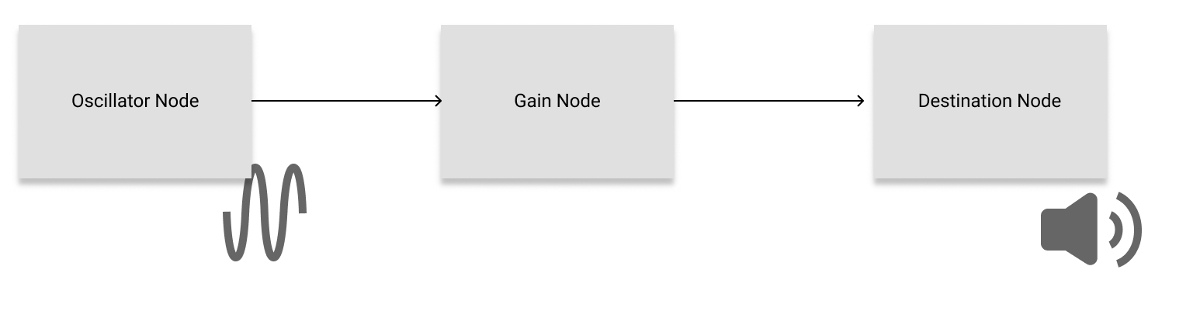

Oscillators

To generate sound magically out of nothing, the ctx.createOscillator() function creates an OscillatorNode audio node. The oscillator works like its hardware equivalent—repeating over and over fast enough to generate a cyclical waveform that we can hear (well, assuming we set the frequency audio parameter in the human hearing range, or that you’re a bat).

Look After Your Ears

Protect your ears: you can’t buy a new pair! While working with the Web Audio API, you’ll inevitably connect a node to the wrong place and end up with a feedback loop that will be very, very loud. If you’re wearing headphones, this can damage your hearing. Always work at a reasonable level, and add gain nodes with initial low volumes while you test new things.

const osc = ctx.createOscillator();

osc.type = "sine";

osc.frequency.value = 440;

osc.connect(master);

osc.start();

This creates a pure sine wave at a frequency of 440 Hz (the musical note A, called the “pitch standard”). The oscillator is connected to our volume node, which is connected to your speakers. The type property defines the shape of the repeating wave. The default is sine, and others include square, sawtooth, and triangle. Try them out. (I think you’ll find square is the best.)
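If you’d rather think in notes than in hertz, you can compute frequencies from the 440 Hz reference. This is a small sketch assuming standard twelve-tone equal temperament, where each semitone multiplies the frequency by the twelfth root of two. The noteFrequency helper is hypothetical, not something from our library:

```javascript
// Hypothetical helper: frequency of the note `semitones` away from A (440 Hz),
// assuming twelve-tone equal temperament.
function noteFrequency(semitones, base = 440) {
  return base * Math.pow(2, semitones / 12);
}

console.log(noteFrequency(0)); // 440 (A)
console.log(noteFrequency(12)); // 880 (A, one octave up)
console.log(noteFrequency(3).toFixed(2)); // "523.25" (C)
```

Setting osc.frequency.value = noteFrequency(3) would then play the C above our A.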

The audio parameter frequency determines the pitch and is measured in hertz (Hz). Humans generally can hear between 20 Hz and 20,000 Hz. Try them out. (Just watch your volume: don’t damage your ears!) Listening to pure tones can quickly become unpleasant, so we’ll stop the sound as soon as you hit the space bar:

game.run(() => {

if (keys.action) {

osc.stop();

}

});

You Can’t Restart a Stopped Oscillator

Once you stop an oscillator (or a buffer source, as we’ll see shortly) you can’t start it again. This is the weird nature of the Web Audio API. The idea is to remain as stateless as possible by simply creating things as you need them. Under the hood, things are optimized for instantiating large quantities of new audio objects. We’ll see how to handle multiple notes shortly.
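In practice, “creating things as you need them” means wrapping the setup in a function and calling it for every note. The following is only a sketch of that idea: playTone is a hypothetical helper, and it assumes you pass in an audio context and an output node (such as our master gain):

```javascript
// Hypothetical helper: play a short tone by creating a throwaway oscillator.
// `ctx` is an AudioContext; `output` is the node to play through.
function playTone(ctx, output, frequency = 440, duration = 0.25) {
  const osc = ctx.createOscillator();
  osc.type = "sine";
  osc.frequency.value = frequency;
  osc.connect(output);

  // start() and stop() accept a time on the context's clock, in seconds
  const now = ctx.currentTime;
  osc.start(now);
  osc.stop(now + duration);
  return osc;
}

// Usage (in the browser): each call gets its own fresh oscillator
// playTone(ctx, master, 440);
// playTone(ctx, master, 660, 0.5);
```

Because the stop time is scheduled up front, there’s nothing to clean up by hand: each oscillator plays once and is then discarded.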

A single oscillator is very annoying, but it’s a start. The beauty of modular audio systems is the ability to compose simple nodes into a complex timbre or effect.

Combining Oscillators

A far more interesting sound can be made from two oscillators. (In fact, many famous, old-school analog synthesizers contained only two oscillators, usually along with a white noise generator.) To combine the oscillators, just create a new one and also connect it to master. The settings need to be different, as otherwise it’ll just be the same sound but louder!

const osc2 = ctx.createOscillator();

osc2.type = "square";

osc2.frequency.value = 440 * 1.25;

osc2.connect(master);

osc2.start();

Here we have a square wave playing at 1.25 times the frequency of the sine wave. This ratio sounds nice. (It’s a ratio of 5/4, which happens to be a “major third” above the original A note.) Like the first oscillator, it’s connected to our master gain. If we were going to extend this mini-synth, we’d want to give it its own “output gain” node, so the volume of both oscillators could be controlled with one audio parameter, without affecting anything else in the audio graph.
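To make that “output gain” idea concrete, here’s one way it could look. Treat it as a sketch rather than part of our library: createDuoSynth and the shape of the object it returns are invented for this example.

```javascript
// Hypothetical sketch: two oscillators sharing one output gain node.
function createDuoSynth(ctx, destination, baseFrequency = 440) {
  const output = ctx.createGain();
  output.gain.value = 0.5; // start quiet: protect your ears
  output.connect(destination);

  const oscs = [
    { type: "sine", ratio: 1 },
    { type: "square", ratio: 5 / 4 } // a major third above the base note
  ].map(({ type, ratio }) => {
    const osc = ctx.createOscillator();
    osc.type = type;
    osc.frequency.value = baseFrequency * ratio;
    osc.connect(output); // both oscillators feed the synth's own gain node
    return osc;
  });

  return { output, oscs };
}

// Usage (in the browser):
// const synth = createDuoSynth(ctx, master);
// synth.oscs.forEach(osc => osc.start());
// synth.output.gain.value = 0.2; // turns down both oscillators at once
```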

Moving into Our Library

As mentioned, the Web Audio API is reasonably complicated and powerful—so it’s actually quite difficult to make a lovely API without sacrificing that power. In our library, we’ll just expose a couple of helpful things—the audio context, and a master gain node. For the rest, you’ll have to roll up your sleeves and do it yourself.

Create a new file at sound/webAudio.js. It will export an object with a hasWebAudio flag (so we can test before we use it), along with ctx audio context and master gain node:

const hasWebAudio = !!window.AudioContext;

let ctx;

let master;

ctx and master are initially undefined. They’ll be “lazy loaded” the first time we ask for them. This is so we don’t create an audio context if it’s not needed, or not supported on the platform:

export default {

hasWebAudio,

get ctx() {

// Set up the context

...

return ctx;

},

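get master() {

// Touch the ctx getter first, so master exists even if it's requested before ctx

return this.ctx ? master : undefined;

}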

};

The first time you ask for ctx, it will run the getter function and create the context and gain nodes, and connect to the user’s speakers:

// Set up the context

if (!ctx && hasWebAudio) {

ctx = new AudioContext();

master = ctx.createGain();

master.gain.value = 1;

master.connect(ctx.destination);

}

Everything should connect to the master gain node, rather than directly to the speakers. This way, it’s possible to implement overall volume control, or mute/unmute sound. Anywhere we want to do Web Audio work, we can grab a reference to the global context or connect nodes to the master gain node:

const { ctx, master } = webAudio;

An extension of this approach would be to make two additional gain nodes—say, sfx and music—both connecting to master. Any sounds would connect to the relevant channel and could be controlled independently in your game.
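A rough sketch of those channels might look like the following. The createChannels function is hypothetical (it isn’t in our library), and it assumes you hand it the audio context and the master gain node:

```javascript
// Hypothetical sketch: separate "sfx" and "music" channels feeding master.
function createChannels(ctx, master) {
  const makeChannel = volume => {
    const gain = ctx.createGain();
    gain.gain.value = volume;
    gain.connect(master);
    return gain;
  };
  return { sfx: makeChannel(1), music: makeChannel(1) };
}

// Usage (in the browser):
// const { sfx, music } = createChannels(ctx, master);
// music.gain.value = 0.3; // turn the music down, leave sound effects alone
```

Sounds then connect to sfx or music instead of master, and master still works as the overall volume and mute control.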

Audio Element as a Source

Oscillators can be useful for making quick sound effects, but your game probably won’t be generating sound from scratch in real time; it’ll be playing kick-ass sound samples and rockin’ some tunes you made earlier. Although this is already possible via the Audio element, we can’t do real-time effects on them until they’re part of the audio graph.

For this purpose, there’s ctx.createMediaElementSource(). It accepts an Audio (or Video) element as input and converts it to a MediaElementAudioSourceNode, which is an AudioNode that can be connect-ed to other nodes!

const plopNode = ctx.createMediaElementSource(plops.audio);

plopNode.connect(master);

This is the simplest way to get a sound into the Web Audio API world if it’s already loaded as an Audio element. In this state, it’s easy to apply some cool sound effects to it and do other audio post-processing (which we’ll do shortly).
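One gotcha to be aware of: browsers generally only let you wrap a given media element in a source node once, and complain if you try again. A simple guard is to cache the node per element. This is a sketch of that idea; getElementSource and the elementSources cache are hypothetical names, not part of our library:

```javascript
// Hypothetical helper: create at most one source node per media element.
const elementSources = new WeakMap();

function getElementSource(ctx, element) {
  if (!elementSources.has(element)) {
    elementSources.set(element, ctx.createMediaElementSource(element));
  }
  return elementSources.get(element);
}

// Usage (in the browser):
// const plopNode = getElementSource(ctx, plops.audio);
// plopNode.connect(master);
```

A WeakMap is used so the cache doesn’t keep an element alive after the rest of the game has let go of it.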

Source as an Audio Buffer

The MediaElementAudioSourceNode is easy, but a bit limited in its abilities: it can only do what a regular Audio element can do (play, stop … that’s about it). A more powerful, programmatic element is an AudioBufferSourceNode. An AudioBufferSourceNode can play back and manipulate in-memory data (stored in an AudioBuffer). It offers the ability to modify the detuning, playback rate, loop start and end points, and can be used for dynamically processing a buffer of audio.

The downside is that it’s harder to set up and use. You need to load the sound file as raw binary data (an ArrayBuffer), decode that data into an AudioBuffer, and finally assign the buffer to an AudioBufferSourceNode. Then it can be used in our audio graph. We’ll treat the sound buffer as a new asset type; it’s a good test of our asset loading system. Assets.soundBuffer will do the heavy lifting of loading the file and decoding it:

soundBuffer(url, ctx) {

return load(url, (url, onAssetLoad) => {

...

});

},

What should we return for a “buffer”, though? An image returns a new Image, and audio returns a new Audio element. A buffer is weirder: it doesn’t have a handy placeholder we can easily return before loading. This is the perfect use case for a JavaScript promise. A promise is a promise. “I promise I’ll give you a buffer … eventually.”

return fetch(url)

.then(r => r.arrayBuffer())

.then(ab => new Promise((success, failure) => {

// decodeAudioData takes a success callback and an error callback

ctx.decodeAudioData(ab, buffer => {

onAssetLoad(url);

success(buffer);

}, failure);

}));

We’re using fetch to grab a sound file from a URL. The fetch function happens to return a promise too—so we can use .then() to wait for the result of fetching. This is the beauty of promises: you can combine them and handle all asynchronous tasks in a consistent manner.

When the fetch promise resolves, we read the response body as an ArrayBuffer by calling arrayBuffer(). (Other possible functions include text() and json(), depending on the type of file you’re fetching.) We can then decode the ArrayBuffer as audio using ctx.decodeAudioData. This is an asynchronous operation, so we create a new Promise to wrap the callback function (so everything stays in promise-land).

A promise takes a function as a parameter, and this function is called with two of its own functions: success and failure. When the work you want to accomplish is complete, you call success to resolve the promise with a result, or failure to reject it with an error, and get on with things.
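Pulled out on its own, the wrapping step looks something like this. It’s a sketch, with decodeAudio being a name invented for the example; it relies only on the callback form of decodeAudioData, which takes the data, a success callback, and an error callback. (Current browsers can also return a promise from decodeAudioData directly.)

```javascript
// Hypothetical helper: wrap the callback form of decodeAudioData in a promise.
function decodeAudio(ctx, arrayBuffer) {
  return new Promise((success, failure) => {
    // The second argument is called with the decoded AudioBuffer,
    // the third is called if the data can't be decoded.
    ctx.decodeAudioData(arrayBuffer, success, failure);
  });
}

// Usage (in the browser):
// decodeAudio(ctx, ab)
//   .then(buffer => console.log("Decoded", buffer.duration, "seconds"))
//   .catch(err => console.error("Couldn't decode audio", err));
```

Passing failure along matters: without it, a corrupt or unsupported file would leave the promise pending forever, and the loading screen would never finish.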

That’s part one done—making an AudioBuffer. Part two is to make an AudioBufferSourceNode to play it. These are lightweight nodes like oscillators: you create them each time you want to play a buffer. In sound/SoundBuffer.js, we’ll make something that acts a lot like a regular Sound, but has a default output node to connect to:

class SoundBuffer {

constructor(src, options = {}) {

this.options = Object.assign(

{ volume: 1, output: master },

options

);

// Get a promise for the decoded AudioBuffer

const audio = Assets.soundBuffer(src, ctx);

...

}

}

By default, it’ll connect to our webAudio.master gain node—but you can override this in options any time you call .play():

play(overrides) {

const { audio, options } = this;

const opts = Object.assign({ time: 0 }, options, overrides);

audio.then(buffer => {

// ...

});

}

In play, we collate our options and get a reference to the promise from our asset loader. Because it’s a promise, we can call .then to retrieve the audio buffer. (Once a promise is resolved, calling then will give us the same result each time. It doesn’t load anything again.) To play the buffer, we create an AudioBufferSourceNode with ctx.createBufferSource:

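const source = ctx.createBufferSource();

source.buffer = buffer;

// A buffer source has no volume property, so route it through a GainNode

const gain = ctx.createGain();

gain.gain.value = opts.volume;

if (opts.speed) {

source.playbackRate.value = opts.speed;

}

source.connect(gain);

gain.connect(opts.output);

source.start(0, opts.time);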

This again mimics Sound, but there are some new abilities. One is to set the playbackRate so we can alter the pitch on each play. This is great for varying sound effects like bullets and walking as we did earlier, without requiring any additional sound files.
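Playback rate is a multiplier, so it’s often easier to think in semitones and convert. The helpers below are a sketch under that assumption (twelve semitones doubles the rate); semitonesToRate and randomRate are hypothetical names, not library functions:

```javascript
// Hypothetical helper: playback rate that shifts pitch by a number of semitones.
function semitonesToRate(semitones) {
  return Math.pow(2, semitones / 12);
}

// Hypothetical helper: pick a random rate within +/- `spread` semitones.
// `random` is injectable so the result can be made predictable when testing.
function randomRate(spread = 2, random = Math.random) {
  const semitones = (random() * 2 - 1) * spread;
  return semitonesToRate(semitones);
}

console.log(semitonesToRate(12)); // 2 (one octave up, and twice as fast)
console.log(semitonesToRate(-12)); // 0.5

// Usage (in the browser), with `shoot` being some SoundBuffer:
// shoot.play({ speed: randomRate(2) });
```

Keep in mind that changing the rate changes the length of the sound as well as its pitch, so small spreads tend to work best for effects.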

Effecting a Sound Source

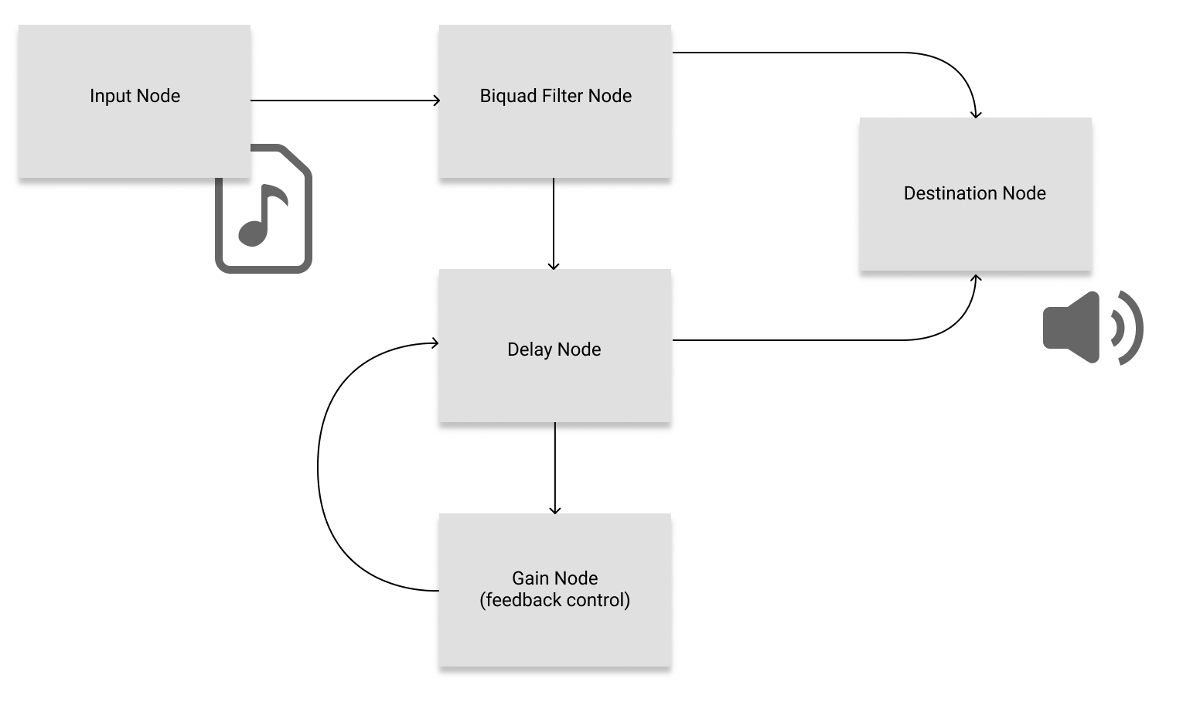

Once we have a source audio node ready and loaded (be it in the form of an Oscillator, MediaElementAudioSourceNode, or AudioBufferSourceNode) we can start making some effects to spice up our sounds. Audio effects are made by routing audio sources through one or more modules. We’ve already seen one “effect”—a simple gain node volume control. Cooler effects just require more modules linked together! There are several Web Audio API nodes that are helpful for making a variety of DSP effects:

GainNode (createGain): volume control

DelayNode (createDelay): pause before outputting the input source

BiquadFilterNode (createBiquadFilter): alter the volume of certain frequencies of the input source

DynamicsCompressorNode (createDynamicsCompressor): apply audio compression to the input

ConvolverNode (createConvolver): use convolution to apply a reverb effect to an input source
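Since effects are just nodes linked one after another, a tiny helper can take care of the wiring. This is only a sketch: chain is a hypothetical function, and all it assumes is that every node has a connect method.

```javascript
// Hypothetical helper: connect nodes in series, each feeding the next.
// Returns the first node, which is where source sounds should connect.
function chain(...nodes) {
  for (let i = 0; i < nodes.length - 1; i++) {
    nodes[i].connect(nodes[i + 1]);
  }
  return nodes[0];
}

// Usage (in the browser):
// const filter = ctx.createBiquadFilter();
// const compressor = ctx.createDynamicsCompressor();
// const input = chain(filter, compressor, master);
// source.connect(input); // source -> filter -> compressor -> master
```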

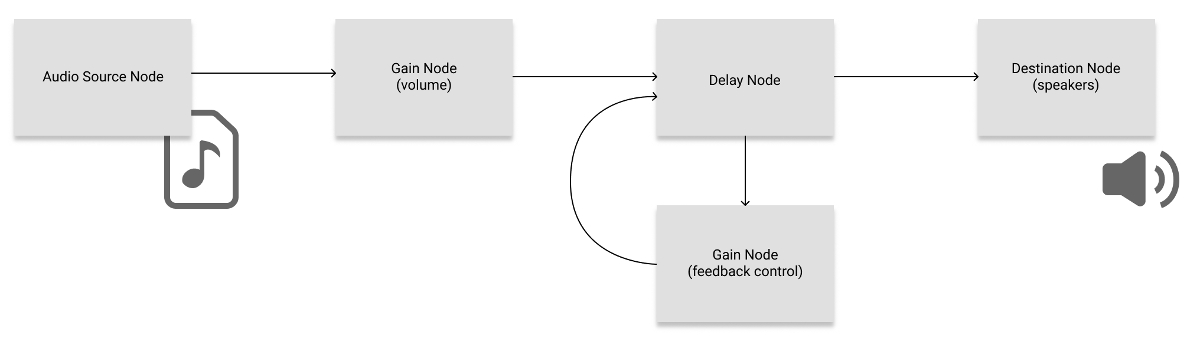

We’re going to create an “underwater in a cavern” effect for Pengolfin’. When our penguin ball falls into the water off the edge of the screen, the theme tune switches from a bright crisp sound to a gurgling underwater effect. The effect will consist of a filter, a delay, and a gain node connected together. The filter dampens the high-frequency sounds, and the delay and gain work together to make a feedback loop to make an underwater-y echo.
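To get a feel for how a delay-and-gain feedback loop behaves, it helps to do the arithmetic. This sketch assumes each trip around the loop simply multiplies the signal by the feedback gain (ignoring the filter), and echoTail is a hypothetical helper written just for illustration:

```javascript
// Hypothetical helper: list the echoes produced by a delay + feedback gain loop
// until they fall below `floor` (as a fraction of the original amplitude).
function echoTail(delayTime, feedbackGain, floor = 0.01) {
  if (feedbackGain >= 1) {
    throw new RangeError("feedbackGain must be below 1 or the loop never decays");
  }
  const echoes = [];
  let amplitude = feedbackGain;
  for (let n = 1; amplitude >= floor; n++) {
    echoes.push({ time: n * delayTime, amplitude });
    amplitude *= feedbackGain;
  }
  return echoes;
}

// A 0.2 second delay with a feedback gain of 0.5
console.log(echoTail(0.2, 0.5).length); // 6
```

With those example values, the echoes halve each time and drop below 1% of the original level after six repeats. A feedback gain of 1 or more would never die away (and would quickly get painfully loud), which is why the helper refuses it.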

function inACavern(ctx, destination) {

const filter = ctx.createBiquadFilter();

const delay = ctx.createDelay();

const feedback = ctx.createGain();

...

return filter;

}

Our effect generator is a function that returns the filter node. This is the node that source sounds connect to when we want them to pick up the effect. The filter will be connected to the node specified in the destination parameter. That gives our effect both an input and an output in the audio graph.

delay.delayTime.value = 0.2;

feedback.gain.value = 0.5;