CHAPTER 4

The Brain Reveals Its Secrets

People often say the brain is the most complicated thing in the universe. They conclude from this that there will not be a simple explanation for how it works, or that perhaps we will never understand it. The history of scientific discovery suggests they are wrong. Major discoveries are almost always preceded by bewildering, complex observations. With the correct theoretical framework, the complexity does not disappear, but it no longer seems confusing or daunting.

A familiar example is the movement of the planets. For thousands of years, astronomers carefully tracked the motion of the planets among the stars. The path of a planet over the course of a year is complex, darting this way and that, making loops in the sky. It was hard to imagine an explanation for these wild movements. Today, every child learns the basic idea that the planets orbit the Sun. The motion of the planets is still complex, and to predict their course requires difficult mathematics, but with the right framework, the complexity is no longer mysterious. Few scientific discoveries are hard to understand at a basic level. A child can learn that the Earth orbits the Sun. A high school student can learn the principles of evolution, genetics, quantum mechanics, and relativity. Each of these scientific advances was preceded by confusing observations. But now, they seem straightforward and logical.

Similarly, I always believed that the neocortex appeared complicated largely because we didn’t understand it, and that it would appear relatively simple in hindsight. Once we knew the solution, we would look back and say, “Oh, of course, why didn’t we think of that?” When our research stalled or when I was told that the brain is too difficult to understand, I would imagine a future where brain theory was part of every high school curriculum. This kept me motivated.

Our progress in trying to decipher the neocortex had its ups and downs. Over the course of eighteen years—three at the Redwood Neuroscience Institute and fifteen at Numenta—my colleagues and I worked on this problem. There were times when we made small advances, times we made large advances, and times we pursued ideas that at first seemed exciting but ultimately proved to be dead ends. I am not going to walk you through all of this history. Instead, I want to describe several key moments when our understanding took a leap forward, when nature whispered in our ear telling us something we had overlooked. There are three such “aha” moments that I remember vividly.

Discovery Number One: The Neocortex Learns a Predictive Model of the World

I already described how, in 1986, I realized that the neocortex learns a predictive model of the world. I can’t overstate the importance of this idea. I call it a discovery because that is how it felt to me at the time. There is a long history of philosophers and scientists talking about related ideas, and today it is not uncommon for neuroscientists to say the brain learns a predictive model of the world. But in 1986, neuroscientists and textbooks still described the brain more like a computer; information comes in, it gets processed, and then the brain acts. Of course, learning a model of the world and making predictions isn’t the only thing the neocortex does. However, by studying how the neocortex makes predictions, I believed we could unravel how the entire system worked.

This discovery led to an important question. How does the brain make predictions? One potential answer is that the brain has two types of neurons: neurons that fire when the brain is actually seeing something, and neurons that fire when the brain is predicting it will see something. To avoid hallucinating, the brain needs to keep its predictions separate from reality. Using two sets of neurons does this nicely. However, there are two problems with this idea.

First, given that the neocortex is making a massive number of predictions at every moment, we would expect to find a large number of prediction neurons. So far, that hasn’t been observed. Scientists have found some neurons that become active in advance of an input, but these neurons are not as common as we would expect. The second problem is based on an observation that had long bothered me. If the neocortex is making hundreds or thousands of predictions at any moment in time, why are we not aware of most of these predictions? If I grab a cup with my hand, I am not aware that my brain is predicting what each finger should feel, unless I feel something unusual—say, a crack. We are not consciously aware of most of the predictions made by the brain unless an error occurs. Trying to understand how the neurons in the neocortex make predictions led to the second discovery.

Discovery Number Two: Predictions Occur Inside Neurons

Recall that predictions made by the neocortex come in two forms. One type occurs because the world is changing around you. For example, you are listening to a melody. You can be sitting still with your eyes closed and the sound entering your ears changes as the melody progresses. If you know the melody, then your brain continually predicts the next note and you will notice if any of the notes are incorrect. The second type of prediction occurs because you are moving relative to the world. For example, as I lock my bicycle in the lobby of my office, my neocortex makes many predictions about what I will feel, see, and hear based on my movements. The bicycle and lock don’t move on their own. Every action I make leads to a set of predictions. If I change the order of my actions, the order of the predictions also changes.

Mountcastle’s proposal of a common cortical algorithm suggested that every column in the neocortex makes both types of predictions. Otherwise, cortical columns would have differing functions. My team also realized that the two types of prediction are closely related. Therefore, we felt that progress on one subproblem would lead to progress on the other.

Predicting the next note in a melody, also known as sequence memory, is the simpler of the two problems, so we worked on it first. Sequence memory is used for a lot more than just learning melodies; it is also used in creating behaviors. For example, when I dry myself off with a towel after showering, I typically follow a nearly identical pattern of movements, which is a form of sequence memory. Sequence memory is also used in language. Recognizing a spoken word is like recognizing a short melody. The word is defined by a sequence of phonemes, whereas a melody is defined by a sequence of musical intervals. There are many more examples, but for simplicity I will stick to melodies. By deducing how neurons in a cortical column learn sequences, we hoped to discover basic principles of how neurons make predictions about everything.

We worked on the melody-prediction problem for several years before we were able to deduce the solution, which had to exhibit numerous capabilities. For example, melodies often have repeating sections, such as a chorus or the da da da dum of Beethoven’s Fifth Symphony. To predict the next note, you can’t just look at the previous note or the previous five notes. The correct prediction may rely on notes that occurred a long time ago. Neurons have to figure out how much context is necessary to make the right prediction. Another requirement is that neurons have to play Name That Tune. The first few notes you hear might belong to several different melodies. The neurons have to keep track of all possible melodies consistent with what has been heard so far, until enough notes have been heard to eliminate all but one melody.
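The Name That Tune requirement can be illustrated with a toy sketch. This is not the neural mechanism, only the bookkeeping it has to achieve. The melody library, the tune names, and the helper functions are hypothetical, and the sketch assumes every melody is heard from its first note.

```python
# Hypothetical melody library; tune names and notes are illustrative only.
MELODIES = {
    "tune_a": ["C", "C", "G", "G", "A", "A", "G"],
    "tune_b": ["C", "C", "G", "E", "D", "C"],
    "tune_c": ["E", "D", "C", "D", "E", "E", "E"],
}

def consistent_melodies(heard, melodies=MELODIES):
    """Return the names of melodies whose opening notes match everything heard so far."""
    return sorted(
        name for name, notes in melodies.items()
        if notes[:len(heard)] == list(heard)
    )

def predicted_next_notes(heard, melodies=MELODIES):
    """Return the union of next notes predicted by every still-consistent melody."""
    n = len(heard)
    return {
        melodies[name][n]
        for name in consistent_melodies(heard, melodies)
        if len(melodies[name]) > n
    }
```

After the notes C, C, G, two tunes remain possible and two different next notes are predicted. Hearing one more note eliminates all but one melody.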

Engineering a solution to the sequence-memory problem would be easy, but figuring out how real neurons—arranged as we see them in the neocortex—solve these and other requirements was hard. Over several years, we tried different approaches. Most worked to some extent, but none exhibited all the capabilities we needed and none precisely fit the biological details we knew about the brain. We were not interested in a partial solution or in a “biologically inspired” solution. We wanted to know exactly how real neurons, arranged as seen in the neocortex, learn sequences and make predictions.

I remember the moment when I came upon the solution to the melody-prediction problem. It was in 2010, one day prior to our Thanksgiving holiday. The solution came in a flash. But as I thought about it, I realized it required neurons to do things I wasn’t sure they were capable of. In other words, my hypothesis made several detailed and surprising predictions that I could test.

Scientists normally test a theory by running experiments to see if the predictions made by the theory hold up or not. But neuroscience is unusual. There are hundreds to thousands of published papers in every subfield, and most of these papers present experimental data that are unassimilated into any overall theory. This provides theorists like myself an opportunity to quickly test a new hypothesis by searching through past research to find experimental evidence that supports or invalidates it. I found a few dozen journal papers that contained experimental data that could shed light on the new sequence-memory theory. My extended family was staying over for the holiday, but I was too excited to wait until everyone went home. I recall reading papers while cooking and engaging my relatives in discussions about neurons and melodies. The more I read, the more confident I became that I had discovered something important.

The key insight was a new way of thinking about neurons.

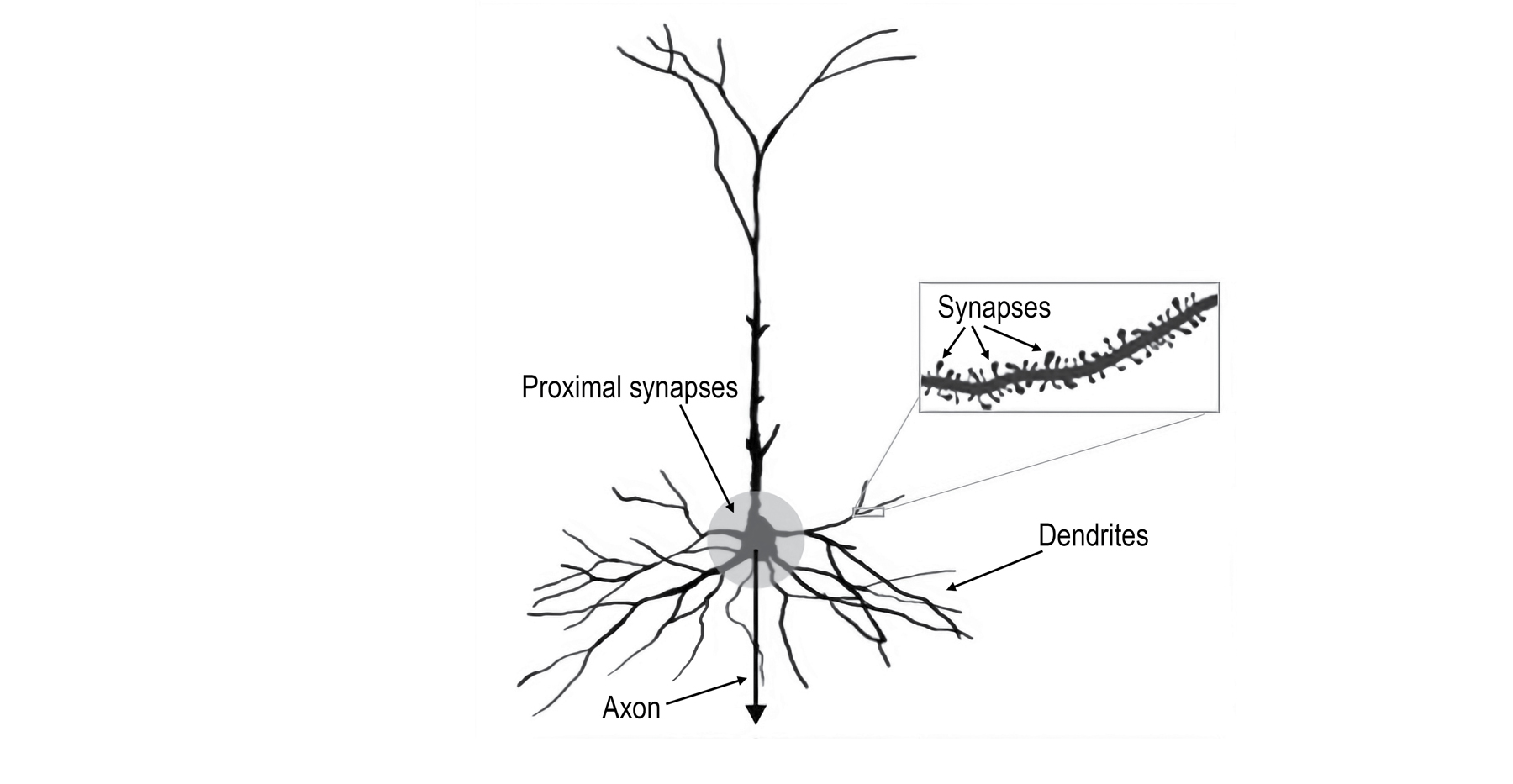

A typical neuron

Above is a picture of the most common type of neuron in the neocortex. Neurons like this have thousands, sometimes tens of thousands, of synapses spaced along the branches of the dendrites. Some of the dendrites are near the cell body (which is toward the bottom of the image), and some dendrites are farther away (toward the top). The box shows an enlarged view of one dendrite branch so you can see how small and tightly packed the synapses are. Each bump along the dendrite is one synapse. I’ve also highlighted an area around the cell body; the synapses in this area are called proximal synapses. If the proximal synapses receive enough input, then the neuron will spike. The spike starts at the cell body and travels to other neurons via the axon. The axon was not visible in this picture, so I added a down-facing arrow to show where it would be. If you just consider the proximal synapses and the cell body, then this is the classic view of a neuron. If you have ever read about neurons or studied artificial neural networks, you will recognize this description.

Oddly, less than 10 percent of the cell’s synapses are in the proximal area. The other 90 percent are too far away to cause a spike. If an input arrives at one of these distal synapses, like the ones shown in the box, it has almost no effect on the cell body. All that researchers could say was that the distal synapses performed some sort of modulatory role. For many years, no one knew what 90 percent of the synapses in the neocortex did.

Starting around 1990, this picture changed. Scientists discovered new types of spikes that travel along the dendrites. Before, we knew of only one type of spike: it started at the cell body and traveled along the axon to reach other cells. Now, we’d learned that there were other spikes that traveled along the dendrites. One type of dendrite spike begins when a group of twenty or so synapses situated next to each other on a dendrite branch receive input at the same time. Once a dendrite spike is activated, it travels along the dendrite until it reaches the cell body. When it gets there, it raises the voltage of the cell, but not enough to make the neuron spike. It is like the dendrite spike is teasing the neuron—it is almost strong enough to make the neuron active, but not quite.

The neuron stays in this provoked state for a little bit of time before going back to normal. Scientists were once again puzzled. What are dendrite spikes good for if they aren’t powerful enough to create a spike at the cell body? Not knowing what dendrite spikes are for, AI researchers use simulated neurons that don’t have them. They also don’t have dendrites and the many thousands of synapses found on the dendrites. I knew that distal synapses had to play an essential role in brain function. Any theory and any neural network that did not account for 90 percent of the synapses in the brain had to be wrong.

The big insight I had was that dendrite spikes are predictions. A dendrite spike occurs when a set of synapses close to each other on a distal dendrite get input at the same time, and it means that the neuron has recognized a pattern of activity in some other neurons. When the pattern of activity is detected, it creates a dendrite spike, which raises the voltage at the cell body, putting the cell into what we call a predictive state. The neuron is then primed to spike. It is similar to how a runner who hears “Ready, set…” is primed to start running. If a neuron in a predictive state subsequently gets enough proximal input to create an action potential spike, then the cell spikes a little bit sooner than it would have if the neuron were not in a predictive state.
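A minimal sketch of this idea follows. It is an illustration under stated assumptions, not the published model: the `Neuron` class, the representation of a dendrite segment as a set of presynaptic cell ids, and the fixed threshold of twenty are all hypothetical simplifications.

```python
from dataclasses import dataclass, field

# "Twenty or so" co-active synapses trigger a dendrite spike in the text;
# the exact value here is illustrative.
SEGMENT_THRESHOLD = 20

@dataclass
class Neuron:
    # Each distal segment is modeled as the set of presynaptic cell ids
    # that have synapses on that stretch of dendrite.
    distal_segments: list = field(default_factory=list)

    def is_predictive(self, active_cells):
        """Return True if any single segment receives enough simultaneous input.

        Input spread thinly across several segments does not count, because
        a dendrite spike needs co-active synapses on the same segment.
        """
        return any(
            len(segment & active_cells) >= SEGMENT_THRESHOLD
            for segment in self.distal_segments
        )
```

Twenty active inputs landing on one segment put the cell in the predictive state. The same twenty inputs split across two segments do not.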

Imagine there are ten neurons that all recognize the same pattern on their proximal synapses. This is like ten runners on a starting line, all waiting for the same signal to begin racing. One runner hears “Ready, set…” and anticipates the race is about to begin. She gets in the blocks and is primed to start. When the “go” signal is heard, she gets off the blocks sooner than the other runners who weren’t primed, who didn’t hear a preparatory signal. Upon seeing the first runner off to an early lead, the other runners give up and don’t even start. They wait for the next race. This kind of competition occurs throughout the neocortex.

In each minicolumn, multiple neurons respond to the same input pattern. They are like the runners on the starting line, all waiting for the same signal. If their preferred input arrives, they all want to start spiking. However, if one or more of the neurons are in the predictive state, our theory says, only those neurons spike and the other neurons are inhibited. Thus, when an input arrives that is unexpected, multiple neurons fire at once. If the input is predicted, then only the predictive-state neurons become active. This is a common observation about the neocortex: unexpected inputs cause a lot more activity than expected ones.
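The competition inside one minicolumn can be written as a small rule. The function below is a hypothetical sketch that ignores timing and treats inhibition as all-or-nothing.

```python
def minicolumn_response(cells, predictive_cells):
    """Return the cells that spike when the minicolumn's preferred input arrives.

    cells: ids of all cells in the minicolumn.
    predictive_cells: ids of cells currently in the predictive state.
    """
    primed = [c for c in cells if c in predictive_cells]
    # Primed cells spike first and inhibit their neighbors. With no primed
    # cell, nothing is inhibited and every cell in the minicolumn spikes.
    return primed if primed else list(cells)
```

An expected input activates only the primed cell, while an unexpected input activates the whole minicolumn, which matches the observation that unexpected inputs cause more activity.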

If you take several thousand neurons, arrange them in minicolumns, let them make connections to each other, and add a few inhibitory neurons, the neurons solve the Name That Tune problem, they don’t get confused by repeated subsequences, and, collectively, they predict the next element in the sequence.

The trick to making this work was a new understanding of the neuron. We previously knew that prediction is a ubiquitous function of the brain. But we didn’t know how or where predictions are made. With this discovery, we understood that most predictions occur inside neurons. A prediction occurs when a neuron recognizes a pattern, creates a dendrite spike, and is primed to spike earlier than other neurons. With thousands of distal synapses, each neuron can recognize hundreds of patterns that predict when the neuron should become active. Prediction is built into the fabric of the neocortex, the neuron.

We spent over a year testing the new neuron model and sequence-memory circuit. We wrote software simulations that tested its capacity and were surprised to find that as few as twenty thousand neurons can learn thousands of complete sequences. We found that the sequence memory continued to work even if 30 percent of the neurons died or if the input was noisy. The more time we spent testing our theory, the more confidence we gained that it truly captured what was happening in the neocortex. We also found increasing empirical evidence from experimental labs that supported our idea. For example, the theory predicts that dendrite spikes behave in some specific ways, but at first, we couldn’t find conclusive experimental evidence. However, by talking to experimentalists we were able to get a clearer understanding of their findings and see that the data were consistent with what we predicted. We first published the theory in a white paper in 2011. We followed this with a peer-reviewed journal paper in 2016, titled “Why Neurons Have Thousands of Synapses, a Theory of Sequence Memory in the Neocortex.” The reaction to the paper was heartening, as it quickly became the most read paper in its journal.

Discovery Number Three: The Secret of the Cortical Column Is Reference Frames

Next, we turned our attention to the second half of the prediction problem: How does the neocortex predict the next input when we move? Unlike a melody, the order of inputs in this situation is not fixed, as it depends on which way we move. For example, if I look left, I see one thing; if I look right, I see something else. For a cortical column to predict its next input, it must know what movement is about to occur.

Predicting the next input in a sequence and predicting the next input when we move are similar problems. We realized that our sequence-memory circuit could make both types of predictions if the neurons were given an additional input that represented how the sensor was moving. However, we did not know what the movement-related signal should look like.

We started with the simplest thing we could think of: What if the movement-related signal was just “move left” or “move right”? We tested this idea, and it worked. We even built a little robot arm that predicted its input as it moved left and right, and we demonstrated it at a neuroscience conference. However, our robot arm had limitations. It worked for simple problems, such as moving in two directions, but when we tried to scale it to work with the complexity of the real world, such as moving in multiple directions at the same time, it required too much training. We felt we were close to the correct solution, but something was wrong. We tried several variations with no success. It was frustrating. After several months, we got bogged down. We could not see a way to solve the problem, so we put this question aside and worked on other things for a while.

Toward the end of February 2016, I was in my office waiting for my spouse, Janet, to join me for lunch. I was holding a Numenta coffee cup in my hand and observed my fingers touching it. I asked myself a simple question: What does my brain need to know to predict what my fingers will feel as they move? If one of my fingers is on the side of the cup and I move it toward the top, my brain predicts that I will feel the rounded curve of the lip. My brain makes this prediction before my finger touches the lip. What does the brain need to know to make this prediction? The answer was easy to state. The brain needs to know two things: what object it is touching (in this case the coffee cup) and where my finger will be on the cup after my finger moves.

Notice that the brain needs to know where my finger is relative to the cup. It doesn’t matter where my finger is relative to my body, and it doesn’t matter where the cup is or how it is positioned. The cup can be tilted left or tilted right. It could be in front of me or off to the side. What matters is the location of my finger relative to the cup.

This observation means there must be neurons in the neocortex that represent the location of my finger in a reference frame that is attached to the cup. The movement-related signal we had been searching for, the signal we needed to predict the next input, was “location on the object.”
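The two things the brain needs to know, the object and the location on it, can be pictured as the key of a lookup. The table contents and the function name below are hypothetical, and a dictionary is only a stand-in for whatever neurons actually do.

```python
# Hypothetical learned model: (object, location on the object) -> expected sensation.
LEARNED = {
    ("coffee cup", "side"): "smooth curved surface",
    ("coffee cup", "top"): "rounded lip",
    ("coffee cup", "handle"): "narrow loop",
}

def predict_sensation(obj, location_after_move, model=LEARNED):
    """Predict what a finger will feel once it arrives at a location on an object.

    Returns None when nothing has been learned for that object and location.
    """
    return model.get((obj, location_after_move))
```

Nothing in the key refers to where the cup is in the room or where the finger is relative to the body. Only the location on the object matters.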

You probably learned about reference frames in high school. The x, y, and z axes that define the location of something in space are an example of a reference frame. Another familiar example is latitude and longitude, which define locations on the surface of Earth. At first, it was hard for us to imagine how neurons could represent something like x, y, and z coordinates. But even more puzzling was that neurons could attach a reference frame to an object like a coffee cup. The cup’s reference frame is relative to the cup; therefore, the reference frame must move with the cup.

Imagine an office chair. My brain predicts what I will feel when I touch the chair, just as my brain predicts what I will feel when I touch the coffee cup. Therefore, there must be neurons in my neocortex that know the location of my finger relative to the chair, meaning that my neocortex must establish a reference frame that is fixed to the chair. If I spin the chair in a circle, the reference frame spins with it. If I flip the chair over, the reference frame flips over. You can think of the reference frame as an invisible three-dimensional grid surrounding, and attached to, the chair. Neurons are simple things. It was hard to imagine that they could create and attach reference frames to objects, even as those objects were moving and rotating out in the world. But it got even more surprising.
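In geometric terms, a reference frame attached to an object is a coordinate transform that moves with the object. The sketch below uses two dimensions instead of three to stay short. The function names and the example numbers are hypothetical, and this is ordinary coordinate geometry, not a claim about how neurons compute it.

```python
import math

def object_to_world(local, obj_origin, obj_angle):
    """Convert a point in the object's frame to world coordinates.

    obj_origin: world position of the object's frame origin.
    obj_angle: the object's counterclockwise rotation in radians.
    """
    c, s = math.cos(obj_angle), math.sin(obj_angle)
    return (obj_origin[0] + c * local[0] - s * local[1],
            obj_origin[1] + s * local[0] + c * local[1])

def world_to_object(point, obj_origin, obj_angle):
    """Convert a world-frame point to the frame attached to the object."""
    dx = point[0] - obj_origin[0]
    dy = point[1] - obj_origin[1]
    # Undo the object's rotation by rotating through the opposite angle.
    c, s = math.cos(-obj_angle), math.sin(-obj_angle)
    return (c * dx - s * dy, s * dx + c * dy)
```

If an armrest sits at (1, 0) in the chair's frame, it stays at (1, 0) in that frame however the chair is moved or spun. Only its world coordinates change.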

Different parts of my body (fingertips, palm, lips) might touch the coffee cup at the same time. Each part of my body that touches the cup makes a separate prediction of what it will feel based on its unique location on the cup. Therefore, the brain isn’t making one prediction; it’s making dozens or even hundreds of predictions at the same time. The neocortex must know the location, relative to the cup, of every part of my body that is touching it.

Vision, I realized, is doing the same thing as touch. Patches of retina are analogous to patches of skin. Each patch of your retina sees only a small part of an entire object, in the same way that each patch of your skin touches only a small part of an object. The brain doesn’t process a picture; it starts with a picture on the back of the eye but then breaks it up into hundreds of pieces. It then assigns each piece to a location relative to the object being observed.

Creating reference frames and tracking locations is not a trivial task. I knew it would take several different types of neurons and multiple layers of cells to make these calculations. Since the complex circuitry in every cortical column is similar, locations and reference frames must be universal properties of the neocortex. Each column in the neocortex—whether it represents visual input, tactile input, auditory input, language, or high-level thought—must have neurons that represent reference frames and locations.

Up to that point, most neuroscientists, including me, thought that the neocortex primarily processed sensory input. What I realized that day is that we need to think of the neocortex as primarily processing reference frames. Most of the circuitry is there to create reference frames and track locations. Sensory input is of course essential. As I will explain in coming chapters, the brain builds models of the world by associating sensory input with locations in reference frames.

Why are reference frames so important? What does the brain gain from having them? First, a reference frame allows the brain to learn the structure of something. A coffee cup is a thing because it is composed of a set of features and surfaces arranged relative to each other in space. Similarly, a face is a nose, eyes, and mouth arranged in relative positions. You need a reference frame to specify the relative positions and structure of objects.

Second, by defining an object using a reference frame, the brain can manipulate the entire object at once. For example, a car has many features arranged relative to each other. Once we learn a car, we can imagine what it looks like from different points of view or if it were stretched in one dimension. To accomplish these feats, the brain only has to rotate or stretch the reference frame and all the features of the car rotate and stretch with it.

Third, a reference frame is needed to plan and create movements. Say my finger is touching the front of my phone and I want to press the power button at the top. If my brain knows the current location of my finger and the location of the power button, then it can calculate the movement needed to get my finger from its current location to the desired new one. A reference frame relative to the phone is needed to make this calculation.
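As a worked illustration, once both locations are expressed in the phone's reference frame, the required movement is the difference between them. The feature names and coordinates below are hypothetical.

```python
# Hypothetical feature locations in the phone's own frame (arbitrary units).
PHONE_FEATURES = {
    "front center": (3, 5),
    "power button": (3, 14),
}

def movement_between(start, goal, features=PHONE_FEATURES):
    """Return the displacement, in the object's frame, from one feature to another."""
    sx, sy = features[start]
    gx, gy = features[goal]
    return (gx - sx, gy - sy)
```

Because both locations are relative to the phone, the computed movement is the same wherever the phone happens to be.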

Reference frames are used in many fields. Roboticists rely on them to plan the movements of a robot’s arm or body. Reference frames are also used in animated films to render characters as they move. A few people had suggested that reference frames might be needed for certain AI applications. But as far as I know, there had not been any significant discussion that the neocortex worked on reference frames, and that the function of most of the neurons in each cortical column is to create reference frames and track locations. Now it seems obvious to me.

Vernon Mountcastle argued there was a universal algorithm that exists in every cortical column, yet he didn’t know what the algorithm was. Francis Crick wrote that we needed a new framework to understand the brain, yet he, too, didn’t know what that framework should be. That day in 2016, holding the cup in my hand, I realized Mountcastle’s algorithm and Crick’s framework were both based on reference frames. I didn’t yet understand how neurons could do this, but I knew it must be true. Reference frames were the missing ingredient, the key to unraveling the mystery of the neocortex and to understanding intelligence.

All these ideas about locations and reference frames occurred to me in what seemed like a second. I was so excited that I jumped out of my chair and ran to tell my colleague Subutai Ahmad. As I raced the twenty feet to his desk, I ran into Janet and almost knocked her over. I was anxious to talk to Subutai, but while I was steadying Janet and apologizing to her, I realized it would be wiser to talk to him later. Janet and I discussed reference frames and locations as we shared a frozen yogurt.

It took us over three years to work out the implications of this discovery, and, as I write, we still are not done. We have published several papers on it so far. The first paper is titled “A Theory of How Columns in the Neocortex Enable Learning the Structure of the World.” This paper starts with the same circuit we described in the 2016 paper on neurons and sequence memory. We then added one layer of neurons representing location and a second layer representing the object being sensed. With these additions, we showed that a single cortical column could learn the three-dimensional shape of objects by sensing and moving and sensing and moving.

For example, imagine reaching into a black box and touching a novel object with one finger. You can learn the shape of the entire object by moving your finger over its edges. Our paper explained how a single cortical column can do this. We also showed how a column can recognize a previously learned object in the same manner, for example by moving a finger. We then showed how multiple columns in the neocortex work together to more quickly recognize objects. For example, if you reach into the black box and grab an unknown object with your entire hand, you can recognize it with fewer movements and in some cases in a single grasp.
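The logic of recognizing an object by sensing and moving, and of several columns working together, can be sketched as set elimination. This is a toy illustration, not the circuit from the paper. The object models are hypothetical, and the sketch assumes each column already knows its location in the object's frame, which the real theory must also infer.

```python
# Hypothetical learned objects: each maps locations (in the object's frame) to features.
OBJECTS = {
    "cup":  {(0, 0): "curved", (0, 2): "lip", (1, 1): "handle"},
    "can":  {(0, 0): "curved", (0, 2): "rim", (1, 1): "curved"},
    "bowl": {(0, 0): "curved", (0, 2): "lip", (1, 1): "curved"},
}

def column_candidates(observations, objects=OBJECTS):
    """Return objects consistent with every (location, feature) pair one column has sensed."""
    return {
        name for name, features in objects.items()
        if all(features.get(loc) == feat for loc, feat in observations)
    }

def vote(per_column_observations, objects=OBJECTS):
    """Intersect the candidate sets of several columns sensing the same object."""
    result = set(objects)
    for observations in per_column_observations:
        result &= column_candidates(observations, objects)
    return result
```

A single finger needs three touches to narrow the candidates down to the cup. Three fingers touching three locations at once reach the same answer in one grasp.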

We were nervous about submitting this paper and debated whether we should wait. We were proposing that the entire neocortex worked by creating reference frames, with many thousands active simultaneously. This was a radical idea. And yet, we had no proposal for how neurons actually created reference frames. Our argument was something like, “We deduced that locations and reference frames must exist and, assuming they do exist, here is how a cortical column might work. And, oh, by the way, we don’t know how neurons could actually create reference frames.” We decided to submit the paper anyway. I asked myself, Would I want to read this paper even though it was incomplete? My answer was yes. The idea that the neocortex represents locations and reference frames in every column was too exciting to hold back just because we didn’t know how neurons did it. I was confident the basic idea was correct.

It takes a long time to put together a paper. The prose alone can take months to write, and there are often simulations to run, which can take additional months. Toward the end of this process, I had an idea that we added to the paper just prior to submitting. I suggested that we might find the answer to how neurons in the neocortex create reference frames by looking at an older part of the brain called the entorhinal cortex. By the time the paper was accepted a few months later, we knew this conjecture was correct, as I will discuss in the next chapter.

We just covered a lot of ground, so let’s do a quick review. The goal of this chapter was to introduce you to the idea that every cortical column in the neocortex creates reference frames. I walked you through the steps we took to reach this conclusion. We started with the idea that the neocortex learns a rich and detailed model of the world, which it uses to constantly predict what its next sensory inputs will be. We then asked how neurons can make these predictions. This led us to a new theory that most predictions are represented by dendrite spikes that temporarily change the voltage inside a neuron and make a neuron fire a little bit sooner than it would otherwise. Predictions are not sent along the cell’s axon to other neurons, which explains why we are unaware of most of them. We then showed how circuits in the neocortex that use the new neuron model can learn and predict sequences. We applied this idea to the question of how such a circuit could predict the next sensory input when the inputs are changing due to our own movements. To make these sensory-motor predictions, we deduced that each cortical column must know the location of its input relative to the object being sensed. To do that, a cortical column requires a reference frame that is fixed to the object.