CHAPTER 2

Ideation

An Artificial Intelligence Primer

The evolution of digital computers can be traced all the way back to the 1800s. The 1800s were an era of steam engines and large mechanical machines. It was during this era that Charles Babbage drew up the notes for making a difference engine.1 The difference engine was an automatic calculator that worked on the principle of second-order derivatives to calculate a series of values from a given equation. This breakthrough paved the way for modern computers. After the invention of the difference engine, Babbage turned his attention to solving more equations and giving a programming ability to his machines. His new machine was called the analytical engine.

Another key figure in this era of computing was Ada Lovelace. She prepared extensive notes to aid in the understanding and generalization of the analytical engine.2 For her contributions, she is generally considered to be the world's first programmer. Although she erroneously rejected that computers were capable of creative and decision-making processes, she was the first to correctly note that computers could be the generalized data processing machines we see today.

Alan Turing, in his seminal paper introducing the Turing test,3 met Lovelace's objections head on, saying that the analytical engine had the property of being “Turing complete” similar to programming language today and that with sufficient storage and time, it could be programmed to complete the Turing test. Turing further claimed that Lovelace and Babbage were under no obligation to describe all that could be achieved by the computer.

The Turing test (aka the “imitation game”), constructed by Turing in the same paper, is a game where two players, A and B, try to fool the third player, C, about their genders. This has been modified over the years to the “standard” Turing test where either A or B is a computer and the other is a human and C must determine which is which (see Figure 2.1). The critical question that Turing was trying to answer using this game is “Can machines communicate in natural language in a manner indistinguishable from that of a human being?”4 Turing postulated about machines that can learn new things using the same techniques that are used to teach a child. The paper deduced, quite correctly, that these thinking machines would be effectively black boxes since they were a departure from the paradigm of normal computer programming. The Turing test is still undefeated as of this writing, but we are well on our way to breaking the test and moving on to the greener pastures of intelligence. Although many chatbots have claimed to break the test, it has not been defeated without cheating and using tricks and hacks that do not guarantee a long-term correct result.

FIGURE 2.1 The Standard Interpretation of the Turing Test5

Modern AI has come a long way from the humble beginnings of the analytical engine and the one simple question of “Can machines think?” Today, we have AI that can understand the sentiment and tone of a text message, identify objects in image, search through thousands of documents quickly, and almost converse with us flawlessly with natural language. Artificial intelligence has become a magic assistant in our phones that awaits our questions in natural language, interprets them, and then returns an answer in the same language, instead of just showing a web result. In the next section, we will have a brief look at the state of modern AI and its current set of capabilities.

Natural Language Processing

The ability to converse with humans, as humans do with one another, has been one of the most coveted feats of AI ever since a thinking machine has been thought of. The Turing test measures a computer's ability to “speak” with a human and fool that person into thinking they are speaking to another human. This branch of AI, known as natural language processing (NLP), deals with the ability of the computer to understand and express itself in a natural language. This has proven to be especially difficult, since human conversations are loaded with context and deep meanings that are not explicitly communicated and are simply “understood.” Computers are bad at dealing with such loosely defined problems, since they work on well-defined programs that are unambiguous and clear. For example, the phrase “it is raining cats and dogs” is difficult for a computer to understand without the entirety of history and literature accessible inside the computer. To us, such a sentence is obvious even if we're previously unaware of the meaning, because we have the entire context of our lives to judge that raining animals is an impossibility.

Programmatic NLP

The first chatbots and natural language processing programs used tricks and hacks to translate human speech into computer instructions. ELIZA was one of the first few programs to make people believe with certain limitations that it was capable of intelligent speech. This was accomplished by Joseph Weizenbaum at the Massachusetts Institute of Technology (MIT) Artificial Intelligence Laboratory in the 1960s. ELIZA was designed to mimic psychologists by echoing the user's answer back to them. In this way, the computer seemed to hold intelligent conversation, but it clearly was not. There are other forms of NLP seen in the 1980s; they were text-based adventure games. The games understood a certain set of verbs—such as go, run, fight, and eat—and modified their feedback based purely on language parsing. This was accomplished by having a set of words that the game understood mapped to functions that would execute based on the keyword. The limitation of words that could be stored in memory meant that these early natural language parsers could not understand everything and would return errors and thus ruin the illusion very quickly.

A method of using programming techniques to parse natural language, programmatic NLP uses string parsing with regular expressions (regex) along with a dictionary of words the program can execute on. The regular expressions match the specified patterns, and the program adjusts its control flow based on the information gleaned from sentences, discarding everything except the main word. For example, the following is a simple regular expression that could be used to determine possible illness names:

diagnosed with \w+This example looks for the phrase “diagnosed with” followed by a single word, which would assumingly be the name of an illness (such as “diagnosed with cancer”). A more complex regular expression is required to identify illnesses with multiple words (such as “diagnosed with scarlet fever”). A full discussion of regular expressions is outside the scope of this book—Mastering Regular Expressions6 by Jeffrey Friedl is a great resource if you want to learn more.

Although the techniques we've discussed can make wondrous leaps in parsing a language, they fall short very quickly when applied more generally, because the dictionary supplied with the program can be exhausted. This is where AI steps in and outperforms these traditional methods by a huge margin. Natural languages, while they do follow the rules of grammar, follow rules that are not universal and thus something that holds meaning in one region might not hold true for another region. Languages vary so much because they are a fluid concept; new words are being added constantly to the vernacular, old idioms and words are retired, grammar rules change. This makes a language a perfect candidate for stochastic modeling and other statistical analysis, covered under the umbrella term of machine learning for natural language processing.

Statistical NLP

The techniques we've described are limited in scope to what can be achieved via parsing. The results turn out to be unsatisfactory when used for longer conversations or larger bodies of texts like an encyclopedia or the body of literature on even just one illness (per our earlier example). This necessitates a method that can learn new concepts while trying to understand a text, much like a human does. This method must be able to encounter new words in the same way that a human does and ask questions about what they mean given their context. Although a true AI agent that can perform automatic dictionary and other necessary contextual lookups instantly is years away, we can improve on the programmatic parsing of text by performing a statistical analysis.

For almost all the AI-based natural language parsers, there are some key steps in the algorithm: tokenization and keys, term frequency–inverse document frequency (tf-idf), probability, and ranking. The first step in parsing a sentence is chunking. Chunking is the process of breaking down a sentence based on a predetermined criterion; for example, a single word or multiple words in the order subject-adverb-verb-object, and so forth. Each such chunk is known as a token. The set of tokens is then analyzed and the duplicates are discarded. These unique chunks are the “keys” to the text. The tokens and their unique keys are used as the building blocks for probability distributions and for understanding the text in more detail. The next step is to identify the frequency distribution of the tokens and keys in the training data. A histogram of the number of occurrences of each key in the text can be used to plot the data to help better visualize the data. Using these frequencies, we can arrive at probabilities that a word will be followed by another in the text. Some words like “a” and “the” will be used the most, whereas others like names, proper nouns, and jargon will be more sparingly used. The frequency by which a given word appears in a document is called a term frequency and the frequency of the same word across various documents is called inverse document frequency. Inverse document frequency aids in reducing the impact of commonly used words like “a” and “the.”

Machine Learning

Machine learning is the broad classification of techniques that involve generating new insights by statistical generalization of the earlier data. Machine learning algorithms typically work by minimizing a penalty or maximizing a reward. A combination of functions, parameters, and weights come together to make a machine learning model. The learning techniques can be grouped into three major categories: supervised learning, unsupervised learning, and reinforcement learning. Supervised learning is the most common type of technique used for constructing AI models, whereas unsupervised learning is more useful for identifying patterns in an input. Supervised learning will calculate a cost for each answer in the training dataset that was incorrectly answered during the training phase. Based on this error estimate, the weights and parameters of the function are adjusted recursively so that we end up with a general function that can match the training questions with answers with a high level of confidence. This process is known as back propagation. Unsupervised learning is only given a dataset without any corresponding answers. The objective here is to find a general function to better describe the data. Sometimes, unsupervised learning is also used for simplifying the input stream to be fed into a supervised learning model. This simplification reduces the complexity required for supervised learning. Just as cost and error is defined as two functions in supervised learning, under reinforcement learning an arbitrary cost is assigned based on the action taken. Such a cost will need to minimize or maximize a similar arbitrary reward. In this example:

Raw Data: [fruit: apple, animal: tiger, flower: rose]supervised learning would be provided with the entirety of this set of data and would use the answers—say, “apple is a fruit”—to test itself. In unsupervised learning, only the following data would be given to the algorithm:

[apple, tiger, rose]and the algorithm would then find a pattern among the given data. Reinforcement learning could have the computer guessing the type of noun, and the user would assign a penalty/reward for each correct/incorrect guess accordingly.

Markov Chains

In mathematics, a stochastic process is a random variable that changes with time. This random variable can be modeled if it has a Markov property. A Markov property indicates that the state of the variable is affected only by the present state; if you know the present state, you can predict the future state with reasonably high accuracy. Markov chains work on the principle of the Markov property; by incrementally “guessing” the next word, a sentence can be formed, and sentences together make a paragraph, and so on. The Markov chain uses the tf-idf frequencies to assess the probability of each word that can appear next and then chooses the word with the highest probability. Because the reliance on tf-idf is quite high, Markov chains require large amounts of training data to be accurate. It is of vital importance that Markov models are parametrized and developed properly. Such a statistical approach has been proven better than modeling grammar rules. Variations on this model include smoothing the frequency and giving access to more data to the model. Although Markov chains are very fast and can give a better result than traditional approaches, they have their limitations and are unable to produce long forms of coherent text on a particular topic.

Hidden Markov Models

A hidden Markov model is one where the state of the program is “hidden.” Unlike the regular models, which act in a very deterministic method, hidden Markov models have the possibility of having infinite states and deriving more information than a regular Markov model. Hidden Markov models have had a recent resurgence when combined with technologies such as Word2vec (developed by Google), which can create word embedding. Nevertheless, the application of stochastic processes to languages has severe limitations, even with the larger numbers of parameter values possible with hidden Markov models. Hidden Markov models, just as traditional models, also require large amounts of data to reach better accuracy. Neural networks can be used with smaller datasets, as you'll see next.

Neural Networks

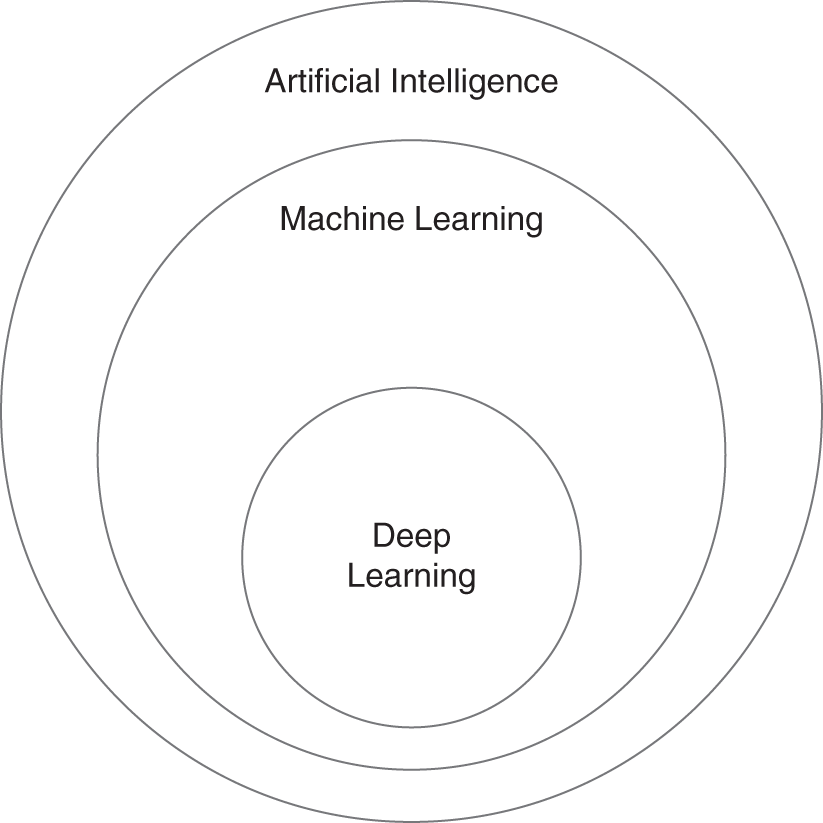

Neurons are the smallest decision-making biological component in a brain. Although it is currently impossible to identically model biological neurons inside a computer, we have been successful in approximately modeling how they work. Neurons are connected together in a neural network and accept some input, perform an operation on it, and then generate an output. In a neural network, the output is determined by the “firing” of a digital neuron. Neural networks can be as simple as a single node (see Figure 2.2), or they can contain multiple layers with multiple neurons per layer (see Figure 2.3). This neural network–based approach is also referred to as deep learning because of the potentially vast number of neural layers that a single model can contain. Deep learning is a type of machine learning that is considered AI (see Figure 2.4).

FIGURE 2.2 A Neural Network with a Single Neuron

FIGURE 2.3 A Fully Connected Neural Network with Multiple Layers

FIGURE 2.4 A Venn Diagram Describing How Deep Learning Relates to AI

One use case for deep learning is creating neural networks that are capable of creating new sentences from scratch based on a prompt. In this case, neural networks serve as a probability calculator that ranks the probability of a word “making sense” as part of a sentence. Using the simplest form of a neural “network,” a single neuron could be asked what the next word is in the new sentence it is generating, and based on the training data, it would reply with a best guess for the next word.

Words are not stored directly in the neuron's memory but instead are encoded and decoded as numbers using word embedding. The encoding process converts the word to a number, which is more easily manipulated by the computer, whereas the decoding process converts the number back to a word. Such a simplistic sentence-generating model with a single neuron can hardly be used for any serious applications. In practice, the number of neurons would be directly proportional to the complexity of text being analyzed and the quality of expected output. Adding more neurons (either in the same layer or by adding additional layers) does not automatically make a neural network better. The techniques to improve a neural network will vary based on the problem at hand, and the model will need to be adjusted for unexpected outcomes. In a practical implementation, there would be multiple neurons, each having a specific weight; the weight of the neuron would determine the final output of the neural network. This method of adjusting weights by looking at the output during the training stage is known as a back propagation neural network.

If we pass the output received from a neuron back through it again, it would give us a better opportunity of analyzing and generating the data. A recurrent neural network (RNN) does exactly that, and multiple passes are made recursively, feeding the output back into the neuron as input. RNNs have proved to be much better at understanding and generating large amounts of text than traditional neural networks. A further improvement over RNNs are long short-term memory (LSTM) neural networks. LSTM networks are able to remember previous states as well, and then output an answer based on these states. LSTM networks can also be finely tuned with gate-like structures that can restrict what information is input and output by a neuron. The gates use pointwise multiplication to determine if all or no information will go in through the gate. The gates are operated by a sigmoid layer that judges each point of data to determine whether the gate should be opened or closed. Further variations include allowing the gate to view the output of a neuron and then pass a judgment, thus modifying the output on the fly.

Chatbots and text generators are some of the biggest use cases for NLP-based neural networks. Speech recognition is another area where such neural networks are used. Amazon's Alexa uses an LSTM.7

Image Recognition/Classification

Images contain a lot of data, and the permutation and combination of each pixel can change the output drastically. We can preprocess these images to reduce the size of the input by adding a convolutional layer that reduces the size of the input data, thus reducing the computing power required to scan and understand larger images. For image processing, it is critical to not lose sight of the bigger picture—a single pixel cannot tell us if we are looking at an image of a plane or a train. The process of convolution is defined mathematically to mean how the shape of one function affects another. In a convolutional neural network (CNN), maximum pooling and average pooling are also applied after the convolutional process to further reduce the parameters and generalize the data. This processed data from the image is then fed into a fully connected neural network for classification. CNNs have proven their effectiveness in image recognition; they are very efficient at recognizing images, and the reduced parameterization aids in making simpler models.

Becoming an Innovation-Focused Organization

The world of technology moves at a high speed. Innovation will be the key business differentiator going forward. The advantage of being the company to present a key piece of innovation in the marketplace is game changing. This first-mover advantage can be achieved only via the relentless pursuit of innovation and rigorous experimentation with new ideas. Innovations using AI can also lead to cost-saving practices, offering the competitive edge needed to fiscally outmaneuver the competition. Organizations should develop a culture of innovation by establishing suitable processes and incentives to encourage their workforce.

A culture of innovation is hard to implement correctly, but it yields splendid results when done well. Your organization should have policies that encourage innovation and not limit it. A motivated workforce is the cornerstone of innovative thinking. If the employees are unmotivated, they will think only about getting through the day and not about how to make their systems more efficient.

IBM pioneered the concept of Think Fridays, where employees are encouraged to spend Friday afternoons working on self-development and personal research projects.8 This in part has led IBM to being called one of the most innovative companies given that 2018 marks their 26th consecutive year of being the entity with the highest number of U.S. patents issued for the year.9

Google similarly has a 20 percent rule that allows employees to work on personal projects that are aligned with the company's strategy.10 This means that Google employees, for the equivalent of one day a week, are allowed to work on a project of their own choosing. This morale-boosting perk enables employees to work on what inspires while Google maintains the intellectual property their employees generate. Famously, Gmail and Google Maps came out of 20 percent projects and are now industry-leading Google products in their respective fields.

Not every organization needs to be as open as Google, but even a 5 percent corporate-supported allowance can have a big impact, because employees will use this time to focus on revolutionizing the business and will feel an increased level of creative validation with their work. Ensuring that employees are adequately enabled and motivated is a task of paramount importance and gives them the tools to modernize and adapt their workflows.

An organization that fosters creativity among its employees is bolstered to succeed. It will be the first among its peers to develop and implement solutions that will directly impact key metrics and result in savings of time and money. When existing business processes can be revamped, major transformations of 80 to 90 percent increased efficiency and productivity are possible. The main idea is to allow employees time to think freely. Every employee is a subject matter expert about their own job. Transferring this personal knowledge into shared knowledge for the organization can lead to a valuable well of ideas.

Innovation should be a top priority for the modern organization. At its core, prioritizing innovation means adapting the business to the constantly changing and evolving technological landscape. A business that refuses to innovate will slowly but surely wither away.

The organization that chooses to follow the mantra “Necessity is the mother of all invention” will continuously lag behind its peers. It will be following a reactive approach instead of a proactive approach. Such an organization will, by definition, be perpetually behind the technological curve. With that said, there are certain upsides to this strategy. If you are merely following the curve, you avoid the mistakes made by early adopters and can learn from those mistakes. Such an organization would save on short-lived, interim technologies that are discarded and projects that are shelved after partial implementation.

At the other end of the spectrum is an organization that yearns for change. This organization will try to implement new technologies like AI and robotics as much as it can. This proactive policy has the potential to yield far greater returns than the organization that follows the curve. This modern business, however, will need to control and manage its change costs carefully. It runs the risk of budget overruns and faces the disastrous possibility of being thrown completely out of the race. Care should be taken to minimize the cost of mistakes by employing strategies like feedback loops, diversification, and change management, all of which we will discuss in more detail in the coming sections. The rewards in this scenario are great and will lead an organization to new heights.

This path of constant innovation and learning is the smartest path to follow in the modern world. Passively reacting to technological changes only once they have become an industry standard will greatly hamper any organization.

Idea Bank

Organizational memory can be fickle. To aid the growth of an innovative organization, an “idea bank” should be maintained. The idea bank stores all ideas that have been received but not yet implemented. An innovation focus group should be given the authority to add and delete ideas from the bank, though the idea bank should be adequately monitored and protected since it will contain the way forward for your organization and possibly quite a few classified company internals.

An organization should designate a group of managers to focus on innovation on an ongoing basis as part of their jobs. Members of this group should be selected so that all departments are represented. This innovation focus group should hold regular (weekly, monthly, or quarterly) meetings, which will include reviewing new suggestions from other employees as well as feedback and suggestions received from other stakeholders like vendors and customers. Such a group would have the official responsibility of curating and reviewing the idea bank.

The idea bank should allow submissions from all levels of the organization, with their respective heads as filters. The final say for inclusion in the idea bank should be left with the innovation focus group. This allows the idea bank to grow rich with potential ideas for implementing changes to the way the organization works while maintaining quality control. Employees should also be rewarded if an idea they submit gets executed. This will provide employees with more motivation for submitting and implementing ideas.

Approved submissions should be clear and complete so that it is possible to pick up and implement proposals even in the absence of their authors. A periodic systematic review of the idea bank should be conducted to ascertain which ideas are capable of immediate implementation. Table 2.1 shows an example of an idea bank.

TABLE 2.1 A sample idea bank

| Idea | Estimated impact | Estimated investment | Submitter |

| Automated Venture Evaluation | Increase revenues by 20% | $100K over 3 months | Margaret Peterson, CEO |

| AI Support Chatbot | Save 30% of the support budget | $250K over 6 months | Mallory McPherson-Wehan, Customer Service Manager |

| Manufacturing Workflow Automation | Improve manufacturing by 10% | $50K over 2 weeks | Zack Kimura, Engineering Lead |

| Advertising Channel Optimization | Reduce required advertising budget by 10% | $100K over 2 months | Mike Laan, Social Media Marketing |

Business Process Mapping

Business process mapping can be a major asset in helping you to identify tasks that can be automated or improved on. Each business process should have a clear start and finish, and your map should contain the detailed steps that will be followed for a complete process. Flowcharts and decision trees can be used to map processes and their flow within the organization. Additionally, any time taken by moving from one step to the next should be recorded. This additional monitoring will help identify any bottlenecks in the process flow. Another document that can be used to help chart the flow of processes is a detailed list of everyone's jobs and their purposes in your organization.

Who? What? How? Why? Asking these four questions for every process will ensure that all data necessary for the process map is available. These simple questions can generate complex answers necessary to find and fill the gaps. The questions should be asked recursively—that is, continuing to drill down into each answer—to ensure that all the necessary information is generated. As an example, let's map the details for a department store chain starting at the store level:

- Who controls the stores?

- Store manager

- Who controls the store manager?

- Reports to the VP of Finance

- What does the store manager control inside the store?

- Processes related entry inward/outward and the requisition of goods

FIGURE 2.5 An Enhanced Organizational Chart

- How does the store manager control the stores?

- Uses custom software written 10 years ago

- Why does the store manager control the store?

- To ensure that company property is not stolen or otherwise misused

- Who controls the store manager?

- Store manager

- What is in the stores?

- …

- How does it get there?

- …

- Why is it needed?

- …

This example has answers to critical questions as we draw up the organizational flowcharts, information flow diagrams, and responsibilities. Figure 2.5 starts this process by providing an interaction diagram between the actors in the system and the roles they play. For instance, does the 10-year-old software still address the needs of the store with minimal service disruptions?

Flowcharts, SOPs, and You

With the information collected so far, we can prepare our organizational flowchart. Organizational flowcharts are a helpful tool for viewing the processes currently being followed in your organization. Standard operating procedure (SOP) documents are great to use along with manuals to help trace the flow of information and data throughout an organization, but SOPs will only get you so far. Conducting interviews and observing processes firsthand as they happen will give more specific insight to the deviations and edge cases that arise from SOPs.

As an example, an organization could have the following SOP:

This policy will have quite a few practical exceptions. The marketing manager could send the data themselves if the assistant is on leave, or sometimes the accountant might prepare the invoice only based off the email received without a copy of the invoice requisition form. Such practical nuances can only be ascertained in interviews and a review of what was actually done. The SOP in this case is also a perfect example of one that can be automated using NLP or via the use of more structured forms by allowing direct entry into the system, with the accounting function being done automatically. Such a system, when implemented correctly, would strengthen the internal controls by requiring compulsorily documented assent from the managers, without which an invoice would not be printed.

Information Flows

Another vital tool in your toolbox of idea discovery is the tracking of information and dataflows throughout your organization. Tracking what data is passed across various departments, and then how it is processed, will lead to fresh insights on work duplication, among many other efficiency issues. Processes that have existed for years may have a lot of information going back and forth, with minimal value-added. Drawing up flowcharts for these dataflows will allow you to visualize the organization as a whole (see Figure 2.6 and Figure 2.7). It helps to think of the entire business as a data processing unit, with external information being the inputs and internal information and reports sent outside being the final output. For example:

FIGURE 2.6 An Information Flow Before an AI System

FIGURE 2.7 An Information Flow After an AI System

In this scenario, the production schedule can be made automatically using AI with the marketing forecasts and the constraints of the production team. This efficiency improvement can free up the production manager's time for higher-value activities.

Coming Up with Ideas

Once the process flowcharts, timesheets, information flows, and responsibilities are established, it is time to analyze this wealth of data and generate ideas from the existing setup about how to revamp it. Industry best practices should be adopted once the gaps have been identified. Every process should be analyzed for information like its value-added to internal and external stakeholders, time spent, data required, source of data, and so forth. The idea is to find processes that can be revamped to provide substantial improvements that will justify any revamp investment.

A note of caution as you embark on idea discovery: When you are holding a hammer, everything looks like a nail. Care should be taken that processes that do not need any upgrading are not being selected for revamping. Frivolously upgrading processes could lead to disastrous results; for every new process, modification has a cost. A detailed cost–benefit analysis is a must when implementing a process overhaul to identify any value that will be added or reduced. This will ensure that axing or modifying a process does not completely erode a necessary value, which could cause the business to fail.

Value Analysis

Artificial intelligence can drastically change the level of efficiency at which your organization can operate. Mapping out all your business processes and performing a value analysis for the various stakeholders in your company will help you isolate processes that need to be modified using an AI system. “Value” is a largely subjective concept at this point and need only be shared with the stakeholders who are directly affected. We must, however, consider both internal and external stakeholders when making this decision. For example, your company's tax filing adds no direct value to your customers. However, it is important to stay in business and avoid late fees. Therefore, tax filing has important ramifications and is thus deemed “valuable” to a government stakeholder. The major stakeholders in a business can be grouped into five categories:

- Customers

- Vendors

- Employees

- Investors

- Governments

Each of these stakeholders expects to receive a different kind of value from the business. It is in the business's best interest to provide maximum value at the cheapest cost to each stakeholder in order for the business to remain solvent.

The investigation into existing processes can start with interviews, followed by drawing flowcharts for processes to be done by a user. Every step in the flowchart needs to be assessed for value provided to each of the various stakeholders. Remember that “value” here does not exclusively mean monetary value. Some processes, for example, can provide value in terms of control structure, prevention of fraud, or misuse of company resources. Such a process would need to be retained, even though the process itself might not generate any pecuniary revenue. The key takeaway is to ensure that your costs are justified and that each process is necessary while keeping in mind that “value” can take many different forms and is dependent on the specific stakeholder's interest.

The value analysis will help to identify processes that can be overhauled in major ways. If the process is adding little value to the customer (stakeholder), then it should be axed. If the process feels like it can be improved on, it should be added to the idea bank. The addition of value to a stakeholder can be considered a critical factor for identifying and marking processes. A process like delivery and shipment, done correctly, adds a sizable value to the end customer. After all, delivery is one of the first experiences a customer will have with your organization after a purchase has been made. First impressions do matter and can buy some goodwill over the lifetime of a customer.

This process of identifying value can take a long time to complete for all the processes that a business undertakes. In this regard, the entire business process list should be segmented based on a viable metric that covers entire processes from bottom to top, and each segment should be analyzed individually. For instance, with a company that manufactures goods, the processes can be segmented based on procurement, budgeting, sales, and so forth.

A Value Analysis Example

Widgets Inc. sells thousands of products on its website, Widgets.com. The CEO notices that new products take about two days to go live after they are received. The CEO asks the chief technology officer (CTO) to draw up the process map and plot the bottlenecks and more time-consuming parts of the process. The CTO starts this task by examining each of the processes in question as they are outlined in the company's records. Training manuals, system documentation, and an enterprise resource planning process are some of the documents the CTO uses to create a detailed flowchart for each of the processes she examines. Process manuals are often created using theoretical descriptions of their processes, as opposed to practical ones, so these records may not reflect the actuality of what each worker is doing and can offer misleading data on a specific process's functionality. Keeping this in mind, our CTO conducts interviews with the employees involved in each of the processes she examines. She marks any discrepancies and the actual time taken for each step against those listed in the flowchart. An example of this flowchart is shown in Figure 2.8.

The CTO notices that step 5, where marketing adds content and tags, takes an average of four hours for each product. Multiple approvals are involved, and approvers typically only look to ensure that the content roughly matches the product and that it is not offensive in any way.

FIGURE 2.8 A Sample Process Flowchart

The CTO submits her findings to the CEO:

This idea can be implemented right away, or it can be filed for later use in the idea bank, to be implemented once the organization chooses to allocate resources.

Sorting and Filtering

The idea bank, due to its very nature, will quickly become a large database of useless information if it is not periodically sorted and ranked. Due to economic, time, and occasionally legal constraints, it is impossible to implement all ideas at once. Because of this fact, it is necessary to filter and sort the idea bank by priority in order to realize the benefits of maintaining one in the first place. For instance, ideas that require minimal investment but that have a large cost savings impact should be prioritized first. Conversely, ideas that will take a long time to implement and that have little impact should be low in the idea bank prioritization list, perhaps never being implemented. That said, even low-priority ideas should never be deleted from the idea bank because in the future, circumstances might change to improve the idea's priority. With a long list of prioritized ideas on hand, the next step is to start giving them some structure.

Ranking, Categorizing, and Classifying

Ranking the items in the idea bank based on various metrics will help the future decision makers get to the good ideas faster. Ideas should be ranked separately on various dimensions that enable filtering and prioritization. Some good examples of dimensions to consider when ranking ideas are estimated implementation time, urgency, and capital investment. A point-scoring system can help immensely with this. The relative scale for point values should be clearly established and set out at the start so that subsequent users of the data bank are not left wondering why “automate topic tagging” is prioritized over “predictive failure analysis for factory machinery.”

One of the easiest ways to start organizing our idea bank is by grouping the brainstormed ideas into similar categories. Groups can be as wide or as narrow as you want them to be. Categories should fulfill their purpose of being descriptive while still being broad enough to adequately filter ideas. Here are some examples of the kinds of groups that may be useful for filtering ideas:

-

Time

Each idea is classified according to the time taken for development of an AI solution and the time needed to change management structures and practices: specifically, short-term (within the next year), medium-term (between one and five years), and long-term (longer than five years). Although these will be only estimates, they are dictated roughly by how quickly you believe your organization may change, as well as a general idea of the technological feasibility to implement the idea.

-

Capital Allocation

Priority evaluation based on the anticipated capital necessary to make each idea functional. Capital includes the initial investment, along with recurring maintenance costs and routine costs (if any). Large ideas are ones that involve more than 20 percent of annual profits, medium ideas at 10 to 20 percent of annual profits, and small ideas at less than 10 percent of annual profit required.

Employee Impact

Priority evaluation considering the estimated impact that ideas will have on employees, in terms of labor hours, changing their workflows and processes, and streamlining efficiency. Categories should exist to emphasize the net-positive impact of implementation and should therefore also consider the number of employees expected to be affected by implementation. Impact scores for each idea would then be multiplied by weighted values based on the percentage of the organization's employees expected to be noticeably affected by implementation.

Risk

Every idea should have a risk classification. A risk assessment should be done for threats to the business due to implementation of the idea and a suitable risk category should be awarded: High, Medium, or Low.

Expected Returns on the Idea

The expected returns on an idea should be identified. These can be in the nature of cost savings or incremental revenue. Projects with zero returns can also be considered for implementation if the effect on other key performance indicators is positive.

Number of Independent Requests for an Idea

Perhaps three people suggested your organization interact completely without the use of manual processes such as the filling out of paper forms. Keeping a count of how many independent individuals suggest the same idea can be a quick gauge as to which ideas are most needed or desired, or at least give an impression of similarly regarded issues within the organization.

FIGURE 2.9 An Example Grouping of Ideas

Selecting better tags will ultimately aid the decision makers in filtering and sorting through the idea bank. At this stage, most of these tags and information should be educated guesses and not actual thorough investigations into the feasibility of an idea. The classifications are merely tools to maneuver through the list in a structured manner. A sample idea grouping using time and risk is shown in Figure 2.9.

Reviewing the Idea Bank

Reviewing the idea bank on a regular basis will be significantly easier, and better ideas will consistently rise to the top, if the methods of ranking and sorting are well implemented. As company priorities change, the urgency metrics of ideas will also change. If new technology becomes available or an organization finishes a previously roadblocking project, the estimated implementation time for ideas might change as well. This periodic reevaluation will ensure that also the best ideas are always being selected for the following step.

The idea review is as crucial as the ideas themselves and should be done by a special focus group to ensure that the best ideas are selected for implementation. Selecting an idea for implementation costs time and money, so it is imperative that only the best ideas from those submitted are selected. Implementing ideas in a haphazard manner might incur consequences that could prove disastrous for the organization. The review meetings should also note what ideas were selected for implementation and explain their reasons for doing so. This explanatory nature will aid the future decision makers in avoiding the same mistakes of the past.

After selection, a cost–benefit analysis should be undertaken for ideas that require major changes in workflow or a large capital investment. These new prioritization processes should be efficient and not just increase bureaucracy for the employees of the organization. Whenever possible, a trial run should be attempted before implementing any idea organization-wide. Innovation for the sake of innovation will merely saddle an organization with increased costs, increase unnecessary bureaucratic structures, and leave everyone unhappy.

Brainstorming and Chance Encounters

In an innovative company, it is critical to have your employees motivated and discussing ideas. All major discoveries in the modern era (since the 1600s) can credit the use of the scientific method, which is the process of

- Making an observation

- Performing research

- Forming a hypothesis and predictions

- Testing the hypothesis and predictions

- Forming a conclusion

- Iterating and sharing results

The scientific method relies on criticism and constant course correction to maintain the integrity and accuracy of its findings. The same can be applied to an organization's innovation method by allowing people to critique, discuss, and debate. Providing ample time and space to share ideas and discuss possible innovations is paramount for an organization's growth. These collaborative consortiums, or brainstorm sessions, work best when conducted at regular and predictable intervals, in small, functional groups, and guided by an organizer who is a peer of the participants. Small groups of employees being guided through strategic thought-sharing sessions will allow the organization to gain insights from many different sources, help employees lend their personal voices to their organization while feeling validated, and also help clarify the organization's intentions, plans, and obstacles to its employees, unifying them in their goals.

A distinction needs to be made at this point between constructive criticism and frivolous or destructive criticism. Useful critiques must always be directed at the idea, not at the person who had the idea. Criticisms should offer data that directly conflicts with the statement presented or should present issues that may conflict in a way that will help them be managed or avoided. Wherever possible, criticism should not be about shooting down an idea, but about how to safeguard it or pivot it into something more viable and sustainable.

Debate enables us to look at a concept from a different perspective, offering us new insight. It is very important for an organization to come up with new ideas, and equally important for it to strike down bad ones. A single person can easily become biased, and bias can be difficult to spot in one self. When presented constructively, hearing opposing viewpoints allows a group to overcome the internal biases of an individual.

In every organization the managers are a critical link, trained to understand the core of the business. They understand the elements that help run the business efficiently and effectively. Asking the managers to submit a quarterly report for ideas that can be implemented on a quarterly or semiannual basis can also be an effective source for gathering ideas, filtered through the people who are likely to best understand the company's needs, or at least the needs of their own particular department.

One final idea, which research is proving to be even better than regular brainstorming sessions, is encouraging chance encounters and meetings. Chance encounters among members of different teams will lead to spontaneous and productive discussions and increased ease of communication, and result in improved understanding with which to generate higher-quality ideas than those from larger, scheduled brainstorms. Chance encounters can be encouraged by creatively designing workspaces. For instance, Apple designed their Infinite Loop campus with a large atrium where employees can openly have discussions. During these discussions, employees might see someone they have been wanting to talk to. In this way, a productive exchange, which may not have otherwise happened, is able to take place through this chance encounter.

Cross-Departmental Exchanges

In an organization, multiple departments need to work with one another to attain the objectives of the organization. Exchanges between departments typically only occur as much as minimally needed to get the job done. The downside to this “needs”-based approach is that two departments that could come together and offer each other valuable new perspectives on process sharing and troubleshooting rarely interact. Accounting is a function that needs a familiarity with every employee in an organization, but this cross-talk needed by accounting, relating to the filing of reimbursement claims and other documentation needs of the department, can be too one-sided for chance encounters and too tangential for members of accounting to join in the brainstorming sessions of other departments. On the flipside, it is easily possible for brainstorming sessions to be set up in a cross-functional manner. Many principles can be shared from one expert to another if they are aware of the role that the other person plays. The avenues of such cross-talk need not be limited just to meetings and brainstorming sessions. Users should be provided and encouraged to use more collaborative programs like discussion forums, social events, interdepartmental internships, cross-departmental hiking trips, and so forth. The key is to let two departments become comfortable enough to share their workflow and ideas among themselves. An approach like this will help the organization to grow and adopt newer technologies across domains.

While implementing departmental cross-talks, care should be taken that the “walls” that separate the departments due to ethical, legal, and privacy-based concerns are not taken down. “Walls” is legalese for the invisible walls separating two departments within an organization, whose objectives and integrity demand independence from each other, where comingling could lead to conflicts of interest. In an investment bank, for example, the people marketing the product should not be made aware of material nonpublic financial information received from the clients. Control should not be sacrificed for ideation.

In another example, Auto Painting Inc. paints cars for companies with fleets of cabs. The accounting manager generates invoices based on data received in emails from the marketing department. Due to this manual process, invoices are sometimes delayed, causing a cash flow problem for the company. In an effort to automate, Auto Painting Inc. hires a development team to develop a new website. Luckily, the company has forums on an internal intranet, the use of which is encouraged, especially for cross-talk among departments. The account manager and the developer for the website converse over the procedure for generating invoices. The developer suggests that with an implementation of an NLP algorithm, the generation of invoices can be automated, saving hours of company time and money. The idea is fast-tracked based on the company's priorities, and in a few months, the overall company cash flow is improved.

AI Limitations

It is very important to understand the limitations of artificial intelligence. Knowing what you cannot do right now will help you to temporarily reject ideas that could be out of reach. The thing to bear in mind while rejecting ideas is to ensure that you do not lose sight of ideas generated if the technology has not reached you yet. The AI field can still be considered to be in its nascency, and it is a very actively researched field. To ensure great ideas that are currently blocked by AI's limitations are not lost, they should be committed to the idea bank. The idea should be recorded with the current blocker to ensure that when technology catches up, your organization can start implementing the idea and get the first-mover advantage. Although there are many specific limitations of AI frameworks as of this writing, here are a number of general limitations that are currently true:

Generalizations

Artificial intelligence as it currently stands cannot solve problems with a single, general approach. A good candidate problem that can give you a good return on investment should have a well-defined objective. A bad candidate problem is one that is more loosely defined and has a broad scope. The narrower your scope, the faster a solution will be developed. For instance, an AI system that tries to generate original literature is a very hard problem, whereas learning to generate answers from a script based on finding patterns among questions is a problem with a much smaller scope and therefore much easier to solve. In technical terms, a “strong artificial intelligence” is an AI that can pass the Turing test (speaking with a human and convincing the human that it is not an AI) or other tests that aim to prove the same levels of cognition. Such programs are likely years, if not decades, away from any kind of market utilization. On the other hand, “weak artificial intelligence” is AI designed to solve smaller, targeted problems. They have limited goals, and the datasets from which they learn are finite. A business example is tagging products based on their dimensions, classification, and so forth. This is a very important task in most businesses. In this case, tagging based on model number is easier, compared to identifying products based on images.

Cause and Effect

A lot of artificial intelligence in today's world is a black box. A black box is something you feed data to and it gives you an answer. There is no reason (at least one easily understood by humans) why certain answers were chosen over others. For projects like translations, this does not matter, but for projects involving legal liability or where “explainability” of logic is of high importance, this could be troublesome. One type of AI model assigns weights to each possible answer component and then decides, based on training, whether to reduce or increase weights. Such a model is trained using an approach called backpropagation. These weights have no logic besides leading the AI closer to a correct answer based on the training data. Hence, it is imperative to assess AI for ethical and legal concerns regarding “explainability.” This topic is discussed further by our AI expert, Jill Nephew, in Appendix A.

Hype

Artificial intelligence is cutting-edge technology. With every cutting-edge technology comes its own marketing hype that is created to prey on the asymmetry of information between the users and the researchers. It is important to do your due diligence on any AI products or consultants before using them to build a solution. Ask for customer references and demonstrations to see for yourself. It is very important to ensure that AI being developed is feasible in terms of current technological capability.

Bad Data

Artificial intelligence, which is built using supervised learning techniques, relies heavily on its training data set. If the training data set is garbage, the answers given will also be garbage. The data needs to be precise, accurate, and complete. In terms of development of an AI project, data availability should be ensured at the conception of the project. A lack of data at a later stage will cause the entire project to fail, and more time and resources to have been wasted. Good data is a critical piece of the AI development puzzle.

Empathy

Most AI lacks empathy of all manner and kind. This can be a problem for chatbots and other AI being developed for customer service or human communication. AI cannot build trust with people the way a human representative can. Disgruntled users are more likely to feel even more frustrated and annoyed after a failed interaction with a chatbot or an Interactive Voice Response (IVR) system. For this reason, it is necessary to always have human interventionists ready to step in if needed. AI cannot necessarily make a customer feel warm and welcome. It would be best not to use AI chatbots or other such programs in places where nonstandard and unique responses are needed for every question. AI is more suitable in service positions that are asked the same questions repeatedly but phrased differently. Here is an example of such a scenario inside an IT company that provides support for a website: “How to reset my password?”; “How do I change my password?”; “Can I change my password?” Such similar questions can be handled very well by an AI program, but an interaction on what laws are applicable to a particular case is very difficult to implement and will require resources that are greater in orders of magnitude.

Pitfalls

Here are some pitfalls that you may encounter during ideation.

Pitfall 1: A Narrow Focus

Artificial intelligence is an emerging field with wide applications. Although trying to solve every problem with artificial intelligence is not the right approach, care should be taken to explore new potential avenues and ensure that your focus is not too narrow. During the ideation stage, it is essential to be as broad-minded as possible. For instance, consider how AI might be able to improve not only your core business but also auxiliary functions such as accounting. Doing so while acknowledging the limits of real-world applications will facilitate idea generation. Some applications for AI can also be relatively abstract, benefiting from lots of creative input. All ideas that are considered plausible, even those in the indeterminate future, should be included in the idea bank.

Pitfall 2: Going Overboard with the Process

It is easy to get carried away with rituals, thus sidelining the ultimate goal of generating new ideas. Rituals such as having regular meetings and discussions where people are free to air their opinions are extremely important. Apart from these bare necessities, however, the focus should be placed on generating ideas and exploring creativity, rather than getting bogged down by the whole process. The process should never detract from the primary goal of creating new ideas.

Pitfall 3: Focusing On the Projects Rather than the Culture

For an organization, the focus should be on creating a culture of innovation and creativity rather than generating ideas for current projects. A culture of innovation will outlast any singular project and take your organization to new heights as fresh ideas are implemented. Creating such a culture might involve a change in the mindset around adhering to old processes, striving to become a modern organization that questions and challenges all its existing practices, regardless of how long things have been done that way. Such a culture will help your organization much more in the long run than just being concerned with implementing the ideas of the hour.

Pitfall 4: Overestimating AI's Capabilities

Given machine learning's popularity in the current tech scene, there are more startups and enterprises putting out AI-based systems and software than ever before. This generates a tremendous pressure to stay ahead of the competition, sometimes using just marketing alone. Although incidents of outright fraud are rare, many companies will spin their performance results to show their products in the best possible light. It can therefore be a challenge to determine whether today's AI can fulfill your lofty goals or if the technology is still a few years out. This fact should not prevent you from starting your AI journey since even simple AI adoption can transform your organization. Rather, let it serve as a warning to be aware that AI marketing may not always be as it seems.

Action Checklist

- ___ Start by building a culture of innovation in your organization. Ideas will come from the most unexpected places once this culture is set in place.

- ___ Form an innovation focus group consisting of top-level managers who have the authority to make sweeping changes.

- ___ Start maintaining an idea bank.

- ___ Gather ideas via scrutinizing standard operating procedures, process value analyses, and interviews.

- ___ Sort and filter the idea bank using well-defined criteria.

- ___ Do timely reviews to trim, refine, and implement ideas.

- ___ Learn about existing AI technologies to gain a realistic feel for their capabilities.

- ___ Apply the idea bank to the AI models learned in the previous step to find those ideas that are suitable for the implementation of AI.