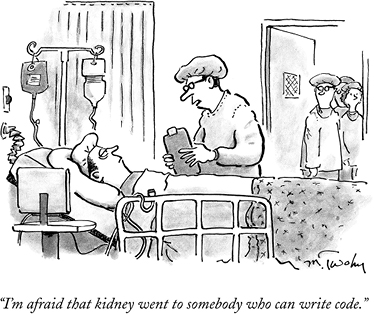

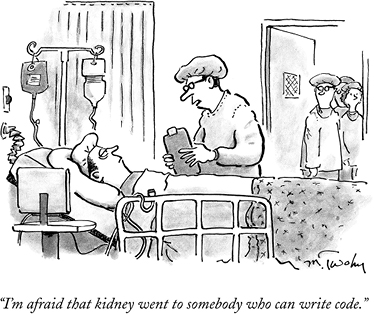

Figure 1 According to this 2015 New Yorker cartoon, people who can code are more valuable than others. Mike Twohy, New Yorker, June 25, 2015. Reprinted with permission from Condé Nast.

The coding literacy movement is in full swing. Websites such as Codecademy.com and Code.org have made teaching computer programming to everyone their central mission. Code.org not only has the backing of Facebook founder Mark Zuckerberg and Microsoft founder Bill Gates; it also convinced then U.S. Representative Eric Cantor and U.S. President Barack Obama to record video spots promoting a “code for everyone” agenda. (President Obama was called the “Coder in Chief” while promoting Code.org’s Hour of Code initiative.) New York Mayor Bill de Blasio argued that “Too many students are learning to type when they should be learning to code.”1 The merits of “everyone” learning to code are debated not only on Reddit.com’s tech-focused forums but also on National Public Radio and in Mother Jones and the New York Times.2 Google has given $50 million to the Made with Code initiative for the purpose of encouraging young women to code.3 MIT’s Scratch community and language, codeSpark’s game The Foos, and the programmable robots Dot and Dash aim to teach kids to code.4 Coding has even made it into the kindergarten curriculum before kids can read.5 Raspberry Pi, LilyPad Arduinos, and other physical computing tools bring coding to wearable technologies and other physical applications.6 To emphasize the universality and importance of computer programming, promotional materials for coding often invoke the concept of literacy and draw parallels that associate reading and writing code with reading and writing text. They point to the fact that although the technology of code is now everywhere, the ability to read and write it is not (figure 1).

What does it mean to call computer programming a literacy? Since the late nineteenth century, reading has been promoted as a moral good under the term literacy. This heritage of moral goodness persists in public debates about reading and writing, which is especially apparent in the periodic waves of moral panic observed in articles about education and illiteracy.7 Typified by an infamous 1975 article in Newsweek, “Why Johnny Can’t Write,” these fears of failing schools and of generations of illiterates signaling the downfall of society have been around since at least the late nineteenth century. However, literacy research indicates that literacy rates in the United States aren’t falling; rather, what we consider literacy is changing.8 There is little contemporary or historical consensus on what literacy is or what kinds of skills one needs to be literate.9 Thus, as a morally good but undefined concept, literacy can serve as a cipher for the kind of knowledge a society values. The various types of skills that come to be popularly named literacies reflect the perception of necessary and good skills for a society: health literacy, financial literacy, cultural literacy, visual literacy, technological literacy, and so forth.

However, what’s called a literacy reflects more than just popular perception. Because of literacy’s heritage of moral goodness, calling something a literacy raises the stakes for acquiring that knowledge. To lack the knowledge of something popularly considered literacy is to be illiterate. Beyond the practical disadvantages associated with missing a critical kind of knowledge, one can be penalized for the immorality of illiteracy—for dragging society down. People with poor financial literacy might, in addition to not enjoying ready access to monetary resources, fail credit checks and thus be denied employment because of their stigmatized status. Because literacy is deemed to be important to the status and financial health of a nation, to be illiterate is to be a less productive citizen. Thus, to call programming—or anything else—a literacy is to draw attention and resources to it by mobilizing the long history of reading and writing’s popular association with moral goodness and economic success, and thus connecting programming to the health of a nation and its citizens.

But there is something more than pragmatism in the rhetorical connection of literacy to computer programming. First, the idea that programming is connected to literacy is more than a fad. Educators and programming professionals have made the connection between writing and programming since at least 1961—almost as soon as computers became commercially and educationally viable. Computer programming also appears to have parallels to writing that go beyond its rhetorical framing: it is a socially situated, symbolic system that enables new kinds of expressions as well as the scaling up of preexisting forms of communication. Like writing, programming has become a fundamental tool and method to organize information. Throughout much of the world, computer code is infrastructural: layered over and under the technology of writing, computer code now structures much of our contemporary communications, including word processing, e-mail, the World Wide Web, social networking, digital video production, and mobile phone technology. Our employment, health records, and citizenship status, once recorded solely in text, are now cataloged in computer databases. The ubiquity of computation means that programming is emerging from the exclusive domain of computer science and penetrating professions such as journalism, biology, design—and, through library databases and the digital humanities, even the study of literature and history. Because every form of digital communication is ultimately built on a platform of computer code, programming appears to be more fundamental than other digital skills dubbed literacies, especially those associated only with computer usage. Other literacies and activities build on programming, and so we might think of programming as a platform literacy. Programming is a literacy we can build other activities and knowledge on, as we have done with reading and writing in human languages. 
Because of its affordances for information creation, organization, and dissemination, the practice of programming bears other significant similarities to reading and writing. Programming and writing are both socially inflected by the contexts in which they are learned and circulated and are materially shaped by the technologies that support and distribute them. Literacy is a weighty term, to be sure. But in the case of programming, it also appears to be apt.

In this book, I treat these persistent links between computer programming and literacy as an invitation to consider questions about the nature of both. How can we understand the ways that computer programming is changing our practices and means of communication? And how do we account for new modes and technologies in literacy? Pursuing these questions, as I do in the chapters that follow, shifts our understandings of both programming and literacy. Looking at programming from the perspective of literacy and literacy from the perspective of programming, I make two central arguments: (1) programming shows us what literacy looks like in a moment of profound change; (2) the history and practices of reading and writing human languages can provide useful comparative contexts for contemporary programming. I intend the title of this book—Coding Literacy—to reflect both of these central arguments. Coding (noun) is a type of literacy, and programming is re-coding (verb) literacy.10 That is, computer programming is augmenting an already diverse array of communication skills important in everyday life, and because of the computer’s primary role in all digital literacies, programming’s augmentation of literacy fundamentally reconfigures it. Literacy becomes much larger, and as it grows, the relationships and practices it characterizes change. When literacy includes coding, the ways we experience, teach, and move with our individual skills, social paradigms, communication technologies, and information all shift.

Literacy is a theoretically rich way to understand the relationship between communication and technology, in part because those who study literacy have long grappled with what it means for humans to work with socially situated, technological systems of signs.11 But when computer enthusiasts invoke literacy in their calls for the broader teaching of programming, they generally do so without conveying its historical or social valences. Seeing programming in light of the historical, social, and conceptual contexts of literacy helps us to understand computer programming as an important phenomenon of communication, not simply as another new skill or technology. It also helps us to make sense of the rhetoric about “coding as a new literacy.” Sylvia Scribner argued that how we define literacy is not simply an academic problem. What falls under its rubric gets implemented in large-scale educational programs and popular perceptions about who is and isn’t literate.12 Schooling, in turn, shapes perceptions of how literacy gets defined and what social consequences are faced by those considered illiterate.13

As programming is following a trajectory similar to that taken by reading and writing in society, the theoretical tools of literacy help us to understand the history and future of identities and practices of programming. While reading was once done collectively such that whole families or communities could rely on the abilities of a few, we now believe everyone should be able to read. And while writing was once a specialized skill with a high technical bar for entry—one needed to know how to prepare parchment, make ink, maintain writing instruments, or bind pages—it is now an expected skill for all members of developed societies. As reading and writing became more generalized, the diversity of their uses proliferated beyond their clerical and religious origins. Writers don’t have to be “good”—as defined in particular, narrow, disciplinary ways—to benefit from their literacy skills. Most of us write for mundane but ultimately powerful activities: grocery lists, blogs, diaries, workplace memos and reports, letters and text messages to family and friends, fan fiction, and wills. People put their literacy to all kinds of uses, many of which are never anticipated by the institutions and teachers they learn from.14

Similarly, the bar to programming was once much higher—access to expensive mainframes, training programs, or a background in math or engineering was needed. But now, with ubiquitous computational devices and free lessons online, access to learning programming is easier. At the same time, the uses for code have diversified. The ability to read, modify, and write code can be useful not just for high-profile creative or business applications but also for organizing personal information, analyzing literature, publishing creative projects, interfacing with government data, and even simply participating in society. Literacy research can, then, provide a new lens on the intertwined social and technological factors in programming. It also offers fresh approaches to teaching programming—programming not as a problem-solving tool, but as a species of writing. And thinking of programming as literacy may help us to prepare for a possible future where the ability to compose symbolic and communicative texts with computer code is widespread.

Thinking about programming as written communication also jolts us to confront the ways literacy is changing. The field of literacy studies has now embraced a proliferation of new digital literacies pertaining to video games, audio and video composition, Web writing, and many other domains and genres and practices. However, these multiple literacies are often presented as atomized—proliferating and bouncing against each other in an ever-expanding field of digital and global communication. Considered separately, their multitude overwhelms us. Which literacies are enduring and which are tied to specific technologies likely to become obsolete? How do we choose among these literacies for educational programs and our own edification? A closer look indicates they are not separate at all: digital literacies operate as a complex ecology. Considered comprehensively, what digital literacies all have in common is computer programming. As a fundamental condition of contemporary writing, programming is the substrate in which these reactions occur; it’s the platform on which they’re constructed. Focusing on programming allows us to see this ecology of digital literacies more fully. We can, without attaching long-term significance to ephemeral technologies, take into account the computational, programmable devices that are now joining a panoply of other read-write tools. As programming now reveals a new assortment of social practices and technologies that constitute contemporary literacy, it can show us what a complex ecology of literacy looks like in a moment of profound change.

To suggest the ways that programming is becoming part of literacy, I often use the colloquial term coding in this book. The differences between programming and coding are hotly debated, but no clear boundaries exist between the terms, and they can be synonymous. Each term implicates different stakeholders—and diverse stakes—in the conversations about programming’s contemporary uses and relevance. Coding echoes the popular rhetoric of the “learn to code” movement and signals a move away from programming as a profession. Programmer is the more standard term in job titles, but coding is associated with young companies trying to make the practice hipper (e.g., job ads for a “ninja coder”). The crispness of code lends itself well to publicity—or shorter URLs, as in the case of Code.org.15 Scripting, a third term that is sometimes in this mix, can refer to simpler programming that doesn’t need to be compiled, or that is specific to just one software suite, or that glues together more extensive code libraries and functions. But with the further development of so-called scripting languages such as JavaScript or ActionScript or TypeScript, the term has shed its technical specificity and gathered a rhetorical resonance instead. Scripting now can refer to simpler code or to the combining of libraries, or it can be directed derogatorily at those who don’t seem to know much about programming; for instance, at “script kiddies” who write malicious or faulty code and spread it indiscriminately on the Internet. Of the three terms commonly referring to the practice of writing code for computers, coding fits best with the focus of this book because it opens programming up to a wider population. I generally use the term programming when I am referring to the basic act and concept and coding when I’m discussing the more political and social aspects of writing code for computers. There is, inevitably, some overlap.

As this debate about terms suggests, language matters when we talk about computer programming. What we understand about programming changes with the words and lenses we choose to use for it. And when people use literacy in reference to programming, it generally indicates that they want to encourage or highlight the phenomenon of programming becoming a more widely held and generally applicable skill. That the words we use can indicate cultural ideas was central to Raymond Williams’s keywords project, which sought not to define terms or pin them down, but instead to map them out rhetorically: to trace their histories, their stakeholders, and their dynamic cultural and social geographies. By mapping literacy as a heavily circulated term in programming, as I do in chapter 1, we can see programming’s historical and cultural implications in a new light.16 We can ask questions about programming invoked by literacy: Who programs, and who can call themselves a programmer? How is programming learned and sponsored? How is programming used? How do technologies and social factors intersect in programming? Similarly, Janet Abbate argues that how the computer is “cast”—for instance, as an adding machine or typewriter or piece of precision engineering—can influence whom we think of as its ideal users.17 For example, metaphors of computing as akin to math made it more accessible to women than metaphors of engineering, Abbate observes: women were well represented in math majors and had a history of calculating, whereas they were largely shut out of engineering fields. Seeing literacy as a “keyword” not only indexes the users and uses of computing; it also reveals the contradictions between broad programming education programs as literacy and the narrower disciplinary concerns of computer science. 
My approach suggests that because programming is so infrastructural to everything we say and do now, leaving it to computer science is like leaving writing to English or other language departments.

Seeing coding through literacy mobilizes the insights we’ve gained from decades of research on social factors involved in learning literacy, helping us to explain who programs, how they do it, and why they learn. Although early literacy research credited reading and writing for organized government, the best of Western culture, and dramatic leaps in human intelligence, research since the 1980s has been much more cautious and has put the technological and cognitive achievements of literacy in social contexts. The result is a much more complicated picture of how writing systems are learned, circulated, and used by individuals, cultures, and nations. Scholars of New Literacy Studies, a sociocultural approach to literacy that emerged in the 1980s, found that social factors are critical to our understanding of what literacy is, who can access it, and what counts as effective literacy.18 In Brian Street’s “ideological model” of literacy, someone who has acquired literacy in one context may not be functionally literate in another context because literacy cannot be extricated from its ideology.19 As James Paul Gee points out, even a task as basic as reading an aspirin bottle is an interpretive act that draws on knowledge acquired in specific social contexts.20 Shirley Brice Heath’s canonical ethnographic study of literacy shows that the people whom literacy-learners see using and valuing literacy can affect how they take it up.21 Victoria Purcell-Gates demonstrates in her work on cycles of low literacy that children growing up in environments where text is absent and literacy is marginalized have few ways to assimilate literacy into their lives.22 As Elspeth Stuckey has argued, literacy can even be a performance of violence in the way that it perpetuates systematic oppression.23 If we think of coding in terms of literacy rather than profession, these insights from literacy studies show how we must think critically to expand access to coding.

Through such insights on social context, the concept of coding literacy helps to expand this access, or to support “transformative access” to programming in the words of rhetorician Adam Banks.24 For Banks, transformative access allows people “to both change the interfaces of that system and fundamentally change the codes that determine how the system works.”25 Changing the “interface” of programming might entail more widespread education on programming. But changing “how the system works” would move beyond material access to education and into a critical examination of the values and ideologies embedded in that education. Programming as defined by computer science or software engineering is bound to echo the values of those contexts. But a concept of coding literacy suggests programming is a literacy practice with many applications beyond a profession defined by a limited set of values. The webmaster, game maker, tinkerer, scientist, and citizen activist can benefit from coding as a means to achieve their goals. As I argue in this book, we must think of programming more broadly—as coding literacy—if the ability to program is to become distributed more broadly. Thinking this way can help change “how the system works.”

I am certainly not the first to claim that the ability to program should be more equitably distributed. I join many others in popular discourse as well as other scholars in media studies and education—a lineage I outline in chapter 1. Equity in computer programming, especially along gender lines, has been the goal of countless studies and grants in computer science education.26 And my concept of coding literacy is related to what some have called “procedural literacy,”27 “computational literacy,”28 “computational thinking,”29 or what I have in the past called “proceduracy,”30 and it is roughly compatible with most of them. Jeannette Wing’s concept of “computational thinking,” perhaps the most influential of these terms, relies on principles of computer science (CS) to describe its spectrum of abilities.31 “Procedural literacy,” for Ian Bogost, “entails the ability to reconfigure concepts and rules to understand processes, not just on the computer, but in general.”32 In this definition, Bogost equates literacy more with reading than writing—the understanding of processes rather than the representation of them. He ascribes the “authoring [of] arguments through processes” to the concept of “procedural rhetoric.”33 For Bogost, understanding the procedures of digital video games or recombining blocks of meaning in games can be procedural literacy, too.34 While I agree that games can be a window into understanding the processes that underwrite software, I believe this turn away from programming sidesteps the powerful social and historical dynamics of composing code. Michael Mateas attends to code more specifically, and his approach to procedural literacy35 most closely resembles mine. 
Although he focused on new media practitioners in his discussion of procedural literacy, he noted “one can argue that procedural literacy is a fundamental competence for everyone, required [for] full participation in contemporary society.”36 I embrace and extend this wide vision of procedural literacy from Mateas.

Andrea diSessa37 shares my concern with programming per se, and his model of the social, cognitive, and material “pillars” that support literacy is compatible with my approach to coding literacy. His term and concept of computational literacy, which was intended to break with the limited and skills-based term computer literacy, emphasizes the action of computation over the artifact of the computer. Mark Guzdial, who also uses the term computational literacy, has been a longtime advocate of the idea that anyone can—and should—learn computer programming and, like diSessa, thinks that programming is an essential part of computational literacy.38 Guzdial argues that programming provides the ideal “language for describing the notional machine” through which we can learn computing.39 We learn arithmetic with numbers and poetry with written language, Guzdial points out; similarly, we should learn computing through the notation that best represents the computer’s processes and potential.40

My approach is generally compatible with all of these other approaches; ultimately, I think we are all talking about roughly the same thing with slight differences in language and emphasis. Guzdial’s and diSessa’s accounts of computational literacy are illustrative: I agree with almost every detail of their description of what this literacy is and should be—except that I think fields outside of CS should be able to shape it and teach it as well. Like computational literacy, coding literacy points to the underlying mechanisms of the literacy of computer programming rather than the instrument on which one performs it. As computing becomes more deeply embedded in digital devices, the tools of coding literacy are beyond what we might traditionally consider a “computer”; consequently, we need a concept of literacy that abstracts it away from its specific tools. I often use the term computational literacy alongside coding literacy in this book. I don’t use “computational thinking” here because Wing strongly emphasizes the link to CS and doesn’t use the term literacy. I believe it’s about more than thinking; it’s also about reading and building and writing. Although the language of literacy matters to my argument here, the term it’s paired with matters far less.

To this discussion of procedural/computational/coding literacy, I bring expertise on the literacy side, rather than the computer programming or computer science side. For this reason, I am also less concerned about defining the precise skills that make up coding or computational literacy than I am about their social history, framing, and circulation. As we will see below, lessons from literacy teach us that prescriptions for literacy are always contingent, so I do not attempt to pin one down for coding literacy. At any rate, hammering out the details of what coding literacy or computational thinking looks like in a curriculum or classroom is not the focus of this book.

A number of good models have been presented by computer science educators, however, which may serve as a general heuristic for my argument. Here is one I like from Ed Lazowska and David Patterson that provides a few more specifics than most:

> “Computational thinking”—problem analysis and decomposition, algorithmic thinking, algorithmic expression, abstraction, modeling, stepwise fault isolation—is central to an increasingly broad array of fields. Programming is not just an incredibly valuable skill (although it certainly is that)—it is the hands-on inquiry-based way that we teach computational thinking.41

Wing’s description of computational thinking is too long to quote here, but she covers everything from error detection, preparing for future use, describing systems succinctly and accurately, and knowing what part of complex systems can be black-boxed to searching Web pages for solutions.42 Both of these prescriptions for computational thinking rely on fundamental concepts taught in CS classes, suggesting that the sole responsibility for teaching this literacy or form of thinking should be in CS. Aside from that, their descriptions work fine for our purposes here.43

Other specifications that I sidestep in this book include which languages might be best for coding literacy and what a hierarchy of literacy skills might be. Caitlin Kelleher and Randy Pausch’s taxonomy of programming languages for novices is an excellent resource for specific languages.44 More generally, Yasmin Kafai and Quinn Burke describe the need for languages to have low floors, high ceilings, wide walls, and open windows. That is, a language should have a low barrier to entry (low floors), allow a lot of room for growing in proficiency (high ceilings), make room for a diversity of applications (wide walls), and enable people to share what they write (open windows).45 While most descriptions of computational literacy avoid specific discussion of how one might progress toward such goals, the UK Department for Education issued statutory guidance that nicely outlines goals for the four key stages of their program in computing: key stage 1 addresses writing simple programs and understanding what algorithms are; key stage 2 moves into variables and error detection; key stage 3 asks students to “design, use and evaluate computational abstractions that model the state and behaviour of real-world problems and physical systems”; and key stage 4 is focused on independent and creative development in computing. Although outlining or advocating for specific programs of coding literacy is out of scope for this book, these sites and others provide helpful guidelines.

My approach to coding literacy in this book builds on these models of computing by providing a socially and historically informed perspective on it as a literacy practice. A vision of coding with diverse applications has implications not only for what identities and contexts may be associated with computer programming but also for the ways we conceive of literacy more generally. Put another way, just as coding literacy reshapes computer programming, it also reshapes literacy.

The relationship between programming as a practice and computer science as a discipline is a central tension in the contemporary programming campaigns that invoke literacy, so their history calls for a little unpacking. Although programming is usually framed from the perspective of CS, it predates CS. Donald Knuth traces the field of CS back to 1959, when the Association for Computing Machinery (ACM) claimed “‘If computer programming is to become an important part of computer research and development, a transition of programming from an art to a disciplined science must be effected.’”46 Programming was the dirty, practical, hands-on work with computers, and academic computer scientists often wanted a cleaner, theoretical identification with mathematics. As programming became more central than math in the computing curriculum in the 1970s, Matti Tedre argues that “many serious academics cringed at the idea of equating academic computing with programming, which, since the 1950s, had gained a reputation as an artistic, thoroughly unscientific practice.”47 Knuth notes that since the late 1950s, the ACM has been continually working toward framing computing as a science rather than an art. Along with this effort to move computing toward science, a 1968 conference tried to apply the concept of engineering to software and came up with “software engineering,” which Mary Shaw argues is still an aspirational term.48

As businesses and professionals have attempted to wrestle the difficult problems of code into predictable and workable components—as well as elevate the status of programming from its origins with women computers in World War II—they’ve tried to professionalize it.49 Programming only emerged as a dominant term in the early 1950s, and then consolidated as a profession in the 1960s.50 Even then, the profession was porous because places like IBM recruited untrained potential programmers through aptitude tests; most programming training was on the job.51 There have been numerous attempts to certify and professionalize computer programming, as Nathan Ensmenger’s, Janet Abbate’s, and Michael Mahoney’s historical work on software engineering attests. But programming has always resisted neat containment within CS, and this has become more apparent as languages and technologies become more accessible to those outside of universities and corporations in the computer-related industry.

Attempts to make computer programming a domain solely for professionals have failed, I would argue, because programming is too useful to too many professions. University physicists and biologists continued to write their own code after the birth of computer programming as a profession, and still do now. Once the financial barrier to computer use was lowered in the 1970s, we saw the rise of hobbyists who found computers useful and fun. The existence and practices of end-user programming have been a live topic in business and computer science for decades.52 Many university science departments offer applied programming classes. And outside of formal schooling, with the advent of the World Wide Web and more accessible languages such as HTML, CSS, JavaScript, Ruby, Scratch, and Python, as well as thousands of online resources to help people teach themselves programming, it appears that more and more people are doing just that. The learn-to-code websites Codecademy.com and Code.org boast 25 million and 260 million learners, respectively.53 Organizations such as #YesWeCode, Black Girls Code, Girl Develop It, and Google’s Made with Code are targeted to groups underrepresented in programming as a profession.54 Coding boot camps run by for-profit and nonprofit institutions, which often promise to help people get jobs, may soon be eligible for federal grants in the United States.55 While some of these coding resources are directed at helping people to become professional programmers, they appeal far more broadly.

In these materials and many discussions of teaching programming more broadly, the discipline of computer science and programming are often collapsed. The rhetoric often goes like this: since we need more programmers than we have now, computer science must be recruited to professionally train them. An example of this conflation of terms is in the mission statement of Code.org, a prominent promoter of widespread programming education as discussed in chapter 1. Their mission statement’s many revisions from 2013 to 2015 reveal some of the tension between computer science and programming in these efforts.56 From August to September 2013, for example, Code.org changed its mission from “growing computer programming education” to “growing computer science education” (emphasis added).57

In a December 2013 blog post, I drew attention to this rhetorical collapsing of code and computer science in Code.org’s mission statement at the time (emphasis added):

Code.org is a non-profit dedicated to expanding participation in computer science education by making it available in more schools, and increasing participation by women and underrepresented students of color. Our vision is that every student in every school should have the opportunity to learn computer programming. We believe computer science should be part of the core curriculum in education, alongside other science, technology, engineering, and mathematics (STEM) courses, such as biology, physics, chemistry and algebra.58

I wondered why they said they wanted to promote computer programming, when the statement and the rest of the site seemed focused on computer science. I argued that “Its rhetoric about STEM education, the wealth of jobs in software engineering, and the timing of the Hour of Code initiative with Computer Science Education Week all reflect the ways that Code.org—along with many other supporters and initiatives—conflate programming with computer science.”59

In a comment on my post, Hadi Partovi, a cofounder of Code.org, clarified that this conflation was deliberate, but not meant to deceive:

We intentionally conflate ‘code’ and ‘computer science’, not to trick people, but because for most people the difference is irrelevant. Our organizational brand name is “code.org,” because that was a short URL, but our polls show that people feel more comfortable with the word “computer science,” and so we use both, almost interchangeably. Again, not because we want to trick people from one to the other, but because when you know nothing about programming or CS, they are practically equal and indistinguishable.

Partovi notes that Code.org, taking the most expedient approach to its objective, was simply mobilizing a popular conception of coding as equivalent to CS.60 When Guzdial, Wing, and others argue for CS-for-everyone, they don’t necessarily conflate CS with programming; however, they do subordinate programming to CS, which opens the door for this conflation in popular treatments of coding literacy.

In a critique of coding literacy efforts, we can see a similarly problematic conflation between professional software engineering and a more generalized skill of programming. Jeff Atwood, the cofounder of the popular online programming forum Stack Overflow, claimed we do not need a new crop of people who think they can code professional software—people such as then New York City Mayor Michael Bloomberg, who in 2012 pledged his participation in Codecademy’s weekly learn-to-code e-mails. Atwood writes: “To those who argue programming is an essential skill we should be teaching our children, right up there with reading, writing, and arithmetic: can you explain to me how Michael Bloomberg would be better at his day to day job of leading the largest city in the USA if he woke up one morning as a crack Java coder?”61 As several of Atwood’s commenters pointed out, his argument presents programming as a tool only for professionals and discounts the potential benefits of programming in other professions or activities. Could Bloomberg learn something from programming even if he didn’t end up being good enough at Java to get a job writing it? Mark Guzdial responded to Atwood, homing in on the language of “programmer” and “code” that Atwood used: “Not everyone who ‘programs’ wants to be known as a ‘programmer.’”62 For Guzdial, Atwood’s notion of programming as a profession-only endeavor discounted the many end-user programmers and other non-software-engineer folk who might find programming useful in their lives. “There’s a lot of code being produced, and almost none of it becomes a ‘product,’” Guzdial points out.

As programming becomes more relevant to fields outside of computer science and software engineering, a tension is unfolding between the values for code written in those traditional contexts and values for code written outside of them. In the sciences, where code and algorithms have enabled researchers to process massive and complex data sets, the issue of what qualifies as “proper code” is quite marked. For example, Scientific American reported that code is not being released along with the rest of the methods used in scientific experiments, in part because scientists may be “embarrassed by the ‘ugly’ code they write for their own research.”63 A discussion of the article on Hacker News, a popular online forum for programmers, outlined some of the key tensions in applying software engineering values to scientific code. As one commenter argued, the context for which code is written matters: “There’s a huge difference between the disposable one-off code produced by a scientist trying to test a hypothesis, and production code produced by an engineer to serve in a commercial capacity.”64 Code that might be fine for a one-off experiment—that contains, say, overly long functions, duplication, or other kinds of so-called code smells65—might not be appropriate for commercial software that is, say, composed by a large team of programmers or maintained for decades across multiple operating systems. Mary Shaw, an influential Carnegie Mellon University computer science professor and software engineer, points out that it’s important to distinguish software that facilitates online mashups and baseball statistics from software that governs vehicle acceleration: the former could be done by anyone without much harm, whereas the latter calls for more systematic approaches and oversight.66 If the values governing the mission-critical uses of code are applied more generally, they can discount casual, low stakes, or experimental uses of code.

Computer science values theoretical principles of design and abstraction, and software engineering emphasizes modularity, reusability, and clarity in code, as well as strategies such as test-driven development, to support codebases over long terms. Programming is often (though not always) taught in CS with abstract problem sets concerning the Fibonacci sequence and the Tower of Hanoi, which can make programming seem quite distant from art and science and language. While CS often emphasizes the technical and abstract over the social aspects of programming, CS education researchers have noted that singular and dominating approaches to CS can turn people away. Jane Margolis and Allan Fisher found that approaches to CS disconnected from real-world concerns tended to deter women from majoring in CS at Carnegie Mellon University in the 1990s.67 In 1990, Sherry Turkle and Seymour Papert pointed out the problem of dominating values in programming education and argued that the field must make more room for epistemological pluralism. In particular, they noted that the abstract and formal thinking so valued in academics needed to be augmented by applied and concrete approaches to programming, especially if it were to appeal to more women. They describe the value of Claude Levi-Strauss’s bricolage approach: recombining code blocks, trial and error, and knowledge through concrete experimentation.68 There may be an important role for abstract problems and systematic approaches to software in CS training and for the production of code in manufacturing, engineering, and banking contexts, but the abstract approach tends to appeal to the kinds of people who are already well represented in CS departments and software engineering positions. Although these CS education researchers offer insight into new ways to configure CS, few of them consider extending programming education out from the CS curriculum.

But my objective here is not to critique CS curriculum. Instead, I want to draw attention to the problem of overextending CS and software engineering values into other domains where programming can be useful. The conflation of computer programming with CS or software engineering precludes many other potential values and possibilities for computer programming. We might agree that applying the principles of bridge engineering to home landscaping is overkill and would be cost and time prohibitive. Or that requiring all writing to follow the model of academic literary analysis might limit the utility and joy of other kinds of writing. Siphoning the production of code solely through the field of CS is like requiring everyone to become English majors to learn how to write. Moreover, letting the needs of CS or software engineering determine the way we value coding more generally can crowd out other uses of code, such as live-coded DJ performances, prototyping, one-time use scripting for data processing, and video-game coding. Allowing approaches to coding from the arts and humanities can make more room for these uses. These approaches might include (among many others): Noah Wardrip-Fruin’s concept of “expressive processing,” which uncovers the layers of programmatic, literary, and visual creative affordances of software in electronic literature and games; Michael Mateas’s procedural literacy for new media scholars; N. Katherine Hayles’s electronic literature analysis that allows computation to be “a powerful way to reveal to us the implications of our contemporary situation”; Fox Harrell’s computational narrative; or Stephen Ramsay’s “algorithmic criticism,” which uses coding to reveal interpretive possibilities in literary texts.69 Nick Montfort’s Exploratory Programming for the Arts and Humanities offers an introduction to creative programming in Python and Processing that is compatible with these approaches.70 This book is already being adopted in some humanities and arts courses, and creative computing courses are occasionally offered in CS departments, but these courses are still the exception.

Beyond precluding alternative values, this collapse of programming with CS or software engineering can shut entire populations out. In paradigms such as Atwood’s, programming is limited to the types of people already welcome in its established professional context. This is clearly a problem for any hopes of programming abilities being distributed more widely. CS as a discipline and programming as a profession have struggled to accommodate certain groups, especially women and people of color. In other words, historically disadvantaged groups in the domain of literacy are also finding themselves disadvantaged in programming. Programming was welcoming to women when the division of labor was between male engineers and designers and female programmers and debuggers, but now it is heavily male-dominated.71 As a profession, programming has resisted a more general trend of increased participation rates of women evidenced in previously male-dominated fields such as law and medicine.72 The U.S. Bureau of Labor Statistics reported that only 23.0% of computer programmers in 2013 were women.73 The numbers of Hispanic and black technical employees at major tech companies such as Google, Microsoft, Twitter, and Facebook are very low, and even lower than the potential employee pool would suggest.74 High-profile sexism exhibited at tech conferences and fast-paced start-ups now appears to be compounding the problem.75

Nathan Ensmenger has shown that personality profiling was used for hiring and training programmers in the 1960s; businesses selected for “antisocial men.”76 Although it is no longer practiced explicitly, Ensmenger argues that this personality profiling still influences the perception of programmers as stereotypically white, male, and socially awkward. This is now sometimes called the “geek gene” mindset—the belief that people are born wired to program or they’re not. Guzdial explains how this mindset can be a problem for introducing people to computing:

The most dangerous part of the “Geek Gene” hypothesis is that it gives us a reason to stop working at broadening participation in computing. If people are wired to program, then those who are programming have that wiring, and those who don’t program must not have that wiring. Belief in the “Geek Gene” makes it easy to ignore female students and those from under-represented minority groups as simply having the wrong genes.77

Margolis and Fisher note that the obsessive and stereotypical behavior of the CS student who stays up all night programming actually applies to very few CS students, and these few students are more likely to be men. Women and men alike distance themselves from this image to carve out an alternative model for themselves, but the “geek” stereotype hurts women more than men.78 A quick Google image search of “geek” illustrates one reason why: I see glasses and button-up shirts on awkward-looking people who are almost exclusively white men, or occasionally Asian men, or young white women provocatively dressed in tight shirts that say “I ♥ geeks” and glasses and microskirts. Hundreds of results down in a recent search, I finally saw one African-American male: the character Steve Urkel, played by Jaleel White in the 1990s sitcom Family Matters. (I finally gave up scrolling without hitting results for anyone visibly Latino/a or a nonwhite/non-Asian female). Rhetorician Alexandria Lockett recalled this gendered, racialized, and problematic rhetoric of who is and isn’t a “programmer” in a provocative talk about code switching in language and technologies titled “I am not a computer programmer.”79 The recent rise of the “brogrammer,” associated with start-up culture only partially in jest, suggests a new kind of identity for programmers—as “bros,” or young, male, highly social and risk-taking fratboys.80 Although the so-called rise of the brogrammer suggests that programming can accommodate people beyond the socially awkward white male, it also means that these featured identities are still narrow and inadequate.81

For all of these reasons, I find it surprising that computer science and programming are persistently paired in the promotional materials and arguments for coding literacy. Hadi Partovi suggests that the two are indistinguishable to someone who doesn’t know much about either, and I think he’s right. But when the stereotypes for professional programmers and computer scientists are weighted so heavily toward white male “geeks,” why emphasize that link? Programming can be useful to many professions and activities and can accommodate a much wider range of interests and of identities than those of most people currently associated with computer science. But even Guzdial, who argues in his book-length treatment of teaching programming that computer science methods for teaching programming are too narrow and overemphasize “geek culture,” fails to imagine a way for programming to be taught outside of computer science.82

Programming is already taught outside of CS, however—in libraries, digital humanities classrooms, information science schools, and literature classrooms.83 As John Kemeny (the coinventor of BASIC) wrote about the undergraduate curriculum, there are benefits to extricating programming from computer science: “If computer assignments are routinely given in a wide variety of courses—and faculty members expect students to write good programs—then computer literacy will be achieved without having a disproportionate number of computer science courses in the curriculum.”84 As programming moves beyond a specialized skill and into a more common and literacy-like practice, we must broaden the language and concepts we use to describe it. As Kemeny notes, there are benefits to teaching coding across the curriculum, and CS certainly has a place in more wide-reaching programming initiatives. A major argument of this book is that literacy studies does, too.

Programming is indeed part of computer science. We can also come at it another way—through writing. As this book shows, seeing programming through writing and literacy allows us to put it in a longer historical and cultural context of information management and expression. But we must keep in mind the particular technical limitations and affordances that distinguish programming from writing in human languages.

Programming is the act and practice of writing code that tells a computer what to do. In most modern programming languages, the code that a programmer writes is different from the code that a computer reads. What the programmer types is generally referred to as source code and is legible to readers who understand the programming language. This code often contains words that might be recognizable to speakers of English: jump, define, print, end, for, else, and so forth.85 The source code a programmer writes usually gets compiled or interpreted by an intermediary program that translates the human-readable language to language the computer can parse. This translating program (called an assembler, compiler, or interpreter depending on how it translates the code) is specific to each programming language and each machine.86 The compiler’s translation of the source code is generally called object code, and with the object code translation, the computer can follow the directions the programmer has written. The computer parses object code, but it is often not readable by humans. For this reason, much commercial software is distributed in object code format to keep specific techniques from competitors. This is, of course, only a simplified explanation of code; in truth, the layers between source code and machine code are multiple and variable—even more so as machines and programming languages grow more complex.87 And much of contemporary programming is done as bricolage: adding functions or variables or updating interfaces, combining libraries and chunks of code into bigger programs. Although I use this basic explanation to introduce programming, programming in the wild rarely looks this clean and simple.
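The translation step described here can be loosely illustrated in Python. This is only a sketch by analogy: Python translates source text into bytecode for its own virtual machine rather than into object code for a particular piece of hardware, and the variable names (`source`, `code_object`, `namespace`) are chosen purely for illustration. The calls `compile`, `exec`, and `dis.get_instructions` are part of Python and its standard library.

```python
import dis

# Human-readable source code, held as ordinary text.
source = "total = 2 + 3\nprint(total)\n"

# compile() is the translating step: text goes in, a code object comes out.
code_object = compile(source, "<example>", "exec")

# The translated form is a sequence of raw bytes, not words a person reads easily.
bytecode = code_object.co_code

# The dis module renders those bytes as named instructions for inspection.
instructions = [ins.opname for ins in dis.get_instructions(code_object)]

# Running the translated form carries out what the source text described.
namespace = {}
exec(code_object, namespace)
```

Printing `bytecode` and `instructions` shows how far the translated form sits from the words in `source`, although the exact instructions vary between Python versions.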

Programming has a complex relationship with writing; it is writing, but its connection to the technology of code and computational devices also distinguishes it from writing in human languages. Programming is writing because it is symbols inscribed on a surface and designed to be read. But programming is also not writing, or rather, it is something more than writing. The symbols that constitute computer code are designed not only to be read but also to be executed, or run, by the computer. So, in addition to being a type of writing, programming is the authoring of processes to be carried out by the computer. As Ian Bogost describes, “one authors code that enforces rules to generate some kind of representation, rather than authoring the representation itself.”88 The programmer writes code, which directs the computer to perform certain operations. Because it represents procedures to be executed by a computer, programming is a type of action as well as a type of writing. When “x = 4” is written in a program, the statement tells the programmer that x equals 4, but it also makes that statement true—it sets x as equal to 4. In other words, computer code is simultaneously a description of an action and the action itself. The fidelity between code’s description of action and the action itself is a matter much debated. Alexander Galloway has written that “code is the only language that is executable,”89 a popular but not quite accurate conception. Wendy Hui Kyong Chun breaks this conception down by pointing out, via Matthew Kirschenbaum, the materiality of memory involved in a computer’s execution of language. Source code isn’t logically equivalent to machine code, and the distance between these forms of programming was recognized even by Alan Turing, Chun asserts.90 While the relationship between code language and execution is slippery, code’s status as simultaneously description and action means it is both text and machine, a product of writing as well as engineering.91
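The double status of a statement such as “x = 4” can be sketched in a few lines of Python. This is a minimal illustration rather than a claim about how any particular system works; the names `statement`, `symbols`, and `namespace` are assumptions introduced for the example, while `exec` is a Python built-in.

```python
# The statement held as text: symbols that a person can read.
statement = "x = 4"
symbols = list(statement)

# Before execution, no x exists in this (initially empty) namespace.
namespace = {}
existed_before = "x" in namespace

# Executing the same text performs the action it describes.
exec(statement, namespace)
value_after = namespace["x"]
```

Here the same five characters are first handled as writing (`symbols`) and then as action: after `exec`, the namespace really does bind `x` to 4.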

Programming became a kind of writing when it moved from physical wiring and direct representation of electromechanics to a system of symbolic representation. The earliest mechanical and electrical computers relied on wiring rather than writing to program them. To name one example: the ENIAC, completed in 1946 at the University of Pennsylvania, was programmed by switching circuits or physically plugging cables into vacuum tubes. Each new calculation required rewiring the machine, essentially making the computer a special-purpose machine for each new situation.92 With the development in 1945 of the stored program concept,93 the computer could become a general-purpose machine. The computer’s program could be stored in memory, along with its data. This revelatory design, which was put into practice with the ENIAC’s successor, the EDVAC, moved the concept of programming from physical engineering in wiring to symbolic representation in written code. At this moment, computers became the alchemical combination of writing and engineering, controlled by both electrical impulses and writing systems. The term programming itself reveals this lineage in electrical engineering: programming, which contains the Greek root gram meaning “writing,” was first borrowed by John Mauchly from the context of electrical signal control in his description of ENIAC in 1942.94

In subsequent years, control of the computer through code has continued to trend away from the materiality of the device and toward the abstraction of writing systems.95 To illustrate: each new revision of Digital Equipment Corporation’s popular PDP computer in the 1960s required a new programming language because the hardware had changed, but by the 1990s, the Java programming language’s “virtual machine” offered an effectively platform-independent programming environment. Over the past 60 years, many designers of programming languages have attempted to make more writer-friendly languages that increase the semantic value of code and release writers from needing to know details about the computer’s hardware. Some important changes along this path in programming language design include the use of words rather than numbers, language libraries, code comments, automatic memory management, structured program organization, and the development of programming environments to enhance the legibility of code. Because these specifics change, Hal Abelson argues that thinking about programming as tied only to the technology of the computer is like thinking about geometry as tied only to surveying instruments.96 As the syntax of computer code has grown to resemble human language (especially English), the requirements for precise expression in programming have indeed changed—but have not been eliminated.97

David Nofre, Mark Priestley, and Gerard Alberts argue that this abstraction of programming away from the specificities of a machine is the reason we now think of controlling a computer through the metaphor of language and writing; that is, we write in a language computers can understand. In the 1950s and 1960s, universities, industry, and the U.S. military collectively tackled the critical problem of getting various incompatible computers to coordinate and to share code, which eventually led to the development of ALGOL. Nofre, Priestley, and Alberts claim that thinking of programming as communication with and across computers led to the ability to think of programs and programming languages as objects of knowledge in and of themselves. When computational procedures could be abstracted and distilled into formal algorithms—a combination of mathematics and writing—computer science as a field of study could develop.98 While the dream of a universal language for computers hasn’t played out as computer scientists in the 1950s and the developers of ALGOL imagined, the abstraction of computer control, the movement from engineering to math and writing, is essential for the conceptual approach to programming that I take here.

I see programming as the constellation of abilities to break a complex process down into small procedures and then express—or “write”—those procedures using the technology of code that may be “read” by a nonhuman entity such as a computer. To write code, a person must be able to express a process in hyper-explicit terms and procedures that can be evaluated by recourse to explicit logic rules. To read code, a person must be able to translate those hyper-explicit directions into a working model of what the computer is doing. Programming builds on textual literacy skills because it entails textual writing and reading, but it also entails process design and modeling that echo the practices of engineering. Although the computer is now the exemplar “reader” for this kind of procedural writing, a focus on the computer per se is shortsighted and misleading as the processes of computing make their way into more and more technologies.
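As a small, hedged illustration of what breaking a process into hyper-explicit procedures can look like, consider the everyday question “how long are the words in this sentence, on average?” A person answers it intuitively; a program must spell out each step. The function names here are hypothetical and chosen for readability, and the handling of punctuation is a simplifying assumption.

```python
def split_into_words(text):
    """Step 1: divide the text wherever whitespace appears."""
    return text.split()


def strip_punctuation(word):
    """Step 2: remove common punctuation from the edges of a word."""
    return word.strip(".,;:!?\"'")


def average(numbers):
    """Step 3: compute a mean, stating what to do when there is nothing to count."""
    return sum(numbers) / len(numbers) if numbers else 0.0


def average_word_length(text):
    """Combine the small procedures into the larger process."""
    words = [strip_punctuation(w) for w in split_into_words(text)]
    lengths = [len(w) for w in words if w]
    return average(lengths)


result = average_word_length("Code is writing.")
```

Each function states one decision that a human reader would make without noticing, including the edge case of an empty text, which is the kind of explicitness the computer as “reader” requires.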

Developments in programming languages and computer hardware have led many to believe that the knowledge needed to program will soon be obsolete; that is, once the computer can respond to natural human language, there will be no need to write code. As early as 1961, Peter Elias claimed that training in programming languages would soon cease because “undergraduates will face the console with such a natural keyboard and such a natural language that there will be little left, if anything, to the teaching of programming. [At this point, we] should hope that it would have disappeared from the curricula of all but a moderate group of specialists.”99 At first glance, Elias’s claim appears to be supported by modern touchscreen interfaces such as that of the iPad. Thousands of apps, menus, and interfaces promise to deliver the power of programming to those who do not know how to write code. Collectively, they suggest that we can drag and drop our way to problem solving in software.

Elias’s argument that computer interfaces and languages will evolve to be so sophisticated that very few people will need to know how to compose or read code is perhaps the most persuasive against the idea that programming is a growing part of literacy, and that it will someday become as necessary as reading and writing in human languages. Inevitably, programming will change as computing and computers change—just as literacy has changed along with its underlying technologies. But the historical trajectory of programming language development I’ve outlined here suggests that it is unlikely to become equivalent to human language or shed its requirements of precision—as well as the benefits that come with that (more on this in chapter 2). This was the dream of “automatic programming” in the 1950s, which Grace Hopper insisted was a dream of sales departments—never of programmers: “We still had to tell the compiler what to compile. It wasn’t automatic. The way the sales department saw it was we had the computer writing the programs; they thought it was automatic.”100 Then, as now, any kind of programming still requires logical thinking and attention to explicit expressions of procedures. The central importance of programming is unlikely to dwindle with the increasing sophistication of computer languages.

In fact, if the history of literacy is any model—and in this book I argue it is—then the development of more accessible programming languages, libraries, techniques, and technologies will increase rather than decrease pressures on this new form of literacy. For writing, more sophisticated and more widely distributed technologies seem to have put more rather than less pressure on individual literacy, ratcheting up the level of skill needed for one to be considered “literate.”101 Indeed, as computers have become more accessible and languages easier to learn and use, programming appears to be moving further away from the domain of specialists—contrary to Elias’s hope. As programming has become (at least in some ways) easier to master and as computational devices grow more common, it has become more important to the workplace and more integrated into everyday life—emulating and sometimes taking over the functions assigned to writing. Bombastically, Silicon Valley venture capitalist Marc Andreessen says that “software is eating the world”—taking over industries from hiring to shopping to driving.102 What Andreessen doesn’t mention, however, is that many of these industries were once organized through written communication.

The world wasn’t always organized by written communication either, of course. Writing became a substitute for certain functions of personal, face-to-face relationships during different historical eras. But despite the fact that contracts could stand in for personal agreements and written letters could stand in for oral conversations, people did not stop talking to each other. In other words, writing has never unseated orality altogether. As Walter Ong noted, “Writing does not take over immediately when it first comes in. It creates various kinds of interdependence and interaction between itself and underlying modalities.”103 In the same way, code and computation are not replacing writing. Instead, they have layered themselves over and under our infrastructure of writing and literacy. The databases that governments and employers now require programmers to maintain often make themselves visible to us in the form of written records: paystubs, immunization records, search results, Social Security cards. Much of our textual writing is done on computers, through software programs: word processing, e-mail, texting on smartphones. Code is writing, but it also underwrites writing. As we have become increasingly surrounded by computation, we have, in many ways, become even more embedded in writing.104

So, writing is still with us. But code is taking on many of the jobs we previously assigned to writing and documentation. To name a big (brother) example, the surveillance project of government, which could scale up with the help of writing and records, can scale up again. A U.S. National Security Agency data center that opened in 2013 in Utah can store perhaps a yottabyte of data such as phone numbers, e-mails, and contacts on its servers.105 We don’t actually know how the data are being processed, but we can assume it is a combination of computation and human reading: search strings, speech-to-text conversion, and human intuition and hypotheses. The fact that the stock market and banking are now relying heavily on computational processes sometimes leads to strange glitches like the flash crash of May 6, 2010. Amid high volatility and worries about the Greek economy, a firm executed a large trade with a computer-implemented trading algorithm, which used variable inputs to determine what to buy and sell. This kind of trade just months earlier would have taken 5 hours to execute, but the algorithm sped it up to just 20 minutes. The other algorithms of high-frequency trading firms then kicked in and traded huge volumes of stock back and forth rapidly, leading to a spiral in prices that sent the New York Stock Exchange plummeting in just minutes.106 Humans on the periphery of these algorithms took much longer to figure out what had happened to crash the market so quickly.

The use of code can allow us to accelerate aspects of surveillance, law, finance, information, and bureaucracy, but, perhaps less frighteningly, it can also facilitate greater accountability in governance. Applications designed to monitor spending and political donations can lead to more transparent governance, in the same way that text can serve not only to keep records on citizens but also as paper trails for government officials. For example, the Sunlight Foundation is committed “to improving access to government information by making it available online, indeed redefining ‘public’ information as meaning ‘online.’”107 They offer programs and apps that allow people to track state bills (Open States), view the many golf outings, breakfasts, and concerts that make up the political fundraising circuit (Party Time), and access the deleted Tweets of politicians (Politwoops).108 Local governments such as the City of Pittsburgh’s have implemented open data initiatives and hired analytics experts focused on making government and community information more accessible.109 The Western Pennsylvania Regional Data Center provides Pittsburghers easy access to maps of vehicle crashes, permit violations, and vacant lots available for adoption.110 As Michele Simmons and Jeff Grabill explain, openly accessible information can also allow people to monitor potential polluters in their neighborhoods. Critical to this monitoring is the accessibility of the information; an online database is more accessible than boxes of files tucked away in a municipal office. Simmons and Grabill insist that, to be accessible, these “democratized data,” even if digital and online, also need to be organized in a way that allows people to retrieve the data they want and understand its context—which involves attention to the design and programming of the database.111 Literally democratizing data was “MyBO,” Barack Obama’s social network website that helped him win the U.S. presidential election in 2008.112

In smaller ways, we have outsourced many of our personal tasks to computers that serve, process, and store writing. We get recommendations for where to eat from online Yelp databases where people write and share reviews. We make dinners by searching terms such as “chicken fennel roast” on sites like Allrecipes.com, where cooks upload recipes and review them—all in writing, of course. You can broadcast your inspiration for home remodeling projects in images and words on Pinterest, move money around bank accounts online, and keep track of your calendar with your phone, which is really a pocket computer. It is, of course, still possible to retrieve recipes from the dusty box in your kitchen or physically visit the bank or keep a written agenda. But many of those written or personal transactions still have computation lurking underneath: digital print layout, bank databases and records, and so forth.

Just as writing now circulates over a layer of computation, the circulation of computation has depended on an infrastructure of writing—in particular, print. Prior to the Internet, handbooks documented programming languages. In the 1970s and 1980s, when home computers were becoming popular, computer programs circulated through print magazines such as Compute or Dr. Dobb’s. Tracing the ways BASIC programs were swapped in the 1980s, Nick Montfort et al. explain that books and magazines printed short programs that allowed people to do fun things with their new home computers.113 Print infrastructure and a culture of sharing spread the knowledge of programming as well as a more general awareness of the existence and function of computation. Montfort et al. write, “The transmission of BASIC programs in print wasn’t a flawless, smooth system, but it did encourage engagement with code, an awareness of how code functioned, and a realization that code could be revised and reworked, the raw material of a programmer’s vision.”114 And underneath that structure of print was another familiar tool of storage and transmission: human memory. Many BASIC programs were simple enough for people to remember them and then recite them to demonstrate their cleverness to others, passing them on in the process.115

While writing substituted for certain functions of personal relationships during different historical eras, it did more than that: it facilitated new modes of interaction between individuals and between citizens and their governments. Authority ascribed to literary texts, letters written to family members far away (aiding in immigration116), and complex modes of surveillance were all not simply replacements for earlier oral interactions. No one knew at first what computers were meant for—they were universal machines, technologies upon which we could inscribe all sorts of things: educational value, home uses, play, and so forth. It wasn’t clear what aspects of our routines and lives they would replace or augment. Now we can see that they eclipse certain face-to-face interactions and forms of documentation. But, like writing, computation also creates new relations and new possibilities for communication and information. These new possibilities include everything from the “virtual migration” of technology employees in India who work for American companies117 to direct written contact that people have with celebrities through the medium of Twitter or to the extended network of friends and acquaintances that people can maintain through Facebook—which can jarringly connect one’s high school friends, officemates, and ex-boyfriends from around the world through comments on a photo of a roasted chicken and fennel dinner.118 Many of these new modes of communication haven’t replaced writing but have instead augmented the systems that convey writing, changing writing’s audience, access, storage, and distribution in the process.

As the contexts for communication are perpetually in flux, literacy is as well, which makes it very difficult to define. Literacy researchers generally agree that it is socially shaped and circulated, that it relies on and travels in material forms, and that the ways it is performed or taken up by individuals are ineluctably influenced by these social and material factors. But beyond those central principles are the dragons that always lurk in the spaces between abstractions and realities. Sylvia Scribner notes that people have been trying to pin down the concept of literacy for almost as long as the word has been in circulation.119 Like others before her, she could offer no fixed definition, but instead she proposed thinking of literacy in metaphors that frame some of the functions the concept of literacy serves in a society. These metaphors function similarly to Raymond Williams’s keywords in that they indicate what a society values enough to name literacy. Scribner’s metaphors point to the role literacy plays in helping individuals function in society, to the ways literacy is attached to power, and to ideas framing literacy as morally good. While these indices are all valid in the concept of literacy used in this book, more useful is her concept of the metaphor itself. Literacy is a lot of things. Its metaphors never tell the whole story, but by allowing us to take a particular angle into literacy, they show us glimpses of the ways communications happen in a society.120 So, in forwarding coding literacy, I don’t mean to suggest that we can’t think of literacy (or coding) in any other way. But I do want to provide a particularly fruitful way of considering how both literacy and programming are working now.

Given that any definition of literacy is one motivated by what it can illuminate, here’s the one I use in this book: Literacy is a widely held, socially useful and valued set of practices with infrastructural communication technologies. This definition takes into account the current consensus in literacy studies that literacy is a combination of individual communication skills, a material system, and the social situation in which it is inevitably embedded. It also assumes that literacy refers to something like mass literacy; that is, a skill can do all of the things literacy does, but if it’s not being practiced by a wider variety of people, I don’t think of it as literacy. Literacy according to this book has both functional and rhetorical components—what makes it both “socially useful and valued.” Literacy reflects real knowledge requirements (its functional component) as well as the perception of what kind of knowledge is required to get around in society (its rhetorical component). These components are entangled: what people perceive as literacy affects not only its social value but also its social utility. In other words, if a skill is believed to be more useful, it is more useful, in part because of the social cachet accorded to it.

A focus on an “infrastructural communication technology” is meant to draw attention to literacies connected to power and to differentiate them from other useful skills. Susan Leigh Star describes “infrastructure” as a comprehensive, societally embedded, structural standard that is largely transparent to insiders.121 She writes, “We see and name things differently under different infrastructural regimes. Technological developments move from either independent or dependent variables, to processes and relations braided in with thought and work.”122 When infrastructure shifts, the technologies and practices built on it shift as well. A concept of infrastructure thus allows us to focus on the central processes of daily life. To become more widely held and useful and valued, the practices of writing and programming both had to be done with technologies that were infrastructural and thus suited for more people to use: not too expensive, unwieldy, or prone to break down. Imagine mass textual literacy with stone tablets or mass programming literacy with 1950s mainframes. No, we needed a technology more capable of becoming infrastructure on which to circulate and practice writing and programming. Writing and programming both look quite different depending on the technologies they’re enacted in—quills, Fortran, laptops. This book tries to trace the practices that stay consistent across these technologies. But note that when these specific technologies become widely available and easy to use, they can facilitate more widespread literacy.

Finally, I often use the word literacy rather than literacies. In each society, literacy looks different, and most societies have some concept of literacy.123 Moreover, literacy practices depend on technologies that are constantly changing and evolving. Desktop image-editing software puts new emphasis on visual design literacy, online social media platforms generate combined textual/social/visual literacies, and digital audio-mixing programs give new life and possibilities to sound literacies. Thus, current practice in literacy research is to talk about literacies rather than a monolithic literacy, which often presumes a static, elite literacy to be the norm. As these so-called new literacies multiply, it has become increasingly difficult to sort out which literacies matter most, and how any of them are related to the paradigmatic core of literacy: reading and writing human symbols. The zeal to name many socially contingent literacies as equally excellent or important has dampened the power dynamics embedded in different technologies. As Anne Wysocki and Johndan Johnson-Eilola have argued, when we call everything a literacy, we empty the term of its explanatory power.124 While attention to each of these new literacies may be necessary, we need a way to understand the intertwined phenomena of literacies and technologies more comprehensively. Therefore, I will sometimes refer to a singular literacy here—not to deny the cultural, material, and historical diversity of literacies, but to focus attention on the skills and technologies (such as writing and programming) that are fundamental to the circulation and communication of information in any society. Earlier I referred to both programming and writing as platform literacies, and that’s what I mean: together they provide the foundation on which the ecology of contemporary communication is built.

Lots of practices rely on human skills with technologies, and many of them help us to do and think new things. While some would call these literacies, Andrea diSessa stops short and calls them “material intelligences,” or “intelligence[s] achieved cooperatively with external materials.”125 According to diSessa, several things need to happen for a material intelligence to graduate into a literacy. Its material component or technology must first become infrastructural to a society’s communication practices; in other words, the stuff to practice it must be widely available and reasonably easy to use.126 The possibilities of composition with that representational form must then become widespread and diverse.127 And finally, so many people must become versed in this ability as to make the ability itself infrastructural. That is, society can begin to assume that most people have this ability and proceed to build institutions on that assumption. Today in the United States, for instance, advertisements, education, governance, grocery stores, and even yard sale and lost cat signs assume that most people will be able to read them. This makes literacy social in a way that material intelligences are not: individuals can benefit from material intelligences, but a literacy gains its value in circulation.128

According to diSessa, then, programming is a material intelligence but not yet a literacy. However, the fact that computers and software are already part of our societal infrastructure and everyday lives means that programming could become a literacy. Optimistically, diSessa believes it will, and that “A computational literacy will allow civilization to think and do things that will be new to us in the same way that the modern literate society would be almost incomprehensible to preliterate cultures.”129 Toward this goal, diSessa outlined an educational project by which this literacy might be achieved. My objective is different: whereas he concretized the premise of programming as literacy, I use the premise to step back and provide a historical and theoretical panorama of literacy and programming. I wonder: what would this computational or coding literacy look like? And I dig into what we know about textual literacy in order to answer that question.