Provenance

Like the Brothers Grimm, who compiled fairy tales from interviews with grandmothers, or Dushko Petrovich and Roger White, who collected accounts of memorable art assignments from artists and teachers alike, we have assembled favorite computational art and design prompts from friends, colleagues, mentors, and students, documenting the strategies and pedagogies of a community teaching creative visual production through code. Petrovich and White remind us that legendary assignments can stay with you for life, often resurfacing when one reenters the classroom as a teacher: “Most artists, when they begin to teach, will pass along—consciously or not—assignments they themselves were once given.” 1 Assignments are fodder for adaptation and are continually shared, forked, recontextualized, subverted, and renewed, a process that often makes their authorship plural and ambiguous.

Public records documenting art and design pedagogy are scarce and poorly maintained, especially in contrast to notable works of art and design, which are discussed in critical texts and collected by museums. In their extraordinary compilation of graphic design assignments, Taking a Line for a Walk: Assignments in Design Education, Nina Paim, Emilia Bergmark, and Corinne Gisel lament that, in design education, “the layer of language that runs alongside this process is often neglected. Words fly out of a teacher's or a student's mouth and quickly disappear into thin air. Instructions and specifications, corrections and questions, fuse with practical work. And assignments, if written down at all, are rarely considered something worth saving.” 2 In the realm of computational media arts and design, it has been more common for classroom syllabi and curricula to appear online; these materials, however, are subject to the uniquely digital vagaries of data preservation: link rot and bit rot. Web servers for old courses are rarely considered something worth maintaining; the “walled gardens” of many courseware systems restrict public access; and computer arts courses prior to 1994 precede the World Wide Web altogether, eluding search engines. The Internet is astonishingly fragile, and even within the few years we have spent writing this book, links to many noteworthy resources have gone dark, and projects have been quietly retired from creator portfolios—a process that is producing an undeniable amnesia throughout the field.

In this section, we lay out the sources from which we encountered or developed the assignments in this collection. We cannot and do not claim that the information here is definitive. To trace the origins, history, and provenance of these assignments would require extensive oral history research, and remains a worthy challenge for art and education historians of the future. In addition to the gaps in our knowledge due to the absence or loss of documentation, we also acknowledge that the information below contains oversights and blind spots owing to our lack of familiarity with non-English-speaking art and technology educational communities, and that our perspective is not representative of the rest of the world.

While many of the assignments in this book have been adapted from the syllabi of our teachers and peers, a few were primarily inspired by projects that we admire. In discussing the provenance of the assignments in their book, Paim, Bergmark, and Gisel call this process of back-formation “reconstruction,” and we choose similar vocabulary here. In particular, our assignments Personal Prosthetic, Parametric Object, and Virtual Public Sculpture were inspired by the works that illustrate their respective modules, and are not otherwise discussed below.

In other cases, our assignments rely on technologies like speech recognition, 3D printing, augmented reality, or machine learning that have only recently become widely accessible. As educators and practitioners have only had a relatively brief time to experiment with these tools, methods for how to teach the creative use of these technologies within art and design contexts are still (quickly) emerging. In such cases, we have taken the liberty to devise the assignment briefs ourselves. This is the case for assignments including Voice Machine, Bot, Personal Prosthetic, Parametric Object, and Virtual Public Sculpture.

Finally, some of the educational approaches documented here spread from the community of John Maeda's Aesthetics + Computation Group at the MIT Media Laboratory, where one of the authors of this book (Golan Levin) was a graduate student alongside Casey Reas and Ben Fry (who co-founded the Processing initiative). With this context in mind, it is important to note that some of the assignments presented in this book have been drawn directly from personal experience and firsthand accounts from our peers, and some have been plucked from a buzzing zeitgeist—distilled from the research, educational materials, and social media posts of a highly interconnected community of artists, teachers, and students.

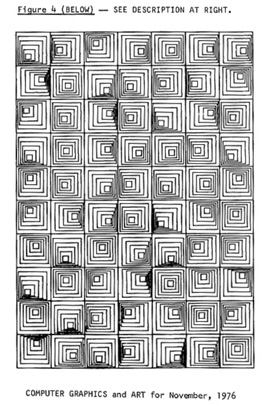

The Iterative Pattern exercise extends from the earliest practices in plotter-based computer art—the first university courses for which arose in the early-to-mid 1970s. Writing in 1977, Grace C. Hertlein, a professor of computer science at California State University at Chico, details a list of notable “computer art systems” actively used in higher education at the time: “in Jerusalem, by Vladimir Bonacic; Reiner Schneeberger (University of Munich); Jean Bevis (Georgia State University); Grace C. Hertlein (California State University system); John Skelton (University of Denver); Katherine Nash and Richard Williams (University of Minnesota at Minneapolis).” 3

An example of work by one of Reiner Schneeberger's students, Robert Stoiber, is shown below, a grid of nested squares.4 In this picture, in which “the middle point of each square was obtained by chance,” it is clear that students were directed to explore iterative loops and randomness. In a 1976 article, Schneeberger describes the context in which this work was produced, a summer course taught in collaboration with Professor Hans Daucher of the Department of Art: “This is a report of the first computer graphics course for students of art at the University of Munich. […] A further objective to be realized was for every student to be able to generate aesthetically appealing computer graphics after only the first lecture period.” Schneeberger mentions that the art students experienced greater than normal hardship, as all of the computer programming work had to be performed “locally at the Computer Center, some ten kilometers distant from the instructional site.” 5
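The combination of iterative loops and randomness described above can be sketched in a few lines. The following Python sketch is a hypothetical illustration, not a reconstruction of Schneeberger's or Stoiber's program: the function name `nested_square_grid`, the grid dimensions, the cell size, and the amount of random displacement are all assumptions chosen for the example. It computes only the geometry of the squares and leaves the drawing to whatever plotting tool is at hand.

```python
import random

def nested_square_grid(cols=4, rows=4, cell=100.0, levels=5, jitter=6.0, seed=1):
    """Return a list of (center_x, center_y, side) squares.

    Hypothetical illustration: each grid cell holds `levels` nested squares,
    and the center of every square is displaced by a small random offset.
    """
    rng = random.Random(seed)  # seeded so the pattern is reproducible
    squares = []
    for row in range(rows):
        for col in range(cols):
            # Nominal center of this grid cell.
            cx = (col + 0.5) * cell
            cy = (row + 0.5) * cell
            for level in range(levels):
                # Sides shrink linearly from 90% of the cell width.
                side = 0.9 * cell * (levels - level) / levels
                # The middle point of each square is chosen by chance.
                x = cx + rng.uniform(-jitter, jitter)
                y = cy + rng.uniform(-jitter, jitter)
                squares.append((x, y, side))
    return squares

squares = nested_square_grid()
print(len(squares))  # 4 * 4 * 5 = 80
```

Changing `jitter` or `levels` is one way a student might explore the range between a rigid grid and a visibly disordered one.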

Iterative patternmaking is now a standard exercise in texts on creative coding, especially where iteration techniques are introduced. Examples include Casey Reas and Chandler McWilliams's Form+Code in Design, Art, and Architecture (2010)6 and Hartmut Bohnacker et al.'s Generative Design: Visualize, Program, and Create with Processing (2012).7

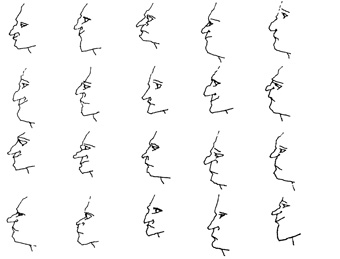

In his book Design as Art (1966), Bruno Munari presents the results of a challenge he gave himself: how many different ways could he draw the human face?8 Mark Wilson later transposed this assignment to the realm of computer arts education in Drawing with Computers (1985). Wilson describes a hypothetical face-generation software program called METAFACE, analogous to Donald Knuth's METAFONT (1977), that his reader is encouraged to develop:

The human face could be schematically rendered with a sparse set of lines and circles similar to the minimal description of the alphabet. It would be possible to write a program—let's call it METAFACE—that would emulate some of the extraordinary variations of the face. The parameters for the various visual descriptions of the face would be given to the program: the size of the eyes, the location of the eyes, and so forth. Depending on the ambitiousness of the programmer, the program could become exceedingly complex.9

Wilson also illustrated the variety of faces such a program might produce:

Lorenzo Bravi, an educator at the design department of IUAV of Venice and later at the ISIA of Urbino, gave an influential generative face assignment called “Parametric Mask” in 2010. Computational face designs by Bravi's students were used to create Bla Bla Bla, a sound-reactive application for iPhone and iPad.10 In a 2011 brief, Casey Reas, acknowledging both Munari and Bravi, invited his students at UCLA to make microphone-reactive faces.11 Face generator exercises have since become commonplace in introductory creative technology courses; dozens of examples can be found at OpenProcessing.org, written by educators such as Julia Pierre, Steffen Klaue, Rich Pell, Isaac Muro, and Anna Mª del Corral.

“The Clock” is an evergreen creative coding assignment, and was the original inspiration for this book. An August 2019 survey of 847 classrooms on OpenProcessing.org revealed scores of clock projects—assigned by a bevy of international educators including Amy Cartwright, Sheng-Fen Nik Chien, Tomi Dufva, Scott Fitzgerald, June-Hao Hou, Cedric Kiefer, Michael Kontopoulos, Brian Lucid, Monica Monin, Matti Niinimäki, Ben Norskov, Paolo Pedercini, Rich Pell, Julia Pierre, Rusty Robison, Lynn Tomaszewski, Andreas Wanner, Mitsuya Watanabe, and Michael Zöllner. The particular text of the clock assignment presented in this volume is most closely adapted from Golan's version, “Abstract Clock: A diurnally-cyclic dynamic display,” which he assigned in his Fall 2004 Interactive Image course at Carnegie Mellon.12

The graphic representation of time has long figured into both analog and digital design education. An assignment to devise a graphic system that displays and contrasts the rhythms of sixteen different calendars (including Aztec, Chinese, Gregorian, etc.) was given at the Yale School of Art in 1982 by Greer Allen, Alvin Eisenman, and Jane Greenfield.13 The first computational clock assignment that we know of was given by John Maeda in his Fall 1999 Organic Form course at the MIT Media Lab. This course, whose students included Golan as well as future computational media educators like Elise Co, Ben Fry, Aisling Kelliher, Axel Kilian, Casey Reas, and Tom White, examined “the nature of symbolic descriptions that are creatively coerced into representations that react to both internal changes in state and external changes in environment.” In his clock assignment, shown below, Maeda asked students to use the DBN programming environment to “create a display of time that does not necessarily depict the exact progress of time, but rather the abstract concept of time.” 14

Maeda's assignment extended from his own artistic exploration of time displays in his 1996 12 O’Clocks project. Introducing a simplified clock project in his 1999 book, Design by Numbers, Maeda writes that “time is the most relevant subject to depict by means of a dynamically changing form. […] Given the ability to computationally observe the progress of time, a form that can reflect the time is easily constructed.” 15

The computational clock assignment spread quickly to other universities in the early 2000s. The Computer-Related Design graduate course at Royal College of Art, London, was an early such center for creative coding education.16 Citing Maeda's clocks as an inspiration, RCA instructors Rory Hamilton and Dominic Robson asked graduate students to design a “timepiece” in an interaction design course in February 2002. Hamilton and Robson's conceptually oriented “pressure project” is agnostic as to medium:

This simple brief is to look at the nature of clocks and other time measuring devices. How do we use them? Why do we use them? What is their meaning? Restyling of clocks are numerous: but we ask you not to restyle but to rethink. Not to reskin existing clocks but to come up with a completely new way of looking at time. Your design should be beautiful, engaging, and work. Whatever medium you choose we should all be able to understand and use your system.17

A 2005 article by a group of Georgia Tech faculty highlights the clock as a key assignment in Computing as an Expressive Medium, a core graduate level course taught by Michael Mateas. For Mateas, who asks students to “display the progress of time in a non-traditional way,” the clock is not an exercise in utilitarian design, but an “expressive project” whose “goal is to start students thinking about the procedural generation of imagery as well as responsiveness to input, in this case both the system clock, and potentially, mouse input.” 18

In his Fall 2008 Comparative Media Studies workshop at MIT, Nick Montfort asked students to develop clocks (“a computer program that visually indicates the current hour, minute, and second”).19 Casey Reas introduced the clock assignment to UCLA in Spring 2011. Reas's version leaves open the question of whether the students’ clock should be literal or abstract, and instead places a primary emphasis on an iterative design process of sketching and ideation. Asking students to “create a ‘time visualization,’ aka a clock,” Reas requires students to bring “at least five different ideas, each with six drawings to show how the clock changes in time.” 20

In September 2017, NYU ITP educator Dan Shiffman canonized the clock assignment for a wide audience on his popular Coding Train video channel on YouTube. Citing Maeda's 12 O’Clocks and Golan Levin's Fall 2016 course materials, Shiffman's “Coding Challenge #74: Clock with p5.js” video has accumulated (as of May 2020) more than 350,000 views.21

As a 2006 survey by George Kelly and Hugh McCabe shows, the challenge of procedurally generating landscapes and terrains has been a fixture in game design and computer graphics literature since the mid-1980s.22 Whereas most early computer graphics research was concerned with achieving realism, the introduction of software development environments into art schools in the early 2000s created a context in which the problem could be imaginatively addressed by students steeped in the traditions of conceptual art, performance, film, and art history. The language of the Generative Landscape assignment presented here (populated with “body parts, hairs, seaweed, space junk, [or] zombies”) is adapted from a prompt given by Golan in his Fall 2005 Interactive Image course.23

Nick Montfort's Comparative Media Studies workshop in 2008 also featured a “Generated Landscape” assignment. Navigability is a key requirement of Montfort's assignment, which stipulates that a user be able to “move around a large virtual space, seeing one window of this space at a time.” 24 This assignment is included and discussed in Montfort's 2016 book, Exploratory Programming for the Arts and Humanities, which provides sample code for readers to “create a virtual, navigable space.” 25 A more tightly constrained “Noisy Landscapes” assignment also appears in Bohnacker et al., Generative Design.26

John Maeda asked students to create a virtual creature in his Fall 1999 course, Fundamentals of Computational Media Design (MAS.110) at MIT. “Inspired by a diagram of a cell in The Biology Coloring Book, I asked my class to re-interpret the canonical drawing of an amoeba in a medium of their choice.” 27 Influenced by this prompt, Golan initiated the Singlecell.org project in January 2001, inviting creators like Lia, Marius Watz, Casey Reas, and Martin Wattenberg to create interactive creatures for an “online bestiary of online life-forms reared by a diverse group of computational artists and designers.” 28 An even more open-ended virtual creature assignment was presented by Lukas Vojir's influential Processing Monsters project in 2008, which collected Processing-based code sketches from scores of contributors around the world:29

I'm trying to get as much people as possible, to create simple b/w [black and white] monster in Processing, […] while the bottom line is to encourage other people to learn Processing by showing the source code. So if you feel like you can make one too and be part of it, the rules are simple: Strictly black and white + mouse reactive.30

“Creature” assignments in courses taught by Chandler McWilliams and John Houck at UCLA between 2007 and 2009 made explicit mention of Vojir's Processing Monsters, as well as animistic simulation models like Valentino Braitenberg's vehicles. The UCLA assignments emphasized the use of Java classes and object-oriented programming to develop virtual creatures with parameterized appearances and behaviors.31 More recently, OpenProcessing.org has come to host creature assignments by educators including Tifanie Bouchara, Margaretha Haughwout, Caroline Kassimo-Zahnd, Cedric Kiefer, Rose Marshack, Matt Richard, Matt Robinett, and Kevin Siwoff. Some of these assignments invite students to use flocking behaviors explained by Craig Reynolds in his influential 1999 “Steering Behaviors For Autonomous Characters” 32 and popularized in Dan Shiffman's Nature of Code book and associated videos.33

The concept of custom picture elements in computer arts extends from experiments in the 1960s by Leon Harmon and Ken Knowlton at Bell Labs; Danny Rozin's renowned Wooden Mirror (1999) and other interactive mirror sculptures; and photomosaic work by Joseph Francis and Rob Silvers, among others. The ability of Processing and other creative coding toolkits to provide straightforward access to image pixel data means that a “custom pixel” assignment can be a productive way to support instruction in introductory image processing. Exercises of this sort appear in Casey Reas and Ben Fry's Processing: A Programming Handbook for Visual Designers and Artists (2007);34 in Ira Greenberg's Processing: Creative Coding and Computational Art (2007);35 in Dan Shiffman's Learning Processing (2008);36 in Andrew Glassner's Processing for Visual Artists: How to Create Expressive Images and Interactive Art (2010);37 in Reas and McWilliams's Form+Code (2010);38 and in Bohnacker et al.'s Generative Design (2012).39

The assignment as presented here derives from Golan's Fall 2004 Interactive Image course. He asked students to “create a ‘custom picture element’ with which to render your image. At no time should the original image be seen directly.” 40

Our Drawing Machine assignment extends from a tradition of experimental interactive paint programs developed by artists during the late 1980s and 1990s, such as Paul Haeberli's DynaDraw, Scott Snibbe's Motion Sketch and Bubble Harp, Toshio Iwai's Music Insects, and John Maeda's conceptually oriented drawing tools, Radial Paint, Time Paint, and A-Paint (or Alive-Paint).41 By 1999, Maeda had formalized this type of inquiry as an educational assignment. In his book Design by Numbers, he presents an exercise involving the construction of an ultra-minimal paint program: a loop that continually sets a canvas pixel to black, at the location of the cursor. In a section entitled “Special Brushes,” Maeda writes: “Perhaps the most entertaining exercise in studying digital paint is the process of designing your own special paintbrush. There really is no limit to the kind of brush you can create, ranging from the most straightforward to the completely nonsensical. How you approach this creative endeavor is up to you.” 42 Maeda offers some potential responses to this assignment, including a pen whose ink changes color over time; a calligraphy brush with a diagonal tip; and a vector drawing tool for polylines. Exemplary student responses to this prompt were published by JT Nimoy in 2001 (then an undergraduate intern in Maeda's group at MIT) as a collection of twenty interactive “Scribble Variations,” 43 and by Zach Lieberman in his 2002 master's thesis at Parsons School of Design, “Gesture Machines.” Lieberman's interactive thesis projects were published at the now-defunct Remedi Project online gallery44 and were revived for a lecture presentation recorded at the 2015 Eyeo Festival.45
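The ultra-minimal paint program and one of the “special brush” variations mentioned above can be sketched as follows. This is a hedged illustration in Python rather than DBN, and it is not Maeda's code: the canvas is a plain list of lists, the cursor is replaced by a recorded list of positions, the 0 to 100 gray scale (with 100 as black) is an assumption, and the names `make_canvas`, `paint`, and `paint_with_changing_ink` are invented for the example.

```python
WHITE, BLACK = 0, 100  # assumed gray scale: 0 is white, 100 is black

def make_canvas(width, height):
    """Create a blank (white) canvas as rows of gray values."""
    return [[WHITE] * width for _ in range(height)]

def paint(canvas, cursor_path):
    """Minimal paint program: blacken the pixel under each cursor sample."""
    for x, y in cursor_path:
        if 0 <= y < len(canvas) and 0 <= x < len(canvas[0]):
            canvas[y][x] = BLACK
    return canvas

def paint_with_changing_ink(canvas, cursor_path, step=20):
    """A 'special brush': the ink value cycles as the stroke progresses."""
    for i, (x, y) in enumerate(cursor_path):
        if 0 <= y < len(canvas) and 0 <= x < len(canvas[0]):
            # Ink runs 100, 80, 60, 40, 20 and then repeats; it never reaches white.
            canvas[y][x] = BLACK - (i * step) % BLACK
    return canvas

# A recorded stroke stands in for live cursor input; (9, 9) is off-canvas and skipped.
stroke = [(1, 1), (2, 1), (3, 2), (9, 9)]
canvas = paint(make_canvas(5, 4), stroke)
faded = paint_with_changing_ink(make_canvas(5, 4), stroke)
```

In an interactive environment the loop over `cursor_path` would be replaced by a per-frame read of the current mouse position, which is the only structural difference from the exercise as described.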

Variants of the drawing tool assignment proliferated in the early 2000s. In a Fall 2003 course at UCLA, Casey Reas asked students to develop a “mouse-based drawing machine.” 46 In a Spring 2004 course, he asked students to

Have a concrete idea about the type of images your machine will construct and be prepared to explain this idea during the critique. Build your project to have a large range in the quality of drawings generated and to have a large range of formal contrasts. It's very difficult to program representational images, so it's advised to focus on constructing abstract drawings. Do not use any random values, but instead rely on other sources of data […] as the generators of form and motion.47

Golan's 2000 master's thesis, “Painterly Interfaces for Audiovisual Performance,” developed under John Maeda's supervision at MIT, presented a collection of five interactive software programs for the gestural creation and performance of dynamic imagery and sound. Based on insights from this work, Golan assigned a “custom drawing program” in his Fall 2004 course at CMU, Introduction to Interactive Graphics.48 In Spring 2005, he refined this drawing program to one in which “the user's drawings come to life” by making “a drawing program which augments the user's gesture in an engaging manner.” 49

In a 2005 article, Michael Mateas describes a “drawing tool” assignment given in his graduate-level course at Georgia Tech, Computing as an Expressive Medium. Mateas asks students to

Create your own drawing tool, emphasizing algorithmic generation / modification / manipulation. […] The goal of this project is to explore the notion of a tool. Tools are not neutral, but rather bear the marks of the historical process of their creation, literally encoding the biases, dreams, and political realities of its creators, offering affordances for some interactions while making other interactions difficult or impossible to perform or even conceive.50

In his Winter 2007 and Spring 2008 Interactivity courses at UCLA, Chandler McWilliams gave assignments that explored the nuanced differences between a mouse-based “drawing machine” and a “drawing tool.” 51 Since that time, a panoply of drawing tool assignments have been published online at OpenProcessing.org, in online classrooms taught by Antonio Belluscio, Tifanie Bouchara, Tomi Dufva, Briag Dupont, Rachel Florman, Erik Harloff, Cedric Kiefer, Steffen Klaue, Andreas Koller, Brian Lucid, Yasushi Noguchi, Paolo Pedercini, Julia Pierre, Ben Schulz, Devon Scott-Tunkin, and Bridget Sitkoff, among others. The assignment has also featured in books on introductory creative coding, such as Bohnacker et al.'s Generative Design (2012).52

The design principles of parameterization and modularity are foundational considerations in traditional typography education, quite apart from the use of code and digital techniques. A 1982 pencil-and-paper assignment by Hans-Rudolf Lutz at Ohio State University, for example, asked students to render an alphabet with five stages of transitions between consecutive letters.53 An assignment by Laura Meseguer, given in 2013 at the Escuela Universitaria de Diseño e Ingeniería de Barcelona, asked students to design a modular typeface from a small set of hand-drawn graphical elements. According to Meseguer, “working with this modular method will help you to understand the architecture of letterforms and modularity, coherence, and harmony inherent to type design.” 54

As a creative coding assignment, our Modular Alphabet project descends most directly from prompts and student work developed in John Maeda's 1997 Digital Typography course at the MIT Media Lab, which focused on the “algorithmic manipulation of type as word, symbol, and form.” 55 Maeda assigned “Pliant Type” and “Unstable Type” projects that asked students (working in Java) to “design a vector-based typeface that transforms well” and to “design a parameterized typeface with inherently unstable properties.” 56 Peter Cho's project Type Me, Type Me Not emerged from Maeda's prompts, and established the template for the assignment as reconstructed here.57 Cho's writeup for the course gallery page explains that “at some point in the class I became fixated with the idea of constructing letters from only circular pie pieces, using the fillArc method of the Java graphics class. […] Since each letter is devised from two filled arcs, it is easy to make transitions between letters in a smooth way.” 58 The text of the assignment in the present volume is adapted from Golan's “Intermorphable Alphabet: A custom graphic alphabet,” which he assigned in his 2004 Interactive Image course.59
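Cho's observation, that letters built from the same small set of parameters can be blended smoothly, can be illustrated with a short sketch. The Python below is an assumption-laden illustration and not Cho's Java code: each glyph is reduced to two arcs described only by a start angle and a sweep angle, the glyph data for "C" and "D" is invented for the example, and the names `GLYPHS`, `lerp`, and `morph` are hypothetical.

```python
from typing import Tuple

Arc = Tuple[float, float]   # (start angle, sweep angle), in degrees
Glyph = Tuple[Arc, Arc]     # every letter is exactly two filled arcs

# Hypothetical glyph data, invented for this example; not Cho's letterforms.
GLYPHS = {
    "C": ((45.0, 270.0), (0.0, 0.0)),
    "D": ((270.0, 180.0), (90.0, 180.0)),
}

def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b."""
    return a + (b - a) * t

def morph(src: Glyph, dst: Glyph, t: float) -> Glyph:
    """Blend two glyphs parameter by parameter; t=0 gives src, t=1 gives dst."""
    return tuple(
        (lerp(s_start, d_start, t), lerp(s_sweep, d_sweep, t))
        for (s_start, s_sweep), (d_start, d_sweep) in zip(src, dst)
    )

halfway = morph(GLYPHS["C"], GLYPHS["D"], 0.5)
print(halfway)  # ((157.5, 225.0), (45.0, 90.0))
```

Because every letter shares the same parameter layout, any pair of letters can be interpolated without special cases; each blended arc would then be passed to an arc-filling routine for display.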

Computational “parametric type” assignments, extending from the logic of Knuth's METAFONT (1977), focus on the use of parameters to control continuous properties of a typeface. An assignment that illustrates this is “Varying the Font Outline (P 3.2.2)” in Generative Design,60 in which the reader is prompted to explore how a code variable may govern a property such as the typeface's overall slant, or something more unconventional, like wiggliness.
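As a minimal sketch of how a single code variable might govern a typeface's slant, the Python below applies a horizontal shear to a list of outline points. It is not taken from Generative Design or METAFONT: the function name `slant`, the rectangular "stem" outline, and the coordinate convention (baseline at y = 0, with y increasing upward) are assumptions made for illustration.

```python
import math

def slant(outline, angle_degrees):
    """Shear outline points horizontally so vertical stems lean by the given angle.

    Assumes the baseline sits at y = 0 and y increases upward, so points on
    the baseline stay fixed while higher points shift further to the right.
    """
    k = math.tan(math.radians(angle_degrees))
    return [(x + k * y, y) for x, y in outline]

# A hypothetical vertical stem, 10 units wide and 100 units tall.
stem = [(0.0, 0.0), (10.0, 0.0), (10.0, 100.0), (0.0, 100.0)]
italic = slant(stem, 12.0)
```

A more unconventional property, such as wiggliness, could be handled the same way by replacing the shear with a displacement that depends on a different parameter.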

During the first decade of the 2000s, Judith Donath and her graduate students in the MIT Media Lab's Sociable Media Group created “data portraits,” formulating principles for producing and understanding media objects that depict their subjects’ accumulated data rather than their faces. Donath argued that “calling these representations ‘portraits’ rather than ‘visualizations’ shifts the way we think about them.” 61 Concurrently, Nick Felton's diaristic, assignment-like “Annual Report” information visualizations, which he published from 2005 through 2014 and which are now part of MoMA's permanent collection, were highly influential in establishing a precedent for computationally designed, data-driven self-portraiture.

By the early 2010s, the use of personal fitness trackers and smartphone selfies had become widespread, pushing an evolution in how data self-portraiture was both implemented and described. The wording of the assignment in this book is adapted from an exercise in Golan's Spring 2014 Interactive Art & Computational Design course at CMU, which asked students to “develop a visualization which offers insights into some data you care about. This project will probably take the form of a ‘Quantified Selfie’: a computational self-portrait developed from any (one or more) of the data-streams you produce.” 62

Our assignment is inspired by installation works by artists including Michael Naimark, Krzysztof Wodiczko, Christopher Baker, Andreas Gysin + Sidi Vanetti, HeHe (Helen Evans and Heiko Hansen), Jillian Mayer, Joreg Djerzinski, and Pablo Valbuena. Valbuena's computationally generative Augmented Sculpture artwork, presented at the 2007 Ars Electronica Festival and widely viewed online,63 was particularly influential to creative technologists, and spurred the development of commercial projection mapping software like MadMapper and Millumin. With the help of these tools, projection mapping has become a staple in progressive theater scenography, and is taught in many graduate programs in video and media design.

Golan gave a version of this assignment (“a poetic gesture projected on a wall”) in Fall 2013. Students were asked to write code in Processing, using the Box2D physics library, in order to generate real-time animated graphics that related both visually and conceptually to wall features like power outlets and doorknobs.64

The first public competition to create “a strictly one-button game”—that we know of—was held in April 2005 by Retro Remakes, an online community of independent game developers.65 Soon after, in a Gamasutra article that would be widely cited in subsequent game design syllabi, Berbank Green discussed in-depth design issues in the low-level mechanics of one-button games.66

In December of 2009, the experimental game design collective Kokoromi announced the GAMMA IV competition for one-button games, whose winners were presented to a large audience at the March 2010 Game Developers Conference. Responding to an efflorescence of “high-tech” game controllers that had just been released to the market, with interfaces like “gestural controls, multi-touch surfaces, musical instruments, voice recognition—even brain control,” Kokoromi proposed “that game developers can still find beauty in absolute simplicity.” 67 In August of 2009, the wildly popular one-button game Canabalt was released online.68 For his Winter 2009 Interactivity course at UCLA, Chandler McWilliams introduced the one-button game as a classroom exercise:

Develop a simple one-button game, meaning the interface is a single button. Focus your ideas on expressing a theme while making an interesting experience. Do not be overly concerned with how the game behaves technically, great games can be made very simply. Instead consider how common video and board games operate and what features of these artifacts you can reinterpret in interesting ways. The game need not involve scores or levels; it can be a playful experience. Remember that conceptual and visual development is as important as technical achievement.69

Paolo Pedercini also mentions one-button games (and “no-button games”) in a CMU Experimental Game Design syllabus from Fall 2010.70

One-button games are now a popular and well-established genre. As of August 2019, the indie game distribution website Itch.io, for example, reported hosting over 2800 unique one-button games.

Our assignment is influenced by 1990s telematic media artworks, such as Paul Sermon's Telematic Dreaming (1992), Scott Snibbe's Motion Phone (1995), and Rafael Lozano-Hemmer's The Trace (1995). This assignment also has roots in the “Shared Paper” exercise that John Maeda discusses in Design by Numbers (1999).71 Maeda describes a publicly accessible web server that stores 1000 numbers, whose values could be read and modified by user code. Building on this ultra-simple platform, Maeda presents a “Collaborative Drawing” project, in which mouse coordinates are shared from one person to another,72 and “Primitive Chat,” in which a single letter is sent at a time.73

Maeda elaborates on this assignment in Creative Code (2004):

The standard final assignment I used to set my class [in 1997] was to transmute a stream of data communication. The communication stream in question was the server, which used to pass messages from any connected client to all connected clients simultaneously in the way that Internet-based chat systems work. I stopped setting this assignment because of the technical difficulties of keeping the server running reliably.74
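The shared store of 1000 numbers that Maeda describes in Design by Numbers is simple enough to sketch in memory. The Python below is a hypothetical stand-in and not Maeda's server or DBN's networking commands: the class name `SharedPaper`, its `read` and `write` methods, and the choice of which slots hold mouse coordinates and chat letters are all assumptions made for illustration, and no real network is involved.

```python
class SharedPaper:
    """In-memory stand-in for a server that stores 1000 numbers."""

    SIZE = 1000

    def __init__(self):
        self._values = [0] * self.SIZE

    def _check(self, index):
        if not 0 <= index < self.SIZE:
            raise IndexError("slot must be between 0 and 999")

    def read(self, index):
        self._check(index)
        return self._values[index]

    def write(self, index, value):
        self._check(index)
        self._values[index] = value

# "Collaborative Drawing": one user publishes mouse coordinates, another reads them.
def publish_mouse(server, x, y, slot=0):
    server.write(slot, x)
    server.write(slot + 1, y)

def fetch_mouse(server, slot=0):
    return server.read(slot), server.read(slot + 1)

# "Primitive Chat": a single letter is sent at a time, stored as its character code.
def send_letter(server, letter, slot=2):
    server.write(slot, ord(letter))

def receive_letter(server, slot=2):
    return chr(server.read(slot))

server = SharedPaper()
publish_mouse(server, 40, 75)
send_letter(server, "h")
```

Because every client reads and writes the same numbered slots, any agreement about what a slot means (a coordinate, a letter, a color) is a convention between participants rather than a feature of the store itself.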

A precursor to the Browser Extension assignment was Alex Galloway's Carnivore project (2000–2001), in which he invited 15 artists to contribute custom software clients that visualized network traffic captured by a packet sniffer. Each “Carnivore Client” provided a different lens on internet communication. In 2008–2009, Google and Mozilla introduced add-on ecosystems for their Chrome and Firefox web browsers, kicking off a wave of shareable experimentation. The creation of commercial browser extensions as a mode of art practice arose at that time, boosted by the popular success of Steve Lambert's Add Art, a browser plugin that automatically replaces online advertisements with artworks. Lambert's project informs our assignment, and we took additional inspiration from artworks by Allison Burtch, Julian Oliver, Lauren McCarthy, and others.

The 2013 Snowden revelations catalyzed artistic engagement with issues surrounding digital privacy, security, surveillance, anonymity, and cryptographic technologies.75 Keeping in mind certain cultural practices and artworks that problematized these themes, Tega developed this prompt for Social Software, a 2015 class at Purchase College. It draws on research and projects by practitioners like Addie Wagenknecht, Julian Oliver, Adam Harvey, David Huerta, and many others.

A variety of mathematics educators have also written about the pedagogic value of cryptography for engaging students from non-technical backgrounds, including Brian Winkel, Neal Koblitz, Manmohan Kaur, and Lorelei Koss.76 These authors discuss cryptography in the context of both mathematics education and general education. Although they aim to cultivate mathematical competencies rather than designerly or artistic skill sets, they each observe that the study of cryptography brings together the political, social, and technical dimensions of math and computation.

Speech-based human-computer interaction in real time became a practical reality for creative experimentation in the late 2010s, as we were writing this book. In 2017, Google's Creative Lab division, seeking to encourage developers to adopt their Google Home platform and Dialogflow speech-to-text toolkit, sponsored exploratory investigations into “what's possible when you bring open-ended, natural conversation into games, music, storytelling, and more.” 77 Among those supported by this “Voice Experiments” initiative was programmer and artist Nicole He, who created projects like Mystery Animal, a game in which the computer pretends to be an animal and users have to guess what it is by asking spoken questions. In Fall 2018, He taught a course at NYU ITP entitled Hello, Computer: Unconventional Uses of Voice Technology. The course objective was to

give students the technical ability to imagine and build more creative uses of voice technology. Students will be encouraged to examine and play with the ways in which this emerging field is still broken and strange. We will develop interactions, performances, artworks or apps exploring the unique experience of human-computer conversation.78

Our Voice Machine assignment draws inspiration from He's syllabus and projects by her students;79 from Google's Voice Experiments program; and from pioneering early works of speech-based interactive media art, such as David Rokeby's The Giver of Names (1990).

Data collection is a starting point for education in a wide range of fields, whether in engineering, the natural sciences, social sciences, communication design (e.g., in information visualization), or contemporary art (in critical cultural practices). Several of our peers have given assignments that ask creative coding students to collect data using an API (Jer Thorp at NYU ITP)80 or that ask them to develop “scrapers” for computationally harvesting information from the Internet (Sam Lavigne at NYU ITP and the School for Poetic Computation).81 The assignment we present here, however, specifically requires students to develop custom hardware to collect measurements from some dynamic system in the physical world.

The premise of data-collection-as-art comes from approaches used by many artists, including Hans Haacke, Mark Lombardi, On Kawara, Natalie Jeremijenko, Beatriz da Costa, Brooke Singer, Catherine D’Ignazio, Eric Paulos, Amy Balkin, and Kate Rich. It also draws on citizen science projects like Safecast, the Air Quality Egg, Smart Citizen Platform, and Pachube, and on the many Instructables and online tutorials for DIY data collection tools. Our assignment was adapted from one in Golan's Electronic Media Studio 2 syllabus, given to art students at CMU in 2013.82

The Extrapolated Body assignment is inspired by interactive artworks like Myron Krueger's Videoplace (~1974–1989) and the long history of expressive uses of offline motion capture in Hollywood computer graphics. Casey Reas's UCLA syllabi from the mid-2000s are some of the earliest extant records of assignments for the computational, real-time augmentation of bodies captured with cameras and computer vision; an exercise from Winter 2004, for example, asks students to use “the unencumbered body as the interface to interacting with a piece of software. Develop and implement an idea for the interaction between two people via a projection, camera, and computer. Remember that through processing the camera data, it is possible to track the body, track colors, determine the direction of motion, read gestures, etc.” 83 A related assignment from Winter 2006 asks students to “Develop a concept for a video mirror which utilizes techniques of computer vision.” 84

From Lauren McCarthy's “Mask” assignment, we have borrowed the premise of asking students not only to develop body-responsive software, but also to craft a performance that uses that software. For her Winter 2019 Interactivity course at UCLA, McCarthy writes:

Write or select a short text (one paragraph or less) that you will read/perform for the class. Based on the text, design and build a virtual mask that you will use to perform the text. Using the provided code template, make your mask react to audio, changing as the volume of your voice changes. This project will be evaluated based on how the face relates to the text, the variation of the mask (how much it changes), the design of the mask, and your performance of it.85

The Synesthetic Instrument assignment asks students to develop a tool for the simultaneous performance of sound and image. Our formulation extends from Golan's master's thesis work at the MIT Media Lab (1998–2000), which itself took inspiration from 1990s audiovisual performance instruments, especially those by Toshio Iwai. In April 2002, in his Audiovisual Systems and Machines (“Avsys”) graduate course at the Parsons School of Design, Golan translated this research problem into an assignment in “Simultaneous real-time graphics and sound”:

Your assignment is to develop a system which responds to some kind of input (for example, the mouse, the keyboard, some kind of real-time data-stream, etc.) through the real-time generation of synthetic sound and graphics. […] Your system must not use canned (pre-prepared) audio fragments or samples. Therefore it will be necessary for you to code your own digital synthesizer, from scratch. To create a relationship between the image and sound, it is presumed that you will also need to appropriately map the data which describes your visual simulation, to the inputs of your sound synthesizer. When creating your system, consider some of the following possible issues, to which there are no ‘correct’ answers: are the sound and image commensurately plastic, or is one more malleable than the other? Are the sound and image tightly related, or indirectly linked? What is the quality of your use of negative space, in the sound as well as the image? Are rhythms evident in either image or sound, or both?86