Chapter 22

Fundamentals of Sensory Systems

In bringing information about the world to an individual, sensory systems perform a series of common functions. At its most basic, each system responds with some specificity to a stimulus, and each employs specialized cells—the peripheral receptors—to translate the stimulus into a signal that all neurons can use. Because of their physical or chemical specialization, the many types of receptors transduce the energy in light, heat, mechanical, and chemical stimulation into a change in membrane potential. That initial electrical event begins the process by which the central nervous system (CNS) constructs an orderly representation of the body and of things visible, audible, or chemical. To bridge the distance between peripheral transduction and central representation, messages are carried along lines dedicated to telling the CNS what has taken place in the external world and where it has happened. Such precision requires that labor be divided among neurons so that not only different stimulus energies (light vs. mechanical deformation) but also different stimulus qualities (steady indentation vs. high-frequency vibration of the skin) are analyzed by separate groups of neurons.

In addition to their organization along labeled lines, sensory systems perform common types of operations. Foremost among these is the ability of each system to compare events that occur simultaneously at different receptors, a process that serves to bring out the greatest response where the difference in stimulus strength (contrast) is greatest. At late stages in sensory processing, systems make comparisons with past events and with sensations received by other sensory systems. These comparisons are the fundamental bases of perception, recognition, and comprehension.

This chapter gives an overview of the functional attributes and patterns of organization displayed by the auditory, olfactory, somatosensory, gustatory, and visual systems, and it outlines the physiological and anatomical principles common to all sensory systems. When variations on a common theme exist, they are discussed with the goal of bringing the general pattern into sharper focus.

Sensation and Perception

The Function of Each Sensory System Is to Provide the CNS with a Representation of the External World

Because of the changes that occur around an individual, each sensory system has the task of providing a constantly updated representation of the external world. Accomplishing this task is no simple feat because it requires a close interaction between ascending or stimulus-driven mechanisms and descending or goal-directed mechanisms. Together these two mechanisms evoke sensations, give rise to perceptions, and activate stored memories to form the basis of conscious experience. Ascending mechanisms begin with the activity of peripheral receptors, which together form an initial neural representation of the external world. Descending mechanisms work to sort out from the large amount of sensory input those events that require immediate attention. In doing so, the descending mechanisms alter ascending inputs in ways that optimize perception.

Perception of a sensory experience can change even though the input remains the same. A classic example is seen in the image of a vase that can also be perceived as two faces, pointed nose to nose (Fig. 22.1). In this case, the image remains the same (the sensory input remains constant), but the perception of what is being viewed changes as the goal of the viewer changes or as his or her attention wanders. The example illustrates a useful distinction: detection of a stimulus and recognition that an event has occurred are usually called sensation, whereas interpretation and appreciation of that event constitute perception.

Figure 22.1 An example of a figure that can elicit different perceptions (faces or vase) even though stimulus and sensation remain constant. The mind can “see” purple figures against a blue background or a blue figure against a purple background.

Psychophysics Is the Quantitative Study of Sensory Performance

A psychophysical experiment determines the quantitative relationship between a stimulus and a sensation in order to establish the limits of sensory performance (Stevens, 1957). Such an experiment relies on reports from a subject who is asked to judge quantitatively the presence or magnitude of a stimulus as careful adjustments in the physical attributes are made. One example of threshold detection is the two-point limen, in which two blunt probes, separated by a distance that is progressively enlarged or reduced over a series of trials, are applied to the skin surface. The minimum separation distance at which a subject reports two stimuli half the time and one stimulus the other half is taken as the detection threshold. That distance can be measured accurately and is found to vary markedly across the body surface; the two-point limen is smallest for the fingertips and largest for the skin of the back. Other studies, such as those exploring the detection of relative magnitudes of stimuli, can include assessments of object heaviness, loudness of sound, or brightness of light. Studies of this sort have been combined with neurophysiological experiments to compare reports from subjects (sensory behavior) with the responses of single cells (neuronal physiology). Through this procedure the neural mechanisms underlying sensory perception can be examined.
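As a rough illustration of how a two-point threshold of this kind might be read off trial data, the sketch below linearly interpolates the separation at which "two points" is reported on half the trials. The probe separations and report proportions are invented for illustration, not measured values, and the function name `threshold_at_half` is hypothetical.

```python
def threshold_at_half(separations_mm, p_two_points, criterion=0.5):
    """Linearly interpolate the separation at which the proportion of
    'two points' reports first reaches the criterion (0.5 by default)."""
    pairs = list(zip(separations_mm, p_two_points))
    for (x0, p0), (x1, p1) in zip(pairs, pairs[1:]):
        if p0 <= criterion <= p1 and p1 > p0:
            return x0 + (criterion - p0) * (x1 - x0) / (p1 - p0)
    raise ValueError("criterion not bracketed by the data")

# Hypothetical data: probe separation (mm) and the fraction of trials on
# which the subject reported feeling two probes rather than one.
separations = [1.0, 2.0, 3.0, 4.0, 5.0]
reports = [0.0, 0.2, 0.6, 0.9, 1.0]

limen = threshold_at_half(separations, reports)
print(f"estimated two-point limen: {limen:.2f} mm")
```

Real experiments typically fit a smooth psychometric function rather than interpolating between two points; linear interpolation is used here only to keep the idea visible.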

Some general principles hold for all sensation measured in psychophysical experiments. As just pointed out, one principle is that of threshold for detecting a difference between stimuli. Studies look to determine a difference threshold by asking what just noticeable difference (JND) between two stimuli (two lights of different brightness) an observer can detect. E. Weber was the first to formally recognize that small differences between two minimal stimuli are easier to detect than small differences between two robust stimuli. One example is the ability of a subject to detect a difference between two light objects that weigh 0.1 and 0.2 kilograms versus a difference between two heavy objects that weigh 10.1 and 10.2 kilograms. The former is much easier than the latter. Weber’s law states that the difference threshold for a stimulus is a constant fraction of intensity (the Weber fraction). The formula ΔI/I = k describes that law, where I is the intensity of a baseline stimulus, ΔI is the JND between baseline and a second stimulus, and k is the Weber fraction. It is important to recognize that k may be a constant of a particular value for one feature, such as the frequency of sound, but the value changes markedly for another feature of the same sense (sound pressure level). The Weber fraction for sound frequency is exceedingly low (roughly 0.003), whereas that for sound pressure level is relatively high (0.15). For purposes of comparison, the Weber fraction for luminance is 0.02 and that for concentration of an odorant molecule is 0.10.
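The arithmetic behind Weber's law can be made concrete with a short sketch. The Weber fractions are the ones quoted above; the 1000 Hz baseline tone and the helper names `jnd` and `relative_change` are assumptions chosen for illustration.

```python
# Weber fractions quoted in the text; all other numbers are illustrative.
WEBER_FRACTIONS = {
    "sound frequency": 0.003,
    "luminance": 0.02,
    "odorant concentration": 0.10,
    "sound pressure level": 0.15,
}

def jnd(baseline, k):
    """Weber's law, delta_I / I = k, solved for delta_I."""
    return k * baseline

def relative_change(baseline, comparison):
    """Fractional difference between two stimuli, (I2 - I1) / I1."""
    return (comparison - baseline) / baseline

# A hypothetical 1000 Hz tone: the smallest detectable shift in frequency.
tone_jnd = jnd(1000.0, WEBER_FRACTIONS["sound frequency"])
print(f"JND at 1000 Hz: {tone_jnd:.1f} Hz")

# The same 0.1 kg increment is a 100% change for the light pair but
# only about a 1% change for the heavy pair.
light = relative_change(0.1, 0.2)
heavy = relative_change(10.1, 10.2)
print(f"light pair: {light:.3f}, heavy pair: {heavy:.4f}")
```

The two weight pairs differ by the same absolute amount, yet the fractional change is roughly a hundred times larger for the light pair, which is why that comparison is the easier one.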

G. Fechner proposed that every JND between one stimulus and the next is an equal increment in the magnitude of sensation. That would mean a JND is proportional to a physical variable. His law is formalized in the equation S = k log I, where S is the sensory experience in terms of magnitude, I is the physically measured intensity of a stimulus, and k is a constant. There is strong intuitive value to this equation because it states that the magnitude of a sensory experience is logarithmically related to the physical intensity. Lifting 1 kg and 2 kg produces very different sensory experiences, but lifting 10 kg and 11 kg produces almost the same experience, even though the added weight was equal in the two cases. S. Stevens recognized a century later that rather than a logarithmic relation, perceived sensation and physical intensity were related by a power function, described by the equation S = kI^p. Yet the exponent p of I can vary widely, depending on the relationship of neural response to stimulus intensity. V. Mountcastle and his colleagues proposed that for mechanosensation the relationship between the physical properties of a stimulus and the response of individual neurons is linear. And most recently, K. Johnson and his colleagues (Johnson et al., 2002) have shown that for the complex percept of roughness perception, a linear relationship exists between subjective experience and neural activity. Thus, a basic law of psychophysics emerges: how an observer perceives a stimulus is a linear function of the intensity of that stimulus.
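The step from Weber's law to Fechner's logarithmic law can be sketched as follows. This is the standard textbook argument rather than a derivation given in this chapter. The symbols c (the size of the sensation step per JND, scaled by the Weber fraction) and I_0 (the intensity at which S = 0) are assumptions introduced here, and the constants written as k in Weber's, Fechner's, and Stevens' equations are not the same number.

```latex
\begin{align*}
\frac{\Delta I}{I} &= k
  && \text{(Weber's law: one JND at intensity } I\text{)} \\
dS &= c\,\frac{dI}{I}
  && \text{(Fechner's assumption: each JND adds an equal step in sensation)} \\
S &= c \ln\frac{I}{I_0}
  && \text{(integrating from } I_0 \text{, where } S = 0\text{)} \\
S &= k\,I^{p}
  && \text{(Stevens' power function, for comparison)}
\end{align*}
```

On this reading, Fechner's law predicts equal sensation steps for equal ratios of intensity, whereas Stevens' function predicts equal sensation ratios for equal ratios of intensity.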

Receptors

Receptors Are Specific for a Narrow Range of Input

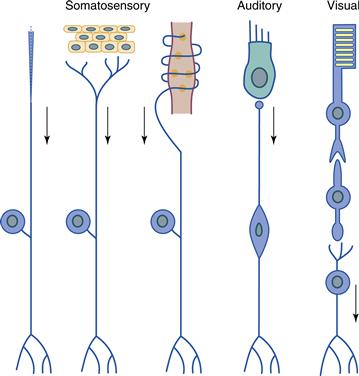

Neurons of the brain and spinal cord do not respond when they are touched or when they are exposed to sound or light or odors. Each form of energy must be transduced by a population of specialized cells, which converts the stimulus into a signal that all neurons understand. In every sensory system, cells that perform this transduction step are called receptors (Fig. 22.2). For each of the fundamental types of stimuli (mechanical, chemical, or thermal energy or light), there is a separate population of receptors selective for the particular form of energy. Even within a single sensory system, there are classes of receptors that are particularly sensitive to one stimulus (e.g., heat or cold) and not another (muscle stretch). This specificity in the receptor response is a direct function of differences in receptor structure and chemistry.

Figure 22.2 Receptor morphology and relationship to ganglion cells in the somatosensory, auditory, and visual systems. Receptors are specialized structures that adopt different shapes depending on their function. In the somatosensory system the receptor is a specialized peripheral element that is associated with the peripheral process of a sensory neuron. In the auditory and visual systems, a distinct type of receptor cell is present. In the auditory system, the receptor (hair cell) synapses directly on the ganglion cell, whereas in the visual system, an interneuron receives synapses from the photoreceptor and in turn synapses on the retinal ganglion cell. Adapted from Bodian (1967).

Receptor Types Vary across Sensory Systems

Systems differ in the number of distinct receptor types they incorporate, and a correlation exists between the number of receptor types displayed by a system and the types of stimuli that system is able to detect. In the somatosensory system, a large number of receptor types exist to detect many types of stimuli. Separate receptors exist to transduce a variety of stimuli, including steady indentation of hairless skin, deformation of hair, vibration, increased or decreased skin temperature, tissue destruction, and stretch of muscles or tendons (Fig. 22.2). In the auditory system, two classes of receptor (the inner and outer hair cells of the cochlea) transduce mechanical energy of the basilar membrane, which is set in motion by sound waves (Dallos, 1996). Here, the motility of outer hair cells provides an additional amplification of the basilar membrane motion to increase sensitivity and allow sharp tuning to sound frequency. The inner hair cells respond to the amplified vibrations and excite the large population of neurons upon which they synapse. Thus the two types of receptors act in concert to transduce a single type of stimulus. In the visual system, transduction is performed by two broad classes of receptor in the retina: rods and cones. The number of cone types varies from one in some species to two in many species to three in a few species, but cones in general are tuned to ranges of wavelengths of light. Rods are much more sensitive to light, because their intracellular amplification of a single photon’s capture generates a much larger current than is achieved in cones, and so they enable vision when light is dim. Olfactory receptors also vary in number from a few hundred types in primates to more than a thousand in rodents. Here the difference between one olfactory sensory neuron (OSN) and the next is a subtle variation on a common theme, since each OSN differs from its neighbor in the primary sequence of a single receptor protein. As that protein varies, so does the OSN’s sensitivity to odorant molecules.

Receptors Perform a Common Function in Unique Fashion

All receptors transduce the energy to which they are sensitive into a change in membrane voltage. The task of the receptor is to transmit that voltage change by one route or another to a class of neurons, usually referred to as ganglion cells, that send their axons into the brain or spinal cord (Fig. 22.2). Systems vary in the mechanism whereby receptors and ganglion cells interact. Most receptors in the somatosensory system are part of multicellular organs, the neural components of which are the terminal specializations of dorsal root ganglion cell axons. An appropriate stimulus applied to a somatosensory receptor produces a generator potential (a graded change in membrane voltage) that, when large enough, leads to action potentials that can be carried over a considerable distance into the CNS. The same approach is used by the olfactory system, since OSNs not only transduce the stimulus of an odorant molecule into a change in membrane potential but also conduct the resulting action potentials into the CNS. We refer to these types of neurons, ones that combine the functions of transduction in the periphery with conduction into the CNS, as long-axon receptors.

Receptor cells of the auditory, vestibular, visual, and gustatory systems are separate, specialized cells that transduce a stimulus and then transmit the resulting signal to the nearby process of a neuron. Because the distances between receptor and target neuron are small, none of these short receptors generate action potentials; they signal their response by a passive flow of current that changes the release of neurotransmitter from either an axon or the base of the cell. These systems differ, however, in the path between receptor and ganglion cell. In the inner ear and in the tongue, sensory receptor cells are modified epithelial cells and not neurons. Nevertheless, they form chemical synapses with the processes of ganglion cells so that these ganglion cells take on the response properties of the receptor cells that innervate them. For these systems, the synapse between a receptor cell and a ganglion cell is little more than the conversion of an analog signal (graded changes in membrane potential) into a digital signal (action potentials). That is not the case in the retina, where photoreceptors relay their response through populations of interneurons interposed between them and retinal ganglion cells (Fig. 22.2). Because of this additional synapse and the opportunity it affords for summation and comparison of receptor signals, the retinal ganglion cell response differs appreciably from that of photoreceptors.

The mechanisms whereby receptor cells transduce and transmit signals are known in greater or lesser detail for each system. Visual transduction is a well-understood, rapid process in which a weak signal (a single photon) can be amplified greatly through a biochemical cascade, leading to the closure of thousands of Na+ channels and a hyperpolarizing response (Yau & Baylor, 1989). For auditory and vestibular hair cells and somatosensory mechanoreceptors, mechanical deformation of a part of the cell gets transduced into a change in membrane voltage by mechanically gated ion channels, the molecular identity of which is still not known. The response of somatosensory mechanoreceptors is of a single sign because mechanically gated ion channels open and allow depolarizing current to enter (Katz, 1950). This requirement for depolarization may result from the demands placed on the somatosensory ganglion cell to generate action potentials and transmit information over long distances. In contrast, hair cell receptors of the auditory and vestibular systems generate a biphasic response. When protruding villi of a hair cell, called stereocilia, are deflected in one direction, transducer channels open and the cell is depolarized. Yet with deflection of stereocilia in the opposite direction, the same channels close and the cell is hyperpolarized (Hudspeth, 1985). Because sound usually produces a back-and-forth deflection of stereocilia, the result is a back-and-forth movement of the receptor potential—at least for low and moderate frequencies of sound. Thus, the receptor output contains temporal information about the waveform of an acoustic stimulus.

Receptors Have Characteristic Patterns of Position and Density

Receptors are not scattered randomly across the sensory surface. An orderly arrangement of receptors exists along the skin, inner ear, retina, olfactory epithelium, and the lining of the tongue and throat. In the human retina, for example, photoreceptors adopt a hexagonal packing array (Wassle & Boycott, 1991) in the region of highest density, called the fovea. Moreover, only cones are found in the fovea, and for that of humans and other Old World primates, only Red (long wavelength) and Green (middle wavelength) cones are found in the center of the fovea. Hair cells of the vestibular system are even more tightly sequestered, since they occupy very small regions in the semicircular canals and the otolith organs. In the skin, the arrangement of receptors is not nearly so orderly but the density of cutaneous receptors varies markedly across the skin surface. By far the greatest density of receptor terminals is found at the fingertips and the mouth, whereas receptors along the surface of the back are at least an order of magnitude less frequent. In each system, the differences in peripheral innervation density are tightly correlated with spatial acuity. Regions of highest receptor density are also the regions of highest acuity in vision (fovea) and somatic sensation (fingertip).

A perfect test case for innervation density and acuity is seen in the auditory system of microbats. Because these animals use echolocation to navigate and find prey, the auditory system greatly overrepresents the frequencies of sound a bat emits as a probing signal and the surrounding frequencies of Doppler-shifted sounds that echo from objects. Throughout most of the cochlea, the physical properties of the basilar membrane change in a steady fashion so that cochlear hair cells display a progressive shift in the frequency that excites them best. But at the frequencies represented in the Doppler-shifted echo, both the amount of basilar membrane dedicated to those frequencies and the density of inner hair cells along that region increase markedly. The result is a much greater acuity for these information-rich frequencies than for all other frequencies.

Receptors Are the Sites of Convergence and Divergence

The relationship between receptor and ganglion cell is seldom exclusive. Most commonly, a single ganglion cell receives input from several receptors, and, in many cases, a single receptor sends information to two or more ganglion cells. Convergence and divergence go hand in hand for the somatosensory system, since a single spot on the skin is often innervated by axons of several ganglion cells, while at the same time the axon of a single ganglion cell can branch to end across a swath of skin. In the somatosensory system, however, the amount of divergence and convergence varies with the class of receptor involved (e.g., thermal receptor vs. mechanoreceptor) and the location of the receptor on the body surface (e.g., shoulder vs. fingertip). Similar features are seen in the mammalian visual system, since divergence and convergence dominate different parts of the retina populated by different receptor types. In the cone-rich central retina, each cone provides as many as five ganglion cells with their main visual drive, whereas in the rod-rich periphery, a few dozen rods supply each ganglion cell with its visual input. In its precision and in its implications for sensory processing, nothing approaches the divergence seen in the cochlea, where a single inner hair cell can be the source of all input received by at least 20 ganglion cells (humans) or as many as 35 ganglion cells (gerbils). Thus, what emerges from a comparison across systems is that convergence and divergence from receptor to ganglion cell vary directly with the demands placed on the system at the specific location. When spatial resolution is a requirement, the convergence of receptor inputs onto individual ganglion cells is low. When detection of weak signals is necessary, convergence is high. When receptor input is used for a complex function or for multiple functions, divergence of input from a single receptor onto many ganglion cells occurs.

Receptors Vary in Their Embryonic Origin

For auditory, vestibular, somatosensory, and olfactory systems, the various classes of receptors and ganglion cells are part of the peripheral nervous system, generated as progeny of neuroblasts located in neural crests and sensory placodes. That is not the case for photoreceptors and retinal ganglion cells. The retina is generated as a protrusion of the embryonic diencephalon and thus all its neurons and supporting cells are CNS derivatives of neural tube origin. As a result of their origin, receptors and ganglion cells of the auditory, vestibular, and somatosensory systems and the OSNs of the nasal epithelium are supported by classes of nonneuronal cells that include modified epithelial supporting cells and Schwann cells. Photoreceptors and retinal ganglion cells, by contrast, are supported by CNS neuroglial cells. Most dramatic of all the consequences resulting from this difference in origin is the ability of axons in somatosensory peripheral nerves to regenerate and reinnervate targets after they are damaged, as opposed to the complete and permanent loss of visual function when optic nerves are cut or crushed.

For all systems except the olfactory, the receptor neurons you were born with are the ones you will live with. Nothing new is added. OSNs, however, have short lives, since they die off and are replaced every 60 days or so. What is seen for all systems, however, is the progressive decline in the number of receptors and in sensory acuity with normal aging. Somatosensory mechanoreceptors in humans are reduced by more than half from the age of 25 years to 65 years. Degeneration of photoreceptors is common, as is a progressive reduction in hair cells of both the cochlea and the vestibular organs. Even the OSNs fail to keep up with the ravages of age, since the rate of generation does not match the rate of degeneration. The result in every case is a marked reduction in acuity that can lessen the hedonic value of foods and flowering plants, render a person deaf or blind, and leave him or her unsteady while standing or walking.

Peripheral Organization and Processing

Sensory Information Is Transmitted along Labeled Lines

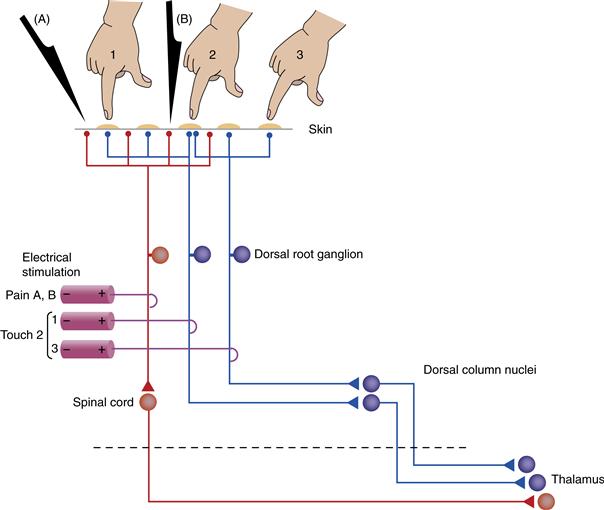

A long-appreciated principle that unites structure and function in a sensory system is the doctrine of specific energy, or the labeled line principle. This principle states that when a particular population of neurons is active, the conscious perception is of a specific stimulus (Fig. 22.3). For example, activity in one particular population of somatosensory neurons (colored red in Figure 22.3) leads the CNS to interpret it as a painful stimulus, no matter whether the stimulus is natural (a sharp instrument jabbed into the skin) or artificial (electrical stimulation of the appropriate axons). An entirely separate population of neurons (colored blue in Figure 22.3) would signal light pressure. Why this is so can be seen from the fact that receptors are selective not only in what drives them, but also in the postsynaptic targets with which they communicate. Each ganglion cell transmits its activity into a well-defined region of the CNS, after which a strictly organized series of synaptic connections relays information in a sequence that eventually leads to the thalamus and then to the cerebral cortex (Darian-Smith et al., 1996). It is this orderly relay from receptor to ganglion cell to central neurons at each of several stations that makes up a labeled line. All sensory information arising from a single class of receptors is referred to as a modality (e.g., the sensations of pain and light pressure involve distinct modalities). Thus, the existence of labeled lines means that neurons in sensory systems carry specific modalities.

Figure 22.3 Example of labeled lines in the somatosensory system. Two dorsal root ganglion (DRG) cells (blue) send peripheral axons to be part of a touch receptor, whereas a third cell (red) is a pain receptor. By activating the neurons of touch receptors, direct touching of the skin or electrical stimulation of an appropriate axon produces the sensation of light touch at a defined location. The small receptive fields of touch receptors in body areas such as the fingertips permit distinguishing the point at which the body is touched (e.g., position 1 vs. position 2). In addition, convergence of two DRG axons onto a single touch receptor on the skin permits touch stimulus 2 to be localized precisely. Electrical stimulation of both axons produces the same sensation, although localized to somewhat different places in the skin. Sharp stimuli (A, B) applied to nearby skin regions selectively activate the third ganglion cell, eliciting the sensation of pain. Electrical stimulation of that ganglion cell or of any cell along that pathway also produces a sensation of pain along that region of skin. Stimuli A and B, however, cannot be localized separately with the pain receptor circuit that is drawn. As the labeled lines project centrally, they cross the midline (decussate) and project to separate centers in the thalamus.

Topographic Projections Dominate the Anatomy and Physiology of Sensory Systems

Receptors in the retina and body surface are organized as two-dimensional sheets, and those of the cochlea form a one-dimensional line along the basilar membrane. Receptors in these organs communicate with ganglion cells and those ganglion cells with central neurons in a strictly ordered fashion, such that relationships with neighbors are maintained throughout. This type of pattern, in which neurons positioned side by side in one region communicate with neurons positioned side-by-side in the next region, is called a topographic pattern. As an example, the two touch-sensitive neurons in Figure 22.3 innervate somewhat different positions in the skin. Thus, light touch at position 3 will activate the rightmost ganglion cell in the dorsal root ganglion, whereas touch at position 1 will activate the neighboring ganglion cell. The central projections of these cells are kept separate and activate different targets in the thalamus and above. The end result is a map of the sensory surface of the skin. A different topography is seen in the olfactory system. OSNs divide the nasal epithelium into zones in which sensitivity to a particular range of odorants is maximally represented. A large-scale map has the OSNs in neighboring zones of the epithelium communicate with neighboring regions in the olfactory bulb. Yet the small-scale map from epithelium to bulb displays a fundamental rearrangement in which all OSNs expressing a particular receptor protein send convergent inputs to a single synaptic region (a glomerulus) in the bulb. In general, topographies are a place code for sensory information in which the location of a particular neuron tells you what that neuron responds to, both in place along the sensory surface and in modality.

Neural Signaling Is by a Combination of Rate and Temporal Codes

A great deal of research has been aimed at determining the codes by which neurons signal the presence and the intensity of a stimulus. In addition to place codes, neurons can signal information in the rate at which they respond and in the temporal pattern of their response. For a given receptor, the firing rate or frequency of action potentials signals the strength of the sensory input. The perceived intensity arises from an interaction between this firing rate and the number of neurons activated by a stimulus. Together the number of neurons active with any sensory stimulus and the level of their activity give rise to an intensity code. This is the kind of code used by retinal ganglion cells to signal the intensity (luminance) of light and by spiral ganglion cells to signal the intensity (sound pressure level) of sound. Temporal codes are also used in some systems. For instance, the phase-locking ability of auditory neurons extends to sound frequencies up to a couple of kilohertz (a couple thousand cycles per second), and this code is used for the perception of the pitch of sounds. In addition, all sensory systems must deal with the fact that stimuli can move, as with vibratory stimuli on the skin. This temporal information in a stimulus is carried by the time-varying pattern of activity in small groups of receptors and central neurons.
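A toy sketch may help to separate the rate code from the temporal code described here. It assumes an idealized neuron that fires at a fixed phase of a tone but stays silent on every third cycle; the skipping pattern, the 500 Hz tone, and both function names are hypothetical choices for illustration, not a model of any real auditory fiber.

```python
def phase_locked_spikes(freq_hz, n_cycles, skip_every=3, phase=0.25):
    """Spike times (s) for a neuron that fires at a fixed phase of a tone
    but is silent on every `skip_every`-th cycle."""
    period = 1.0 / freq_hz
    return [(cycle + phase) * period
            for cycle in range(n_cycles)
            if cycle % skip_every != skip_every - 1]

def intervals_in_periods(spike_times, freq_hz):
    """Interspike intervals expressed as multiples of the stimulus period."""
    return [round((later - earlier) * freq_hz, 6)
            for earlier, later in zip(spike_times, spike_times[1:])]

tone_hz = 500.0
spikes = phase_locked_spikes(tone_hz, n_cycles=9)

# Rate code: spikes per second over the 9-cycle stimulus.
mean_rate = len(spikes) / (9 / tone_hz)

# Temporal code: every interval is a whole number of stimulus periods.
intervals = intervals_in_periods(spikes, tone_hz)
print(f"mean rate: {mean_rate:.0f} spikes/s, intervals (periods): {intervals}")
```

The mean rate falls below the tone frequency because cycles are skipped, yet every interval remains a whole multiple of the stimulus period, so the timing of spikes still carries the frequency of the tone.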

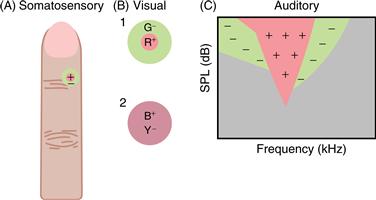

Lateral Mechanisms Enhance Sensitivity to Contrast

A hallmark of all sensory systems is the ability of neurons at even the earliest stages of central processing to integrate the activity of more than one receptor. The most common and easily understood of these mechanisms is lateral (or surround) inhibition (Fig. 22.4). By this mechanism, a sensory neuron displays a receptive field with an excitatory center and an inhibitory surround (Kuffler, 1953). Such a mechanism serves to enhance contrast: each neuron responds optimally to a stimulus that occupies most of its center but little of its surround. In some cases the comparisons involve receptors of different types so that the center and surround differ not only in sign (excitation vs. inhibition, ON vs. OFF), but also in the stimulus quality to which they respond.

Figure 22.4 Center/surround organization of receptive fields is common in sensory systems. In this organization, a stimulus in the center of the receptive field produces one effect, usually excitation, whereas a stimulus in the surround area has the opposite effect, usually inhibition. (A) In the somatosensory system, receptive fields display antagonistic centers and surrounds because of skin mechanics. (B) In the retina and visual thalamus, a common type of receptive field is antagonistic for location and for wavelength. Receptive field 1 is excited by turning on red light (R) at its center and is inhibited by turning on green light (G) in its surround. Receptive field 2 is less common and is antagonistic for wavelength (blue vs. yellow) without being antagonistic for the location of the stimuli. Both are generated by neural processing in the retina. (C) In the auditory system, primary neurons are excited by single tones. The outline of this excitatory area is known as the tuning curve. When the neuron is excited by a tone in this area, the introduction of a second tone in flanking areas usually diminishes the response. This “two-tone suppression” is also generated mechanically, as is seen in motion of the basilar membrane of the cochlea. All of these center/surround organizations serve to sharpen responses over that which would be achieved by excitation alone.

One such example occurs in visual neurons that possess centers and surrounds responsive to stimulation of different types of cones. These cells display a combination of spatial contrast (the difference in the location of cones that produce center and surround) and chromatic contrast (the difference in the visible wavelengths to which these cones respond best). Similar types of responses are evident in the somatosensory system, where the difference between center and surround is the location on the skin from which each is activated. In this case, skin mechanics produce receptive fields with a central hot spot of activity and a surrounding inactive zone. In the auditory system, lateral suppressive areas (Fig. 22.4) are also produced by mechanics, in this case the mechanics of the basilar membrane of the cochlea. These two-tone suppression areas are demonstrated by exciting the auditory nerve fiber with a tone in the central excitatory area and observing the response decrease caused by a second tone in flanking areas (Sachs & Kiang, 1968). In all these sensory systems, center/surround organization serves to sharpen the selectivity of a neuron either for the position of the stimulus or for its exact quality by subtracting responses to stimuli of a general or diffuse nature.
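The contrast-enhancing effect of a center/surround arrangement can be sketched with a one-dimensional row of receptors. In this minimal sketch each output unit is excited by its own receptor and inhibited by the average of its two neighbors. The weights (1.0 and 0.5), the step-shaped stimulus, and the function name `center_surround` are arbitrary assumptions for illustration and are not taken from any particular sensory system.

```python
def center_surround(signal, center=1.0, surround=0.5):
    """Each unit is excited by its own receptor and inhibited by the mean
    of its two neighbours (ends are padded by repeating the end value)."""
    padded = [signal[0]] + list(signal) + [signal[-1]]
    out = []
    for i in range(1, len(padded) - 1):
        neighbours = (padded[i - 1] + padded[i + 1]) / 2.0
        out.append(center * padded[i] - surround * neighbours)
    return out

# A step in stimulus strength: weak on the left, strong on the right.
stimulus = [1, 1, 1, 1, 5, 5, 5, 5]
response = center_surround(stimulus)
print(response)
# -> [0.5, 0.5, 0.5, -0.5, 3.5, 2.5, 2.5, 2.5]
```

Within each uniform region the surround subtracts a constant amount, but the units on either side of the step are pushed apart: the largest response sits just inside the strong region and the smallest just inside the weak region, so the difference is greatest exactly where the contrast is greatest.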

Central Pathways and Processing

Axons in Each System Cross the Midline on Their Way to the Thalamus

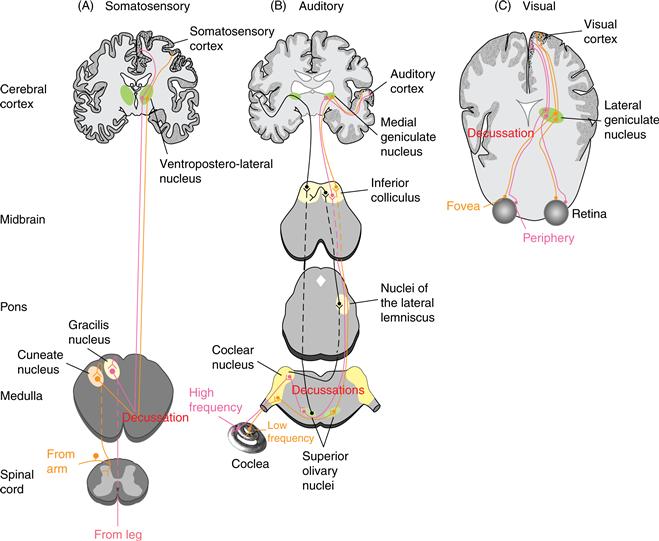

Axons of ganglion cells entering the CNS form the initial stage in a pathway through the thalamus to the cerebral cortex (Fig. 22.5). Axons in visual, somatosensory, and auditory systems cross the midline (they decussate) prior to reaching the thalamus, but those of the olfactory and gustatory systems do not. A single, incomplete decussation occurs in animals with frontally placed eyes, where slightly more than half the axons of the optic nerve cross the midline at the optic chiasm. Nearly complete decussations at the optic chiasm are seen in animals with laterally placed eyes. Decussation in the somatosensory system is nearly total, since all but a small group of axons cross the midline in the spinal cord or brainstem. These decussations serve two broad functions: They bring together into one hemisphere all axons carrying information from half the visual world or they bring somatosensory information into alignment with visual input and motor output. In contrast, multiple decussations occur in the auditory system prior to the thalamus, since comparison of input from the two ears is the dominant requirement of sound source localization. Nevertheless, at high levels in the auditory system, one side of the brain is concerned mainly with processing information about sound sources located toward the opposite side of the body, as demonstrated by lesion/behavioral studies of sound localization.

Figure 22.5 Comparison of central pathways of sensory systems. In every case, soon after peripheral input arrives in the brain, decussations result in one hemifield being represented primarily by the brain on the opposite side. Each pathway has a unique nucleus in the thalamus and several unique fields in the cerebral cortex. Within each of these areas, the organized mapping that is established by receptors in the periphery is preserved.

Specific Thalamic Nuclei Exist for Each Sensory System

Information from all sensory systems except the olfactory is relayed through the thalamus on its way to the cerebral cortex (Fig. 22.5). Olfactory information reaches primary olfactory cortex without a relay in the thalamus. Yet even in this sense, perception of odorants and discrimination of one odorant from another occur only after a thalamic relay. This relay of sensory input through the thalamus involves either a single large nucleus or, in the case of the somatosensory system, two nuclei: one for the body and one for the face. In each thalamic nucleus, synaptic circuits are said to be secure because activity in presynaptic axons usually leads to a postsynaptic response. Within the thalamic nuclei, neurons performing one function (e.g., relay of discriminative touch) are segregated from those performing another (e.g., relay of pain and temperature). Even within one function, mappings of neurons are preserved so that there is separation of neurons providing touch information from the arm versus from the leg and of neurons responding to low versus high sound frequencies (Fig. 22.5). Usually, for each thalamic nucleus, there is a population of large neurons and one or two populations of small neurons (Jones, 1981). In each case the larger neurons carry the most rapidly transmitted signals from the periphery to the cortex.

Multiple Maps and Parallel Pathways

Nuclei in the central pathways often contain multiple maps. For instance, in the auditory system, axons of spiral ganglion cells divide into branches as they enter the CNS and terminate in three subdivisions of the cochlear nucleus. Each division contains its own map of sound frequency that was originally established by the cochlea. The greatest number of maps generated from ganglion cell input is found in the visual system of primates, in which as many as 12 separate retinotopic maps are stacked on top of one another in the lateral geniculate nucleus (LGN, in the thalamus), which receives direct input from the retina. Such a large number of distinct maps in the LGN is indicative of inputs from ganglion cells that vary in location, structure, and function. For vision, one idea is that surface features such as color and form are carried along a path separate from the one that handles three-dimensional features of motion and stereopsis. The functional significance of multiple maps in general, however, remains to be clarified. Perhaps the need for multiple parallel paths exists because of the relatively slow speed and the limited capacity of single neurons. So rather than have the same group of neurons perform different functions in serial order, each of several parallel groups performs a separate function. This leads eventually to the problem of binding together all features of a stimulus into a coherent percept, the neural basis for which may be the synchronized activity of neurons across several areas of the cerebral cortex (Singer, 1995).

Sensory Cortex

Sensory Cortex Includes Primary and Association Areas

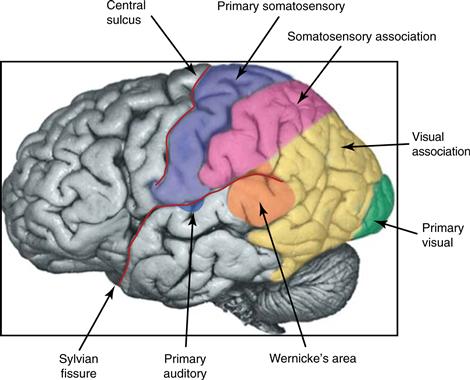

Axons of sensory relay nuclei of the thalamus project to a single area or a collection of neighboring areas of the cerebral cortex, thereby providing them with a precise topographic map of the sensory periphery. These parts of the cortex are frequently referred to as primary sensory areas (Fig. 22.6). Neighboring areas with which the primary areas communicate directly or by a single intervening relay area are sensory association areas.

Figure 22.6 The location of primary sensory and association areas of the human cerebral cortex. The primary auditory cortex is mostly hidden from view within the Sylvian fissure. From Guyton and Hall (2000).

Response Mappings and Plasticity

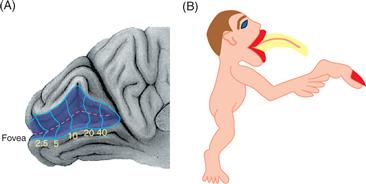

Each area of sensory cortex shares with its subcortical components a map of at least part of the sensory periphery. Thus, retinotopic, somatotopic, and tonotopic maps are evident in the relevant areas of cortex; no such map exists for the chemical senses, however. The retina and skin are two-dimensional sheets so the map of the sensory periphery on the surface of the cortex is a simple transformation of the peripheral representation onto the cortex. In the auditory periphery, there is a one-dimensional mapping of frequency. This tonotopic mapping is faithfully represented along one dimension of cortical distance, and the orthogonal direction may map a second, as yet undiscovered, property.

As indicated above, the distribution of receptors is uneven for most systems. Thus, the fovea of the primate retina and the fingertips of the primate hand are regions that possess a high density of receptors with small receptive fields. Such an uneven distribution of neurons devoted to a structure is further amplified in the CNS. A much greater percentage of neural machinery subserves the representation of the retinal fovea or the fingertips than deals with the representation of other regions of the retina or body surface (Fig. 22.7). This expansion of a representation in the CNS, referred to as a magnification factor, appears particularly impressive in humans, in whom a very large part of primary visual cortex is devoted to the couple of millimeters of retina in and around the fovea. In the auditory cortex, such magnification of frequency representation is again seen in echolocating bats, since the region devoted to the Doppler-shifted signaling frequency is much enlarged over that for any other set of frequencies.

Figure 22.7 Examples of sensory magnification in the visual and somatosensory systems. (A) Determination of a visual field map in the human primary visual cortex shows that more than half this area is devoted to the central 10° of the visual field. Very little is devoted to the visual periphery beyond 40°. From Horton and Hoyt (1991). (B) Figure of how the human body would appear if the body surface were a perfect reflection of the map in the first somatosensory cortex. The mouth and tongue and the tip of the index finger enjoy a greatly enlarged representation in the thalamus and cortex.
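The idea of a magnification factor can be made concrete with a short sketch. It assumes an inverse-linear relation between cortical magnification and eccentricity, M(E) = A / (E + E2), a form often used to summarize visual field maps. The function name and the parameter values `a_mm` and `e2_deg` are illustrative assumptions and are not taken from this chapter.

```python
def linear_magnification(eccentricity_deg, a_mm=17.3, e2_deg=0.75):
    """Millimeters of cortex per degree of visual field.

    Assumed inverse-linear form M(E) = A / (E + E2); the default values
    of A and E2 are illustrative, not measurements reported in the text.
    """
    return a_mm / (eccentricity_deg + e2_deg)


# Compare the cortical territory per degree near the fovea and in the periphery.
for ecc in (0.0, 2.0, 10.0, 40.0):
    print(ecc, round(linear_magnification(ecc), 2))
```

Under these assumptions, a degree of visual field at the fovea occupies many times more cortex than a degree at 40° eccentricity, which is the qualitative pattern described for the primary visual cortex.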

It is now clear that maps of sensory cortex are not fixed and immutable but rather plastic. In the somatosensory system, if input from a restricted area of the body surface is removed by severing a nerve or by amputation of a digit, the portion of the cortex that was previously responsive to that region of the body surface becomes responsive to neighboring regions (Merzenich et al., 1984). In the auditory system, following high-frequency hearing loss, the portion of cortex previously responsive to high frequencies becomes responsive to middle frequencies. Frequency tuning of neurons of auditory cortex can also be shifted with classical conditioning methods (Weinberger, 2007). Such plasticity of cortical maps requires some time to be established and could result from strengthening of already established lateral connections or from growth of new connections. It is likely but not firmly established that the same types of mechanisms cause cortical changes during the processes of learning and memory.

A Common Structure Exists for Sensory Cortex

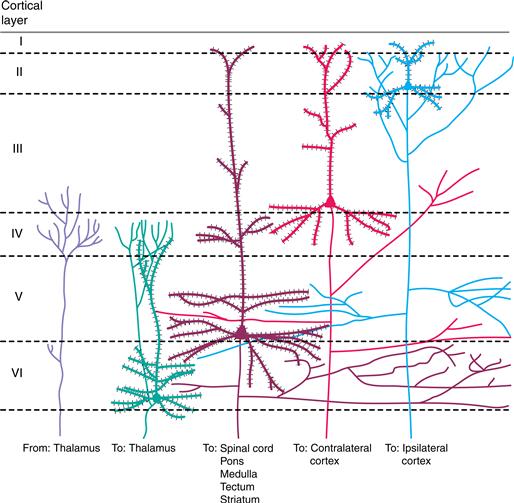

Neurons in areas of the sensory cortex (and most other areas of the cerebral cortex) are organized into six layers. The middle layers (III and IV) are the main site of termination of axons from the thalamus (Fig. 22.8). In the primary sensory cortices, these middle layers are enlarged and contain many small neurons. Because the small cells resemble grains of sand in standard histological preparations, the sensory areas are themselves referred to as granular areas of cortex.

Figure 22.8 Cellular organization of the sensory cortex into six layers (I–VI). Inputs from the thalamus terminate mainly in layers III and IV. The main output neurons of the cortex are pyramidal cells, which are distributed in different layers according to their projections. Descending projections are to the thalamus (neurons in layer VI) or to the spinal cord, pons and medulla, tectum, or striatum (neurons at various levels in layer V). Ascending projections to other “higher” cortical centers are often from neurons located above layer IV; some of these projections are to the same hemisphere (layer II) or to the opposite hemisphere (layer III). Adapted from Jones (1984).

Columnar Organization

Properties other than place in the periphery are mapped in primary sensory areas of the cortex. The third spatial dimension of the cortex, that of depth, arranges neurons in adjacent 0.5- to 1-mm-wide regions, referred to as columns (Mountcastle, 1997). In these columns, neurons stacked above and below one another are fundamentally similar but differ significantly from neurons on either side of them. One example of columns with a clear anatomical correlate is the division of the primary visual cortex of most primates and some carnivores into a series of alternating regions dominated by the right and left retinas. Each ocular dominance column contains cells driven exclusively or predominantly by one eye; adjacent columns are dominated by the other eye. Other properties, such as selectivity for the orientation of a visual stimulus and the contrast between it and the surround, are also arranged in columns of primary visual cortex (Hubel, 1988). Similar types of columns are evident in the somatosensory system as regions of modality and place specificity. They are most clearly seen in the representation of mystacial vibrissae in rodent somatosensory cortex, where a one-to-one matching of vibrissa with cortical territory gives rise to structures called barrels. In the auditory system, neurons within a column generally share the same best frequency and the same type of binaural interaction characteristic: either one ear excites the neurons and the other inhibits or suppresses the response to the first ear (suppression column) or one ear excites and the other ear also excites or facilitates the response to the first ear (summation column).

Moreover, in areas of nonprimary cortex, the feature displayed most commonly by neurons of a particular area is one that often comes to occupy columns. A good example is found in the middle temporal area (MT or V5) of visual association cortex, where neurons are tuned for the direction of a moving visual stimulus. Neurons selective for one particular direction of visual stimulus movement are organized into columns through the depth of MT; these are flanked by columns of neurons tuned for other directions of movement (Albright, Desimone, & Gross, 1984). So consistent are these findings among sensory, motor, and association areas that columnar organization is viewed as a principal organizing feature for all of the cerebral cortex (Mountcastle, 1997).

Stereotyped Connections Exist for Areas of Sensory Cortex

Neurons of the cerebral cortex send axons to subcortical regions throughout the neuraxis and to other areas of the cortex (Fig. 22.8). Subcortical projections are to those nuclei in the thalamus and brainstem that provide ascending sensory information. By far the most prominent of these is to the thalamus: the neurons of a primary sensory cortex project back to the same thalamic nucleus that provides input to the cortex. This system of descending connections is truly impressive because the number of descending corticothalamic axons greatly exceeds the number of ascending thalamocortical axons. These connections permit a particular sensory cortex to control the activity of the very neurons that relay information to it. One role for descending control of thalamic and brainstem centers is likely to be the focusing of activity so that relay neurons most activated by a sensory stimulus are more strongly driven and those in surrounding less well activated regions are further suppressed.

A large number of cortical neurons project to other areas of cortex (Fig. 22.8). Cortico-cortical projections link primary and association areas of the sensory cortex and establish parallel paths so that different aspects of vision, audition, and somatic sensation come to be handled by different areas of cortex. These connections establish a hierarchy within a system, such that “ascending” or “forward” connections begin with neurons from superficial cortical layers (I–III) and end with axonal terminations mainly in layers III and IV of higher cortical regions. Similarly, the ascending projection from the thalamus terminates mainly in these layers in primary sensory cortices. Corresponding descending projections from higher to lower cortical regions begin in the deep or superficial cortical layers and project to layers outside of III and IV. In addition to projections to the ipsilateral hemisphere of cortex, there are also projections to the contralateral hemisphere via the corpus callosum and other commissures. In visual and somatosensory systems, these commissural connections are restricted in origin and termination; they exist to unite the representation of midline structures into a coherent percept (Hubel, 1988).

Response Complexity of Cortical Neurons

Responses of cortical neurons in primary sensory cortices are more complex than those seen for neurons in the periphery. One example is seen in the primary visual area of the cerebral cortex, where neurons are responsive to stimuli that are not concentric circles (center and surround) but elongated lines possessing a specific orientation. Comparable synthesis of simpler inputs to reconstruct more complex features of stimulus is apparent at higher levels in the visual system (Logothetis & Sheinberg, 1996) and in the somatosensory and auditory areas of the cerebral cortex.
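One way to picture how orientation selectivity might be synthesized from simpler inputs is the toy sketch below. It is only an illustration under stated assumptions, not a description of actual cortical circuitry: the 7 × 7 binary image, the function names, and the rule of summing rectified center/surround units aligned along one column are all hypothetical choices.

```python
def unit_response(image, r, c):
    """Center/surround unit: center pixel minus the mean of its 8 neighbors,
    rectified so that the unit cannot signal below zero."""
    neighbors = [image[r + dr][c + dc]
                 for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                 if (dr, dc) != (0, 0)]
    return max(0.0, image[r][c] - sum(neighbors) / len(neighbors))


def vertical_line_cell(image, col=3, rows=range(1, 6)):
    """Hypothetical orientation-selective cell: sums the outputs of
    center/surround units whose centers lie along one image column."""
    return sum(unit_response(image, r, col) for r in rows)


SIZE = 7
vertical_bar = [[1.0 if c == 3 else 0.0 for c in range(SIZE)] for r in range(SIZE)]
horizontal_bar = [[1.0 if r == 3 else 0.0 for c in range(SIZE)] for r in range(SIZE)]

print(vertical_line_cell(vertical_bar))    # bar aligned with the row of units
print(vertical_line_cell(horizontal_bar))  # bar orthogonal to the row of units
```

In this sketch the aligned bar drives every contributing unit, whereas the orthogonal bar drives only the one unit it crosses, so the summed response depends on stimulus orientation even though each input unit has a concentric receptive field.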

Physiologically, processing and selectivity for stimulus features become progressively more complex within the hierarchically organized pathways that connect primary with association areas of the cortex (Gallant & Van Essen, 1994). In the visual system, separate “streams” involved in visuosensory and eventually visuomotor functions have been described; one is responsible for using visual cues to drive appropriate eye movements and the other for dealing with the tasks of visual perception (Gallant & Van Essen, 1994). In the somatosensory system, separate motor and limbic paths exist to perform much the same functions for the entire body, supplying sensory input to coordinate and adjust motor output and using complex input from many receptor types to match the shape of a tactual stimulus with one already stored in memory (Johnson & Hsiao, 1992). In the association pathways of the human auditory system, a specialized area of cortex, Wernicke’s area, plays a fundamental role in processing speech and language information and in communicating with Broca’s area to form a speech motor response. These streams are not entirely separate, however, because areas traditionally viewed as “motor,” such as Broca’s area, are now known to become activated in comprehension tasks. Apparent from this pattern in association areas of the cortex is the continued pressure for a division of labor within each sensory system, not one that produces separate paths for analyzing elemental features of a stimulus but one that combines those features either to elicit appropriate movements or to match a stimulus with an internal representation of the world.

Summary

The functional organization of sensory systems shares common themes of transduction, relay, organized mappings, parallel processing, and central modification. It is no surprise that a case has been made for a common phylogenetic origin of sensory systems. Differences among the systems, however, demonstrate that each has existed and operated independently for as long as there have been vertebrates. What remains in overview is a well-ordered basic plan from periphery to perception that has been modified in its details as variations in niche have led to specializations in function.

References

1. Albright TD, Desimone R, Gross CG. Columnar organization of directionally selective cells in visual area MT of the macaque. Journal of Neurophysiology. 1984;51:16–31.

2. Bodian D. Neurons, circuits and neuroglia. In: Quarton GC, Melnechuk T, Schmitt FO, eds. The neurosciences: A study program. New York, NY: The Rockefeller Press; 1967:6–24.

3. Dallos P. Overview: Cochlear neurobiology. In: Dallos P, Popper AN, Fay RR, eds. The cochlea. New York, NY: Springer-Verlag; 1996:1–43.

4. Darian-Smith I, Galea MP, Darian-Smith C, Sugitani M, Tan A, Burman K. The anatomy of manual dexterity: The new connectivity of the primate sensorimotor thalamus and cerebral cortex. Advances in Anatomy, Embryology, and Cell Biology. 1996;133:1–142.

5. Gallant JL, Van Essen DC. Neural mechanisms of form and motion processing in the primate visual system. Neuron. 1994;13:1–10.

6. Guyton AC, Hall JE. Textbook of medical physiology. 10th ed. New York, NY: W. B. Saunders and Company; 2000.

7. Horton JC, Hoyt WF. The representation of the visual field in human striate cortex: A revision of the classic Holmes map. Archives of Ophthalmology. 1991;109:816–824.

8. Hubel DH. Eye, brain, and vision. New York, NY: Freeman; 1988.

9. Hudspeth AJ. The cellular basis of hearing: The biophysics of hair cells. Science. 1985;230:745–752.

10. Johnson KO, Hsiao SS. Tactual form and texture perception. Annual Review of Neuroscience. 1992;15:227–250.

11. Johnson KO, Hsiao SS, Yoshioka T. Neural coding and the basic law of psychophysics. The Neuroscientist. 2002;6:111–121.

12. Jones EG. Functional subdivision and synaptic organization of the mammalian thalamus. International Review of Physiology. 1981;25:173–245.

13. Jones EG. Laminar distribution of cortical efferent cells. In: Peters A, Jones EG, eds. Cerebral cortex. Vol. 1. New York, NY: Plenum; 1984:521–553.

14. Katz B. Depolarization of sensory terminals and the initiation of impulses in the muscle spindle. Journal of Physiology (London). 1950;111:261–282.

15. Kuffler SW. Discharge patterns and functional organization of mammalian retina. Journal of Neurophysiology. 1953;16:37–68.

16. Logothetis NK, Sheinberg DL. Visual object recognition. Annual Review of Neuroscience. 1996;19:577–621.

17. Merzenich MM, Nelson RJ, Stryker MP, Cynader MS, Schoppmann A, Zook M. Somatosensory cortical map changes following digit amputation in adult monkeys. Journal of Comparative Neurology. 1984;224:591–605.

18. Mountcastle VB. The columnar organization of the neocortex. Brain. 1997;120:701–722.

19. Sachs MB, Kiang NYS. Two-tone inhibition in auditory-nerve fibers. Journal of the Acoustical Society of America. 1968;43:1120–1128.

20. Singer W. Time as coding space in neocortical processing: A hypothesis. In: Gazzaniga MS, ed. The cognitive neurosciences. Cambridge, MA: MIT Press; 1995:91–104.

21. Stevens SS. On the psychophysical law. Psychological Review. 1957;64:153–181.

22. Wässle H, Boycott BB. Functional architecture of the mammalian retina. Physiological Reviews. 1991;71:447–480.

23. Weinberger NM. Auditory associative memory and representational plasticity in primary auditory cortex. Hearing Research. 2007;70:226–251.

24. Yau K-W, Baylor DA. Cyclic GMP-activated conductance of retinal photoreceptor cells. Annual Review of Neuroscience. 1989;12:289–328.

Suggested Readings

1. Beurg M, Fettiplace R, Nam J-H, Ricci A. Localization of inner hair cell mechanotransducer channel using high-speed calcium imaging. Nature Neuroscience. 2009;12:553–558.

2. Dowling JE. The retina: An approachable part of the brain. Cambridge, MA: Belknap Press; 1987.