Chapter 46

Attention

Introduction

Intuition, together with cognitive and psychophysical experiments, shows that the brain is limited in the amount of information it can process at any moment in time. For instance, when people are asked to identify the objects of a briefly presented scene, they become less accurate as the number of objects increases. Similarly, when people concentrate on one demanding task (e.g., mental arithmetic) this typically comes at the expense of performance on other simultaneous activities (e.g., recalling a familiar tune). Limitations on the ability to simultaneously carry out multiple cognitive or perceptual tasks reflect the limited capacity of some stage or stages of sensory processing, decision making, or behavioral control. As a result of such computational bottlenecks, it is necessary to have neural mechanisms in place to ensure the selection of stimuli, or tasks, that are immediately relevant to behavior. “Attention” is a broad term denoting the mechanisms that mediate this selection.

In his Principles of Psychology, published in 1890, William James wrote, “Everyone knows what attention is. It is the taking possession by the mind, in clear and vivid form, of one out of what seem several simultaneously possible objects or trains of thought….” In modern neuroscience, specifically in neurophysiological research with animals, attention is defined with respect to the external world, as the focusing on one of many possible sensory stimuli. Despite their apparent discrepancies, these definitions have two features in common. They highlight both the selective character of attention and the cognitive nature of this selection: whether directed without or within, attention guides the selection of stimuli or thoughts.

Over the past several decades, research has concentrated on the relation between attention and sensory perception. Clearly, it is possible to focus on selected stimuli in any sensory modality—sights, sounds, smells, and touch. Most studies, however, have examined vision, the dominant sensory modality for humans and nonhuman primates. These experiments have investigated visual attention at multiple levels, ranging from behavior to the single neuron. This chapter outlines the view of attention that has emerged from these studies. A key observation is that attending to an object enhances the influence of that object over behavior and the extent to which it can be accurately perceived and reported. Conversely, withdrawing attention, either by force of the behavioral context or following certain brain lesions, can render observers practically blind to visual events. A second key point is that the attentional processes that underlie perceptual selection may also guide the voluntary eye movements with which foveate animals, such as monkeys and humans, scan the environment. A final point is that attention is closely coordinated with an ongoing task, gathering information that serves the current actions. In terms of neural organization, although some neural centers have been closely linked with the control of attention, attention appears to affect the activity of neurons at almost all levels of the visual system.

Definitions and Varieties of Attention

Empirical studies have generally adopted two operational definitions of attention. Some studies, harking back to early studies of Pavlovian learning, define an attended stimulus as one that preferentially controls behavior. Other, psychophysically based studies adopt a narrower definition, whereby an attended stimulus is one whose discriminability or detectability is modified (typically increased) during performance of a task. In general, these two definitions are well aligned with each other. Consider, for example, coming to a busy intersection and examining the traffic light before deciding what to do next. By the first definition we would say the light is attended, since it controls one’s future action (stop or proceed). By the second definition we would also say the light is attended, since the subject will be able to report the color of the light. We note, however, that while the equivalence of action-based and perception-based attention has been assumed in the field, the mechanisms of these processes may not be identical and are under active investigation.

Just as they have the capacity to flexibly link attention to action, individuals have great flexibility as to how, why, and to what precisely they attend. Attending to an object often is accompanied by overt orienting toward that object using the eyes and possibly also the head and body. When a person enters a room, it is natural to turn in that person’s direction. However, one can also attend covertly to objects without looking directly at them. When driving, one can monitor a passing car while continuing to look straight ahead. Covert attention can improve peripheral visual acuity, thereby extending the functional field of view. Although one can direct attention without moving the eyes, the converse does not appear to be true: experiments show that covert attention must be deployed to an object before that object can be targeted with an eye movement. Indeed, saccades—rapid eye movements used most commonly for scanning the environment—may be considered a motor manifestation of visual attention, a relationship that is discussed in more detail later.

Certain external stimuli can summon attention in and of themselves. These can be physically salient objects, such as especially large, bright, or loud objects, or stimuli with special learned significance, such as one’s own name or a mother’s face. This mode of attentional engagement, known as exogenous or stimulus driven, ensures that salient and potentially important external events do not pass unnoticed even if they are not being actively sought out. However, purposeful behavior often requires that attention be directed voluntarily, or endogenously, based on internally defined goals and against potential external distractions. When reading, one purposefully directs one’s attention from one word to the next, tuning out noise and other distractions. In natural behavior, endogenous and exogenous factors interact continuously to control the allocation of sensory processing (Egeth & Yantis, 1997).

Another important issue is what is attended. Attention can be directed to a location in space, regardless of what happens to be at that location. It can also be directed to a feature, as when one searches within a complex scene for an object of a particular shape or color. Attention can also select whole objects, as demonstrated by studies showing that when attention is directed to one feature of an object, the other features of the same object are selected automatically for visual processing and by studies finding that when attention is directed to one of two semitransparent objects, attending to a feature of one object impairs processing of the features of the other object. Finally, attention can select entire object categories in highly abstract ways (e.g., when you cross the street, you are looking for cars regardless of their different appearances). In each of these cases, attention has been found to facilitate processing of the attended location, feature, object, or object category, as assessed at the behavioral and neural levels (Peelen et al., 2009; Reynolds & Chelazzi, 2004).

Attention is thus highly flexible and can be deployed in a manner that best serves the organism’s momentary behavioral goals: to locations, features, or objects, based on internal goals or the external environment, with or without accompanying orienting movements. It is important to keep in mind that although these phenomena can be isolated in laboratory experiments, all varieties of attention operate seamlessly during natural behavior.

Neglect Syndrome: A Deficit of Spatial Attention

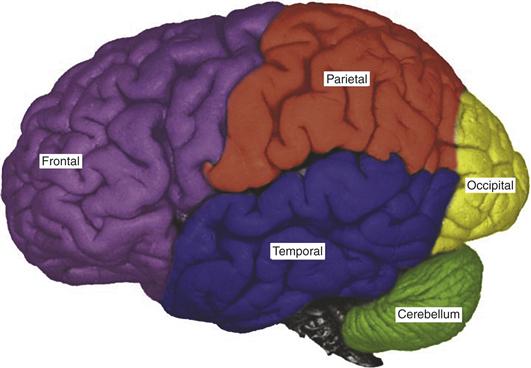

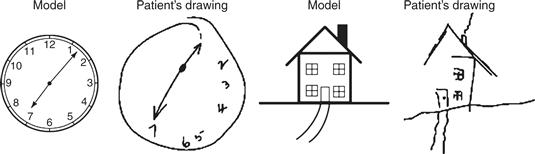

Studies of patients with brain lesions have identified regions of the brain that are involved in attention (see Fig. 46.1, which shows the major divisions of the human cortex). In the cortex, unilateral lesions in the parietal lobe, the frontal lobe, and the anterior cingulate cortex (Heilman, 1979; Vallar, 1993) in humans may cause a profound inability to attend to certain spatial regions, a syndrome known as spatial neglect. At the subcortical level, lesions of the basal ganglia or of the pulvinar thalamic nucleus, which is heavily connected with the parietal cortex, can also cause neglect. In severe cases, patients with neglect behave as if the world contralateral to their lesioned hemisphere (the contralesional world) has ceased to exist. For example, a patient with neglect following a right hemisphere lesion may fail to read from the left side of a book, may ignore the food on the left side of the plate, or may remain unaware of the numerals on the left side of a clock (Fig. 46.2). Neglect patients may also be reluctant to initiate movement in contralesional space, with or without external sensory stimulation. Because the critical feature causing an object to be ignored is its location, this type of neglect is thought of as primarily a disorder of spatial attention.

Figure 46.1 Lateral view of a human brain. Frontal (purple), parietal (orange), temporal (blue), and occipital (yellow) lobes are outlined.

Figure 46.2 Two drawings that were made by a patient with spatial neglect. The patient was asked to copy the two models (clock, house). In each case, the copies exclude important elements that appeared on the left side of the model, indicating that the patient was unable to process information about the left side of the model.

The behavioral impairments in neglect cannot be explained by simple sensory or motor deficits (Mesulam, 1999). Neglect patients have normal vision in the contralesional visual field once their attention has been directed there, and they have no hemiparesis that could account for their reluctance to move. In addition, the extent of their difficulty is not immutable, as a sensory or motor deficit would be, but is strongly affected by the goals, expectations, and motivational state of the patient. Thus, neglect represents a failure to select, in some circumstances, the appropriate portions of a sensory representation. Neglect operates on high-level representations that differ considerably from the raw sensory input. For example, visual neglect is not confined only to retinal visual coordinates (affecting only objects in the contralesional visual field) but can affect objects in the contralesional space relative to the patient’s body, relative to the patient’s gaze, or relative to an external object or scene. Thus, neglect affects a visual representation that incorporates information about the position of the body with high-level visual information. Neglect affects not only the perception of the present sensory environment, but also the processing of memorized or imagined scenes.

A related but milder attentional deficit, known as extinction, can follow more limited brain lesions or can occur during recovery from the acute phase of neglect. In visual extinction, patients are able to orient toward a contralesional stimulus presented in isolation, but fail to notice it if the same stimulus is presented simultaneously with an ipsilesional distracter. Extinction-like deficits are demonstrated readily with the spatial cuing task (Posner, Walker, Friedrich, & Rafal, 1984). In this task, subjects are cued to attend to the left or right visual field. Unimpaired observers are better able to process information at the cued location, but can also detect stimuli at an uncued location. Compared to unimpaired observers, neglect patients are slowed only mildly in their ability to detect targets in the contralesional visual field following valid symbolic or peripheral cues in the same field. However, they have enormously prolonged latencies for detecting contralesional targets if their attention has been misdirected to the ipsilesional field by means of an invalid cue. No impairment is seen when patients are cued to the contralesional field but asked to detect an ipsilesional target. Extinction-like phenomena also have been demonstrated in search tasks in which patients are asked to find a target object located at various locations in a complex scene. The time needed to find a target in contralesional space increases in proportion to the number and salience of ipsilesional distracters.

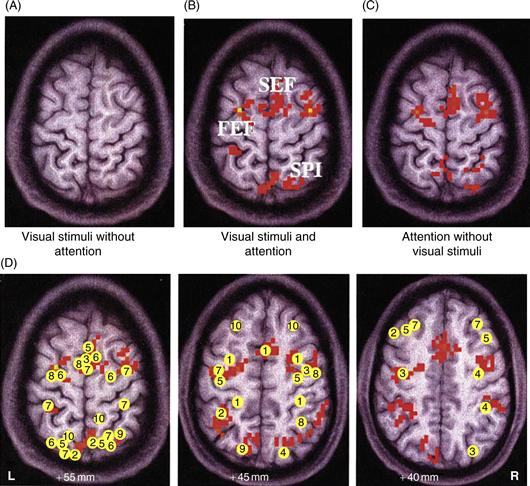

Attention Affects Neural Activity in the Human Visual Cortex in the Presence and Absence of Visual Stimulation

Human functional brain imaging studies are in agreement with both single-cell physiology and neuropsychological studies in identifying areas in the parietal, frontal, and cingulate cortices as being especially active in relation to spatially directed attention. When observers attend to a location in space in anticipation of the appearance of a stimulus, this is accompanied by elevated levels of activity in a fronto-parietal network consisting of the superior parietal lobule (SPL), the frontal eye field (FEF), and the supplementary eye field (SEF) extending into the anterior cingulate cortex (Fig. 46.3). This elevation in activity persists throughout the expectation period and when stimuli later appear at the attended location (Fig. 46.4B). It is important to note that the fronto-parietal attentional control network is not limited to controlling spatial attention. It is also activated when subjects select nonspatial information. Object- or feature-based attention engages the fronto-parietal attention network as well, indicating a general domain-independent mechanism of attentional control (Yantis & Serences, 2003).

Figure 46.3 Regions in human brain activated by attention and regions associated with neglect. (A) Visual stimulation did not activate the frontal or parietal cortex reliably when attention was directed elsewhere in the visual field. (B) When the subject directed attention to a peripheral target location and performed an object discrimination task, a distributed fronto-parietal network was activated, including the SEF, the FEF, and the SPL. (C) The same network of frontal and parietal areas was activated when the subject directed attention to the peripheral target location in the expectation of the stimulus onset—in the absence of any visual input whatsoever. This activity therefore may not reflect attentional modulation of visually evoked responses, but rather attentional control operations themselves. (D) Meta-analysis of studies investigating the spatial attention network. Axial slices at different Talairach planes are indicated. Talairach (peak) coordinates of activated areas in the parietal and frontal cortex from several studies are indicated (For references, see Kastner & Ungerleider, 2000). R, right hemisphere; L, left hemisphere.

Figure 46.4 Directed attention in humans with and without visual stimulation. (A) Time series of fMRI signals in V4. Directing attention to a peripheral target location in the absence of visual stimulation led to an increase of baseline activity (textured blocks), which was followed by a further increase after the onset of stimuli (gray-shaded blocks). Baseline increases were found in both the striate and the extrastriate visual cortex. (B) Time series of fMRI signals in FEF. Directing attention to the peripheral target location in the absence of visual stimulation led to a stronger increase in baseline activity than in the visual cortex; the further increase of activity after the onset of stimuli was not significant. Sustained activity was seen in a distributed network of areas outside the visual cortex, including SPL, FEF, and SEF, suggesting that these areas may provide the source for the attentional top-down signals seen in visual cortex.

Adapted from Kastner et al. (1999).

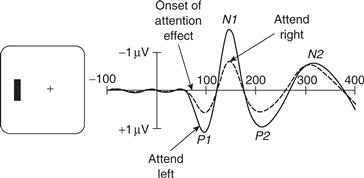

Frontal and parietal areas have been associated with the control of spatial attention, but they are not usually considered crucial for visual processing or object recognition. There is converging evidence from event-related potential (ERP) and functional imaging studies that feedback signals from the fronto-parietal areas that control the allocation of attention can affect the neural processing of visual information in the human visual cortex. In a typical experiment, identical visual stimuli are presented simultaneously to corresponding peripheral field locations to the right and to the left of a central fixation point. Subjects are instructed to direct attention covertly to the right or the left by a symbolic cue presented at the fixation point and to detect the occurrence of a visual stimulus or, in some cases, discriminate a feature of the attended stimulus. Directing attention to the left hemifield increases stimulus-evoked activity in the extrastriate visual cortex of the right hemisphere, whereas directing attention to the right hemifield increases activity in the extrastriate visual cortex of the left hemisphere (Heinze et al., 1994) (Fig. 46.5). Thus, responses to stimuli are enhanced on the side of the extrastriate cortex that contains representations of the attended hemifield. As is the case in the fronto-parietal areas, this increase in response is observed during the period prior to appearance of a stimulus, reflecting the effects of feedback signals from the attentional control areas.

Figure 46.5 Attentional modulation of event-related potentials (ERPs). In a typical experiment, subjects fixate a central cross and attend either to the left or right visual field. Stimuli are then presented to the left and right visual fields in a rapid sequence. This idealized example illustrates the common finding that the average ERP elicited by an attended visual field stimulus contains larger P1 and N1 components.

In one imaging study (Kastner, Pinsk, De Weerd, Desimone, & Ungerleider, 1999), subjects were cued to direct attention covertly to a target location in the periphery of the visual field and to anticipate the onset of visual stimuli. Visual stimuli occurred with a delay of several seconds. Neural activity associated with spatially directed attention in the absence and in the presence of visual stimulation could therefore be distinguished. As was the case in the fronto-parietal control network, fMRI signals increased when attention was directed to the target location and before any visual stimulus was present on the screen. This increase in baseline activity was followed by an additional increase when stimuli appeared at the attended location (Fig. 46.4A), reflecting attention-dependent increases in the stimulus-evoked response itself. These studies and related single-unit studies in the monkey show that spatial attention effects are topographically organized and retinotopically specific, resulting in increased activity among neurons coding the attended location. Taken together, these findings suggest that an important way that spatial attention modulates visual processing is by amplifying the neural signals for stimuli at an attended location.

Functional brain imaging studies and single-unit recording studies in nonhuman primates have found evidence that nonspatial attention also modulates sensory cortices. One such study found that selective attention to shape, color, or speed enhanced activity in the regions of the extrastriate visual cortex that selectively process these same attributes. Attention to shape and color led to response enhancement in regions of the posterior portion of the fusiform gyrus, including area V4. Attention to speed led to response enhancement in areas MT/MST (Corbetta, Miezin, Dobmeyer, Shulman, & Petersen, 1991). In other studies, attention to faces or houses led to response enhancement in areas of the midanterior portion of the fusiform gyrus, areas responsive to the processing of faces and objects. These results support the idea that selective attention to a particular stimulus attribute modulates neural activity in those extrastriate areas that preferentially process the selected attribute.

Single-Unit Recording Studies in Nonhuman Primates Provide Convergent Evidence for a Fronto-Parietal Attentional Control System

Studies in monkeys have supported the conclusion that frontal and parietal cortices play a role in controlling the allocation of attention (Bushnell et al., 1981). These studies point to two interconnected areas, one in the parietal cortex, the lateral intraparietal area (LIP), and one in the frontal lobe, the frontal eye field (FEF), as being important for spatial attention. Many neurons in FEF and LIP have spatially selective visual receptive fields, meaning that they respond to visual stimuli that activate a limited region of the retina. These receptive fields are retinotopic (they are linked to the retina and move relative to the external world every time the eye moves) and mostly contralateral, so that the FEF and LIP on one side of the brain represent the locations of objects in the contralateral portion of the visual field. Thus, the neurons provide a map of stimulus location in retinotopic coordinates.

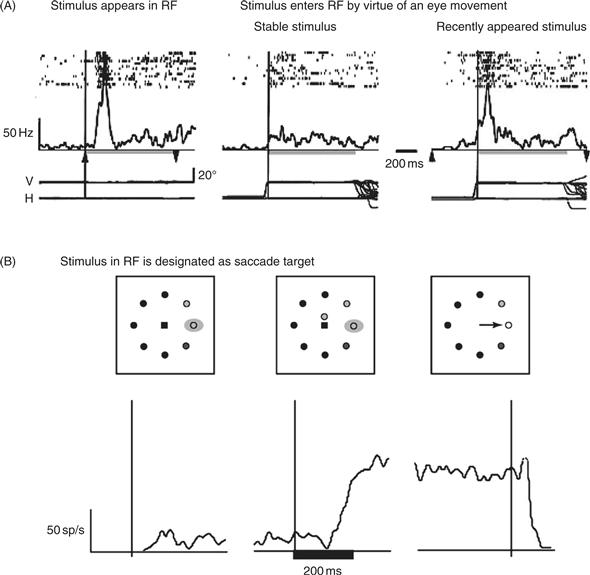

Unlike the maps found in early visual areas (including the retina itself), the maps in LIP and FEF do not indicate the mere presence of an object in the world but respond selectively to objects that are likely to be attended, whether by virtue of their physical properties (conspicuity) or behavioral relevance. This was shown in a task where monkeys made eye movements (saccades) across a stable visual background, such that inconspicuous objects moved in and out of a neuron’s receptive field (Gottlieb et al., 1998). LIP neurons responded little if a stable object entered their receptive field (Fig. 46.6A, center). However, they responded strongly if the identical object was presented with an abrupt onset that rendered it salient. Neural responses are strong if the object appears directly in the receptive field, as is commonly the case in physiological experiments, but also if the object appears elsewhere and enters the receptive field a fraction of a second later by virtue of the subject’s eye movement (Fig. 46.6A, right). An additional experiment showed that neurons also respond to stimuli that are not physically salient but become relevant, for example, by being selected as the target of the next saccade (Fig. 46.6B). These findings suggest that LIP integrates bottom-up and top-down factors relevant for attention and encodes the priority of objects in the world. A similar proposal has been advanced for the FEF, and may also hold for area 7a, a parietal area adjacent to LIP (Constantinidis & Steinmetz, 2001).

Figure 46.6 LIP neurons encode a spatial salience representation. (A) LIP neurons are sensitive to the physical conspicuity of objects in the receptive field. An LIP neuron responds strongly when a visual stimulus is flashed in its receptive field (left). The same neuron does not respond if the physically identical stimulus is stable in the world and enters the receptive field by virtue of the monkey’s eye movement (center). The neuron again responds if the stimulus entering the receptive field is rendered salient by flashing it on and off on each trial, before it enters the receptive field (right). In each panel, rasters show the times of individual action potentials relative to stimulus onset (left) or the eye movement that brings the stimulus into the receptive field (center and right). Traces underneath the rasters show average firing rate. Bottom traces show the horizontal and vertical eye position. The rapid eye movement (saccade) that brings the stimulus into the receptive field is seen as the abrupt deflection in eye position traces. (B) LIP neurons are sensitive to the behavioral relevance of objects in the receptive field. Traces show average firing rate of an LIP neuron when the monkey is cued to make a saccade to a stimulus in the receptive field. In the left panel the monkey is fixating and a stable stimulus is in the receptive field. The neuron has a low firing rate. In the center panel the monkey receives a cue instructing him to make a saccade to the receptive field. The neuron gradually increases its response. In the right panel the monkey executes the instructed saccade. The neural response remains high until after the eye movement.

A subsequent experiment linked the selective responses in LIP with attention measured behaviorally, as a transient enhancement of perceptual sensitivity (Bisley & Goldberg, 2003). In this experiment monkeys were shown a brief visual stimulus whose location they had to remember for a later saccade. During the memory period, a salient but irrelevant distractor flashed in the visual field. The authors measured the locus of attention at different times and locations by using a secondary contrast discrimination task. Even though the eyes remained still during the memory period, attention shifted—moving to the distractor location immediately after the flash and back to the saccade target later during the delay period. These dynamics were encoded in the neural activity in LIP: attention was pinned at the distractor location for as long as the LIP response to the distractor exceeded that to the target, and flipped to the saccade goal when the balance of activity shifted in LIP. Thus, the selective visual responses in LIP are driven both by salient and task-relevant stimuli and reflect the momentary locus of attention. Similar correspondences are found for FEF neurons in visual search tasks where subjects must direct attention to one of many stimuli in a display (Thompson & Bichot, 2005).
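The relationship described above, in which the locus of attention follows whichever LIP response is larger at a given moment, can be sketched in a few lines. This is only an illustration: the function names, the time bins, and the firing rates are hypothetical and are not taken from the experiment.

```python
from typing import List, Optional


def attention_locus(target_rate: List[float],
                    distractor_rate: List[float]) -> List[str]:
    """Label each time bin with the location whose LIP response is larger."""
    if len(target_rate) != len(distractor_rate):
        raise ValueError("rate traces must have the same length")
    return ["distractor" if d > t else "target"
            for t, d in zip(target_rate, distractor_rate)]


def crossover_bin(target_rate: List[float],
                  distractor_rate: List[float]) -> Optional[int]:
    """First bin at which the target regains the lead after the distractor led."""
    seen_distractor = False
    for i, where in enumerate(attention_locus(target_rate, distractor_rate)):
        if where == "distractor":
            seen_distractor = True
        elif seen_distractor:
            return i
    return None


# Hypothetical firing rates (spikes/s) in successive bins around the flash.
target = [40.0, 38.0, 36.0, 35.0, 35.0, 35.0]
distractor = [10.0, 80.0, 60.0, 40.0, 30.0, 20.0]

print(attention_locus(target, distractor))
print(crossover_bin(target, distractor))
```

In this toy trace the predicted locus moves to the distractor right after the flash and returns to the saccade target once the distractor response decays below the target response, which is the qualitative pattern the paragraph describes.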

Direct evidence that LIP and FEF are involved in attention comes from experiments that chemically inactivate or microstimulate these areas and measure the effects of these manipulations on attention. Inactivation of the LIP or FEF using microinjections of muscimol (a GABA-A receptor agonist that temporarily silences spiking activity at the injection site) produces deficits in target selection during visual search. In the FEF, subthreshold microstimulation biases covert attention toward the location represented by the neurons at the stimulation site. Microstimulation of the FEF also modulates neural activity at corresponding locations in area V4, consistent with the idea that FEF neurons send top-down feedback to earlier visual areas.

In addition to modulating visual processing, LIP and FEF influence saccade motor planning through their descending connections to the superior colliculus and brainstem oculomotor centers. Microstimulation and neural recordings suggest that these areas form a continuum of visuo-motor transformation, with LIP having primarily a visual role and the FEF being related to both visual selection and oculomotor output. Consistent with this idea, thresholds for electrically evoking saccades are high in LIP but much lower in the FEF. This suggests that LIP is more closely related to visual selection whereas FEF has a stronger influence on eye movement output. Similarly, neural recording studies show that LIP neurons are more closely related to visual selection whereas FEF encodes both visual selection and oculomotor output. In these studies monkeys are trained to make eye movements toward blank spatial locations, which may be opposite a salient visual cue, dissociating visual and motor selection. Such tasks show that LIP neurons predominantly encode stimulus location but provide scant motor information, responding only weakly before nonvisually guided saccades. The FEF contains a population of neurons that are predominantly sensitive to visual cues, but also contains neurons with mixed visuo-motor properties and a small class of neurons—“movement neurons”—that are tightly coupled with saccade output. These neurons respond to saccades but not to simple visual inputs and ramp their responses to a constant level (threshold) prior to a saccade, suggesting that they reflect a fixed saccade threshold. These findings suggest that the brain implements a continuous transformation starting with a stage of visual selection mediated by visual and visuo-motor neurons in LIP and FEF, and culminating in motor selection mediated by movement neurons in FEF.
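The idea that movement neurons ramp to a fixed threshold before a saccade can be illustrated with a very simple rise-to-threshold sketch. The linear ramp, the function name, and all parameter values below are assumptions chosen for illustration; they are not fitted to recorded data and are not presented as the model used in the studies cited here.

```python
from typing import Optional


def time_to_threshold(baseline: float, rate: float,
                      threshold: float) -> Optional[float]:
    """Time (ms) for a linearly ramping response to reach a fixed threshold.

    baseline  -- firing rate at ramp onset (spikes/s)
    rate      -- slope of the ramp (spikes/s per ms); assumed constant
    threshold -- fixed firing rate at which the saccade is triggered
    Returns None if the ramp never reaches threshold.
    """
    if baseline >= threshold:
        return 0.0
    if rate <= 0:
        return None
    return (threshold - baseline) / rate


# Same threshold, different ramp slopes: only the latency changes.
fast = time_to_threshold(baseline=10.0, rate=0.5, threshold=100.0)
slow = time_to_threshold(baseline=10.0, rate=0.25, threshold=100.0)
print(fast, slow)
```

Under these assumptions, variation in saccade latency comes entirely from variation in the slope of the ramp, while the activity level at saccade onset stays constant.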

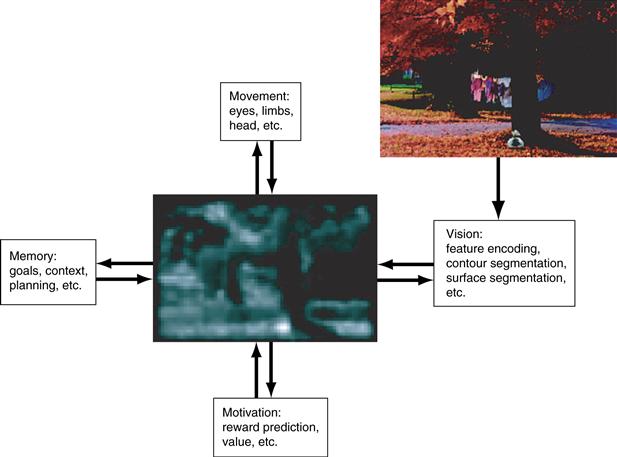

The properties of LIP and FEF neurons described above have lent support to the idea that the attentional system uses a central stage of visual selection, a “salience map” or “priority map” that provides a common currency for directing attention (Fig. 46.7). Computational vision models show that systems that contain such a centralized architecture are more efficient than those implementing a more distributed selection in feature-specific visual areas. In the latter case attention would be allocated through competition between saliency signals in individual feature domains: for example, a color-selective area may tend to direct attention to the location of a color pop-out, competing with a motion-selective area that attracts attention to a location with salient motion. By allowing these separate feature signals to converge on a common stage, the brain can more efficiently coordinate visual selection as well as coordinate selection with action.
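A minimal sketch of the centralized architecture is given here, assuming that feature-specific salience maps are combined by a weighted sum and that attention goes to the location with the highest combined value. The weighted sum, the task weights, and the map values are illustrative assumptions, not a description of a specific published model.

```python
from typing import Dict, List, Tuple

Map = List[List[float]]


def priority_map(feature_maps: Dict[str, Map],
                 weights: Dict[str, float]) -> Map:
    """Weighted sum of feature-specific salience maps of identical shape."""
    names = list(feature_maps)
    rows = len(feature_maps[names[0]])
    cols = len(feature_maps[names[0]][0])
    combined = [[0.0] * cols for _ in range(rows)]
    for name in names:
        w = weights.get(name, 1.0)  # unlisted features get weight 1.0
        for r in range(rows):
            for c in range(cols):
                combined[r][c] += w * feature_maps[name][r][c]
    return combined


def winner(pmap: Map) -> Tuple[int, int]:
    """Location (row, col) with the highest priority (winner-take-all)."""
    locations = [(r, c) for r in range(len(pmap)) for c in range(len(pmap[0]))]
    return max(locations, key=lambda rc: pmap[rc[0]][rc[1]])


# Hypothetical maps: a color pop-out at (0, 2) and salient motion at (1, 0).
maps = {
    "color":  [[0.1, 0.1, 0.9], [0.1, 0.1, 0.1]],
    "motion": [[0.1, 0.1, 0.1], [0.8, 0.1, 0.1]],
}

print(winner(priority_map(maps, {"color": 1.0, "motion": 1.0})))
print(winner(priority_map(maps, {"color": 0.5, "motion": 2.0})))
```

With equal weights the color pop-out wins; when the hypothetical task weights favor motion, the same stimulus display yields a different selected location. A single converged map is what allows one set of top-down weights to resolve the competition.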

Figure 46.7 A salience representation can be viewed as an intermediate representation that interacts with multiple behavioral systems (visual, motor, cognitive, and motivational) and fashions a unified signal of “salience” based on multiple task demands. By virtue of feedback connections to these systems the salience map can help coordinate output processing in multiple “task-relevant areas.”

Experiments in recent years have begun to address a related and equally important question: namely, how are attention and eye movements coordinated with an ongoing task? How do neurons in FEF and LIP “know” which of the many available stimuli is related to one’s current actions? The answer that is emerging from recent work suggests that this learning may be mediated by feedback regarding actions and reward to selective visual representations.

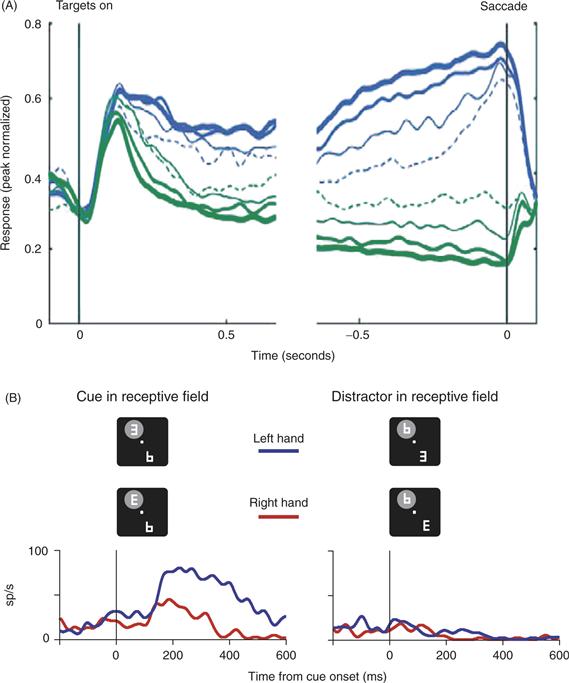

Studies from multiple laboratories show that the visual selection response in LIP is not stereotyped but is shaped by the task associations of the attended stimulus. Among the factors modulating LIP activity are the shape or color of a search target, the statistical relationship between a target and a salient stimulus, and the category of a visual cue in a categorization task. Figure 46.8B shows an example of modulatory feedback related to the motor response with which a monkey reported a perceptual discrimination (Oristaglio, Schneider, Balan, & Gottlieb, 2006). Monkeys were trained to find a cue in an array of distractors and, without shifting gaze to the cue, report the orientation of the cue with a manual release. Monkeys reported whether the cue was right- or left-facing by releasing a bar grasped with, respectively, their right or left hand. LIP neurons responded much more strongly to a cue, relative to a distractor, in their receptive fields (Fig. 46.8B, left vs. right panels). Thus, neurons encoded the location of the cue, as would be expected based on previous work. Unexpectedly, however, the cue selection response was modulated by the monkey’s choice of limb. The neuron illustrated in Figure 46.8B responded more strongly to the cue in its receptive field if the monkey released the left bar than if she released the right bar, while other neurons showed the opposite preference, responding more for right than for left bar release. This finding was surprising because the motor response was nontargeting (not directed to a particular spatial location) and was performed outside the monkey’s field of view. Thus, the visual selection signal in LIP was modulated by a nonspatial variable, the limb that was associated with the selected visual cue. While the significance of this nonspatial signal is not yet fully understood, an intriguing possibility is that it may represent feedback through which the brain learns the relation of the cue to a particular motor output and, hence, its “relevance” in the behavioral task.

Figure 46.8 (A) LIP responses are modulated by reward probability. Monkeys performed a dynamic foraging task in which they tracked the changing reward values of each of two saccade targets. Traces represent population responses for neurons with significant effects of reward probability. Blue traces represent saccades toward the receptive field, and green traces represent saccades opposite the receptive field. Traces are further subdivided according to local fractional income (reward probability during the past few trials): solid thick lines, 0.75–1.0; solid medium lines, 0.5–0.75; solid thin lines, 0.25–0.5; dotted thin lines, 0–0.25. Activity for saccades toward the receptive field increased, and that for saccades away decreased, as a function of local fractional income, resulting in more reliable spatial selectivity (difference between the two saccade directions) with increasing reward probability. (B) LIP neurons are modulated by limb motor planning. Monkeys viewed a display containing a cue (a letter “E”) and several distracters. Without moving gaze from straight ahead, they reported the orientation of the cue by releasing one of two bars grasped with their hands. The neuron illustrated here responded much more strongly when the cue rather than a distracter appeared in its receptive field (left versus right panels). This cue-related response was modulated by the manual release: the neuron responded much more when the monkey released the left than when she released the right bar (blue versus red traces). Limb modulations did not always accompany a bar release, but were found only when the attended cue was in the receptive field. Bar-release latencies were on the order of 400–500 ms.

A particularly significant source of feedback carries information about expected reward. An illustration of reward effects in LIP comes from an experiment in which monkeys were free to choose between two saccade directions (either toward or away from the receptive field), and the probability that any one direction would be rewarded was systematically varied (Fig. 46.8A; Sugrue, Corrado, & Newsome, 2004). Monkeys apportioned their choices in relation to reward probability, tending to choose the target with the higher probability of reward. LIP responses to the selected target increased as a function of reward probability (blue traces). A later experiment showed that reward expectancy modulates responses to salient stimuli even if these are not saccade targets, consistent with the predominantly visual nature of the selective response in LIP (Peck, Jangraw, Suzuki, Efem, & Gottlieb, 2009). This suggests that priority maps may associate stimuli with rewards, possibly guiding attentional allocation so as to optimize performance in a task.

Attention Increases Neuronal Responses and Boosts the Clarity of Signals Generated by Neurons in Parts of the Visual System Devoted to Processing Information about Objects

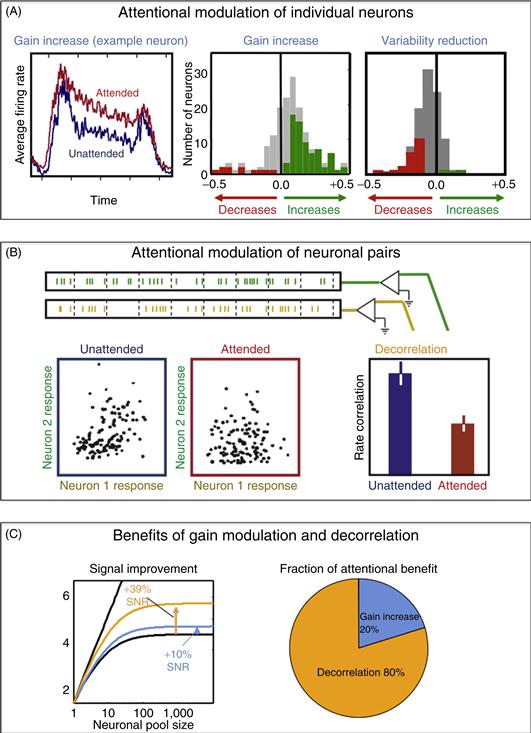

Consistent with the previously mentioned functional imaging studies, single-unit recording studies in the visual cortices have found that when attention is directed to a single stimulus in the receptive field, there is often an increase in the firing rates of neurons that respond to the attended stimulus (Reynolds & Chelazzi, 2004). This is illustrated in Figure 46.9A. On the left is the average firing rate of an individual neuron recorded in macaque area V4. The neuron’s response was higher when the monkey attended to the stimulus within the neuron’s receptive field (red line) than when attention was directed away to a distant stimulus (blue). This attention-dependent increase in mean firing rate is typically observed when attention is directed toward a single stimulus within the receptive field, as shown in the middle figure of A, which shows the distribution of attentional modulation across the population, with positive values corresponding to neurons exhibiting attention-dependent increases in mean response and negative values corresponding to reductions. Gray bars indicate neurons whose mean firing rates were not significantly modulated by attention. In addition to this modulation of mean firing rate, attention also reduces the variability of the neuronal response over time (Mitchell, Sundberg, & Reynolds, 2007). This is illustrated in the rightmost figure of A. Here, positive values correspond to neurons whose responses became more variable (“noisier”) when attention was directed toward the stimulus within the receptive field, and negative values, to neurons whose responses became less variable.

Figure 46.9 Attention increases the mean firing rates of individual neurons and reduces the degree to which their firing rates covary over time. (A) Attention-dependent increases in firing rate. (B) Attention-dependent reductions in response covariation. (C) Under the assumption that downstream neurons pool the signals of the afferent neuronal population, observed levels of attention-dependent decorrelation of low-frequency (<10 Hz) fluctuations in firing rate result in increases in signal quality four times as large as the improvement attributable to increases in firing rate.

How much does this reduction in variability translate into improved signaling across a neuronal population? This depends on whether the variability of neuronal responses that is reduced by attention is correlated across the neuronal population. Variability that is uncorrelated can be averaged away when the population responses are pooled by a downstream neuron, but correlated variability cannot be averaged out. Cortical neuronal responses are typically somewhat correlated with one another (Harris & Thiele, 2011), as shown in Figure 46.9B. Here, neuronal responses were recorded simultaneously from two neurons, and the spiking responses were summed over brief time intervals. The resulting spike counts for a pair of V4 neurons are shown in the blue panel on the left. The responses of the two neurons were correlated, with stronger responses in Neuron 1 typically being accompanied by stronger responses in Neuron 2. This correlation was substantially reduced when attention was directed toward the stimulus that fell within the two neurons’ receptive fields, as illustrated in the red panel, center. Across the population, this firing rate correlation was diminished by approximately one-half, as shown in the panel on the right (Mitchell, Sundberg, & Reynolds, 2009).
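The spike-count correlation described above can be illustrated with a small simulation. This is a minimal sketch, not the analysis used in the cited studies: it assumes each neuron's count on a trial is a fixed mean plus a shared Gaussian fluctuation plus a private Gaussian fluctuation, and the function names (`simulate_pair`, `pearson`) and all numerical values are hypothetical, chosen only so that the correlation falls by roughly one-half between the two conditions.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def simulate_pair(n_trials, shared_sd, private_sd, mean_count=20.0, seed=0):
    """Simulate trial-by-trial 'spike counts' for two neurons.

    Assumption (illustrative only): counts are continuous Gaussian values,
    and both neurons receive the same shared fluctuation on each trial.
    """
    rng = random.Random(seed)
    neuron1, neuron2 = [], []
    for _ in range(n_trials):
        shared = rng.gauss(0.0, shared_sd)
        neuron1.append(mean_count + shared + rng.gauss(0.0, private_sd))
        neuron2.append(mean_count + shared + rng.gauss(0.0, private_sd))
    return neuron1, neuron2


# Hypothetical parameters: the expected correlation is
# shared_sd**2 / (shared_sd**2 + private_sd**2).
unattended = simulate_pair(20000, shared_sd=1.5, private_sd=3.0, seed=1)
attended = simulate_pair(20000, shared_sd=1.0, private_sd=3.0, seed=2)
print("unattended r:", round(pearson(*unattended), 3))
print("attended r:  ", round(pearson(*attended), 3))
```

In this toy model, shrinking only the shared fluctuation lowers the correlation between the two neurons while leaving most of each neuron's private variability intact.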

So attention modulates the mean firing rates of neurons (a change in their gain) and simultaneously decorrelates the moment-to-moment variability in individual neurons’ firing rates. Theoretical calculations allow us to estimate the improvement in signal quality (signal-to-noise ratio) resulting from these increases in the gain and reductions in correlation of the afferent responses (Zohary, Shadlen, & Newsome, 1994). This is illustrated in the left figure of Figure 46.9C, which shows the signal-to-noise ratio of the pooled signal as a function of the number of neurons in the pool. If the interneuronal variability is uncorrelated, the signal-to-noise ratio continues to grow as neurons are added to the pool, in proportion to the square root of their number, a relationship that plots as a straight line on logarithmic axes (straight line). If neuronal variability is correlated across neurons, this imposes an upper bound on the signal-to-noise ratio of the pooled signal. This is shown in the saturating black curve, which was calculated based on correlations actually observed in macaque V4 neurons when attention was directed away from the stimulus. The blue line shows the modest increase in asymptotic signal quality that results from the measured attention-dependent increase in gain. The yellow line shows the comparatively larger increase in signal quality that results from observed attention-dependent reductions in low-frequency response correlations (Mitchell et al., 2009). The improvements due to gain and decorrelation are shown in the figure to the right. Decorrelation accounts for the lion’s share of attention-dependent improvement in signal quality (Cohen & Maunsell, 2009; Mitchell et al., 2009).
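The pooling argument can be made concrete with a short calculation. The sketch below is an illustration under stated assumptions, not a reproduction of the published analysis: it assumes a downstream neuron averages N afferents that all have the same mean response, the same standard deviation, and the same pairwise correlation r, in which case the variance of the average is (sd² / N)(1 + (N − 1) r). The function names and the example values (a 10% gain increase, a correlation falling from 0.2 to 0.1) are hypothetical.

```python
import math


def pooled_snr(n, mean, sd, r):
    """Signal-to-noise ratio of the average of n equally correlated neurons."""
    variance_of_average = (sd ** 2 / n) * (1.0 + (n - 1) * r)
    return mean / math.sqrt(variance_of_average)


def asymptotic_snr(mean, sd, r):
    """Upper bound on pooled SNR as n grows without limit (requires r > 0)."""
    return mean / (sd * math.sqrt(r))


# Hypothetical single-neuron statistics (arbitrary units).
mean, sd = 1.0, 1.0

for n in (1, 10, 100, 1000):
    uncorrelated = pooled_snr(n, mean, sd, r=0.0)   # grows as sqrt(n)
    correlated = pooled_snr(n, mean, sd, r=0.2)     # saturates
    print(n, round(uncorrelated, 2), round(correlated, 2))

baseline = asymptotic_snr(mean, sd, r=0.2)
gain_only = asymptotic_snr(1.1 * mean, sd, r=0.2)   # assumed 10% gain increase
decorrelated = asymptotic_snr(mean, sd, r=0.1)      # assumed halving of r
print("gain improvement:         ", round(gain_only / baseline, 3))
print("decorrelation improvement:", round(decorrelated / baseline, 3))
```

Under these assumptions, the asymptotic signal-to-noise ratio scales with the mean response but with the inverse square root of the correlation, so halving the correlation raises the ceiling by a factor of √2 regardless of pool size.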

Microstimulation studies support the conclusion that attention-dependent changes in firing rate within the visual cortices result from feedback signals that are generated in the fronto-parietal attentional control network. Moore and Armstrong (2003) first determined the location in the visual field to which stimulating current applied at a site in FEF would move the eyes, the “movement field” of the stimulation site. They then reduced the stimulating current to a level too low to evoke an eye movement, and observed the effect of this stimulation on the responses of neurons in visual area V4. They found that FEF stimulation caused V4 neurons to respond more robustly to a stimulus when the stimulus appeared at the movement field location and that location fell within the V4 neuron’s receptive field (Fig. 46.10A). Figure 46.10B shows responses of a single V4 neuron with and without stimulation. The time course of stimulus presentation (RF stim) and current injection (FEF stim) are illustrated at the top of the panel. The neuronal responses in the two conditions are indicated at the bottom of the figure. Current was injected 500 ms after the appearance of the stimulus, at which point the stimulus-evoked response had begun to diminish in strength. The average response on microstimulation trials (gray) was elevated following electrical stimulation, relative to nonstimulation trials (black).

Figure 46.10 Electrical stimulation of FEF increases neuronal responsiveness in V4. (A) Current was injected into FEF while neuronal activity was recorded from V4. (B) The visual stimulus appeared in the receptive field for one second (RF stim). 500 ms after the onset of the visual stimulus, a low level of current was applied to a site in FEF for 50 ms (FEF stim). The response of a single V4 neuron with (gray) and without (black) FEF microstimulation appears below. The apparent gap in response reflects the brief period during which V4 recording was paused while current was injected into FEF, when, for technical reasons, the stimulating current interfered with the ability to record action potentials. Following stimulation, the V4 neuron had elevated responses on trials when FEF was electrically stimulated, as compared to trials without FEF stimulation.

Attention Modulates Neural Responses in the Lateral Geniculate Nucleus

The lateral geniculate nucleus (LGN) is the thalamic station in the retinocortical projection and traditionally has been viewed as the gateway to the visual cortex. In addition to retinal afferents, the LGN receives input from multiple sources including striate cortex, the thalamic reticular nucleus (TRN), and the brainstem. The LGN therefore represents the first stage in the visual pathway at which cortical top-down feedback signals could affect information processing.

It has proven difficult to study attentional response modulation in the LGN using single-cell physiology due to the small RF sizes of LGN neurons and the possible confound of small eye movements. Initially, several single-cell physiology studies failed to demonstrate attentional modulation in the LGN, supporting a notion that selective attention affects neural processing only at the cortical level. This notion was first revisited using fMRI in humans (O’Connor, Fukui, Pinsk, & Kastner, 2002). As in visual cortex, different modulatory effects of attention were found. Neural responses to attended visual stimuli were enhanced relative to the same stimuli when unattended. As in cortex, this effect of attentional response enhancement was shown to be spatially specific in the LGN. And directing attention to a location in the absence of visual stimulation and in anticipation of the stimulus onset increased neural baseline activity. Together, these studies indicate that the LGN appears to be the first stage in the processing of visual information that is modulated by attentional top-down signals.

These studies were well suited to comparing the magnitude of the attention effects across the visual system. The magnitude of all attention effects increased from early to more advanced processing levels along both the ventral and dorsal pathways of visual cortex. This is consistent with the idea that attention operates through top-down signals that are transmitted via cortico-cortical feedback connections in a hierarchical fashion. This idea is supported by single-cell recording studies, which have shown that attention effects in area TE of inferior temporal cortex have a latency of approximately 150 ms, whereas attention effects in V1 have a longer latency of approximately 230 ms. According to this account, one would predict smaller attention effects in the LGN than in striate cortex. Surprisingly, all attention effects tended to be larger in the LGN than in striate cortex. This finding suggests that attentional response modulation in the LGN is unlikely to be due solely to corticothalamic feedback from striate cortex and may be further influenced by additional sources of input. In addition to corticothalamic feedback projections from V1, which comprise about 30% of its modulatory input, the LGN receives another 30% of its modulatory input from each of the TRN and the parabrachial nucleus of the brainstem. This view has been corroborated by a recent physiology study (McAlonan, Cavanaugh, & Wurtz, 2008) that demonstrated concurrent response modulation in parvo- and magnocellular LGN and the TRN. Whereas the response amplitudes evoked by an attended stimulus increased relative to those evoked by unattended stimuli in the LGN, they decreased in the TRN, and the TRN modulation preceded that in the LGN, suggesting a release of inhibitory control of the TRN over the LGN. These effects occurred in the first 100 ms after stimulus onset. A second effect of attention on the LGN manifested 200 ms after stimulus onset and was independent of the TRN.
It is conceivable that this late response modulation reflects cortical feedback via V1. Given its afferent input the LGN may be in an ideal strategic position to serve as an early gatekeeper in attentional gain control.

The Visual Search Paradigm Has Been Used to Study the Role of Attention in Selecting Relevant Stimuli from within a Cluttered Visual Environment

So far this chapter has considered the situation when attention is cued to a location (or a feature) in the visual field. However, one is rarely told in advance to attend to a particular location. Normally, one needs to find a particular object in a complex world that is composed of a large number of stimuli. Psychologists have used a visual search paradigm to understand how attention selects behaviorally relevant stimuli out of a group of other stimuli.

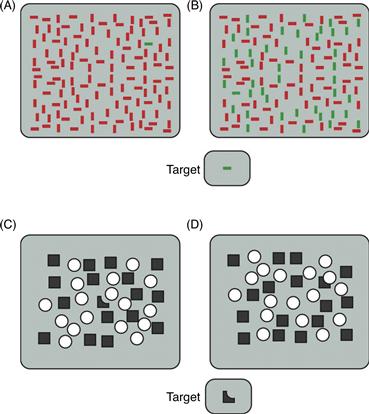

In a typical task, observers are asked to search among an array of stimuli and indicate whether a particular target is present in the array. This task is easier under some conditions than others. For example, it is easy to determine whether a horizontal green bar is present in Figure 46.11A. It takes longer to make this judgment for stimuli in Figure 46.11B. Treisman and Gelade (1980) found that searches for a target with a unique feature, like the one illustrated in Figure 46.11A, can be completed quickly regardless of the number of elements in the search array; the target seems to “pop out” of the search array. Such searches often are referred to as efficient. In contrast, Treisman and Gelade (1980) found that the amount of time required to find a target that is defined by conjunctions of elementary features (e.g., a horizontal green among horizontal reds and vertical greens, as in Fig. 46.11B) increases linearly with the number of elements in the array. Such searches often are referred to as inefficient. The amount of time added per item depends on stimulus conditions, but a rule of thumb is that each additional item in the array adds about 50 ms to the amount of time taken to locate the target. The fact that it takes time to locate the target in Figure 46.11B implies that the visual system is limited in capacity. If it were not, the target could be identified immediately by evaluating every element in the array simultaneously.
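The contrast between efficient and inefficient search can be summarized as a linear relation between search time and the number of items in the array. The sketch below is a toy illustration: the 50 ms per item slope is the rule of thumb given above, whereas the function name and the 400 ms baseline are hypothetical values chosen only for the example.

```python
def predicted_search_time_ms(set_size, base_ms=400.0, slope_ms_per_item=50.0):
    """Predicted time to locate a target as a linear function of array size.

    base_ms is an assumed constant cost (hypothetical value);
    slope_ms_per_item is the added time per array element.
    """
    return base_ms + slope_ms_per_item * set_size


for set_size in (4, 8, 16, 32):
    # Efficient (pop-out) search: slope near zero, so time is flat.
    pop_out = predicted_search_time_ms(set_size, slope_ms_per_item=0.0)
    # Inefficient (conjunction) search: about 50 ms added per item.
    conjunction = predicted_search_time_ms(set_size)
    print(set_size, pop_out, conjunction)
```

A slope near zero corresponds to pop-out, where array size does not matter, whereas a positive slope is the signature of a capacity-limited process.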

Figure 46.11 Efficient and inefficient visual searches. (A) Pop-out search. The horizontal green bar differs from all other array elements in a single feature, color. As a result, it pops out immediately, regardless of how many elements are in the array. (B) The same target is difficult to find when it is embedded among elements that cannot be differentiated from it on the basis of a single feature (color or orientation). (C) The dark target pops out among squares and circles. (D) When the same target is perceived to be a square occluded behind a circle, it is difficult to find among squares and circles. Because this arrangement of the array interferes with visual search, this suggests that integration of objects into wholes occurs prior to the activation of attentional mechanisms used in visual search. Thus, these mechanisms are evidently not necessary for completion.

Adapted from Rensink and Enns (1998).

Where Is the Computational Bottleneck as Revealed by Search Tasks?

Considerable debate exists regarding the locus and nature of the computational bottleneck that causes the capacity limitations that are revealed by visual search tasks. One influential theory, feature integration theory (FIT; Treisman & Gelade, 1980), proposes that capacity limitations occur at very early stages of cortical processing where “elementary” features are bound into coherent objects. This theory proposes that in the “pop-out” case, where the target differs from distracters in a single elemental feature, a search can be completed efficiently because there is no need to bind together different elementary features. Thus, a green target among red distracters (Fig. 46.11A) can be found in parallel by V1 neurons that respond to green but not red stimuli, without the need for visual attention. However, in a conjunction search, where each of the elementary target features (color and orientation) is shared with distracters, the observer must bind elementary features into coherent object representations before search can occur. According to this account, one cannot process multiple objects simultaneously because in order to avoid misconjoining features from different objects, attention selects features from one location at a time. Consistent with this idea, patient RM, who suffered damage to his parietal attentional control system, often misconjoins the shape and color of letters, even after viewing them for up to 10 seconds; that is, he mixes up which letters are presented in which colors.

However, the idea that limited capacity occurs at early stages of processing has been challenged. For example, some studies suggest that parts of objects are integrated into wholes prior to the application of attention. Consider the task of searching for the oddly shaped object appearing at the bottom of Figure 46.11 (adapted from Rensink & Enns, 1998). This odd shape pops out from among the squares and circles in Figure 46.11C, but it is harder to find the same shape in Figure 46.11D. The difference is that in Figure 46.11D, the oddly shaped part appears to be a square that is occluded by a superimposed circle; that is, the visual system completes the shape of the occluded square, hiding it among the other squares. Because this completion stops the square from popping out, the completion operation must occur prior to visual search.

Further evidence that features can be integrated preattentively into objects comes from the finding that when an observer attends to one feature of an object (e.g., its orientation), other features of the object are selected automatically as well. In a now classic study, Duncan (1984) found that observers could easily make simultaneous judgments about two features of the same object (e.g., its orientation and whether or not it contained a gap). Making the second judgment did not interfere with the first judgment. However, observers were severely impaired when they simultaneously judged the same two features, one on each of two different stimuli. This finding suggests that when attention is directed to one feature of an object, all the features that make up the object can automatically be selected together because the features are already linked together into objects. These sorts of observations suggest that limited capacity results from information processing bottlenecks that occur relatively late in processing, after features are already integrated into wholes. One possibility is that the bottleneck occurs when objects enter a limited capacity working memory. Under this view, attention plays the role of selecting which objects pass through the bottleneck.

These two perspectives are not mutually exclusive. Some feature integration may occur preattentively (e.g., Rensink & Enns, 1998), but other types of stimulus integration might require spatial selection. As described earlier, attention has been found to modulate neural activity at all stages of visual processing including early stages (Motter, 1993; O’Connor et al., 2002), where neuronal responses first show signs of grouping the features and parts of objects into wholes, as well as late stages, which are likely involved in selecting stimuli for storage in working memory. Thus, both early and late stages of processing may act as limited capacity bottlenecks.

Neuronal Receptive Fields Are a Possible Neural Correlate of Limited Capacity

Extracellular recording studies of attention in awake, behaving monkeys have revealed that the receptive fields of individual neurons might contribute to the limitation in visual capacity. As visual information traverses the successive cortical areas of the ventral stream that underlie object recognition (Ungerleider & Mishkin, 1982), the sizes of receptive fields increase from less than a degree of visual arc in primary visual cortex (area V1) to about 20° of visual arc in area TE, the last purely visual area in the ventral visual processing stream (see Chapter 44). Similar increases in receptive field size are observed in the dorsal visual processing stream. In a typical scene, such large receptive fields will contain many different objects. Therefore, a likely explanation for why one cannot process many different objects in a scene simultaneously is that neurons, whose signals are limited in bandwidth, cannot simultaneously send signals about all the stimuli inside their receptive fields. This idea implies that processing limitations exist at all levels of processing but that they become more pronounced in higher order visual areas, where receptive field sizes are larger. How then do neurons transmit signals about behaviorally relevant stimuli in their receptive fields?

One proposal is that the selective processing of behaviorally relevant stimuli is accomplished by two interacting mechanisms: competition among potentially relevant stimuli and biases that determine the outcome of this competition. For example, when an observer is asked to detect the appearance of a target at a particular location, this task is thought to activate spatially selective feedback signals in cortical areas, such as parts of the parietal and frontal cortex discussed earlier. These cortical areas provide a task-appropriate spatial reference frame and transmit signals to the extrastriate cortex, where they bias competition in favor of stimuli that appear at the attended location. Similarly, when an observer searches for an object in a cluttered scene, feature-selective feedback is thought to bias competition in favor of stimuli that share features in common with the searched-for object. When competition is resolved, the winning stimulus controls neuronal responses, and other stimuli are effectively filtered out of the visual stream.

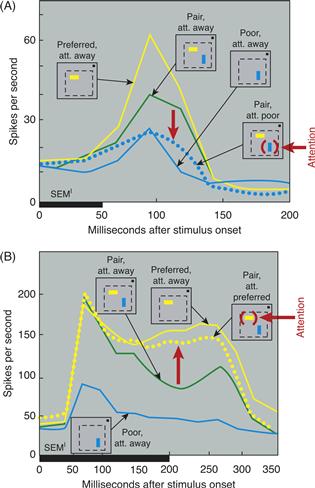

Studies in monkeys have compared the effect of attention on neuronal responses with single and multiple stimuli in the receptive field. Consistent with the proposal just outlined, larger changes in firing rate occur when multiple stimuli appear within the receptive field (and one stimulus must be attended), as compared to when the attended stimulus appears alone and there is no competition to be resolved (Moran & Desimone, 1985). According to the biased competition model just described, neurons that respond to a stimulus should be suppressed when a second stimulus is added that activates a competing population of neurons. To test this idea, pairs of stimuli were presented that activated two nearby populations of neurons (Reynolds, Chelazzi, & Desimone, 1999). Monkeys attended away from the receptive fields of the neurons. The first, “preferred” stimulus was chosen to be of a color and orientation that would strongly activate the recorded neuron. Then, a second, “poor” stimulus, which drove the recorded neuron only weakly but elicited a response in a nearby population of neurons, was added. As predicted, the addition of this second stimulus partially suppressed the responses of many neurons to the first stimulus. To test whether attentional feedback would resolve competition in favor of the neurons that responded to the attended stimulus, attention was then directed to the poor stimulus. As predicted, this often magnified the suppressive influence of the poor stimulus, further reducing the firing rates of many neurons (Fig. 46.12A). In a final experiment, attention was directed to the preferred stimulus. Consistent with the resolution of competition in favor of the neurons that responded to the preferred stimulus, directing attention to the preferred stimulus strongly reduced the suppressive effect of the poor stimulus (Fig. 46.12B).

Figure 46.12 Attention to one stimulus of a pair filters out the effect of the ignored stimulus. (A) The x-axis shows time (in milliseconds) from stimulus onset, and the thick horizontal bar indicates stimulus duration. Small iconic figures illustrate sensory conditions. Within each icon, the dotted line indicates the receptive field, and the small dot represents the fixation point. The location of attention inside the receptive field is indicated in red. Attention was directed away from the receptive field in all but one condition. The preferred stimulus is indicated by a horizontal yellow bar and the poor stimulus by a vertical blue bar. In fact, the identity of both stimuli varied from cell to cell. The yellow line shows the response of a V2 neuron to the preferred stimulus. The solid blue line shows the response to the poor stimulus. The green line shows the response to the pair. The addition of the poor stimulus suppressed the response to the preferred stimulus. Attention to the poor stimulus (red arrow) magnified its suppressive effect and drove the response down to a level (dotted blue line) that was similar to the response elicited by the poor stimulus alone. (B) Response of a second V2 neuron. The format is the same as in A. As in the neuron above, the response to the preferred stimulus was suppressed by the addition of the poor stimulus. Attention directed to the preferred stimulus filtered out this suppression, returning the neuron to a response (dotted yellow line) similar to the response that was elicited when the preferred stimulus appeared alone inside the RF.

Adapted from Reynolds et al. (1999).

These findings, and related studies conducted over the past decade, have led to increasingly refined models of attentional selection that ground our understanding of attention in the automatic gain control circuitry that adjusts visual sensitivity in response to variation in the strength of sensory input (Reynolds & Chelazzi, 2004). Gain control is necessitated by the enormous range of stimulus intensities (luminance, luminance contrast, and color contrast, for example) that occur within the visual environment. Take, for example, the change in the number of photons that enter the eye. This varies a millionfold from a moonlit night to a sunny day. Evolution has endowed us with a variety of automatic gain control mechanisms, which, together, help us to remain sensitive to subtle changes in sensory input in the face of such enormous variation in intensity. These mechanisms range from the pupillary response to gain control mechanisms within the retina, the lateral geniculate nucleus, and the visual cortices. Decades of research in anesthetized animals have elucidated these neural mechanisms, and it appears that the attentional system has co-opted some of them, including suppressive circuitry within the striate and extrastriate visual cortices. Models in which these gain control circuits are modulated by feedback from attentional control centers have proven able to account for attention-dependent changes in sensitivity and for competitive selection of task-relevant stimuli from among task-irrelevant distracters (Reynolds et al., 1999; Reynolds & Heeger, 2009). It thus appears that while automatic gain control mechanisms may originally have evolved to act in response to changes in the external environment, they also play a key role in endogenously generated attentional selection.
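The qualitative pattern of the paired-stimulus experiments described above can be captured by treating the response to a pair as a weighted average of the responses to each stimulus alone, with attention increasing the weight of the attended stimulus. The sketch below is a deliberately simplified illustration of that idea, not the equations of the published models; the function name, the firing rates, and the fivefold attentional weight are all hypothetical.

```python
def pair_response(r_pref, r_poor, w_pref=1.0, w_poor=1.0):
    """Response to a stimulus pair as a weighted average of single-stimulus responses.

    r_pref, r_poor: responses (spikes/s) to each stimulus presented alone.
    w_pref, w_poor: relative weights; attention is modeled (as an assumption)
    by increasing the weight of the attended stimulus.
    """
    return (w_pref * r_pref + w_poor * r_poor) / (w_pref + w_poor)


# Hypothetical single-stimulus responses in spikes/s.
r_pref, r_poor = 60.0, 10.0

attend_away = pair_response(r_pref, r_poor)               # 35.0: poor stimulus suppresses
attend_poor = pair_response(r_pref, r_poor, w_poor=5.0)   # pulled toward r_poor
attend_pref = pair_response(r_pref, r_poor, w_pref=5.0)   # pulled toward r_pref

print("attend away:     ", attend_away)
print("attend poor:     ", round(attend_poor, 1))
print("attend preferred:", round(attend_pref, 1))
```

With equal weights the pair response falls between the two single-stimulus responses; raising the weight of the poor stimulus drives it toward the poor-alone response, and raising the weight of the preferred stimulus restores it toward the preferred-alone response.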

Filtering of Unwanted Information in Humans

Functional brain imaging studies suggest that in the human visual cortex, just as in the monkey cortex, mechanisms exist such that multiple stimuli compete for neural representation and unwanted information can be filtered out (Kastner, De Weerd, Desimone, & Ungerleider, 1998). In one fMRI study, subjects maintained fixation while they were presented with colorful visual stimuli in four nearby locations in the periphery of the visual field (Kastner et al., 1998). Stimuli were presented under two different conditions. In a sequential condition, a single stimulus appeared in one of the four peripheral locations, then another appeared in a different location, and so on, until each of the four stimuli had been presented in the four different locations. In a simultaneous condition, the same four stimuli appeared in the same four locations, but they were presented at the same time. Thus, physical stimuli were identical in the two conditions except that in the second case, all four stimuli appeared simultaneously, which should encourage competitive interactions among the stimuli. The subjects’ task was to count letters at fixation, thereby ignoring the peripheral stimulus presentations. Activation of V1 and ventral stream extrastriate areas V2 to TEO was found under both presentation conditions. Although the fMRI signal was similar in the two presentation conditions in V1, activation was reduced in the simultaneous condition compared to the sequential condition in V2. This reduction in activity was especially pronounced in V4 and TEO. The most straightforward interpretation of this result is that simultaneously presented stimuli interacted in a mutually suppressive way. This sensory suppression effect may be a neural correlate of competition in the human visual cortex. Importantly, the suppression effects appeared to be scaled to the receptive field size of neurons within visual cortical areas; that is, the effects were larger in areas with larger receptive fields. 
This observation suggests that, as in the monkey visual cortex, competitive interactions occur most strongly at the level of the receptive field (Kastner et al., 1998).

The effects of spatially directed attention on multiple, competing visual stimuli have been studied in a variation of the same paradigm (Kastner et al., 1998). In addition to the two different visual presentation conditions, sequential and simultaneous, two different attentional conditions were tested, unattended and attended. During the unattended condition, attention was directed away from the visual display by having subjects count the occurrences of letters at the fixation point. In the attended condition, subjects were instructed to attend covertly to the stimulus location that was closest to the fixation point and to count the occurrences of one of the four stimuli. The attended condition led to greater increases in fMRI signals to simultaneously presented stimuli than to sequentially presented stimuli. Thus, in this case, attention partially cancelled out the suppressive interactions among competing stimuli. The magnitude of the attentional effect was greater where the suppressive interactions among stimuli had been strongest, such that the strongest reduction of suppression occurred in areas V4 and TEO. These findings support the idea that directed attention enhances information processing of stimuli at attended locations by counteracting the suppression induced by nearby stimuli that are competing for limited processing resources. In this way, unwanted distracting information is filtered out effectively.

Unwanted information can be filtered out effectively not only by top-down mechanisms that resolve competitive interactions among multiple objects but also by bottom-up mechanisms that are defined by the context of the stimuli. As we have seen earlier, if a salient stimulus is present in a cluttered scene, it will be effortlessly and quickly detected regardless of the number of distracters, suggesting that competition is biased in favor of the salient stimulus. It has been shown that bottom-up influences related to the stimulus context of a visual display, such as a single salient stimulus that pops out from a homogeneous background or several stimuli that are grouped together by a Gestalt rule such as collinearity or similarity, can partially or even entirely overcome competitive interactions in a multiple-stimulus display. A pop-out display condition, in which a single item differed from the others, was compared to a heterogeneous display, in which all four stimuli were different. Subjects were engaged in a difficult letter detection task at fixation and never attended to the pop-out or heterogeneous displays that were shown in the periphery of the visual field. Competitive interactions among multiple stimuli were eliminated in area V4 when the stimuli were presented in the context of pop-out displays relative to heterogeneous displays, indicating that competition was biased in favor of the salient stimulus in a bottom-up, stimulus-context-dependent fashion. These pop-out effects appeared to originate from area V1, where the strongest responses to pop-out displays were found. The effects of pop-out on competitive interactions among multiple stimuli appeared to operate in an automatic fashion, that is, independently of attentional top-down control.
Taken together, the studies reviewed in this section indicate that both top-down attention and bottom-up stimulus-driven mechanisms are critical in resolving competitive interactions among multiple stimuli at intermediate processing levels of visual cortex, thereby filtering out unwanted information from cluttered visual scenes.

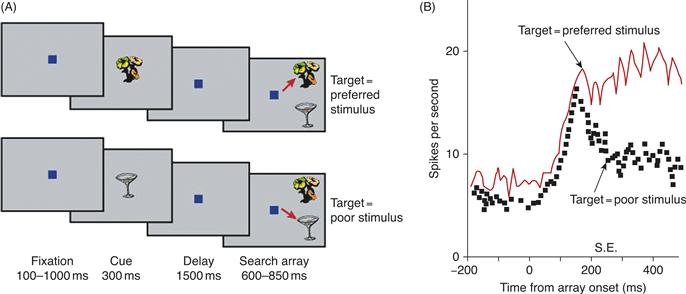

Competition Can Be Biased by Nonspatial Feedback

The findings just summarized support the proposal that competitive neural circuits can be biased by spatially selective feedback signals. As illustrated in Figure 46.13, feature-selective feedback can also bias competition. Responses of neurons in area TE were recorded as monkeys searched for a target (Chelazzi, Duncan, Miller, & Desimone, 1998). On each trial, the monkey viewed a cue stimulus that appeared at the fixation point. After a delay, an array of stimuli (the search array) appeared within the receptive fields of neurons in area TE. The monkey’s task was to indicate whether a target matching the cue stimulus was present in the array, by making a saccade to the target. Locations of the stimuli were selected at random so that the monkey could not know where the target would appear, if it appeared at all. During the delay period, many TE neurons had a higher baseline firing rate when the cue stimulus was a preferred stimulus for the cell than when it was a poor stimulus; that is, neurons that were tuned to respond to the target were activated while the monkey prepared to search for the target. An interesting possibility is that this activation might bias the visual system toward detecting the target object, just as spatially specific feedback is thought to bias the system toward a particular location. Consistent with this, within 150 to 200 ms after the search array appeared, the response of the neuron increased or decreased, depending on whether the target was, respectively, a preferred or a poor stimulus for the cell. That is, shortly after the appearance of the search array, the activity of the cell reflected the identity of the target stimulus and was no longer influenced by the nontarget stimulus, even though the nontarget stimulus was still present physically within its receptive field.

Figure 46.13 Responses of inferior temporal (IT) neurons during a memory-guided visual search. (A) Task. Monkeys fixated a spot on a computer screen and were shown a central cue (here, either the flower or the cup). After a delay, two (or more) stimuli appeared within the receptive field and the monkey had to saccade to the stimulus that had appeared earlier as the cue. Sometimes (top four images), the cue was a preferred stimulus for the cell (the flower) and the monkey had to saccade to the preferred stimulus. On separate trials (lower four images), the cue was a poor stimulus (the cup) and the monkey had to saccade to the poor stimulus. (B) Neuronal responses. During the delay period, IT neurons showed an elevated baseline activity that reflected the cue stored in memory. The spontaneous firing rate was higher on trials in which the cue was a preferred stimulus for the cell, relative to trials when the cue was a poor stimulus. After the search array appeared, the responses separated, increasing or decreasing depending on whether the cue was, respectively, a preferred or poor stimulus for the cell. This separation occurred well before the onset of the saccade, which is indicated by the vertical bar on the horizontal axis.

Biases in the form of nonspatial feedback are potent mechanisms to overcome the clutter imposed by natural environments. For example, when we search for objects in the real world, we may form concrete (e.g., looking for a familiar face in a crowd of people) or more abstract (e.g., looking for cars when crossing the street) search templates that facilitate the detection of behaviorally relevant information and help to filter out the distracting clutter. Recent studies on attentional selection from natural scenes have shown that the representation of objects in visual cortex is entirely task-dependent (Peelen et al., 2009). Subjects were asked to look for people or cars in a large set of briefly presented natural scenes. Task-relevant objects were represented in visual cortex, even at spatially unattended locations, while distracter objects were not processed up to the category level, even when spatially attended. Search templates for the target category were shown to preactivate object-selective cortex in category-specific ways. The strength of this preactivation could be used as a reliable predictor of task performance during the detection task. More generally, internally generated templates may provide powerful biases in generating predictions about sensory and behavioral context that enable flexible behavior.

Conclusions

The behavioral manifestations of attention are clearly not the result of operations in any one dedicated neural center. Selective attention affects neural activity at all levels of the visual system and other sensory systems, with the possible exception of neurons in the sensory periphery. A few structures located at the top of the visual system hierarchy in the parietal, frontal, and cingulate cortices are thought to be relatively more specialized for generating the signals that guide selective attention. These are among the areas that are thought to provide feedback signals that influence sensory processing in areas that are specialized for analyzing the physical properties of the sensory environment.

Answers are beginning to emerge to the question of why attention is necessary in the first place. A possible computational bottleneck may be built into all levels of the visual system in the form of spatial receptive fields. The fact that several objects within a certain spatial region can activate the same neuron reduces the ability of such neurons to transmit accurate information about each one of these objects. Automatic gain control mechanisms appear to have been co-opted by the attentional system to mediate selection of attended stimuli from among task-irrelevant distracters. Other bottlenecks will doubtless be revealed in future research.

References

1. Bisley JW, Goldberg ME. Neuronal activity in the lateral intraparietal area and spatial attention. Science. 2003;299(5603):81–86.

2. Bushnell MC, Goldberg ME, Robinson DL. Behavioral enhancement of visual responses in monkey cerebral cortex. I. Modulation in posterior parietal cortex related to selective visual attention. Journal of Neurophysiology. 1981;46:755–772.

3. Chelazzi L, Duncan J, Miller EK, Desimone R. Responses of neurons in inferior temporal cortex during memory-guided visual search. Journal of Neurophysiology. 1998;80(6):2918–2940.

4. Cohen MR, Maunsell JHR. Attention improves performance primarily by reducing interneuronal correlations. Nature Neuroscience. 2009;12:1594–1600.

5. Constantinidis C, Steinmetz M. Neuronal responses in area 7a to multiple stimulus displays: II. Responses are suppressed at the cued location. Cerebral Cortex. 2001;11(7):592–597.

6. Corbetta M, Miezin FM, Dobmeyer S, Shulman GL, Petersen SE. Attentional modulation of neural processing of shape, color, and velocity in humans. Science. 1990;248:1556–1559.

7. Duncan J. Selective attention and the organization of visual information. Journal of Experimental Psychology: General. 1984;113(4):501–517.

8. Egeth HE, Yantis S. Visual attention: Control, representation, and time course. Annual Review of Psychology. 1997;48:269–297.

9. Gottlieb JP, Kusunoki M, Goldberg ME. The representation of visual salience in monkey parietal cortex. Nature. 1998;391:481–484.

10. Harris KD, Thiele A. Cortical state and attention. Nature Reviews Neuroscience. 2011;12:509–523.

11. Heilman KM. Neglect and related disorders. In: Valenstein E, ed. Clinical neuropsychology. New York: Oxford University Press; 1979;268–307.

12. Heinze HJ, Mangun GR, Burchert W, et al. Combined spatial and temporal imaging of brain activity during visual selective attention in humans. Nature. 1994;372:543–546.

13. Hillyard SA, Anllo-Vento L. Event-related brain potentials in the study of visual selective attention. Proceedings of the National Academy of Sciences of the United States of America. 1998;95:781–787.

14. Itti L, Koch C. Computational modelling of visual attention. Nature Reviews Neuroscience. 2001;2(3):194–203.

15. Kastner S, Ungerleider LG. Mechanisms of visual attention in the human cortex. Annual Review of Neuroscience. 2000;23:315–342.

16. Kastner S, De Weerd P, Desimone R, Ungerleider LG. Mechanisms of directed attention in the human extrastriate cortex as revealed by functional MRI. Science. 1998;282:108–111.

17. Kastner S, Pinsk MA, De Weerd P, Desimone R, Ungerleider LG. Increased activity in human visual cortex during directed attention in the absence of visual stimulation. Neuron. 1999;22:751–761.

18. Kohn A, Smith MA. Stimulus dependence of neuronal correlation in primary visual cortex of the macaque. Journal of Neuroscience. 2005;25:3661–3673.

19. Kowler E, Anderson E, Dosher B, Blaser E. The role of attention in the programming of saccades. Vision Research. 1995;35(13):1897–1916.

20. Lovejoy LP, Krauzlis RJ. Inactivation of primate superior colliculus impairs covert selection for perceptual judgments. Nature Neuroscience. 2010;13(2):261–266.

21. McAlonan K, Cavanaugh J, Wurtz RH. Guarding the gateway to cortex with attention in visual thalamus. Nature. 2008;456:391–394.

22. Mesulam MM. Spatial attention and neglect: Parietal, frontal and cingulate contributions to the mental representation and attentional targeting of salient extrapersonal events. Philosophical Transactions of the Royal Society of London Series B, Biological Sciences. 1999;354(1387):1325–1346.

23. Mitchell JF, Sundberg KA, Reynolds JH. Differential attention-dependent response modulation across cell classes in macaque visual area V4. Neuron. 2007;55(1):131–141.

24. Mitchell JF, Sundberg KA, Reynolds JH. Spatial attention decorrelates intrinsic activity fluctuations in macaque area V4. Neuron. 2009;63:879–888.

25. Moore T, Armstrong KM. Selective gating of visual signals by microstimulation of frontal cortex. Nature. 2003;421:370–373.

26. Moran J, Desimone R. Selective attention gates visual processing in the extrastriate cortex. Science. 1985;229(4715):782–784.

27. Motter BC. Focal attention produces spatially selective processing in visual cortical areas V1, V2, and V4 in the presence of competing stimuli. Journal of Neurophysiology. 1993;70:909–919.

28. O’Connor DH, Fukui MM, Pinsk MA, Kastner S. Attention modulates responses in the human lateral geniculate nucleus. Nature Neuroscience. 2002;5:1203–1209.

29. Oristaglio J, Schneider DM, Balan PF, Gottlieb J. Integration of visuospatial and effector information during symbolically cued limb movements in monkey lateral intraparietal area. Journal of Neuroscience. 2006;26(32):8310–8319.

30. Peck CJ, Jangraw DC, Suzuki M, Efem R, Gottlieb J. Reward modulates attention independently of action value in posterior parietal cortex. Journal of Neuroscience. 2009;29(36):11182–11191.

31. Peelen MV, Fei-Fei L, Kastner S. Neural mechanisms of rapid natural scene categorization in human visual cortex. Nature. 2009;460:94–98.

32. Posner MI, Walker JA, Friedrich FJ, Rafal RD. Effects of parietal lobe injury on covert orienting of visual attention. Journal of Neuroscience. 1984;4:1863–1874.

33. Rensink RA, Enns JT. Early completion of occluded objects. Vision Research. 1998;38(15–16):2489–2505.

34. Reynolds JH, Chelazzi L. Attentional modulation of visual processing. Annual Review of Neuroscience. 2004;27:611–647.

35. Reynolds JH, Heeger DJ. The normalization model of attention. Neuron. 2009;61:168–185.

36. Reynolds JH, Chelazzi L, Desimone R. Competitive mechanisms subserve attention in macaque areas V2 and V4. Journal of Neuroscience. 1999;19(5):1736–1753.

37. Sugrue LP, Corrado GS, Newsome WT. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304(5678):1782–1787.

38. Thompson KG, Bichot NP. A visual salience map in the frontal eye field. Progress in Brain Research. 2005;147:251–262.

39. Treisman AM, Gelade G. A feature-integration theory of attention. Cognitive Psychology. 1980;12(1):97–136.

40. Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Mansfield RJW, Goodale MA, eds. The analysis of visual behavior. Cambridge, MA: MIT Press; 1982;549–586.

41. Vallar G. The anatomical basis of spatial neglect in humans. In: Robertson IH, Marshall JC, eds. Unilateral neglect: Clinical and experimental studies. Hillsdale, NJ: Lawrence Erlbaum; 1993;27–62.

42. Yantis S, Serences J. Neural mechanisms of space-based and object-based attentional control. Current Opinion in Neurobiology. 2003;13:187–193.