MIA BLOOM AND AYSE LOKMANOGLU

▶ FIELDWORK LOCATION: ISIS ENCRYPTED ONLINE PLATFORM

Terrorist groups have embraced the internet. For many groups, online space is the default platform for recruitment, strategic communication, and fund-raising. ISIS has retained a “first-mover (or most-innovative-mover) advantage in the media realm.”1 Seized ISIS materials reveal that online videos constitute a first step toward recruitment, followed by contact, online involvement, and eventually physical emigration to the “Caliphate.”2 Interviews with captured operatives reveal that 77 percent of active lone shooters became “self-radicalized through Internet forums and other forms of media.”3 As ISIS lost all of its territory in the former Caliphate, the shift to the virtual space gave the group a new lease on life. Online propaganda was so fundamental to ISIS’s raison d’être that a 2018 Soufan Center report described the privileged position accorded to the media personnel (videographers, producers, and editors) who crafted it:

The group paid members of its media team nearly seven times the salary of an average foot soldier. Even more striking, recruits with a background in production, editing, or graphic design were being afforded with the rank of emir, a clear signal of their value to the organization.4

The Telegram platform played a crucial role in showcasing this material, as well as in recruiting for and coordinating ISIS attacks in Europe and the rest of the world.5 It was used to organize ghazawat (media raids): coordinated digital campaigns to spread hashtags and messages and make them go viral. These raids hearken back to the military raids in the desert of the classical period, except that they are now planned within the safe space of Telegram and launched onto open and accessible internet platforms.6 For example, in 2016 Rachid Kassim used his Telegram channel “Sabre de la Lumière” to urge his three hundred followers to carry out what prosecutors have termed “terrorisme de proximité” (proximity terrorism).7 His calls resulted in several attacks: the killing of a couple in Magnanville, the killing of 86-year-old Father Jacques Hamel near Rouen by two teenage jihadis, an attack involving an explosive-laden vehicle near Notre Dame in Paris, and the shooting of a police officer in Les Mureaux.8

Telegram is a free, cross-platform app whose chat rooms and channels, joined by invitation link, permit public-public, public-private, and private-private communications for more than one hundred million users. It allows users to share messages, documents, videos, and photos and to engage in secret chats. When messages are deleted on one end of a chat, they disappear on the other end. A user can also set a “self-destruct timer” that deletes a message after it has been viewed. In secret chats, “end-to-end encryption [means] nobody but the sender and recipient can read the messages.”9 These security features, the app’s cross-platform construction, and the secret chat option offer a secure environment for interaction between ISIS and its network. However, “the general public remains relatively uninformed about the complex ways in which many jihadists maintain robust yet secretive online presences.”10 For most, the language barrier is a challenge because the majority of ISIS and jihadi Telegram networks operate in Arabic. For others, securing access to the network can be tricky.

For the past three years we have conducted virtual field research on ISIS encrypted networks.11 On the Telegram app, ISIS manipulates an environment rich with addictive properties, creating online spaces that encourage group identity, shared opinions, and dominant ideologies, while exploiting an individual’s need to be a part of the group.12

Moving our field research to the virtual space brought several challenges. The first was getting our university’s institutional review board (IRB) to approve the research. Much of the material we captured in screenshots was posted by people using anonymous accounts or pen names. Because of the inherently public nature of online posting and the anonymity of participants, we applied for and obtained an IRB waiver for the research project. The condition, however, was that we would observe but not participate in chat rooms. It was crucial that we maintain a safe environment for research and not accidentally radicalize others by posting graphic materials or disseminating ISIS communications. We also hoped to avoid a midnight visit from the FBI, which might be monitoring jihadi communications and could trace our IP addresses.

Nevertheless, this research raises particular ethical challenges, in part because it is relatively new. The “Belmont Report” was published in 1979,13 when anonymized social media could not have been foreseen, and it does not address issues of digital ethnography. Our research followed the strictest IRB guidelines, and we anonymized all identifiable information in every presentation and publication of the data. In keeping with the spirit of the “Belmont Report,” our priority was to ensure the safety of both the subjects and the researchers.

Academic researchers may fail to grasp the intricacies of the online space, and we are limited in what we can observe because of the graphic nature of the material and the 24/7 postings across every time zone in the ISIS global network. The ideal scenario for this research would be to follow the research protocols laid out by Martyn Frampton, Ali Fisher, and Nico Prucha,14 who have created custom algorithms to “auto scrape” all content across tens of thousands of channels and then analyze the captured materials using big data tools. In contrast, we hand-collected all of the materials and engaged several coders to ensure intercoder reliability. To date, we have collected more than 20,461 unique images, postings, and other items, and we are creating a searchable database that will be available to researchers in the future.

Collecting data individually adds a layer of nuance to the research, providing insight into the behavioral dynamics of social media platforms and how they sustain user engagement. These behaviors indicate a “social media addiction” that cannot be observed or measured when data are scraped automatically. For instance, automated scraping cannot capture users’ reactions when channels become unavailable or how the platform exploits participants’ “fear of missing out.” Individual researchers, by contrast, can observe how participants in a chat group sustain or increase their screen time when the organization varies channel content while limiting access for those who do not engage within a predetermined time period. Participants who do not join within the time limit experience the emotional loss of having missed out. Observing these behaviors is necessary to understand the big picture of how terrorist organizations construct emotional and psychological dependency.

Collecting content from the channels and chat rooms became exceptionally challenging once ISIS “admins” (channel administrators) grew worried about “lurkers,” such as journalists, academics, think tank researchers, and security personnel, who might monitor the platform for reasons other than subscribing to the radical jihadi ideology. Channel admins keep track of accounts that do not post or engage with other members in chats, and this eventually brought us to their attention.

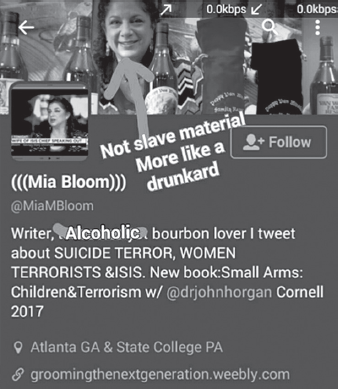

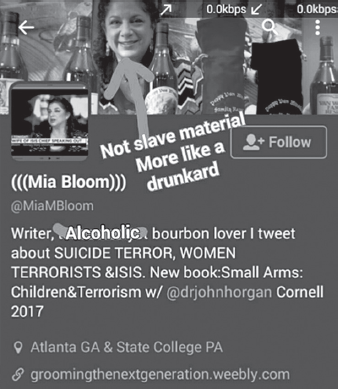

To observe the platform, you must join a channel or chat by clicking on a dedicated link within a designated period of time. This window is usually thirty minutes at most, and for some of the more exclusive channels it might be only ten minutes. Participants in these groups can contact one another (including researchers who are only observing the channel) through direct messages. In earlier versions of Telegram, members of a chat could call the number associated with other members’ accounts. One day in June 2017, after we joined two new links (while sitting in the office where we conducted all of our research), one of the project laptops began to ring. We had not realized that the newly updated version of Telegram could be used for direct voice communication (like WhatsApp or Skype). When ISIS calls, it is probably best not to answer. We quickly sent the member who had called a direct message in Arabic saying, “I was busy and being monitored at my workplace.” However, this transparent excuse placed the account in the admin’s sights for increased scrutiny. Shortly thereafter, a series of threats was posted that included photoshopped screenshots of one of our Twitter accounts and called Dr. Mia Bloom a “drunkard and an alcoholic” (see figure 19.1).

FIGURE 19.1 ISIS photoshopped image of Mia Bloom’s Twitter account.

Although it was mildly amusing that Bloom was not considered “slave market worthy” (this was more compliment than insult), the images of beheadings directly messaged to Bloom were far less entertaining.

At first glance, field research in the virtual world might appear to keep the researcher far from danger: one is not located in a conflict zone and, seemingly, is not at risk. However, some ISIS social media fanatics will issue specific threats, call for your assassination, or publish private or identifying information about you (“dox” you), providing their network with the details of where you live and work. We explain later in this essay how one amateur journalist had to be placed under federal protection after trying to use his anonymous account on Telegram to “interview” ISIS supporters. One has to weigh the gravity of any threat against the assumption that online fantasists routinely issue hollow threats from the safety of their parents’ basement.

Nevertheless, it is crucial that researchers take precautions before conducting this type of research. The platform expects users to adopt a pen name, but you should also avoid using your own personal mobile device and should not include any personal details in your account. There is a fine line between engaging in deceit or concealment and safeguarding oneself during high-risk (virtual) field research. The story of how our team was found out illustrates this dilemma (see figure 19.2).

FIGURE 19.2 Threatening Telegram message to Bloom.

ETHICS: THE IMPORTANCE OF CONFIDENTIALITY AND ANONYMITY

As you begin to conduct virtual research on Telegram, you will find no step-by-step instruction booklet or “Telegram for Dummies” guide. The skills required to maintain access to the platform illustrate what Michael Kenney terms mētis, the practical knowledge gained through on-the-job experience, as opposed to techne, the abstract technical knowledge that can be codified in manuals.15 Mistakes are likely at the outset.

First, we recommend using an approved virtual private network (VPN) to protect the researcher’s location because Telegram includes the location in the user profile. The institution from which the research is conducted should be able to provide approved, possibly air-gapped,16 laptops with a secure VPN that launches automatically with each login to the Telegram application. To protect the researchers, the laptops we use are dedicated project equipment that contain no personal information.

Second, the phone used to create the Telegram account should not contain any personal information. After purchasing a prepaid mobile device, the researcher should create a unique Telegram account exclusively for the purpose of research. If you use your own cell phone number, or if you have ever posted an image of yourself connected to that number, that information will be archived by Telegram and is difficult to delete. Changing your name or photo does not automatically erase the archived information; you have to use the app on a mobile device (not a laptop) to ensure that you delete all previously posted information and images associated with that account. Laith Al Khouri, the Middle East and North Africa director of Flashpoint, noticed these mistakes and alerted our Minerva research team at Georgia State University to the danger of creating an account under someone’s real name and then trying to make it anonymous after the fact. This was probably how Dr. Mia Bloom’s profile was discovered.

Attempting to befriend people in chat rooms poses an additional danger because ISIS admins are suspicious of lurkers, assuming them to be spies or government agents. In one case we observed, an eager journalism student who wanted to write about his experiences on the platform was doxed by members of the chat room, who posted his email address, cell phone number, and home address. They also revealed his Facebook, Twitter, and Instagram accounts and called for his beheading. The situation became so alarming that the FBI intervened and provided the amateur journalist with federal protection. In short, although this research might sound “cool” to the outside observer, it can be dangerous if appropriate precautions are not taken.

In virtual field research, researchers face unique risks. The content ISIS generates on its channels includes graphic images that are shocking, upsetting, and often intended to horrify.17 We advise researchers not to view or collect materials just before meals or before going to bed (so as not to induce nightmares). Taking frequent breaks and having a secondary research project that allows switching from the graphic images to more mundane materials may help some researchers forestall posttraumatic stress disorder (PTSD). We have observed that sustained research on this platform may have unintended consequences, including psychotic breaks or antisocial behavior, so we recommend having an on-staff mental health professional for this type of work. We bring in a psychologist every year to offer support and advice to students who might be disturbed by the images, and we deliberately do not code the many violent videos we find on the platform, leaving these to other researchers with stronger stomachs. Like scholars who focus on sexual grooming or child pornography, we provide a dedicated private computer for these projects. This is preferable to using public machines, lest a passerby glance at your screen and glimpse executions, maiming, or immolation.

Furthermore, virtual research requires patience and many hours of screen time. Amarnath Amarasingam has reported that the life span of most ISIS Telegram channels averages two hundred days.18 Researchers must constantly add new channels to maintain access in case channels are shuttered or an account is flagged and loses access for lack of engagement with others (for not posting or answering questions because of IRB limitations). Channel and chat invitation links are time sensitive, and if you are not logged on to the platform, you will probably miss the narrow window to join new groups or chats. ISIS relies on this to sustain user engagement; anyone on the network is effectively required to monitor the platform 24/7. Links expire, and channels may be shut down. Rejoining channels or monitoring duplicate channels is one way to ensure the continuity of the research over the period of analysis. Even skipping one day could mean a loss of access because some admins note how regularly an account logs on and might remove an account whose online presence is irregular.

As a long-standing member of a group or channel, your account may be flagged for direct communication. The interaction is generally succinct and in Arabic. Often it is no more than a salutation to ascertain whether the account holder is a lurker or, worse, a researcher observing and reporting on ISIS activities. Most of these approaches consist of a brother (or sister) sending a direct message saying “hello how are you?” or posting ISIS-specific emojis. Our IRB prohibited engagement, but after we had lost access to several dozen channels by not participating or responding to direct messages, we were allowed to post an emoji in some situations to avoid losing access to a network. The contacts happened infrequently, and we could deter further conversation by posting something meaningless, such as a ninja jihadi or a GIF of a short prayer. In some cases, however, the communication was more personal. One of our original accounts, whose name included the word Umm (meaning “mother of” and signifying that the account belonged to a female), received a few offers of marriage or requests to move to Syria (Sham), even though the account contained no images or supplementary information beyond the indication that the account holder was a woman (see figure 19.3). For other problems that might arise, we suggest consulting the latest privacy guidelines from the Electronic Frontier Foundation.19

FIGURE 19.3 Chat with a jihadi suitor.

ETHICS: DATA COLLECTION AND DISTRIBUTION

The ethics of data collection are complicated by the fact that many existing protocols were written with medical research in mind, forty years before the appearance of social media, and are a poor guide for ethical online research. Every research team needs to address ethical concerns before data collection and distribution. Digital ethnography and social media research are shifting in real time, and updated research guidelines that cover anonymized online data collection are needed. Beginning in September 2018, the European Union has taken several initiatives, including new regulations, to limit the dissemination of terrorist content on the internet.20 The United Kingdom’s Counter-Terrorism and Border Security Act 2019 has made it illegal to view content deemed to support violence and terrorism.21 However, these new regulations have provoked a backlash from both the public and academic researchers, who argue that the restrictions limit freedom of expression and hinder future research.22

More broadly, virtual field research on violent extremist social media raises a number of questions that we encourage researchers to consider: What does it mean to provide anonymity to subjects who have already anonymized their identities on social media? If users themselves chose the names for their “public” social media personas, is additional anonymization necessary? In the case of members of ISIS Telegram channels, is protecting the participants’ identities ethical and safe?23

DATA COLLECTED

The propaganda we collected includes battlefield reports, photo reports, videos, magazines, and discussions about lone actor attacks in the West. Some chat rooms publish a membership list (and indicate who is currently online), which allows members to engage one another in secret chats and allows administrators to monitor and police the network. This membership information opens additional opportunities for future field experiments to ascertain the extent of online social media addiction.

From the outset, ISIS used its “virtual world” to push its narrative online, recruit, sow fear, and spread its propaganda to audiences worldwide. Now that ISIS has been dislodged militarily from Iraq and Syria, the virtual world is the new battlefield from which it coordinates lone actor attacks against Western targets while expanding its influence over its affiliates in the Middle East and Southeast Asia. Today this virtual world is the only arena in which the Islamic State can promote its strength and endurance.24

The platform has allowed us to distinguish between attacks directed by ISIS and attacks merely inspired by the group. We did this by tracking observable variations in how events were recounted. For example, attacks in Dhaka (July 2016) and Tehran (June 2017) were reported on Telegram in real time as they unfolded, whereas attacks in San Bernardino (December 2015) and Orlando (June 2016) were presented well after the fact and with varying degrees of detail and information. This research has yielded a breadth of data about the inner workings of the ISIS network. It shows how ISIS governed as a proto-state in Iraq and Syria and provides unique insights into its Caliphate strategy for expansion and its shift toward the affiliates.25

Fieldwork in the virtual space of violent extremist organizations is an increasingly popular area of research, whether it concerns jihadi groups, extreme right-wing organizations, or new bad actors such as incels. New ways of conducting this research emerge almost daily as new platforms, apps, and technologies appear. It is important to acknowledge and highlight both the potential benefits and the pitfalls of using new media to research violent extremist organizations. Political science, criminology, and sociology appear to be heading toward codifying field research in the virtual space, but it is crucial to protect both the researchers and the subjects of our research. Be extremely cautious and, for the time being, follow ethical guidelines to the best of your ability.

______

Mia Bloom is professor of communication at Georgia State University.

Ayse Lokmanoglu is a graduate student at Georgia State University.

PUBLICATIONS TO WHICH THIS FIELDWORK CONTRIBUTED:

• Bloom, Mia, and Chelsea Daymon. “Addicted to Terror? Charting the Psychological Qualities of ISIS Social Media,” Orbis 62, no. 3 (2018): 372–88.

• Bloom, Mia, Hicham Tiflati, and John Horgan. “Navigating ISIS’ Preferred Platform: Telegram,” Terrorism and Political Violence (2017), online publication, https://doi.org/10.1080/09546553.2017.1339695.

• Winkler, Carol, and Ayse Lokmanoglu. “Communicating Terrorism and Counterterrorism.” In The Handbook of Communication and Security, ed. Bryan C. Taylor and Hamilton Bean. New York: Routledge, 2019.

NOTES

1. Daniel Milton, Communication Breakdown: Unraveling the Islamic State’s Media Efforts (New York: Combating Terrorism Center, U.S. Military Academy, October 2016), 1.

2. Joby Warrick, Black Flags: The Rise of ISIS (New York: Anchor Books, 2016).

3. Joel A. Capellan, “Lone Wolf Terrorist or Deranged Shooter? A Study of Ideological Active Shooter Events in the United States, 1970–2014,” Studies in Conflict & Terrorism 38, no. 6 (2015): 407, https://doi.org/10.1080/1057610X.2015.1008341.

5. Seth Cantey and Nico Prucha, “Online Jihad: Monitoring Jihadist Online Communities,” Online Jihad: Monitoring Jihadist Online Communities (blog), 2019, https://onlinejihad.net/.

11. The research was supported by the Minerva Research Initiative, Department of Defense Grant No. N00014-16-1-3174; any opinions, findings, or recommendations expressed are those of the authors alone and do not reflect the views of the Office of Naval Research, the Department of the Navy, or the Department of Defense.

12. Mia Bloom and Chelsea Daymon, “Addicted to Terror? Charting the Psychological Qualities of ISIS Social Media,” Orbis 62 (2018): 372–88.

15. Michael Kenney, “Beyond the Internet: Mētis, Techne, and the Limitations of Online Artifacts for Islamist Terrorists,” Terrorism and Political Violence 22, no. 2 (2010): 177–97.

16. An “air gapped” device is one that has never been connected to the internet.

23. We also question the assumption that the sources of research funding inherently prejudice research. Please refer to chapter 33 by Erica Chenoweth and chapter 34 by Zachariah Cherian Mampilly in this book for extensive discussions on the issue of government funding and research.

25. Aisha Ahmad, Jihad & Co.: Black Markets and Islamist Power (Oxford: Oxford University Press, 2017).