In this chapter, we make a specific analysis of the behavior of symmetric tensors, with respect to the rank and the decomposition.

We will see, indeed, that besides their utility to understand some models of random systems, symmetric tensors have a relevant role in the study of the algebra and the computational complexity of polynomials.

7.1 Generalities and Examples

Definition 7.1.1

A cubic

tensor is a tensor of type  where all the

where all the  ’s are equal, i.e., a tensor of type

’s are equal, i.e., a tensor of type  (n times).

(n times).

and for any permutation

and for any permutation  on the set

on the set  , it satisfies

, it satisfies

Example 7.1.2

When T is a square matrix, then the condition for the symmetry of T simply requires that  for any choice of the indices. In other words, our definition of symmetric tensor coincides with the plain old definition of symmetric matrix, when T has dimension 2.

for any choice of the indices. In other words, our definition of symmetric tensor coincides with the plain old definition of symmetric matrix, when T has dimension 2.

, then T is symmetric if and only if the following equalities hold:

, then T is symmetric if and only if the following equalities hold:

symmetric tensor is the following:

symmetric tensor is the following:

Remark 7.1.3

. Namely, it is defined by a set of linear equations:

. Namely, it is defined by a set of linear equations:

.

.As a vector space itself, the space of symmetric tensors of type  , n times, is usually denoted by

, n times, is usually denoted by  .

.

Of course, from the point of view of multi-linear forms,  coincides with the space of symmetric multi-linear maps

coincides with the space of symmetric multi-linear maps  .

.

7.2 The Rank of a Symmetric Tensor

Next step is the study of the behavior of symmetric tensors with respect to the rank. It is easy to realize that there are symmetric tensors of rank 1, i.e., the space  intersects the set of decomposable tensors. Just to give an instance, look at:

intersects the set of decomposable tensors. Just to give an instance, look at:

The following proposition determines how one construct decomposable, symmetric tensors.

Proposition 7.2.1

, n times. Then T is symmetric, of rank 1, if and only if there exist a nonzero scalar

, n times. Then T is symmetric, of rank 1, if and only if there exist a nonzero scalar  and a nonzero vector

and a nonzero vector  with:

with:

), then we may assume

), then we may assume  .

.Proof

,

,  , then T cannot be zero by Proposition 6.2.8, thus it has rank 1. Moreover if

, then T cannot be zero by Proposition 6.2.8, thus it has rank 1. Moreover if  , then for any multi-index

, then for any multi-index  and for any permutation

and for any permutation  :

:

, where no

, where no  can be 0, by Proposition 6.2.8. Write

can be 0, by Proposition 6.2.8. Write  and fix a multi-index

and fix a multi-index  such that

such that  , ...,

, ...,  . Then

. Then  cannot vanish. Define

cannot vanish. Define  . Then we claim that

. Then we claim that  . Namely, for all j we have, by symmetry:

. Namely, for all j we have, by symmetry:

, so that

, so that  . Similarly we can define

. Similarly we can define  ,...,

,...,  , and obtain that

, and obtain that  , ...,

, ...,  . Thus, if

. Thus, if  , then

, then

of

of  and define

and define  . Then

. Then  .

.

Notice that purely algebraic properties of K can be relevant in determining the shape of a decomposition of a tensor.

Remark 7.2.2

In the sequel, we will often write  for

for  , d times.

, d times.

If K is algebraically closed, then a symmetric tensor  of rank 1 has a finite number (exactly: d) decompositions as a product

of rank 1 has a finite number (exactly: d) decompositions as a product  .

.

Namely if  , then by Proposition 6.2.9 there exists a scalar

, then by Proposition 6.2.9 there exists a scalar  such that

such that  and moreover

and moreover  , thus w is equal to v multiplied by a dth root of unity.

, thus w is equal to v multiplied by a dth root of unity.

Passing from rank 1 to higher ranks, the situation becomes suddenly more involved.

- First choice. The rank of a symmetric tensor

is simply its rank as a tensor in

is simply its rank as a tensor in  , i.e., it is the minimum r for which one has r decomposable tensors

, i.e., it is the minimum r for which one has r decomposable tensors  ,...,

,..., with

with

- Second choice. The rank of a symmetric tensor

is the minimum r for which one has r symmetric decomposable tensors

is the minimum r for which one has r symmetric decomposable tensors  ,...,

,..., with

with

Then, the natural question is about which choice gives the correct definition. Here, correct definition means the definition which proves to be most useful, for the applications to Multi-linear Algebra and random systems.

The reader could be disappointed in knowing that there is no clear preference between the two options: each can be preferable, depending on the point of view.

Thus, we will leave the word rank for the minimum r for which one has a decomposition  , with the

, with the  ’s not necessarily symmetric (i.e., the first choice above).

’s not necessarily symmetric (i.e., the first choice above).

Then, we give the following:

Definition 7.2.3

of a symmetric tensor

of a symmetric tensor  is the minimum r for which one has r symmetric decomposable tensors

is the minimum r for which one has r symmetric decomposable tensors  ,...,

,..., with

with

Example 7.2.4

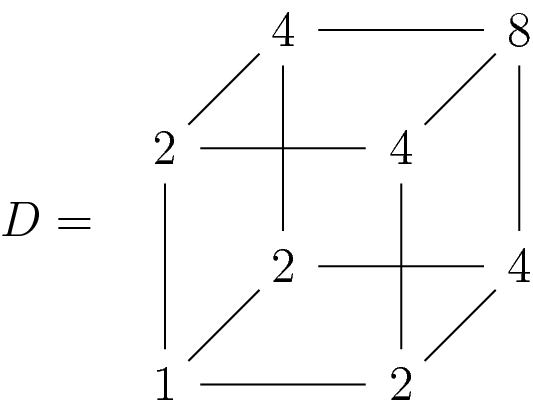

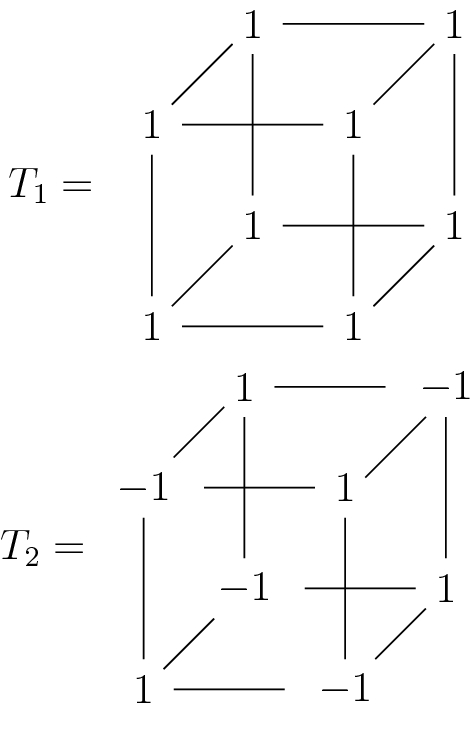

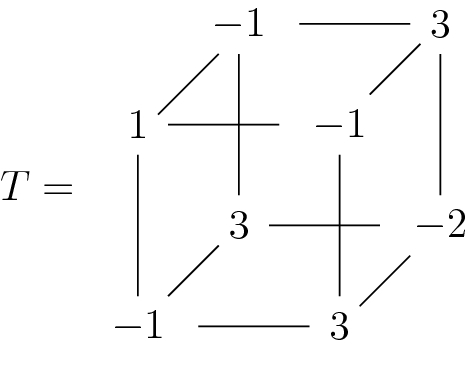

The symmetric tensor

has not rank 1, as one can compute by taking the determinant of some face.

T has rank 2, because it is expressible as the sum of two decomposable tensors  , where

, where

and  ,

,  .

.

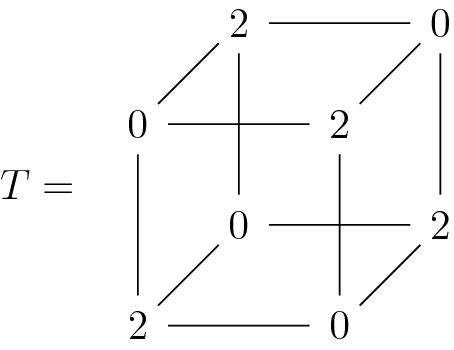

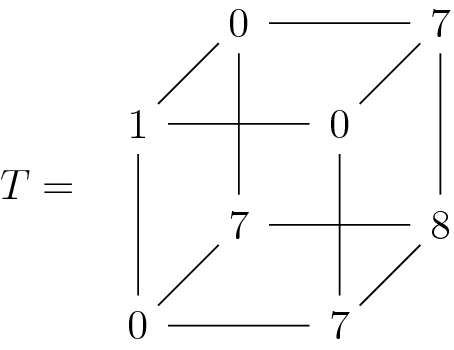

Example 7.2.5

The tensor (over  ):

):

is not decomposable. Let us prove that the symmetric rank is bigger than 2.

. Then we have

. Then we have

and

and  , where

, where  are two cubic roots of unit, not necessarily equal. But then

are two cubic roots of unit, not necessarily equal. But then  and

and  , so that

, so that  yields

yields  , which cannot hold, because

, which cannot hold, because  is not a cubic root of unit.

is not a cubic root of unit.Remark 7.2.6

Proposition 7.2.1 shows in particular that any symmetric tensor of (general) rank 1 has also the symmetric rank equal to 1.

Shitov’s example is quite peculiar: the tensor has dimension 3 and type  , whose rank is very high with respect of general tensors of same dimension and type.

, whose rank is very high with respect of general tensors of same dimension and type.

The difficulty in finding examples where the two ranks are different, despite the large number of concrete tensors tested, suggested to the French mathematician Pierre Comon to launch the following:

Problem 7.2.7

(Comon 2000) Find conditions such that the symmetric rank and rank of a symmetric tensor coincide.

In other words, find conditions for  such that if there exists a decomposition

such that if there exists a decomposition  in terms of tensors of rank 1, then there exists also a decomposition with the same number of summands, in which each

in terms of tensors of rank 1, then there exists also a decomposition with the same number of summands, in which each  is symmetric, of rank 1.

is symmetric, of rank 1.

The condition is known for some types of tensors. For instance, it is easy to prove that the Comon Problem holds for any symmetric matrix T (and this is left as an exercise at the end of the chapter).

The reader could wonder that such a question, which seems rather elementary in its formulation, could yield a problem which is still open, after being studied by many mathematicians, with modern techniques.

This explains a reason why, at the beginning of the chapter, we warned the reader that problems that are simple for Linear Algebra and matrices can suddenly become prohibitive, as the dimension of the tensors grows.

7.3 Symmetric Tensors and Polynomials

Homogeneous polynomials and symmetric tensors are two apparently rather different mathematical objects, that indeed have a strict interaction, so that one can skip from each other, translating properties of tensors to properties of polynomials, and vice versa.

The main construction behind this interaction is probably well known to the reader, for the case of polynomials of degree 2. It is a standard fact that one can associate a symmetric matrix to quadratic homogeneous polynomial, in a one-to-one correspondence, so that properties of the quadratic form (as well as properties of quadratic hypersurfaces) can be read as properties of the associated matrix.

The aim of this section is to point out that a similar correspondence holds, more generally, between homogeneous forms of any degree and symmetric tensors of higher dimension.

Definition 7.3.1

of cubic tensors of dimension d and the space of homogeneous polynomials of degree d in n variables (i.e., the dth graded piece

of cubic tensors of dimension d and the space of homogeneous polynomials of degree d in n variables (i.e., the dth graded piece  of the ring of polynomials

of the ring of polynomials ![$$R=K[x_1,\dots ,x_n]$$](../images/485183_1_En_7_Chapter/485183_1_En_7_Chapter_TeX_IEq89.png) ), defined by sending a tensor T to the polynomial

), defined by sending a tensor T to the polynomial  such that

such that

, one immediately sees that the two matrices

, one immediately sees that the two matrices

.

.The correspondence becomes one to one (and onto) when restricted to symmetric tensors. To see this, we need to introduce a piece of notation.

Definition 7.3.2

For any multi-index  , we will define the multiplicity

, we will define the multiplicity  as the number of different permutations of the multi-index.

as the number of different permutations of the multi-index.

Definition 7.3.3

![$$R=K[x_1,\dots ,x_n]$$](../images/485183_1_En_7_Chapter/485183_1_En_7_Chapter_TeX_IEq95.png) be the ring of polynomials, with coefficients in K, with n variables. Then there are linear isomorphisms

be the ring of polynomials, with coefficients in K, with n variables. Then there are linear isomorphisms

of the previous map

of the previous map

Example 7.3.4

, then t(G) is the symmetric matrix

, then t(G) is the symmetric matrix

Example 7.3.5

It is an easy exercise to prove that the two maps p and t defined above are inverse to each other.

Once the correspondence is settled, one can easily speak about the rank or the the symmetric rank of a polynomial.

Definition 7.3.6

For any homogeneous polynomial ![$$G\in K[x_1,\dots ,x_n]$$](../images/485183_1_En_7_Chapter/485183_1_En_7_Chapter_TeX_IEq98.png) , we define the rank

(respectively, the symmetric rank) of G as the rank (respectively, the symmetric rank) of the associated tensor t(G).

, we define the rank

(respectively, the symmetric rank) of G as the rank (respectively, the symmetric rank) of the associated tensor t(G).

Example 7.3.7

The polynomial  has rank 3, since the associated tensor t(G) is exactly the

has rank 3, since the associated tensor t(G) is exactly the  symmetric tensor of Example 7.2.5.

symmetric tensor of Example 7.2.5.

Proposition 7.3.8

has dimension

has dimension

Proof

This is obvious once one knows that  is the dimension of the space of homogeneous polynomials

is the dimension of the space of homogeneous polynomials  of degree d in n variables. We prove it for the sake of completeness.

of degree d in n variables. We prove it for the sake of completeness.

Since monomials of degree d in n variables are a basis for  , it is enough to count the number of such monomials.

, it is enough to count the number of such monomials.

The proof goes by induction on n. For  the statement is easy: we have

the statement is easy: we have  monomials, namely

monomials, namely  .

.

variables

variables  . Every monomial of degree d in n variables is obtained by multiplying

. Every monomial of degree d in n variables is obtained by multiplying  by any monomial of degree

by any monomial of degree  in

in  . Thus, we have 1 monomial with

. Thus, we have 1 monomial with  , n monomials with

, n monomials with  ,...,

,...,  monomials with

monomials with  , and so on. Summing up

, and so on. Summing up

, by standard facts on binomial coefficients.

, by standard facts on binomial coefficients.

7.4 The Complexity of Polynomials

In this section, we rephrase the results on the rank of symmetric tensors in terms of the associated polynomials.

It will turn out that the rank decomposition of a polynomial is the analogue of a long-standing series of problems in Number Theory, for the expression of integers as a sum of powers.

In principle, from the point of view of Algebraic Statistic, the complexity of a polynomial is the complexity of the associated symmetric tensor. So, the most elementary case of polynomials corresponds to symmetric tensor of rank 1. We start with a description of polynomials of this type.

Remark 7.4.1

Before we proceed, we need to come back to the multiplicity of a multi-index  , introduced in Definition 7.3.2.

, introduced in Definition 7.3.2.

is linked with the coefficient of the monomial

is linked with the coefficient of the monomial  . Notice that

. Notice that  need not be distinct, so the monomial

need not be distinct, so the monomial  could be written unproperly. The usual way in which

could be written unproperly. The usual way in which  is written is:

is written is:

indicates the times in which i occurs in the multi-index J.

indicates the times in which i occurs in the multi-index J. . Indeed a permutation changes the multi-index, unless it simply switches indices

. Indeed a permutation changes the multi-index, unless it simply switches indices  which are equal. Since the number of permutations over a set with m elements is m!, then one finds that

which are equal. Since the number of permutations over a set with m elements is m!, then one finds that

Proposition 7.4.2

Let G be a homogeneous polynomial of degree d in n variables, so that  .

.

Then t(G) has rank 1 if and only if there exists a homogeneous linear polynomial ![$$L\in K[x_1,\dots ,x_n]$$](../images/485183_1_En_7_Chapter/485183_1_En_7_Chapter_TeX_IEq129.png) , such that

, such that  .

.

Proof

It is sufficient to prove that  , where

, where  , if and only if

, if and only if  .

.

To this aim, just notice that the coefficient of the monomial  in

in  is the sum of the entries of the tensors

is the sum of the entries of the tensors  whose multi-index J satisfies

whose multi-index J satisfies  . These entries are all equal to

. These entries are all equal to  and their number is m(J). On the other hand, by the well-known Newton formula,

and their number is m(J). On the other hand, by the well-known Newton formula,  is exactly the coefficient of the monomial

is exactly the coefficient of the monomial  in the power

in the power  .

.

Corollary 7.4.3

![$$G\in K[x_1,\dots ,x_n]_d$$](../images/485183_1_En_7_Chapter/485183_1_En_7_Chapter_TeX_IEq142.png) is the minimum r for which there are r linear homogeneous forms

is the minimum r for which there are r linear homogeneous forms ![$$L_1,\dots ,L_r\in K[x_1,\dots ,x_n]$$](../images/485183_1_En_7_Chapter/485183_1_En_7_Chapter_TeX_IEq143.png) , with

, with

The symmetric rank is the number that computes the complexity of symmetric tensors, hence the complexity of homogeneous polynomials, from the point of view of Algebraic Statistics. Hence, it turns out that the simplest polynomials, in this sense, are powers of linear forms. We guess that nobody will object to the statement that powers are rather simple!

We should mention, however, that sometimes the behavior of polynomials with respect to the complexity can be much less intuitive.

For instance, the rank of monomials is usually very high, so that the complexity of general monomials is over the average (and we expect that most people will be surprised). Even worse, efficient formulas for the rank of monomials were obtained only very recently by Enrico Carlini, Maria Virginia Catalisano, and Anthony V. Geramita (see [2]). For other famous polynomials, as the determinant of a matrix of indeterminates, we do not even know the rank. All we have are lower and upper bounds, not matching.

such that

such that  The problem has been generalized by the English mathematician Edward Waring, who asked in 1770 for the minimum integer r(k) such that any positive integer G can be written as a sum of r(k) powers

The problem has been generalized by the English mathematician Edward Waring, who asked in 1770 for the minimum integer r(k) such that any positive integer G can be written as a sum of r(k) powers  . In other words, find the minimum r(k) such that any positive integers are of the form

. In other words, find the minimum r(k) such that any positive integers are of the form

The determination of r(k) is called, from then, the Waring problem for integers. Because of the analogy, the symmetric rank of a polynomial is also called the Waring rank.

For integers, few values of r(k) are known, e.g.,  ,

,  ,

,  . There are also variations on the Waring problem, as asking for the minimum

. There are also variations on the Waring problem, as asking for the minimum  such that all positive integers, except for a finite subset, are the sum of

such that all positive integers, except for a finite subset, are the sum of  kth powers (the little Waring problem).

kth powers (the little Waring problem).

Going back to the polynomial case, as for integers, a complete description of the maximal complexity that a homogeneous polynomial of the given degree in a given number of variables can have, is not known. We have only upper bounds for the maximal rank. On the other hand, we know the solution of an analogue to the little Waring problem, for polynomials over the complex field.

Theorem 7.4.4

![$$\mathbb C[x_1,\dots ,x_n]_d$$](../images/485183_1_En_7_Chapter/485183_1_En_7_Chapter_TeX_IEq152.png) ; or also: all polynomials outside a Zariski closed subset of the space

; or also: all polynomials outside a Zariski closed subset of the space ![$$\mathbb C[x_1,\dots ,x_n]_d$$](../images/485183_1_En_7_Chapter/485183_1_En_7_Chapter_TeX_IEq153.png) , see Remark 9.1.10) is

, see Remark 9.1.10) is

, any n, where

, any n, where  ;

; ,

,  , where

, where  ;

; ,

,  , where

, where  .

. ,

,  , where

, where  .

. ,

,  , where

, where  .

.

The original proof of this theorem requires the Horace method. It is long and difficult and occupies a whole series of papers [3–7].

For specific tensors, an efficient way to compute the rank requires the use of inverse systems, which will be explained in the next chapter.

7.5 Exercises

Exercise 11

Prove that the two maps p and t introduced in Definition 7.3.3 are linear and inverse to each other.

Exercise 12

Prove Comon’s Problem for matrices: a symmetric matrix M has rank r if and only if there are r symmetric matrices of rank 1,  ,...,

,...,  , such that

, such that  .

.

Exercise 13

Prove that the tensor T of Example 7.2.5 cannot have rank 2.

Exercise 14

Prove that the tensor T of Example 7.2.5 has symmetric rank  (so, after Exercise 13, also the rank is 3).

(so, after Exercise 13, also the rank is 3).