naturalism See PHYSICALISM

Necker cube See MULTISTABLE PERCEPTION

neural coding See CORRELATES OF CONSCIOUSNESS; SINGLE-CELL STUDIES: HUMAN; SINGLE-CELL STUDIES: MONKEY

neural correlates of consciousness See CORRELATES OF CONSCIOUSNESS, SCIENTIFIC PERSPECTIVES

neural stimulation An important focus in the study of conscious perception has been the search for the neural *correlates of consciousness (Koch 2005). In a typical approach, investigators ask whether consciousness is correlated with activation in a given brain area (or in a specific neuronal ensemble within an area). While these studies have provided many important insights, activation observed in electrophysiological, imaging, or other studies does not constitute proof that a given neuronal process is necessary, let alone sufficient, for conscious perception. The study of different types of lesions in humans and animal models has provided some clues about which areas are necessary for conscious activity. Establishing sufficiency may require showing that stimulation of a particular brain area (or of specific neurons within an area) leads to conscious sensations.

One of the techniques used to stimulate the human brain is *transcranial magnetic stimulation (TMS). Its non-invasive nature makes it a valuable research and clinical tool in spite of its low spatial resolution. In animals, investigators can inject current into smaller clusters of neurons in different brain areas using the same type of thin microwires employed to monitor *single-neuron spiking activity in electrophysiological experiments. A paradigmatic study showed that electrical microstimulation can bias perceptual decisions based on sensory information in macaque monkeys (Salzman et al. 1990). The investigators studied an area of dorsal visual cortex involved in motion discrimination (visual area V5). The monkeys were shown an image containing multiple dots moving in random directions. A fraction of those dots moved coherently in a particular direction and, after training, the animals became quite proficient at indicating this overall direction of motion. During this motion discrimination task, the investigators used microwires both to monitor neuronal activity in area V5 and to deliver electrical microstimulation. Current injection biased the animals’ responses toward the preferred direction of the neurons recorded from the same microwire (Salzman et al. 1990). Similar observations were obtained in quite different tasks and brain areas, including face categorization in inferior temporal cortex (Afraz et al. 2006) and tactile discrimination in somatosensory cortex (Romo et al. 1998). Interestingly, in the latter case, the investigators even managed to induce a tactile sensation in the absence of any concomitant tactile input.

In general, these elegant and rigorous studies in monkeys show that activating neurons selective for particular features through electrical stimulation can bias the animal’s behaviour in favour of those features. These observations suggest that current injection into potentially small neuronal clusters could induce specific percepts, depending on the exact stimulation areas and conditions. Yet the implications of these observations remain unclear. First, it is not yet known how many neurons, or which types of neurons and circuits, are activated by this technique (see, however, Brecht et al. 2004). Second, the behavioural effects are not necessarily a direct consequence of the stimulation protocol (particularly with high stimulation currents). For example, it is conceivable that activation of visual area V5 enhances activity in its target areas, that the activity in those target areas gives rise to conscious percepts, and that these percepts are ultimately responsible for the observed behavioural effects. Perhaps even more importantly, because the animals need to be highly overtrained in order to report their percepts, the brain circuits activated after these extensive training periods might bypass the normal conscious processing of information (e.g. although learning to drive a car may require conscious effort, after learning, driving by and large proceeds without much awareness, particularly on a well-known route).

In order to address some of these difficulties, it would be interesting to use similar experimental protocols in human subjects. Unfortunately, extending these studies to the human brain is very challenging, given the invasive nature of the recording and stimulation procedures. Under some particular circumstances, however, it is possible to stimulate the human brain directly. Electrical stimulation of the human brain is usually performed in clinical contexts such as the treatment of Parkinson’s disease, the relief of intractable pain, or the mapping of seizure areas in epileptic patients. When subjects with intractable *epilepsy are candidates for surgical resection of the epileptogenic tissue, they may be implanted with depth electrodes in order to map the seizure areas more precisely and to minimize excision of other important brain tissue. In these patients, it has been possible to monitor human single-neuron activity (Engel et al. 2005) and also to electrically stimulate the human brain (see e.g. Penfield and Perot 1963, Libet 1982).

Electrical stimulation in human epileptic patients through intracranial electrodes has produced rather remarkable observations, including motor and speech output; olfactory, tactile, visual, and/or auditory sensations; fear or anxiety; feelings of familiarity; and many others (Penfield and Perot 1963). In fact, many of these stimulation studies helped shape the currently dominant paradigm of functional specialization in the cerebral cortex, whereby distinct brain areas are involved in specialized mental processes (Penfield and Perot 1963). In general, stimulation of sensory or motor cortex leads to the corresponding sensations or behavioural output (although much remains to be understood about the mapping from stimulation parameters to sensation or behaviour). For example, electrical stimulation of primary visual cortex may lead to a visual sensation topographically matching the one obtained by natural stimulation of the corresponding area of the retina (Brindley and Lewin 1968). The arguments discussed above about direct versus indirect effects of stimulation still hold: this visual sensation does not necessarily imply that primary visual cortex is the locus of the conscious percept for vision. Electrical stimulation of association areas, including the hippocampus, amygdala, and perirhinal and entorhinal cortex, usually leads to much more complex and variable outputs (Penfield and Perot 1963, Halgren et al. 1978). Interestingly, the sensations evoked by electrical stimulation of temporal lobe targets have been likened to the *hallucinatory effects that may occur during epileptic seizures and some psychotic states, and also to *dreams. These observations may suggest the existence of a common substrate in the temporal lobe for the kind of sensory experience that occurs without concomitant external input during dreams, hallucinations, seizures, and electrical stimulation.

Libet and colleagues showed that electrical stimulation of primary somatosensory cortex can lead to subjective experience in human subjects. A further characterization of the train durations and intensities under which stimulation gives rise to conscious sensations led to the notion that a certain activation threshold needs to be reached for electrical activity to produce a conscious experience (Libet 1982; see also Ray et al. 1999). This notion of a threshold requirement for eliciting conscious activity has played an important role in the development of a theoretical framework to understand consciousness (Koch 2005).

Many open questions remain about how electrical stimulation of the brain leads to conscious percepts, including (1) the biophysics and mechanisms of activation through electrical stimulation and (2) how to dissociate direct from indirect effects of stimulation. Conceivably, techniques could be developed in the future to stimulate only specific neurons or neuronal circuits without affecting other circuits; this would allow investigators to measure the direct and specific effects of the stimulation procedure. In spite of the multiple unknowns, electrical stimulation offers the promise of eventually being able to causally link physiologically observed correlates of consciousness with actual conscious percepts.

GABRIEL KREIMAN

Afraz, S. R., Kiani, R., and Esteky, H. (2006). ‘Microstimulation of inferotemporal cortex influences face categorization’. Nature, 442.

Brecht, M., Schneider, M., Sakmann, B., and Margrie, T. (2004). ‘Whisker movements evoked by stimulation of single pyramidal cells in rat motor cortex’. Nature, 427.

Brindley, G. S. and Lewin, W. S. (1968). ‘The sensations produced by electrical stimulation of the visual cortex’. Journal of Physiology, 196.

Engel, A. K., Moll, C. K., Fried, I., and Ojemann, G. A. (2005). ‘Invasive recordings from the human brain: clinical insights and beyond’. Nature Reviews Neuroscience, 6.

Halgren, E., Walter, R. D., Cherlow, D. G., and Crandall, P. H. (1978). ‘Mental phenomena evoked by electrical stimulation of the human hippocampal formation and amygdala’. Brain, 101.

Koch, C. (2005). The Quest for Consciousness.

Libet, B. (1982). ‘Brain stimulation in the study of neuronal functions for conscious sensory experiences’. Human Neurobiology, 1.

Penfield, W. and Perot, P. (1963). ‘The brain’s record of auditory and visual experience. A final summary and discussion’. Brain, 86.

Ray, P. G., Meador, K. J., Smith, J. R., Wheless, J. W., Sittenfeld, M., and Clifton, G. L. (1999). ‘Physiology of perception. Cortical stimulation and recording in humans’. Neurology, 52.

Romo, R., Hernandez, A., Zainos, A., and Salinas, E. (1998). ‘Somatosensory discrimination based on cortical microstimulation’. Nature, 392.

Salzman, C., Britten, K., and Newsome, W. (1990). ‘Cortical microstimulation influences perceptual judgments of motion direction’. Nature, 346.

neuroethics Neuroethics is a relatively new field that addresses the ethical, legal, and social issues raised by progress in neuroscience.

1. Brain, mind, and ethics

2. Ethical importance of consciousness

3. Neurological disorders of consciousness

4. Behaviour and brain activity as indices of mental processing

What distinguishes neuroethics from bioethics more generally is that the brain is the organ of the mind. Most neuroethical issues derive a special level of interest and importance from this fact. For example, bioethicists have long grappled with the ethics of enhancing healthy human beings, e.g. administering human growth hormone to short but otherwise normal children. Issues that arise in this context include safety and fairness. These same issues arise for brain enhancements, but we also confront new issues, because brain enhancements have the unique potential to change our cognitive abilities, emotional responses, and personalities. Issues such as personal identity (am I the same person on drugs such as fluoxetine [Prozac] or amphetamines as off them?) and mental autonomy (am I entitled to determine my own psychological experience pharmacologically?) do not arise with biological enhancements of organs other than the brain (Farah 2005).

Consciousness is arguably the aspect of mental activity that has the greatest ethical importance. Without the ability to enjoy life or suffer, both of which involve conscious experience, it is hard to see how a being could have morally relevant interests. With these abilities a being clearly has moral status: e.g. we should avoid causing suffering to that being, assuming that all other things are equal. A being that is conscious of its own existence, and wants that existence to continue, should be accorded an even higher moral status: e.g. killing it would be wrong, even if the killing could be accomplished without causing suffering.

Consciousness figures more or less centrally in most neuroethical issues. It lies at the heart of one neuroethical issue in particular. This issue concerns severely *brain-damaged patients who have lost the ability to communicate through normal behavioural and linguistic channels. The result is a painfully immediate version of the classic philosophical ‘problem of other minds’ (Farah 2008). In particular, the profound epistemological problem of how we can know who or what has a conscious mind, and what the conscious experience of another is like, becomes a practical problem in the case of such patients.

Absence of verbal or non-verbal communication does not necessarily imply absence of a conscious mind. The detection of consciousness in those with whom we cannot communicate—including not only severely brain-damaged humans, but also immature humans and non-human animals—is therefore an important ethical goal, and has recently become the subject of study in cognitive neuroscience and neuroethics.

Several categories of neurological condition are relevant to discussions of consciousness after severe brain damage (see Laureys et al. 2004 for a review). The concept of brain death refers to a state in which the whole brain or the brain stem has essentially ceased to function. Brain-dead patients may be sustained on life support for short periods, but medically and legally they are considered dead.

The category of vegetative state encompasses patients with preserved vegetative brain functions, including sleep–wake cycles, respiration, cardiac function, and maintenance of blood pressure, without any evidence of accompanying cognitive functions. Vegetative patients may vocalize and move spontaneously and may even orient to sounds and briefly fixate objects with their eyes, but they neither speak nor obey commands and are therefore assumed to lack conscious awareness (Multi-Society Task Force on PVS 1994).

The diagnostic category of minimally conscious state (MCS) is distinguished from the vegetative state by the presence, possibly intermittent, of a limited form of responsiveness or communication. Indicative behaviours include the ability to follow simple commands (e.g. ‘blink your eyes’), to respond to yes/no questions verbally or by gesture, any form of intelligible verbalization, or any purposeful behaviours that are contingent upon and relevant to the external environment (Giacino et al. 2002).

Finally, in the locked-in state patients are fully aware but paralysed as a result of the selective interruption of outgoing (efferent) motor connections. In its most classic form, a degree of preserved voluntary eye movement allows communication, e.g. answering questions with an upward gaze for ‘yes’ or spelling words by selecting one letter at a time with eye movements. For other patients, the de-efferentation is more complete and no voluntary behaviour is possible (Bauer et al. 1979).

How do we know whether a patient is aware? We reason by analogy, much as J. S. Mill did in response to the problem of other minds. If a patient can display behaviours that, in us, are associated with cognition and awareness, then we attribute cognition and awareness to the patient. Unfortunately, the argument from analogy falls short of solving the problem. It simply begs the question of whether an incommunicative individual is conscious, and seems to lead us to the wrong answer in the case of locked-in patients.

*Functional neuroimaging has recently been used to measure the brain response to meaningful stimuli in severely brain-damaged patients, including patients classified as being in minimally conscious states and vegetative states. In some cases the brain activity recorded in response to stimuli indicates surprisingly preserved cortical information processing. For example, in one vegetative patient, visually presented photographs of faces activated face-specific processing areas of the brain (Menon et al. 1998). More recently, two MCS patients were played tapes of speech either forwards (meaningful) or backwards (meaningless). As with neurologically intact people, the brains of these patients distinguished the two types of speech (Schiff et al. 2005). Perhaps the most startling discovery so far was the apparently voluntary execution of a mental imagery task by a vegetative patient (who later recovered). When Owen and colleagues (2006) instructed the patient to imagine playing tennis she activated parts of the motor system, and when they asked her to imagine visiting each of the rooms of her home she activated parts of the brain’s spatial navigation system. Furthermore, her patterns of brain activation were indistinguishable from those of normal subjects.

Is the brain imaging approach to the problem of other minds just a high-tech version of the same unworkable argument from analogy? I would argue that brain imaging in principle delivers more relevant information about mental states than behaviour. The gist of this argument is that, whereas behaviour is caused by mental states, and thus may serve as an (imperfect) indicator of patients’ mental status, brain states are not caused by mental states or vice versa. Rather, according to most contemporary views of the mind–body relation, mental states are brain states or, at a minimum, ‘supervene on’ brain states (e.g. Davidson 1970, Clark 2001). Either way, mental states are non-contingently related to brain states; assuming a sufficiently advanced cognitive neuroscience, one could not know a brain state without also knowing the mental state.

In closing, it is worth noting that some of the same scientific, epistemological, and ethical issues arise in the case of two other groups of non-communicative or minimally communicative individuals: immature humans and non-human animals. If fetuses, babies, or animals consciously suffer, they cannot tell us in the same ways that a normal mature human would, but we would nevertheless want to avoid causing such suffering. It is possible that we could obtain relevant evidence concerning the capacity for consciousness in such cases through empirical investigations of neural correlates of consciousness in such individuals.

MARTHA J. FARAH

Bauer, G., Gerstenbrand, F., and Rumpl, E. (1979). ‘Varieties of the locked-in syndrome’. Journal of Neurology, 221.

Clark, A. (2001). Mindware: An Introduction to the Philosophy of Cognitive Science.

Davidson, D. (1970). ‘Mental events’. In Essays on Actions and Events.

Farah, M. J. (2005). ‘Neuroethics: The practical and the philosophical’. Trends in Cognitive Sciences, 9.

Farah, M. J. (2008). ‘Neuroethics and the problem of other minds: Implications of neuroscience for the moral status of brain-damaged patients and non-human animals’. Neuroethics, 1.

Giacino, J. T. et al. (2002). ‘The minimally conscious state: definition and diagnostic criteria’. Neurology, 58.

Laureys, S., Owen, A. M., and Schiff, N. D. (2004). ‘Brain function in coma, vegetative state, and related disorders’. Lancet Neurology, 3.

Menon, D. K. et al. (1998). ‘Cortical processing in the persistent vegetative state’. Lancet, 352.

Mill, J. S. (1979). The Collected Works of John Stuart Mill, Volume IX—An Examination of William Hamilton’s Philosophy and of The Principal Philosophical Questions Discussed in his Writings, ed. J. M. Robson.

Multi-Society Task Force on PVS (1994). ‘Medical aspects of the persistent vegetative state (1)’. New England Journal of Medicine, 330.

Owen, A. M., Coleman, M. R., Boly, M., Davis, M. H., Laureys, S., and Pickard, J. D. (2006). ‘Detecting awareness in the vegetative state’. Science, 313.

Schiff, N. D. et al. (2005). ‘fMRI reveals large-scale network activation in minimally conscious patients’. Neurology, 64.

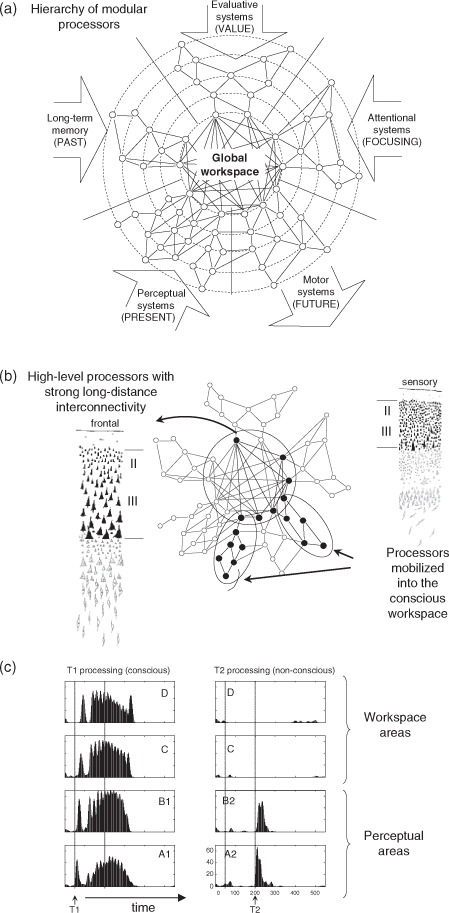

neuronal global workspace The cognitive theory of a *global workspace was developed by Baars (1989). Here we describe a cognitive neuroscientific hypothesis, partially derived from Baars’ architecture, and termed the ‘global neuronal workspace’ (GNW; Dehaene et al. 1998, 2003, 2006, Dehaene and Naccache 2001).

1. Neuronal basis of the global workspace

2. Simulations and predictions

3. Empirical evidence

4. Conclusion

Baars (1989), extending earlier proposals by Shallice and Posner, proposed a cognitive architecture in which a collection of specialized non-conscious processors, all operating in parallel, compete for access to the global workspace (GW), a capacity-limited system which allows the processors to flexibly exchange information. At any given time, the current content of this workspace is thought to correspond to the *contents of consciousness.

Baars (1989) initially proposed that the GW related to the reticular formation of the brainstem and to nonspecific thalamic nuclei. Dehaene and collaborators (Dehaene et al. 1998, 2003, 2006, Dehaene and Naccache 2001), however, argued that to allow for the sharing of detailed, highly differentiated information, a larger-capacity system was needed and had to rely on cortical pyramidal neurons with long-distance cortico-cortical connections. Thus, the neuronal basis of the GW was proposed to consist of a highly distributed set of large pyramidal neurons which interconnect distant specialized cortical and subcortical processors and can broadcast signals at the brain scale. Those neurons break the modularity of the nervous system, not only because they link high-level cortical areas, but also because they can convey top-down, NMDA-receptor-mediated amplification signals to almost all cortical regions. Thanks to this top-down mobilization mechanism, information represented within essentially any cortical area can be temporarily amplified, gain access to the workspace, and be broadcast back to multiple regions beyond those initially activated. This brain-scale broadcasting creates a global availability that results in the possibility of verbal or non-verbal report and is experienced as a conscious state.

It is important to understand that the GW is not uniquely localized to a single cortical area, but is highly distributed. Nevertheless, pyramidal neurons with large cell bodies, broad dendritic trees, and very long axons are denser in some areas than in others. In all primates, prefrontal, cingulate, and parietal cortices, together with the superior temporal sulcus, are closely interlinked by a dense network of long-distance connections. At the beginning of the 20th century, von Economo had already noted that these regions are characterized by a thick layer of large pyramidal cells, particularly in cortical layers II and III, that send and receive long-distance projections, including through the corpus callosum. From these observations, Dehaene et al. (1998) predicted that these areas would be systematically activated in brain-imaging studies of conscious-level processing, but that finer intracranial *electroencephalography (EEG) or *single-cell recordings would ultimately identify a broadcasting of conscious contents to many other sites, a prediction that has recently been upheld (see section 3 below).

The nature of the association areas that contribute most directly to the workspace can explain which operations are typically associated with conscious-level processing. These areas include five sets of brain systems (Dehaene et al. 1998): high-level perceptual processors (e.g. inferotemporal cortex), evaluation circuits (e.g. amygdala, cingulate, and orbitofrontal regions), planning and motor intention systems (e.g. prefrontal and premotor areas), long-term memory circuits (e.g. hippocampus and parahippocampal regions), and attention-orienting circuits (e.g. posterior parietal cortices). Thanks to the tight interconnections of these five systems, perceptual information which is consciously accessed can be laid down in episodic memory, be evaluated, and lead to attention reorienting and the formation of novel behavioural plans (including verbal reports).

Parts of the GW architecture have been simulated, based either on networks of formal neurons (Dehaene et al. 1998), or on more realistic thalamocortical columns of integrate-and-fire units (Dehaene et al. 2003, Dehaene and Changeux 2005). These simulations have identified a set of essential properties of the GNW architecture.

Feedforward excitation followed by ignition. During processing of a brief external stimulus, two stages can be distinguished: perceptual activation first propagates in an ascending feedforward manner, then this bottom-up activity is amplified by top-down connections, thus leading to a sustained global reverberating ‘ignition’.

Central competition. Each ignited state is characterized by the distributed but synchronous activation of a subset of thalamocortical columns, whose topology defines the contents of consciousness, the rest of the workspace neurons being inhibited. This inhibition temporarily prevents other stimuli from entering the GNW, thus creating a serial bottleneck and phenomena similar to the *attentional blink and *inattentional blindness.

All-or-none ignition. Ignition corresponds to a sharp dynamic phase transition and is therefore all-or-none: a stimulus either fully ignites the GNW, or its activation quickly dies out. This all-or-none property defines a sharp threshold for access to consciousness by external stimuli.

Oscillations and synchrony. Ignition is accompanied by an increase in membrane voltage oscillations, particularly in the high-frequency *gamma band (40 Hz and above), as well as by increased synchrony between distant cortical sites.

Stochasticity. Conscious access is stochastic: due to fluctuations in spontaneous activity, identical stimuli may or may not pass the ignition threshold.
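The all-or-none and stochastic properties described above can be illustrated with a deliberately simplified model. The sketch below is not the GNW simulation of Dehaene and colleagues, which used networks of formal neurons or thalamocortical columns of integrate-and-fire units; it assumes a single hypothetical rate unit with strong recurrent self-excitation, a brief square input pulse, and additive Gaussian noise, with every parameter value (`w`, `theta`, `gain`, `tau`, `noise`, pulse timing) chosen arbitrarily for illustration. Self-excitation makes the unit bistable, so after the pulse its activity either falls back to a low resting state or settles into a sustained high ("ignited") state, and with noise the same pulse can ignite on some trials and not on others.

```python
import numpy as np


def sigmoid(x):
    """Logistic activation function."""
    return 1.0 / (1.0 + np.exp(-x))


def simulate_ignition(pulse_amp, n_trials=200, noise=0.05, w=1.0, theta=0.5,
                      gain=10.0, tau=1.0, dt=0.01, t_total=15.0,
                      pulse_on=1.0, pulse_off=3.0, seed=0):
    """Return the final activity of a hypothetical self-exciting rate unit per trial.

    dr/dt = (-r + sigmoid(gain * (w * r + I(t) - theta))) / tau, plus additive
    Gaussian noise, integrated with the Euler-Maruyama method. I(t) is a
    square pulse of height `pulse_amp` between `pulse_on` and `pulse_off`.
    """
    rng = np.random.default_rng(seed)
    # With the default parameters the resting (low) fixed point is r ~ 0.0072.
    r = np.full(n_trials, 0.0072)
    n_steps = int(round(t_total / dt))
    for step in range(n_steps):
        t = step * dt
        inp = pulse_amp if pulse_on <= t < pulse_off else 0.0
        drift = (-r + sigmoid(gain * (w * r + inp - theta))) / tau
        r = r + drift * dt + noise * np.sqrt(dt) * rng.standard_normal(n_trials)
    return r


def ignition_probability(pulse_amp, **kwargs):
    """Fraction of trials that end in the high ('ignited') state."""
    final = simulate_ignition(pulse_amp, **kwargs)
    # With the default w and theta the unstable middle fixed point is r = 0.5,
    # so activity above it counts as ignited.
    return float(np.mean(final > 0.5))
```

Sweeping the hypothetical pulse amplitude, e.g. `for amp in (0.1, 0.2, 0.3, 0.4, 0.5): print(amp, ignition_probability(amp))`, should show the ignition probability rising from near 0 to near 1, with intermediate values only for amplitudes close to the deterministic threshold. On any single trial, the final activity lands near either the low or the high state, with essentially nothing in between.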

Subliminal versus preconscious states. Two major factors may prevent ignition and therefore conscious access. First, the stimulus strength may be too weak, in which case its bottom-up activation progressively dies out as it climbs through the perceptual hierarchy (subliminal stimulus). Second, even with sufficient strength, the stimulus may be blocked because the GNW is already occupied by another competing conscious representation (preconscious stimulus).

Graded levels of vigilance. The ignition threshold is affected by ascending neuromodulatory vigilance signals. A minimal level of ascending neuromodulation exists, below which ignited states cease to be stable. This transition from and to a ‘vigil’ state corresponds to a Hopf bifurcation in dynamic systems theory, which can mimic a gradation of states of consciousness, from high attention to drowsiness or coma (Dehaene and Changeux 2005).
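What a Hopf bifurcation is can be illustrated with its generic normal form, a standard textbook system rather than the Dehaene and Changeux (2005) network. The sketch assumes the supercritical version, chosen only for simplicity (the entry does not say which type arises in the GNW simulations), and treats the bifurcation parameter `mu` as a hypothetical stand-in for the level of ascending neuromodulation. For `mu < 0` any oscillation decays to rest; for `mu > 0` a stable oscillation with amplitude `sqrt(mu)` appears, so the system's stable behaviour changes qualitatively as one parameter crosses a critical value.

```python
import math


def hopf_normal_form(mu, omega=2 * math.pi, r0=0.05, dt=0.01, t_total=100.0):
    """Euler-integrate the supercritical Hopf normal form in polar coordinates.

    dr/dt   = mu * r - r**3   (oscillation amplitude)
    dphi/dt = omega           (oscillation phase)
    Returns the sampled signal x(t) = r(t) * cos(phi(t)).
    """
    r, phi = r0, 0.0
    xs = []
    for _ in range(int(round(t_total / dt))):
        r += dt * (mu * r - r ** 3)
        phi += dt * omega
        xs.append(r * math.cos(phi))
    return xs


def steady_amplitude(mu, **kwargs):
    """Peak |x| over the second half of the run, after transients have decayed."""
    xs = hopf_normal_form(mu, **kwargs)
    tail = xs[len(xs) // 2:]
    return max(abs(x) for x in tail)
```

Sweeping `mu` across zero, e.g. `[steady_amplitude(m) for m in (-0.5, -0.1, 0.1, 0.5)]`, shows the amplitude staying essentially at zero for negative values and growing roughly as `sqrt(mu)` for positive ones.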

Spontaneous activity. The GNW is the seat of permanent spontaneous activity. Thus, global ignited states can also occur in the absence of external stimulation, due to spontaneous activity alone, in which case activation tends to start in high-level areas and to propagate top-down to lower areas. Even without external stimulation, the GNW ceaselessly passes through a series of metastable states, each consisting of a brain-scale global state in which a subset of processors is active. Although stochastic, these states are constantly sculpted and selected by vigilance signals and reward systems (mediated by cholinergic, dopaminergic, and other neuromodulatory pathways).

Many predictions of the GNW model have been supported. In otherwise very different paradigms, neurophysiological and *functional brain imaging experiments have systematically related the conscious reportability of perceptual stimuli to both an amplification of activation in the brain areas coding the relevant content (e.g. area V5 for motion) and the additional activation of a prefrontoparietal network (Haynes et al. 2005). Temporally resolved studies of identical stimuli that did or did not lead to a subjective conscious experience revealed an early non-conscious feedforward activation, followed by a late anterior and global activation only on conscious trials. This conscious activation is late, durable, and all-or-none, exhibits a non-linear stochastic threshold, and involves a distributed set of areas including prefrontal cortex (Sergent et al. 2005, Del Cul et al. 2007). When a stimulus crosses the threshold for consciousness, gamma-band oscillations are enhanced (Melloni et al. 2007), and long-distance synchrony selectively appears, particularly in the beta band (13–30 Hz; Gross et al. 2004), with a primary origin in prefrontal cortex accompanied by a global broadcasting to 70% of randomly selected cortical sites (Gaillard et al. 2009). Attended but masked stimuli evoke a detectable but weak propagation of activation (subliminal state), while non-attended stimuli evoke an initial strong activation followed by sudden blocking occurring largely at the prefrontal level (preconscious state; Sigman and Dehaene 2008). Finally, even in the absence of stimulation, prefrontoparietal networks are spontaneously active in vigil subjects, and their metabolism tracks states of vigilance, showing a disproportionate decrease during *sleep, vegetative state, or coma (see BRAIN DAMAGE).

The GNW theory is a precise and testable proposal backed up by considerable evidence. One challenge for this theory will be to account not only for conscious access during specific processing tasks, but also for conscious experience in the absence of a task. Ned Block, amongst others, while not denying the idea of global access, doubts that it can account for ‘phenomenal awareness’. Naccache and I (2001) have argued that the illusion of a rich phenomenal world rests upon the variety of preconscious representations that can, at any moment, be brought into the GNW and therefore into consciousness. According to the GNW theory, consciousness is both tightly limited (a single representation is accessed at a time) and yet enormously broad in its potential scope.

STANISLAS DEHAENE

Baars, B. J. (1989). A Cognitive Theory of Consciousness.

Dehaene, S. and Changeux, J. P. (2005). ‘Ongoing spontaneous activity controls access to consciousness: a neuronal model for inattentional blindness’. PLoS Biology, 3.

—— and Naccache, L. (2001). ‘Towards a cognitive neuroscience of consciousness: basic evidence and a workspace framework’. Cognition, 79.

——, Kerszberg, M., and Changeux, J. P. (1998). ‘A neuronal model of a global workspace in effortful cognitive tasks’. Proceedings of the National Academy of Sciences of the USA, 95.

——, Sergent, C., and Changeux, J. P. (2003). ‘A neuronal network model linking subjective reports and objective physiological data during conscious perception’. Proceedings of the National Academy of Sciences of the USA, 100.

——, Changeux, J. P., Naccache, L., Sackur, J., and Sergent, C. (2006). ‘Conscious, preconscious, and subliminal processing: a testable taxonomy’. Trends in Cognitive Sciences, 10.

Del Cul, A., Baillet, S., and Dehaene, S. (2007). ‘Brain dynamics underlying the nonlinear threshold for access to consciousness’. PLoS Biology, 5.

Gaillard, R., Dehaene, S., Adam, C. et al. (2009). ‘Converging intra-cranial markers of conscious access’. PLoS Biology, 7.

Gross, J., Schmitz, F., Schnitzler, I. et al. (2004). ‘Modulation of long-range neural synchrony reflects temporal limitations of visual attention in humans’. Proceedings of the National Academy of Sciences of the USA, 101.

Haynes, J. D., Driver, J., and Rees, G. (2005). ‘Visibility reflects dynamic changes of effective connectivity between V1 and fusiform cortex’. Neuron, 46.

Melloni, L., Molina, C., Pena, M., Torres, D., Singer, W., and Rodriguez, E. (2007). ‘Synchronization of neural activity across cortical areas correlates with conscious perception’. Journal of Neuroscience, 27.

Sergent, C., Baillet, S., and Dehaene, S. (2005). ‘Timing of the brain events underlying access to consciousness during the attentional blink’. Nature Neuroscience, 8.

Sigman, M. and Dehaene, S. (2008). ‘Brain mechanisms of serial and parallel processing during dual-task performance’. Journal of Neuroscience, 28.

neurophenomenology Neurophenomenology was originally proposed by Francisco Varela (1996) as a response to the *hard problem of consciousness as defined by Chalmers. It is intended not as a theory of consciousness that would constitute a solution to the hard problem, but as a methodological response that maps out a non-reductionistic approach to discovering a solution. Neurophenomenology works on both sides of the problem by incorporating phenomenological method into experimental cognitive neuroscience. *Phenomenology is an important part of this approach because it anchors both theoretical and empirical investigations of consciousness in embodied and situated experience as it is lived through, and as it is expressed as verbally articulated descriptions in the first person, in contrast to third-person correlates of experience or abstract representations (Varela and Thompson 2003). Neurophenomenology thus attempts to naturalize phenomenology, in the sense of providing an explicitly naturalized account of consciousness, specifically integrating first-person data into an explanatory framework where experiential properties and processes are made continuous with the properties and processes accepted by the natural sciences (see Roy et al. 1999).

Neurophenomenology follows Edmund Husserl in understanding phenomenology to be a methodologically guided reflective examination of experience. Some authors also adopt Buddhist traditions and contemplative techniques for this purpose (Varela et al. 1991). In neurophenomenological experiments both the experimenter(s) and experimental subjects receive some level of training in phenomenological method. This training, as Varela proposed it, includes the practice of phenomenological bracketing—the setting aside of opinions or theories about one’s own experience or about consciousness in general. Subjects bracket their ordinary attitudes in order to shift attention from what they experience, or what they think they experience, to how they experience it. Varela identified three steps in phenomenological method:

1 Suspending beliefs or theories about experience and what is experienced (phenomenologists call this the epoche)

2 Gaining intimacy with the domain of investigation (this involves a focused description of experience and of how things appear in our experience)

3 Sharing descriptions and seeking intersubjective verification.

The phenomenological method can either be self-induced by subjects familiar with it or guided by the experimenter through a set of open questions: questions that are not shaped by the experimenter’s expectations and are directed not at opinions or theories but at the subject’s current experience. Using this method, subjects provide focused descriptions that form the basis of specific, stable, and intersubjectively verifiable experiential categories, which can guide the scientist in analysing and interpreting neurophysiological data. The goal, in the service of a science of consciousness, is to make the explanandum (in this case some relevant aspect of consciousness) more precise by providing an accurate description of it.

Several studies have shown the practicality of integrating this method with neuroscientific experiments. Varela and colleagues (Rodriguez et al. 1999), studying correlations between subjective experience and brain synchronization, showed a relation between moments of conscious perception and large-scale neural synchrony in the *gamma band. Lutz et al. (2002) built on this research and introduced more specific phenomenological methods. They studied the variability in *electroencephalographic (EEG) measures of brain activity that appears in many experimental situations and is presumed to arise because subjects sometimes get distracted from the experimental task. The variability may be caused by fluctuations in the subject’s attentive state, spontaneous thought processes, strategy decisions for carrying out the task, and so on. The significance of these fluctuations is usually missed because experimenters tend to average results across a series of trials and across subjects, thereby washing out any interference caused by these subjective parameters. Lutz and his colleagues asked whether the effect of these subjective parameters could be measured. Their neurophenomenological approach combined first-person data (reports guided by phenomenological method), EEG, and the dynamical analysis of neural processes to study subjects exposed to a three-dimensional perceptual *illusion.

Subjects were first trained in phenomenological method, as outlined above. They developed descriptions (refined verbal reports) of their experience through a series of preliminary practice trials on a well-known depth perception task. Through this training subjects became knowledgeable about their own experience, defined their own categories for describing the subjective parameters, and were able to report on the presence, absence, or degree of distraction, inattentive moments, cognitive strategies, etc. when performing the task. Specifically, subjects were able to indicate whether their attention was steady, fragmented, or disrupted; on the basis of these descriptions, categories were defined a posteriori and used to classify the trials into phenomenologically based clusters. Subjects then used these categories as a reporting shorthand during the main trials, in which the experimenters measured reaction times, recorded electrical brain activity, and related both to the subject’s own report of each trial. This process revealed subtle changes in the subject’s experience that were correlated both with reaction times and with dynamic measurements of the transient patterns of local and long-distance synchrony between oscillating neural populations, specified as a dynamic neural signature (DNS). For example, characteristic patterns of phase synchrony recorded at frontal electrodes before the stimulus correlated with the degree of preparation reported by subjects. Lutz et al. showed that DNSs differentially condition the behavioural and neural response to the stimulus.
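One common way to quantify phase synchrony between two electrodes is the phase-locking value (PLV): the magnitude of the trial-averaged unit phasor of the phase difference between the two signals. The sketch below is not Lutz et al.’s actual analysis pipeline. It assumes signals that are already band-pass filtered, extracts instantaneous phase with the Hilbert transform (NumPy and `scipy.signal.hilbert`), and then computes the PLV separately for each phenomenological cluster. The helper `plv_by_cluster`, the cluster labels ‘steady’ and ‘fragmented’, and the synthetic 40 Hz data are all hypothetical illustrations.

```python
import numpy as np
from scipy.signal import hilbert

def phase_locking_value(x, y):
    """Phase-locking value between two channels, computed across trials.

    x, y: arrays of shape (n_trials, n_samples), assumed to be already
    band-pass filtered (e.g. in the gamma band).
    Returns an array of shape (n_samples,) with values in [0, 1]:
    1 means the phase difference is identical on every trial,
    values near 0 mean it is scattered across trials.
    """
    phase_x = np.angle(hilbert(x, axis=-1))  # instantaneous phase per trial
    phase_y = np.angle(hilbert(y, axis=-1))
    # Average the unit phasor of the phase difference over trials
    return np.abs(np.mean(np.exp(1j * (phase_x - phase_y)), axis=0))

def plv_by_cluster(x, y, labels):
    """Hypothetical helper: time-averaged PLV for each report category."""
    labels = np.asarray(labels)
    return {lab: float(phase_locking_value(x[labels == lab], y[labels == lab]).mean())
            for lab in np.unique(labels)}

# Synthetic data (hypothetical): a 40 Hz oscillation at two "electrodes".
rng = np.random.default_rng(0)
fs, f, n = 500, 40.0, 50                 # sampling rate (Hz), frequency (Hz), trials per cluster
t = np.arange(fs) / fs                   # one second of samples
start = rng.uniform(0, 2 * np.pi, size=(2 * n, 1))   # random start phase per trial
lag = np.concatenate([np.full((n, 1), 0.5),          # fixed lag: "steady" trials
                      rng.uniform(0, 2 * np.pi, size=(n, 1))])  # random lag: "fragmented"
x = np.sin(2 * np.pi * f * t + start)
y = np.sin(2 * np.pi * f * t + start + lag)
labels = ["steady"] * n + ["fragmented"] * n

result = plv_by_cluster(x, y, labels)
pooled = float(phase_locking_value(x, y).mean())  # all trials averaged together
print(result, pooled)
```

Sorting trials by the subject’s report before computing synchrony is the design point here: the ‘steady’ cluster shows near-perfect locking and the ‘fragmented’ cluster very little, whereas the pooled value over all trials lands in between and hides the difference, much as averaging across trials can wash out the effect of subjective parameters.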

Neurophenomenological methods have also been used to study the pre-seizure experience of *epileptic patients. Experiences that occur at the beginning of a seizure often involve the visual and auditory modalities, in the form of illusions or *hallucinations. The patient may see a scene or a face, hear a voice or music, or experience *déjà vu, and is often aware of the illusory nature of the experience. Penfield (1938) showed that these experiences can be reproduced by electrical stimulation of the temporal lobe in epileptic patients during surgical procedures. More controversially, it may be possible for a subject to affect epileptic neuronal activity voluntarily. Penfield and Jasper (1954), for example, described the blocking of a parietal seizure by the initiation of a complex mathematical calculation. Subjects have also been trained in biofeedback techniques to reduce seizure frequency (Fenwick 1981). In studies of a patient with an unusually focal and stable occipitotemporal epileptic discharge, Le Van Quyen et al. (1997a, 1997b) discovered various clusters of unstable rhythms in the fine dynamic structure of the epileptic spike patterns. In subsequent studies of 47 patients the researchers used neurophenomenological methods to correlate changes in these patterns with pre-ictal (pre-seizure) experiences (Le Van Quyen et al. 1999, 2001; see Le Van Quyen and Petitmengin 2002).

The experimental protocols used by the Varela group in these studies show that it is possible to integrate phenomenology and experimental procedures, and so to allow the mutual illumination of first-person accounts and third-person data. Phenomenology contributes to the analytic framework insofar as the categories generated in phenomenological description are used to interpret EEG data. Although neurophenomenologists emphasize long-range dynamic neural synchronization, they frame the problem of consciousness in terms that are not reducible to brain processes alone. They view consciousness as radically embodied, and they appeal to enactive models for understanding the embodied and environmentally embedded nature of the brain dynamics that underlie consciousness (Thompson and Varela 2001, Varela and Thompson 2003; see SENSORIMOTOR APPROACH TO (PHENOMENAL) CONSCIOUSNESS). In this regard they draw on phenomenological concepts of situated and enactive agency found in writers such as Heidegger and Merleau-Ponty.

In a less strict sense the term ‘neurophenomenology’ has been used to signify any attempt to employ introspective data or phenomenal reports in neuroscientific experiments. Many scientific experiments use phenomenal reports. Whether such experiments count as neurophenomenological in the strict sense, however, depends on the amount and nature of the training the subjects receive and on the focus of their reports. For example, if the subjects in an fMRI *masking experiment are simply asked whether they see a target, their variable phenomenal reports may be a critical measure for interpreting the neural activity recorded, but it is not clear to what extent these reports are about the target or about the subject’s experience of the target (Gallagher and Overgaard 2005).

SHAUN GALLAGHER

Fenwick, P. (1981). ‘Precipitation and inhibition of seizures’. In Reynolds, E. and Trimble, M. (eds) Epilepsy and Psychiatry.

Gallagher, S. and Overgaard, M. (2005). ‘Introspections without introspeculations’. In Aydede, M. (ed.) Pain: New Essays on the Nature of Pain and the Methodology of its Study.

Le Van Quyen, M. and Petitmengin, C. (2002). ‘Neuronal dynamics and conscious experience: an example of reciprocal causation before epileptic seizures’. Phenomenology and the Cognitive Sciences, 1.

——, Adam, C., Lachaux, J. P. et al. (1997a). ‘Temporal patterns in human epileptic activity are modulated by perceptual discriminations’. NeuroReport, 8.

——, Martinerie, J., Adam, C., and Varela, F. J. (1997b). ‘Unstable periodic orbits in a human epileptic activity’. Physical Review E, 56.

——, Martinerie, J., Baulac, M., and Varela, F. J. (1999). ‘Anticipating epileptic seizure in real time by a nonlinear analysis of similarity between EEG recordings’. NeuroReport, 10.

——, Martinerie, J., Navarro, V. et al. (2001). ‘Anticipation of epileptic seizures from standard EEG recordings’. Lancet, 357.

Lutz, A. et al. (2002). ‘Guiding the study of brain dynamics by using first-person data: synchrony patterns correlate with ongoing conscious states during a simple visual task’. Proceedings of the National Academy of Sciences of the USA, 99.

Penfield, W. (1938). ‘The cerebral cortex in man. I. The cerebral cortex and consciousness’. Archives of Neurology and Psychiatry, 40.

Penfield, W. and Jasper, H. (1954). Epilepsy and the Functional Anatomy of the Human Brain.

Rodriguez, E., George, N., Lachaux, J. P., Martinerie, J., Renault, B., and Varela, F. J. (1999). ‘Perception’s shadow: long-distance synchronization in the human brain’. Nature, 397.

Roy, J-M., Petitot, J., Pachoud, B. and Varela, F. J. (1999). ‘Beyond the gap: an introduction to naturalizing phenomenology’. In Petitot, J. et al. (eds) Naturalizing Phenomenology: Issues in Contemporary Phenomenology and Cognitive Science.

Thompson, E. and Varela, F. (2001). ‘Radical embodiment: neural dynamics and consciousness’. Trends in Cognitive Sciences, 5.

Varela, F. J. (1996). ‘Neurophenomenology: a methodological remedy to the hard problem’. Journal of Consciousness Studies, 3.

—— and Thompson, E. (2003). ‘Neural synchrony and consciousness: a neurophenomenological perspective’. In Cleeremans, A. (ed.) The Unity of Consciousness: Binding, Integration, and Dissociation.

——, ——, and Rosch, E. (1991). The Embodied Mind.

neuropsychology and states of disconnected consciousness Brain-damaged patients can be a source of highly privileged knowledge. Not only can they tell us which capacities can be disturbed in relative isolation from others, and which anatomical systems of the brain are important for processing them, but they also offer a special route to the study of consciousness. This is because it turns out, surprisingly, that in virtually all of the major cognitive categories that are seriously disturbed by *brain damage, there can be remarkably preserved functioning without the patients themselves being aware of the residual function. Detailed evidence regarding all the syndromes reviewed here, and some theoretical considerations, can be found in Weiskrantz (1997). This entry cites only highlights and more recent evidence.

1. Residual function following brain damage

2. Blindsight and related phenomena

3. Commentary and capacity

4. Summing up

The best and most thoroughly studied example of this ‘performance without awareness’ is the *amnesic syndrome, a severe memory disorder. Amnesic syndrome patients are grossly impaired in remembering recent experiences, even after an interval as short as a minute. The patients need have no impairment of short-term memory, e.g. in reciting back strings of digits, nor need they have any perceptual or intellectual impairments, but they have grave difficulty in acquiring and holding new information (anterograde amnesia). Memory for events from before the onset of the injury or brain disease is also typically affected (retrograde amnesia), especially for those events that occurred a few years before the brain damage. Older knowledge can be retained—the patients retain their vocabulary and acquired language skills, they know who they are, they may know where they went to school, although they may be vague even about such early knowledge. The disorder is severely crippling, and yet there is evidence of good storage of new experiences.

Experimentally robust evidence came in the 1950s from the famous patient H. M., who became severely amnesic after bilateral surgery to structures in the medial portions of his temporal lobes for the relief of intractable *epilepsy. It soon became apparent in studies by Brenda Milner and colleagues that H. M. was able to learn various perceptual and motor skills, such as mastering a pursuit rotor, in which one must learn to keep a stylus on a narrow track on a moving drum. He was also able to learn mirror drawing. He retained such skills excellently from session to session, but he demonstrated no awareness of having encountered the experimental situations before, nor could he recognize them.

The examples of perceptual-motor skill learning, and possibly even of classical conditioning, did not stretch credulity unduly, because such skills can themselves become more or less automatic, devoid of cognition. The results became far more counter-intuitive, however, when it was shown that amnesic subjects could also retain information about verbal material, but again without recognition or acknowledgement. The novel demonstration involved showing lists of pictures or words and, after an interval of some minutes, testing recognition of each item in a standard yes/no test. Not surprisingly, the patients performed at chance. But when asked to ‘guess’ the identity of pictures or words from difficult fragmented drawings, they were much better able to do so for the items to which they had been exposed (Warrington and Weiskrantz 1968).

Another way of demonstrating this phenomenon was to present just some of the letters of a previously exposed word, e.g. the initial pair or triplet of letters. The patients were better at finding the correct words to which they had been exposed earlier than at finding control items to which they had not been exposed, a phenomenon called *priming: the facilitation of retention induced by previous exposure. Retention by amnesic patients has been repeatedly confirmed, and retention intervals can be as long as several months; Milner and colleagues reported successful retention by H. M. over an interval of four months. Such patients, in fact, can learn a variety of novel types of tasks and information, such as new words, new meanings, and new rules. Learning of novel pictures and diagrams has also been demonstrated.

An early response by some memory researchers was that the positive evidence might just reflect normal but ‘weak’ memory. But when dissociations started to appear for other specific forms of memory disorder, different from the amnesic syndrome, it gradually became clear that there are a number of different *memory systems in the brain operating in parallel, although of course normally in interaction with each other. For example, other subjects had brain damage elsewhere that caused them to lose the meanings of words without behaving like amnesic subjects; that is, they could remember having been shown a word before, and remember that on that occasion they also had not known its meaning. Other brain-damaged subjects could have very impoverished short-term memory, being able to repeat back only one or two digits from a list, and yet were otherwise normal in remembering events and recognizing facts. They could remember that they had only been able to repeat back two digits when tested the day before!

Another example of residual function following brain damage is unilateral neglect, associated in humans with lesions of the right parietal lobe. The patients behave as though the left half of their visual (and sometimes also their tactile) world is missing. Even though the subjects neglect the left half of their visual world, it can be shown that the ‘missing’ information is being processed by the brain. Bisiach and Rusconi (1990) reported on unilateral neglect patients who were shown drawings of wine glasses that were either intact or broken on the left, neglected side. In that study some patients actually followed the contour of the drawing with their hands but failed to report the details on its left side, and when questioned said they would prefer the unbroken wine glass. Other studies have asked whether an ‘unseen’ stimulus in the neglected left field can ‘prime’ a response by the subject to a stimulus in the right half-field. Thus, Ladavas et al. (1993) showed that a word presented in the right visual field was processed faster when it was preceded by a brief presentation of an associated word in the ‘neglected’ left field. When the subject was actually required to respond directly to the word on the left, e.g. by reading it aloud, he was unable to do so; it was genuinely ‘neglected’. A similar demonstration by other investigators used pictures of animals and fruit in the neglected left field as primes for pictures on the right, which their patients had to classify as quickly as possible as animals or fruit by pressing the appropriate key. The unseen picture on the left produced faster reaction times when it matched the category of the picture on the right. Thus material, both pictorial and verbal, of which the subject has no awareness in the left visual field, and to which he or she cannot respond explicitly, nevertheless gets processed. The subject may not ‘know’ it, but some part of the brain does.

There is a type of memory disorder that is socially very awkward for the patient. Prosopagnosia (see AGNOSIA) is an impairment in the ability to recognize and identify familiar faces. The problem is not one of knowing that a face is a face—it is not a perceptual difficulty, but one of facial memory. The condition is associated with damage to the inferior posterior temporal lobe, especially in the right hemisphere, and can be so severe that patients do not recognize the faces of members of their own family. Yet it has been clearly demonstrated that the autonomic nervous system can ‘tell’ the difference between familiar and unfamiliar faces. In a study by Tranel and Damasio (1985), patients were required to pick out familiar from unfamiliar faces. They scored at chance, but their skin conductance responses were nevertheless reliably larger for the familiar faces than for the unfamiliar ones. And so the patients do not ‘know’ the faces, but some part of their autonomic nervous system evidently does.

Even when that uniquely human cognitive achievement, the skilled use of language, is severely disturbed by brain damage, there is evidence of residual processing of which subjects remain unaware. One example comes from patients who cannot read whole words, although they can painfully extract a word by reading it ‘letter by letter’. This form of acquired dyslexia is associated with damage to the left occipital lobe. In a study by Shallice and Saffran (1986), one such patient was tested with written words that he could not identify: he could neither read them aloud nor report their meanings. Nevertheless he performed reliably above chance on a lexical decision task, in which he was asked to guess whether each item was a real word or a nonsense word. Moreover, he could categorize words according to their meanings at above-chance levels, using forced-choice responses between two alternatives. For example, he could say whether the written name of a country belonged inside or outside Europe, despite being unable to read the word aloud, identify it, or explicitly give its meaning.
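A claim that performance is ‘reliably above chance’ in a two-alternative forced-choice task is usually backed by a statistical test against the 50 per cent guessing rate. A minimal sketch of one such test, an exact one-sided binomial test written with the Python standard library, follows. The function name and the trial counts in the example are hypothetical and are not data from Shallice and Saffran (1986) or any other study cited here.

```python
from math import comb

def above_chance_p(correct: int, trials: int, chance: float = 0.5) -> float:
    """Exact one-sided binomial p-value.

    Probability of scoring `correct` or more out of `trials` if the subject
    were purely guessing with success probability `chance` (0.5 for a
    two-alternative forced choice).
    """
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )

# Hypothetical counts, for illustration only: 60 of 80 forced-choice trials correct.
p = above_chance_p(60, 80)
print(f"p = {p:.2g}")  # a very small p-value suggests performance is above chance
```

The same function covers the opposite case too: a patient who ‘scores at chance’ produces a large p-value, so guessing cannot be ruled out.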

An even more striking outcome emerges from studies of patients with severe loss of linguistic comprehension and production (aphasia). An informative study that tackled both grammatical and semantic aspects of comprehension in a severely impaired patient is that by Tyler (1988), who presented the subject with sentences that were degraded either semantically or syntactically. The subject was able to follow the instruction to respond as quickly as possible whenever a particular target word was uttered. Normal subjects are known to be slower to respond to the target word when it occurs in a degraded context than in a normal sentence. Even though Tyler’s aphasic patient was severely impaired in his ability to judge whether a sentence was highly anomalous or normal, his reaction times to target words in degraded contexts showed the same slowing that is characteristic of normal control subjects. This demonstrated that the patient retained an intact capacity to respond to both the semantic and the grammatical structure of the sentences. But he could not use this capacity, either in his comprehension or in his use of speech. Tyler distinguishes between the *online use of linguistic information, which was preserved in her patient, and its exploitation offline.

Perhaps the most celebrated and earliest evidence of function without awareness has come from the study of *commissurotomized patients, colloquially known as ‘split-brain’ patients (see Sperry 1974). This well-known work, the subject of much theoretical discussion, clearly demonstrated instances in which information directed to the right (silent) hemisphere could not be named or ‘seen’, but could nevertheless be shown to have been processed, through categorization, pointing, and other responses.

Thus, neuropsychology has exposed a large variety of examples in which, in some sense, awareness is ‘disconnected’ from a capacity to discriminate or to remember or to attend or to read or to speak. Of course, there is nothing surprising in our performing without awareness—it could even be said that most of our bodily activity is unconscious, and that many of our interactions with the outside world are carried on ‘automatically’ and, in a sense, thoughtlessly. What is surprising about these examples from neuropsychology is that in all these cases the patients are unaware in precisely the situations in which we would normally expect someone to be very much aware.

Perhaps the most dramatic and most counter-intuitive of all such examples is blindsight, the residual visual function that can be demonstrated after damage to the visual cortex. The story actually starts with animal research, some of it more than a century old, and the underlying neuroanatomy of the visual system. The major target of the eye lies in the occipital lobe (after a relay via the thalamus), in the striate cortex (V1). While this so-called geniculostriate pathway is the largest one from the eye destined for targets in the brain, it is not the only one. There are at least nine other pathways from the retina to targets in the brain that remain open after blockade or damage to the primary visual cortex.

From the end of the 19th century (e.g. as summarized by William James) until quite recently, the standard view was that lesions of the visual cortex cause blindness. The change in outlook, almost 100 years later, came when patients were tested in the way that one is forced to test animals, i.e. without depending on a verbal response. Such testing showed that human subjects can discriminate stimuli in their blind fields even though they are not aware of them (Weiskrantz et al. 1974, Weiskrantz 1986). There are conditions under which subjects do have some kind of awareness, and this has turned out to be of interest in its own right. With some stimuli, especially those with very rapid movement or sudden onset, a subject ‘knows’ that something has moved in the blind field, even though he or she does not ‘see’ it as such. This has been dubbed blindsight type 2. With other stimuli the subject can discriminate by forced-choice guessing without any awareness of them (blindsight type 1). The distinction has made it possible to use functional imaging to contrast the ‘aware’ and ‘unaware’ states in blindsight (Sahraie et al. 1997) in the oft-studied subject G. Y., revealing different foci of activity for the two states.

It is not only in the visual modality that examples of ‘unaware’ discrimination capacity have been reported. There are reports of ‘blind touch’ and also a report of ‘deaf hearing’. Cases of blind touch, following parietal lobe damage, closely resemble the early accounts of blindsight. A similar case has been described more recently by Rossetti et al. (1995), who coined the splendid oxymoron ‘numbsense’.

Because many subjects resist answering questions about stimuli they cannot ‘see’, indirect methods of testing for residual visual processing have been developed that allow firm inferences to be drawn about its characteristics without forcing an instrumental response to an unseen stimulus (Marzi et al. 1986). For example, responses to stimuli in the intact hemifield can be shown to be influenced by ‘unseen’ stimuli in the blind hemifield, as in visual completion or in visual summation between the two hemifields. Of the various indirect methods, pupillometry offers a special opportunity, because the pupil is surprisingly sensitive, in a quantitatively precise way, to the spatial and temporal parameters of visual stimuli. Barbur and his colleagues have shown that the pupil constricts in response to, among other properties, movement, colour, and the contrast and spatial frequency of a grating, and that the acuity estimated by pupillometry correlates closely with that determined by conventional psychophysical methods in normal subjects (Barbur and Forsyth 1986). The method is available not only for testing normal visual fields but also for the blind fields of patients, for animals, and for human infants; indeed, for any situation where verbal interchange is impossible or is to be avoided. There is good correspondence between the results of pupillometry and stimulus detection by forced-choice guessing in a human blindsight subject, and also with pupillometry in hemianopic monkeys.

The impetus for blindsight research actually came from results with monkeys with striate cortex lesions, and it now seems that it may be possible to complete the circle. The novel experiments of Cowey and Stoerig (1995) found that monkeys with a unilateral lesion of striate cortex also have blindsight, in the sense that they apparently treat visual stimuli in the affected half-field as non-visual events, i.e. ‘blanks’ (see BLINDSIGHT). A critique of the animal work has been offered by Mole and Kelly (2006), who argue that the animal (but not the human) results could reflect an attentional bias towards stimuli in the intact hemifield rather than a genuine judgement. The similarity of human and monkey results looks encouraging and persuasive, but perhaps the human–animal door still awaits firm and final closure.

What all of the neuropsychological examples cited here (from amnesia, neglect, agnosia, aphasia, blindsight, etc.) have in common is that the subject fails to make a commentary (a genuine voluntary commentary, not just a reflexive or automatic response) that parallels the actual capacity: there is good performance in the absence of acknowledged awareness. One theoretical possibility (among many), discussed in detail elsewhere (Weiskrantz 1997), is that awareness may not be the instigator that enables a report to be made of an event or a capacity; rather, it is the commentary system itself that actually endows awareness—in the medium lies the message. The commentary, of course, need not be verbal; the tail-wag of a dog can be remarkably communicative and informative in this very context. Nor need a commentary be physically issued; the important step is the specific and episodic activation of the commentary system. It may be of non-trivial significance that the fMRI activity associated with the aware state, at least in blindsight, for both patients and normal subjects (in whom simulated blindsight was induced by backward masking), lies well forward of the sensory processing areas.

First, across the whole spectrum of cognitive neuropsychology there are residual functions of good capacity that continue in the absence of the subject’s awareness. Second, comparing aware with unaware modes at matched levels of performance offers a route to brain imaging, by contrasting brain states when a subject is aware with those when he or she is unaware but performing well. Third, whether the subject is aware cannot be determined by studying discriminative capacity alone, since that capacity can be good in the absence of awareness. To return to an earlier distinction, we have to go offline to do this. In operational terms, within the blindsight mode (but in similar terms for all of the syndromes), we have to use something like the ‘commentary key’ in parallel with the ongoing discrimination or, as in the animal experiments where no commentary key is available, try to obtain an independent classification of the events being discriminated. The commentary key is the output of a commentary system of the brain that offers a possible theoretical inroad into concepts of conscious awareness. Fourth, wherever the brain capacity for making the commentary resides, it certainly lies outside the specialized visual processing areas, which may be necessary but are not sufficient for the task.

LARRY WEISKRANTZ

Barbur, J. L. and Forsyth, P. M. (1986). ‘Can the pupil response be used as a measure of the visual input associated with the geniculo-striate pathway?’ Clinical Vision Sciences, 1.

Bisiach, E. and Rusconi, M. L. (1990). ‘Break-down of perceptual awareness in unilateral neglect’. Cortex, 26.

Cowey, A. and Stoerig, P. (1995). ‘Blindsight in monkeys’. Nature, 373.

Ladavas, E., Paladini, R., and Cubelli, R. (1993). ‘Implicit associative priming in a patient with left visual neglect’. Neuropsychologia, 31.

Marzi, C. A., Tassinari, G., Aglioti, S., and Lutzemberger, L. (1986). ‘Spatial summation across the vertical meridian in hemianopics: a test of blindsight’. Neuropsychologia, 24.

Mole, C. and Kelly, S. (2006). ‘On the demonstration of blindsight in monkeys’. Mind and Language, 21.

Rossetti, Y., Rode, G., and Boisson, D. (1995). ‘Implicit processing of somaesthetic information: a dissociation between where and how?’ NeuroReport, 6.

Sahraie, A., Weiskrantz, L., Barbur, J. L., Simmons, A., Williams, S. C. R., and Brammer, M. J. (1997). ‘Pattern of neuronal activity associated with conscious and unconscious processing of visual signals’. Proceedings of the National Academy of Sciences of the USA, 94.

Shallice, T. and Saffran, E. (1986). ‘Lexical processing in the absence of explicit word identification: evidence from a letter-by-letter reader’. Cognitive Neuropsychology, 3.

Sperry, R. W. (1974). ‘Lateral specialization in the surgically separated hemispheres’. In Schmitt, F. O. and Worden, F. G. (eds) The Neurosciences: Third Study Program.

Tranel, D. and Damasio, A. R. (1985). ‘Knowledge without awareness: an autonomic index of facial recognition by prosopagnosics’. Science, 228.

Tyler, L. K. (1988). ‘Spoken language comprehension in a fluent aphasic patient’. Cognitive Neuropsychology, 5.

Warrington, E. K. and Weiskrantz, L. (1968). ‘New method of testing long-term retention with special reference to amnesic patients’. Nature, 217.

Weiskrantz, L. (1986). Blindsight.

—— (1997). Consciousness Lost and Found. A Neuropsychological Exploration.

——, Warrington, E. K., Sanders, M. D., and Marshall, J. (1974). ‘Visual capacity in the hemianopic field following a restricted occipital ablation’. Brain, 97.

non-conceptual content Typically, for a large variety of psychological states such as beliefs, thoughts, desires, hopes, perceptual experiences, sensations, emotions, etc., to be in such a state is to be conscious or aware of something: thus, to think about Dallas is to be conscious of that city; to see a kangaroo is to be visually aware of that particular marsupial. And, it is traditionally assumed, this sort of consciousness essentially consists in the fact that such mental states represent the objects, properties, relations, states-of-affairs, etc., that they are of or about—in other words, they have a representational content. For instance, that ‘Dallas is a big city’ may be the content of one’s thought about Dallas; that ‘there is a kangaroo in front of me’, the content of one’s visual experience.

One important issue about the nature of such content is whether the representational content of all conscious psychological states is conceptual, or whether there are in fact two different kinds of content such that, if thoughts like beliefs and judgements do indeed represent the world in a conceptualized manner, perceptual experiences, sensations, and perhaps even some emotions, have an entirely different kind of content—a non-conceptual one.

Thus, proponents of conceptualism about mental content have it that all conscious psychological states whose function is to represent parts of the subject’s environment have a conceptual content, in the sense that what they represent—and more importantly, how they represent it—is fully determined by the conceptual capacities the subject brings to bear in such a state. One’s awareness of the world, on this view, is entirely constrained by one’s concepts. In contrast, their critics—non-conceptualists—argue that at least some psychological states (including, in particular, those representational mental states whose consciousness uncontroversially involves a distinctive phenomenology, or phenomenal character, like perceptual experiences and sensations) have a content that is not conceptual. On that view, one’s consciousness of one’s environment need not be mediated by one’s concepts.

One central question in this dispute concerns what the distinction between the two kinds of content, conceptual and non-conceptual, really amounts to. Another concerns the link between these distinct kinds of content and consciousness: in particular, why is it that those psychological states whose consciousness is phenomenal are supposed to represent the world in a non-conceptualized manner?

1. Conceptual content?

2. Arguments for conceptualism—and against

3. Non-conceptual content and phenomenal consciousness

So what does the difference between conceptual and non-conceptual content consist in? Essentially the following: if one is in a conscious psychological state the content of which is conceptual, one must possess and deploy certain relevant concepts. In contrast, conscious psychological states with non-conceptual content do not require concepts: one can be in such a state without possessing or deploying concepts for the things thus represented (or any other concept, for that matter).

But what does it mean to ‘deploy’ a concept in a psychological state (and what does it mean to ‘possess’ a concept)? Here is a brief sketch of an answer. Whatever concepts are (and here we face a profusion of theories, with little consensus in sight), the possession of a concept is usually accompanied by certain psychological capacities: the sorts of capacities which play an essential role in thinking and reasoning. For instance, possession of the concept ‘kangaroo’ usually comes with such capacities as the ability (1) to think about kangaroos, (2) to recognize kangaroos, (3) to discriminate them from koalas and other non-kangaroos, (4) to draw inferences about kangaroos, etc. (Note that possession of distinct kinds of concepts may come with different kinds of capacities.) One might then say that to deploy the concept ‘kangaroo’ in a thought about kangaroos is simply to exercise some such capacities while thinking that thought, that is, to use the concept in that thought.

Thus, the representational content p of a conscious psychological state is conceptual if, in order to be in that state with that content, one must exercise the sorts of conceptual capacities typically associated with concepts for the things p represents. In other words, to say of a psychological state that it has a conceptual content is to say that it has the particular content that it does ‘by virtue of the conceptual capacities [ … ] operative in [it]’ (McDowell 1994:66). For instance, the representational content of the thought that ‘there is a friendly kangaroo living nearby’ is determined by the conceptual capacities the thinker exercises when consciously thinking that thought: capacities associated with the use of concepts like ‘kangaroo’, ‘friendly’, ‘living nearby’, etc. Had the subject exercised capacities associated with distinct concepts (such as the concept ‘koala’, say), the content of her thought would have been different, i.e. about koalas, not kangaroos. (Note the term ‘operative’ above. On this view, what determines the representational content of a conscious psychological state is the actual deployment of the conceptual capacities associated with particular concepts, not the mere possession of those concepts or the ability to use them. After all, we possess many concepts for many different things, and the mere fact that one possesses them underdetermines the conceptual content of any given conscious psychological state, since it does not specify which concepts are actually used in that occurrent mental state.)

Finally, the relation of determination between concepts (or the deployment of associated conceptual capacities) and the representational content of conscious psychological states can be captured in terms of the following supervenience thesis—(CT): no representational difference (difference in content) without a conceptual difference. That is, according to (CT), any difference in what conscious psychological states represent—or how they represent it—comes with a difference in the concepts deployed by the subjects in such states. The representational content of a conscious psychological state is non-conceptual, on the other hand, if it is not so determined.

The distinction between conceptual and non-conceptual content is particularly significant when it comes to understanding the nature of the sort of consciousness constitutive of perceptual experiences. Conceptualists (Brewer 1999, McDowell 1994) take it that perceptual experiences are very much like thoughts (or, indeed, are a kind of thought) in the way they represent the environment: the deployment of concepts is necessary for perceptual representation. Non-conceptualists (Evans 1982, Heck 2000, Peacocke 1992, 2001, Tye 1995), on the other hand, suspect that conceptualists over-intellectualize experiences: there can be perceptual representation without any conceptual meddling.

Why accept conceptualism, then? The following considerations have been advanced in its support:

(1) The argument from understanding: in order to be in any conscious psychological state with a representational content p, one must be able to understand p. But understanding is a conceptual matter: it requires concepts. Therefore, since perceptual experiences are conscious psychological states with content, being in such a state requires the possession of concepts (Peacocke 1983).

(2) Conceptual influence: thesis (CT) predicts that the deployment of different concepts can give rise to states with different content. Such variability seems to apply to perceptual experiences. For instance, once you realize that what you first took for the sound of applause on the stereo is in fact the sound of rain on the roof, your auditory experience of that sound—in particular, the way in which the sound is represented—changes (Peacocke 1983). Similarly, sentences uttered by French speakers are experienced differently depending on whether you understand French or not. This variability, according to conceptualists, shows that thesis (CT) is true of the representational content of experience.

(3) The epistemic argument: perceptual experiences serve an epistemic function: they can justify or provide reasons for some of our beliefs about the external world. Seeing a kangaroo in front of you provides you with a reason to believe that there is a kangaroo in front of you. But according to conceptualists, something is a reason for a belief only if there is a rational and inferential connection between such a reason and the content of your belief. And inferential connections, the argument continues, only hold between states with conceptual content. Hence, if perceptual experiences provide reasons for beliefs, they must have a conceptual content (McDowell 1994, Brewer 1999; and for critical discussion, Heck 2000, Peacocke 2001, Byrne 2005).

Critics of conceptualism have not only offered various diagnoses for the failure of the above arguments but have also advanced considerations of their own against conceptualism. For instance:

(4) Fineness of grain: the representational content of perceptual experiences can be very fine-grained: visual experiences, for instance, can represent highly specific shades of colour and the differences between them, thus allowing us to discriminate many such shades. It seems plausible, however, that normal subjects do not possess concepts for all these specific colours. Hence, it seems, the content of fine-grained experiences is not conceptual (Evans 1982, Peacocke 1992).

(5) Informational richness: perceptual experiences are not just fine-grained; they can also be replete with information. For instance, a visual experience can convey a lot of information about a busy street scene and the many objects in it (Dretske 1981), information which, note, need not be fine-grained or specific. The whole scene can be represented simultaneously in your experience, even if you notice only some of the elements in it. But then, it seems unlikely that you can simultaneously deploy concepts for everything in front of you. For if the way in which we usually deploy concepts in thoughts and beliefs is anything to go by, it seems that it takes time for a normal subject to think consciously about all the objects present in a visual scene. Hence, perceptual experiences with an informationally rich content must have a non-conceptual content.

(6) Concept acquisition: Empiricism about concept acquisition seems true, at least for some concepts: we learn certain concepts, such as the concept ‘red’, on the basis of experience—experiences of red, in this case. But if conceptualism is true, one must already possess a concept for redness in order to be visually presented with instances of red. Hence, if the representational content of experience is conceptual, it is hard to see how one could acquire a concept like ‘red’ on the basis of experiences of red (Peacocke 1992, 2001).

(7) Animal/infant perception: intuitively, it seems as though young infants and many animals can have perceptual experiences which represent the environment in much the same way as ours. Yet it also seems plausible that animals and young infants do not possess concepts—or, at least, not as many concepts as adult subjects do. Hence, the representational content of the perceptual experiences of animals and young infants must be non-conceptual. But then, so must those of adult subjects, given the similarity between our experiences and theirs (Dretske 1993).

Of course, just as non-conceptualists resist arguments for conceptualism, there is a variety of responses available to conceptualists (see, in particular, Brewer 1999).

Finally, there is the question of the relationship between these distinct kinds of representational content and consciousness—and in particular, between non-conceptual content and phenomenal consciousness. For conceptualists, we have seen, to be conscious of something is to be in a psychological state with a conceptual content. But many psychological states also have a distinctive *phenomenal character or phenomenology: there is something distinctive it is like to be in such a state. For instance, there is something it is like to taste a lemon tart, quite distinct from what it is like to taste a meat pie, and even more distinct from what it is like to see the sun rise on Sydney harbour (see ‘WHAT IT’S LIKE’). States with a distinctive phenomenal character are phenomenally conscious.

Not all conscious mental states are phenomenally conscious, however (at least not uncontroversially): for instance, there does not seem to be anything distinctive it is like to think that the Democrats will lose the next election, or so it is often pointed out. Of course, the conscious entertaining of such a thought may be accompanied by various emotions or moods (joy, despair, frustration), which can be phenomenally conscious. But this should not be taken to show that the thought itself has a distinctive phenomenology (indeed, the fact that distinct phenomenal characters may be associated with that particular thought, as well as with many other thoughts, suggests that such a thought does not have a distinctive phenomenal character of its own).