As we consider significantly reducing the U.S. nuclear arsenal—through unilateral actions and in conjunction with others—it is important to consider the historical contours of the U.S. nuclear arsenal. What drove the spectacular U.S. arms buildup during the Cold War? How should we understand the post–Cold War reductions? And what are the barriers to reductions to much lower numbers?

The key to answering these questions is the internal and largely hidden logic of U.S. plans to use nuclear weapons should deterrence fail. U.S. nuclear war planning is not a barrier to cutting to an arsenal of 1,500 or somewhat fewer strategic nuclear weapons, but the deep logic that guides planning for use is likely, if not contravened, to prove a barrier to a significantly smaller arsenal, on the order of 100 to 500 nuclear weapons, on the way to zero.

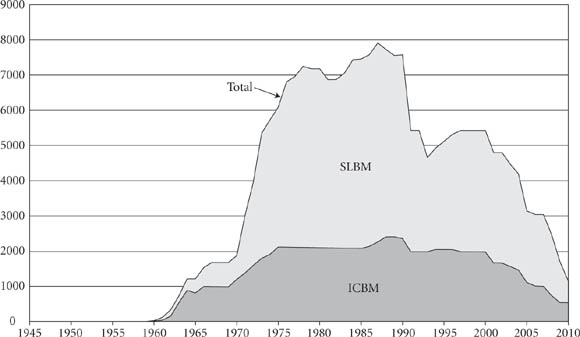

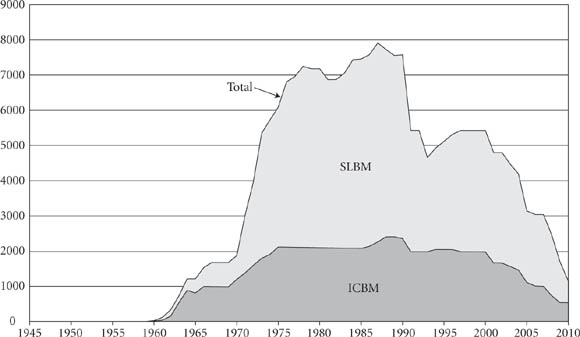

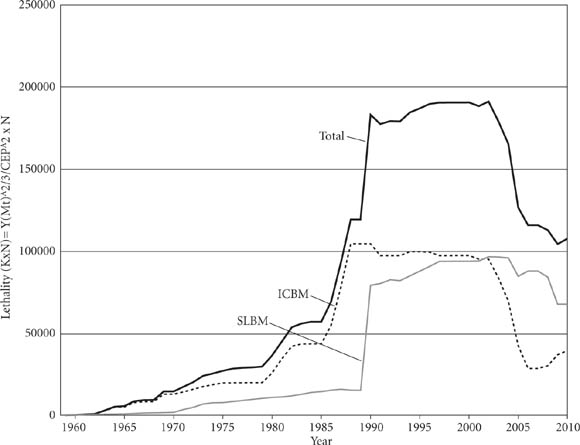

First, I sketch out the contours of the U.S. strategic nuclear arsenal from its inception to the present. Over time, the U.S. arsenal grew remarkably more lethal—that is, more able to destroy specific “hard targets” such as missile silos and command bunkers. Most notable is the exponential increase in lethality from 1980 to 1990, and the peak reached in 2002—more than a decade after the collapse of the Soviet Union.

Second, I explain the underlying logic and process of planning for nuclear war. Nuclear war plans are contingency plans that shape the options available in the event deterrence fails. The planning process drives weapons numbers and is an arena in which battles over numbers are fought. The basic idea is to be able to limit damage from an enemy’s attack through an ability to destroy enemy forces, command structure, and communications prior to their launch; disrupt an attack early in the launch process; or stop an enemy’s ability to continue to fight. All have been part of U.S. nuclear war planning, but the first two have been the most important. Their logic is incorporated in and inseparable from detailed organizational routines for targeting nuclear weapons—including designating targets, rating target “hardness,” and evaluating the overall effectiveness of the U.S. attack. These routines, and the logic they embody, have been surprisingly persistent. Unless changed, they are likely to prove an insuperable barrier to achieving deep nuclear arms reductions and, ultimately, getting to zero.

Third, I examine the role of this targeting logic in the post–Cold War era. How did the United States arrive at approximately 2,200 strategic weapons on alert in 2010? What role is targeting logic likely to play at 1,000 weapons, or 500 weapons? And what will allow for a drop to a force that is an order of magnitude smaller? As we will see, reaching a nuclear stockpile of a few hundred, or a few tens of weapons, on the way to zero will require radical changes in the basic assumptions of U.S. nuclear war planning.

The scale of destruction that nuclear weapons cause is important to understand but difficult to grasp. The atomic bombs detonated at Hiroshima and Nagasaki in August 1945 are frequently referred to as “small,” the equivalent of the explosive power of 15,000 and 21,000 tons of dynamite, respectively. But these are “small” only in comparison to the destructive potential of the post–World War II nuclear weapons arsenals, including today’s.

McGeorge Bundy, national security advisor to presidents John F. Kennedy and Lyndon Johnson, provides a sense of scale: “In the real world of real political leaders . . . a decision that would bring even one hydrogen bomb on one city of one’s own country would be recognized in advance as a catastrophic blunder; ten bombs on ten cities would be a disaster beyond history; and a hundred bombs on a hundred cities are unthinkable.” Wise words, but according to historian David A. Rosenberg, the U.S. Air Force had identified a hundred city centers for atomic attack by the fall of 1947, and by the fall of 1949, the U.S. atomic war plan “called for attacks on 104 urban targets with 220 atomic bombs, plus a re-attack reserve of 72 weapons.”1 Today’s arsenal is capable of inflicting far greater damage.

From the end of World War II to the late 1980s, both the United States and Soviet Union/Russia steeply increased the total numbers of warheads in their bomber, ICBM, and SLBM forces, ultimately reaching levels of 11,500 to 14,000 strategic nuclear weapons each (and, counting nonstrategic weapons, 30,000 to 40,000 weapons each).2 In the post–Cold War period, both countries greatly decreased those forces. Historically, the overall arsenals have grown and shrunk roughly in tandem. The United States has had a preponderance of its warheads in the strategic bomber force and the SLBM force; the Soviet Union/Russia has had a preponderance of warheads in its ICBM force. In this chapter we will look at the U.S. side.

Figure 4.1. U.S. ICBM and SLBM warheads, 1945–2010. Sources: Archive of Nuclear Data from Natural Resources Defense Council’s Nuclear Program, http://www.nrdc.org/nuclear/nudb/dafig4.asp, and dafig6.asp; and Robert S. Norris and William M. Arkin, “Nuclear Notebook: U.S. Nuclear Forces, 2000,” Bulletin of the Atomic Scientists (May/June 2000): 69–71, and succeeding “nuclear notebooks,” Bulletin, 2001–10.

The picture is very different when we use a broad measure that compares ICBM and SLBM destructive capabilities over time (see Figure 4.2). Specifically, lethality, K, is a comparative measure of “hard-target kill capability”: the greater the lethality, the greater the ability of a weapon, or a class of weapons, to destroy hard targets such as missile silos, hardened communication sites, and command bunkers. Comparing different values of K shows the relative ability of nuclear weapons of varying yields and accuracy to destroy enemy hard targets.3 Lethality is not a measure of the full destructive potential of nuclear weapons. It does not measure the fire damage caused by nuclear weapons on targets or on human beings, nor the radiological effects, nor potential effects on climate.4 It is, however, a concept highly consonant with the deep logic of nuclear war planning.

Figure 4.2. Comparative and total lethality of U.S. ICBMs and SLBMs, 1959–2010. Sources: http://www.nrdc.org/nuclear/nudb/dafig3.asp#fiftynine, datab5.asp; datab7.asp; Robert S. Norris and William M. Arkin, “Nuclear Notebook: U.S. Nuclear Forces, 2000,” Bulletin of the Atomic Scientists (May/June 2000): 69–71; and succeeding “nuclear notebooks,” Bulletin, 2001–10.

The measure for lethality does not depend on knowing the capability to destroy specific hard targets, nor does it require specification of all the structures being targeted at a particular time or over time.5 Instead, by taking into account yield and accuracy, lethality gives us a simple way to compare weapons systems. The most important component of lethality is accuracy: making a warhead twice as accurate has the same effect as increasing the yield—that is, the explosive power—eight times.

Lethality can be measured per warhead or in aggregate. To get a feel for lethality, let us compare the first U.S. ICBM warhead deployed, the W-49 Atlas, in 1959, with the Trident D-5 W-88 warhead, first deployed in 1990 and still deployed today. The Atlas warhead had an explosive power (yield) of 1.44 megatons and an accuracy, measured as circular error probable (CEP), of 1.8 nautical miles (n.m.), or approximately 11,000 feet. CEP is the radius of a circle within which half the warheads aimed at a target are expected to fall. Thus a CEP of 1.8 nautical miles means that half the warheads are expected to fall within that distance, and the other half beyond. The Trident warhead has a yield of 0.475 megaton and a CEP of 0.06 nautical mile, or an accuracy of a little over the length of a football field. The lethality per re-entry vehicle (K) was 0.4 for the Atlas; for the Trident it is 169. In other words, with approximately one-third the yield and thirty times the accuracy, a single Trident D-5 W-88 warhead is approximately 425 times as lethal as was the Atlas.
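The per-warhead figures in this comparison can be checked with a short calculation. The sketch below assumes the conventional lethality formula K = Y^(2/3) / CEP^2, with yield Y in megatons and CEP in nautical miles. The text here does not spell the formula out, so treat it as an assumption, though it does reproduce the quoted values of roughly 0.4 and 169. The function and variable names are illustrative only.

```python
import math


def lethality(yield_mt: float, cep_nm: float) -> float:
    """Per-warhead lethality K, ASSUMING K = Y^(2/3) / CEP^2.

    yield_mt: explosive yield in megatons
    cep_nm:   circular error probable in nautical miles
    """
    if yield_mt <= 0 or cep_nm <= 0:
        raise ValueError("yield and CEP must be positive")
    return yield_mt ** (2.0 / 3.0) / cep_nm ** 2


# Values quoted in the text for the two warheads.
atlas_k = lethality(yield_mt=1.44, cep_nm=1.8)
trident_k = lethality(yield_mt=0.475, cep_nm=0.06)
ratio = trident_k / atlas_k

print(f"Atlas K   = {atlas_k:.2f}")
print(f"Trident K = {trident_k:.1f}")
print(f"Ratio     = {ratio:.0f}")

# Accuracy dominates: halving CEP has the same effect on K
# as multiplying yield by eight, because 8^(2/3) = 4 = 2^2.
twice_as_accurate = lethality(1.0, 0.5)
eight_times_yield = lethality(8.0, 1.0)
print(math.isclose(twice_as_accurate, eight_times_yield))
```

Under this assumed formula the unrounded ratio comes out slightly above the text's "approximately 425," which is what dividing the rounded values 169 and 0.4 gives; the difference is a rounding effect, not a disagreement.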

Lethality gives us a very different sense of destructive capability than simpler measures that focus on numbers of warheads. If we look just at numbers (Figure 4.1), we see a precipitous drop in warhead numbers in 1991 and then a more sustained drop beginning in 2001, until, by 2009, the total number of strategic missile warheads is the same as in 1969. Focusing on lethality (Figure 4.2) shows us that just as the Cold War began to thaw, the lethality of the U.S. missile force increased exponentially. This was because of the deployment of the Minuteman III W-78 in 1980 (accurate to 0.12 nautical mile), the highly accurate MX warhead in 1986 (accuracy 0.06 n.m.), and the equally accurate Trident D-5 W-88 in 1990. At the same time, the Poseidon C-3 (accuracy 0.25 n.m.) was retired in 1990, and, beginning in 1991, the Trident D-5 W-76 (0.12 n.m.) began to replace the older Trident C-4 (accuracy 0.25 n.m.). Indeed, the lethality of the U.S. missile force increased more than three times from 1985 to 1990 and actually increased slightly more until it peaked in 2002, more than a dozen years after the collapse of the Soviet Union. In 2009, lethality was almost half its peak level. Although in 2010, the U.S. had about the same number of deployed warheads as it did in 1969, the lethality of the force was more than seven times greater.

The damage-limiting logic of nuclear war planning and the organizational routines based on that logic have been, and remain, deeply ingrained in decisions about the numbers and capabilities of the U.S. nuclear arsenal.

To get a sense of proportion, let us turn to late 1960, when the U.S. government first integrated a series of regional nuclear war plans into one plan called the Single Integrated Operational Plan, or SIOP. The first SIOP was designated by the fiscal year for which it was operative: SIOP-62. Not surprisingly, the planning took place at the headquarters of the Strategic Air Command (SAC) in Omaha, Nebraska. The commander of SAC also directed the multiservice staff in charge of the SIOP, the Joint Strategic Target Planning Staff (JSTPS).

At this time, America’s overwhelming destructive power lay in its bomber force of more than 3,000 nuclear weapons. The ICBM and SLBM force of 137 warheads—30 Atlas D and 27 Atlas E warheads with individual yields of 1.4 and 3.75 megatons, respectively, and 80 Polaris warheads of 600 kilotons each—was a huge force by historical standards, and small only in comparison to the bomber force.

According to journalist Fred Kaplan, the nuclear war plan “SIOP-62 . . . called for sending in the full arsenal of the Strategic Air Command—2,258 missiles and bombers carrying a total of 3,423 nuclear weapons—against 1,077 ‘military and urban-industrial targets’ throughout the ‘Sino-Soviet Bloc.’”6 More than 800 of these targets were military. Of the military targets, about 200 were nuclear forces: 140 bomber bases, approximately 30 submarine bases, and 10 to 25 Soviet ICBMs.7 Of the total targets, about 200 were urban-industrial targets—that is, industrial targets largely located in populated areas.

In an internal government memo, economist Carl Kaysen described the consequences of executing SIOP-62. At the time, he was trying to craft a much smaller first-use nuclear option for President Kennedy for possible use during the Berlin crisis. According to Kaysen, if all U.S. strategic nuclear forces were executed as planned in SIOP-62, they would be expected to kill 54 percent of the population of the Soviet Union, “including 71% of the urban population, and . . . to destroy . . . 82% of the buildings, as measured by floor space.” Further, Kaysen continued, “there is reason to believe that these figures are underestimated; the casualties, for example, include only those of the first 72 hours.”8

Built into the targeting plan above was a set of algorithms—abstract to handle and catastrophic to realize—based on determination of the following:

• What counts as a target and how many are there?

• How “hard” or resistant are those targets to damage?

• Given a planned attack, what damage is to be expected against enemy assets?

These three questions involved a great deal of investigative and analytical work, but also embodied highly subjective collective judgments colored by intense interservice, industrial, and political rivalries. The eventual answers had broad implications for the numbers, characteristics, and types of weapons systems procured. Moreover, once judgments were embedded in routines, they became difficult to undo, either because they had resulted from hard political negotiations or because people came to take them for granted.

The U.S. nuclear war plan, the SIOP, was a “capabilities plan” that allocated existing weapons to specific targets.9 The allocation process was complicated. But decisions about targeting also resulted from “pull” and “push” dynamics that both led to and justified arguments for more nuclear weapons. Anticipation of more and “harder” targets provided a “pull” to procure more weapons that could destroy those targets. The arrival of new nuclear weapons in the arsenal provided a “push” to find and justify targets at which to aim those warheads.

U.S. nuclear war planning—the planning process in which specific weapons are allocated to be delivered and to destroy specific targets—comes directly out of the experience of World War II strategic bombing. Despite the fact that large areas would inevitably be destroyed by strategic nuclear weapons, planners treat these weapons as though they destroy specific targets. Wider “collateral” damage does not “count” as an organizational goal and therefore is not “credited”—in fact, it is generally invisible—in war plans. Individual targets are classified into types—for example, strategic nuclear forces, other military targets, or urban-industrial targets.

In addition, all targets are characterized in terms of their geographic coordinates, function, and structural type. This mapping and categorizing is a huge analytical enterprise that I have elsewhere termed a vast “census of . . . destruction.” Like all censuses, the results reflected purpose and invented categories. In this case, war planners enumerated the specific structures and equipment slated for destruction, determined the aim points for U.S. weapons, and worked out the complicated logistics of routing weapons and timing possible attacks.10

The activity was explained to the public in terms of deterrence, but it seems better to think of the detailed and highly secret process as one of contingency planning should deterrence fail. (According to an official history, in the early 1970s, the targeting staff considered recommending the “inclusion of ‘deterrence’ as a major objective of the SIOP” but decided against it “since the SIOP was a capabilities plan rather than an objectives plan.” Objectives were listed in the war plan, but these were objectives once war began.)11

Finally, the armed services—especially the Air Force—used current and especially projected targets to argue for future weapon requirements in a process that David Rosenberg dubbed “bootstrapping.” According to Rosenberg, in January 1952, Air Force chief of staff General Hoyt Vandenberg told President Harry Truman, “Even allowing for incomplete intelligence . . . there appeared to be ‘perhaps five or six thousand Soviet targets which would have to be destroyed in the event of war’ [emphasis added].” Rosenberg continued, “This would require a major expansion in weapons production. . . . Air Force–generated target lists were used to justify weapons production, which in turn justified increased appropriations to provide matching delivery capability. . . . As intelligence improved, Air Force target lists steadily outpaced accelerating stockpile growth.”12

The targets that drove most of the Air Force’s weapons requirements and soaked up the vast majority of its warheads were Soviet strategic nuclear forces. Hence, the Air Force’s preferred strategy was “counterforce”: U.S. nuclear forces to counter, or attack, Soviet forces to “limit damage” to the United States.

The more that Soviet forces increased or were projected to, the more the target lists expanded, which then “pulled” weapons requirements up to be able to meet damage goals against those targets. The Air Force had organizational interests in maintaining a strong defense industrial base, but this does not mean war planners did not genuinely believe the scope of the threat.

The logic of “bootstrapping” continued throughout the Cold War—and after. A year and a half after the 1986 Reykjavik meeting between President Ronald Reagan and Soviet premier Mikhail Gorbachev that had led to a warming in relations, and more than a year after Gorbachev had made a serious and detailed nuclear arms reduction proposal, an Air Force general assigned to the joint strategic target planning staff explained, “The U.S. is in a weapon-poor target-rich environment. The Soviets are in the opposite situation. We find ourselves in a deficit position.” A decade later, after the Soviet Union had dissolved, the United States was still described as being in a “target-rich environment.”13

For the Navy, for much of the Cold War, the process worked somewhat differently. Here the dominant mission was not to limit damage in the event of war but to penetrate Soviet missile defenses—a mission dictated in part by the challenges of launching missiles from submarines at sea, which resulted in much lower accuracies than Air Force land-based missiles. MIRV technology provided a path to more warheads per launcher for both the Air Force and the Navy. While the Air Force chose relatively few high-yield warheads (until the deployment of the MX in 1986, with ten warheads per missile), the Navy chose more numerous lower-yield—and, largely by necessity, less accurate—weapons. When the Poseidon C-3 missiles carrying ten warheads began to replace Polaris missiles carrying one or three warheads, a “push” dynamic began, requiring the target planning staff to find more targets. An official history of the targeting staff cites “spectacular growth” in SIOP warheads from mid-1971 to mid-1972. The same history notes: “The introduction of Multiple Independently Targeted Reentry Vehicles (MIRVs) in the inventory has resulted in an increase in the number of weapons as well as an increase in the number of [Designated Ground Zeros] required to efficiently utilize these new weapons.”14 In plain English: more targets were put into the war plan so that weapons could be aimed at them. Greater growth lay ahead: from 1971 to 1978, ICBM warheads increased from 1,444 to 2,144. Primarily because of increases in Poseidon C-3 warheads, SLBM warheads increased from 1,664 to 5,120.

At the same time, 1971–72, John S. Foster, the Pentagon’s director of defense research and engineering, led a closely held targeting review panel that worked to rationalize the targeting of this “profusion of warheads in excess of those needed to fulfill the major attack options against Soviet strategic [nuclear] forces and key economic facilities.” The review, and subsequent targeting, placed much greater emphasis on “other military targets” and “economic recovery targets,” both, not coincidentally, amenable to destruction by Poseidon warheads.15

Analytically more interesting were economic recovery targets. Formally, the government’s new goal was to be able to “retard significantly the ability of the USSR to recover from a nuclear exchange and regain the status of a 20th century military and industrial power more quickly than the United States.”16 Destroying an enemy’s industrial base had always been part of the U.S. nuclear war plan. However, ensuring that the enemy’s economy will recover more slowly than your own required many more warheads. According to a Foster panel participant, the question was, “Could you really keep a country from recovering economically? The answer is no, you can’t do it with one strike. Everybody agreed to that. . . . We found out . . . that there is something worse than nuclear war. It’s two nuclear wars in succession . . . two to six weeks apart.”17

Then, unexpectedly, the push dynamic—the necessity to target the vast number of warheads coming into the arsenal—turned back into a pull dynamic. When the government asked academics to develop economic recovery models, they found that the Soviet economy could recover surprisingly quickly to prewar levels. RAND analysts Michael Kennedy and Kevin Lewis explained, “The U.S. force committed to the attack in such models often runs to several thousand warheads,” yet “typical results suggest full recovery to prewar GNP within about five years.” Varying assumptions still led to “perhaps fifteen years” at the outside to make a full recovery.18 According to political scientist Scott Sagan, one implication was that “significantly larger numbers of weapons were required to achieve the counter-recovery objective,” and he suggests that by the late 1970s more than half of the targets were urban-industrial.19

In President Jimmy Carter’s administration, reviews of targeting policy began to shift emphasis away from economic recovery and toward greater damage to Soviet military targets. Most important was the 1978 Nuclear Targeting Policy Review led by government analyst Leon Sloss. In political scientist Janne Nolan’s words, “Instead of cutting weapons to fit the shrinking target list, the study ‘kept the same number of weapons and allocated more to each target,’ according to one analyst. It justified this by raising the damage criteria, a more exacting standard imposed to determine what was needed to destroy the targets.” According to Sagan, Reagan administration policy was consistent with policy under the Carter administration: a decreased emphasis on economic targets, an increased emphasis on counterforce targeting, and also an increased emphasis on “holding the Soviet leadership directly at risk.”20

Consistent with these doctrinal shifts, both the Carter and Reagan administrations accelerated counterforce programs such as the MX and the Trident D-5 programs,21 which would not, however, be deployed until 1986 and 1990, respectively. The MX had a yield of 300 kilotons and an exceptional accuracy of 0.06 nautical mile, or 365 feet. With the deployment of the Trident D-5 W-88 warhead with a yield of 475 kilotons and accuracy equal to that of the MX, for the first time the Navy had a weapon with a hard-target kill capability as good as or better than the Air Force’s. Not coincidentally, with the anticipated D-5 deployment, and the very significant political problems encountered by the Air Force, as one after another survivable basing mode for the MX was eliminated, the Navy began to exhibit a willingness to take on the Air Force directly in making claims for survivable counterforce capability.22

Over time, U.S. intelligence also discerned that the Soviets were “hardening” their weapons and command structures. This sounds like a claim about modifying the structures, as in digging deeper underground bunkers or using more reinforced concrete. Indeed, it can mean this.

But in the engineering community, hardness also identifies a point of failure. An engineer might say a building is resistant to, or can withstand, a magnitude 8 earthquake but not an 8.2 quake. The choice of the point of failure is crucial. For engineers and architects, it determines the design, construction, and cost of putting up structures. For nuclear targeteers, it works in reverse: it allows them to decide what is required to cause “moderate” damage (often referred to as turning the structure into “gravel”), or “severe” damage (“dust”). The greater the required damage, the “harder” the structure. Targeting a building for severe damage boosts its hardness rating, while targeting it for moderate damage would result in a lower hardness rating.23 In other words, damage criteria result from goals, not from inherent characteristics of structures, and they are written into the vocabulary of physical vulnerability. The implications are important: saying the Soviet or Russian target base got harder could mean a difference in construction, or it could mean a U.S. decision that “severe,” not “moderate,” damage was now required, or both.

Target hardness has great implications for weapons procurement: the “harder” the targets, the stronger the claims for more accurate weapons, and sometimes for higher-yield weapons. That logic operated in the early 1970s, when the Strategic Air Command said it required a new more accurate and higher yield ICBM, the MX, because the Soviet target base had hardened. According to Air Force general Russell Dougherty, who became the commander-in-chief of SAC in 1974, and, as commander, was also the director of JSTPS, “In the early ’70s . . . we needed the accuracy. . . . That was one of the big drivers for the MX [because the Soviet target base] had changed character, it had hardened.”24

Both targets and damage criteria were, and are, incorporated into an algorithm that calculates, for every weapon type matched to relevant targets, the expected effectiveness of attack.25 The overall calculation is termed expected damage, or damage expectancy, abbreviated DE; it is a compound probability composed of four other probabilities:

• that a weapon or class of weapons survives before being launched (prelaunch survivability, or PLS);

• that a weapon does not fail for mechanical or other internal reasons (probability weapon is reliable, or PRE);

• that a weapon penetrates enemy air or other defenses (probability of penetration, or PTP);

• that a weapon destroys the target, determined by a combination of accuracy, weapon yield, height of burst, and target hardness (probability of “kill,” or PK).

Stated otherwise:

DE = PLS × PRE × PTP × PK

If an insufficient number of weapons is available to “cover” the chosen targets or if the targets are deemed too hard for those weapons to destroy, then the probability of kill will be considered unacceptably low—leading to calls for more and “higher-quality” weapons.
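The compound probability can be illustrated with a few lines of code. This is a minimal sketch of the multiplication described above, not a reconstruction of any actual planning tool. The function name and every numeric value are hypothetical, chosen only to show how one weak factor pulls the whole product down.

```python
import math


def damage_expectancy(pls: float, pre: float, ptp: float, pk: float) -> float:
    """Damage expectancy as the product DE = PLS * PRE * PTP * PK.

    pls: prelaunch survivability
    pre: probability the weapon is reliable
    ptp: probability of penetrating defenses
    pk:  probability of "kill" against the target
    """
    factors = {"PLS": pls, "PRE": pre, "PTP": ptp, "PK": pk}
    for name, p in factors.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{name} must be a probability in [0, 1], got {p}")
    return math.prod(factors.values())


# Hypothetical planning factors, for illustration only.
baseline = damage_expectancy(pls=0.9, pre=0.8, ptp=0.9, pk=0.7)

# Same hypothetical weapon against a target rated "harder" (lower PK).
harder = damage_expectancy(pls=0.9, pre=0.8, ptp=0.9, pk=0.4)

print(f"baseline DE      = {baseline:.3f}")
print(f"harder-target DE = {harder:.3f}")
```

Because DE is a product of probabilities, it can never exceed its smallest factor. That is one way to see why each factor becomes a point of contention: lowering any single number lowers the rated effectiveness of the whole weapon system.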

Every aspect of damage expectancy is subject to the politics and negotiation of interservice rivalry and weapons procurement. For example, retired Navy vice admiral Gerald E. Miller, who was detailed to the joint planning staff as the Navy representative when the first SIOP was being developed in late summer 1960, describes how the staff established a policy committee to interpret the brief political guidance it had received and to iron out many other issues. The committee, with voting representatives from each of the four services plus other experts, met almost every day to work through problems.26

According to Admiral Miller:

When we came to Polaris, eight had been fired [obviously in tests]. Four got to the target. . . . So the weapons system reliability [PRE] is only going to be 50%. Well, Jesus, if . . . you’re down here to 50%, what kind of a weapon system is that?! And we haven’t even talked about the probability of the weapon not doing the damage because its accuracy’s not very good. So you’re talking about a useless weapon system if its reliability isn’t very good. So a big battle goes on in the conference room . . . for a couple, three days. And finally [the head of SAC and the director of JSTPS] General Power himself came down and sat at the head of the table and said, “All right, I’ll resolve this. Let it be.”27

Prelaunch survivability (PLS), or what is called “planning factor,” is also a highly negotiated number. Indeed, in the original negotiations over Polaris missiles, General Power had argued that the PLS for Polaris was not 1, but less. However, the policy committee determined—not surprisingly, given that submarines could not be detected—that the correct value was 1.28

Conversely, the Strategic Air Command has historically argued for a very high PLS for the ICBM force, somewhere between 0.98 and 1.00.29 To get a prelaunch survivability number that high does not require going first, but requires a launch either on warning or very early in the course of a nuclear attack on the United States. This contradicts more common notions of survivability, such as being able to “ride out an attack,” as submarines can do for long periods of time. To the Air Force, PLS is composed of two probabilities: the probability of survival (PS), which extends to the moment before the impact of incoming warheads, and the probability of launch (PL). The Air Force believes that “the probability of survival for each silo has been unity . . . but erodes very quickly following a [nuclear] attack on the force. . . . Before impact of . . . weapons, the PS is unity and the PL governs the PLS value.”30 It seems a tortured use of ordinary language to think of pre-launch survivability as including the probability of launch. But for the Air Force, “survive” is a transitive verb: a commander can “survive” his forces by launching them.31

Thus the logic of nuclear war planning sets the terms by which military personnel understand threat and by which goals and requirements shape the development of weapons systems. At the same time, the war planning process sets the terms of competition for political, military, and industrial rivalry. Planners justify weapons in terms of the compound algorithm of damage expectancy. The most important damage goals are “severe damage” to “hard targets,” including hardened communication and command sites and, especially, nuclear forces.

In 2000, defense analyst Bruce Blair wrote an op-ed explaining why top American military officers insisted that current nuclear policy prevented them from shrinking the arsenal to fewer than 2,000 to 2,500 strategic nuclear weapons: “The reason for [the military’s] position is a matter of simple arithmetic, buried in the nation’s strategic war plan and ultimately linked to presidential guidance.” Blair’s “simple arithmetic” derives from the numbers and types of nuclear targets in the war plan and the level of expected damage, as discussed above. Not counting hundreds of targets in China and elsewhere, Blair said:

[T]here are about 2,260 so-called vital Russian targets on the list today, only 1,100 of them actual nuclear arms sites within Russia. [In addition,] we have nuclear weapons aimed at 500 “conventional” targets—the buildings and bases of a hollow Russian army on the verge of disintegration; 160 leadership targets, like government offices and military command centers, in a country practically devoid of leadership; and 500 mostly crumbling factories that produced almost no armaments last year.32

The Bush administration had a somewhat different set of targets, but the target categories were fundamentally the same as those in 2000 and earlier, including “critical war-making and war-supporting assets such as WMD forces [read: nuclear forces] and supporting facilities, command and control facilities, and the military and political leadership.”33

When President Barack Obama took office in 2009, the United States had approximately 2,200 strategic nuclear weapons on alert—about the same number it had in 2000. In the first year, the new administration eliminated a few hundred of those weapons. More important, in early April 2010, the administration completed two major documents on nuclear forces and doctrine. President Obama and Russian president Medvedev signed a new arms control agreement, the New START treaty. The U.S. and Russian strategic nuclear arsenals will each be reduced to 1,550 warheads. A decade after Blair’s op-ed, the U.S. military has agreed to “cover” somewhat fewer targets, and therefore requires fewer weapons.

Even more revealing, the Obama administration completed a far-reaching Nuclear Posture Review (NPR), requiring myriad negotiations among the military, the departments of defense, state, energy, and the White House. A crucial issue was the purpose of nuclear weapons: in particular, should the NPR say that the sole purpose of nuclear weapons is to deter? Had it said so, achieving much smaller numbers of nuclear weapons would have been on the table. Instead, and no doubt in deference to military preferences, the NPR says, “The fundamental role of U.S. nuclear weapons . . . is to deter attack on the United States, our allies, and partners.” In other words, besides deterrence, U.S. nuclear weapons have another role: actual use if deemed necessary. Relatedly, a declaration of no first use was removed from an early draft of the document, again indicating that in some situations the United States reserves the right to use nuclear weapons. Given possible use, what determines force size? The NPR is clear: “Russia’s nuclear force will remain a significant factor in determining how much and how fast we are prepared to reduce U.S. forces.” The deep logic of U.S. nuclear war planning has not changed. The United States targets Russian nuclear forces; until the Russians eliminate those targets, the U.S. military will resist much deeper reductions in U.S. forces. Had the Nuclear Posture Review explicitly directed the military to cut the nation’s deployed nuclear weapons arsenal to, say, 500 warheads, it would have forced the military to revise its definition of target coverage and damage criteria.34

Since the end of the Cold War—and especially since the post-2007 movement to create a nuclear-free world, and as seen in the Nuclear Posture Review—the military’s logic has diverged sharply from civilian analysts’ assumptions about the use of nuclear force.35 The latter view as neither legitimate nor credible the possibility of launching hundreds or more nuclear weapons, possibly first, for military purposes against an adversary’s nuclear forces or closely related command and communication nodes.

Instead, in a post–Cold War twist on what earlier had been termed “punitive retaliation,” “minimum deterrence,” or “existential deterrence,” various analysts have called for a commitment to no first use. They generally argue that the only purpose of nuclear weapons is to deter and, if necessary, to respond to the use of nuclear weapons by other countries. In either case, damage should be as “small” as possible.36 In the words of an influential study by the National Academy of Sciences’ Committee on International Security and Arms Control, “The United States should adopt a strategy . . . that would be based neither on predetermined prompt attacks on counterforce targets nor on automatic destruction of cities. . . . If they were ever to be used, [nuclear weapons] would be employed against targets . . . in the smallest possible numbers. . . . The operational posture of the much smaller forces must be designed for deliberate response rather than reaction in a matter of minutes.”37 How small is “much smaller forces”? Some analysts focus on initial reductions to 1,000 nuclear weapons; others foresee a “minimization point” of 500 weapons by 2025; others a total in the “low hundreds.”38

Getting from 500 or somewhat fewer deployed weapons to elimination will be much more difficult. Some advocate a thorough reassessment of possibilities at 500. Others claim that to get to zero it is necessary “to create cooperative geopolitical conditions, regionally and globally, making the prospect of major war or aggression so remote that nuclear weapons are seen as having no remaining deterrent utility.”39 Still others argue that very low numbers of weapons, and zero, would create strong incentives to rearm, and those who retained only a few weapons, or who quickly built back up to a few weapons, would have large advantages over those who did not “break out.” The anxiety over the actions of others could, or would, increase the risk of war.40 By some accounts, stability and deterrence near or at a zero level require the ability to reconstitute nuclear arsenals. In particular, the United States must have a virtual or latent capacity to build nuclear weapons: a “responsive nuclear infrastructure” composed of excellent facilities, skilled weapons designers who can assess and solve potential problems, and a strong scientifically based experimental program to retain and hone skills.41 This may not sound like nuclear abolition, but for some, an agile, “capability-based deterrent” is precisely the requirement for achieving and maintaining a world free of nuclear weapons.42

Clearly, the problems of achieving stability at very low numbers, and zero, are formidable. Nonetheless, we must first get from here to there. To reach 500 weapons or fewer will require nothing less than the upending of the fundamental assumptions of U.S. nuclear war planning: a counterforce strategy that threatens an adversary’s or adversaries’ strategic nuclear forces, leadership, and communications.

Only a few analysts have discussed what targeting options at very low numbers should, or could, look like. A force of a few hundred strategic nuclear warheads, the National Academy committee says,

implies a drastic change in strategic target planning. A force of a few hundred can no longer hold at risk a wide spectrum of the assets of a large opponent, including its leadership, key bases, communication nodes, troop concentrations, and the variety of counterforce targets now included in the target lists. The reduced number of weapons would be sufficient to fulfill the core function [of deterrence], however, through its potential to destroy essential elements of the society or economy of any possible attacker.43

Despite the acknowledgment that much lower numbers will require drastic changes in targeting procedures, the National Academy study does not spell out which “essential elements of society” should be targeted.

Political scientist Charles Glaser is braver in his specificity, arguing that limited nuclear options can range from “pure demonstration attacks, which inflict no damage but communicate U.S. willingness to escalate further” to “more damaging countervalue attacks . . . made against isolated industrial facilities, a small town, or even a small city. Without minimizing the horror of attacks against people, we should acknowledge that there is a vast difference between these attacks and a full-scale attack against major Russian population centers.”44

Only a single detailed study, From Counterforce to Minimal Deterrence: A New Nuclear Policy on the Path toward Eliminating Nuclear Weapons (2009), has analyzed the consequences of a U.S. nuclear attack on a “small” set of targets.

Analysts from the Federation of American Scientists and the Natural Resources Defense Council devised a target set that is a “tightly constrained subset of countervalue targets,” which they term “infrastructure targeting,” of twelve large industrial targets in Russia: three oil refinery targets; three iron and steel works; two aluminum plants; one nickel plant; and three thermal electric power plants. They ran a number of possible attacks on these plants, some of which are isolated from population centers. The results were, as we would expect, devastating. “With weapons equivalent to the . . . most common U.S. warhead [the 100 kiloton W-76], over a million people would be killed or injured by an attack of just one dozen warheads. This suggests to us that the current U.S. arsenal is vastly more powerful than needed.”45

We are faced, then, with a deep contradiction. On the one hand, a number of civilian analysts call for a no-first-use, limited retaliation policy with an arsenal ranging from a few hundred to 1,000 nuclear weapons. But these analysts offer no argument about how and with what political effect one nuclear weapon or tens of nuclear weapons could be used in war—other than some implicit notion of shock or inflicted punishment leading to the enemy’s capitulation. They do predict very large numbers of casualties, making it difficult to see how the benefits of such use could outweigh the moral recoil and loss of international standing that would result. But perhaps the implicit idea is more radical: the U.S. government may threaten to use nuclear weapons but, in fact, cannot use them at all, or can use them only after being attacked to then wreak vengeance—thus deterring a direct attack.46 This does not seem unreasonable. But it runs counter to military logic, identity, and entrenched organizational algorithms.

On the other hand, the U.S. military personnel involved in nuclear targeting operate with deeply ingrained understandings and organizational routines that led to exceedingly large numbers of nuclear weapons during the Cold War and to very high levels of lethality afterward. Weapons numbers have since come down, but they appear to be “sticky” at about 1,500 deployed strategic warheads. If the military were amenable to eliminating another 1,000 weapons, the National Academy committee is persuasive in arguing that a force of a few hundred weapons will have to be organized around very different principles.

What will it take to get there? Certainly it will require a more far-reaching internal agreement than we can see in the Obama administration’s 2010 Nuclear Posture Review. Such an agreement will cut against deeply ingrained military understandings, and against some vested interests as well. Politically, such an agreement cannot be imposed but will require strong allies within the military who are willing to accept and implement it. Whether such allies already exist is unclear. The best chance for success rests in finding or creating military leaders who are willing to rethink the basis of U.S. nuclear war planning, and who are able to persuade their colleagues about the advantages of doing so.

1. McGeorge Bundy, “To Cap the Volcano,” Foreign Affairs 48, no. 1 (October 1969): 10; David Alan Rosenberg, “The Origins of Overkill: Nuclear Weapons and American Strategy, 1945–1960,” International Security 7, no. 4 (Spring 1983): 15, 17.

2. Committee on International Security and Arms Control, National Academy of Sciences, The Future of U.S. Nuclear Weapons Policy (Washington, DC: National Academy Press, 1997), p. 16.

3. I have used Bruce G. Blair’s formula in Strategic Command and Control: Redefining the Nuclear Threat (Washington, DC: Brookings Institution, 1985), Table A-3, “Lethality of U.S. and Soviet Strategic Missile Forces, 1975 and 1978,” p. 310. K is lethality per re-entry vehicle: $K = Y^{2/3} / \mathrm{CEP}^{2}$, where $Y$ is the yield of the warhead (in megatons) and CEP is the accuracy of the re-entry vehicle (circular error probable). Intuitively, K, lethality, is a ratio, an area divided by an area, normalized to megatons. I am grateful to Theodore A. Postol for explaining this to me in an intuitive way. I thank Michael Chaitkin, Jane Esberg, Michael Kent, and Carmella Southward for excellent assistance in collecting, analyzing, and presenting the data in Figures 4.1 and 4.2.

4. See Lynn Eden, Whole World on Fire: Organizations, Knowledge, and Nuclear Weapons Devastation (Ithaca, NY: Cornell University Press, 2004); Alan Robock and Owen Brian Toon, “Local Nuclear War, Global Suffering,” Scientific American 302, no. 1 (January 2010): 74–81.

5. To measure the ability to destroy specific targets, one uses single-shot kill probabilities; see Matthew G. McKinzie et al., The U.S. Nuclear War Plan: A Time for Change (Washington, DC: Natural Resources Defense Council, June 2001), pp. 42–45.

6. Fred Kaplan, “JFK’s First-Strike Plan,” Atlantic (October 2001), n.p.

7. Scott D. Sagan, “SIOP-62: The Nuclear War Plan Briefing to President Kennedy,” International Security 12, no. 1 (Summer 1987): 26–28.

8. Carl Kaysen to General Maxwell Taylor, Military Representative to the President, “Strategic Air Planning and Berlin,” 5 September 1961, Top Secret, excised copy, with cover memoranda to Joint Chiefs of Staff Chairman Lyman Lemnitzer, released to National Security Archive, pp. 2–3. Source: U.S. National Archives, Record Group 218, Records of the Joint Chiefs of Staff, Records of Maxwell Taylor, in “First Strike Options and the Berlin Crisis,” September 1961, William Burr, ed., National Security Archive Electronic Briefing Book No. 56 (25 September 2001), at http://www.gwu.edu/~nsarchiv/NSAEBB/NSAEBB56/.

9. See Rosenberg, “Origins of Overkill,” p. 7; Headquarters, Strategic Air Command, History and Research Division, “History of the Joint Strategic Target Planning Staff: Preparation of SIOP-63 [effective 1 July 1962],” January 1964, Top Secret, excised copy, p. 14, in “New Evidence on the Origins of Overkill,” in The Nuclear Vault, William Burr, ed., National Security Archive Electronic Briefing Book No. 236 (1 October 2009), at http://www.gwu.edu/~nsarchiv/nukevault/ebb236/index.htm.

10. Eden, Whole World on Fire: “census of . . . destruction,” p. 107; “enumerated specific structures . . . routing and timing,” pp. 32–34, 97, 108.

11. M. E. Hayes, Strategic Air Command Historical Staff, “History of the Strategic Target Planning Staff, SIOP-4 H/I, July 1970–June 1971,” 6 January 1972, pp. 2, 3, at DoD FOIA online Reading Room, at http://www.dod.gov/pubs/foi/reading_room/467.pdf.

12. Rosenberg, “Origins of Overkill,” p. 22 (emphasis added).

13. Not for attribution comment, Bellevue, Nebraska, 21 May 1987; Hans Kristensen, “Targets of Opportunity,” Bulletin of the Atomic Scientists (September/October 1997): 27.

14. Walton S. Moody, Strategic Air Command Historical Staff, “History of the Joint Strategic Target Planning Staff, SIOP-4 J/K, July 1971–June 1972,” n.d., p. 21; Appendix “F,” p. 69 (emphasis added), at DoD FOIA online Reading Room, at http://www.dod.gov/pubs/foi/reading_room/468.pdf.

15. Terry Terriff, The Nixon Administration and the Making of U.S. Nuclear Strategy (Ithaca, NY: Cornell University Press, 1995), pp. 133–34.

16. Janne E. Nolan, Guardians of the Arsenal: The Politics of Nuclear Strategy (New York: Basic Books, 1989), pp. 110–11, quoting from Annual Defense Department Report for Fiscal 1978 (Washington, DC: GPO, 1977), p. 68.

17. Unattributed interview with Lynn Eden, Arlington, Virginia, 3 November 1987.

18. When academics were asked: Nolan, Guardians of the Arsenal, pp. 110–11; Michael Kennedy and Kevin N. Lewis, “On Keeping Them Down; or, Why Do Recovery Models Recover So Fast?” in Strategic Nuclear Targeting, Desmond Ball and Jeffrey Richelson, eds. (Ithaca, NY: Cornell University Press, 1986), pp. 195–96.

19. Scott D. Sagan, Moving Targets: Nuclear Strategy and National Security (Princeton: Princeton University Press, 1989), pp. 46–47 (emphasis added).

20. Nolan, Guardians of the Arsenal, pp. 134–35; Sagan, Moving Targets, pp. 50–53, quotation at p. 51.

21. Sagan, Moving Targets, p. 52.

22. Graham Spinardi, From Polaris to Trident: The Development of US Fleet Ballistic Missile Technology (Cambridge: Cambridge University Press, 1994), pp. 150–51.

23. On damage criteria, see Eden, Whole World on Fire, pp. 32–34; 288–89.

24. General Russell Dougherty (USAF, ret.), interview with Lynn Eden, McLean, Virginia, 30 October 1987.

25. See the excellent discussion of the algorithms of targeting in Theodore A. Postol, “Targeting,” in Managing Nuclear Operations, John D. Steinbruner, Ashton B. Carter, and Charles A. Zracket, eds. (Washington, DC: Brookings Institution, 1987), pp. 379–80.

26. Vice Admiral Gerald E. Miller (USN, ret.), interview with Lynn Eden, Arlington, Virginia, 10 July 1989.

27. Ibid.

28. Ibid.

29. The argument for a very high PLS was made in what the Air Force called its “Minimum Risk Briefing,” a briefing on what forces were required in order to “minimize risk” to the United States. Theodore A. Postol, conversations with Lynn Eden, Stanford, California, 8 February 1988, and 16 June 2009.

30. George J. Seiler, Strategic Nuclear Force Requirements and Issues (Air Power Research Institute, Maxwell Air Force Base, Alabama: Air University Press, 1983), pp. 60–61.

31. Air Force general, conversation with Lynn Eden, Bellevue, Nebraska, 20 May 1987. “Time dependent variable”: Seiler, Strategic Nuclear Force Requirements, p. 60.

32. Bruce G. Blair, “Trapped in the Nuclear Math,” op-ed, New York Times, 12 June 2000; see also Hans M. Kristensen, “Obama and the Nuclear War Plan,” Federation of American Scientists Issue Brief, 25 February 2010.

33. “Critical war-making”: Hans M. Kristensen, Robert S. Norris, and Ivan Oelrich, From Counterforce to Minimal Deterrence: A New Nuclear Policy on the Path toward Eliminating Nuclear Weapons, Federation of American Scientists and the Natural Resources Defense Council, Occasional Paper No. 7, April 2009, p. 9, also p. 22.

34. U.S. Department of Defense, Nuclear Posture Review Report (April 2010), pp. 15, 30. Compare Gary Schaub, Jr. and James Forsyth, Jr., “An Arsenal We Can All Live With,” New York Times, 23 May 2010.

35. See the “Gang of Four”: George P. Shultz, William J. Perry, Henry A. Kissinger, and Sam Nunn, “A World Free of Nuclear Weapons,” Wall Street Journal, 4 January 2007; Shultz et al., “Toward a Nuclear-Free World,” Wall Street Journal, 15 January 2008.

36. “Punitive retaliation”: Charles Glaser, Analyzing Strategic Nuclear Policy (Princeton: Princeton University Press, 1990), pp. 52–54; “minimum deterrence”: Robert Jervis, Psychology and Deterrence (Baltimore, MD: Johns Hopkins University Press, 1985), p. 146; “existential deterrence”: McGeorge Bundy, “The Bishops and the Bomb,” review of The Challenge of Peace: God’s Promise and Our Response, pastoral letter of the U.S. Bishops on War and Peace, New York Review of Books 30, no. 10 (16 June 1983): 4.

37. Committee on International Security and Arms Control, Future of U.S. Nuclear Weapons Policy, pp. 6–8.

38. On 1,000 weapons, see David Holloway, “Further Reductions in Nuclear Weapons,” in Reykjavik Revisited: Steps toward a World Free of Nuclear Weapons, George P. Shultz, Steven P. Andreasen, Sidney D. Drell, and James E. Goodby, eds. (Stanford: Hoover Institution Press, 2008), pp. 1–45; on 500 weapons, see Report of the International Commission on Nuclear Non-Proliferation and Disarmament, Gareth Evans and Yoriko Kawaguchi, cochairs, Eliminating Nuclear Threats: A Practical Agenda for Global Policymakers (15 December 2009); on 1,000 and then reductions to “roughly 300 nuclear weapons—of which at least 100 were secure, survivable, and deliverable,” see Committee on International Security and Arms Control, Future of U.S. Nuclear Weapons Policy, pp. 76–78, quotation here on p. 80.

39. Thorough reassessment at 500: unattributed statement, Stanford University, 2 November 2009; creating “cooperative geopolitical conditions”: International Commission on Nuclear Non-Proliferation and Disarmament, Eliminating Nuclear Threats, p. 205.

40. Thomas C. Schelling, “The Role of Deterrence in Total Disarmament,” Foreign Affairs 40, no. 3 (April 1962): 392–406; Schelling, “A World without Nuclear Weapons?” Daedalus 138, no. 4 (Fall 2009): 124–29; Charles L. Glaser, “The Flawed Case for Nuclear Disarmament,” Survival 40, no. 1 (Spring 1998): 112–28.

41. An early argument to this effect is Jonathan Schell, The Fate of the Earth, first published in 1982 and now available in Schell’s The Fate of the Earth and The Abolition (Stanford: Stanford University Press, 2000).

42. Joseph C. Martz, “The United States’ Nuclear Weapons Stockpile and Complex: Past, Present and Future,” talk at Center for International Security and Cooperation, Stanford University, 10 November 2009.

43. Committee on International Security and Arms Control, Future of U.S. Nuclear Weapons Policy, p. 80.

44. Charles L. Glaser, “Nuclear Policy without an Adversary: U.S. Planning for the Post-Soviet Era,” International Security 16, no. 4 (Spring 1992): 52.

45. Kristensen et al., From Counterforce to Minimal Deterrence, pp. 31, 34, 41.

46. This assumes that an attack can be attributed; one cannot retaliate if one does not know whom to address.