5

ORGANIZING OUR TIME

What Is the Mystery?

Ruth was a thirty-seven-year-old married mother of six. She was planning dinner for her brother, her husband, and her children to be served at six P.M. At 6:10, when her husband walked into the kitchen, he saw that she had two pots going on the stove, but the meat was still frozen and the salad was only partly made. Ruth had just picked up a tray of dessert and was getting ready to serve it. She had no awareness that she was doing things in the wrong order, or in fact that a proper order existed.

Ernie began his career as an accountant and was promoted to comptroller of a home building firm at age thirty-two. His friends and family considered him to be especially responsible and reliable. At age thirty-five he abruptly put all his savings into a partnership with a sketchy businessman and soon after had to declare bankruptcy. Ernie drifted through job after job and was fired from each for lateness, disorganization, and a general deterioration of his ability to plan anything or to properly prioritize his tasks. He required more than two hours to get ready for work in the morning, and often spent entire days doing nothing more than shaving and washing his hair. Ernie had suddenly lost the ability to properly evaluate future needs: He adamantly refused to get rid of useless possessions such as five broken television sets, six broken fans, assorted dead houseplants, and three bags crammed full of empty frozen orange juice cans.

Peter had been a successful architect with a graduate degree from Yale, a special talent for math and science, and an IQ 25 points above average. Given a simple assignment of reorganizing a small office space, he found himself utterly perplexed. He spent nearly two hours preparing to begin the project, and once he started, he inexplicably kept starting over. He made several preliminary sketches of idea fragments but was unable to connect those ideas or to refine the sketches. He was well aware of his disordered thinking. “I know what I want to draw, but I just don’t do it. It’s crazy . . . it’s as if I’m getting a train of thought and then I start to draw it, and then I lose the train of thought. And, then, I have another train of thought that’s in a different direction and the two don’t [meet] . . . and this is a very simple problem.”

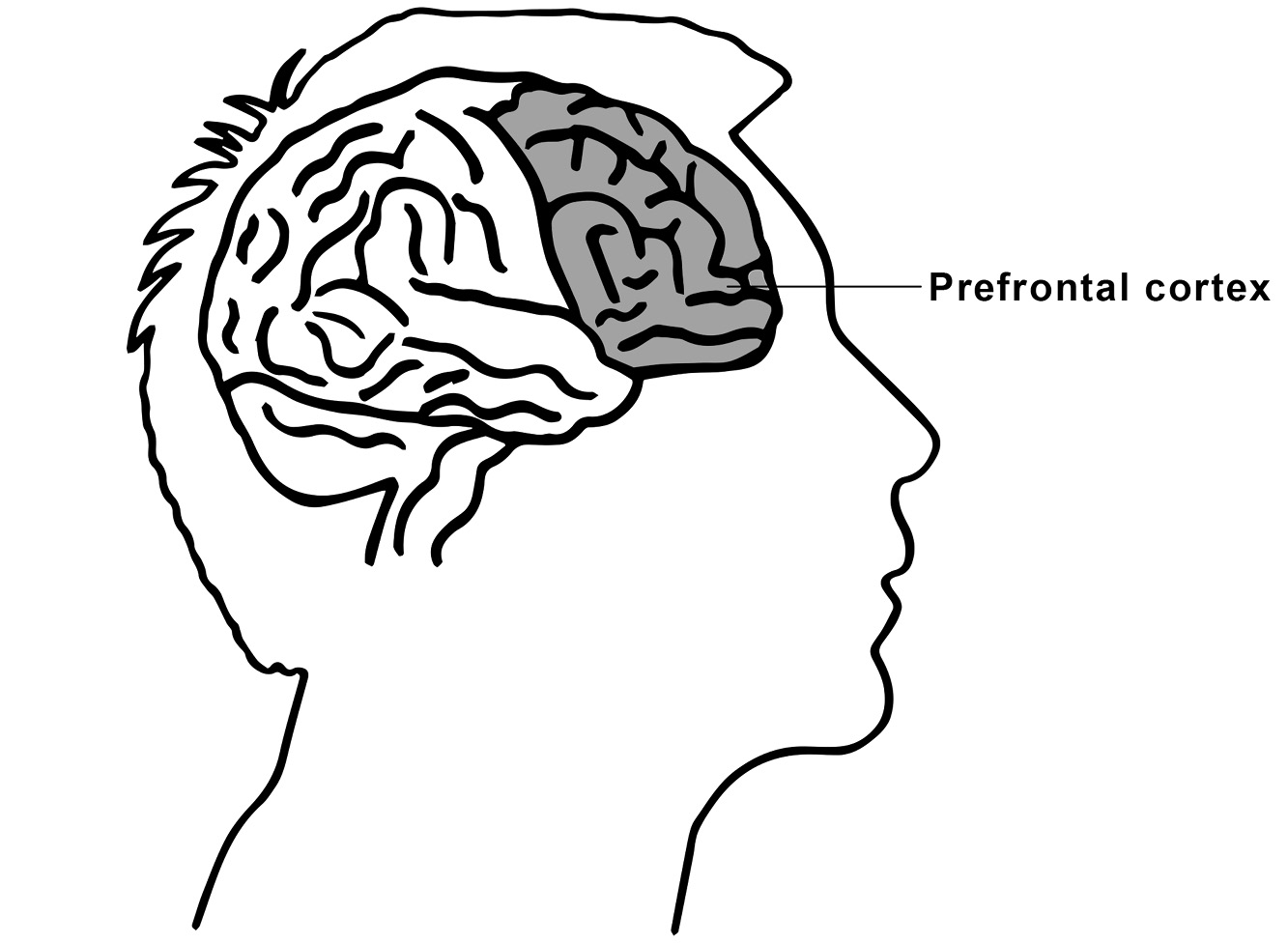

What Ruth, Ernie, and Peter have in common is that shortly before these episodes, all three suffered damage to their prefrontal cortex. This is the part of the brain I wrote about before, which, along with the anterior cingulate, basal ganglia, and insula, helps us to organize time and engage in planning, to maintain attention and stick with a task once we’ve started it. The networked brain is not a mass of undifferentiated tissue—damage to discrete regions of it often results in very specific impairments. Damage to the prefrontal cortex wreaks havoc with the ability to plan a sequence of events and thereby sustain calm, productive effort resulting in the accomplishment of the goals we’ve set ourselves in the time we have. But even the healthiest of us sometimes behave as though we’ve got frontal lobe damage, missing appointments, making silly mistakes now and then, and not making the most of our brain’s evolved capacity to organize time.

The Biological Reality of Time

Both mystics and physicists tell us that time is an illusion, simply a creation of our minds. In this respect, time is like color—there is no color in the physical world, just light of different wavelengths reflecting off of objects; as Newton said, the light waves themselves are colorless. Our entire sense of color results from the visual cortex in our brains processing these wavelengths and interpreting them as color. Of course that doesn’t make it subjectively any less real—we look at a strawberry and it is red, it doesn’t just seem red. Time can be thought of similarly as an interpretation that our brains impose on our experience of the world. We feel hungry after a certain amount of time has passed, sleepy after we’ve been awake for a certain amount of time. The regular rotation of the earth on its axis and around the sun leads us to organize time as a series of cyclical events, such as day and night and the four seasons, that in turn allow us to mentally register the passage of time. And having registered time, more so than ever before in human history, we divide up that time into chunks, units to which we assign specific activities and expectations for what we’ll get done in them. And these chunks of time are as real to us as a strawberry is red.

Most of us live by the clock. We make appointments, wake and sleep, eat, and organize our time around the twenty-four-hour clock. The duration of the day is tied to the period of rotation of the earth, but what about the idea to divide that up into equal parts—where did that come from? And why twenty-four?

As far as we know, the Sumerians were the first to divide the day into time periods. Their divisions were one-sixth of a day’s sunlight (roughly equivalent to two of our current hours). Other ancient time systems reckoned the day from sunrise to sunset, and divided that period into two equal divisions. As a result, these ancient mornings and afternoons would vary in length by season as the days got longer and shorter.

The three most familiar divisions of time we make today continue to be based on the motions of heavenly bodies, though now we call this astrophysics. The length of a year is determined by the time it takes the earth to circle the sun; the length of a month is (more or less) the time it takes the moon to circle the earth; the length of a day is the time it takes the earth to rotate on its axis (and observed by us as the span between two successive sunrises or sunsets). But further divisions are not based on any physical laws and tend to be based on historical factors that are largely arbitrary. There is nothing inherent in any biological or astrophysical cycle that would lead to the division of a day into twenty-four equal segments.

The current practice of dividing the clock into twenty-four comes from the ancient Egyptians, who divided the day into ten parts and then added an hour for each of the ambiguous periods of twilight, yielding twelve parts. Egyptian sundials in archeological sites testify to this. After nightfall, time was kept by a number of means, including tracking the motion of the stars, the burning of candles, or the amount of water that flowed through a small hole from one vessel to another. The Babylonians also used fixed duration with twenty-four hours in a day, as did Hipparchus, the ancient Greek mathematician and astronomer.

The division of the hour into sixty minutes, and the minutes into sixty seconds is also arbitrary, deriving from the Greek mathematician Eratosthenes, who divided the circle into sixty parts for an early cartographic system representing latitudes.

For most of human history, we did not have clocks or indeed any way of accurately reckoning time. Meetings and ritual get-togethers would be arranged by referencing obvious natural events, such as “Please drop by our camp when the moon is full” or “I’ll meet you at sunset.” Greater precision than that wasn’t possible, but it wasn’t needed, either. The kind of precision we’ve become accustomed to began after railroads were built. You might think the rationale is that railroad operators wanted to make departure times accurate and standardized as a convenience for customers, but it really grew out of safety concerns. After a series of railroad collisions in the early 1840s, investigators sought ways to improve communication and reduce the risk of accidents. Prior to that, timekeeping was considered a local matter for each city or town. Because there did not exist rapid forms of communication or transportation, there was no practical disadvantage to one location being desynchronized from another—and no way to really tell! Sir Sandford Fleming, a Scottish engineer who had helped design many of the railroads in Canada, came upon the idea of worldwide standard time zones, which were adopted by all Canadian and U.S. railroads in late 1883. The United States Congress didn’t make it into law until the Standard Time Act was passed thirty-five years later.

Still, what we call hours, minutes, and days are arbitrary: There is nothing physically or biologically critical about the day being divided into twenty-four parts, or the hour and minute being divided into sixty parts. They were easy to adopt because these divisions don’t contradict any inherent biological process.

Are there any biological constants to time? Our life span appears to be limited to about one hundred years (plus or minus twenty) due to aging. One theory used to be that life span limits are programmed into the genes to limit population size, but this has been dismissed because, in the harsh conditions of the wild, most species don’t live long enough to age, so there would be no threat of overpopulation. A few species don’t age at all and so are technically immortal. These include some species of jellyfish, flatworms (planaria), and hydra; the only causes of death in them are from injury or disease. This is in stark contrast to humans—of the roughly 150,000 people who die in the world each day, two-thirds die from age-related causes, and this number can reach 90% in peaceful industrialized nations, where war or disease is less likely to shorten life.

Natural selection has very limited or no opportunities to exert any direct influence on the aging process. Natural selection will tend to favor genes that have good effects on the organism early in life, prior to reproductive age, even if they have bad effects at older ages. Once an individual has reproduced and passed on his or her genes to the next generation, natural selection no longer has a means by which to operate on that person’s genome. This has two consequences. If an early human inherited a gene mutation that rendered him less likely to reproduce—a gene that made him vulnerable to early disease or simply made him an unattractive mate—that gene would be less likely to show up in the next generation. On the other hand, suppose there are two gene mutations that each conferred a survival advantage and made this early human especially attractive, but one of them has the side effect of causing cancer at age seventy-five, decades after the most likely age at which an individual reproduces. Natural selection has no way to discourage the cancer-causing gene because the gene doesn’t show itself until long after it has been passed on to the next generation. Thus, genetic variations that challenge survival at an old age—variations such as a susceptibility to cancer, or weakening of the bones—will tend to accumulate as one gets older and farther away in time from the peak age of reproduction. (This is because such a small percentage of organisms reproduce after a certain age that any investment in genetic mechanisms for survival beyond this age benefits a very small percentage of the population.) There is also the Hayflick limit, which states that cells can divide only a maximum number of times due to errors that accumulate during successive cell divisions. The fact that we not only die but are aware that our time is limited has different effects on us across the life span—something I write about at the end of this chapter.

At the level of hours and minutes, the most relevant constants are: human heart rates, which normally vary from 60 to 100 beats per minute; the need to spend roughly one-third of our time sleeping in order to function properly; and the tendency of our bodies, without cues from the sun, to drift toward a twenty-five-hour day. Biologists and physiologists still don’t know why this is so. Moving down to the scale of thousandths of a second, there are biological constants with respect to the temporal resolution of our senses. If a sound has a gap in it shorter than 10 milliseconds, we will tend not to hear the gap, because of resolution limits of the auditory system. For a similar reason, a series of clicks ceases to sound like clicks and becomes a musical note when the clicks are presented at a rate of about once every 25 milliseconds. If you’re flipping through static (still) pictures, they must be presented slower than about once every 40 milliseconds in order for you to see them as separate images. Any faster than that and they exceed the temporal resolution of our visual system and we perceive motion where there is none (this is the basis of flipbooks and motion pictures).

Photographs are interesting because they can capture and preserve the world at resolutions that exceed those of our visual system. When this happens, they allow us to see a view of the world that our eyes and brains would never see on their own. Shutter speeds of 125 and 250 provide samples of the world in 8 millisecond and 4 millisecond slices, and this is part of our fascination with them, particularly as they capture human movement and human expressions. These sensory limits are constrained by a combination of neural biology and the physical mechanics of our sensory organs. Individual neurons have a range of firing rates, on the order of once per millisecond to once every 250 milliseconds or so.
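For readers who like to check the arithmetic, here is a minimal sketch of the conversions behind these numbers. It assumes that a shutter speed written as "125" means 1/125 of a second, which is the usual photographic convention; the function names are my own, chosen only for illustration.

```python
def shutter_to_ms(denominator: float) -> float:
    """Exposure time in milliseconds for a shutter speed of 1/denominator second."""
    return 1000.0 / denominator


def interval_ms_to_rate(interval_ms: float) -> float:
    """Events per second when one event occurs every interval_ms milliseconds."""
    return 1000.0 / interval_ms


# Shutter speeds mentioned in the text.
for speed in (125, 250):
    print(f"1/{speed} s shutter = {shutter_to_ms(speed):.0f} ms slice of the world")

# Perceptual thresholds mentioned in the text, expressed as rates.
thresholds = {
    "clicks fuse into a musical note": 25,   # one click every 25 ms
    "still pictures fuse into motion": 40,   # one picture every 40 ms
}
for label, interval in thresholds.items():
    print(f"{label}: about {interval_ms_to_rate(interval):.0f} per second")
```

Seen this way, the 40-millisecond limit for still pictures corresponds to roughly 25 images per second, and the 25-millisecond limit for clicks to roughly 40 clicks per second.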

We have a more highly developed prefrontal cortex than any other species. It’s the seat of many behaviors that we consider distinctly human: logic, analysis, problem solving, exercising good judgment, planning for the future, and decision-making. It is for these reasons that it is often called the central executive, or CEO of the brain. Extensive two-way connections between the prefrontal cortex and virtually every other region of the brain place it in a unique position to schedule, monitor, manage, and manipulate nearly every activity we undertake. Like real CEOs, these cerebral CEOs are highly paid in metabolic currency. Understanding how they work (and exactly how they get paid) can help us to use their time more effectively.

It’s natural to think that because the prefrontal cortex is orchestrating all this activity and thought, it must have massive neural tracts for back-and-forth communication with other brain regions so that it can excite them and bring them on line. In fact, most of the prefrontal cortex’s connections to other brain regions are not excitatory; they’re the opposite: inhibitory. That’s because one of the great achievements of the human prefrontal cortex is that it provides us with impulse control and, consequently, the ability to delay gratification, something that most animals lack. Try dangling a string in front of a cat or throwing a ball in front of a retriever and see if they can sit still. Because the prefrontal cortex doesn’t fully develop in humans until after age twenty, impulse control isn’t fully developed in adolescents (as many parents of teenagers have observed). It’s also why children and adolescents are not especially good at planning or delaying gratification.

When the prefrontal cortex becomes damaged (such as from disease, injury, or a tumor), it leads to a specific medical condition called dysexecutive syndrome.

The condition is recognized by the kinds of planning and time coordination deficits that Ruth the homemaker, Ernie the accountant, and Peter the architect suffered from. It is also often accompanied by an utter lack of inhibition across a range of behaviors, particularly in social settings. Patients may blurt out inappropriate remarks, or go on binges of gambling, drinking, or sex with inappropriate partners. And they tend to act on what is right in front of them. If they see someone moving, they have difficulty inhibiting the urge to imitate them; if they see an object, they pick it up and use it.

What does all this have to do with organizing time? If your inhibitions are reduced, and you’re impaired at seeing the future consequences of your actions, you tend to do things now that you might regret later, or that make it difficult to properly complete projects you’re working on. Binge-watch an entire season of Mad Men instead of working on the Pensky file? Eat a donut (or two) instead of sticking to your diet? That’s your prefrontal cortex not doing its job. In addition, damage to the prefrontal cortex causes an inability to effectively go forward or backward in time in one’s mind. Remember Peter the architect’s description of starting over and over and not being able to move forward. Dysexecutive syndrome patients often get stuck in the present, doing something over and over again, perseverating, revealing a failure in temporal control. They can be terrible at organizing their calendars and To Do lists due to a double whammy of neural deficits. First, they’re unable to place events in the correct temporal order. A patient with severe damage might attempt to bake the cake before having added all the ingredients. Second, many frontal lobe patients are not aware of their deficit; a loss of insight is associated with these frontal lobe lesions, such that patients generally underestimate their impairment. Having an impairment is bad enough, but if you don’t know you have it, you’re liable to go headlong into situations without taking proper precautions, and end up in trouble.

As if that weren’t enough, advanced prefrontal cortex damage interferes with the ability to make connections and associations between disparate thoughts and concepts, resulting in a loss of creativity. The prefrontal cortex is especially important for generating creative acts in art and music. This is the region of the brain that is most active when creative artists are functioning at their peak.

If you’re interested in seeing what it’s like to have prefrontal cortex damage, there’s a simple, reversible way: Get drunk. Alcohol interferes with the ability of prefrontal cortex neurons to communicate with one another, by disrupting dopamine receptors and blocking a particular kind of receptor called the NMDA receptor, mimicking the damage we see in frontal lobe patients. Heavy drinkers also experience the frontal lobe system double whammy: They may lose certain capabilities, such as impulse control or motor coordination or the ability to drive safely, but they aren’t aware that they’ve lost them (or simply don’t care), so they forge ahead anyway.

An overgrowth of dopaminergic neurons in the frontal lobes leads to autism (characterized by social awkwardness and repetitive behaviors), which mimics frontal lobe damage to some degree. The opposite, a reduction of dopaminergic neurons in the frontal lobes, occurs in Parkinson’s disease and attention deficit disorder (ADD). The result then is scattered thinking and a lack of planning, which can sometimes be improved by the administration of L-dopa or of methylphenidate (also known by its brand name Ritalin), drugs that increase dopamine in the frontal lobes. From autism and Parkinson’s, we’ve learned that too much or too little dopamine causes dysfunction. Most of us live in a Goldilocks zone where everything is just right. That’s when we plan our activities, follow through on our plans, and inhibit impulses that would take us off track.

It may be obvious, but the brain coordinates a large share of the body’s housekeeping and timekeeping functions—regulating heart rate and blood pressure, signaling when it’s time to sleep and wake up, letting us know when we’re hungry or full, and maintaining body temperature even as the outside temperature changes. This coordination takes place in the so-called reptilian brain, in structures we share with all vertebrates. In addition to this, there are the higher cognitive functions of the brain handled by the cerebral cortex: reasoning, problem solving, language, music, precision athletic movement, mathematical ability, art, and the mental operations that support them, including memory, attention, perception, motor planning, and categorization. The entire brain weighs three pounds (1.4 kg) and so is only a small percentage of an adult’s total body weight, typically 2%. But it consumes 20% of all the energy the body uses. Why? The perhaps oversimplified answer is that time is energy.

Neural communication is very rapid—it has to be—reaching speeds of over 300 miles per hour, and with neurons communicating with one another hundreds of times per second. The voltage output of a single resting neuron is 70 millivolts, about the same as the line output of an iPod. If you could hook up a neuron to a pair of earbuds, you could actually hear its rhythmic output as a series of clicks. My colleague Petr Janata did this many years ago with neurons in the owl’s brain. He attached small thin wires to neurons in the owl’s brain and connected the other end of the wires to an amplifier and a loudspeaker. Playing music to the owl, Petr could hear in the neural firing pattern the same pattern of beats and pitches that were in the original music.

Neurochemicals that control communication between neurons are manufactured in the brain itself. These include some relatively well-known ones such as serotonin, dopamine, oxytocin, and epinephrine, as well as acetylcholine, GABA, glutamate, and endocannabinoids. Chemicals are released in very specific locations and they act on specific synapses to change the flow of information in the brain. Manufacturing these chemicals, and dispersing them to regulate and modulate brain activity, requires energy—neurons are living cells with a metabolism, and they get that energy from glucose. No other tissue in the body relies solely on glucose for energy except the testes. (This is why men occasionally experience a battle for resources between their brains and their glands.)

A number of studies have shown that eating or drinking glucose improves performance on mentally demanding tasks. For example, experimental participants are given a difficult problem to solve, and half of them are given a sugary treat and half of them are not. The ones who get the sugary treat perform better and more quickly because they are supplying the body with glucose that goes right to the brain to help feed the neural circuits that are doing the problem solving. This doesn’t mean you should rush out and buy armloads of candy—for one thing, the brain can draw on vast reserves of glucose already held in the body when it needs them. For another, chronic ingestion of sugars—these experiments looked only at short-term ingestion—can damage other systems and lead to diabetes and sugar crash, the sudden exhaustion that many people feel later when the sugar high wears off.

But regardless of where it comes from, the brain burns glucose, as a car burns gasoline, to fuel mental operations. Just how much energy does the brain use? In an hour of relaxing or daydreaming, it uses eleven calories or fifteen watts—about the same as one of those new energy-efficient lightbulbs. Using the central executive for reading for an hour takes about forty-two calories. Sitting in class, by comparison, takes sixty-five calories—not from fidgeting in your seat (that’s not factored in) but from the additional mental energy of absorbing new information. Most brain energy is used in synaptic transmission, that is, in connecting neurons to one another and, in turn, connecting thoughts and ideas to one another. What all this points to is that good time management should mean organizing our time in a way that maximizes brain efficiency. The big question many of us ask today is: Does that come from doing one thing at a time or from multitasking? If we only do one thing at a time, can we ever hope to catch up?
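Calories per hour and watts are both rates of energy use, so one can be converted to the other. The sketch below is only a back-of-the-envelope check, and it assumes that "calories" here means dietary calories (kilocalories, about 4,184 joules each); the function and variable names are illustrative.

```python
JOULES_PER_KCAL = 4184.0    # one dietary calorie (kilocalorie) in joules
SECONDS_PER_HOUR = 3600.0


def kcal_per_hour_to_watts(kcal_per_hour: float) -> float:
    """Convert an energy rate in dietary calories per hour to watts (joules per second)."""
    return kcal_per_hour * JOULES_PER_KCAL / SECONDS_PER_HOUR


# Hourly figures quoted in the text, in dietary calories.
activities = {
    "relaxing or daydreaming": 11,
    "reading": 42,
    "sitting in class": 65,
}
for activity, kcal in activities.items():
    watts = kcal_per_hour_to_watts(kcal)
    print(f"{activity}: {kcal} calories per hour is about {watts:.1f} watts")
```

Under that assumption, eleven calories an hour works out to roughly thirteen watts, in the same ballpark as the fifteen-watt figure and the energy-efficient lightbulb.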

Mastering the See-Saw of Events

The brain “only takes in the world little bits and chunks at a time,” says MIT neuroscientist Earl Miller. You may think you have a seamless thread of data coming in about the things going on around you, but the reality is your brain “picks and chooses and anticipates what it thinks is going to be important, what you should pay attention to.”

In Chapters 1 and 3, I talked about the metabolic costs of multitasking, such as reading e-mail and talking on the phone at the same time, or social networking while reading a book. It takes more energy to shift your attention from task to task. It takes less energy to focus. That means that people who organize their time in a way that allows them to focus are not only going to get more done, but they’ll be less tired and less neurochemically depleted after doing it. Daydreaming also takes less energy than multitasking. And the natural intuitive see-saw between focusing and daydreaming helps to recalibrate and restore the brain. Multitasking does not.

Perhaps most important, multitasking by definition disrupts the kind of sustained thought usually necessary for problem solving and for creativity. Gloria Mark, professor of informatics at UC Irvine, explains that multitasking is bad for innovation. “Ten and a half minutes on one project,” she says, “is not enough time to think in-depth about anything.” Creative solutions often arise from allowing a sequence of alternations between dedicated focus and daydreaming.

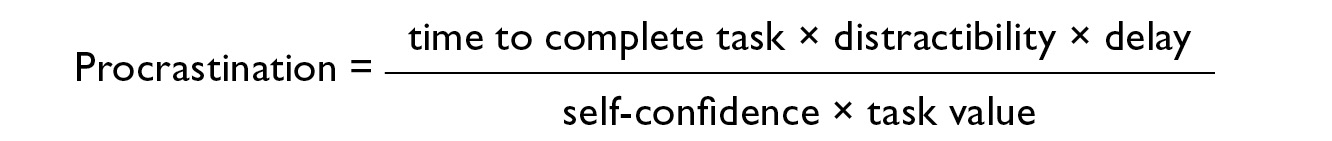

Further complicating things is that the brain’s arousal system has a novelty bias, meaning that its attention can be hijacked easily by something new—the proverbial shiny objects we use to entice infants, puppies, and cats. And this novelty bias is more powerful than some of our deepest survival drives: Humans will work just as hard to obtain a novel experience as we will to get a meal or a mate. The difficulty here for those of us who are trying to focus amid competing activities is clear: The very brain region we need to rely on for staying on task is easily distracted by shiny new objects. In multitasking, we unknowingly enter an addiction loop as the brain’s novelty centers become rewarded for processing shiny new stimuli, to the detriment of our prefrontal cortex, which wants to stay on task and gain the rewards of sustained effort and attention. We need to train ourselves to go for the long reward, and forgo the short one. Don’t forget that the awareness of an unread e-mail sitting in your inbox can effectively reduce your IQ by 10 points, and that multitasking causes information you want to learn to be directed to the wrong part of the brain.

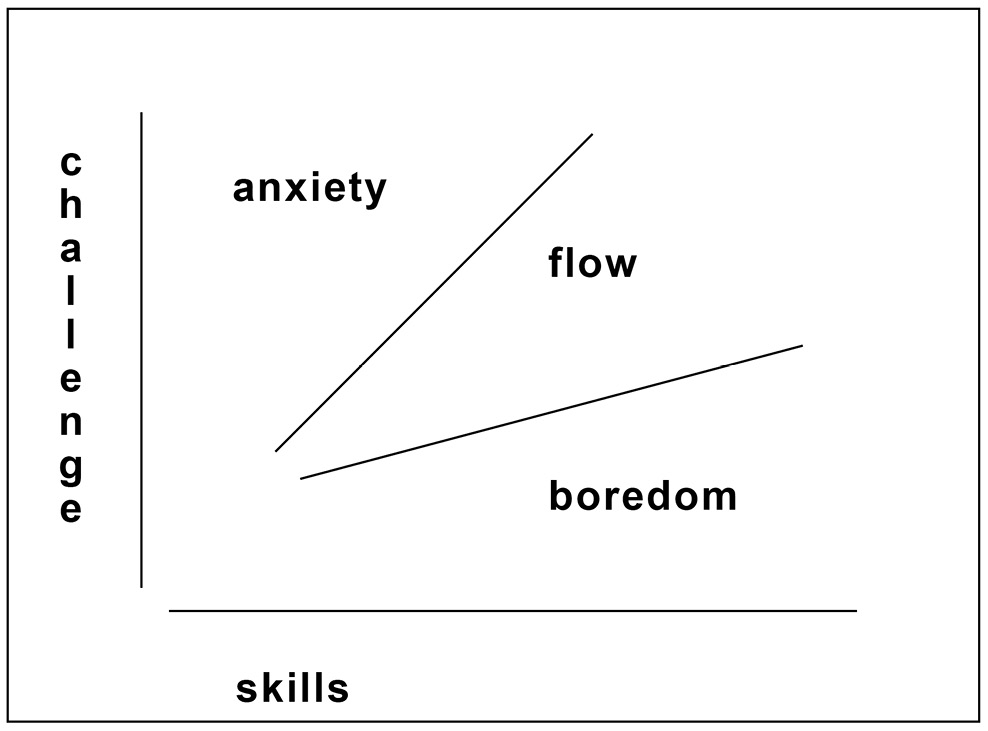

There are individual differences in cognitive style, and the trade-off present in multitasking often comes down to focus versus creativity. When we say that someone is focused, we usually mean they’re attending to what is right in front of them and avoiding distraction, either internal or external. On the other hand, creativity often implies being able to make connections between disparate things. We consider a discovery to be creative if it explores new ideas through analogy, metaphor, or tying together things that we didn’t realize were connected. This requires a delicate balance between focus and a more expansive view. Some individuals who take dopamine-enhancing drugs such as methylphenidate report that it helps them to stay motivated to work, to stay focused, and to avoid distractions, and that it facilitates staying engaged with repetitious tasks. The downside, they report, is that it can destroy their ability to make connections and associations, and to engage in expansive, creative thinking—underscoring the see-saw relationship between focus and creativity.

There is an interesting gene known as COMT that appears to modulate the ease with which people can switch tasks, by regulating the amount of dopamine in the prefrontal cortex. COMT carries instructions to the brain for how to make an enzyme (in this case, catechol-O-methyltransferase, hence the abbreviation COMT) that helps the prefrontal cortex to maintain optimal levels of dopamine and noradrenaline, the neurochemicals critical to paying attention. Individuals with a particular version of the COMT gene (called Val158Met) have low dopamine levels in the prefrontal cortex and, at the same time, show greater cognitive flexibility, easier task switching, and more creativity than average. Individuals with a different version of the COMT gene (called Val/Val homozygotes) have high dopamine levels, less cognitive flexibility, and difficulty task switching. This converges with anecdotal observations that many people who appear to have attention deficit disorder—characterized by low dopamine levels—are more creative and that those who can stay very focused on a task might be excellent workers when following instructions but are not especially creative. Keep in mind that these are broad generalizations based on aggregates of statistical data, and there are many individual variations and individual differences.

Ruth, Ernie, and Peter were stymied by everyday events such as cooking a meal, clearing the house of broken, unwanted items, or redecorating a small office. Accomplishing any task requires that we define a beginning and an ending. In the case of more complex operations, we need to break the whole thing into manageable chunks, each with its own beginning and ending. Building a house, for example, might seem impossibly complicated. But builders don’t look at it that way; they divide the project into stages and chunks: grading and preparing the site, laying the foundation, framing the superstructure and supports, plumbing, electrical, installing drywall, floors, doors, cabinets, painting. And then each of those stages is further divided into manageable chunks. Prefrontal cortex damage, among other things, can lead to deficits both in event segmentation (that’s why Peter had trouble rearranging the office) and in stitching the segmented events back into the proper order (why Ruth was cooking the food out of order).

One of the most complicated things that humans do is to put the components of a multipart sequence in their proper temporal order. To accomplish temporal ordering, the human brain has to set up different scenarios, a series of what-ifs, and juggle them in different configurations to figure out how they affect one another. We estimate completion times and work backward. Temporal order is represented in the hippocampus alongside memory and spatial maps. If you’re planting flowers, you dig a hole first, then take the flowers out of their temporary pots, then put the flowers in the ground, then fill the hole with dirt, then water them. This seems obvious for something we do all the time, but anyone who has ever tried to put together IKEA furniture knows that if you do things in the wrong order, you might have to take it apart and start all over from the beginning. The brain is adept at this kind of ordering, requiring communication between the hippocampus and the prefrontal cortex, which is working away busily assembling a mental image of the finished outcome alongside mental images of partly finished outcomes and—subconsciously most of the time—picturing what would happen if you did things out of sequence. (You really don’t want to whip the cream after you’ve spooned it onto the pie—what a mess!)
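One way to picture what this kind of ordering involves is to write down, for each step, which steps have to be finished first, and then let a program work out a valid sequence. The sketch below does this for the flower-planting example using Python's standard `graphlib` module (Python 3.9 or later). It is an analogy, not a model of how the brain does it; the prerequisites simply follow the sequence described above, and the helper `violates_order` is a hypothetical name of my own.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must already be done.
prerequisites = {
    "dig hole": set(),
    "take flowers out of pots": {"dig hole"},
    "put flowers in ground": {"take flowers out of pots"},
    "fill hole with dirt": {"put flowers in ground"},
    "water": {"fill hole with dirt"},
}

# A topological sort returns the steps in an order that respects every prerequisite.
order = list(TopologicalSorter(prerequisites).static_order())
print(order)


def violates_order(sequence, prerequisites):
    """Return True if any step in the sequence is attempted before its prerequisites."""
    done = set()
    for step in sequence:
        if not prerequisites[step] <= done:
            return True
        done.add(step)
    return False


# Watering before anything is planted is the gardening version of
# whipping the cream after it is already on the pie.
out_of_sequence = ["water"] + [step for step in order if step != "water"]
print(violates_order(order, prerequisites))            # False
print(violates_order(out_of_sequence, prerequisites))  # True
```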

More cognitively taxing is being able to take a set of separate operations, each with their own completion time, and organize their start times so that they are all completed at the same time. Two common human activities where this is done make an odd couple: cooking and war.

You know from experience that you can’t serve the pie just as it comes out of the oven because it will be too hot, or that it takes some time for your oven to preheat. Your goal of being able to serve the pie at the right time means you need to take into account these various timing parameters, and so you probably work out a quick, seat-of-the-pants calculation about how long the combined pie cooking and cooling period is, how long it will take everyone to eat their soup and their pasta, and what an appropriate period might be to wait between the time everyone finishes the main course and when they’ll want dessert (if you serve it too quickly, they may feel rushed; if you wait too long, they may grow impatient). From here, we work backward from the time we want to serve the pie to when we need to preheat the oven to ensure the timing is right.
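The working-backward calculation can be sketched in a few lines. The durations and the serving time below are invented purely for illustration (your pie and your guests will differ), and the function name `backward_schedule` is hypothetical.

```python
from datetime import datetime, timedelta


def backward_schedule(finish_time, steps):
    """Given steps as (name, minutes) in the order they are performed,
    return (name, start, end) for each step so the last one ends at finish_time."""
    schedule = []
    end = finish_time
    # Walk from the last step to the first, subtracting each duration.
    for name, minutes in reversed(steps):
        start = end - timedelta(minutes=minutes)
        schedule.append((name, start, end))
        end = start
    schedule.reverse()
    return schedule


# Assumed values: dessert at 7:30 P.M., with made-up durations for each stage.
serve_dessert = datetime(2024, 1, 1, 19, 30)
steps = [
    ("preheat oven", 15),
    ("bake pie", 50),
    ("let pie cool", 40),
]

plan = backward_schedule(serve_dessert, steps)
for name, start, end in plan:
    print(f"{start:%H:%M} to {end:%H:%M}  {name}")
```

With these assumed numbers, the oven has to go on at 5:45 for a pie served at 7:30. Changing any one duration shifts every earlier start time, which is part of why the seat-of-the-pants version is so easy to get wrong.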

Wartime maneuvers also require essentially the same precise organization and temporal planning. In World War II, the Allies took the German army by surprise, using a series of deceptions and the fact that there was no harbor at the invasion site; the Germans assumed it would be impossible to maintain an offensive without shipborne materials. Unprecedented amounts of supplies and personnel were spirited to Normandy in secret so that artificial, portable harbors could be swiftly constructed at Saint-Laurent-sur-Mer and Arromanches. The harbors, code-named Mulberry, were assembled like an enormous jigsaw puzzle and, when fully operational, could move 7,000 tons of vehicles, supplies, and personnel per day. The operation required 545,000 cubic yards of concrete, 66,000 tons of reinforcing steel, 9,000 standards of timber (approximately 1.5 million cubic feet), 440,000 square yards of plywood, 97 miles of steel wire rope, and 20,000 men to build it, all of which had to arrive in the proper order and at the proper time. Building it and transporting it to Normandy without detection or suspicion is considered one of the greatest engineering and military feats in human history and a masterpiece of human planning and timing, made possible by connections between the frontal lobes and the hippocampus.

The secret to planning the invasion of Normandy was that, like all projects that initially seem overwhelmingly difficult, it was broken up deftly into small tasks—thousands of them. This principle applies at all scales: If you have something big you want to get done, break it up into chunks—meaningful, implementable, doable chunks. It makes time management much easier; you only need to manage time to get a single chunk done. And there’s neurochemical satisfaction at the completion of each stage.

Then there is the balance between doing and monitoring your progress that is necessary in any multistep project. Each step requires that we stop the actual work every now and then to view it objectively, to ensure we’re carrying it out properly and that we’re happy with the results so far. We step back in our mind’s eye to inspect what we did, figure out whether we need to redo something, whether we can move forward. It’s the same whether we’re sanding a fine wood cabinet, kneading dough, brushing our hair, painting a picture, or building a PowerPoint presentation. This is a familiar cycle: We work, we inspect the work, we make adjustments, we push forward. The prefrontal cortex coordinates the comparison of what’s out-there-in-the-world with what’s in your head. Think of an artist who evaluates whether the paint she just applied had a desirable effect on the painting. Or consider something as simple as mopping the floor—we’re not just blindly swishing the mop back and forth; we’re ensuring that the floor comes clean. And if it doesn’t, we go back and scrub certain spots a little more. In many tasks, both creative and mundane, we must constantly go back and forth between work and evaluation, comparing the ideal image in our head with the work in front of us.

This constant back-and-forth is one of the most metabolism-consuming things that our brain can do. We step out of time, out of the moment, and survey the big picture. We like what we see or we don’t, and then we go back to the task, either moving forward again, or backtracking to fix a conceptual or physical mistake. As you now know well, such attention switching and perspective switching is depleting, and like multitasking, it uses up more of the brain’s nutrients than staying engaged in a single task.

In situations like this, we are functioning as both the boss and the employee. Just because you’re good at one doesn’t mean you’ll be any good at the other. Every general contractor knows painters, carpenters, or tile setters capable of great work, but only when someone is standing by to give perspective. Many subcontractors actually doing the work have neither the desire nor the ability to think about budgets or make decisions about the optimum trade-off between time and money. Indeed, left to their own devices, some are such perfectionists that nothing ever gets finished. I once worked with a recording engineer who blew through a budget trying to make one three-minute song perfect before I was able to stop him and remind him that we still had eleven other songs to do. In the world of music, it’s no accident that only a few artists produce themselves effectively (Stevie Wonder, Paul McCartney, Prince, Jimmy Page, Joni Mitchell, and Steely Dan). Many, many PhD students fall into this category, never finishing their degrees because they can’t move forward—they’re too perfectionistic. The real job in supervising PhD students isn’t teaching them facts; it’s keeping them on track.

Planning and doing require separate parts of the brain. To be both a boss and a worker, one needs to form and maintain multiple, hierarchically organized attentional sets and then bounce back and forth between them. It’s the central executive in your brain that notices that the floor is dirty. It forms an executive attentional set for “mop the floor” and then constructs a worker attentional set for doing the actual mopping. The executive set cares only that the job is done and is done well. It might find the mop, a bucket the mop fits into, the floor cleaning product. Then, the worker set gets down to wetting the mop, starting the job, monitoring the mop head so you know when it’s time to put it back in the bucket, rinsing the head now and then when it gets too dirty. A good worker will be able to call upon a level of attention subordinate to all that and momentarily become a kind of detail-oriented worker who sees a spot that won’t come out with the mop, gets down on his hands and knees, and scrapes or scrubs or uses whatever method necessary to get that spot out. This detail-oriented worker has a different mind-set and different goals from those of the regular worker or boss. If your spouse walks in, after the detail guy has been working for fifteen minutes on a smudge off in the corner, and says, “What—are you crazy!? You’ve got the entire floor left to do and the guests will be here in fifteen minutes!” the detail guy is pulled up into the perspective of the boss and sees the big picture again.

All this level shifting, from boss down to worker down to detail worker and back again, is a shifting of the attentional set and it comes with the metabolic costs of multitasking. It’s exactly the reason a good hand car wash facility has these jobs spread out among three classes of workers. There are the car washers who do just the broad strokes of soaping down and rinsing the whole car. When they’re done, the detail guys come in and look closely to see if there are any leftover dirty spots, to clean the wheels and bumpers, and present the car to you. There’s also a boss who’s looking over the whole operation to make sure that no worker spends too much or too little time at any one point or on any one car. By dividing up the roles in this way, each worker forms one, rather than three, attentional sets and can throw himself into that role without worrying about anything at a different level.

We can all learn from this because we all have to be workers in one form or another at least some of the time. The research says that if you have chores to do, put similar chores together. If you’ve collected a bunch of bills to pay, just pay the bills—don’t use that time to make big decisions about whether to move to a smaller house or buy a new car. If you’ve set aside time to clean the house, don’t also use that time to repair your front steps or reorganize your closet. Stay focused and maintain a single attentional set through to completion of a job. Organizing our mental resources efficiently means providing slots in our schedules where we can maintain an attentional set for an extended period. This allows us to get more done and finish up with more energy.

Related to the manager/worker distinction is that the prefrontal cortex contains circuits responsible for telling us whether we’re controlling something or someone else is. When we set up a system, this part of the brain marks it as self-generated. When we step into someone else’s system, the brain marks it that way. This may help explain why it’s easier to stick with an exercise program or diet that someone else sets up: We typically trust them as “experts” more than we trust ourselves. “My trainer told me to do three sets of ten reps at forty pounds—he’s a trainer, he must know what he’s talking about. I can’t design my own workout—what do I know?” It takes Herculean amounts of discipline to overcome the brain’s bias against self-generated motivational systems. Why? Because as with the fundamental attribution error we saw in Chapter 4, we don’t have access to others’ minds, only our own. We are painfully aware of all the fretting and indecision, all the nuances of our internal decision-making process that led us to reach a particular conclusion. (I really need to get serious about exercise.) We don’t have access to that (largely internal) process in others, so we tend to take their certainty as more compelling, in many cases, than our own. (Here’s your program. Do it every day.)

To perform all but the simplest tasks requires flexible thinking and adaptiveness. Along with the many other distinctly human traits discussed, the prefrontal cortex allows us the flexibility to change behavior based on context. We alter the pressure required to slice a carrot versus slicing cheese; we explain our work differently to our grandma than to our boss; we use a pot holder to take something out of the oven but not out of the refrigerator. The prefrontal cortex is necessary for such adaptive strategies for living daily life, whether we’re foraging for food on the savanna or living in skyscrapers in the city.

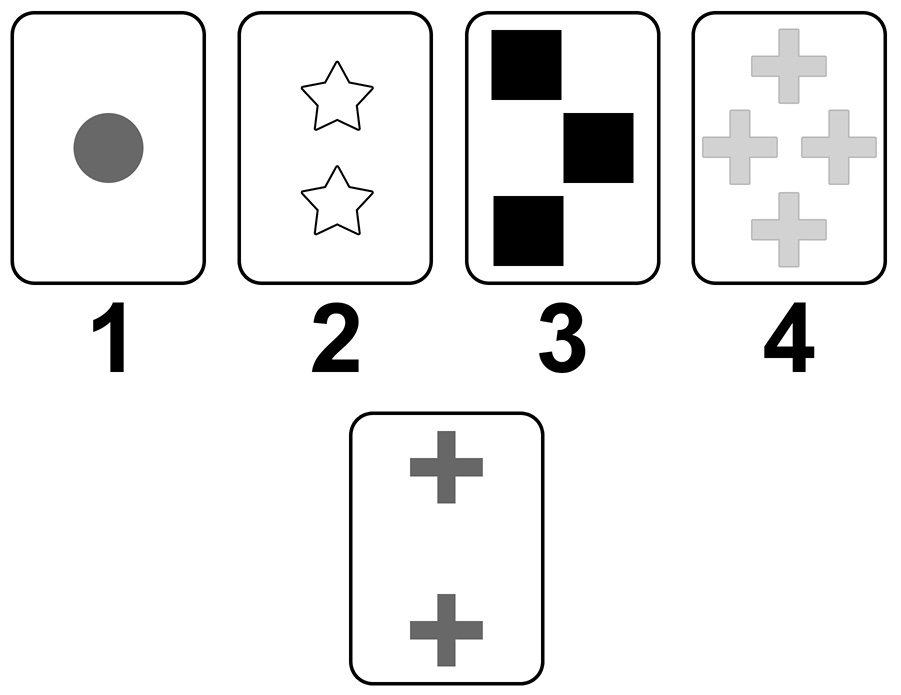

The balance between flexible thinking and staying on task is assessed by neuropsychologists using a test called the Wisconsin Card Sorting Test. People are asked to sort a deck of specially marked cards according to a rule. In the example below, the instruction might be to sort the new, unnumbered card according to the shade of gray, in which case it should be put on pile 1. After getting used to sorting a bunch of cards according to this rule, you’re then given a new rule, for example, to sort by shape (in which case the new card should be put on pile 4) or to sort by number (in which case the new card should be put on pile 2).

People with frontal lobe deficits have difficulty changing the rule once they’ve started; they tend to perseverate, applying an old rule after a new one is given. Or they show an inability to stick to a rule, and err by suddenly applying a new rule without being prompted. It was recently discovered that holding a rule in mind and following it is accomplished by networks of neurons that synchronize their firing patterns, creating a distinctive brain wave. For example, if you’re following the shading rule in the card sorting task, your brain waves will oscillate at a particular frequency until you switch to follow shape, and then they’ll oscillate at a different frequency. You can think of this by analogy to radio broadcasts: It’s as though a given rule operates in the brain on a particular frequency so that all the instructions and communication of that rule can remain distinct from other instructions and communications about other rules, each of which is transmitted and coordinated on its own designated frequency band.
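The sorting rules and the perseveration error can be made concrete with a small sketch. This is an illustration under stated assumptions, not the clinical test: the `Card` class, the four reference cards, and the attribute values are hypothetical, chosen only so that the new card lands on pile 1 by shade, pile 4 by shape, and pile 2 by number, as in the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    shade: str
    shape: str
    number: int


# Hypothetical reference cards, one per pile (not the real test deck).
PILES = {
    1: Card("light", "circle", 1),
    2: Card("medium", "star", 2),
    3: Card("dark", "cross", 3),
    4: Card("black", "triangle", 4),
}


def sort_card(card, rule):
    """Return the pile whose reference card matches on the given attribute."""
    for pile, reference in PILES.items():
        if getattr(reference, rule) == getattr(card, rule):
            return pile
    raise ValueError(f"no pile matches this card on {rule!r}")


def is_perseverative_error(card, old_rule, new_rule, chosen_pile):
    """True if the choice follows the old rule and is wrong under the new one."""
    return (chosen_pile == sort_card(card, old_rule)
            and chosen_pile != sort_card(card, new_rule))


new_card = Card("light", "triangle", 2)
by_shade = sort_card(new_card, "shade")
by_shape = sort_card(new_card, "shape")
by_number = sort_card(new_card, "number")

# The rule has switched from shade to shape, but the sorter still uses shade.
stuck = is_perseverative_error(new_card, "shade", "shape", by_shade)
print(by_shade, by_shape, by_number, stuck)
```

The same card belongs on three different piles depending on which rule is active, which is why holding the current rule in mind, and letting go of the old one, is the whole challenge.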

Reaching our goals efficiently requires the ability to selectively focus on those features of a task that are most relevant to its completion, while successfully ignoring other features or stimuli in the environment that are competing for attention. But how do you know what factors are relevant and what factors aren’t? This is where expertise comes in—in fact, it could be said that what distinguishes experts from novices is that they know what to pay attention to and what to ignore. If you don’t know anything at all about cars and you’re trying to diagnose a problem, every screech, sputter, and knock in the engine is potential information and you try to attend to them all. If you’re an expert mechanic, you home in on the one noise that is relevant and ignore the others. A good mechanic is a detective (as is a good physician), investigating the origins of a problem so as to learn the story of what happened. Some car components are relevant to the story and some aren’t. The fact that you filled up with a low-octane gasoline this morning might be relevant to the backfiring. The fact that your brakes squeak isn’t. Similarly, some temporal events are important and some aren’t. If you put in that low-octane gas this morning, it’s different than if you did it a year ago.

We take for granted that movies have well-defined temporal frames—scenes—parts of the story that are segmented with a beginning and an end. One way of signaling this is that when one scene ends, there is a break in continuity—a cut. Its name comes from analog film; in the editing room, the film would be physically cut at the end of one event, and spliced to the beginning of another (nowadays, this is done digitally and there is no physical cutting, but the digital editing tools use a little scissors icon to represent the action, and we still call this a cut, just as we “cut and paste” with our word processors). Without cuts signifying the end of a scene, it would be difficult for the brain to process and digest the material as it became a single onslaught of information, 120 minutes long. Of course modern filmmaking, particularly in action movies, uses far more cuts than was previously the norm, as a way to engage our ever hungrier appetite for visual stimulation.

Movies use the cut in three different ways, which we’ve learned to interpret by experience. A cut can signify a discontinuity in time (the new scene begins three hours later), in place (the new scene begins on the other side of town), or in perspective (as when you see two people talking and the camera shifts from looking at one face to looking at the other).

These conventions seem obvious to us. But we’ve learned them through a lifetime of exposure to comics, TV, and films. They are actually cultural inventions that have no meaning for someone outside our culture. Jim Ferguson, an anthropologist at Stanford, describes his own empirical observation of this when he was doing fieldwork in sub-Saharan Africa:

When I was living among the Sotho, I went into the city one day with one of the villagers. The city is something he had no experience with. This was an intelligent and literate man—he had read the Bible, for example. But when he saw a television for the first time in a shop, he couldn’t make heads or tails of what was going on. The narrative conventions that we use to tell a story in film and TV were completely unknown to him. For example, one scene would end and another would begin at a different time and place. This gap was completely baffling to him. Or during a single scene, the camera would focus on one person, then another, in order to take another perspective. He struggled, but simply couldn’t follow the story. We take these for granted because we grew up with them.

Film cuts are extensions of culturally specific storytelling conventions that we also see in our plays, novels, and short stories. Stories don’t include every single detail about every minute in a character’s life—they jump to salient events, and we have been trained to understand what’s going on.

Our brains encode information in scenes or chunks, mirroring the work of writers, directors, and editors. To do that, the information packets, like movie scenes, must have a beginning and an ending. Implicit in our management of time is that our brains automatically organize and segment the things we see and do into chunks of activity. Richard is not building a house today or even building the bathroom, he is preparing the kitchen floor for the tile. Even Superman chunks—he may wake up every morning and tell Lois Lane, “I’m off to save the world today, honey,” but what he tells himself is the laundry list of chunked tasks that need to be done to accomplish that goal, each with a well-defined beginning and ending. (1. Capture Lex Luthor. 2. Dispose of Kryptonite safely. 3. Hurl ticking bomb into outer space. 4. Pick up clean cape from dry cleaner.)

Chunking fuels two important functions in our lives. First, it renders large-scale projects doable by giving us well-differentiated tasks. Second, it renders the experiences of our lives memorable by segmenting them with well-defined beginnings and endings—this in turn allows memories to be stored and retrieved in manageable units. Although our actual waking time is continuous, we can easily talk about the events of our lives as being differentiated in time. The act of having breakfast has a more or less well differentiated beginning and ending, as does your morning shower. They don’t bleed into one another in your memory because the brain does the editing, segmenting, and labeling for you. And we can subdivide these scenes at will. We make sense of the events in our lives by segmenting them, giving them temporal boundaries. We don’t treat our daily lives as undifferentiated moments, we group moments into salient events such as “brushing my teeth,” “eating breakfast,” “reading the newspaper,” and “driving to the train station.” That is, our brains implicitly impose a beginning and an ending to events. Similarly, we don’t perceive or remember a football game as a continuous sequence of action, we remember the game in terms of its quarters, downs, and specific important plays. And it’s not just because the rules of the game create these divisions. When talking about a particular play, we can further subdivide: We remember the running back peeling off into the open; the quarterback dodging the defensive linemen; the arm of the quarterback stretched back and ready to throw; the fake throw; and then the quarterback suddenly running, stride-by-stride, for a surprise touchdown.

There is a dedicated portion of the brain that partitions long events into chunks, and it is in—you guessed it—the prefrontal cortex. An interesting feature of this event segmentation is that hierarchies are created without our even thinking about them, and without our instructing our brains to make them. That is, our brains automatically create multiple, hierarchical representations of reality. And we can review these in our mind’s eye from either direction—from the top down, that is, from large time scales to small, or from the bottom up, from small time scales to large.

Consider a question such as asking a friend, “What did you do yesterday?” Your friend might give a simple, high-level overview such as “Oh, yesterday was like any other day. I went to work, came home, had dinner, and then watched TV.” Descriptions like these are typical of how people talk about events, making sense of a complex dynamic world in part by segmenting it into a modest number of meaningful units. Notice how this response implicitly skips over a lot of detail that is probably generic and unremarkable, concerning how your friend woke up and got out of the house. And the description jumps right to his or her workday. This is followed by two more salient events: eating dinner and watching TV.

The proof that hierarchical processing exists is in the fact that normal, healthy people can subdivide their answer into increasingly smaller parts if you ask them to. Prompt them with “Tell me more about the dinner?” and you might get a response like “Well, I made a salad, heated up some leftovers from the party we had the night before, and then finished that nice Bordeaux that Heather and Lenny brought over, even though Lenny doesn’t drink.”

And you can drill down still more: “How exactly did you prepare the salad? Don’t leave anything out.”

“I took some lettuce out of the crisper in the refrigerator, washed it, sliced some tomatoes, shredded some carrots, and then added a can of hearts of palm. Then I put on some Kraft Italian dressing.”

“Tell me in even more detail how you prepared the lettuce. As though you were telling someone who has never done this before.”

“I took out a wooden salad bowl from the cupboard and wiped it clean with a dish towel. I opened the refrigerator and took out a head of red leaf lettuce from the vegetable crisper. I peeled off layers of lettuce leaves, looked carefully to make sure that there weren’t any bugs or worms, tore the leaves into bite-size pieces, then soaked them in a bowl of water for a bit. Then I drained the water, rinsed the leaves under running water, and put them in a salad spinner to dry them. Then I put all the now-dry lettuce into the salad bowl and added the other ingredients I mentioned.”

Each of these descriptions holds a place in the hierarchy, and each can be considered an event with a different level of temporal resolution. There is a natural level at which we tend to describe these events, mimicking the natural level of description I wrote about in Chapter 2—the basic level of categories in describing things like birds and trees. If you use a level of description that is too high or too low in the hierarchy—which is to say, a level of description that is unexpected or atypical—it is usually to make some kind of point. It seems aberrant to use the wrong level of description, and it violates the Gricean maxim of quantity.
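One way to picture this hierarchy is as a tree that can be read out at any depth. The sketch below is only an illustration: representing events as nested tuples and the function name `describe` are assumptions of the example, and the events themselves are taken from the conversation above.

```python
# Each event is a (name, sub_events) pair; sub_events may be empty.
salad = ("made a salad", [
    ("took lettuce out of the crisper", []),
    ("washed the lettuce", []),
    ("sliced some tomatoes", []),
    ("shredded some carrots", []),
    ("added hearts of palm", []),
    ("put on dressing", []),
])

dinner = ("had dinner", [
    salad,
    ("heated up leftovers", []),
    ("finished the Bordeaux", []),
])

yesterday = ("yesterday", [
    ("went to work", []),
    ("came home", []),
    dinner,
    ("watched TV", []),
])


def describe(event, depth):
    """List the event at the requested level of detail.

    depth 0 gives the event itself; each extra level expands every
    event that has sub-events and leaves the others as they are.
    """
    name, children = event
    if depth == 0 or not children:
        return [name]
    parts = []
    for child in children:
        parts.extend(describe(child, depth - 1))
    return parts


for level in range(4):
    print(level, describe(yesterday, level))
```

Asking for depth 1 gives the everyday answer ("went to work, came home, had dinner, watched TV"); each further level corresponds to another "tell me more" from the questioner.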

Artists often flout these norms to make an artistic gesture, to cause the audience to see things differently. We can imagine a film sequence in which someone is preparing a salad, and every little motion of tearing lettuce leaves is shown as a close-up. This might seem to violate a storytelling convention of recounting information that moves the story forward, but in surprising us with this seemingly unimportant lettuce tearing, the filmmaker or storyteller creates a dramatic gesture. By focusing on the mundane, it may convey something about the mental state of the character, or build tension toward an impending crisis in the story. Or maybe we see a centipede in the lettuce that the character doesn’t notice.

The temporal chunking that our brains create isn’t always explicit. In films, when the scene cuts from one moment to another, our brains automatically fill in the missing information, often as a result of a completely separate set of cultural conventions. In television shows from the relatively modest 1960s (Rob and Laura Petrie slept in separate twin beds!), a man and a woman might be seen sitting on the edge of the bed kissing before the scene fades to black and cuts to the next morning, when they wake up together. We’re meant to infer a number of intimate activities that occurred between the fade-out and the new scene, activities that could not be shown on network TV in the 1960s.

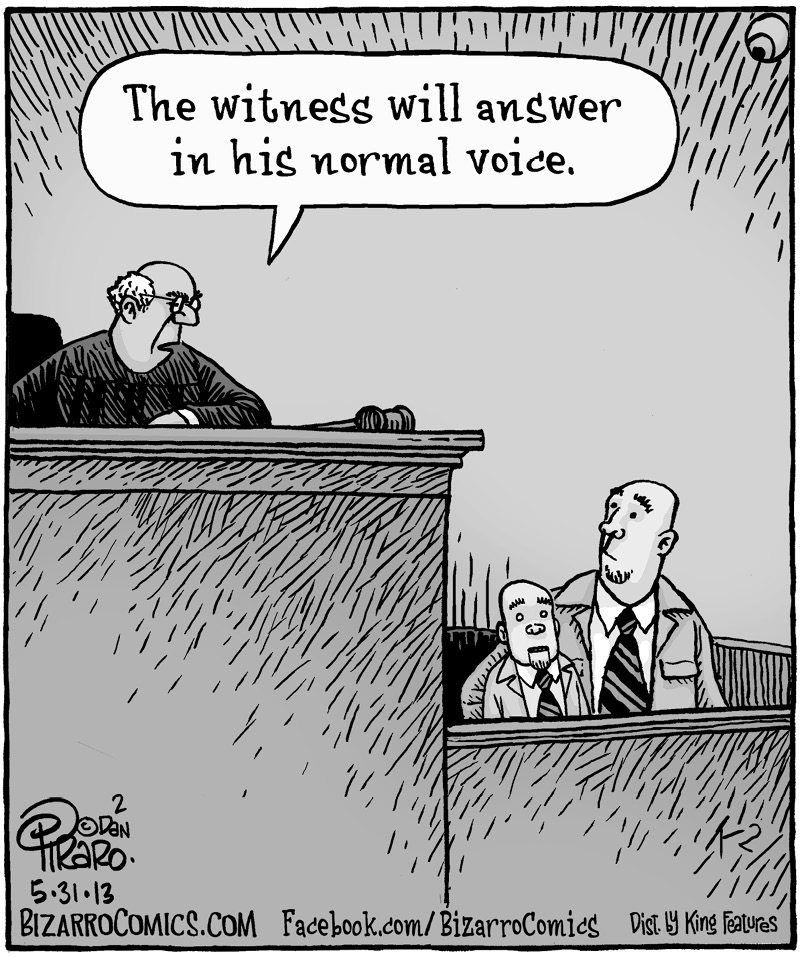

A particularly interesting example of inference occurs in many single-panel comics. Often the humor requires you to imagine what happened in the instant immediately before or immediately after the panel you’re being shown. It’s as though the cartoonist devised a series of four or five panels to tell the story and has chosen to show you only one—and typically not even the funniest one but the one right before or right after what would be the funniest panel. It’s this act of audience participation and imagination that makes the single-panel comic so engaging and so rewarding—to get the joke, you actually have to figure out what some of those missing panels must be.

Take this example from Bizarro:

The humor is not so much in what the judge is saying but in our imagining what must have gone on in the courtroom moments before to elicit such a warning! Because we are coparticipants in figuring out the joke, cartoons like these are more memorable and pleasurable than ones in which every detail is handed to us. This follows a well-established principle of cognitive psychology called levels of processing: Items that are processed at a deeper level, with more active involvement by us, tend to become more strongly encoded in memory. This is why passive learning through textbooks and lectures is not nearly as effective a way to learn new material as is figuring it out for yourself, a method called peer instruction that is being introduced into classrooms with great success.

Sleep Time

You go to bed later or get up earlier. A daily time-management tactic we all use and barely notice revolves around that large block of lost time that can make all of us feel unproductive: sleep. It’s only recently that we’ve begun to understand the enormous amount of cognitive processing that occurs while we’re asleep. In particular, we now know that sleep plays a vital role in the consolidation of events of the previous few days, and therefore in the formation and protection of memories.

Newly acquired memories are initially unstable and require a process of neural strengthening or consolidation to become resistant to interference, and to become accessible to us for retrieval. For a memory to be accessible means that we can retrieve it using a variety of different cues. Take, for example, that lunch of shrimp scampi I had at the beach a few weeks ago with my high-school buddy Jim Ferguson. If my memory system is functioning normally, by today, any of the following queries should be able to evoke one or more memories associated with the experience:

- Have I ever eaten shrimp scampi?

- When’s the last time I had seafood?

- When’s the last time I saw my friend Jim Ferguson?

- Does Jim Ferguson have good table manners?

- Are you still in touch with any friends from high school?

- Do you ever go out to lunch?

- Is it windy at the beach this time of year?

- What were you doing last Wednesday at one P.M.?

In other words, there are a variety of ways that a single event such as a lunch with an old friend can be contextualized. For all of these attributes to be associated with the event, the brain has to toss and turn and analyze the experience after it happens, extracting and sorting information in complex ways. And this new memory needs to be integrated into existing conceptual frameworks, integrated into old memories previously stored in the brain (shrimp is seafood, Jim Ferguson is a friend from high school, good table manners do not include wiping shrimp off your mouth with the tablecloth).

In the last few years, we’ve gained a more nuanced understanding that these different processes are accomplished during distinct phases of sleep. These processes both preserve memories in their original form, and extract features and meaning from the experiences. This allows new experiences to become integrated into a more generalized and hierarchical representation of the outside world that we hold inside our heads. Memory consolidation requires that our brains fine-tune the neural circuits that first encountered the new experience. According to one theory that is gaining acceptance, this has to be done when we’re asleep, or otherwise the activity in those circuits would be confused with an actually occurring experience. All of this tuning, extraction, and consolidation doesn’t happen during one night but unfolds over several sequential nights. Disrupted sleep even two or three days after an experience can disrupt your memory of it months or years later.

Sleep experts Matthew Walker (from UC Berkeley) and Robert Stickgold (from Harvard Medical School) note the three distinct kinds of information processing that occur during sleep. The first is unitization, the combining of discrete elements or chunks of an experience into a unified concept. For example, musicians and actors who are learning a new piece or scene might practice one phrase at a time; unitization during sleep binds these together into a seamless whole.

The second kind of information processing we accomplish during sleep is assimilation. Here, the brain integrates new information into the existing network structure of other things you already knew. In learning new words, for example, your brain works unconsciously to construct sample sentences with them, turning them over and experimenting with how they fit into your preexisting knowledge. Any brain cells that used a lot of energy during the day show an increase of ATP (a neural signaling coenzyme) during sleep, and this has been associated with assimilation.

The third process is abstraction, and this is where hidden rules are discovered and then entered into memory. If you learned English as a child, you learned certain rules about word formation such as “add s to the end of a word to make it plural” or “add ed to the end of a word to make it past tense.” If you’re like most learners, no one taught you this—your brain abstracted the rule by being exposed to it in multiple instances. This is why children make the perfectly logical mistake of saying “he goed” instead of “he went,” or “he swimmed” instead of “he swam.” The abstraction is correct; it just doesn’t apply to these particular irregular verbs. Across a range of inferences involving not just language but mathematics, logic problems, and spatial reasoning, sleep has been shown to enhance the formation and understanding of abstract relations, so much so that people often wake having solved a problem that was unsolvable the night before. This may be part of the reason why young children just learning language sleep so much.

Thus, many different kinds of learning have been shown to be improved after a night’s sleep, but not after an equivalent period of being awake. Musicians who learn a new melody show significant improvement in performing it after one night’s sleep. Students who were stymied by a calculus problem the day it was presented are able to solve it more easily after a night’s sleep than an equivalent amount of waking time. New information and concepts appear to be quietly practiced while we’re asleep, sometimes showing up in dreams. A night of sleep more than doubles the likelihood that you’ll solve a problem requiring insight.

Many people remember the first day they played with a Rubik’s Cube. That night they report that their dreams were disturbed by images of those brightly colored squares and of them rotating and clicking in their sleep. The next day, they are much better at the game—while asleep, their brains had extracted principles of where things were, relying on both their conscious perceptions of the previous day and myriad unconscious perceptions. Researchers found the same thing when studying Tetris players’ dreams. Although the players reported dreaming about Tetris, especially early on in their learning, they didn’t dream about specific games or moves they had made; rather, they dreamed about abstract elements of the game. The researchers hypothesized that this created a template by which their brains could organize and store just the sort of generalized information that would be necessary to succeed at the game.

This kind of information consolidation happens all the time in our brains, but it happens more intensely for tasks we are more engaged with. Those calculus students didn’t simply glance at the problem during the day, they tried actively to solve it, focused attention on it, and then reapproached it after a night’s sleep. If you are only dimly engaged in your French language tapes, it is unlikely your sleep will help you to learn grammar and vocabulary. But if you struggle with the language for an hour or more during the day, investing your focus, energy, and emotions in it, then it will be ripe for replay and elaboration during your sleep. This is why language immersion works so well—you’re emotionally invested and interpersonally engaged with the language as you attempt to survive in the new linguistic environment. This kind of learning, in a way, is hard to manufacture in the classroom or language laboratory.

Perhaps the most important principle of memory is that we tend to remember best those things we care about the most. At a biological level, neurochemical tags are created and attached to experiences that are emotionally important; and those appear to be the ones that our dreams grab hold of.

Not all sleep is created equal when it comes to improving memory and learning. The two main categories of sleep are REM (rapid eye movement) and NREM (non-REM), with NREM sleep being further divided into four stages, each with a distinct pattern of brain waves. REM sleep is when our most vivid and detailed dreams occur. Its most obvious feature is temporary selective muscle suppression (so that if you’re running in your dream, you don’t get out of bed and start running around the house). REM sleep is also characterized by low-voltage brain wave patterns (EEG), and the rapid, flickering eyelid movements for which it is named. It used to be thought that all our dreaming occurs during REM sleep, but there is newer evidence that we can dream during NREM sleep as well, although those dreams tend to be less elaborate. Most mammals have physiologically similar states, and we assume they’re dreaming, but we can’t know for sure. Additional dreamlike states can occur just as we’re falling asleep and just as we’re waking up; these can feature vivid auditory and visual imagery that seems like hallucination.

REM sleep is believed to be the stage during which the brain performs the deepest processing of events—the unitization, assimilation, and abstraction mentioned above. The brain chemicals that mediate it include decreases in noradrenaline and increased levels of acetylcholine and cortisol. A preponderance of theta wave activity facilitates associative linking between disparate brain regions during REM. This has two interesting effects. The first is that it allows our brains to draw out connections, deep underlying connections, between the events in our lives that we might not otherwise perceive, through activating thoughts that are far-flung in our consciousness and unconsciousness. It’s what lets us perceive, for example, that clouds look a bit like marshmallows, or that “Der Kommissar” by Falco uses the same musical hook as “Super Freak” by Rick James. The second effect is that it appears to cause dreams in which these connections morph into one another: You dream you’re eating a marshmallow and it suddenly floats up to the sky and becomes a rain cloud; you’re watching Rick James on TV and he’s driving a Ford Falcon (the brain can be a terrible punster—Falco becomes Falcon); you’re walking down a street and suddenly the street is in a completely different town, and the sidewalk turns to water. These distortions are a product of the brain exploring possible relations among disparate ideas and things. And it’s a good thing they happen only while you’re asleep or your view of reality would be unreliable.

There’s another kind of distortion that occurs when we sleep—time distortion. What may seem like a long, elaborate dream spanning thirty minutes or more may actually occur within the span of a single minute. This may be due to the fact that the body’s own internal clock is in a reduced state of activation (you might say it is asleep, too) and so becomes unreliable.

The transition between REM and NREM sleep is believed to be mediated by GABAergic neurons near the brainstem, those same neurons that act as inhibitors in the prefrontal cortex. Current thinking is that these and other neurons in the brain act as switches, bringing us from one state to the other. Damage to one part of this brain region causes a dramatic reduction in REM sleep, while damage to another causes an increase.

A normal human sleep cycle lasts about 90–100 minutes. Around 20 of those minutes on average are spent dreaming in REM sleep, and 70–80 are NREM sleep, although the length varies throughout the night. REM periods may be only 5–10 minutes at the beginning of the night and expand to 30 minutes or more later in the early morning hours. Most of the memory consolidation occurs in the first two hours of slow-wave, NREM sleep, and during the last 90 minutes of REM sleep in the morning. This is why drinking and drugs (including sleep medications) can interfere with memory, because that crucial first sleep cycle is compromised by intoxication. And this is why sleep deprivation leads to memory loss—because the crucial 90 minutes of sleep at the end is either interrupted or never occurs. And you can’t make up for lost sleep time. Sleep deprivation after a day of learning prevents sleep-related improvement, even three days later following two nights of good sleep. This is because recovery sleep or rebound sleep is characterized by abnormal brain waves as the dream cycle attempts to resynchronize with the body’s circadian rhythm.

Sleep may also be a fundamental property of neuronal metabolism. In addition to the information consolidation functions, a new finding in 2013 showed that sleep is necessary for cellular housekeeping. Like the garbage trucks that roam city streets at five A.M., specific metabolic processes in the glymphatic system clear neural pathways of potentially toxic waste products that accumulate during waking thought. As discussed in Chapter 2, we also know that it is not an all-or-none phenomenon: Parts of the brain sleep while others do not, leading to not just the sense but the reality that sometimes we are half-asleep or sleeping only lightly. If you’ve ever had a brain freeze—momentarily unable to remember something obvious—or if you’ve ever found yourself doing something silly like putting orange juice on your cereal, it may well be that part of your brain is taking a nap. Or it could just be that you’re thinking about too many things at once, having overloaded your attentional system.

Several factors contribute to feelings of sleepiness. First, the twenty-four-hour cycle of light and darkness influences the production of neurochemicals specifically geared to induce wakeful alertness or sleepiness. Sunlight impinging on photoreceptors in the retina triggers a chain reaction of processes resulting in stimulation of the suprachiasmatic nucleus and the pineal gland, a small gland near the base of the brain, about the size of a grain of rice. About one hour after dark, the pineal gland produces melatonin, a neurohormone partly responsible for giving us the urge to sleep (and causing the brain to go into a sleep state).

The sleep-wake cycle can be likened to a thermostat in your home. When the temperature falls to a certain point, the thermostat closes an electrical circuit, causing your furnace to turn on. Then, when your preset, desired temperature is reached, the thermostat interrupts the circuit and the furnace turns off again. Sleep is similarly governed by neural switches. These follow a homeostatic process and are influenced by a number of factors, including your circadian rhythm, food intake, blood sugar level, condition of your immune system, stress, and sunlight and darkness. When your homeostat increases above a certain point, it triggers the release of neurohormones that induce sleep. When your homeostat decreases below a certain point, a separate set of neurohormones is released to induce wakefulness.

At one time or another, you’ve probably thought that if only you could sleep less, you’d get so much more done. Or that you could just borrow time by sleeping one hour less tonight and one hour more tomorrow night. As enticing as these seem, they’re not borne out by research. Sleep is among the most critical factors for peak performance, memory, productivity, immune function, and mood regulation. Even a mild sleep reduction or a departure from a set sleep routine (for example, going to bed late one night, sleeping in the next morning) can produce detrimental effects on cognitive performance for many days afterward. When professional basketball players got ten hours of sleep a night, their performance improved dramatically: Free-throw and three-point shooting each improved by 9%.

Most of us follow a sleep-waking pattern of sleeping for 6–8 hours followed by staying awake for approximately 16–18. This is a relatively recent invention. For most of human history, our ancestors engaged in two rounds of sleep, called segmented sleep or bimodal sleep, in addition to an afternoon nap. The first round of sleep would occur for four or five hours after dinner, followed by an awake period of one or more hours in the middle of the night, followed by a second period of four or five hours of sleep. That middle-of-the-night waking might have evolved to help ward off nocturnal predators. Bimodal sleep appears to be a biological norm that was subverted by the invention of artificial light, and there is scientific evidence that the bimodal sleep-plus-nap regime is healthier and promotes greater life satisfaction, efficiency, and performance.

To many of us raised with the 6–8 hour, no-nap sleep ideal, this sounds like a bunch of hippie-dippy, flaky foolishness at the fringe of quackery. But it was discovered (or rediscovered, you might say) by Thomas Wehr, a respected scientist at the U.S. National Institute of Mental Health. In a landmark study, he enlisted research participants to live for a month in a room that was dark for fourteen hours a day, mimicking conditions before the invention of the lightbulb. Left to their own devices, they ended up sleeping eight hours a night but in two separate blocks. They tended to fall asleep one or two hours after the room went dark, slept for about four hours, stayed awake for an hour or two, and then slept for another four hours.

Millions of people report difficulty sleeping straight through the night. Because uninterrupted sleep appears to be our cultural norm, they experience great distress and ask their doctors for medication to help them stay asleep. Many sleep medications are addictive, have side effects, and leave people feeling drowsy the next morning. They also interfere with memory consolidation. It may be that a simple change in our expectations about sleep and a change to our schedules can go a long way.

There are large individual differences in sleep cycles. Some people fall asleep in a few minutes, others take an hour or more at night. Both are considered within the normal range of human behavior—what is important is what is normal for you, and to notice if there is a sudden change in your pattern that could indicate disease or disorder. Regardless of whether you sleep straight through the night or adopt the ancient bimodal sleep pattern, how much sleep should you get? Rough guidelines from research suggest the following, but these are just averages—some individuals really do require more or less than what is indicated, and this appears to be hereditary. Contrary to popular myth, the elderly do not need less sleep; they are just less able to sleep for eight hours at a stretch.

AVERAGE SLEEP NEEDS

| Age | Needed sleep |
| --- | --- |
| Newborns (0–2 months) | 12–18 hours |
| Infants (3–11 months) | 14–15 hours |
| Toddlers (1–3 years) | 12–14 hours |
| Preschoolers (3–5 years) | 11–13 hours |
| Children (5–10 years) | 10–11 hours |
| Preteens and Teenagers (10–17) | 8 1/2–9 1/4 hours |
| Adults | 6–10 hours |

One out of every three working Americans gets less than six hours’ sleep per night, well below the recommended range noted above. The U.S. Centers for Disease Control and Prevention (CDC) declared sleep deprivation a public health epidemic in 2013.

The prevailing view until the 1990s was that people could adapt to chronic sleep loss without adverse cognitive effects, but newer research clearly says otherwise. Sleepiness was responsible for 250,000 traffic accidents in 2009, and is one of the leading causes of friendly fire, in which soldiers mistakenly shoot people on their own side. Sleep deprivation was ruled to be a contributing factor in some of the best-known global disasters: the nuclear power plant disasters at Chernobyl (Ukraine), Three Mile Island (Pennsylvania), Davis-Besse (Ohio), and Rancho Seco (California); the oil spill from the Exxon Valdez; the grounding of the cruise ship Star Princess; and the fatal decision to launch the Challenger space shuttle. Remember that Air France plane that crashed into the Atlantic Ocean in June 2009, killing all 228 people on board? The captain had been running on only one hour of sleep, and the copilots were also sleep deprived.

In addition to loss of life, there is the economic impact. Sleep deprivation is estimated to cost U.S. businesses more than $150 billion a year in absences, accidents, and lost productivity; for comparison, that’s roughly the same as the annual revenue of Apple. If sleep-related economic losses were a business, it would be the sixth-largest business in the country. Sleep deprivation is also associated with increased risk for heart disease, obesity, stroke, and cancer. Too much sleep is also detrimental, but perhaps the most important factor in achieving peak alertness is consistency, so that the body’s circadian rhythms can lock into a consistent cycle. Going to bed just one hour late one night, or sleeping in for an hour or two just one morning, can affect your productivity, immune function, and mood significantly for several days after the irregularity.

Part of the problem is cultural—our society does not value sleep. Sleep expert David K. Randall put it this way:

While we’ll spend thousands on lavish vacations to unwind, grind away hours exercising and pay exorbitant amounts for organic food, sleep remains ingrained in our cultural ethos as something that can be put off, dosed or ignored. We can’t look at sleep as an investment in our health because—after all—it’s just sleep. It is hard to feel like you’re taking an active step to improve your life with your head on a pillow.