1

TOO MUCH INFORMATION, TOO MANY DECISIONS

The Inside History of Cognitive Overload

One of the best students I ever had the privilege of meeting was born in communist Romania, under the repressive and brutal rule of Nicolae Ceaușescu. Although his regime collapsed when she was eleven, she remembered well the long lines for food, the shortages, and the economic destitution that lasted far beyond his overthrow. Ioana was bright and curious, and although still young, she had the colors of a true scholar: When she encountered a new scientific idea or problem, she would look at it from every angle, reading everything she could get her hands on. I met her during her first semester at university, newly arrived in North America, when she took my introductory course on the psychology of thinking and reasoning. Although the class had seven hundred students, she distinguished herself early on by thoughtfully answering questions posed in class, peppering me with questions during office hours, and constantly proposing new experiments.

I ran into her one day at the college bookstore, frozen in the aisle with all the pens and pencils. She was leaning limply against the shelf, clearly distraught.

“Is everything all right?” I asked.

“It can be really terrible living in America,” Ioana said.

“Compared to Soviet Romania?!”

“Everything is so complicated. I looked for a student apartment. Rent or lease? Furnished or unfurnished? Top floor or ground floor? Carpet or hardwood floor . . .”

“Did you make a decision?”

“Yes, finally. But it’s impossible to know which is best. Now . . .” her voice trailed off.

“Is there a problem with the apartment?”

“No, the apartment is fine. But today is my fourth time in the bookstore. Look! An entire row full of pens. In Romania, we had three kinds of pens. And many times there was a shortage—no pens at all. In America, there are more than fifty different kinds. Which one do I need for my biology class? Which one for poetry? Do I want felt tip, ink, gel, cartridge, erasable? Ballpoint, razor point, roller ball? One hour I am here reading labels.”

Every day, we are confronted with dozens of decisions, most of which we would characterize as insignificant or unimportant—whether to put on our left sock first or our right, whether to take the bus or the subway to work, what to eat, where to shop. We get a taste of Ioana’s disorientation when we travel, not only to other countries but even to other states. The stores are different, the products are different. Most of us have adopted a strategy to get along called satisficing, a term coined by the Nobel Prize winner Herbert Simon, one of the founders of the fields of organization theory and information processing. Simon wanted a word to describe not getting the very best option but one that was good enough. For things that don’t matter critically, we make a choice that satisfies us and is deemed sufficient. You don’t really know if your dry cleaner is the best—you only know that they’re good enough. And that’s what helps you get by. You don’t have time to sample all the dry cleaners within a twenty-four-block radius of your home. Does Dean & DeLuca really have the best gourmet takeout? It doesn’t matter—it’s good enough. Satisficing is one of the foundations of productive human behavior; it prevails when we don’t waste time on decisions that don’t matter, or more accurately, when we don’t waste time trying to find improvements that are not going to make a significant difference in our happiness or satisfaction.

All of us engage in satisficing every time we clean our homes. If we got down on the floor with a toothbrush every day to clean the grout, if we scrubbed the windows and walls every single day, the house would be spotless. But few of us go to this much trouble even on a weekly basis (and when we do, we’re likely to be labeled obsessive-compulsive). Most of us clean our houses until they are clean enough, reaching a kind of equilibrium between effort and benefit. It is this cost-benefit analysis that is at the heart of satisficing (Simon was also a respected economist).

Recent research in social psychology has shown that happy people are not people who have more; rather, they are people who are happy with what they already have. Happy people engage in satisficing all of the time, even if they don’t know it. Warren Buffett can be seen as embracing satisficing to an extreme—one of the richest men in the world, he lives in Omaha, a block from the highway, in the same modest home he has lived in for fifty years. He once told a radio interviewer that for breakfasts during his weeklong visit to New York City, he’d bought himself a gallon of milk and a box of Oreo cookies. But Buffett does not satisfice with his investment strategies; satisficing is a tool for not wasting time on things that are not your highest priority. For your high-priority endeavors, the old-fashioned pursuit of excellence remains the right strategy. Do you want your surgeon or your airplane mechanic or the director of a $100 million feature film to do just good enough or do the best they possibly can? Sometimes you want more than Oreos and milk.

Part of my Romanian student’s despondency could be chalked up to culture shock—to the loss of the familiar, and immersion in the unfamiliar. But she’s not alone. The past generation has seen an explosion of choices facing consumers. In 1976, the average supermarket stocked 9,000 unique products; today that number has ballooned to 40,000 of them, yet the average person gets 80%–85% of their needs in only 150 different supermarket items. That means that we need to ignore 39,850 items in the store. And that’s just supermarkets—it’s been estimated that there are over one million products in the United States today (based on SKUs, or stock-keeping units, those little bar codes on things we buy).

All this ignoring and deciding comes with a cost. Neuroscientists have discovered that unproductivity and loss of drive can result from decision overload. Although most of us have no trouble ranking the importance of decisions if asked to do so, our brains don’t automatically do this. Ioana knew that keeping up with her coursework was more important than what pen to buy, but the mere situation of facing so many trivial decisions in daily life created neural fatigue, leaving no energy for the important decisions. Recent research shows that people who were asked to make a series of meaningless decisions of just this type—for example, whether to write with a ballpoint pen or a felt-tip pen—showed poorer impulse control and lack of judgment about subsequent decisions. It’s as though our brains are configured to make a certain number of decisions per day and once we reach that limit, we can’t make any more, regardless of how important they are. One of the most useful findings in recent neuroscience could be summed up as: The decision-making network in our brain doesn’t prioritize.

Today, we are confronted with an unprecedented amount of information, and each of us generates more information than ever before in human history. As former Boeing scientist and New York Times writer Dennis Overbye notes, this information stream contains “more and more information about our lives—where we shop and what we buy, indeed, where we are right now—the economy, the genomes of countless organisms we can’t even name yet, galaxies full of stars we haven’t counted, traffic jams in Singapore and the weather on Mars.” That information “tumbles faster and faster through bigger and bigger computers down to everybody’s fingertips, which are holding devices with more processing power than the Apollo mission control.” Information scientists have quantified all this: In 2011, Americans took in five times as much information every day as they did in 1986—the equivalent of 175 newspapers. During our leisure time, not counting work, each of us processes 34 gigabytes or 100,000 words every day. The world’s 21,274 television stations produce 85,000 hours of original programming every day as we watch an average of 5 hours of television each day, the equivalent of 20 gigabytes of audio-video images. That’s not counting YouTube, which uploads 6,000 hours of video every hour. And computer gaming? It consumes more bytes than all other media put together, including DVDs, TV, books, magazines, and the Internet.

Just trying to keep our own media and electronic files organized can be overwhelming. Each of us has the equivalent of over half a million books stored on our computers, not to mention all the information stored in our cell phones or in the magnetic stripe on the back of our credit cards. We have created a world with 300 exabytes (300,000,000,000,000,000,000 pieces) of human-made information. If each of those pieces of information were written on a 3 x 5 index card and then spread out side by side, just one person’s share—your share of this information—would cover every square inch of Massachusetts and Connecticut combined.

Our brains do have the ability to process the information we take in, but at a cost: We can have trouble separating the trivial from the important, and all this information processing makes us tired. Neurons are living cells with a metabolism; they need oxygen and glucose to survive and when they’ve been working hard, we experience fatigue. Every status update you read on Facebook, every tweet or text message you get from a friend, is competing for resources in your brain with important things like whether to put your savings in stocks or bonds, where you left your passport, or how best to reconcile with a close friend you just had an argument with.

The processing capacity of the conscious mind has been estimated at 120 bits per second. That bandwidth, or window, is the speed limit for the traffic of information we can pay conscious attention to at any one time. While a great deal occurs below the threshold of our awareness, and this has an impact on how we feel and what our life is going to be like, in order for something to become encoded as part of your experience, you need to have paid conscious attention to it.

What does this bandwidth restriction—this information speed limit—mean in terms of our interactions with others? In order to understand one person speaking to us, we need to process 60 bits of information per second. With a processing limit of 120 bits per second, this means you can barely understand two people talking to you at the same time. Under most circumstances, you will not be able to understand three people talking at the same time. We’re surrounded on this planet by billions of other humans, but we can understand only two at a time at the most! It’s no wonder that the world is filled with so much misunderstanding.

With such attentional restrictions, it’s clear why many of us feel overwhelmed by managing some of the most basic aspects of life. Part of the reason is that our brains evolved to help us deal with life during the hunter-gatherer phase of human history, a time when we might encounter no more than a thousand people across the entire span of our lifetime. Walking around midtown Manhattan, you’ll pass that number of people in half an hour.

Attention is the most essential mental resource for any organism. It determines which aspects of the environment we deal with, and most of the time, various automatic, subconscious processes make the correct choice about what gets passed through to our conscious awareness. For this to happen, millions of neurons are constantly monitoring the environment to select the most important things for us to focus on. These neurons are collectively the attentional filter. They work largely in the background, outside of our conscious awareness. This is why most of the perceptual detritus of our daily lives doesn’t register, or why, when you’ve been driving on the freeway for several hours at a stretch, you don’t remember much of the scenery that has whizzed by: Your attentional system “protects” you from registering it because it isn’t deemed important. This unconscious filter follows certain principles about what it will let through to your conscious awareness.

The attentional filter is one of evolution’s greatest achievements. In nonhumans, it ensures that they don’t get distracted by irrelevancies. Squirrels are interested in nuts and predators, and not much else. Dogs, whose olfactory sense is one million times more sensitive than ours, use smell to gather information about the world more than they use sound, and their attentional filter has evolved to make that so. If you’ve ever tried to call your dog while he is smelling something interesting, you know that it is very difficult to grab his attention with sound—smell trumps sound in the dog brain. No one has yet worked out all of the hierarchies and trumping factors in the human attentional filter, but we’ve learned a great deal about it. When our protohuman ancestors left the cover of the trees to seek new sources of food, they simultaneously opened up a vast range of new possibilities for nourishment and exposed themselves to a wide range of new predators. Being alert and vigilant to threatening sounds and visual cues is what allowed them to survive; this meant allowing an increasing amount of information through the attentional filter.

Humans are, by most biological measures, the most successful species our planet has seen. We have managed to survive in nearly every climate our planet has offered (so far), and the rate of our population expansion exceeds that of any other known organism. Ten thousand years ago, humans plus their pets and livestock accounted for about 0.1% of the terrestrial vertebrate biomass inhabiting the earth; we now account for 98%. Our success owes in large part to our cognitive capacity, the ability of our brains to flexibly handle information. But our brains evolved in a much simpler world with far less information coming at us. Today, our attentional filters easily become overwhelmed. Successful people—or people who can afford it—employ layers of people whose job it is to narrow the attentional filter. That is, corporate heads, political leaders, spoiled movie stars, and others whose time and attention are especially valuable have a staff of people around them who are effectively extensions of their own brains, replicating and refining the functions of the prefrontal cortex’s attentional filter.

These highly successful persons—let’s call them HSPs—have many of the daily distractions of life handled for them, allowing them to devote all of their attention to whatever is immediately before them. They seem to live completely in the moment. Their staff handle correspondence, make appointments, interrupt those appointments when a more important one is waiting, and help to plan their days for maximum efficiency (including naps!). Their bills are paid on time, their car is serviced when required, they’re given reminders of projects due, and their assistants send suitable gifts to the HSP’s loved ones on birthdays and anniversaries. Their ultimate prize if it all works? A Zen-like focus.

In the course of my work as a scientific researcher, I’ve had the chance to meet governors, cabinet members, music celebrities, and the heads of Fortune 500 companies. Their skills and accomplishments vary, but as a group, one thing is remarkably constant. I’ve repeatedly been struck by how liberating it is for them not to have to worry about whether there is someplace else they need to be, or someone else they need to be talking to. They take their time, make eye contact, relax, and are really there with whomever they’re talking to. They don’t have to worry if there is someone more important they should be talking to at that moment because their staff—their external attentional filters—have already determined for them that this is the best way they should be using their time. And there is a great amount of infrastructure in place ensuring that they will get to their next appointment on time, so they can let go of that nagging concern as well.

The rest of us have a tendency during meetings to let our minds run wild and cycle through a plethora of thoughts about the past and the future, destroying any aspirations for Zen-like calm and preventing us from being in the here and now: Did I turn off the stove? What will I do for lunch? When do I need to leave here in order to get to where I need to be next?

What if you could rely on others in your life to handle these things and you could narrow your attentional filter to that which is right before you, happening right now? I met Jimmy Carter when he was campaigning for president and he spoke as though we had all the time in the world. At one point, an aide came to take him off to the next person he needed to meet. Free from having to decide when the meeting would end, or any other mundane care, really, President Carter could let go of those inner nagging voices and be there. A professional musician friend who headlines big stadiums constantly and has a phalanx of assistants describes this state as being “happily lost.” He doesn’t need to look at his calendar more than a day in advance, allowing each day to be filled with wonder and possibility.

If we organize our minds and our lives following the new neuroscience of attention and memory, we can all deal with the world in ways that provide the sense of freedom that these HSPs enjoy. How can we actually leverage this science in everyday life? To begin with, by understanding the architecture of our attentional system. To better organize our mind, we need to know how it has organized itself.

Two of the most crucial principles used by the attentional filter are change and importance. The brain is an exquisite change detector: If you’re driving and suddenly the road feels bumpy, your brain notices this change immediately and signals your attentional system to focus on the change. How does this happen? Neural circuits are noticing the smoothness of the road, the way it sounds, the way it feels against your rear end, back, and feet, and other parts of your body that are in contact with the car, and the way your visual field is smooth and continuous. After a few minutes of the same sounds, feel, and overall look, your conscious brain relaxes and lets the attentional filter take over. This frees you up to do other things, such as carry on a conversation or listen to the radio, or both. But with the slightest change—a low tire, bumps in the road—your attentional system pushes the new information up to your consciousness so that you can focus on the change and take appropriate action. Your eyes may scan the road and discover drainage ridges in the asphalt that account for the rough ride. Having found a satisfactory explanation, you relax again, pushing this sensory decision-making back down to lower levels of consciousness. If the road seems visually smooth and you can’t otherwise account for the rough ride, you might decide to pull over and examine your tires.

The brain’s change detector is at work all the time, whether you know it or not. If a close friend or relative calls on the phone, you might detect that her voice sounds different and ask if she’s congested or sick with the flu. When your brain detects the change, this information is sent to your consciousness, but your brain doesn’t explicitly send a message when there is no change. If your friend calls and her voice sounds normal, you don’t immediately think, “Oh, her voice is the same as always.” Again, this is the attentional filter doing its job, detecting change, not constancy.

The second principle, importance, can also let information through. Here, importance is not just something that is objectively important but something that is personally important to you. If you’re driving, a billboard for your favorite music group might catch your eye (really, we should say catch your mind) while other billboards go ignored. If you’re in a crowded room, at a party for instance, certain words to which you attach high importance might suddenly catch your attention, even if spoken from across the room. If someone says “fire” or “sex” or your own name, you’ll find that you’re suddenly following a conversation far away from where you’re standing, with no awareness of what those people were talking about before your attention was captured. The attentional filter is thus fairly sophisticated. It is capable of monitoring lots of different conversations as well as their semantic content, letting through only those that it thinks you will want to know about.

Due to the attentional filter, we end up experiencing a great deal of the world on autopilot, not registering the complexities, nuances, and often the beauty of what is right in front of us. A great number of failures of attention occur because we are not using these two principles to our advantage.

A critical point that bears repeating is that attention is a limited-capacity resource—there are definite limits to the number of things we can attend to at once. We see this in everyday activities. If you’re driving, under most circumstances, you can play the radio or carry on a conversation with someone else in the car. But if you’re looking for a particular street to turn onto, you instinctively turn down the radio or ask your friend to hang on for a moment, to stop talking. This is because you’ve reached the limits of your attention in trying to do these three things. The limits show up whenever we try to do too many things at once. How many times has something like the following happened to you? You’ve just come home from grocery shopping, one bag in each hand. You’ve balanced them sufficiently to unlock the front door, and as you walk in, you hear the phone ringing. You need to put down the grocery bags in your hands, answer the phone, perhaps being careful not to let the dog or cat out the open door. When the phone call is over, you realize you don’t know where your keys are. Why? Because keeping track of them, too, was more than your attentional system could handle.

The human brain has evolved to hide from us those things we are not paying attention to. In other words, we often have a cognitive blind spot: We don’t know what we’re missing because our brain can completely ignore things that are not its priority at the moment—even if they are right in front of our eyes. Cognitive psychologists have called this blind spot various names, including inattentional blindness. One of the most amazing demonstrations of it is known as the basketball demo. If you haven’t seen it, I urge you to put this book down and view it now before reading any further. The video can be seen here: http://www.youtube.com/watch?v=vJG698U2Mvo. Your job is to count how many times the players wearing the white T-shirts pass the basketball, while ignoring the players in the black T-shirts.

(Spoiler alert: If you haven’t seen the video yet, reading the next paragraph will mean that the illusion won’t work for you.) The video comes from a psychological study of attention by Christopher Chabris and Daniel Simons. Because of the processing limits of your attentional system that I’ve just described, following the basketball and the passing, and keeping a mental tally of the passes, takes up most of the attentional resources of the average person. The rest are taken up by trying to ignore the players in the black T-shirts and to ignore the basketball they are passing. At some point in the video, a man in a gorilla suit walks into the middle of things, bangs his chest, and then walks off. The majority of the people watching this video don’t see the gorilla. The reason? The attentional system is simply overloaded. If I had not asked you to count the basketball passes, you would have seen the gorilla.

A lot of instances of losing things like car keys, passports, money, receipts, and so on occur because our attentional systems are overloaded and they simply can’t keep track of everything. The average American owns thousands of times more possessions than the average hunter-gatherer. In a real biological sense, we have more things to keep track of than our brains were designed to handle. Even towering intellectuals such as Kant and Wordsworth complained of information excess and sheer mental exhaustion induced by too much sensory input or mental overload. This is no reason to lose hope, though! More than ever, effective external systems are available for organizing, categorizing, and keeping track of things. In the past, the only option was a string of human assistants. But now, in the age of automation, there are other options. The first part of this book is about the biology underlying the use of these external systems. The second and third parts show how we can all use them to better keep track of our lives, to be efficient, productive, happy, and less stressed in a wired world that is increasingly filled with distractions.

Productivity and efficiency depend on systems that help us organize through categorization. The drive to categorize developed in the prehistoric wiring of our brains, in specialized neural systems that create and maintain meaningful, coherent amalgamations of things—foods, animals, tools, tribe members—in coherent categories. Fundamentally, categorization reduces mental effort and streamlines the flow of information. We are not the first generation of humans to be complaining about too much information.

Information Overload, Then and Now

Humans have been around for 200,000 years. For the first 99% of our history, we didn’t do much of anything but procreate and survive. This was largely due to harsh global climatic conditions, which stabilized sometime around 10,000 years ago. People soon thereafter discovered farming and irrigation, and they gave up their nomadic lifestyle in order to cultivate and tend stable crops. But not all farm plots are the same; regional variations in sunshine, soil, and other conditions meant that one farmer might grow particularly good onions while another grew especially good apples. This eventually led to specialization; instead of growing all the crops for his own family, a farmer might grow only what he was best at and trade some of it for things he wasn’t growing. Because each farmer was producing only one crop, and more than he needed, marketplaces and trading emerged and grew, and with them came the establishment of cities.

The Sumerian city of Uruk (~5000 BCE) was one of the world’s earliest large cities. Its active commercial trade created an unprecedented volume of business transactions, and Sumerian merchants required an accounting system for keeping track of the day’s inventory and receipts; this was the birth of writing. Here, liberal arts majors may need to set their romantic notions aside. The first forms of writing emerged not for art, literature, or love, not for spiritual or liturgical purposes, but for business—all literature could be said to originate from sales receipts (sorry). With the growth of trade, cities, and writing, people soon discovered architecture, government, and the other refinements of being that collectively add up to what we think of as civilization.

The appearance of writing some 5,000 years ago was not met with unbridled enthusiasm; many contemporaries saw it as technology gone too far, a demonic invention that would rot the mind and needed to be stopped. Then, as now, printed words were promiscuous—it was impossible to control where they went or who would receive them, and they could circulate easily without the author’s knowledge or control. Lacking the opportunity to hear information directly from a speaker’s mouth, the antiwriting contingent complained that it would be impossible to verify the accuracy of the writer’s claims, or to ask follow-up questions. Plato was among those who voiced these fears; his King Thamus decried that the dependence on written words would “weaken men’s characters and create forgetfulness in their souls.” Such externalization of facts and stories meant people would no longer need to mentally retain large quantities of information themselves and would come to rely on stories and facts as conveyed, in written form, by others. Thamus, king of Egypt, argued that the written word would infect the Egyptian people with fake knowledge. The Greek poet Callimachus said books are “a great evil.” The Roman philosopher Seneca the Younger (tutor to Nero) complained that his peers were wasting time and money accumulating too many books, admonishing that “the abundance of books is a distraction.” Instead, Seneca recommended focusing on a limited number of good books, to be read thoroughly and repeatedly. Too much information could be harmful to your mental health.

The printing press was introduced in the mid-1400s, allowing for the more rapid proliferation of writing, replacing laborious (and error-prone) hand copying. Yet again, many complained that intellectual life as we knew it was done for. Erasmus, in 1525, went on a tirade against the “swarms of new books,” which he considered a serious impediment to learning. He blamed printers whose profit motive sought to fill the world with books that were “foolish, ignorant, malignant, libelous, mad, impious and subversive.” Leibniz complained about “that horrible mass of books that keeps on growing” and that would ultimately end in nothing less than a “return to barbarism.” Descartes famously recommended ignoring the accumulated stock of texts and instead relying on one’s own observations. Presaging what many say today, Descartes complained that “even if all knowledge could be found in books, where it is mixed in with so many useless things and confusingly heaped in such large volumes, it would take longer to read those books than we have to live in this life and more effort to select the useful things than to find them oneself.”

A steady flow of complaints about the proliferation of books reverberated into the late 1600s. Intellectuals warned that people would stop talking to each other, burying themselves in books, polluting their minds with useless, fatuous ideas.

And as we well know, these warnings were raised again in our lifetime, first with the invention of television, then with computers, iPods, iPads, e-mail, Twitter, and Facebook. Each was decried as an addiction, an unnecessary distraction, a sign of weak character, feeding an inability to engage with real people and the real-time exchange of ideas. Even the dial phone was met with opposition when it replaced operator-assisted calls, and people worried: How will I remember all those phone numbers? How will I sort through and keep track of all of them? (As David Byrne sang with Talking Heads, “Same as it ever was.”)

With the Industrial Revolution and the rise of science, new discoveries grew at an enormous clip. For example, in 1550, there were 500 known plant species in the world. By 1623, this number had increased to 6,000. Today, we know 9,000 species of grasses alone, 2,700 types of palm trees, 500,000 different plant species. And the numbers keep growing. The increase of scientific information alone is staggering. Just three hundred years ago, someone with a college degree in “science” knew about as much as any expert of the day. Today, someone with a PhD in biology can’t even know all that is known about the nervous system of the squid! Google Scholar reports 30,000 research articles on that topic, with the number increasing exponentially. By the time you read this, the number will have increased by at least 3,000. The amount of scientific information we’ve discovered in the last twenty years is more than all the discoveries up to that point, from the beginning of language. Five exabytes (5 × 10¹⁸) of new data were produced in January 2012 alone—that’s 50,000 times the number of words in the entire Library of Congress.

This information explosion is taxing all of us, every day, as we struggle to come to grips with what we really need to know and what we don’t. We take notes, make To Do lists, leave reminders for ourselves in e-mail and on cell phones, and we still end up feeling overwhelmed.

A large part of this feeling of being overwhelmed can be traced back to our evolutionarily outdated attentional system. I mentioned earlier the two principles of the attentional filter: change and importance. There is a third principle of attention—not specific to the attentional filter—that is relevant now more than ever. It has to do with the difficulty of attentional switching. We can state the principle this way: Switching attention comes with a high cost.

Our brains evolved to focus on one thing at a time. This enabled our ancestors to hunt animals, to create and fashion tools, to protect their clan from predators and invading neighbors. The attentional filter evolved to help us to stay on task, letting through only information that was important enough to deserve disrupting our train of thought. But a funny thing happened on the way to the twenty-first century: The plethora of information and the technologies that serve it changed the way we use our brains. Multitasking is the enemy of a focused attentional system. Increasingly, we demand that our attentional system try to focus on several things at once, something that it was not evolved to do. We talk on the phone while we’re driving, listening to the radio, looking for a parking place, planning our mom’s birthday party, trying to avoid the road construction signs, and thinking about what’s for lunch. We can’t truly think about or attend to all these things at once, so our brains flit from one to the other, each time with a neurobiological switching cost. The system does not function well that way. Once on a task, our brains function best if we stick to that task.

To pay attention to one thing means that we don’t pay attention to something else. Attention is a limited-capacity resource. When you focused on the white T-shirts in the basketball video, you filtered out the black T-shirts and, in fact, most other things that were black, including the gorilla. When we focus on a conversation we’re having, we tune out other conversations. When we’re just walking in the front door, thinking about who might be on the other end of that ringing telephone line, we’re not thinking about where we put our car keys.

Attention is created by networks of neurons in the prefrontal cortex (just behind your forehead) that are sensitive only to dopamine. When dopamine is released, it unlocks them, like a key in your front door, and they start firing tiny electrical impulses that stimulate other neurons in their network. But what causes that initial release of dopamine? Typically, one of two different triggers:

- Something can grab your attention automatically, usually something that is salient to your survival, with evolutionary origins. This vigilance system incorporating the attentional filter is always at work, even when you’re asleep, monitoring the environment for important events. This can be a loud sound or bright light (the startle reflex), something moving quickly (that might indicate a predator), a beverage when you’re thirsty, or an attractively shaped potential sexual partner.

- You effectively will yourself to focus only on that which is relevant to a search or scan of the environment. This deliberate filtering has been shown in the laboratory to actually change the sensitivity of neurons in the brain. If you’re trying to find your lost daughter at the state fair, your visual system reconfigures to look only for things of about her height, hair color, and body build, filtering everything else out. Simultaneously, your auditory system retunes itself to hear only frequencies in that band where her voice registers. You could call it the Where’s Waldo? filtering network.

In the Where’s Waldo? children’s books, a boy named Waldo wears a red-and-white horizontally striped shirt, and he’s typically placed in a crowded picture with many people and objects drawn in many colors. In the version for young children, Waldo might be the only red thing in the picture; the young child’s attentional filter can quickly scan the picture and land on the red object—Waldo. Waldo puzzles for older age groups become increasingly difficult—the distractors are solid red and solid white T-shirts, or shirts with stripes in different colors, or red-and-white vertical stripes rather than horizontal.

Where’s Waldo? puzzles exploit the neuroarchitecture of the primate visual system. Inside the occipital lobe, a region called the visual cortex contains populations of neurons that respond only to certain colors: one population fires an electrical signal in response to red objects, another to green, and so on. A separate population of neurons is sensitive to horizontal stripes as distinct from vertical stripes, and among these horizontal-stripe neurons, some are maximally responsive to wide stripes and some to narrow stripes.

If only you could send instructions to these different neuron populations, telling some of them when you need them to stand up straight and do your bidding, while telling the others to sit back and relax. Well, you can—this is what we do when we try to find Waldo, search for a missing scarf or wallet, or watch the basketball video. We bring to mind a mental image of what we’re looking for, and neurons in the visual cortex help us to imagine in our mind’s eye what the object looks like. If it has red in it, our red-sensitive neurons are involved in the imagining. They then automatically tune themselves, and inhibit other neurons (the ones for the colors you’re not interested in) to facilitate the search. Where’s Waldo? trains children to set and exercise their visual attentional filters to locate increasingly subtle cues in the environment, much as our ancestors might have trained their children to track animals through the forest, starting with easy-to-see and easy-to-differentiate animals and working up to camouflaging animals that are more difficult to pick out from the surrounding environment. The system also works for auditory filtering—if we are expecting a particular pitch or timbre in a sound, our auditory neurons become selectively tuned to those characteristics.

When we willfully retune sensory neurons in this way, our brains engage in top-down processing, originating in a higher, more advanced part of the brain than sensory processing.

It is this top-down system that allows experts to excel in their domains. It allows quarterbacks to see their open receivers and not be distracted by other players on the field. It allows sonar operators to maintain vigilance and to easily (with suitable training) distinguish an enemy submarine from a freighter ship or a whale, just by the sound of the ping. It’s what allows conductors to listen to just one instrument at a time when sixty are playing. It’s what allows you to pay attention to this book even though there are probably distractions around you right now: the sound of a fan, traffic, birds singing outdoors, distant conversations, not to mention the visual distractions in the periphery, outside the central visual focus of where you’re holding your book or screen.

If we have such an effective attentional filter, why can’t we filter out distractions better than we can? Why is information overload such a serious problem now?

For one thing, we’re doing more work than ever before. The promise of a computerized society, we were told, was that it would relegate to machines all of the repetitive drudgery of work, allowing us humans to pursue loftier purposes and to have more leisure time. It didn’t work out this way. Instead of more time, most of us have less. Companies large and small have off-loaded work onto the backs of consumers. Things that used to be done for us, as part of the value-added service of working with a company, we are now expected to do ourselves. With air travel, we’re now expected to complete our own reservations and check-in, jobs that used to be done by airline employees or travel agents. At the grocery store, we’re expected to bag our own groceries and, in some supermarkets, to scan our own purchases. We pump our own gas at filling stations. Telephone operators used to look up numbers for us. Some companies no longer send out bills for their services—we’re expected to log in to their website, access our account, retrieve our bill, and initiate an electronic payment; in effect, do the job of the company for them. Collectively, this is known as shadow work—it represents a kind of parallel, shadow economy in which a lot of the service we expect from companies has been transferred to the customer. Each of us is doing the work of others and not getting paid for it. It is responsible for taking away a great deal of the leisure time we thought we would all have in the twenty-first century.

Beyond doing more work, we are dealing with more changes in information technology than our parents did, and more as adults than we did as children. The average American replaces her cell phone every two years, and that often means learning new software, new buttons, new menus. We change our computer operating systems every three years, and that requires learning new icons and procedures, and learning new locations for old menu items.

But overall, as Dennis Overbye put it, “from traffic jams in Singapore to the weather on Mars,” we are just getting so much more information shot at us. The global economy means we are exposed to large amounts of information that our grandparents weren’t. We hear about revolutions and economic problems in countries halfway around the world right as they’re happening; we see images of places we’ve never visited and hear languages spoken that we’ve never heard before. Our brains are hungrily soaking all this in because that is what they’re designed to do, but at the same time, all this stuff is competing for neuroattentional resources with the things we need to know to live our lives.

Emerging evidence suggests that embracing new ideas and learning is helping us to live longer and can stave off Alzheimer’s disease—apart from the advantages traditionally associated with expanding one’s knowledge. So it’s not that we need to take in less information but that we need to have systems for organizing it.

Information has always been the key resource in our lives. It has allowed us to improve society, medical care, and decision-making, to enjoy personal and economic growth, and to better choose our elected officials. It is also a fairly costly resource to acquire and handle. As knowledge becomes more available—and decentralized through the Internet—the notions of accuracy and authoritativeness have become clouded. Conflicting viewpoints are more readily available than ever, and in many cases they are disseminated by people who have no regard for facts or truth. Many of us find we don’t know whom to believe, what is true, what has been modified, and what has been vetted. We don’t have the time or expertise to do research on every little decision. Instead, we rely on trusted authorities, newspapers, radio, TV, books, sometimes your brother-in-law, the neighbor with the perfect lawn, the cab driver who dropped you at the airport, your memory of a similar experience. . . . Sometimes these authorities are worthy of our trust, sometimes not.

My teacher, the Stanford cognitive psychologist Amos Tversky, encapsulates this in “the Volvo story.” A colleague was shopping for a new car and had done a great deal of research. Consumer Reports showed through independent tests that Volvos were among the best built and most reliable cars in their class. Customer satisfaction surveys showed that Volvo owners were far happier with their purchase after several years. The surveys were based on tens of thousands of customers. The sheer number of people polled meant that any anomaly—like a specific vehicle that was either exceptionally good or exceptionally bad—would be drowned out by all the other reports. In other words, a survey such as this has statistical and scientific legitimacy and should be weighted accordingly when one makes a decision. It represents a stable summary of the average experience, and the most likely best guess as to what your own experience will be (if you’ve got nothing else to go on, your best guess is that your experience will be most like the average).

Amos ran into his colleague at a party and asked him how his automobile purchase was going. The colleague had decided against the Volvo in favor of a different, lower-rated car. Amos asked him what made him change his mind after all that research pointed to the Volvo. Was it that he didn’t like the price? The color options? The styling? No, it was none of those reasons, the colleague said. Instead, the colleague said, he found out that his brother-in-law had owned a Volvo and that it was always in the shop.

From a strictly logical point of view, the colleague is being irrational. The brother-in-law’s bad Volvo experience is a single data point swamped by tens of thousands of good experiences—it’s an unusual outlier. But we are social creatures. We are easily swayed by first-person stories and vivid accounts of a single experience. Although this is statistically wrong and we should learn to overcome the bias, most of us don’t. Advertisers know this, and this is why we see so many first-person testimonial advertisements on TV. “I lost twenty pounds in two weeks by eating this new yogurt—and it was delicious, too!” Or “I had a headache that wouldn’t go away. I was barking at the dog and snapping at my loved ones. Then I took this new medication and I was back to my normal self.” Our brains focus on vivid, social accounts more than dry, boring, statistical accounts.

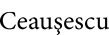

We make a number of reasoning errors due to cognitive biases. Many of us are familiar with illusions such as these:

In Roger Shepard’s version of the famous “Ponzo illusion,” the monster at the top seems larger than the one at the bottom, but a ruler will show that they’re the same size. In the Ebbinghaus illusion below it, the white circle on the left seems larger than the white circle on the right, but they’re the same size. We say that our eyes are playing tricks on us, but in fact, our eyes aren’t playing tricks on us, our brain is. The visual system uses heuristics or shortcuts to piece together an understanding of the world, and it sometimes gets things wrong.

By analogy to visual illusions, we are prone to cognitive illusions when we try to make decisions, and our brains take decision-making shortcuts. These are more likely to occur when we are faced with the kinds of Big Data that have become today’s norm. We can learn to overcome them, but until we do, they profoundly affect what we pay attention to and how we process information.

The Prehistory of Mental Categorization

Cognitive psychology is the scientific study of how humans (and animals and, in some cases, computers) process information. Traditionally, cognitive psychologists have made a distinction among different areas of study: memory, attention, categorization, language acquisition and use, decision-making, and one or two other topics. Many believe that attention and memory are closely related, that you can’t remember things that you didn’t pay attention to in the first place. There has been relatively less attention paid to the important interrelationship among categorization, attention, and memory.

The act of categorizing helps us to organize the physical world-out-there but also organizes the mental world, the world-in-here, in our heads and thus what we can pay attention to and remember.

As an illustration of how fundamental categorization is, consider what life would be like if we failed to put things into categories. When we stared at a plate of black beans, each bean would be entirely unrelated to the others, not interchangeable, not of the same “kind.” The idea that one bean is as good as any other for eating would not be obvious. When you went out to mow the lawn, the different blades of grass would be overwhelmingly distinct, not seen as part of a collective. Now, in these two cases, there are perceptual similarities from one bean to another and from one blade of grass to another. Your perceptual system can help you to create categories based on appearances. But we often categorize based on conceptual similarities rather than perceptual ones. If the phone rings in the kitchen and you need to take a message, you might walk over to the junk drawer and grab the first thing that looks like it will write. Even though you know that pens, pencils, and crayons are distinct and belong to different categories, for the moment they are functionally equivalent, members of a category of “things I can write on paper with.” You might find only lipstick and decide to use that. So it’s not your perceptual system grouping them together, but your cognitive system. Junk drawers reveal a great deal about category formation, and they serve an important and useful purpose by functioning as an escape valve when we encounter objects that just don’t fit neatly anywhere else.

Our early ancestors did not have many personal possessions—an animal skin for clothing, a container for water, a sack for collecting fruit. In effect the entire natural world was their home. Keeping track of all the variety and variability of that natural world was essential, and also a daunting mental task. How did our ancestors make sense of the natural world? What kinds of distinctions were fundamental to them?

Because events during prehistory, by definition, left no historical record, we have to rely on indirect sources of evidence to answer these questions. One such source is contemporary preliterate hunter-gatherers who are cut off from industrial civilization. We can’t know for sure, but our best guess is that they are living life very much as our own hunter-gatherer ancestors did. Researchers observe how they live, and interview them to find out what they know about how their own ancestors lived, through family histories and oral traditions. Languages are a related source of evidence. The “lexical hypothesis” assumes that the most important things humans need to talk about eventually become encoded in language.

One of the most important things that language does for us is help us make distinctions. When we call something edible, we distinguish it from—implicitly, automatically—all other things that are inedible. When we call something a fruit, we necessarily distinguish it from vegetables, meat, dairy, and so on. Even children intuitively understand the nature of words as restrictive. A child asking for a glass of water may complain, “I don’t want bathroom water, I want kitchen water.” The little munchkins are making subtle discriminations of the physical world, and exercising their categorization systems.

Early humans organized their minds and thoughts around basic distinctions that we still make and find useful. One of the earliest distinctions made was between now and not-now; these things are happening in the moment, these other things happened in the past and are now in my memory. No other species makes this self-conscious distinction among past, present, and future. No other species lives with regret over past events, or makes deliberate plans for future ones. Of course many species respond to time by building nests, flying south, hibernating, mating—but these are preprogrammed, instinctive behaviors and these actions are not the result of conscious decision, meditation, or planning.

Simultaneous with an understanding of now versus before is one of object permanence: Something may not be in my immediate view, but that does not mean it has ceased to exist. Human infants between four and nine months show object permanence, proving that this cognitive operation is innate. Our brains represent objects that are here-and-now as the information comes in from our sensory receptors. For example, we see a deer and we know through our eyes (and, downstream, a host of native, inborn cognitive modules) that the deer is standing right before us. When the deer is gone, we can remember its image and represent it in our mind’s eye, or even represent it externally by drawing or painting or sculpting it.

This human capacity to distinguish the here-and-now from the here-and-not-now showed up at least 50,000 years ago in cave paintings. These constitute the first evidence of any species on earth being able to explicitly represent the distinction between what is here and what was here. In other words, those early cave-dwelling Picassos, through the very act of painting, were making a distinction about time and place and objects, an advanced cognitive operation we now call mental representation. And what they were demonstrating was an articulated sense of time: There was a deer out there (not here on the cave wall of course). He is not there now, but he was there before. Now and before are different; here (the cave wall) is merely representing there (the meadow in front of the cave). This prehistoric step in the organization of our minds mattered a great deal.

In making such distinctions, we are implicitly forming categories, something that is often overlooked. Category formation runs deep in the animal kingdom. Birds building a nest have an implicit category for materials that will create a good nest, including twigs, cotton, leaves, fabric, and mud, but not, say, nails, bits of wire, melon rinds, or shards of glass. The formation of categories in humans is guided by a cognitive principle of wanting to encode as much information as possible with the least possible effort. Categorization systems optimize the ease of conception and the importance of being able to communicate about those systems.

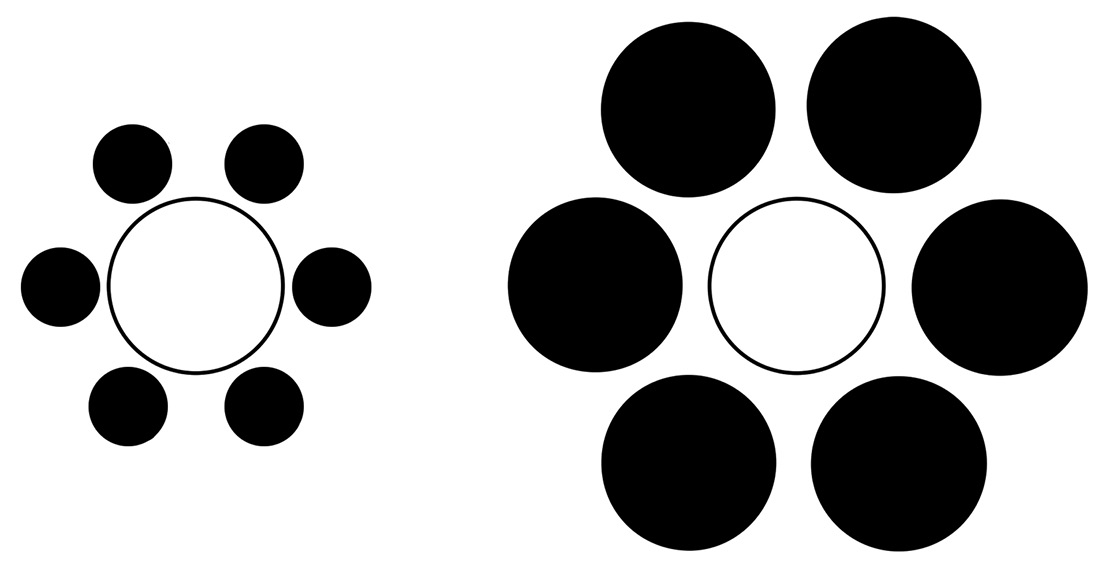

Categorization permeates social life as well. Across the 6,000 languages known to be spoken on the planet today, every culture marks, through language, who is linked to whom as “family.” Kinship terms allow us to reduce an enormous set of possible relations into a more manageable, smaller set, a usable category. Kinship structure allows us to encode as much relevant information as possible with the least cognitive effort.

All languages encode the same set of core (biological) relations: mother, father, daughter, son, sister, brother, grandmother, grandfather, granddaughter, and grandson. From there, languages differ. In English, your mother’s brother and your father’s brother are both called uncles. The husbands of your mother’s sister and of your father’s sister are also called uncles. This is not true in many languages where “uncledom” follows only by marriage on the father’s side (in patrilineal cultures) or only on the mother’s side (in matrilineal cultures), and can spread over two or more generations. Another point in common is that all languages have a large collective category for relatives who are considered in that culture to be somewhat distant from you—similar to our English term cousin. Although theoretically, many billions of kinship systems are possible, research has shown that actual systems in existence in disparate parts of the world have formed to minimize complexity and maximize ease of communication.

Kinship categories tell us biologically adaptive things, things that improve the likelihood that we have healthy children, such as whom we can and cannot marry. They also are windows into the culture of a group, their attitudes about responsibility; they reveal pacts of mutual caring, and they carry norms such as where a young married couple will live. Here is a list, for example, that anthropologists use for just this purpose:

- Patrilocal: the couple lives with or near groom’s kin

- Matrilocal: the couple lives with or near bride’s kin

- Ambilocal: married couple can choose to live with or near kin of either groom or bride

- Neolocal: couple moves to a new household in a new location

- Natolocal: husband and wife remain with their own natal kin and do not live together

- Avunculocal: couple moves to or near residence of the groom’s mother’s brother(s) (or other uncles, by definition, depending on culture)

The two dominant models of kinship behavior in North America today are neolocal and ambilocal: Young married couples typically get their own residence, and they can choose to live wherever they want, even many hundreds or thousands of miles away from their respective parents; however, many choose to live either with or very near the family of the husband or wife. This latter, ambilocal choice offers important emotional (and sometimes financial) support, secondary child care, and a built-in network of friends and relatives to help the young couple get started in life. According to one study, couples (especially low-income ones) who stay near the kin of one or both partners fare better in their marriages and in child rearing.

Kinship beyond the core relations of son-daughter and mother-father might seem to be entirely arbitrary, merely a human invention. But it shows up in a number of animal species and we can quantify the relations in genetic terms to show their importance. From a strictly evolutionary standpoint, your job is to propagate as many of your genes as possible. You share 50% of your genes with your mother and father or with any offspring. You also share 50% with your siblings (unless you’re a twin). If your sister has children, you will share 25% of your genes with them. If you don’t have any children of your own, your best strategy for propagating your genes is to help care for your sister’s children, your nieces and nephews.

Your direct cousins—the offspring of an aunt or uncle—share 12.5% of your genes. If you don’t have nephews and nieces, any care you put into cousins helps to pass on part of the genetic material that is you. Richard Dawkins and others have thus made cogent arguments to counter the claim of religious fundamentalists and social conservatives that homosexuality is “an abomination” that goes against nature. A gay man or lesbian who helps in the raising and care of a family member’s child is able to devote considerable time and financial resources to propagating the family’s genes. This has no doubt been true throughout history. A natural consequence of this chart is that first cousins who have children together increase the number of genes they pass on. In fact, many cultures promote marriage between first cousins as a way to increase family unity, retain familial wealth, or to ensure similar cultural and religious views within the union.

The caring for one’s nephews and nieces is not limited to humans. Mole rats will care for nieces and nephews but not for unrelated young, and Japanese quails show a clear preference for mating with first cousins, a way to increase the amount of their own genetic material that gets passed on. The offspring of first cousins will have 56.25% of their DNA in common with each parent rather than 50%; that is, the “family” genes have an edge of 6.25 percentage points in the offspring of first cousins over the offspring of unrelated individuals.
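The percentages quoted in this discussion follow from simple multiplication, and a short sketch can make the arithmetic explicit. The Python below is an illustration, not something drawn from the research itself: the helper names (`chain`, `parent_child_if_parents_related`, `as_percent`) are invented here, and it assumes the simplified model used in the text, in which a parent and child share one half of their genes and so do full siblings.

```python
from fractions import Fraction

# Simplified shares of genes in common, as quoted in the text.
PARENT_CHILD = Fraction(1, 2)
FULL_SIBLING = Fraction(1, 2)


def chain(*links):
    """Multiply the shared fraction along a chain of family links."""
    shared = Fraction(1)
    for link in links:
        shared *= link
    return shared


def parent_child_if_parents_related(r_parents):
    """Share of a child's genes in common with one parent when the two
    parents are themselves related by r_parents: one half is inherited
    directly, and the half that comes from the other parent overlaps
    with the first parent by r_parents."""
    return Fraction(1, 2) + Fraction(1, 2) * r_parents


def as_percent(share):
    """Format a fraction as a percentage string."""
    return f"{float(share * 100):g}%"


# You -> your sibling -> your sibling's child.
niece_or_nephew = chain(FULL_SIBLING, PARENT_CHILD)

# You -> your parent -> your parent's sibling -> that sibling's child.
first_cousin = chain(PARENT_CHILD, FULL_SIBLING, PARENT_CHILD)

# A child whose parents are first cousins, compared with either parent.
child_of_first_cousins = parent_child_if_parents_related(first_cousin)

print(as_percent(niece_or_nephew))         # 25%
print(as_percent(first_cousin))            # 12.5%
print(as_percent(child_of_first_cousins))  # 56.25%
```

The last figure is where the 6.25-point edge comes from: half of the 12.5% that first cousins share is added to the usual 50%.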

Classifications such as kinship categories aid in the organization, encoding, and communication of complex knowledge. And the classifications have their roots in animal behavior, so they can be said to be precognitive. What humans did was to make these distinctions linguistic and thus explicitly communicable information.

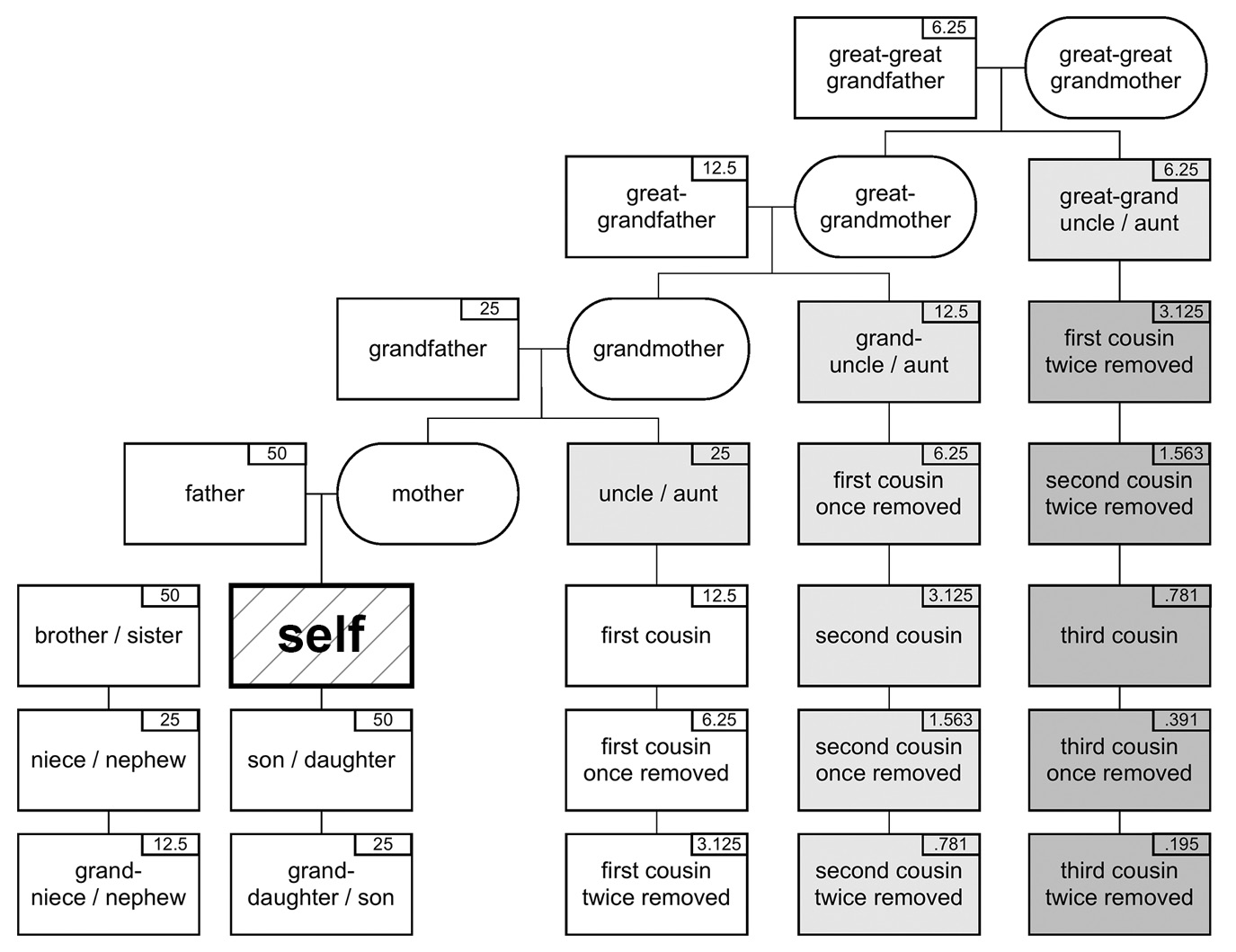

How did early humans divide up and categorize the plant and animal kingdoms? The data are based on the lexical hypothesis, that the distinctions most important to a culture become encoded in that culture’s language. With increasing cognitive and categorizational complexity comes increased complexity in linguistic terms, and these terms serve to encode important distinctions. The work of sociobiologists, anthropologists, and linguists has uncovered patterns in naming plants and animals across cultures and across time. One of the first distinctions that early humans made was between humans and nonhumans, which makes sense. Finer distinctions crept into languages gradually and systematically. From the study of thousands of different languages, we know that if a language has only two nouns (naming words) for living things, it makes a distinction between human and nonhuman. As the language and culture develop, additional terms come into use. The next distinction added is for things that fly, swim, or crawl, roughly the equivalents of bird, fish, and snake. Generally speaking, two or three of these terms come into use at once. Thus, it’s unlikely that a language would have only three words for life-forms, but if it has four, they will be human, nonhuman, and two of bird, fish, and snake. Which two of those nouns get added depends, as you might imagine, on the environment where the speakers live, and on which critters they are most likely to encounter. A language that already has four such nouns next adds the missing one of these three. A language with five such terms next adds either a general term for mammal or a term for smaller crawling things, combining into one category what we in English call worms and bugs. Because so many preliterate languages combine worms and bugs into a single category, ethnobiologists have made up a name for that category: wugs.
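One way to make this order of emergence concrete is to treat it as a rule that a vocabulary either respects or violates. The sketch below is a simplification with invented names (`STAGES`, `respects_order`). It assumes, as the passage suggests, that the human/nonhuman pair comes first, that bird, fish, and snake are all present before mammal or wug appears, and that any other term is simply ignored.

```python
# Stages of life-form terms, in the order the passage describes.
STAGES = [
    {"human", "nonhuman"},
    {"bird", "fish", "snake"},
    {"mammal", "wug"},
]


def respects_order(terms):
    """Return True if no term from a later stage appears while an
    earlier stage is still incomplete. Terms outside STAGES are ignored."""
    terms = set(terms)
    for index, stage in enumerate(STAGES):
        if not stage <= terms:
            # This stage is incomplete, so later-stage terms are not expected.
            later_terms = set().union(*STAGES[index + 1:])
            return not (terms & later_terms)
    return True


print(respects_order({"human", "nonhuman", "bird", "fish"}))  # True
print(respects_order({"human", "nonhuman", "mammal"}))        # False
```

The second vocabulary fails because it names mammals before it has bird, fish, and snake, which is the pattern the cross-language studies report as unlikely.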

Most languages have a single folksy word for creepy-crawly things, and English is no exception. Our own term bugs is an informal and heterogeneous category combining ants, beetles, flies, spiders, aphids, caterpillars, grasshoppers, ticks, and a large number of living things that are biologically and taxonomically quite distinct. The fact that we still do this today, with all our advanced scientific knowledge, underscores the utility and innateness of functional categories. “Bug” promotes cognitive economy by combining into a single category things that most of the time we don’t need to think about in great detail, apart from keeping them out of our food or from crawling on our skin. It is not the biology of these organisms that unites them, but their function in our lives—or our goal of trying to keep them on the outside of our bodies and not the inside.

The category names used by preliterate, tribal-based societies are similarly in contradiction to our modern scientific categories. In many languages, the word bird includes bats; fish can include whales, dolphins, and turtles; snake sometimes includes worms, lizards, and eels.

After these seven basic nouns, societies add other terms to their language in a less systematic fashion. Along the way, there are some societies that add an idiosyncratic term for a specific species that has great social, religious, or practical meaning. A language might have a single term for eagle in addition to its general term bird without having any other named birds. Or it might single out among the mammals a single term for bear.

A universal order of emergence for linguistic terms shows up in the plant world as well. Relatively undeveloped languages have no single word for plants. The lack of a term doesn’t mean they don’t perceive differences, and it doesn’t mean they don’t know the difference between spinach and skunk weed; they just lack an all-encompassing term with which to refer to plants. We see cases like this in our own language. For example, English lacks a single basic term to refer to edible mushrooms. We also lack a term for all the people you would have to notify if you were going into the hospital for three weeks. These might include close relatives, friends, your employer, the newspaper delivery person, and anyone you had appointments with during that period. The lack of a term doesn’t mean you don’t understand the concept; it simply means that the category isn’t reflected in our language. This could be because a need for it hasn’t been so pressing that a word needed to be coined.

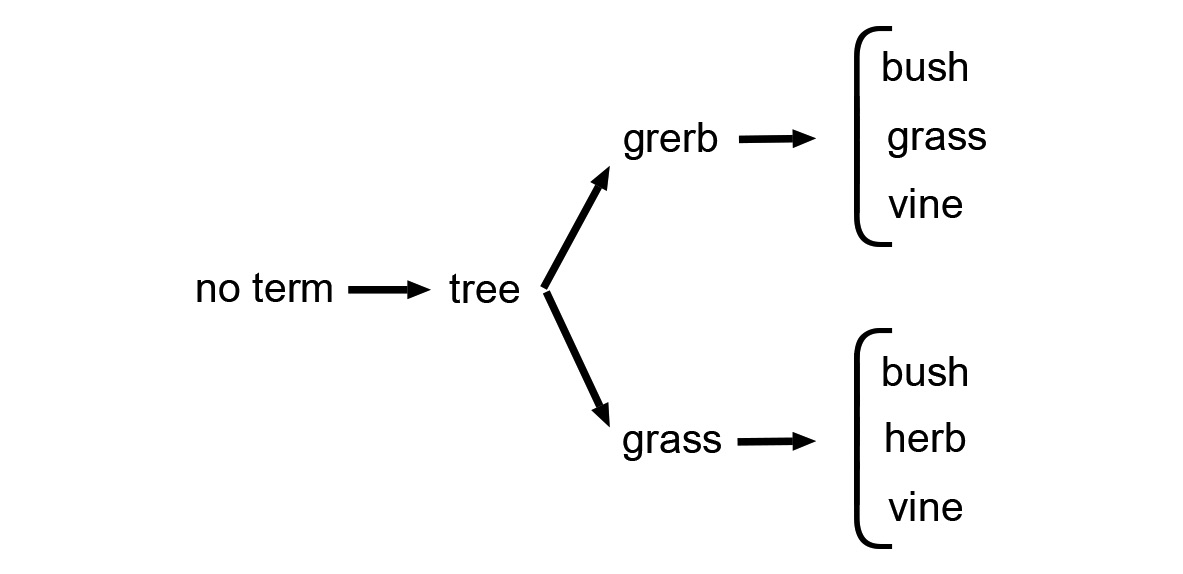

If a language has only a single term for nonanimal living things, it is not the all-encompassing word plant that we have in English. Rather, it is a single word that maps to tall, woody growing things—what we call trees. When a language introduces a second term, it is either a catchall term for grasses and herbs—which researchers call grerb—or it is the general term for grass and grassy-like things. When a language grows to add a third term for plants and it already has grerb, the third, fourth, and fifth terms are bush, grass, and vine (not necessarily in that order; it depends on the environment). If the language already has grass, the third, fourth, and fifth terms added are bush, herb, and vine.

Grass is an interesting category because most of the members of the category are unnamed by most speakers of English. We can name dozens of vegetables and trees, but most of us just say “grass” to encompass the more than 9,000 different species. This is similar to the case with the term bug—most of the members of the category remain unnamed by most English speakers.

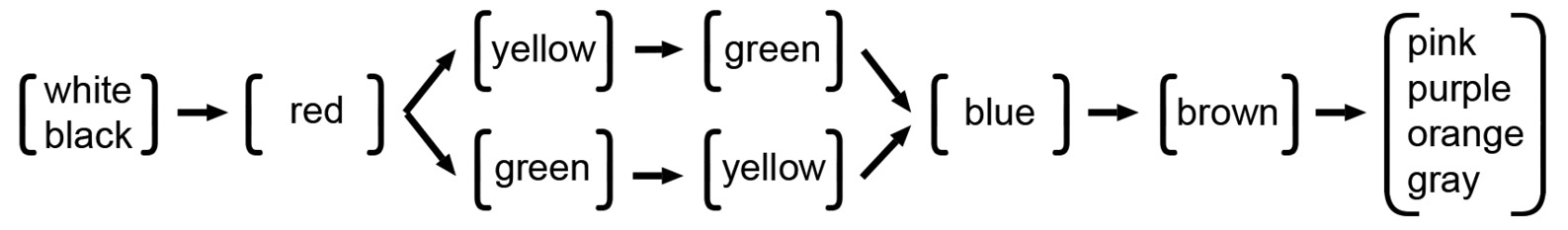

Orders of emergence in language exist for other concepts as well. Among the best known is the discovery by UC Berkeley anthropologists Brent Berlin and Paul Kay of a universal order of emergence for color terms. Many of the world’s preindustrial languages have only two terms for color, roughly dividing the world into light and dark colors. I’ve labeled them WHITE and BLACK in the figure, following the literature, but this doesn’t mean that speakers of these languages are literally naming only white and black. Rather, it means that half the colors they see get mapped to a single “light colors” term and half to a single “dark colors” term.

Now here’s the most interesting part: When a language advances and adds a third term to its lexicon for color, the third term is always red. Various theories have been proposed, the dominant one being that red is important because it is the color of blood. When a language adds a fourth term, it is either yellow or green. The fifth term is either green or yellow, and the sixth term is blue.

These categories are not just academic or of anthropological interest. They are critical to one of the basic pursuits of cognitive science: to understand how information is organized. And this need to understand is a hardwired, innate trait that we humans share because knowledge is useful to us. When our early human ancestors left the cover of living in trees and ventured out onto the open savanna in search of new sources of food, they made themselves more vulnerable to predators and to nuisances like rats and snakes. Those who were interested in acquiring knowledge—whose brains enjoyed learning new things—would have been at an advantage for survival, and so this love of learning would eventually become encoded in their genes through natural selection. As the anthropologist Clifford Geertz noted, there is little doubt that preliterate, tribal-based subsistence humans “are interested in all kinds of things of use neither to their schemes [n]or to their stomachs. . . . They are not classifying all those plants, distinguishing all those snakes, or sorting out all those bats out of some overwhelming cognitive passion rising out of innate structures at the bottom of the mind. . . . In an environment populated with conifers, or snakes, or leaf-eating bats, it is practical to know a good deal about conifers, snakes, or leaf-eating bats, whether or not what one knows is in any strict sense materially useful.”

An opposing view comes from the anthropologist Claude Lévi-Strauss, who felt that classifying the natural world meets an innate need, because the human brain has a strong cognitive propensity toward order. This preference for order over disorder can be traced back through millions of years of evolution. As mentioned in the Introduction, some birds and rodents create boundaries around their nests, typically out of rocks or leaves, that are ordered; if the order is disturbed, they know that an intruder has come by. I’ve had several dogs who wandered through the house periodically to collect their toys and put them in a basket. Humans’ desire for order was no doubt scaffolded on these ancient evolutionary systems.

The UC Berkeley cognitive psychologist Eleanor Rosch argued that human categorization is not the product of historical accident or arbitrary factors, but the result of psychological or innate principles of categorization. The views of Lévi-Strauss and Rosch suggest a disagreement with the dichotomy Geertz draws between cognitive passion and practical knowledge. My view is that the passion Geertz refers to is part of the practical benefit of knowledge—they are two sides of the same coin. It can be practical to know a great deal about the biological world, but the human brain has been configured—wired—to acquire this information and to want to acquire it. This innate passion for naming and categorizing can be brought into stark relief by the fact that most of the naming we do in the plant world might be considered strictly unnecessary. Out of 30,000 edible plants thought to exist on earth, just eleven account for 93% of all that humans eat: oats, corn, rice, wheat, potatoes, yuca (also called tapioca or cassava), sorghum, millet, beans, barley, and rye. Yet our brains evolved to receive a pleasant shot of dopamine when we learn something new and again when we can classify it systematically into an ordered structure.

In Pursuit of Excellent Categorization

We humans are hardwired to enjoy knowledge, in particular knowledge that comes through the senses. And we are hardwired to impose structure on this sensory knowledge, to turn it this way and that, to view it from different angles, and try to fit it into multiple neural frameworks. This is the essence of human learning.

We are hardwired to impose structure on the world. A further piece of evidence for the innateness of this structure is the extraordinary consistency of naming conventions for biological classification (plants and animals) across widely disparate cultures. All languages and cultures—independently—came up with naming principles so similar that they strongly suggest an innate predisposition toward classification. For example, every language contains primary and secondary plant and animal names. In English we have fir trees (in general) and Douglas fir (in particular). There are apples and then there are Granny Smiths, golden delicious, and pippins. There are salmon and then sockeye salmon, woodpeckers and acorn woodpeckers. We look at the world and can perceive that there exists a category that includes a set of things more alike than they are unalike, and yet we recognize minor variations. This extends to man-made artifacts as well. We have chairs and easy chairs, knives and hunting knives, shoes and dancing shoes. And here’s an interesting side note: Nearly every language also has some terms that mimic this structure linguistically but in fact don’t refer to the same types of things. For example, in English, silverfish is an insect, not a type of fish; prairie dog is a rodent, not a dog; and a toadstool is neither a toad nor a stool that a toad might sit on.

Our hunger for knowledge can be at the root of our failings or our successes. It can distract us or it can keep us engaged in a lifelong quest for deep learning and understanding. Some learning enhances our lives; some is irrelevant and simply distracts us—tabloid stories probably fall into this latter category (unless you write for a tabloid professionally). Successful people are expert at categorizing useful versus distracting knowledge. How do they do it?

Of course some have that string of assistants who enable them to be in the moment, and that in turn makes them more successful. Smartphones and digital files are helpful in organizing information, but categorizing the information in a way that is helpful—and that harnesses the way our brains are organized—still requires a lot of fine-grained categorization by a human, by us.

One thing HSPs do over and over every day is active sorting, what emergency room nurses call triage. Triage comes from the French word trier, meaning “to sort, sift, or classify.” You probably already do something like this without calling it active sorting. It simply means that you separate those things you need to deal with right now from those that you don’t. This conscious active sorting takes many different forms in our lives, and there is no one right way. The number of categories varies and the number of times a day will vary, too—maybe you don’t even need to do it every day. Nevertheless, one way or another, it is an essential part of being organized, efficient, and productive.

I worked for several years as the personal assistant to a successful businessman, Edmund W. Littlefield. He had been the CEO of Utah Construction (later Utah International), a company that built the Hoover Dam and many construction projects all over the world, including half the railroad tunnels and bridges west of the Mississippi. When I worked for him, he also served on the boards of directors of General Electric, Chrysler, Wells Fargo, Del Monte, and Hewlett-Packard. He was remarkable for his intellect, business acumen, and above all, his genuine modesty and humility. He was a generous mentor. We did not always agree on politics, but he was respectful of opposing views, and tried to keep such discussions based on facts rather than speculation. One of the first things he taught me to do as his assistant was to sort his mail into four piles:

- Things that need to be dealt with right away. This might include correspondence from his office or business associates, bills, legal documents, and the like. He subsequently performed a fine sort of things to be dealt with today versus in the next few days.

- Things that are important but can wait. We called this the abeyance pile. This might include investment reports that needed to be reviewed, articles he might want to read, reminders for periodic service on an automobile, invitations to parties or functions that were some time off in the future, and so on.

- Things that are not important and can wait, but should still be kept. This was mostly product catalogues, holiday cards, and magazines.

- Things to be thrown out.

Ed would periodically go through the items in all these categories and re-sort. Other people have finer-grained and coarser-grained systems. One HSP has a two-category system: things to keep and things to throw away. Another HSP extends the system from correspondence to everything that comes across her desk, either electronically (such as e-mails and PDFs) or as paper copies. To the Littlefield categories one could add subcategories for the different things you are working on, for hobbies, home maintenance, and so on.

Some of the material in these categories ends up in piles on one’s desk, some in folders in a filing cabinet or on a computer. Active sorting is a powerful way to prevent yourself from being distracted. It creates and fosters great efficiencies, not just practical efficiencies but intellectual ones. After you have prioritized and you start working, knowing that what you are doing is the most important thing for you to be doing at that moment is surprisingly powerful. Other things can wait—this is what you can focus on without worrying that you’re forgetting something.

There is a deep and simple reason why active sorting facilitates this. The most fundamental principle of the organized mind, the one most critical to keeping us from forgetting or losing things, is to shift the burden of organizing from our brains to the external world. If we can remove some or all of the process from our brains and put it out into the physical world, we are less likely to make mistakes. This is not because of the limited capacity of our brains—rather, it’s because of the nature of memory storage and retrieval in our brains: Memory processes can easily become distracted or confounded by other, similar items. Active sorting is just one of many ways of using the physical world to organize your mind. The information you need is in the physical pile there, not crowded in your head up here. Successful people have devised dozens of ways to do this: physical reminders in their homes, cars, and offices, and throughout their lives, that shift the burden of remembering from their brains to their environment. In a broad sense, these are related to what cognitive psychologists call Gibsonian affordances, after the researcher J. J. Gibson.

A Gibsonian affordance describes an object whose design features tell you something about how to use it. An example made famous by another cognitive psychologist, Don Norman, is a door. When you approach a door, how do you know whether it is going to open in or out, whether to push it or pull it? With doors you use frequently, you could try to remember, but most of us don’t. When subjects in an experiment were asked, “Does your bedroom door open in to the bedroom or out into the hall?” most couldn’t remember. But certain features of doors encode this information for us. They show us how to use them, so we don’t have to clutter up our brains by remembering information that could be more durably and efficiently kept in the external world.

As you reach for the handle of a door in your home, you can see whether the jamb will block you if you try to pull the door toward you. You are probably not consciously aware of it, but your brain is registering this and guiding your actions automatically—and this is much more cognitively efficient than your memorizing the flow pattern of every door you encounter. Businesses, office buildings, and other public facilities make it even more obvious because there are so many more people using them: Doors that are meant to be pushed open tend to have a flat plate and no handle on one side, or a push bar across the door. Doors that are meant to be pulled open have a handle. Even with the extra guidance, sometimes the unfamiliarity of the door, or the fact that you are on your way to a job interview or some other distracting appointment, will make you balk for a moment, not knowing whether to push or pull. But most of the time, your brain recognizes how the door works because of its affordance, and in or out you go.