O(1) is holy.

—Hamid Tizhoosh

Before learning how to implement algorithms, you should understand how to analyze the effectiveness of them. This chapter will focus on the concept of Big-O notation for time and algorithmic space complexity analysis. By the end of this chapter, you will understand how to analyze an implementation of an algorithm with respect to both time (execution time) and space (memory consumed).

Big-O Notation Primer

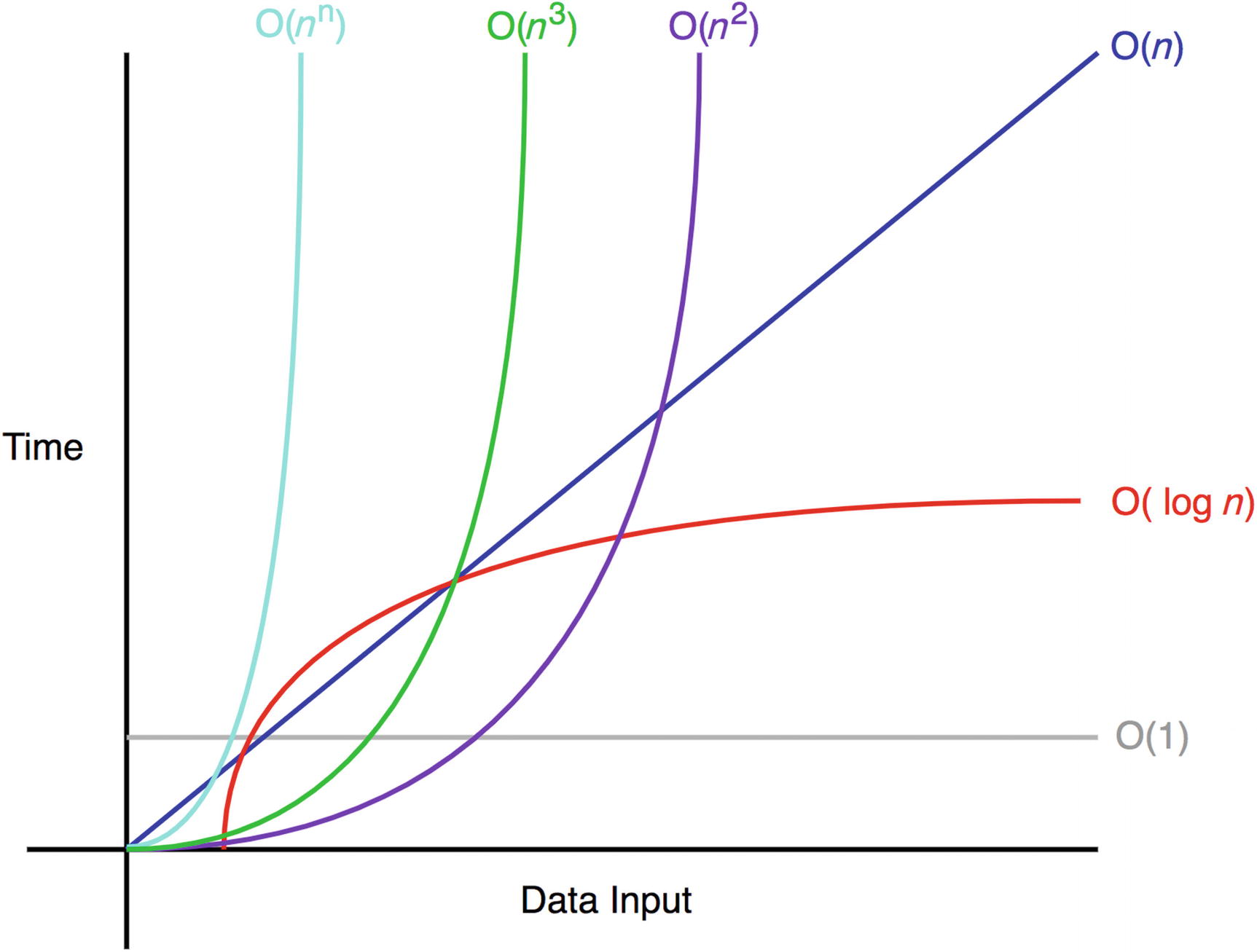

The Big-O notation measures the worst-case complexity of an algorithm. In Big-O notation, n represents the number of inputs. The question asked with Big-O is the following: “What will happen as n approaches infinity?”

Common Big-O complexities

The following sections illustrate these common time complexities with some simple examples.

Common Examples

O(1) does not change with respect to input space. Hence, O(1) is referred to as being constant time. An example of an O(1) algorithm is accessing an item in the array by its index. O(n) is linear time and applies to algorithms that must do n operations in the worst-case scenario.

Rules of Big-O Notation

Coefficient rule: If f(n) is O(g(n)), then kf(n) is O(g(n)), for any constant k > 0. The first rule is the coefficient rule, which eliminates coefficients not related to the input size, n. This is because as n approaches infinity, the other coefficient becomes negligible.

Sum rule: If f(n) is O(h(n)) and g(n) is O(p(n)), then f(n)+g(n) is O(h(n)+p(n)). The sum rule simply states that if a resultant time complexity is a sum of two different time complexities, the resultant Big-O notation is also the sum of two different Big-O notations.

Product rule: If f(n) is O(h(n)) and g(n) is O(p(n)), then f(n)g(n) is O(h(n)p(n)). Similarly, the product rule states that Big-O is multiplied when the time complexities are multiplied.

Transitive rule: If f(n) is O(g(n)) and g(n) is O(h(n)), then f(n) is O(h(n)). The transitive rule is a simple way to state that the same time complexity has the same Big-O.

Polynomial rule: If f(n) is a polynomial of degree k, then f(n) is O(nk). Intuitively, the polynomial rule states that polynomial time complexities have Big-O of the same polynomial degree.

Log of a power rule: log(nk) is O(log(n)) for any constant k > 0. With the log of a power rule, constants within a log function are also ignored in Big-O notation.

Special attention should be paid to the first three rules and the polynomial rule because they are the most commonly used. I’ll discuss each of those rules in the following sections.

Coefficient Rule: “Get Rid of Constants”

If f(n) is O(g(n)), then kf(n) is O(g(n)), for any constant k > 0.

This means that both 5f(n) and f(n) have the same Big-O notation of O(f(n)).

This block has f(n) = 5n. This is because it runs from 0 to 5n. However, the first two examples both have a Big-O notation of O(n). Simply put, this is because if n is close to infinity or another large number, those four additional operations are meaningless. It is going to perform it n times. Any constants are negligible in Big-O notation.

Lastly, this block of code has f(n) = n+1. There is +1 from the last operation (count+=3). This still has a Big-O notation of O(n). This is because that 1 operation is not dependent on the input n. As n approaches infinity, it will become negligible.

Sum Rule: “Add Big-Os Up”

If f(n) is O(h(n)) and g(n) is O(p(n)), then f(n)+g(n) is O(h(n)+p(n)).

It is important to remember to apply the coefficient rule after applying this rule.

In this example, line 4 has f(n) = n, and line 7 has f(n) = 5n. This results in 6n. However, when applying the coefficient rule, the final result is O(n) = n.

Product Rule: “Multiply Big-Os”

If f(n) is O(h(n)) and g(n) is O(p(n)), then f(n)g(n) is O(h(n)p(n)).

In this example, f(n) = 5n*n because line 7 runs 5n times for a total of n iterations. Therefore, this results in a total of 5n2 operations . Applying the coefficient rule, the result is that O(n)=n2.

Polynomial Rule: “Big-O to the Power of k”

The polynomial rule states that polynomial time complexities have a Big-O notation of the same polynomial degree.

If f(n) is a polynomial of degree k, then f(n) is O(nk).

In this example, f(n) = nˆ2 because line 4 runs n*n iterations.

This was a quick overview of the Big-O notation. There is more to come as you progress through the book.

Summary

Eliminating coefficients/constants (coefficient rule)

Adding up Big-Os (sum rule)

Multiplying Big-Os (product rule)

Determining the polynomial of the Big-O notation by looking at loops (polynomial rule)

Exercises

Calculate the time complexities for each of the exercise code snippets.

Exercise 1

Exercise 2

Exercise 3

Exercise 4

Exercise 5

Exercise 6

Answers

- 1.

O(n2)

There are two nested loops. Ignore the constants in front of n.

- 2.

O(n3)

There are four nested loops, but the last loop runs only until 10.

- 3.

O(1)

Constant complexity. The function runs from 0 to 1000. This does not depend on n.

- 4.

O(n)

Linear complexity. The function runs from 0 to 10n. Constants are ignored in Big-O.

- 5.

O(log2n)

Logarithmic complexity. For a given n, this will operate only log2n times because i is incremented by multiplying by 2 rather than adding 1 as in the other examples.

- 6.

O(∞)

Infinite loop. This function will not end.