More than a decade has passed since the pathbreaking book The Shock Doctrine (2007) was published. A supposed “letter” or message from the “frontlines” of neoliberalism, Naomi Klein’s tour de force provided a new vocabulary and, more importantly, a new tactical map, a topographical representation, or an image, if we will, of how contemporary forms of capitalism operate. Labelling it “disaster capitalism,” Klein took some thirty-odd years of history and discovered a pattern in the data. She showed how closely psychiatrists “shocking” and torturing patients in the 1950s resembled the torture and massacre of political dissidents and the violent reorganization of the economy in the name of “structural readjustment” throughout the 1970s globally.

Today, the doctrine of shock has never appeared more pertinent, particularly in the midst of the COVID-19 global pandemic. Now is the right time to reflect and ask what has or has not changed over the last fifty years since the 1970s that marked the start of post-Fordism and neoliberalism for many scholars. I want to extend Klein’s argument to take account of technical change and transformations in the nature of extractionism and economy.

In our current moment, market volatility, planetary-scale disease tracking, and human suffering appear to be indelibly and literally normatively connected. Saving the economy has been as important as saving human lives. While there have been pandemics in the past, never has the threat of disease and species-wide danger been so synchronically shared through media. Computational and digital technologies mark this event. We track curves, analytics, and numbers while also assuming big data will manage the coming plague. Automated platforms and social networks deliver our goods, mediate our work and friendships, and maintain what might have once been labelled the “social.” Artificial intelligence and machine learning are also being deployed to predict future disease curves and to rapidly discover, test, and simulate the protein structures and compounds that might serve as treatments or vaccinations. This turn to computation as salvation is unique, I argue, to our present, and it says much about our future imaginaries of life on this, and perhaps soon to be, other planets.

I want, therefore, to return to the site originally mapped as the first “experiment” in shock and economy—Chile. I return asking a new set of questions about how technology and life are currently governed, and, more importantly, how we are experimenting with the future of life through new forms of computational design and infrastructures. I label this next version, or perhaps test of the shock doctrine, the “smartness mandate,” and its marker is the penetration of computation and automation into every segment of human life. To sketch the contours of this new condition, this chapter speculatively examines the Atacama Desert in Chile as a topological site for envisioning and mapping technical futures.

In the course of this piece, I will survey a series of sites traversing scientific inquiry, energy and extraction, and calculative techniques. I want to emphasize that this is not a thorough or complete ethnography. I do not claim full knowledge of Chile or the Atacama; I am not an area specialist. Rather, I use this landscape to question our generally held beliefs in relation to AI. In contemplating our present, I will link the ALMA installation, an astronomical observatory that was part of the Event Horizon Telescope (EHT), with the lithium beds in the Salar de Atacama and the Center for Mathematical Modeling at the University of Chile, Santiago. This landscape will bridge data and matter; these sites are the producers of some of the largest nonproprietary data sets on Earth and providers of many of the materials that create the information age. In this essay, I will argue that collectively these sites form the landscape of a planetary testbed, a petri dish cultivating potential futures of life, politics, and technology on Earth and beyond.

Event Horizons

A point of no return.—Oxford English Dictionary

A boundary beyond which events cannot affect an observer on the opposite side of it.—Wikipedia

First image of black hole, April 10, 2019

(Credit EHT collaboration)

When the image was released it circulated at literally the speed of light across that most human and social of networks—the internet. Comments online ranged from amazement to vast frustration that the black hole did indeed look just like we thought it might. “Awesome!,” “amazing,” “mystical,” and “capable of making humans fall in love” jockeyed with “anticlimactic,” “really?” and “it looks like the ‘Eye of Sauron’ from Lord of the Rings.” Maybe, such comments suggest, the culmination of having turned our whole planet into a technology is just a fake artifact of computer graphics algorithms; merely another stereotypical image recalling longstanding standard Western cultural tropes of radically alien and powerful forces (Overbye, 2019)? In combining both mythic aesthetic conceptions of outer space and the power of the gods with the dream of objectivity and perfect vision through technology, the image brings forth a dual temporal imaginary. The event-image both crystallized new imaginaries of a planetary (and even post-planetary) scale future integrated through data and machine sensing and mobilized our oldest and most repeated conventions of what extreme nonhuman alterity might appear like, returning us to the legacies of myth and g-ds.

Whatever the “truth” of this image, I argue that it provides evidence of a radical reformulation of perception. This image presents the figure of the terminal limits of human perception while simultaneously embodying a new form of experience comprised not within any one human or even technical installation, but through the literal networking of the entire planet into a sensor-perception instrument and experiment. This image provides an allegory, therefore, of the artificial intelligence and machine learning systems that underpin it, while it simultaneously exemplifies a classic problem in physics and computation—mainly, the impossibility of objectivity and the limits of being able to calculate or access infinity.

Objectivity

These problems have a history in science. As many scholars have demonstrated, the concept of mechanical objectivity first emerged in the nineteenth century with photography and film. The idea was linked to recognizing the fallibility of the human body and the impossibility of human objectivity, which simultaneously birthed a new desire for perfect, perhaps divine-like objectivity, inherited from Renaissance perspectivalism. This G-d like objectivity would now arrive not through the celebration of the human but through prosthesis and mechanical reproduction.1 The latest forms of big data analytics, what I have termed in my past work communicative objectivity, push this history to a new scale and intensity transforming the management of time and life (Halpern, 2014). The event horizon abandons a return to the liberal subject and offers a new model. A model of objectivity not as certainty but as the management of uncertainty and as the production, in fact, of new zones by which to increase the penetration of computation and expand the frontiers of both science and capital. In the case of the event horizon, the frontier is to reconcile and integrate two radically different forms of math and theory—general relativity and quantum mechanics. This is a scalar question—gravity is not understood at the large scale in the same way as at a subatomic or nano-scale; the hope is that these experiments will allow a unification of these two scales. In doing so, the event horizon experiments are logistical in their logic, attempting to unify and syncopate the extremely local and specific with the very large and generic.

At stake are questions of chance and what constitutes evidence and objectivity for science. Black holes were predicted, but even Einstein did not believe his own prediction, refusing to accept so radical a mutation of time and space. Einstein, it appears, still wanted to know the truth. The idea of a space beyond which his own laws no longer applied was unthinkable. However, the event horizon is not the realm of surety but of probabilities and uncertainties. Physicists can define or speculate on certain state spaces but can never precisely know the exact movement of any one particle or element. Furthermore, the event horizon is the territory of histories. Black holes may contain keys, “a backward film,” according to physicist and historian of science Peter Galison, to the universe’s entire history. Time can reverse in the black hole, and the faint glimpse of the vast energies that cluster around the event horizon suggests possible past trajectories, but never just one (Overbye, 2020).

March 13, 2017. High-altitude submillimeter-wave array, ALMA Observatory, Chajnantor Plateau, Atacama, Chile. Part of the EHT

(Photo Orit Halpern)

Sublimity

One of the key installations in this project was the Atacama Large Millimeter/Submillimeter Array (ALMA) installation, which I visited on March 13, 2017. Located on the Chajnantor Plateau in the Atacama Desert in Chile, the radio telescopes are installed at an elevation of 5050 meters in one of the driest and most extreme environments on Earth.2

Photo: Orit Halpern

In my mind, the apparatus reflected and advanced every fantasy of extraplanetary exploration I read as a child in science fiction books and watched on NASA-sponsored public programming on TV. This analogy is not just fiction. NASA and other space agencies use this desert to test equipment, train astronauts, and study the possible astrobiology of the future planets we will colonize (Hart, 2018). If the event horizon is the point of no-return, the Atacama is the landscape of that horizon, the infrastructure for our imaginaries of abandoning Earth and never returning to the past. But again, this is an irony, for ALMA collects history. Every signal processed here is eons old, millions if not billions of light years in time-space.

This technical infrastructure combined with an environment among the most arid on Earth produces strange aesthetic effects in a viewer. Such infinitude brought to us by the gift of our machines might be labelled “sublime.” What incites such emotions at one moment in history, however, may not in another. The sublime is a series of emotional configurations that come into being through historically different social and technological assemblages. My sentiments of extreme awe and desire for these infrastructures, a sense of vertigo and loss of figure-ground relations, a descent into the landscape, recall the work of historian David Nye on the “technological sublime.” For North Americans, according to Nye, the later nineteenth century offered a new industrial landscape that incited extreme awe and concepts of beauty through structures like suspension bridges. Structures such as the Brooklyn Bridge were deliberately built over the longest part of the river to prove the technical competence of their builders. Other constructions—skyscrapers, dams, canals, and so forth—were all part of this new “nature” that came into being at the time. These were sites that produced a vertigo between figure and ground and reorganized social comprehensions of what constituted “nature” and “culture” or objects and subjects. The sublime, after all, is the loss of self into the landscape (Halpern, 2014; Nye, 1994).

Post-World War II environments saw a subtle shift in this aesthetic condition as it became increasingly mediated through television and other communication devices, making technical mediation a site of desire and aesthetic production. ALMA takes the informational situation to a new extreme. Here technology produces a new landscape that turns infrastructure into a site of sublimity, confusing the boundaries of the technical and “natural” and refocusing our perception toward a post-planetary aesthetic that is about transforming both scale (Earth is small) and time. The discourse surrounding ALMA suggests that all planets, including ours, and all their component landscapes are recording instruments surveying temporalities far outside of and beyond human experience (Halpern, 2014).

Machine Vision

To process the most ancient of signals demands the latest in machine learning methods and other analytic techniques. These data sets are utilized in partnership by Microsoft and other similar organizations as training sets, owing to their complexity and the difficulty of separating signal from noise; they also serve as environments for testing algorithms and experimenting with new approaches to supervised and, especially, unsupervised learning (Zhang & Zhao, 2015). The image was produced through a massive integrated effort, analogous to the scientific images produced by other remote-sensing devices, such as the Mars rovers featured in Janet Vertesi’s (2015) account of machine vision at NASA. These rovers are coordinated and their data synthesized and analyzed into signification through a large process that is not the work of individuals but of groups. The same can be said for the EHT.

To produce the event horizon image scientists used interferometry, a process that correlates radio waves seen by many telescopes into a singular description. The trick is to find repeat patterns that can be correlated between sites of the EHT and to remove the massive amounts of “noise” in the data, in order to produce this singular “image” of what the National Science Foundation labelled the “invisible.” Since black holes are very small on the scale of space and a large amount of other data from different phenomena in space enter the dishes as well, only machines have the capacity to analyze the large volume of signals and attempt to remove the supplementary data. Signals are picked up from an array of observatories around the world, and those that match what relativity theory predicted would be an event horizon must be correlated. Finding these signals requires the data be “cleaned”—a critical component of finding what is to be correlated. This process occurs in many different sites. I visited the data cleaners for ALMA at the European Southern Observatory base in Santiago, where we discussed the process. Many of the teams worked with different machine learning approaches, using unsupervised learning methods to identify artifacts in the data and remove them. The process was quite difficult since no one had ever “seen” an event horizon or knew exactly what kind of information they were seeking.3 Having never seen a black hole, and never being able to, what should we look for? Our machines are helping us decide.
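
The correlation step described above can be illustrated with a toy example. This is a minimal sketch and not the EHT pipeline: it assumes two hypothetical stations that record the same random source with independent noise and a fixed delay between them, and it recovers that delay by cross-correlation with NumPy. The function name `estimate_delay`, the random seed, the noise level, and the 37-sample delay are all illustrative assumptions.

```python
import numpy as np


def estimate_delay(a, b):
    """Estimate by how many samples signal b trails signal a.

    Hypothetical helper for illustration only: it computes the full
    cross-correlation and returns the lag at which it peaks.
    """
    a = a - a.mean()
    b = b - b.mean()
    # c[k] = sum_n b[n + k] * a[n], for k from -(len(a) - 1) to len(b) - 1
    corr = np.correlate(b, a, mode="full")
    lags = np.arange(-(len(a) - 1), len(b))
    return int(lags[np.argmax(corr)])


# Assumed toy setup: one shared "sky" signal seen by two stations.
rng = np.random.default_rng(0)
source = rng.normal(size=2000)
true_delay = 37  # samples; an arbitrary illustrative value

# Station B sees the same stretch of signal 37 samples later than station A.
station_a = source[50:1550] + rng.normal(scale=1.0, size=1500)
station_b = source[50 - true_delay:1550 - true_delay] + rng.normal(scale=1.0, size=1500)

recovered = estimate_delay(station_a, station_b)
print(recovered)
```

Neither recording looks like anything but noise on its own; the shared pattern only appears when the two are compared. Actual interferometric correlation must also account for effects such as Earth's rotation and atmospheric distortion, none of which this sketch models.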

Humanity, however, insists on its liberal agency. Irrespective of infrastructural capacities, the final image was attributed to a young woman, Katie Bouman, a postdoctoral researcher at the Harvard-Smithsonian Center for Astrophysics. Bouman apparently created the algorithm that allowed the vast amounts of data gathered at the EHT’s many installations to be compared and synthesized into a singular image. In fact, Bouman was not an astronomer or astrophysicist but a computer scientist working on machine vision as a more generic problem (Grossman & Conover, 2020; Temming, 2019; Vertesi, 2015).

This attribution gestures to our own human temporal problems with the new media networks within which we are caught. It is not that the algorithm was not important, but that obviously a great deal of work by many people went into setting up the data-gathering experiment and developing methods to “clean” data. Bouman emerged as a progressive image that might translate the incoherence of a massive system into the identity politics of human history. The new discourse suggested we, like Einstein, were not yet comfortable with the horizon to our own control and command over our networks. This tension between radical uncertainty and inhuman cognition as well as our need to produce temporal command over data is one of the key features driving the growth of AI and its seemingly correlated discourses of mastery over futurity.

At ALMA, objectivity is indeed an impossibility. To process this data, figure-ground relations were literally confused. The official tour guide tells me that these telescopes contain units at the base that are the temperature of deep space in order to isolate and process signals from space and separate them from “noise” from the earthly atmosphere. By returning the signal to its “original” temperature the appropriate wavelengths can be isolated. In this installation, data is literally contextualized in an environment built within the experimental set-up. The furthest outside of Earth recreated within the machines. But perhaps this is the lesson of all scientific experiments: we create environments that are always already artificial and make nature from them (Pinch, Gooding, & Schaffer, 1989). Like the experiments of behavioral scientists and cyberneticians that produced new worlds in the name of depicting the planet, the ALMA telescopes recreate outer space within to produce visibility for the invisible and to reassemble eons of galactic time in the space of scientific practice. And if we take the event horizon as the allegory for our present, where we have turned the earth into a medium for information gathering and analysis, then this is even truer. The sites of data production, data gathering, and analysis are increasingly blurry in their boundaries. The planet has become a medium for recording inscriptions.4

Energy

The earth as medium is a truism in the Atacama that takes many forms. “Chile is copper” is an oft repeated mantra, I am told by Katie Detwiler, an anthropologist working on the Atacama and my guide to this place. And copper is in almost every machine, conducting all our electricity. The Atacama has some of the largest copper mines on Earth. Copper is industrial material; it also rests (although perhaps only for now) on an industrial economy. Copper markets are still relatively unleveraged. Unlike some other energy, mineral, and metal markets, there is little futures or derivative action. As a commodity copper suffers from modern economic concepts of business cycles, and its political economy is seemingly still grounded in terms like GDP and GNP along with concepts, stemming from Thomas Malthus and Adam Smith in the eighteenth century, of resource limitation, scarcity, demand, price, and above all population and nation. In Chile, copper is equated with nationalism. Under Pinochet these mines were unionized (contrary to what we might expect), and the state corporation CODELCO continues to smelt all the copper. This rather surprising history for a dictator synonymous with Milton Friedman and the Chicago Boys emerged from an alignment with right wing nationalists, authoritarianism, and neoliberalism (Klein, 2010).

March 23, 2017, Salar de Atacama, SQM fields

(Photo Orit Halpern)

The beds are beautiful, created by brine brought to the surface. Lithium is never pure; it is mixed with other things, also all valuable, such as magnesium and potassium. As one looks over the fields, there is an array of colors ranging from yellow to very bright blue. The first fields are still full of potassium that might serve as bedrock for fertilizers. As the beds dry longer, they turn bluer and then yellower. Finally, after almost a year, the bed fully dries5 and lithium salt, LiCl, emerges. The salt is scraped from the bed, harvested, separated from trace boron and magnesium, and treated with sodium carbonate for sale. Alejandro Bucher, the technical manager of the installation, takes us on a tour.6 Its owners, Sociedad Química y Minera (SQM), he tells us, care about the environment—almost no chemicals are used in the process. The extraction of lithium is solar powered; the sun evaporates the water and draws off the salts. A pure process. Except that it drains water. He assures us, however, that the latest expansions and technical advances will “optimize” this problem. Better water evaporation capture systems and planned desalinization plants will reduce the impact on this desert, which is the driest on Earth, and on these brine waters that are also the springs for supporting fragile ecosystems of shrimp, bacteria, and flamingos. Environmentalists, however, beg to differ; inquiries into the environmental impact of the fields have been undertaken, and the process of assessment has been criticized as opaque (Carrere, 2018).

What Pinochet never did—privatize mining—has fully come to pass with lithium. While SQM is Chilean, it is private. SQM has been attacked for anti-trade union practices, and unions are fighting to label lithium a matter of national security so the state can better regulate the material (IndustriALL Global Union, 2016). This corporation also partakes in planetary games of logistics around belt roads and resources. In 2018, the Chinese corporation Tianqi acquired a 24% share of SQM, essentially coming to dominate the corporation. While the government continues to monitor the situation and demand limits on Chinese participation on the corporate board, the situation is in flux (Guzmán, 2018). These games also demand privatized water supplies. Water is a massive commodity. Some of the largest desalinization plants on Earth will be built here by a range of vendors largely servicing the mining sector. These installations are built to fuel mining in the region. Desalinization is yet another extractive technology that facilitates the transformation of seemingly finite boundaries and resources into flexible, eternally expandable frontiers, in this case through advanced technological processes of removing salt from seawater. Thus, a new infrastructure of corporate actors merging high tech with salt and water to support our fantasies of eternal growth emerges, so that we may drive clean cars and eventually arrive at the stars—where we will extract ever more materials (Harris, 2020).

These salts are also the fragile infrastructures for unique ecosystems and peoples. The water usage from these installations threatens indigenous communities in the area, already dispossessed and disenfranchised by the mining industry.

It is also in service of imagining a future on other planets that another group of scientists—astrobiologists—study the bacteria in these brines. These bacteria have evolved differently; the extreme conditions of the salts might offer clues, these scientists tell us, to life on Mars. These cellular creatures hold the key to survival in space and to the liveliness that might exist on other planets. We cannot accept being alone in the universe, and these bacteria allow us to envision, in their novel metabolisms and capacity to live under pH conditions lethal to most other organisms, another way to live. These beds, the astrobiologists argue, cannot be taken away to make batteries; they harbor our key to space and the way to terraform (Boyle, 2018; Parro et al., 2011).7 What are we to do? How to realize a future, driven by these batteries, that disappears as we make them?

Optimization

More than anything, the lithium mines suggest new attitudes to, or maybe practices of, boundary making and market making. They demonstrate a move away from the perfect stabilities of supply-and-demand curves to the plasticity of another order of algorithmic finance and logistical management grounded in the computations of derivative pricing equations and debt capitalism.8 The relationship between these very different and radically shifting territories of mining, salt harvesting, and astronomy can therefore only be realized in the turn to mathematics.

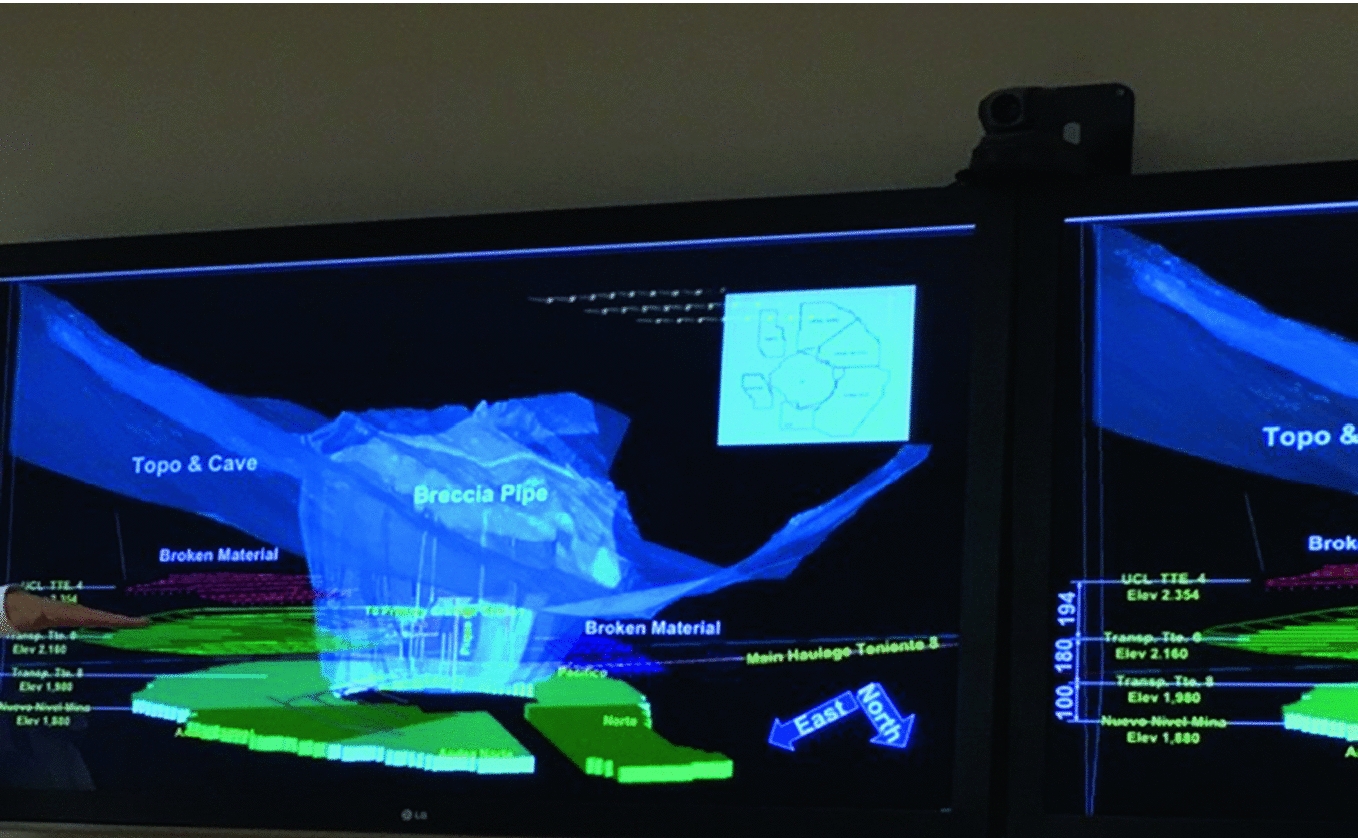

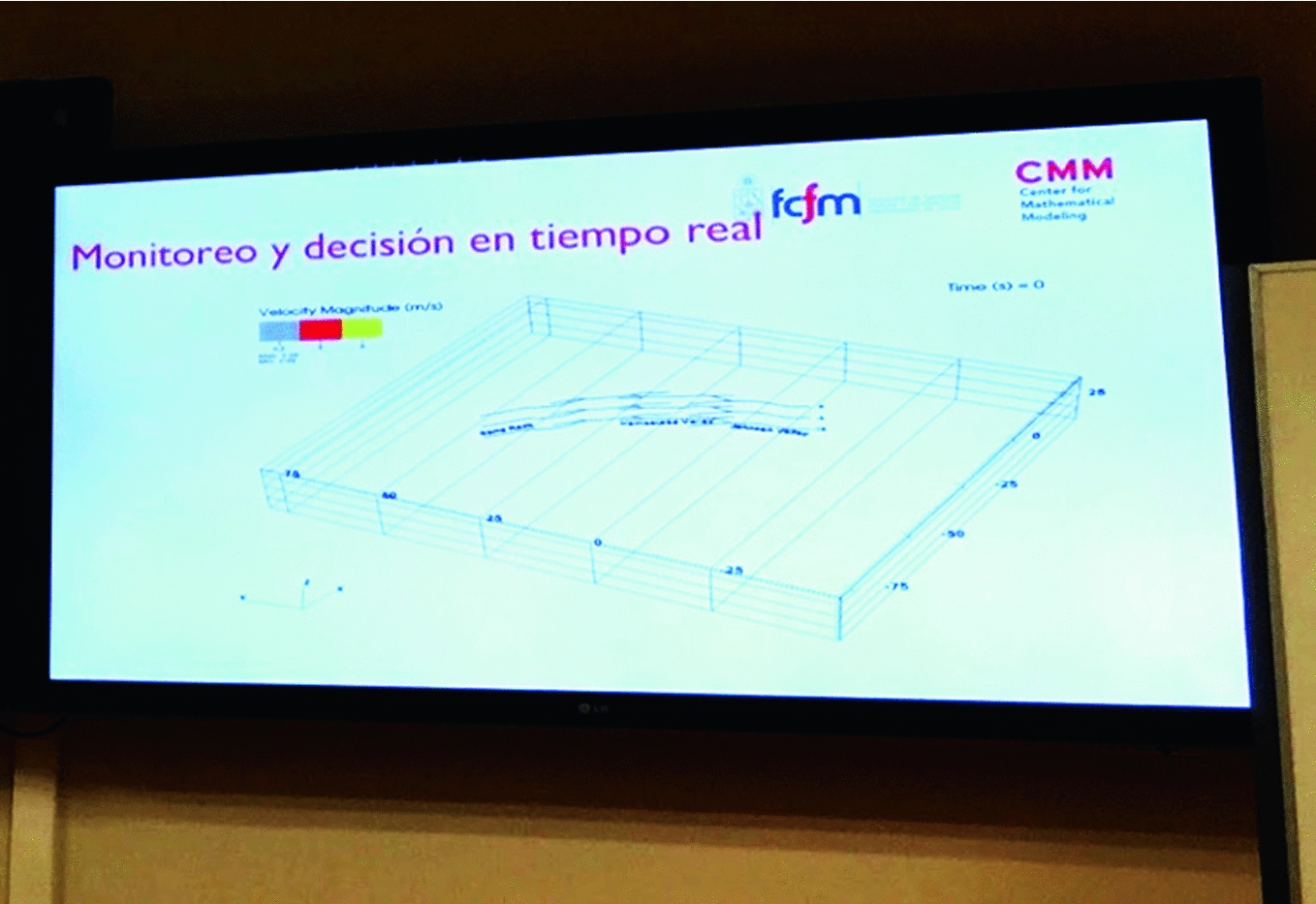

The incommensurabilities in scale and materials between the operations of mines and the seemingly metaphysical interests of the astronomical sciences are resolved at the Center for Mathematical Modeling (CMM) at the University of Chile, located in Santiago some 1600 kilometers south. It is one of the world’s premier research centers for applied mathematics in mining. A few days after my time at ALMA, I visit the center.

Dr. Alejandro Jofré presenting on real-time analytics for decision making in extraction. March 21, 2017, CMM, University of Chile, Santiago

(Photo Orit Halpern)

This new optimization economy is also aligned, as Dr. Eduardo Vera, the executive manager of innovation and development at the CMM and in the National Laboratory for High Performance Computing argued, with rethinking mining unions and labor. The hierarchies of mines must go. In their place will be management by regular feedback loops derived from billions of sensors and automated systems that sense and decide the best actions: the best manner to ventilate, heat, cool, dig, chemically separate, mix, dispose, and scavenge material. The space of mining is opened to the space of mathematics and abstraction, making Terran limits plastic, scavengable, optimizable, and ultimately grounded in the math of physics and astronomy. These communication systems, complex geological models, and fluid and energy dynamics might also find themselves in use in other places. Over lunch, he tells me that entire computational infrastructures are being built at this moment. Large investments are being made by both corporate and government sources to develop the computer power to run advanced mathematical models and crunch vast data sets for the dual purposes of modelling Terran geologies and extraterrestrial phenomena. Ultimately, the mathematics being generated through abstract models and astronomy may also discover new methods and predictive analytics for use in asteroid and other mining.

On April 6, 2017, almost synchronously with my visit, Goldman Sachs released a report arguing for the future of asteroid mining. The report was “bullish” on asteroid mining. “While the psychological barrier,” the report noted, “to mining asteroids is high, the actual financial and technological barriers are far lower.” Spacecraft and space travel are getting cheaper, and asteroids could be grabbed and hauled into low Earth orbit for mining. According to Caltech, which the report cited, building asteroid-grabbing spacecraft would cost the same as setting up a mine on Earth. Goldman Sachs definitively urges speculation on space. While the market may tank on Earth, there is no question that humanity will need the materials (Edwards, 2017). Back on Earth, in Santiago, researchers speak of how astronomy’s wealth of data and complicated analytics can be brought to bear on developing the complex mathematics for geological discovery and simulations of mine stability and resources.

The discussion also indicates a shift of economy, perhaps from extraction to optimization. Vast arrays of sensors, ever more refined chemistry, and reorganized labor and supply chains are developed whose main function is to produce big data for machine learning that will in theory rummage through the tailings, discarded materials, and supplementary and surplus substances of older extractive processes in order to reorganize the production, distribution, and recycling of materials in the search for speculative (and financializable) uses for the detritus and excrement of mining.9 These computational-industrial assemblages create new economies of scavenging, such as the search for other metals in tailing ponds or the reuse of these waste materials for construction or other purposes, currently in vogue globally. In this logic, the seemingly final limits of life and resources become instead an extendable threshold that can be infinitely stretched through the application of ever finer and more environmentally pervasive forms of calculation and computation, which facilitate the optimization of salvage and extraction of finite materials. One might argue that this optimization is the perverse parallel of the event horizon. If one watches a clock fall into the event horizon, all one will see is time forever slowing down; the horizon will never be reached. History is eternally deferred at the threshold of a black hole. Big data practices for extraction provide a grotesque doppelgänger of physical phenomena. Even as energy, water, and ore run out, the terminal limits to the Terran ability to sustain capital are deferred through financial algorithms and machine learning practices. Better modelling and machine learning can allow mines to extend their operations, discover ever more minute deposits of ore, and continue to expand extraction. 
In fact, the application of artificial intelligence and big data solutions in geology and mine management has increased Chile’s contribution to global copper markets. Chile actually expanded its mining outputs, despite degraded repositories, growing from providing 16% of global copper for industrial use to 30% between the 1990s and the present (Arboleda, 2020, p. 66). CODELCO, the state-owned copper conglomerate, has entered into major agreements with Uptake, a Chicago-based artificial intelligence and big data enterprise platform provider (Mining Journal, 2019). The only problem with this fantasy to stop or turn back time is that we are not travelling at the speed of light and Earth is not a black hole. Instead, these practices make crisis an impossibility and blind us to the depletion of the ecosystem.
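
The image of the clock that never reaches the horizon has a standard textbook form. As a sketch only, assuming the simplest case of a non-rotating (Schwarzschild) black hole of mass $M$ and a clock held at rest at radius $r$ rather than freely falling, the rate of the clock's proper time $\tau$ relative to the time $t$ of a distant observer is:

```latex
\frac{d\tau}{dt} = \sqrt{1 - \frac{r_s}{r}},
\qquad
r_s = \frac{2GM}{c^2}
```

Here $r_s$ is the Schwarzschild radius, $G$ the gravitational constant, and $c$ the speed of light. As $r \to r_s$ the ratio tends to zero, which is the sense in which the distant observer sees time at the threshold slow without limit.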

Temporalities

Calama Memorial for Pinochet Victims, https://commons.wikimedia.org/wiki/File:Memorial_DDHH_Chile_06_Memorial_en_Calama.jpg

(Downloaded August 6, 2019)

But time and data can be manipulated in many ways. Back on Earth there is a film: Nostalgia for the Light (2010) by Patricio Guzmán. In the immediate aftermath of Chile’s coup on September 11, 1973, thousands were tortured and disappeared, nearly ten percent of the population were exiled, and the paramilitary stalked Chile. Traveling in a Puma helicopter from detention site to detention site, the so-called Caravan of Death carried out the executions of twenty-six people in Chile’s south and seventy-one in the desert north. Their bodies were buried in unmarked graves or thrown from the sky into the desert. The desert was militarized, turned into a weapon for the killing of dissidents and the training of troops, and its resources used to support the state. Guzmán parallels the search for bodies by mothers of dissidents killed by Pinochet with astronomers watching and recording the stars in the Atacama’s high-altitude observatories (the millimeter-wave arrays had not yet been made operational). Above all, his theme is that the landscape is a recording machine for both human and inhuman memories: the traces of stars 50 million years away and the absence of loved ones within human lives. The film implies that the desert itself provides some other intelligence or maybe memory and not only for humans.

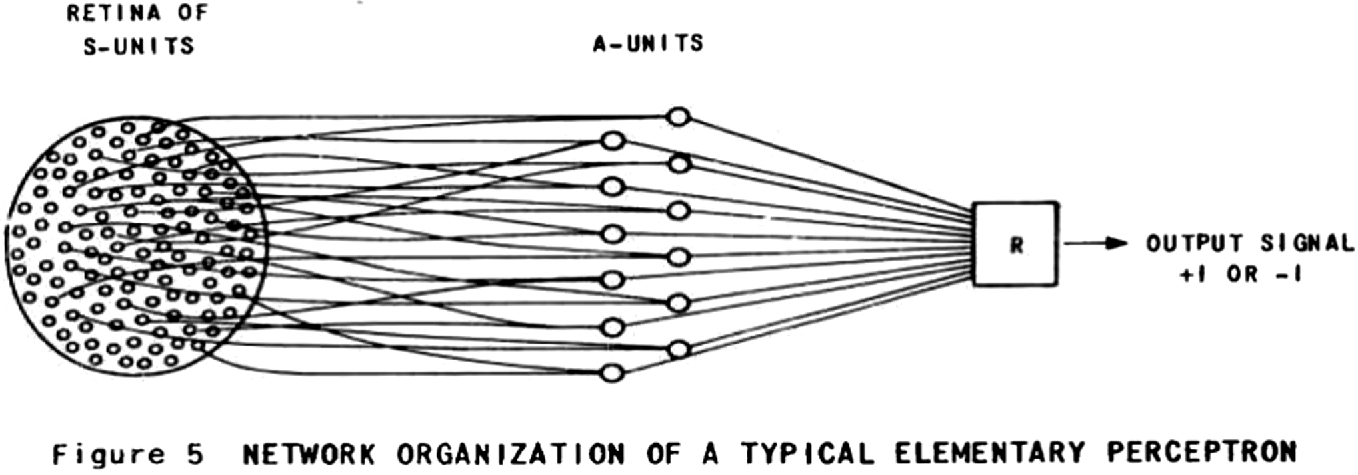

When I hear scientists speak of the possibility of real-time decision making in mining and the optimization of energy and materials through the perfection of sensing technology and big data in the mine, I hear a fantasy of stretching finite resources to infinite horizons through big data and artificial intelligences. I also hear a smaller, more embodied parallel fantasy of a new form of experience and cognition no longer nested in single human bodies, whether those of laborers or expert economists, but rather bequeathed to large networks of human-machines. These dreams of AI- and machine learning-managed extraction hearken back to the history of machine learning (Rosenblatt, 1961).10

From Rosenblatt (1961)

Arranged in layers that made decisions cumulatively and statistically, the perceptron forwarded a new concept of intelligence as networked. Rosenblatt stated, “It is significant that the individual elements, or cells, of a nerve network have never been demonstrated to possess any specifically psychological functions, such as ‘memory,’ ‘awareness,’ or ‘intelligence.’ Such properties, therefore, presumably reside in the organization and functioning of the network as a whole rather than in its elementary parts” (pp. 9–10). By induction, this intelligence, therefore, might not reside in any one neuron and perhaps not even one body.
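
The idea that a capacity resides in the adjusted connections rather than in any one element can be sketched in a few lines. The example below is a deliberately reduced illustration, assuming a single threshold unit trained with the classic perceptron update rule rather than the layered machine Rosenblatt described. The function names `train_perceptron` and `predict`, the learning rate, the epoch count, and the choice of the logical OR task are all assumptions made for the example.

```python
import numpy as np


def train_perceptron(X, y, epochs=20, lr=1.0):
    """Train one threshold unit with the perceptron update rule.

    Illustrative simplification: weights change only when the unit
    misclassifies an example, nudged toward the correct answer.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            pred = 1 if xi @ w + b > 0 else 0
            err = target - pred  # -1, 0, or +1
            w += lr * err * xi
            b += lr * err
    return w, b


def predict(X, w, b):
    """Return the unit's 0/1 decision for each row of X."""
    return (X @ w + b > 0).astype(int)


# Assumed toy task: learn logical OR from four labelled examples.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y = np.array([0, 1, 1, 1])

w, b = train_perceptron(X, y)
outputs = predict(X, w, b)
print(outputs)
```

Nothing in the code states what OR means; the behaviour is stored only in the weights `w` and bias `b` that repeated correction leaves behind.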

While many mutations have occurred along the road to contemporary deep learning and neural nets, insofar as most machine learning methods required training data (the computer equivalent of a parent correcting a child as the latter seeks to identify objects in the world), computers could, in principle, be trained on what was in essence population-level experience. Experience here is moved outside of the individual; it is the data set, environment, or sensor system that becomes the object of design. That is, though each human individual is limited by that set of external stimuli to which he or she is in fact exposed, a computer can draw on huge databases of training data that are the result of the judgements and experiences of not just one individual but large populations of human individuals. These infrastructures are ubiquitous today; think of the MNIST data set, Google image training sets, the massive number of click farms spread around the world, or the massive geological data sets that monitor and make “decisions” in real time to continue the extraction of ever rarer materials (Arboleda, 2020).

The inspiration for this model of networked cognition lay in many places, but above all in the work of economist Friedrich Hayek and psychologist Donald Hebb. Rosenblatt approvingly mentions Hayek (1952/2012) as the most significant of the psychological theorists to envision a subjective and nonhierarchical nervous system, in his work The Sensory Order. For Hayek, an unregulated market and a decentralized nervous system were the “natural” order. Hebb (1949) formulated what is now called Hebbian learning in neural networks and pioneered studies in sensory deprivation. These concepts of revising the interior and exterior of the human subject and modelling neurons as networks were ones to which Rosenblatt (1958) was greatly indebted, along with McCulloch and Pitts’s (1943/1970) model of neurons.

This genealogical relationship between Rosenblatt, Hayek, and Hebb returns us to Chile, history, and its legacies in the present. Naomi Klein built her argument about “shock” by claiming that methods derived from Hebb’s research (even if not intentionally) became the template for torture at the hands of the CIA. This “shock” torture mirrored the neoliberal mandate for creating disasters or “shocks” that served as the bedrock for structural readjustment policies. Chile in the 1970s was an experiment in these tactics. It inaugurated a new world of planetary-scale tests, of which the “shock” doctrine may have been the first, and of experiments at population level managed not through older calculi of territory, eugenics, and Malthusian economics, but through new economic calculative instruments closely attached to our intelligent machines.

I wonder then at this condition we live in and its link to artificial intelligences that have fundamentally positioned experience as a matter of extra-human or personal relations, perhaps beyond Terran experiences. We have turned our whole planet into a device for sensing the deepest, coldest space; the first wager in perhaps the biggest gamble we are taking as a species. If optimization is the “event horizon” of earthbound ecologies—representing the limit of the historical imaginary of economy by making it difficult to imagine running out of materials or suffering catastrophic events—then the event horizon appears as the very image to replace the finitude of the earth.

In a pessimistically optimistic vein, however, might this also be the final possibility to undo the fantasies of modern imperialism and anthropocentrism? There is hope in those infinitesimally specific signals found in a black hole, from eons ago beyond human or even Terran time. They remind us that there are experiences that can only emerge through the global networks of sensory and measuring instrumentations, and that there are radical possibilities in realizing that learning and experience might not be internal to the subject but shared. Perhaps these are just realizations of what we have known all along. We know that our worlds are composed of relationships to Others, but there is a possibility that never has this been more evident or made more visible than through our new technologies, even our financial technologies and artificial intelligences. As they automate and traumatize us, they also reveal perhaps what has always been there: the sociotechnical networks that exist beyond and outside of us, realities impossible to fully visualize. Upon introducing the perceptron, Rosenblatt spoke of “psychological opportunities”; what might these be?

These new assemblages of machines, humans, physical force, and matter also allow a reflexive critique and create new worlds. We do not know what the EHT will unearth, but we do know, especially now as we are in the midst of a global pandemic, that only our big data sets and simulations will guide us. For the first time in history as a species, perhaps, we are regularly offered different futures, charted from different data sets and global surveillance systems. Should we continue aggressive containment strategies against COVID-19? Do we prefer human life over market life? How can the sensor systems and experimental systems, the testing that is now sensing for this disease, be utilized in the future for equity or social justice? Our planet has become a vast data set, with every cell phone and many bodies (although not all; invisibilities and darknesses are also appearing) serving as recording devices that allow us to track this disease and, with it, the violences and inequities of our society. But the question is: how shall we mobilize this potential? The same distributed systems of sensors, analytics, and data collection offer many options: totalitarian states or democratic governance through data, improved health and consciousness of social inequity, or terrible economic disenfranchisement through futures markets that even now play on the disaster and use data simulations to make bets on negative futures for humanity. We are in a massive and ongoing test scenario, mirrored by the tests we are all taking for diseases. Different forms of governance are being experimented with, along with different understandings of data and the imaginaries they engender, but the planetary test is not a controlled experiment; its stakes cannot be fully known and may be terminal.

The Event Horizon Telescope is an allegory for this condition. It presents us with the radical encounter with our inability to ever be fully objective and the possibility that there are things to learn and forms of experience that are beyond the demands of capital or economy in our present. In many ways it is one possible culmination of a history of rethinking sensation, perception, and scientific epistemologies. But it is not the only possibility in a world of probabilities. Reactionary politics and extreme extractionism emerge from a perverse use of new media networks, one that does not recognize our subjective and interconnected relatedness but rather valorizes older forms of knowledge and power: those of myth, Cartesian perspectivalism, and “nature” as a resource for “human” endeavors. Those are the politics that separate figures from grounds, maintain the stability of objects, and understand the future as always already foreclosed and known.

When we discuss AI in terms of national competition or the ongoing abstraction and rationalization from an analogue world, are we taking seriously enough the reformulations of time and space facilitated by these very techniques? Or our own investment and entanglement with sociotechnical systems? Or history? Do we ignore the landscape and the ecology of interactions when we frame ethics in terms of decisions made at singular points in time? Our dominant discourses on AI repeat ideas that we can still control the future or that technology is not natural. These are logics of the event that ignore its horizon. My hope is that perhaps in encountering the impossibility of ever imagining the reality of the event horizon, we might finally be able to witness and engage the precarious reality of life on Earth.

- 1.

For an extensive discussion of the history of objectivity and the relationship between objectivity, perception, and technology see Daston and Galison (1992) and Crary (1990).

- 2.

The observatory is also the harbinger of new forms of territory, a location that transforms the boundaries and borders between ourselves and outer space, but also the spaces of the Earth. The installation is run by a consortium headed by the European Southern Observatory, a number of United States universities, and a series of Japanese institutions (European Southern Observatory, n.d.). Just as ALMA envisions a new world beyond our own, it is also part of producing a new geography on our planet, a world of new zonal logics. In 1990, ALMA was bequeathed to the international consortium that runs it, but Chile had already granted concessions to the European Southern Observatory in 1963 across the Atacama for observatories. ALMA is therefore a unique extraterritorial zone, part of the history of a massive astronomical collaboration engineered in the Global South in order to create the European Union through scientific cooperation in the post-World War II years. ALMA, in its extraterritorial governance and its work to produce new political-economic institutions (primarily the European Union), appears as an allegory for the post-planetary imaginaries which its science fuels.

- 3.

Interviews conducted at ALMA on my visit on March 13, 2019, and at the ESO Data Center in Santiago on March 20, 2017, revealed that many of the staff had been working on satellites and related information and communication problems before applying their research to the study of the stars. ALMA has pioneered work on exo-planets and finding asteroids and other potentially mineable objects on Earth. Interviews with Yoshiharu Asaki, Associate Professor, National Astronomical Observatory of Japan (ALMA), and Chin-Shin Chang, Science Archive Content Manager (Santiago).

- 4.

See also Jennifer Gabrys’ (2016) work on the idea of the planet as programmable through sensor infrastructures.

- 5.

Lithium was first discovered in 1817 by Swedish chemist Johan August Arfwedson, who realized that petalite contained an unknown element but was not able to isolate the metal. In 1855, British chemist Augustus Matthiessen and German chemist Robert Bunsen succeeded in separating it. It is one of the lightest and softest metals known; in fact, it can be cut with a knife. And because of its low density, lithium can even float on water (Bell, 2020).

- 6.

Alejandro Bucher, Technical Manager, SQM, March 23, 2017.

- 7.

- 8.

For work on debt and financialization, as well as the place of ideas of information, computation, and algorithms in the production of these markets, see Mirowski (2002), Harvey (2007), and LiPuma and Lee (2004). For an excellent summary of capital and extraction in Chile, including discussion of the way new information technologies and financial strategies are allowing a new “margin” of extraction and extension of mining capacities, see Arboleda (2020).

- 9.

For more on the specific use of AI and machine learning in mine reclamation programs, see Halpern (2018).

- 10.

Please note that this segment on Rosenblatt was conceived with Robert Mitchell as part of our joint project and future monograph The Smartness Mandate.

This project was supported by the Australian Research Council grant “Logistical Worlds”. Special thanks to Ned Rossiter, Brett Neilson, and Katie Detweiler for making the research possible.