From the time that Unix was first developed at AT&T’s Bell Labs in 1969 by Ken Thompson and Dennis Ritchie until it was later released in 1975, the Internet as we now know it was not around. Most computer systems lived in virtual isolation from each other, with people directly connecting to the system that they wanted to use. The U.S. Department of Defense commissioned its Advanced Research Projects Agency to design a network to link computers together, and a military contractor known as BBN Technologies (named after founders and MIT professors Bolt, Beranek, and Newman) was awarded the contract to build that network in 1969. The result became known as ARPANET, which became operational in 1975.

As the years progressed and the new ARPANET network started taking root, systems became more interconnected. In the early days, the only people who were on this new network were scientists and government labs. Because everyone on the network felt they could reasonably trust everyone else, security was not a design goal of the protocols that were being created at the time, such as FTP, SMTP, and Telnet.

Today, with the current level of Internet connectivity, this level of trust is no longer sufficient. The networked world has become a hostile environment. Unfortunately, many of these insecure protocols have become deeply rooted and are proving difficult to replace. Nevertheless, there are many steps you can take to improve the security of Unix platforms.

The principles of securing a Unix system are by now well established—reduce the attack surface, run security software, apply vendor security updates, separate systems based on risk, perform strong authentication, and control administrator privileges. By following the procedures described in this chapter, you can make Unix much more resistant to attack. The same principles apply to all flavors of Unix (commonly referred to as “Unix-like” operating systems, because of trademark restrictions).

Start with a Fresh Install

Before proceeding any further with securing your system, you should be 100 percent positive that nobody has installed rogue daemons, Trojans, rootkits, or any other nasty surprises on your system. If the system has been connected to a network or had unsupervised users, you cannot make that guarantee.

Always start with a freshly installed operating system. Disconnect your server from the network and boot it from the supplied media from your vendor. If given the option, always choose to do a complete format of the connected drives to be sure they do not contain malicious content. Then you can install the operating system.

If this is not feasible in your situation, you need to do a complete audit of your system—applications, ports, and daemons—to verify you’re not running any rogue processes or unnecessary services and to ensure that what you are running is the same as what was installed. Take an unused server, perform a clean install of your operating system on it, and use that server to compare files. Do not put a server out on the network (either internal or external) without making sure no back doors are open.

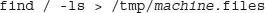

The easiest way to compare files on the two machines is to run this command:

on each system (where machine is replaced with the name of the machine) and copy both of these files into the same directory on a third system. Then use the diff command to get a list of differences:

You then have to go through the diff output line by line and verify each difference. It is an exhausting and tedious process and is useful only as a last resort when you cannot create a clean system from scratch.
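The comparison workflow described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the helper names list_files and compare_lists and the example file names are hypothetical, not the original listing.

```sh
# Hypothetical helper: write a sorted list of every regular file under a
# directory tree, one path per line, relative to the top of that tree.
list_files() {
    # $1 = top of the tree to inventory, $2 = output file
    ( cd "$1" && find . -type f -print | sort ) > "$2"
}

# Hypothetical helper: show the lines that differ between two listings.
# diff exits non-zero when the listings differ, so that status is masked.
compare_lists() {
    diff "$1" "$2" || true
}

# Example (run list_files on each machine, then copy both files to a
# third system and compare them there):
#   list_files / /tmp/clean.files
#   list_files / /tmp/suspect.files
#   compare_lists /tmp/clean.files /tmp/suspect.files
```

Lines prefixed with > in the output exist only in the second listing, which is where an unexpected file on the suspect machine would show up.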

If diff reports a change between files, use a trusted and verified checksum utility such as md5sum on each file. To ensure that the checksum utility is viable, copy it from a fresh install onto read-only media, and use it from that media only. Compare the checksums provided to determine whether a difference exists. If the trusted host contains a different checksum, replace the file on your server with the file from the trusted host.
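A minimal sketch of the checksum comparison, assuming md5sum is the trusted utility. The CHECKSUM_CMD variable and both function names are hypothetical; point CHECKSUM_CMD at the copy on your read-only media.

```sh
# Trusted checksum utility (assumption: md5sum). Override CHECKSUM_CMD to
# use the verified copy kept on read-only media.
CHECKSUM_CMD=${CHECKSUM_CMD:-md5sum}

# Print only the checksum of a file (the first field of the output).
file_checksum() {
    "$CHECKSUM_CMD" "$1" | awk '{ print $1 }'
}

# Succeed (exit status 0) only when both files are readable and have the
# same checksum.
same_checksum() {
    [ -r "$1" ] && [ -r "$2" ] &&
        [ "$(file_checksum "$1")" = "$(file_checksum "$2")" ]
}

# Example:
#   same_checksum /mnt/trusted/bin/ls /bin/ls || echo "ls differs"
```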

Securing a Unix System

The following practices provide the most significant improvements to the security of any Unix system (or any other operating system, for that matter):

• Reduce the attack surface of systems by turning off unneeded services.

• Install secure software.

• Configure software settings securely.

• Patch systems regularly and quickly.

• Use firewalls and other network security devices to segment the network into zones of trust, and place systems into those zones based on their communication needs and Internet exposure to protect access to services.

• Strengthen authentication processes.

• Limit the number (and privileges) of administrators.

Turning off unnecessary software components and securely configuring those that remain is the first and most important step in locking down a Unix installation (or any operating system platform, for that matter). This is known as hardening the system. It changes the computer from a well-known target that attackers could easily break into to a system that is able to defend itself. When you harden the system by changing configurations to more secure settings and disabling unnecessary but attackable processes, you make the attacker’s job more difficult—by removing the most common flaws they know how to exploit.

There’s also a fringe benefit to hardening your system: a computer that runs less software runs faster. By turning off services and removing unneeded software, you’ll also speed up your system. You get security and performance improvements in one shot, and that’s a win-win.

Reducing the Attack Surface

The attack surface of a computer system is the combination of software services that an attacker could exploit, through either vulnerabilities or unsecure configurations. In the case of Unix systems, the attack surface takes the form of installed software packages and running processes. You should follow the general principle of “turn off what you don’t need”: if you don’t need a service, disabling it costs you nothing and leaves one less thing to defend. For example, many Unix systems default to run level 5, which provides a nice graphical interface. But if you’re building a web server that you’ll never log into from the console, you don’t need the GUI. In that case, you should default the system to run level 3, which provides a command-line login capability without all the overhead and vulnerabilities associated with the GUI.

Remove Unneeded Daemons

The first thing you should do to secure any computer is to disable or delete software components that aren’t going to be used. Why patch, configure, and secure software that you’ll never use? Just get rid of it, and you’ll not only save yourself work, you’ll also make an attacker’s job harder by cutting down on the overall number of vulnerabilities that can be exploited.

Most modern operating systems are written with the expectation that they will be utilized in a networked environment. To that end, many network protocols, applications, and daemons are included with the systems. Whereas some systems are good about disabling the included services, others activate all of them and leave it to you to disable the ones you do not want. This setup is inherently insecure, but it is becoming less common.

Review Startup Scripts

Most System V (SysV) Unix platforms will scan one or more directories on system startup and execute all the scripts contained within these directories that match simple patterns. For example, any script that has execute permissions set and begins with the letter S (capitalized) will be run automatically at system startup. The location of these files is a standardized directory tree that varies slightly by vendor, as shown in the following list.

| Operating System | Location |
| --- | --- |
| Solaris | /etc/rc[0123456].d |
| HP-UX | /sbin/rc[01234].d |
| AIX | /etc/rc.d/rc[23456789].d |
| Linux | /etc/rc.d/rc[0123456S].d |

As you can see, the locations are predictable, with only minor variations. Go through your startup directories and examine each file. If a script starts an application or daemon that you are not familiar with, read the associated man pages until you understand the service it provides.
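As a starting point for that inventory, the following sketch lists the scripts in one startup directory that match the pattern described above (executable, name beginning with a capital S). The function name list_start_scripts is hypothetical, and the example directory is only one of the locations from the table.

```sh
# List the scripts in one rc directory that would run at startup:
# regular files (or links to them) that are executable and whose names
# begin with a capital S.
list_start_scripts() {
    # $1 = an rc directory, for example /etc/rc2.d
    for script in "$1"/S*; do
        if [ -f "$script" ] && [ -x "$script" ]; then
            printf '%s\n' "$script"
        fi
    done
}

# Example:
#   list_start_scripts /etc/rc2.d
```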

NOTE BSD Unix systems use a variation where the main configuration files are /etc/defaults/rc.conf and /etc/rc.conf. The /etc/rc script runs the /etc/rc.* files in their proper order, loads the configuration, and starts the system boot sequence.

When you have finished taking inventory, make a list of what is being started and then rule out the processes you do not need. To stop a script from executing, rename the script to break the naming convention by prepending nostart. to the script name; for example, /etc/rc2.d/nostart.S99dtlogin.
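The renaming step can be sketched as a pair of small helpers. The function names are hypothetical; only the nostart. prefix comes from the text above.

```sh
# Disable a startup script by prepending "nostart." to its file name so
# it no longer matches the S* pattern.
disable_start_script() {
    # $1 = full path to the script, for example /etc/rc2.d/S99dtlogin
    dir=$(dirname "$1")
    name=$(basename "$1")
    mv "$dir/$name" "$dir/nostart.$name"
}

# Reverse the change if the script turns out to be needed after all.
enable_start_script() {
    # $1 = full path to the disabled script
    dir=$(dirname "$1")
    name=$(basename "$1")
    mv "$dir/$name" "$dir/${name#nostart.}"
}

# Example:
#   disable_start_script /etc/rc2.d/S99dtlogin
```

Renaming rather than deleting keeps the change easy to undo while you test the server.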

Be careful not to remove scripts that are essential to the operation of your server. If you are not certain whether a script is needed, disable it first and then reboot your server. Watch the startup and make sure the script did not run; then stringently test your server to verify that it is usable and performs the tasks required. Follow these same steps for each script you are not certain you need.

Audit Your Applications

Modern operating systems come with a myriad of applications and utilities you can install onto your system, in addition to the core operating system itself. When getting started, you may be tempted to install most—if not all—of these applications. After all, they might be useful, and they’re included with the system, so it’s probably a good idea to have them, right?

Not necessarily. Most of the applications are harmless and potentially useful, but if you do not need them, you are better off not installing them. Keep in mind that every application on your system is potentially another hole that can be exploited by a malicious person. The more applications you have installed, the more vulnerabilities a malicious attacker has to take advantage of. More applications also mean more things you need to keep track of for patching and intrusion detection.

TIP In security, the fewer things you have installed on a system, the easier you will generally find it to monitor and keep clean. Always ask yourself when setting up a system, will I need this application to run my server effectively? If the answer is no or probably not, then do not install it.

If you take possession of a server that was created by someone else, do a careful audit of that server and note everything that is installed. If you do not know what something is, research to determine what it is, what it does, and whether you need it. Keep a list of all of your servers, what applications are installed on each, and which version of each application is installed. This list will save you a lot of time and preparation work when it comes time to do security audits and system patching. Any time you add, remove, or patch an application, update your list.

Perform a full backup of your server, and then go through and disable or remove all applications that are not necessary for that server. Remove them one at a time and test your server after each change to verify that it still functions correctly.

Boot into Run Level 3 by Default

Run levels determine whether a system boots up in single-user mode, multiuser mode, command-line only, or full graphical user interface (GUI).

Your system’s exact run level may vary based on your Unix flavor, but the general idea is that you should configure the system to come up to the lowest level required for the functionality you are building. For a typical server, such as a database, mail relay, or web server, you don’t need GUI access. If you prefer a GUI environment to work on the system, that’s fine—boot up to run level 5 (or whatever run level gives you the GUI you want) but when you’re done, bring the system back down to the run level that keeps all the software running without the GUI’s overhead and attack surface. By default, your system should boot into the lowest run level needed.
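On many SysV-style systems the default run level is recorded in the initdefault entry of /etc/inittab. That file location and entry format are assumptions here, since the text above does not name them, so check your platform’s documentation. Under that assumption, a minimal sketch for reading the configured default looks like this (default_runlevel is a hypothetical helper name):

```sh
# Print the default run level recorded in an inittab-style file.
# Entries have the form id:runlevels:action:process, and the default is
# the entry whose action field is "initdefault".
default_runlevel() {
    # $1 = inittab file (defaults to /etc/inittab, an assumption)
    awk -F: '$1 !~ /^#/ && $3 == "initdefault" { print $2; exit }' \
        "${1:-/etc/inittab}"
}

# Example:
#   default_runlevel            # configured default, for example 3
#   who -r                      # current run level on many systems
```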

Install Secure Software

Unix systems do not usually ship with the most secure software installed. Depending on how you plan to use the system, you will probably want to download and install software packages that either are more secure than the default, preloaded packages, or that provide security functions in addition to those already on the system.

Install OpenSSL

If your operating system did not ship with any SSL libraries, install OpenSSL. The OpenSSL suite is a set of encryption libraries, together with command-line applications that make limited use of them. The main power of OpenSSL comes from the ability of many networking applications and daemons to link against the libraries and encrypt your data on the network. For example, Apache uses OpenSSL to serve https web pages, and OpenSSH uses OpenSSL as the foundation to build on.

Replace Unsecure Daemons with OpenSSH

The Internet, as stated previously, was a much friendlier place when it was first being developed. Security was not at the forefront of anyone’s mind when connection protocols were being created. Many protocols transmit all data without encryption or obfuscation; the data they wish to send is exactly what they send. While this works and is fine in a completely safe environment, it is not a good idea when you’re sending sensitive information between systems.

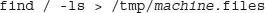

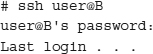

As an example, let’s suppose we’re using computer A in Chicago and we wish to connect to a remote computer B in London, UK, to check some information we have stored there. We open a shell on A and run Telnet:

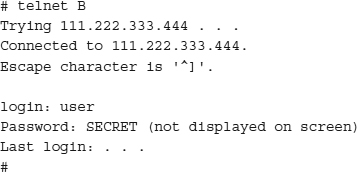

You are now logged into your server in London. Unfortunately, your login ID and password were just sent in clear, unencrypted text halfway around the world. To a packet sniffer, the traffic looks like this:

Anyone who has compromised a router anywhere along your path, or breached security in other ways, has just obtained your login credentials. This scenario unfortunately happens all too often, but it can be avoided.

Many of the unsecured protocols, such as Telnet, FTP, and the r* commands (rsh, rexec, rlogin, etc.), can be replaced with OpenSSH to provide similar functionality but with much higher security. Let’s look at the example again using OpenSSH.

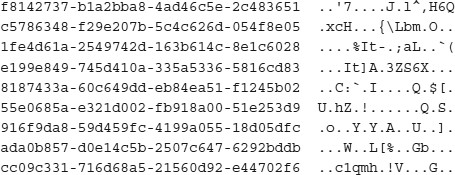

We have just accomplished the same task—logging into a server in London—but none of our personal information was readily available. OpenSSH uses OpenSSL to transparently encrypt and decrypt all information that is sent. Although everything looks the same to you, an eavesdropper on the Internet will not get any useful information from this session. All they will see is encrypted gobbledygook, as shown here:

Using OpenSSH requires that the SSH daemon (sshd) is installed and running on the remote server and the SSH client (ssh) is installed on the local system. To get OpenSSH, go to www.openssh.org. Look at the left column and click your operating system under the “For Other OSs” heading unless you are running OpenBSD. You are required to have OpenSSL installed before installing OpenSSH.

TIP Before downloading and installing OpenSSH, make sure your vendor has not already supplied it with the operating system. As more people are starting to consider security, more vendors are starting to include secured protocols.

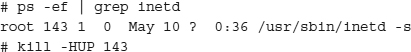

If your installer package didn’t already do so, create a startup script to launch sshd on system startup so it is always available, and then deactivate Telnet and the r* commands in /etc/inetd.conf. To make inetd reread its configuration file, send it a HUP signal (short for “hang up,” which tells the process to reload its configuration): find the PID (process identifier, a unique number associated with a running process) of inetd and type kill -HUP PID, substituting the inetd PID on your system for PID. Your server will then no longer accept unencrypted remote logins.
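A minimal sketch of the inetd.conf change, assuming the usual layout in which each line begins with the service name. The helper name disable_inetd_services is hypothetical, and the service names shell, login, and exec are the conventional inetd entries for rsh, rlogin, and rexec; confirm them against your own file.

```sh
# Comment out the named services in an inetd configuration file and
# write the edited configuration to standard output. The input file is
# not modified.
disable_inetd_services() {
    # $1 = configuration file; remaining arguments = service names
    conf=$1
    shift
    awk -v services="$*" '
        BEGIN {
            n = split(services, list, " ")
            for (i = 1; i <= n; i++) off[list[i]] = 1
        }
        ($1 in off) { print "#" $0; next }   # disable matching service
        { print }                            # keep everything else
    ' "$conf"
}

# Example (work from a backup copy, then signal inetd):
#   cp /etc/inetd.conf /etc/inetd.conf.bak
#   disable_inetd_services /etc/inetd.conf.bak telnet shell login exec \
#       > /etc/inetd.conf
#   kill -HUP PID      # PID = process ID of inetd on your system
```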

To connect to a remote machine with SSH instead of Telnet, use the general form of the command: ssh user@machine where user is your user ID on the remote system machine.

To use Secure FTP (SFTP) instead of FTP, first comment out the ftp line in /etc/inetd.conf to disable FTP service. Then use the command sftp user@machine to connect to the remote machine and transfer files. Using SFTP instead of FTP provides not only the benefit of your username and password being encrypted, but all of the data that you transfer is also encrypted. This makes SFTP a good link in your chain for transmitting sensitive information to a remote server.

By using OpenSSH in this manner, you can disable the FTP, Telnet, and r* services, and you can close ports 20, 21, 23, and the many ports used by the r* services on your firewall to stop this type of traffic from ever getting through to your server. Port 22 will be substituted for all of these ports.

Use TCP Wrappers

TCP Wrappers is a utility that allows you to specify who is allowed to connect to a service over the network and who is not. TCP Wrappers is only useful for daemons that are invoked by inetd, unless the application or daemon was compiled with libwrap support. To check whether the daemon was compiled with libwrap support, use the following command:

Replace the word daemon with the path and name of the daemon you are checking. If grep finds a match, the service should support TCP Wrappers via /etc/hosts.allow and /etc/hosts.deny.
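One way such a check might be written is sketched below. It is an assumption rather than the original command: it first looks for libwrap among the shared libraries reported by ldd, then falls back to searching the binary for the hosts_access symbol that the library provides. The function name has_libwrap is hypothetical.

```sh
# Succeed (exit status 0) if a daemon appears to have libwrap support.
has_libwrap() {
    # $1 = path to the daemon binary
    # Dynamically linked case: libwrap shows up in the library list.
    # Only run ldd on binaries you already trust.
    if ldd "$1" 2>/dev/null | grep -q libwrap; then
        return 0
    fi
    # Statically linked case: look for the hosts_access symbol name
    # inside the binary itself.
    grep -q hosts_access "$1"
}

# Example:
#   has_libwrap /usr/sbin/sshd && echo "honors hosts.allow and hosts.deny"
```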

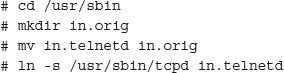

Move any daemons you want to be under the control of TCP Wrappers to in.orig, and create symbolic links to tcpd with the daemon name, as shown in the following example to migrate in.telnetd:

That’s it. All requests for Telnet (or any other services you move) to your server are now filtered by TCP Wrappers. Read the documentation that comes with TCP Wrappers to learn more about setting up the /etc/hosts.allow and /etc/hosts.deny files, along with other useful information.
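The migration described above can be sketched as follows. The function name wrap_daemon and the example paths are assumptions. The sketch also assumes your tcpd was built to look for the real daemons in the in.orig directory, which is a build-time setting in TCP Wrappers that you should verify.

```sh
# Move a daemon into an in.orig subdirectory and leave a symbolic link
# to tcpd under the daemon's original name.
wrap_daemon() {
    # $1 = directory holding the daemon, $2 = daemon file name,
    # $3 = path to the tcpd binary
    mkdir -p "$1/in.orig" &&
        mv "$1/$2" "$1/in.orig/$2" &&
        ln -s "$3" "$1/$2"
}

# Example (paths are assumptions for illustration):
#   wrap_daemon /usr/sbin in.telnetd /usr/sbin/tcpd
```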

Use a Software Firewall

Software firewalls are available for some flavors of Unix. For example, iptables allows packet filtering and network address translation via command-line configuration on some flavors of Linux. Filtering incoming and outbound traffic can be useful in blocking some types of network-borne attacks.
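As an illustration of command-line packet filtering, the sketch below prints a small inbound rule set instead of applying it, so you can review the commands before running them as root. The rule set itself (allow loopback, allow replies to established connections, allow a list of TCP ports, drop everything else) is an assumed example policy, and print_firewall_rules is a hypothetical helper.

```sh
# Print iptables commands for a simple default-deny inbound policy.
# Nothing is applied; review the output, then run it as root.
print_firewall_rules() {
    # $@ = TCP ports to leave open, for example 22 80
    echo "iptables -A INPUT -i lo -j ACCEPT"
    echo "iptables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT"
    for port in "$@"; do
        echo "iptables -A INPUT -p tcp --dport $port -j ACCEPT"
    done
    # Set the drop policy last so the accept rules are already in place.
    echo "iptables -P INPUT DROP"
}

# Example:
#   print_firewall_rules 22 > /tmp/fw.sh    # review, then: sh /tmp/fw.sh
```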

Consider Replacing Sendmail

E-mail has grown from its humble beginnings—initially it was not even its own protocol but was part of FTP. As more people started to use e-mail, it grew into its own protocol, named Simple Mail Transfer Protocol (SMTP), and sendmail arose to handle this new protocol. Over time, people started demanding more options and control over their e-mail systems. As a result, sendmail grew in complexity. This cycle continued for many years and resulted in a system that is so complicated that simple changes are beyond the ability of most people who have not devoted a large amount of time to learning sendmail.

This situation has also resulted in a very complicated code base to support the myriad options that sendmail offers. Although having these options can be beneficial, you also have the potential for many different types of exploits against this server. If you look through the past vulnerability alerts from places like CERT, you will see that a high percentage of them deal with sendmail in some manner. Sendmail can be relatively secure if you keep on top of bug fixes and security releases and know enough about sendmail rules and configurations to lock it down. Because of sendmail’s complicated configuration process, though, many people never learn enough to do this effectively.

Another major concern with sendmail is that it must be run as root to work. This restriction, coupled with the large complexity of the code base, makes it a popular target for attackers. If they succeed in exploiting sendmail, they have gained root access to your machine.

Also ask yourself if your server needs to be running sendmail (or a replacement) at all. Most systems do not—the only reason your system needs this service is if it will accept inbound e-mail for local users from the network. The majority of systems running an SMTP server do not need to and are doing so simply because that is the way the OS set up the system. If you do not need to accept inbound e-mail, do not run an SMTP service on your machine.

This situation has not gone unnoted in the software community, and several people have written replacements for sendmail that try to address its shortcomings. Some of these replacement programs work quite well and, as such, have collected a devoted following of users. We discuss the two most popular in the following sections: postfix and qmail.

Postfix Postfix is a sendmail replacement written by Wietse Venema, the same person who wrote TCP Wrappers and other applications, and it can be found at www.postfix.org. The two overriding goals when writing Postfix were ease of use and security, and both have largely been achieved. Getting basic e-mail services running under Postfix can be easily accomplished by almost every administrator, even if he or she has not worked extensively with SMTP previously. Another benefit to Postfix is that there are very few known security exploits.

Locate your current sendmail application. If you’re not sure where it is, look in directories such as /usr/sbin, /usr/libexec, and others. You can always run the command find / -type f -name sendmail -print to locate it for you if you cannot find it. Once you have located sendmail, rename it to something else. Wietse’s suggestion is to rename it to sendmail.OFF. Locate the applications newaliases and mailq, and rename them in a similar fashion.

Now you need to create a “postfix” user ID and “postfix” group ID for Postfix to use when running. At the same time, create an additional “postdrop” group that is not assigned to any ID, not even the Postfix ID. The reason is the one given earlier for sendmail: if attackers manage to exploit Postfix, they will not gain any elevated privileges. Make sure to use IDs that are not being used by any other process or person. The account does not need a valid shell, a valid password, or a home directory.

NOTE An invalid password refers to a password that cannot be used, such as one created by entering *NP* in the password field of /etc/passwd or /etc/shadow if your system uses shadow passwords. It doesn’t refer to an account without a password at all.

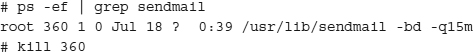

Edit /etc/aliases to add the alias postfix: root. Now you can install Postfix. When the install is finished, edit the /etc/postfix/main.cf file and make sure you change at least the variables myorigin, mydestination, and mynetworks, and any others you feel are appropriate for your environment. When you are finished, save the file and kill off the current running sendmail daemon, as shown here:

Now restart the old sendmail program in queue mode only to flush out any old e-mail that might be queued for sending, and then start Postfix:

Postfix is now installed and running on your system. Any time you make changes to the configuration files, run /usr/sbin/postfix reload to have Postfix rescan the configuration and incorporate the new changes without affecting mail delivery.

Qmail Qmail is a sendmail replacement written by Dan Bernstein. The two overriding goals for qmail were security and speed. Qmail also incorporates a simple mailing-list framework to make running your own mailing list a fairly simple process. Dan suggests using djbdns as a DNS replacement, as well.

Configuring qmail is more complicated than configuring Postfix. First you must make the qmail home directory, which, by default, is /var/qmail, and then create qmail’s group and user IDs. Read INSTALL.ids for more information on creating the necessary IDs. Then edit conf-qmail in the source directory to make any changes needed for your environment.

Because of the many complications involved in setting up qmail, you must read the README and INSTALL* files to make decisions about your environment and perform the necessary steps. Failure to do this will result in a qmail implementation that does not work. For further information on using qmail, go to www.lifewithqmail.org and www.qmail.org.

Configure Secure Settings

The next step in the hardening process is to replace default, open settings with more secure, limited settings.

Do Not Run Processes Using root Privilege

Many services running on your server do not need root access to perform their functions. Often, they do not need any special privileges other than the ability to read from, and possibly write to, the data directory. However, Unix allows only processes run by root to open a TCP/IP port below 1024, and most of the well-known ports are below 1024, so your daemons must be started as root to open their ports.

This dilemma has a few workarounds. The first and safest is not to run that service at all. If the daemon isn’t running, then it does not need to run as root. This is not always practical, however. Sometimes you need to run the service provided by the daemon.

In that event, create a dedicated user ID to run the daemon, and make it as restrictive as possible. Make only the directory used by that ID writable by that ID, and give the ID no special elevated permissions. Then change the startup script so the daemon runs as this new user ID.

Now if an attacker exploits your service and compromises your daemon, the attacker will gain access to an unprivileged account and must do further work to gain root access—giving you more time to track and block him or her before much damage occurs.
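To see which daemons are still running as root and are therefore candidates for a dedicated user ID, a filter along these lines can help. The helper name root_owned is hypothetical, and it expects input with the owning user in the first column and the command name in the second.

```sh
# Read "user command" pairs on standard input and print the distinct
# command names that are running as root.
root_owned() {
    awk '$1 == "root" { print $2 }' | sort -u
}

# Example (the empty "=" headers suppress the ps column titles):
#   ps -e -o user= -o comm= | root_owned
```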

Use chroot to Isolate Processes

Many services, because of practical necessities, cannot be locked down as much as you would like. Maybe they must be run as root, or you cannot change their port assignment, or perhaps you have a completely different reason. In that case, all is not lost—you can still isolate the service to a degree using the chroot command. Please note that you can (and should if at all possible) combine chroot with other forms of security, such as changing user IDs, swapping ports, and using firewalls.

Using chroot causes the command or daemon that you execute to behave as if the directory you specified was the root (/) directory. In practical terms, that means that the daemon, even if completely compromised and exploited, cannot get out of the virtual jail you have assigned it to.

To take a practical example, let’s use chroot to isolate in.ftpd so you can run an anonymous FTP service without exposing your entire machine. First, we must create a file system to hold our pseudo root; let’s call it /usr/local/ftpd. Once you create the file system, you need to create any directories underneath it that in.ftpd expects. One common directory is etc, and you will need an etc/passwd and (if your system uses it) an etc/shadow file, as well as etc/group, and so on. You will also need a bin directory containing commands like ls so people can get file listings of the directories, and a dev directory containing the devices that FTP needs to use to read from and write to the network, disks, and the like. Also make sure to set up a directory in which the daemon can write logs.

NOTE Because the chroot process varies greatly from system to system and daemon to daemon (different systems need different directories, files, permissions, and other things), we will not go into detail here. Please search the Internet for specific pages dedicated to using chroot on your operating system for the daemon you are trying to isolate, and use the instructions presented here as merely a guideline of what you need to do.

Once you have built up the pseudo-root file system so it contains everything you need to run the daemon, review it carefully to verify that everything inside has the minimal permissions it needs to function. Every directory and file that should not be changed should have the write bit disabled; everything that does not need to be owned by the daemon should not be, and so on.
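The directory layout and the final permission pass can be sketched as below. This is only a skeleton under assumptions: the function names and the var/log location for logs are hypothetical, and populating etc, bin, and dev (password files, ls, device nodes) is system-specific and left as comments.

```sh
# Create the empty directory skeleton for a pseudo-root.
make_jail_skeleton() {
    # $1 = top of the pseudo-root, for example /usr/local/ftpd
    mkdir -p "$1/etc" "$1/bin" "$1/dev" "$1/var/log"
}

# After populating the jail, remove the write bit from directories that
# should never change and leave only the log directory writable by its
# owner.
lock_jail_dirs() {
    # $1 = top of the pseudo-root
    chmod 555 "$1/etc" "$1/bin" "$1/dev" &&
        chmod 755 "$1/var/log"
}

# Example (run as root):
#   make_jail_skeleton /usr/local/ftpd
#   ... copy in trimmed etc/passwd and etc/group, bin/ls, and create
#   ... the device nodes your daemon needs (system-specific)
#   lock_jail_dirs /usr/local/ftpd
```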

The syntax of chroot is chroot newroot command. Any arguments passed to command that start with / will be read from the newroot directory. In this example, you start up in.ftpd with the root directory /usr/local/ftpd:

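Notice that chroot changes the root directory before it runs the command, so the command path is resolved inside newroot, not against the actual root of the system. The in.ftpd binary, along with any shared libraries it needs, must therefore exist in the pseudo-root file system (here, as /usr/local/ftpd/usr/sbin/in.ftpd).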

Now your FTP service is isolated to one directory on your server. Even if attackers completely compromise the service, they will only gain control of the chroot file system that in.ftpd is running in and not your entire machine. If you do not have exploitable applications in your pseudo root, then it will be almost impossible for attackers to elevate their permissions. Even if they do, the jail remains an extra barrier, although a process that holds root privileges inside a chroot can often find a way out, so combine chroot with an unprivileged user ID whenever you can.

Audit Your cron Jobs

Do you know what jobs your system is running unattended? Many operating systems come with a variety of automated tasks that are installed and configured for you automatically when the system is installed. Other jobs get added over time by applications that need things run periodically.

To stay on top of your system, you need to have a clear idea of what it is running. Periodically audit your crontab files and review what is being run. Many systems store their cron files in /var/spool/cron. Some cron daemons additionally support cron.hourly, cron.weekly, cron.monthly, and cron.yearly files, as well as a cron.d directory. Use the man cron command to determine your cron daemon’s exact capabilities.

Examine all of the files in each directory. Pay attention to who owns each job, and lock down cron to only user IDs that need its capability if your cron daemon (crond) supports that. Make note of each file that is running and the times that it runs. If something is scheduled that you do not know about, research to determine exactly what the file does and whether you need it. If users are running something you do not feel they need, contact them and ask for their reasons and then proceed accordingly.

Keep track of your cron jobs and periodically examine them to see if any changes have been made. If you notice something has changed, investigate it and determine why. Keeping track of what your system is doing is a key step in keeping your system secured.
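One way to notice changes between audits is to keep a checksum snapshot of the cron files and compare it with a fresh one. The sketch below assumes the POSIX cksum utility is acceptable for change detection (it is not a cryptographic checksum); the function names and snapshot file names are hypothetical.

```sh
# Print a checksum line for every file under the given cron directories,
# sorted by file name. Directories that do not exist are skipped.
snapshot_cron() {
    # $@ = cron directories, for example /var/spool/cron /etc/cron.d
    for dir in "$@"; do
        [ -d "$dir" ] || continue
        find "$dir" -type f -exec cksum {} \;
    done | sort -k 3
}

# Succeed (exit status 0) when two snapshots differ.
cron_changed() {
    # $1 = earlier snapshot file, $2 = later snapshot file
    ! cmp -s "$1" "$2"
}

# Example:
#   snapshot_cron /var/spool/cron /etc/cron.d > /root/cron.today
#   cron_changed /root/cron.baseline /root/cron.today && echo "investigate"
```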

Scan for SUID and SGID Files

All systems have set user ID (SUID) and set group ID (SGID) files. These files are applications, scripts, and daemons that wish to run as a specific user or group instead of as the user ID or group ID of the person running them. One example is the top command, which runs with elevated permissions so it can scan kernel space for process information. Because most users cannot read this information with their default permissions, top needs to be run with higher permissions to be useful.

Many operating systems allow you to specify that certain disks should not support SUID and SGID, usually by setting an option in your system’s mount file. In Solaris, you would specify this with the nosuid option in /etc/vfstab. For example, to mount /users with nosuid on disk c2t0d0s3, you would enter this line:

This mounts /users at boot and disables SUID and SGID applications. The applications are still permitted to run, but the SUID and SGID bits are ignored. Disable SUID and SGID on all file systems that you can as a good security practice.
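For reference, a Solaris /etc/vfstab entry has seven fields: device to mount, device to fsck, mount point, file system type, fsck pass, mount at boot, and mount options. The sketch below builds such an entry for the example above. The ufs file system type and the fsck pass of 2 are assumptions, and vfstab_nosuid_entry is a hypothetical helper.

```sh
# Print a vfstab entry that mounts a disk slice at boot with nosuid.
vfstab_nosuid_entry() {
    # $1 = disk slice (for example c2t0d0s3), $2 = mount point,
    # $3 = file system type (assumed to be ufs when omitted)
    printf '/dev/dsk/%s\t/dev/rdsk/%s\t%s\t%s\t2\tyes\tnosuid\n' \
        "$1" "$1" "$2" "${3:-ufs}"
}

# Example:
#   vfstab_nosuid_entry c2t0d0s3 /users
```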

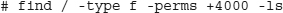

Still, you will need to scan your system periodically and get a list of all SUID and SGID files that exist. The find test for SUID files is -perm -4000, and for SGID files it is -perm -2000 (older versions of GNU find also accepted the form -perm +4000, which has since been removed). To scan for all SUID files on your entire server, run this command:

The -type f option only looks at “regular” files, not directories or other special files such as named pipes. This command lists every file with the SUID bit set on. Carefully review all of the output and verify that everything with SUID or SGID really needs it. Often you will find a surprise that needs further investigation.
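A minimal sketch of such a scan, using the POSIX form -perm -mode, which matches files that have at least the given permission bits set. The function name find_setid_files is hypothetical, and errors from unreadable directories are discarded.

```sh
# List regular files under a directory that have the SUID (4000) or
# SGID (2000) bit set.
find_setid_files() {
    # $1 = directory to scan (use / for the whole server)
    find "$1" -type f \( -perm -4000 -o -perm -2000 \) -print 2>/dev/null
}

# Example:
#   find_setid_files / > /root/setid.today
```

Saving the output and comparing it with the previous scan makes new SUID or SGID files stand out.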

Keep . out of Your PATH

As root, you must be positive that the command you think you are running is what you are really running. Consider the following scenario, where you are logged in as root, and your PATH variable is .:/usr/bin:/usr/sbin:/bin:/sbin.

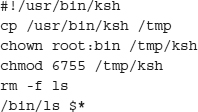

User A creates a script in his directory named ls that contains these commands:

Now user A calls you and informs you that he is having a problem with something in his home directory. You, as root, cd to his directory and run ls -l to take a look around. Suddenly, unbeknownst to you, user A now has a shell he can run to gain root permissions!

Situations like these happen frequently but are easy to avoid. If “.” were not in your path, you would see a script named ls in his directory instead of executing it.
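A quick check for this condition can go in root's login profile. The sketch below uses a hypothetical function name and treats an empty PATH entry (a leading, trailing, or doubled colon) the same as “.”, because the shell also interprets an empty entry as the current directory.

```shell
# Return 0 (true) if a PATH-style string includes the current directory.
path_has_cwd() {
    # $1: PATH-style string to test; defaults to the current PATH
    p=${1-$PATH}
    case ":$p:" in
        *:.:* | *::*) return 0 ;;   # "." or an empty entry
        *) return 1 ;;
    esac
}

# Example use:
#   path_has_cwd && echo "WARNING: current directory is in PATH" >&2
```

The check does not catch other relative entries such as ./bin; every entry in root's PATH should be an absolute directory.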

Audit Your Scripts

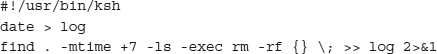

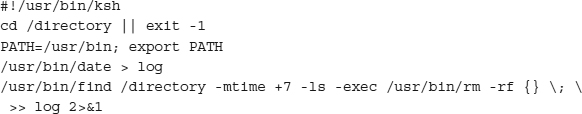

When you are writing a script, always specify the full path to the application you are using. Consider the following script:

It is only three lines long, and only contains two lines that do anything, yet there are many security holes:

• It does not specify a path.

• It does not give the full path to date.

• It does not give the full path to find.

• It does not give the full path to rm.

• It performs no error checking.

• It does not verify that the directory is correct.

Let’s take another look at the script to see how you can fix some of these problems.

The second line, cd /directory || exit -1, tells ksh to attempt to cd to /directory. If the command fails, the script exits with a -1 return code (which ksh reports as 255, because exit statuses are limited to the range 0 to 255). The ksh operator || means “if the previous command fails,” and && is the ksh operator that means “if the previous command succeeds.” As an additional example, the command touch /testfile || echo Could not touch creates a file named /testfile, or if the file cannot be created (perhaps you do not have enough permissions to create it), then the words “Could not touch” are displayed on the screen. The command touch /testfile && echo Created file creates the file /testfile and only displays “Created file” if the touch command succeeded. The condition you’re checking for determines whether you use || or &&.

If the script proceeds past that line, you’re guaranteed to be in /directory. Now you can explicitly specify your path to lock down where the system searches for commands if you forget to give the full path. It does nothing in this small script, but it is an excellent habit to get into, especially when you are writing longer and more complicated scripts; if you forget to specify the full path, there is a smaller chance that the script will invoke a Trojan.

Next you call date by its full name: /usr/bin/date. You also fully specify /usr/bin/find and /usr/bin/rm. By doing this, you make it much harder for a malicious person to insert a Trojan into the system that you unwittingly run. After all, if they have high enough permissions to change files in /usr/bin, they probably have enough permissions to do anything else they want.

When writing a script, always follow these simple rules:

• Always specify a path.

• Always use the full path to each application called.

• Always run error-checking, especially before running a potentially destructive command such as rm.
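The three rules can be seen together in a short cleanup routine. This is a sketch under stated assumptions, not the script discussed above: the function name and its arguments are hypothetical, and the locations of find and rm differ between systems (rm is /usr/bin/rm on some and /bin/rm on others), so confirm them before use.

```shell
# Delete regular files older than a given number of days from one directory.
purge_old_files() (
    # The parentheses run the body in a subshell, so the PATH change and
    # the cd below do not affect the caller.
    # $1: directory to clean, $2: minimum age in days
    PATH=/usr/bin:/bin
    export PATH

    FIND=/usr/bin/find   # assumed location; adjust for your system
    RM=/bin/rm           # assumed location; /usr/bin/rm on some systems

    [ $# -eq 2 ] || exit 2                      # refuse to guess at arguments
    [ -x "$FIND" ] && [ -x "$RM" ] || exit 3    # the tools must be where we expect
    cd "$1" || exit 1                           # never delete from the wrong place

    "$FIND" . -type f -mtime +"$2" -exec "$RM" -f {} \;
)

# Example use (directory is hypothetical):
#   purge_old_files /var/tmp/reports 7 || echo "cleanup failed" >&2
```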

Know What Ports Are Open

Before you expose a system to the world, you need to know what ports are open and accepting connections. Often something is open that you were not aware of, and you should shut it down before letting people access your server. Several tools let you know what your system is exposing.

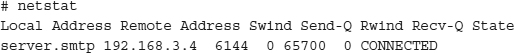

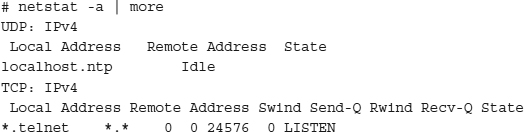

Using Netstat

One tool that is bundled with almost every operating system is netstat. Netstat is a simple tool that shows you network information such as routes, ports, and connections. Netstat displays all ports with their human-equivalent name from /etc/services if the port is defined, making it easier to parse the output. This is a good reason to make sure that /etc/services is kept up to date on your system. Use the man command to discover netstat’s capabilities on your system.

We will go through a simple example here:

In this example, someone from the IP address 192.168.3.4 is connected to your server’s SMTP service. Should you be running SMTP? Should this person be allowed to connect to it?

In the following output, your system is advertising the NTP service. Is it an NTP server? Should others be allowed to connect to that service? Uh oh, big hole. You have Telnet wide open. You should at least have TCP Wrappers protecting it. Should you deactivate it?

Take the time to learn netstat. It will provide you with a wealth of network information if you learn how to ask, and it will let you see exactly who is connected to your system at any given time.
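To pull just the listening sockets out of netstat output, a small filter helps. The sketch below assumes the column layout printed by netstat -an on Linux and BSD systems, where the local address is the fourth field and the state is the last; Solaris arranges its columns differently, so the field number would need to change there. The function name is hypothetical.

```shell
# Read "netstat -an" style output on stdin and print the local address
# of every socket in the LISTEN state.
listening_sockets() {
    awk '$NF == "LISTEN" { print $4 }'
}

# Example use:
#   netstat -an | listening_sockets
```

Each address printed is a service that is accepting connections; anything you cannot account for deserves investigation before the system is exposed.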

Using lsof

Another useful utility is lsof (list open files). It started out as a simple utility to display what processes have files open, but it has evolved to display ports, pipes, and other communications.

Once you have lsof installed, try it out. Just running lsof by itself will show every open file and port on the system. You’ll get a feel for what lsof can do, and it’s also a great way to audit a system quickly. The command lsof | grep TCP will show every open TCP connection on your system. This tool is very powerful and also a great aid when you’re trying to unmount a file system and are repeatedly told that it is busy; lsof quickly shows you what processes are using that file system.

Configure All Your Daemons to Log

Although it seems obvious, keeping a log and replicating it is not useful if your daemons are not logging any information in the first place. Some daemons create log entries by default, and others do not. When you audit your system, verify that your daemons are set to log information. This is one of the things that the CIS benchmarking tool, discussed in “Run CIS Scans,” will look for and remind you about.

Any publicly available daemon needs to be configured to log, and the log needs to be replicated. Try accessing some of your services and see if logs were collected on your log server. If they were not, read the man page for that service and look for the option to activate logging. Activate it and try using the service again. Keep checking all of your services until you know that everything is logging and replicating.

Use a Centralized Log Server

If you are responsible for maintaining multiple servers, then checking the logs on each of them can become unwieldy. To this end, set up a dedicated server to log messages from all of your other servers. By consolidating your logs, you only have to scan one server, saving you time. It also makes a good archive in case a server is compromised; you still have untouched log files elsewhere to read.

To create a central log server, take a machine with a fast CPU and a lot of fast hard-drive space available. Shut down all other ports and services except syslogd to minimize the chance of this system being compromised, with the possible exception of a TCP-wrapped SSH daemon restricted to your workstation for remote access. Then verify that syslogd will accept messages from remote systems. This varies from vendor to vendor. Some vendors make the default behavior to accept messages, and you must turn it off if desired; others make the default to not accept messages, and you must turn it on.

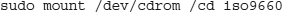

Create a system for archiving older logs and document it. If your logs are ever subpoenaed for evidence, you need to be able to prove that they have not been altered, and you will need to show how they were created. It is suggested that you compress all logs older than one week and replicate them to time-stamped, read-only media, such as a CD.
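The compression step of such an archiving routine might be sketched as follows. The function name and the archive directory are hypothetical, and copying the results to read-only media is left as a separate, documented step.

```shell
# Compress every log file in a directory tree that is older than a given age.
compress_old_logs() {
    # $1: log archive directory, $2: age threshold in days
    # Files that already end in .gz are skipped.
    find "$1" -type f ! -name '*.gz' -mtime +"$2" -exec gzip {} \;
}

# Example use (directory is an assumption): compress logs older than one week.
#   compress_old_logs /var/log/archive 7
```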

Once you have a server in place to accept your logs, you need to start pointing your servers to it. Edit /etc/syslog.conf and determine which messages you wish to replicate. At the very least, you should replicate the emerg, alert, crit, err, and warning messages, and more if you think it will be beneficial. When you know what you want replicated, add one or more lines like the following to /etc/syslog.conf:

In this example, we are replicating all emerg, alert, crit, err, warning, and notice messages to the remote server.

NOTE You can archive logs onto a remote server and keep them locally at the same time. You can also replicate to more than one log server. Syslog.conf is scanned for all matching entries—the syslogd daemon does not stop after finding the first one.
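A forwarding rule of this kind pairs a selector with an action of the form @hostname, and the selector *.notice covers notice and every more severe level. The sketch below appends such a rule only if it is not already present. The function name and the host name loghost are hypothetical, and a tab is used between the two fields because traditional syslogd implementations require it.

```shell
# Append "selector<TAB>@host" to a syslog.conf file unless the line already exists.
add_remote_log_rule() {
    # $1: path to syslog.conf, $2: selector such as *.notice, $3: log server name
    rule=$(printf '%s\t@%s' "$2" "$3")
    grep -qxF -- "$rule" "$1" 2>/dev/null || printf '%s\n' "$rule" >> "$1"
}

# Example use (host name is an assumption):
#   add_remote_log_rule /etc/syslog.conf '*.notice' loghost
```

After editing the file, syslogd has to reread its configuration before the rule takes effect; on most systems this is done by sending the daemon a HUP signal or restarting it.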

Run CIS Scans

The Center for Internet Security (CIS) has created a system security benchmarking tool. This tool, which you can download from www.cisecurity.org, will perform an audit of your local system and report on its findings. It looks for both good and bad things and gives you an overall rank at the end of the test. Scanning tools are available for Solaris, HP-UX, Linux, and Windows, as well as Cisco routers.

The nice part about the CIS benchmarks is their explanations. The report does not simply state “You have X, which is bad”; it gives you the reasoning behind why the tool says it’s bad, and it lets you decide for yourself whether to disable the “bad thing” or keep it. The benchmark tool checks a great many things that you might not have thought of, giving you a quick detailed report of your system.

Download the CIS archive and unpack it. Read the README file and the PDF file. (The PDF file offers great reference material on system security.) Install the package by following the instructions in the README file, and when finished, you should have an /opt/CIS directory with the tool installed.

To get a snapshot of your system, run the command cis-scan. Depending on the speed of your server and the number of drives attached to it, the scan can take a long time to complete. When finished, you will have a file named cis-ruler-log.YYYYMMDD-HH:MM:SS.PID. That file contains the summary of your system, and it shows you the results of all of the tests. The file does not contain a lot of information—it is meant to be used as an index to the PDF file that comes with the scanning tool.

Go through the ruler-log file line by line, and if there is a negative result, determine whether you can implement the change suggested in the PDF file. Most of the changes can be implemented without affecting the server’s operation, but not all of them. Beware of spurious negative results as well; you might have PortSentry watching port 515 for lp exploits, which causes the CIS tool to report erroneously that you have lp running. The higher the number at the end of the report, the more “hardened” your system is.

This is a great tool to have in your security arsenal and to run periodically on your servers to keep them healthy. Check back on the CIS web site from time to time, as the tools are constantly evolving and changing.

Keep Software Up to Date

Every piece of software has vulnerabilities. Most vendors run audits against the code and remove any that are found, but some are inevitably released into the world. Certain people spend a great deal of time trying to discover the ones that remain; some do it to report them to vendors, but others do it for their own personal use.

In any event, vulnerabilities are occasionally found and patches are released to fix them. Unless the vulnerability is severe, or a known exploit exists in the wild, there is usually not much fanfare announcing the release of these patches. You are responsible for checking regularly to see what patches are available from your vendor and whether any of them apply to you.

Many vendors supply a tool to help you keep on top of your system patches. HP-UX has the Software Update Manager, Solaris has patchdiag and patchpro, AIX uses SMIT, and so on. Run your diagnostic tool at least once a month to see what new patches are available for your system, and determine if you need to install them. Set aside at least one hour each Sunday afternoon (more if you are allowed) as dedicated system downtime, and use that time for installing patches and performing other needed maintenance.

You should also make it a habit to go to the web site for each application you have installed to see if any bug fixes or security patches have been released for those applications. Use the list of applications you created earlier to determine whether any of the patches apply to you. Remember to update your list if you apply any patches.

Place Servers into Network Zones

All computers should be separated from each other based on their function, their sensitivity or criticality, and their exposure to the Internet. You should always assume that Internet-facing systems will eventually become compromised by attackers and limit their access accordingly. Ask yourself: if an Internet-facing server is compromised, where else can the attacker direct an attack from that server? NIST publication SP800-68 has some recommendations on how to decide which systems should go into differing zones.

Use an application-layer (layer seven) firewall to filter traffic between zones. These firewalls inspect traffic at the application layer and check that characteristics of traffic match those accepted by the application. The packets are dropped if they do not meet application rules.

Strengthen Authentication Processes

You can do three things to increase the security of authentication in the Unix world. First, improve security on the network by developing a strong password policy and a strong training program that teaches users their responsibility to create, use, and protect strong passwords. Second, and better yet, use some other form of authentication. Third, use additional technology and physical security to protect password databases and authentication material.

Require Strong Passwords

Recognize that your network is only as secure as the least secure part. Users are that least secure part, and anything done to strengthen that part can have an enormous effect on the baseline security of your systems. Weak passwords are easily guessed and/or compromised. Software exists that can rapidly attack the password database, capture authentication material as it crosses the network, and bombard remote logon software with password guesses.

Where possible, insist on long passwords. Set a minimum password length, keep a history of passwords (and don’t let them be reused), require a password be changed after so many days and not before so many days, and require complex passwords (composed of three out of four things: upper- and lowercase characters, numbers, and special characters).
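The length and three-out-of-four complexity rules can be expressed as a short check. This is only an illustrative sketch with a hypothetical function name; on a real system these rules are enforced by the platform's own password policy mechanism, and password history and aging cannot be checked this way.

```shell
# Return 0 (true) if a candidate password meets a minimum length and
# contains at least three of: uppercase, lowercase, digit, special character.
meets_policy() {
    # $1: candidate password, $2: minimum length
    pw=$1
    min=$2
    [ "${#pw}" -ge "$min" ] || return 1
    classes=0
    for pattern in '[A-Z]' '[a-z]' '[0-9]' '[^A-Za-z0-9]'; do
        # LC_ALL=C keeps the bracket ranges strictly ASCII.
        if printf '%s' "$pw" | LC_ALL=C grep -q "$pattern"; then
            classes=$((classes + 1))
        fi
    done
    [ "$classes" -ge 3 ]
}

# Example use:
#   meets_policy "$candidate" 10 || echo "password does not meet policy" >&2
```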

The more complex a password is, the harder it is to crack. Teach users how to create complex passwords. You’ll also find that the historical method of substituting numbers for letters (as in Pa$$w0rd) is really no different from using a dictionary word. The password cracking tool writers know all about that old chestnut, so they long ago built those substitutions into their word lists. Better ways to create strong (but easy to remember) passwords are listed here, with examples (but don’t use these examples in real-life passwords, since they are written down here for all to see).

• Use the first one or two letters of each word in a phrase, song, or poem you can easily remember. Add a punctuation mark and a number. Thus, “Somewhere over the rainbow” can make a password like SoOvThRa36!

• Use intentionally misspelled words with a number or punctuation mark in the middle. This can make a password like Sunnee#Outcide.

• Alternate between one consonant and one or two vowels, and include a number and a punctuation mark. This provides a pronounceable nonsense word that you can remember. For example, Tehoranuwee7.

• Interlace two words or a word and a number (like a year) by alternating characters. Thus, the year 2012 and the word Stair become S2t0a1i2r.

• Or, my favorite because it’s easy for me to remember, choose two short words that aren’t necessarily related, and concatenate them with a number or punctuation character between them, as in Better7Burger. Better yet, capitalize a different letter, as in betTer7burGer.
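To make the interlacing method in the list above concrete, the following sketch alternates characters from two strings. The function name is hypothetical, and the code only illustrates the technique; as already noted, the example values printed in this chapter should not be used as real passwords.

```shell
# Print two strings interlaced character by character, starting with the first.
interlace() {
    # $1: first word, $2: second word or number
    a=$1
    b=$2
    out=
    while [ -n "$a" ] || [ -n "$b" ]; do
        if [ -n "$a" ]; then
            rest=${a#?}               # everything after the first character
            out=$out${a%"$rest"}      # append the first character
            a=$rest
        fi
        if [ -n "$b" ]; then
            rest=${b#?}
            out=$out${b%"$rest"}
            b=$rest
        fi
    done
    printf '%s\n' "$out"
}

# Example use, matching the example in the list above:
#   interlace Stair 2012    # prints S2t0a1i2r
```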

You already know that any combination of your name, the names of any family or friends, names of fictional characters, operating system, hostname, phone number, license or social security numbers, any date, any word in the dictionary, passwords of all the same letter, simple patterns on the keyboard, like qwerty, any word spelled backward, or any variation on the words “password” or a company name, make easily guessed passwords, but this bears constant repeating to your user population.

Use Alternatives to Passwords

Passwords are the weakest link in any security system. Despite all your warnings, users share them. They get written down, intercepted over the network, or captured by keyloggers. Ultimately, you can’t rely on the secrecy of passwords. In fact, passwords are really a terrible way to identify someone. What if you could only identify your friends by asking them for a secret word? That’s crazy. In reality, you as a person identify other people by noticing unique characteristics about them. Computers have limitations, but they can do more than simply ask for a shared secret.

By now, we really should be using something other than passwords for authentication. Tokens, biometrics, and smart cards, many of them available as third-party products, are better choices than passwords.

Limit Physical Access to Systems

No matter what technical defenses are put into place, if an attacker has physical possession of, or even physical access to, the machine, it is much easier to compromise. Limiting physical access to systems makes an attacker’s job harder.

Chapter 34 covers techniques to accomplish this.

Limit the Number of Administrators and Limit the Privileges of Administrators

How many people know the root password on your system? Is it the same password that’s used on all the other systems in your organization? Built-in administrative accounts with full privileges and shared passwords, like the root account, are one of the greatest weak points in any network environment. The root account bypasses all security mechanisms once it is logged in, and if other people are able to log in to your system as root, they can do anything they want to it. Even worse, you will have no idea who it was because there’s no direct relationship between the root account and a single individual.

That’s why it is important to assign each person a unique account and never to log in as root. You can get root privileges without logging in as root. Everyone should have a regular user account that is assigned to them alone and whose password only they know. If you have other administrators who need root access, require them to use their own regular account first, and then elevate their privileges once they’re logged in. This way, you’ll have an audit trail.

In fact, what you should do is assign a completely random password to the root account, seal it in an envelope, and keep it around only for emergencies. You should never have to log in as root in any normal situation.

Use sudo

The sudo command is easy to use, and it’s really no extra trouble. In fact, using sudo can be easier than logging in directly as root. If you want to run a command with root privilege, just type the word sudo in front of the command, as shown here:

You can also run commands as another user instead of root, by specifying the -u argument:

The /etc/sudoers file controls which users are allowed to run sudo and which commands they can run. Thus, you can grant any user on your system privileges akin to root but only for certain programs or directories. Doing this gives you complete control of what users can do, unlike with root login.
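The two invocations described above, together with a matching /etc/sudoers fragment, might look like the following sketch. The user names alice, bob, and www and the chosen commands are hypothetical. The rule layout (user, host, run-as user in parentheses, command list) is the standard sudoers form, and the file should be edited with visudo so that syntax errors are caught before they take effect.

```text
# Run a command with root privilege:
#   sudo /usr/sbin/lsof
# Run a command as another user with -u:
#   sudo -u www /usr/bin/id

# /etc/sudoers fragment (hypothetical users and commands)
# user   host = (run-as user)  commands
alice    ALL  = (root)         /usr/sbin/lsof
bob      ALL  = (www)          /usr/bin/id
```

With these rules, alice may run only lsof as root and bob may run only id as www; any other command requested through sudo is refused and logged.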

Back Up Your System

Once you have locked down your system the way you want it, be sure to back it up. That may sound obvious, but we’ve all seen that one system everybody relies on, the one that’s been running for years without a problem but suddenly crashes, and there are no backups. And the historical information about who built it and how is long lost. Unix systems are very resilient, and they may run for many years without needing a reboot or maintenance, which can lull people into a false sense of security. Back it up!

Subscribe to Security Lists

To help you stay on top of the latest news in the world of Internet security, you can subscribe to several web sites and mailing lists. Most of them send timely alerts when an exploit is known to exist, along with the steps you can take to block the exploit temporarily and the locations of patch files (if they exist) to correct the problem.

Compliance with Standards

If you are following a specific security framework, here’s how security standards bodies have defined the things you should do to lock down your operating system. In addition, hardening checklists are available from various agencies such as the U.S. National Security Agency (see References).

ISO 27002

ISO 27002 contains the following provisions, to which this chapter’s contents are relevant.

• 10.5.1 Establish reliable backups: determine the level of backup required for each system and data to be backed up, frequency of backups, duration of retention, and test restores to assure the quality of the backups.

• 10.6.1 Separate operational responsibilities, safeguard the confidentiality and integrity of sensitive data on the network, and log and monitor network activities.

• 10.6.2 Use authentication, encryption, and connection controls along with access control, with rights based on user profiles and consistent management of access rights across the network with segregation of access roles.

• 11.2.1 Control privileged (root) access rights and use unique user IDs for each user.

• 11.2.2 Use privilege profiles for each system, and grant privileges based on these profiles.

• 11.2.3 Perform user password management, or use access tokens such as smart cards.

• 11.2.4 Review user access rights, and perform even more frequent review of privileged access rights.

• 11.3.1 Keep passwords confidential and don’t share them, avoid writing down passwords, select strong passwords that are resistant to dictionary, brute-force, or other standard attacks, and change passwords periodically.

• 11.4.1 Provide access only to services that individual users have been specifically authorized to use.

• 11.4.3 Limit access to the network to specifically identified devices or locations.

• 11.4.5 Segregate groups of computers, users, and services into logical network domains protected by security perimeters.

• 11.4.6 Filter network traffic by connection type such as messaging, e-mail, file transfer, interactive access, and applications access.

• 11.5.1 Restrict system access to authorized users.

• 11.5.2 Ensure that unique user accounts are assigned for individual use only, avoiding generic accounts such as “guest” accounts unless no tracking is required and privileges are very limited. Also ensure that regular user activities are not performed from privileged accounts.

• 11.5.3 Enforce use of individual user IDs and passwords. Enforce strong passwords, and enforce password change at set intervals.

• 11.5.4 Restrict and monitor system utilities that can override other controls.

• 11.6.1 and 11.1.1 Restrict access based on a defined access control policy.

• 11.6.2 Segment and isolate systems on the network based on their risk or sensitivity.

• 12.6 Establish a vulnerability management program.

COBIT

COBIT contains the following provisions, to which this chapter’s contents are relevant.

• AI3.2 Implement internal control, security, and audit to protect resources and ensure availability and integrity. Sensitive infrastructure components should be controlled, monitored, and evaluated.

• DS5.3 Ensure that users and their activities are uniquely identifiable. Enable user identities via authentication mechanisms. Confirm that user access rights to systems and data are appropriate. Maintain user identities and access rights in a central repository.

• DS5.4 Use account management procedures for requesting, establishing, issuing, suspending, modifying, and closing user accounts and apply them to all users, including privileged users. Perform regular review of accounts and privileges.

• DS5.7 Make security-related technology resistant to tampering.

• DS5.8 Organize the generation, change, revocation, destruction, distribution, certification, storage, entry, use, and archiving of cryptographic keys to ensure protection against modification and unauthorized disclosure.

• DS5.9 Use preventive, detective, and corrective measures, especially regular security patching and virus control, across the organization to protect against malware such as viruses, worms, spyware, and spam.

• DS5.10 Use firewalls, security appliances, network segmentation, and intrusion detection to manage and monitor access and information among networks.

• DS5.11 Exchange confidential data only over a trusted path with controls to provide authenticity of content, proof of submission, proof of receipt, and non-repudiation of origin.

Summary

The following are the most important things that you can do to secure a Unix environment:

• Harden systems using known configurations, many of which protect the system against known attacks.

• Patch systems and report on those patch statuses.

• Segment the network into areas of trust and provide border controls.

• Strengthen authentication.

• Limit the number of administrators, and limit their privileges.

• Develop and enforce security policy.

References

Barrett, Daniel, and Richard Silverman. Linux Security Cookbook. O’Reilly, 2003.

Bauer, Michael. Linux Server Security. O’Reilly, 2005.

Garfinkel, Simson, and Gene Spafford. Practical Unix & Internet Security. O’Reilly, 2003.

Loza, Boris. UNIX, Solaris and Linux: A Practical Security Cookbook. AuthorHouse, 2005.

Turnbull, James. Hardening Linux. Apress, 2005.