Weapons of Our Warfare

Next-Generation Techniques and Tools for Detection, Identification, and Analysis

Information in this chapter

• Intrusion Detection Systems and Intrusion Prevention Systems

Introduction

Defending against next-generation threats and attacks is going to require more than your traditional firewall, antivirus (AV), and intrusion detection systems (IDSs). These technologies provided tremendous benefit in the 1990s and the very early 2000s, and most security professionals still consider them best practices. However, when used in isolation, best practices that provide access control, segmentation, and the detection of and notification about malware on your network often act too late: depending on the cyber actor’s ability to exfiltrate information outside your infrastructure, the damage has already been done. Before we dive into advanced concepts, let us take a moment to address a few security technologies that you need to understand, as they may not provide as much security as you once thought.

Legacy Firewalls

This is the most common network security product, and you will find one in almost every organization. In an article I wrote while I worked for McAfee, I asked, “Who has ever been fired for buying and deploying a firewall?” I am sure very few people have been fired for buying a firewall, as a firewall is a best practice and is considered a trusted networking device by most teams that manage networks. After a breach, we sometimes hear the executive team mutter, “Don’t we have a firewall?” or “They got past the firewall?” This is no fault of the executive team, as they were led to believe that a firewall would really protect them. Legacy firewalls that have not been upgraded with “next generation” capabilities lack the intelligence and ability to stop today’s attacks. We are not suggesting that you do not need a firewall; access control and segmentation are key, and you would not build a house without installing a door. The legacy model worked well in the 1990s and very early 2000s, when the Internet was fairly static and attackers tried to find their way into your infrastructure through the firewall. Today, nefarious cyber actors have shifted that paradigm by using your corporate users as pawns to carry out their activity. Because attackers now use a different attack vector, they are not going to raise their visibility profile by trying to break through your firewall; they know that in order for you to conduct business, the following outbound ports will be open on your firewall: 80 (HTTP), 443 (HTTPS), 25 (SMTP), and 53 (DNS), and these require additional security controls. Take port 80 (HTTP) as an example. Most organizations have a policy that denies inbound HTTP connections originating from the Internet into the corporate environment.
Additionally, network address translation (NAT) adds complexity, as most large organizations do not assign a public IP address to every employee. The only time you would see port 80 allowed inbound is for a DMZ that houses your Web farm. It is important to note that any connection established from within the internal corporate environment, such as an outbound HTTP request, is considered trusted once it has been established through the firewall. Because HTTP runs over TCP, both parties (client and server) must carry on a two-way conversation, so the server’s replies are allowed back in. A legacy firewall has no idea that the server your client is connected to is passing malicious traffic; all it knows is that the session matches a policy allowing internal clients to access the Internet, so the traffic is allowed and therefore trusted. This makes the attacker’s job much easier, as he or she will target vulnerabilities in Web browsers and their plug-ins, exploit security flaws in Websites, and launch phishing attempts. It is much easier for the attacker to get you to click on a link or to redirect you to a rogue server, knowing that a legacy firewall without “next generation” features is not going to stop the attack. Furthermore, most large enterprise deployments are likely not taking advantage of the full feature set, either because configuring additional services is complex and might impede performance, or because they run other point products to mitigate other attack vectors. The key takeaway from this discussion is that legacy firewalls do serve a purpose in terms of static access control and segmentation, but during your next upgrade cycle, look at buying a firewall that offers next-generation capabilities.
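To make the point about trusted outbound sessions concrete, here is a minimal Python sketch of the connection-tracking logic a stateful (legacy) firewall applies. The policy, the internal address range, and the `StatefulFirewall` and `Packet` names are hypothetical, and real firewalls also track TCP flags, sequence numbers, timeouts, and NAT; the sketch only shows that a reply belonging to an established session is allowed without any regard to its payload.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical policy: internal clients may open outbound sessions on these ports.
INTERNAL_NET = ipaddress.ip_network("10.0.0.0/8")
ALLOWED_OUTBOUND_PORTS = {80, 443, 25, 53}


@dataclass(frozen=True)
class Packet:
    src: str
    sport: int
    dst: str
    dport: int
    payload: bytes = b""


class StatefulFirewall:
    def __init__(self) -> None:
        # Connection table keyed by (client, client_port, server, server_port).
        self.sessions: set[tuple[str, int, str, int]] = set()

    def allow(self, pkt: Packet) -> bool:
        # Outbound: an internal client using an allowed port creates a state entry.
        if ipaddress.ip_address(pkt.src) in INTERNAL_NET:
            if pkt.dport in ALLOWED_OUTBOUND_PORTS:
                self.sessions.add((pkt.src, pkt.sport, pkt.dst, pkt.dport))
                return True
            return False
        # Inbound: allowed only if it is the reply half of a tracked session.
        # The payload is never examined, so a malicious reply is trusted too.
        return (pkt.dst, pkt.dport, pkt.src, pkt.sport) in self.sessions
```

In this model, once 10.1.1.5 opens a session to a Web server on port 80, every reply from that server is accepted, including one carrying an exploit, while an unsolicited inbound connection is dropped.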

Antivirus

AV is probably the oldest security technology around. We all have some familiarity with AV because it is available both as a consumer and as a commercial product, and we have no problem updating signature files and quarantining viruses, malware, and so on. In the late 1990s and early 2000s, the biggest threat was worms. Worms still exist today, but they are not as prevalent as they once were; destructive, bandwidth-eating worms are passé and have been replaced by botnets and targeted malware. The AV market is not going away anytime soon. Although some of our colleagues in the security community might disagree, we think some form of end-point protection such as AV is needed. In a recent interview at AusCert, ZDNet Australia asked John Pirc, one of this book’s authors, to comment on the use of AV on a Mac. John responded, “It’s better to be safe than sorry.” That is the bottom line, as I have seen AV work well in containing a massive outbreak that would otherwise have taken weeks to clean up. However, the amount of malware generated on a daily basis is surpassing the capability of some smaller niche AV vendors to keep ahead of the threat when they rely solely on signature matching and do not leverage other detection techniques such as IP, URL, and sender-based reputation services. The key takeaway is to make sure you are getting more than just string-based pattern matching.
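The difference between string-based pattern matching and reputation-backed detection can be sketched in a few lines of Python. The signature, host, and sender-domain values below are hypothetical placeholders rather than real threat intelligence, and commercial AV engines use far more sophisticated techniques; the point is only that a slightly modified sample slips past an exact string match but can still be caught by where it came from or who sent it.

```python
from urllib.parse import urlparse

# Hypothetical signature database: byte pattern -> detection name.
SIGNATURES = {b"EVIL_PAYLOAD_V1": "Trojan.Example.A"}
# Hypothetical reputation data for download hosts and sender domains.
BAD_HOSTS = {"malware-drop.example"}
BAD_SENDER_DOMAINS = {"phish.example"}


def scan(content: bytes, source_url: str = "", sender: str = "") -> list[str]:
    """Return the reasons to quarantine a file (an empty list means clean)."""
    reasons = []
    # 1. Classic pattern matching: only catches byte strings already catalogued.
    for pattern, name in SIGNATURES.items():
        if pattern in content:
            reasons.append(f"signature:{name}")
    # 2. URL reputation: where did the file come from?
    host = urlparse(source_url).hostname if source_url else None
    if host in BAD_HOSTS:
        reasons.append(f"url-reputation:{host}")
    # 3. Sender reputation: who delivered it?
    if sender and sender.rsplit("@", 1)[-1].lower() in BAD_SENDER_DOMAINS:
        reasons.append(f"sender-reputation:{sender}")
    return reasons
```

A repacked variant such as `b"EVIL_PAYLOAD_V2"` produces no signature hit on its own, but the same bytes downloaded from the listed host are still flagged through URL reputation.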

Intrusion Detection Systems and Intrusion Prevention Systems

The authors realize that some organizations are more risk averse than others, depending on their industry vertical. In our travels around the world, we have seen a split of 60% of deployments in intrusion prevention system (IPS) mode and 40% in IDS mode. An IDS differs from an IPS in that the IDS is deployed out-of-band and alerts when it recognizes malicious traffic, whereas the IPS is deployed in-line and can both block and alert on malicious traffic. IDS is recognized as a mitigating control for maintaining PCI-DSS compliance, and it is slowly becoming a must-have network security technology. The technology has been around for over a decade and has made significant advances in expanding threat recognition beyond simple signature/pattern matching. If you have it deployed in detection mode only, you are placing your organization at real risk. The downside of placing the device in prevention mode is a possible performance impact, depending on the vendor you are using. However, the authors understand that some organizations are more risk averse than others and are willing to accept a certain level of risk.
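The practical difference between the two deployment modes can be illustrated with a simplified Python sketch in which the same detection rule is evaluated either out-of-band (IDS) or in-line (IPS). The rule and the `inspect` function are hypothetical; the sketch only shows that an out-of-band sensor can record an alert but cannot stop the packet from reaching its target.

```python
from enum import Enum


class Mode(Enum):
    IDS = "detect"   # out-of-band: sees a copy of the traffic (e.g., from a tap)
    IPS = "prevent"  # in-line: traffic must pass through the sensor


# Hypothetical rule: byte pattern -> alert name.
RULES = {b"/etc/passwd": "path traversal attempt"}


def inspect(payload: bytes, mode: Mode, alerts: list[str]) -> bool:
    """Record any alerts and return True if the packet reaches its destination."""
    for pattern, name in RULES.items():
        if pattern in payload:
            alerts.append(name)
            if mode is Mode.IPS:
                return False  # the in-line sensor drops the packet
            return True       # the out-of-band sensor alerts, but the packet still arrives
    return True
```

Both modes produce the same alert for `b"GET /../../etc/passwd"`; only the in-line deployment actually prevents delivery, which is why the choice of mode matters more than the detection engine itself.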

What Is in a Name?

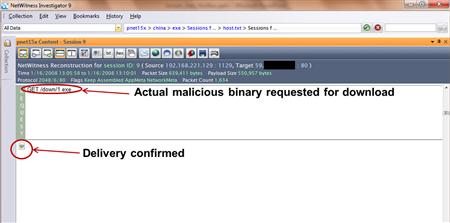

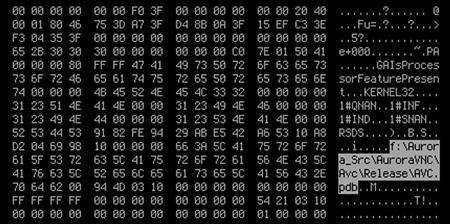

Almost every day, we learn of a new attack or vulnerability. Attacks are often named by the security researcher who finds them, and sometimes they are named after artifacts that researchers find while reverse engineering the code. Figure 12.1 shows a portion of the Aurora source code; the highlighted portion shows where the name came from.

Figure 12.1 Aurora source code.

The great thing about named vulnerabilities and exploits is that the security community can generate signatures for them and identify and block them by name. Aurora used multiple vectors to carry out the attack. It relied on a vulnerability in Microsoft Internet Explorer and, once it was successfully loaded on the end-point, it used a non-RFC-compliant SSL connection to communicate with a command and control infrastructure. The point to take away regarding this type of attack is to look at it from a macro point of view. This is important, as many security technologies focus on the micro aspects of an attack rather than the macro aspects. Richard Schaeffer (NSA’s Information Assurance Director) was quoted as saying that 80% of today’s cyber attacks can be prevented with current technologies in place.1 For example, let us take a look at Operation Aurora:

1. Microsoft Internet Explorer vulnerability

a. Mitigated by patching your system once the vulnerability is known.

b. Virtual patching by IPSs, host IPSs, or AV to stop the delivery of the attack once a signature is made available and until the end-point has been properly patched.

a. Virtual patching by IPSs, host IPSs, or AV to stop the delivery of the attack once a signature is made available.

b. Damballa’s Failsafe technology for Botnet discovery.

c. Network behavioral anomaly detection.

Note that some security vendors listed in the technology categories in “a” do not have the technical means for stopping certain types of malware delivery because they lack the ability to parse PDFs and other types of documents that are delivery vectors for malicious code.

a. This requires the ability to perform network RFC checking and normalization. As we mentioned about legacy firewalls, the majority of them are not doing deep-packet inspection or RFC checking to the degree that would trigger on this type of suspicious activity that was present in Aurora. The McAfee Firewall Enterprise (formerly known as Secure Computing’s Sidewinder) has the capability to recognize a non-RFC compliant SSL connection and terminate the connection.

a. This requires a massive database, and the ability to harness bad IP address information worldwide. The IPs used in Aurora did appear in some of the leading vendors’ reputation databases, thus providing the instant ability to deny the outbound connections to the Aurora command and control server.
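Two of the network-level controls above, RFC checking of SSL traffic and reputation-based blocking of outbound connections, can be sketched in Python. The TLS check assumes the record layout from RFC 5246 (a 1-byte content type of 22 for handshake, a 2-byte version with major 3, a 2-byte length, then a handshake type of 1 for ClientHello) and ignores legacy SSLv2-format hellos; the reputation table, threshold, and function names are hypothetical. This is far simpler than the full protocol normalization a commercial firewall performs.

```python
import ipaddress

# Hypothetical reputation feed: network -> score (0 = clean, 100 = known bad).
REPUTATION = {
    ipaddress.ip_network("192.0.2.0/24"): 95,  # placeholder for a known C2 range
}
BLOCK_THRESHOLD = 80  # hypothetical cut-off


def reputation_score(ip: str) -> int:
    """Highest score of any listed network containing the address, else 0."""
    addr = ipaddress.ip_address(ip)
    return max((score for net, score in REPUTATION.items() if addr in net), default=0)


def looks_like_tls_client_hello(first_bytes: bytes) -> bool:
    """Check the first client bytes against the TLS record layout (RFC 5246)."""
    if len(first_bytes) < 6:
        return False
    content_type, major, minor = first_bytes[0], first_bytes[1], first_bytes[2]
    record_length = int.from_bytes(first_bytes[3:5], "big")
    handshake_type = first_bytes[5]
    return (
        content_type == 22                 # handshake record
        and major == 3 and minor <= 3      # SSL 3.0 (3,0) through TLS 1.2 (3,3)
        and 0 < record_length <= 2**14     # maximum plaintext fragment size
        and handshake_type == 1            # ClientHello
    )


def allow_outbound(dst_ip: str, dst_port: int, first_bytes: bytes) -> bool:
    """Deny known-bad destinations and non-TLS traffic on the HTTPS port."""
    if reputation_score(dst_ip) >= BLOCK_THRESHOLD:
        return False
    if dst_port == 443 and not looks_like_tls_client_hello(first_bytes):
        return False
    return True
```

A custom binary protocol tunneled over port 443 fails the ClientHello check, and a well-formed TLS session to a listed command and control address still fails the reputation check, so each control covers a case the other would miss.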

This illustration is important, as it highlights the complexity and sophistication of the techniques nefarious cyber actors are using. It has compelled a number of security vendors to provide streamlined solutions that offer reasonable security against these types of attacks, as is evident in the recent push to increase the security effectiveness of next-generation firewalls and reputation-based services. There is no “silver bullet” security product that can stop all of these attacks. What is needed is awareness that the threat is real and, as the Information Assurance Director of the NSA pointed out, that 20% of cyber attacks are unknown. Fortunately, the authors of this book work together as Global Sr. Product Line Managers for Hewlett Packard’s Network Security division. We have taken up the challenge of leading security change and have provided several concepts in this book that we hope vendors will adopt, so that businesses can operate with a higher level of confidence and assurance against the 20% of cyber attacks that are considered unknown. This becomes tricky, as unknown/unnamed attacks require a great deal of real-time or near-real-time analysis to bring suspicious activity to the analyst’s attention and then to propagate that intelligence to the various security devices that can remediate the attack.

Regardless of who the cyber actor is from an attribution perspective, it is important to understand that the attack vectors behind that 20% of attacks are silent and, as the recent uncovering of Stuxnet shows, they could be deadly. That is why we pulled together what we call the MOSAIC framework. In addition to the MOSAIC framework, we also cover other data collection approaches that are complementary to security information and event managers (SIEMs). Raw correlated event information is powerful, but the holy grail of security information is the ability to collect full session-based data and to extract the suspicious activity that some security technologies miss because they keep connection state information for only a short period of time.

MOSAIC

Intelligence analysis is not a trivial endeavor. Predicting the future of the attack landscape is difficult, but based on trends and a lot of analysis, you can at least reason about where the trends are moving. However, this requires quite a bit of work; hard work! Intelligence analysis requires the willingness to pore over data exhaustively and meticulously, oftentimes arriving at the same end until a break is made. These breaks can come in many forms and, in some instances, virtually leap out at the analyst from the body of intelligence data that he or she may have spent days or weeks reviewing. Yogi Berra once quipped that it is hard to make predictions, especially about the future, and he was right! It is extremely hard to make realistic predictions about the future. When armed with the appropriate tools, methodology, and data, however, our chances of accurately predicting outcomes improve dramatically. If we are not prepared logistically, how can we possibly hope to address the threats presented to us in minutiae? If we are not comfortable with the tools and methodology of our trade, how can we feel confident about arriving at clear outcomes and decisions regarding our opponents? In The Art of War, Sun Tzu wrote, “If you know the enemy and know yourself, you need not fear the result of a hundred battles. If you know yourself but not the enemy, for every victory gained you will also suffer a defeat. If you know neither the enemy nor yourself, you will succumb in every battle.” From the writing and wisdom of Sun Tzu, we learn that without a complete and comprehensive knowledge of ourselves and our adversaries, we cannot hope to arrive at a position of victory. This is critical whether on the conventional battlefield, in the cyber realm, or in intelligence analysis environments. Intelligence analysts cannot afford to take anything for granted.

Intelligence is the sum total of disparate parts derived from virtually limitless sources, some of which are more trustworthy than others. These parts, like tiles within a mosaic, are unique. Viewed alone, they may not provide much in the way of obvious data or detail. Yet when viewed in concert with other disparate data samples, these tiles can create a picture unlike any previously conceived. This is very similar to working in government with individual data sets that are unclassified on their own but can be deemed classified once they are placed together. To recognize the picture, an analyst must approach the art and science of intelligence analysis in a methodical, process-driven manner. This methodology should promote the collection of data from disparate sources, the consideration of unobvious points of confluence that may exist between pieces of data, and the clear articulation of the results of the data analysis. We believe that this end can be achieved through a high-level methodology called MOSAIC. MOSAIC enables analysts to think in a linear and a nonlinear manner in concert while seeking to present data accurately for consumption by other parties. Ascription, although important, will not be the driving criterion within the MOSAIC framework, as it is our belief that intelligence is acquired from sources of varying degrees of credibility. From an individual analyst’s point of view, some sources will no doubt be more credible than others, yet all will be important and worthy of investigation. Analysts will be introduced to MOSAIC in a structured manner that allows them to develop familiarity with each of the following:

• Motive awareness

• Open source intelligence collection

• Study

• Asymmetrical intelligence correlation

• Intelligence review and interrogation

• Confluence

Upon developing a level of confidence with the basic tenets of each of the key attributes that constitute the MOSAIC methodology, an analyst will be asked to challenge his or her preconceived notions about intelligence, sources, and the conventional schools of thought that suggest analysts are by definition linear thinkers. Our goal is to challenge and refute the commonly held beliefs regarding linear thinking. Through this simple, methodical process, we hope to introduce an alternative that embraces linear and nonlinear analysis in concert, while helping anyone involved in the intelligence analysis of information security data become fluent and at ease with next-generation analytic techniques.

Motive Awareness

A motive is something that causes a person to act a given way, or do a certain thing. Motives can be the result of conscious thought or unconscious thought. According to the American Heritage Medical Dictionary,2 motives can be rooted in emotion, desire, physiological need, or other similar impulses. Motives are present in all aspects of life where sentient beings are found. Being aware of motives is critical to proper intelligence analysis, and should not be taken lightly. The ability to take note of data points, circumstantial or direct, that influence outcomes and actions is of paramount importance. This level of awareness is extremely important for synthesizing cogent arguments related to a person, place, or thing of interest to an intelligence analyst. In many cases, motive awareness plays an integral role in defining and reinforcing decisions made regarding intelligence regardless of its source(s).

Open Source Intelligence Collection

As we have discussed previously, Open Source Intelligence (OSINT) is a key tool for gathering, collecting, and propagating intelligence data, ideals, and campaigns. Within the context of the MOSAIC model, Open Source Intelligence collection focuses on leveraging every possible tool at the analyst’s disposal to craft the most comprehensive view of a given set of data parameters. These sources include all data produced from publicly available information that is collected, exploited, and disseminated in a timely fashion to an appropriate audience for the purpose of addressing a specific intelligence goal or requirement.3 Newspapers, books, periodicals and journals, Websites, social networking media and sites, radio, television, motion pictures, and music, among other things, can be, and often are, used as sources in intelligence gathering exercises. Additionally, commercial entities such as LexisNexis, Dun & Bradstreet, Hoover’s, Standard & Poor’s, and others offer paid open source intelligence related to global risk, credit, sales, marketing, and supply chain information. These types of data are extremely valuable in intelligence analysis, as they can help the analyst identify patterns that may not have been apparent on initial investigation. These patterns are useful in identifying points of confluence that might otherwise go unnoticed, with potentially grave consequences.

Study

Studying is defined by Webster’s Dictionary as the act of conducting a detailed, critical inspection of a given subject. A subject can be a person, place, thing, or course (discipline) of study. The authors believe that the act of critical inspection cannot be stressed enough in all things, and intelligence analysis, in the information security arena or beyond, is no exception. Euripides, the Greek playwright, wrote that people should “Question everything. Learn something. Answer nothing.”4 The act of studying is one that should be practiced exhaustively and without apology. Data should be approached from as many perspectives as possible, with the analyst careful not to overlook or omit any detail in the process.

Asymmetrous Intelligence Correlation

Asymmetry implies a state of imbalance, or lack of symmetry. It is sometimes referred to as dissymmetry, and it is often discussed in the context of spatial relationships, mathematics (geometric irregularities), and biological studies (skewness, laterality, etc.). In the context of intelligence analysis, asymmetrous intelligence correlation is the active correlation of intelligence data with respect to the level of surprise or uncertainty found in the activities of parties of interest who demonstrate motive and agenda in an unexpected or new manner. Traditionally, examples of asymmetry in warfare can be seen in all forms of insurgent activity and combat. Counterinsurgency movements are examples of asymmetric responses to the threats posed by the actions of insurgents.

Similarly, in the realm of cybercrime and espionage, counterintelligence methodology (whether seen in field operations or in garrison activities) is an example of asymmetry. Intelligence analysts should become fluent in the tools, techniques, and methodology of asymmetric intelligence correlation in order to account for outliers that might otherwise be overlooked.

Intelligence Review and Interrogation

Review and interrogation of theories and suppositions as they emerge are crucial to the success or failure of an analyst. Having the dedication to inspect what you expect will save precious time and, in some cases, lives, depending on the mission and data with which an analyst is working. It is also important to push the bounds of what is technically possible. Oftentimes you might hear that something is “technically” impossible, and that voice might even be coming from inside you. Both authors have listened as other security engineers explained to us that our conclusions were not achievable. In some cases they were correct; in others, we pushed the limits and discovered techniques that would otherwise have been dismissed as impossible.

Confluence

For the security analyst, being able to demonstrate the points of confluence or convergence of disparate data sets is imperative. Successfully demonstrating such points of conjunction aids the analyst in building his or her case and in turning the results into action.
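A simple way to surface points of confluence is to record, for every indicator (a domain, IP address, account name, and so on), which independent data sets it appears in, and to keep only those seen in several. The Python sketch below does exactly that; the source names and indicators in the usage example are hypothetical, and real analysis would also weigh each source’s credibility, as MOSAIC suggests.

```python
from collections import defaultdict


def points_of_confluence(sources: dict[str, set[str]], min_sources: int = 2) -> dict[str, list[str]]:
    """Map each indicator seen in at least `min_sources` data sets to those data sets."""
    seen_in = defaultdict(list)
    for source_name, indicators in sources.items():
        for indicator in indicators:
            seen_in[indicator].append(source_name)
    # Keep only the indicators where independent data sets converge.
    return {
        indicator: sorted(names)
        for indicator, names in seen_in.items()
        if len(names) >= min_sources
    }


# Usage with hypothetical data
observations = {
    "proxy_logs": {"update-check.example", "203.0.113.9", "news.example"},
    "dns_logs": {"update-check.example", "cdn.example"},
    "osint_forum_post": {"update-check.example", "203.0.113.9"},
}
print(points_of_confluence(observations))
```

Here the domain seen in all three sources and the IP address seen in two stand out, while indicators that appear in only one data set drop away; raising `min_sources` narrows the picture further.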

The MOSAIC framework is more of a statement of our approach to security. Security research is an art that demands the tenacity to keep pushing the limits of what is possible. Every security researcher will have his or her own approach to research, data collection, and the technologies he or she targets, based on his or her areas of expertise. Meanwhile, the nefarious cyber actor is working diligently on the next Operation Aurora; even as we write this book, it is very likely that an attack on the scale of Operation Aurora is happening right now. What we can say with great certainty is that if an attack, vulnerability, and/or exploit has a name, you can rest assured that the majority of security vendors can identify it at the end-point or at the network level. As we mentioned earlier in the chapter when discussing misconceptions about firewalls, IDSs, and AV, there are security gaps that these technologies do not fill. The 20% of attacks not covered by the security technologies you currently deploy require additional technologies to fill the gap. Some of these advanced technologies require expertise in analysis, but you can learn them and apply those principles to provide a higher level of assurance while lowering your risk profile.

In the following section, we cover advanced meta-network analysis. When looking at different concepts for securing your network, you have to approach them without technology religion. This will require some of you to go outside your comfort zone and accept that your approach has to be agnostic in terms of security technology. These technologies are typically not on the radar for most organizations, either because they have not heard of them or because they do not have the budget to expand their security strategy beyond the core security devices you would expect to find on most corporate networks. Additionally, depending on your industry vertical, you might be more risk averse in how you deploy certain technologies. On just about all of our trips around the world meeting with some of the largest companies, governments, and defense organizations, the main concern about any network security technology has been the possibility that it will affect network performance and thereby business operations. Most of these performance concerns center on IPSs, firewalls, and secure Web gateways, to name a few. Advances in silicon, processors, and field programmable gate array (FPGA) design have gone a long way toward solving the performance issues that once plagued networks operating at high capacity in terms of users and bandwidth. When we suggest placing certain detection devices in preventive (in-line) mode, it is only because of the security effectiveness you might otherwise be losing. We will cover that later in this chapter.

Advanced Meta-Network Security Analysis

We came across the following definition of meta-network analysis in the biomedical field:

“There is a type of meta-analysis called a network meta-analysis that is potentially more subject to error than a routine meta-analysis. A network meta-analysis adds an additional variable to a meta-analysis. Rather than simply summing up trials that have evaluated the same treatment compared to placebo (or compared to an identical medication), different treatments are compared by statistical inference.”5

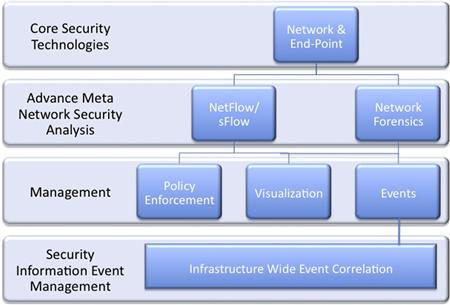

We have taken this concept and created what we call advanced meta-network security analysis (AMNSA). AMNSA requires the ability to harness multiple data sources in order to make real-time or near-real-time decisions, based on the analytics collected, that provide immediate remediation or zero in on the systems affected by a threat that is not visible to current security devices. This is very different from what you find in a security information and event management platform. By the time you have identified an in-process attack by correlating event data, you have likely already lost data or, depending on how the threat was inserted into your systems, you might only be starting to see suspicious communication activity. AMNSA is session-based. These capabilities are often found in network forensic tools that can record entire communication sessions within your network. Network behavioral anomaly detection tools are also key in providing insight into and visibility of the who, what, when, and where of IP communications within your network. These tools are not typical in most networks, as they are often seen as “nice to have” and are not budgeted for, and/or the organization does not have security teams that are properly trained or have the specific skill sets associated with these technologies. They are also very resource intensive from both a personnel and a data storage perspective. Additionally, they require insight to determine whether certain connections are suspicious, which calls for contextual information that is not black and white. In signature-based and pattern-matching technologies, there is no gray area: it is either vulnerability X or exploit Y. This is not a bad thing, as these technologies address about 80% of the threats out there today and provide enormous benefit in allowing the organization to operate efficiently and securely. Figure 12.2 is a high-level depiction of our next generation security framework (NGSF).
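Session-based analysis starts by reassembling individual packets into conversations. The Python sketch below groups hypothetical packet records by a direction-independent 5-tuple (protocol plus both endpoints) and keeps the client and server payloads for each session. It assumes packets arrive as already-parsed records and simply concatenates payloads in timestamp order, ignoring the TCP sequence numbers, retransmissions, and session timeouts that a real network forensic tool must handle.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PacketRecord:
    ts: float       # capture timestamp (seconds)
    src: str
    sport: int
    dst: str
    dport: int
    proto: str      # e.g., "tcp" or "udp"
    payload: bytes


@dataclass
class Session:
    client: tuple[str, int]  # endpoint that sent the first packet we saw
    first_seen: float
    last_seen: float
    packets: int = 0
    bytes_total: int = 0
    client_data: bytearray = field(default_factory=bytearray)
    server_data: bytearray = field(default_factory=bytearray)


def session_key(p: PacketRecord) -> tuple:
    """Direction-independent key: both halves of a conversation map to one session."""
    a, b = (p.src, p.sport), (p.dst, p.dport)
    return (p.proto, *a, *b) if a <= b else (p.proto, *b, *a)


def sessionize(packets: list[PacketRecord]) -> dict[tuple, Session]:
    sessions: dict[tuple, Session] = {}
    for p in sorted(packets, key=lambda r: r.ts):
        key = session_key(p)
        s = sessions.get(key)
        if s is None:
            s = sessions[key] = Session(client=(p.src, p.sport), first_seen=p.ts, last_seen=p.ts)
        s.last_seen = p.ts
        s.packets += 1
        s.bytes_total += len(p.payload)
        # Append the payload to the stream for the direction it travelled.
        if (p.src, p.sport) == s.client:
            s.client_data += p.payload
        else:
            s.server_data += p.payload
    return sessions
```

Keeping whole sessions, rather than isolated events, is what lets an analyst later ask how and why a connection happened, not just that it occurred.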
The meta-aspects of AMNSA fall into the second tier of the NGSF.

Figure 12.2 Next generation security framework.

Next Generation Security Framework

The NGSF is made up of four tiers and, depending on the size of your organization and your industry vertical, you likely have at least two of the four. Combating the sophistication of unnamed threats and minimizing your risk posture against them requires the AMNSA tier. Let us explain each tier and the technologies you should consider as part of your security infrastructure.

Tier 1 Core Technologies

These technologies are considered best practices and typically consist of the following:

a. Next-generation firewalls: These are firewalls that contain a lot more intelligence than your traditional legacy firewall. In general, they have capabilities to apply policies based on IP addresses, applications, geolocation, URLs, and users. Additionally, they also contain security intelligence with intrusion prevention, reputation services, and antispam/-virus capabilities.

b. Intrusion prevention systems: These devices provide in-line protection against well-known threats and, depending on the vendor you select, zero-day protection against some vulnerabilities and exploits that are not widely known to other security vendors. In addition to signatures/filters, pattern matching, heuristics, statistical analysis, and protocol analysis, some vendors have added IP reputation and application policy control to their IPS platforms. IP reputation is a very important addition to intrusion prevention systems because it provides additional insurance in the event the IPS does not have a signature/filter for a specific vulnerability or exploit. IP reputation is a score applied to an address that is known to be malicious, for example because it serves up malware or acts as a command and control node for a botnet. A great example of IP reputation at work is one we often give about Koobface. This attack targeted social networking sites by sending email to others based on your social network contacts. In short, the attack required a redirection to a known server that already had a high (malicious) reputation score before Koobface was ever named. This means that if you used a reputable IP reputation vendor, it is likely you would not have been infected by that part of the Koobface attack, as the connection would have been blocked on the basis of the IP reputation score.

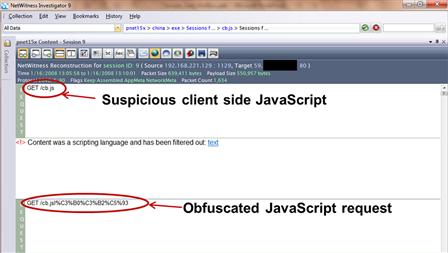

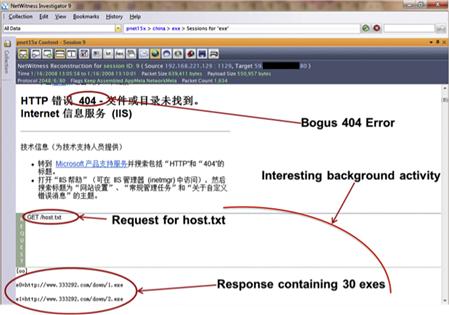

c. AV or host intrusion prevention: As we mentioned, AV is probably one of the oldest security technologies around and, from our perspective, still provides value at the end-point. Most AV vendors have expanded beyond traditional viruses to cover malware, rootkits, trojans, and other malicious categories. AV capabilities like these are often found in host intrusion prevention systems. The key benefit of host intrusion prevention is that you can isolate and restrict new binaries from running on your system. This gives you the ability to whitelist the applications that are acceptable and blacklist everything else. As we mentioned, some attack vectors that target your host might bypass network security because they are encrypted or obfuscated in such a way that they cannot be detected until the attack actually tries to run on the host. For example, your normal end user is not going to download *.dll files or multiple *.exe files such as p.exe, which then automatically copies itself to p.exe.exe. This is not normal behavior, and this type of behavior was seen with Koobface. So if anyone tells you that end-point security is dead, they are sadly mistaken. Controlling users’ ability to run binaries and blocking certain extensions at the network layer and the end-point can be time-consuming in the short term, but the long-term payoff in terms of reducing your risk profile is much larger.

d. Nice to have: The following technologies are nice to have and do provide value, but some of them are converging into other technologies. We have listed them in order of market size and overall worldwide deployment.

a. Secure Web gateways: This capability is currently converging into other security devices, but it provides a benefit in controlling access to certain categories of URLs.

b. Mail security gateways: Some of these capabilities are converging into other security devices, but they provide tremendous value in cutting down on spam, phishing, and other attacks that plague SMTP, POP, and IMAP.

c. Data leakage prevention: This technology has been around for almost a decade, but it did not really have its moment in the spotlight until 2008, alongside virtualization and cloud computing. Organizations that have highly sensitive data, critical intellectual property, or a highly mobile workforce should look into both network-based and end-point-based data leakage prevention technologies.

d. Vulnerability scanning: This technology lets you scan systems within your infrastructure to determine whether they are vulnerable and whether they have the latest security patches applied. These scans are also useful for finding rogue machines on your network.
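The IP reputation check described under intrusion prevention systems (item b above) can be sketched in a few lines. The sketch rests on several assumptions. The feed format is hypothetical: a mapping of network ranges to a 0 to 100 score, with higher meaning more likely malicious. The threshold of 70 is arbitrary. The addresses come from documentation-only ranges. Commercial reputation services use their own scoring scales and delivery formats, so treat this as an illustration of the idea rather than any vendor's implementation.

```python
import ipaddress
from typing import Dict

# Hypothetical reputation feed: network range -> score from 0 (benign) to 100 (known malicious).
ReputationFeed = Dict[ipaddress.IPv4Network, int]


def reputation_score(dst_ip: str, feed: ReputationFeed) -> int:
    """Return the highest score of any feed entry containing dst_ip (0 if the address is unlisted)."""
    addr = ipaddress.ip_address(dst_ip)
    scores = [score for net, score in feed.items() if addr in net]
    return max(scores, default=0)


def should_block(dst_ip: str, feed: ReputationFeed, threshold: int = 70) -> bool:
    """Block an outbound connection whose destination score meets the (assumed) threshold,
    even when no IPS signature matched the traffic itself."""
    return reputation_score(dst_ip, feed) >= threshold


if __name__ == "__main__":
    feed = {
        ipaddress.ip_network("203.0.113.0/24"): 95,   # e.g., a known malware distribution range
        ipaddress.ip_network("198.51.100.7/32"): 40,  # suspicious, but below the threshold
    }
    for ip in ["203.0.113.25", "198.51.100.7", "192.0.2.10"]:
        print(ip, "BLOCK" if should_block(ip, feed) else "allow")
```

This check is what would have stopped a Koobface-style redirection. The decision depends only on where the connection goes, not on recognizing the exploit, so it still works when no signature exists yet.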

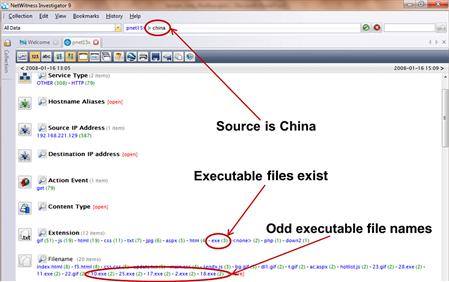

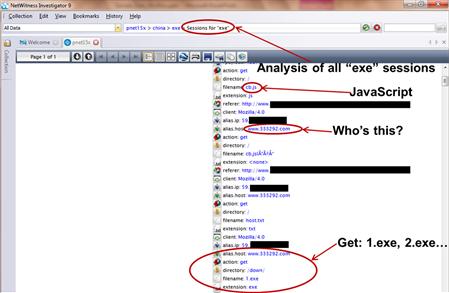
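The host intrusion prevention approach described in item c (whitelist approved binaries, deny everything else, and treat oddities such as p.exe copying itself to p.exe.exe as suspicious) can be sketched as follows. The sketch rests on several assumptions. Identifying approved binaries by SHA-256 hash is one common technique, not necessarily the one any given product uses. The list of executable extensions is illustrative. The function names are hypothetical.

```python
import hashlib
from pathlib import PurePath
from typing import Set

# Illustrative (not exhaustive) set of Windows executable extensions.
EXECUTABLE_EXTS = {".exe", ".dll", ".scr", ".com", ".bat"}


def sha256_of(data: bytes) -> str:
    """Hash a binary's contents; the hash is the identity checked against the whitelist."""
    return hashlib.sha256(data).hexdigest()


def has_double_executable_ext(filename: str) -> bool:
    """Flag names like 'p.exe.exe', where the last two suffixes are both executable types."""
    suffixes = [s.lower() for s in PurePath(filename).suffixes]
    return len(suffixes) >= 2 and suffixes[-1] in EXECUTABLE_EXTS and suffixes[-2] in EXECUTABLE_EXTS


def allow_execution(filename: str, contents: bytes, whitelist: Set[str]) -> bool:
    """Default-deny: run only whitelisted binaries, and never run double-extension names."""
    if has_double_executable_ext(filename):
        return False
    return sha256_of(contents) in whitelist


if __name__ == "__main__":
    approved = b"approved application bytes"
    whitelist = {sha256_of(approved)}
    print(allow_execution("app.exe", approved, whitelist))          # True: known, approved binary
    print(allow_execution("p.exe.exe", approved, whitelist))        # False: double extension
    print(allow_execution("p.exe", b"unknown bytes", whitelist))    # False: not whitelisted
```

Because the decision keys on the binary's hash rather than its name, renaming a malicious file does not get it past the whitelist. The extension check simply adds a cheap behavioral red flag.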

Tier 2 Advanced Meta-Network Security Analysis

As we mentioned earlier in this chapter, finding the 20% of attacks that today's security technologies do not capture requires meta-analysis across multiple vectors. Network forensics-based tools such as NetWitness give you a complete picture of network traffic because they can record all the packets that traverse critical traffic areas within your network. These technologies can detect rogue or suspicious connections, malware, and, in some cases, data leakage outside the organization. Leveraging NetFlow and sFlow data within your network is also key to identifying suspicious activity. You will typically see NetFlow and sFlow used in network behavior anomaly detection technology, and Lancope and Arbor Networks are good at providing this type of information. They excel at telling you the who, what, when, and where of network traffic. The why and the how are more contextual, and network forensic tools provide them.
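To illustrate how network behavior anomaly detection can use flow data, the sketch below totals each internal host's outbound bytes for an interval and flags hosts whose volume sits far above their own historical baseline. The sketch rests on several assumptions. `FlowRecord` is a hypothetical, heavily simplified stand-in for NetFlow/sFlow fields. A per-host z-score is just one simple statistical technique. The threshold of 3.0 is arbitrary. Products such as those mentioned above use their own, far richer models.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class FlowRecord(NamedTuple):
    """Hypothetical, simplified flow summary (who talked to whom, on what port, how much)."""
    src: str
    dst: str
    dst_port: int
    bytes_out: int


def bytes_per_host(flows: Iterable[FlowRecord]) -> Dict[str, int]:
    """Total outbound bytes per source host for one collection interval."""
    totals: Dict[str, int] = defaultdict(int)
    for f in flows:
        totals[f.src] += f.bytes_out
    return dict(totals)


def anomalous_hosts(history: Dict[str, List[int]], current: Dict[str, int],
                    z_threshold: float = 3.0) -> List[str]:
    """Flag hosts whose volume this interval is more than z_threshold standard
    deviations above their own historical mean."""
    flagged = []
    for host, observed in current.items():
        past = history.get(host, [])
        if len(past) < 2:
            continue  # too little baseline to judge; new hosts would need separate handling
        mean = statistics.mean(past)
        stdev = statistics.stdev(past) or 1.0  # avoid dividing by zero on a perfectly flat baseline
        if (observed - mean) / stdev > z_threshold:
            flagged.append(host)
    return flagged


if __name__ == "__main__":
    history = {
        "10.0.0.5": [1000, 1200, 1100, 900, 1000],
        "10.0.0.9": [5000, 5200, 4800, 5100, 4900],
    }
    flows = [
        FlowRecord("10.0.0.5", "203.0.113.9", 443, 250_000),  # sudden large upload
        FlowRecord("10.0.0.9", "198.51.100.3", 80, 5_000),     # normal for this host
    ]
    print(anomalous_hosts(history, bytes_per_host(flows)))
```

The output answers the who, when, and where (which host, in which interval, to which destinations). Working out why the upload happened and how it was triggered is the contextual job of a session-based forensic tool.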

Tier 3 Management

The management elements are pretty basic in terms of providing a platform that allows you to create policy, visualize the network, and review live event data.

1. Management: In an ideal world, we would all have a single pane of glass for all our disparate devices. It is still common, however, to have multiple management platforms, because a number of security technologies require their own proprietary management infrastructure.

2. Visualization: This area is becoming very popular as vendors let you see data from a visual perspective. Many vendors provide this capability, and NetWitness Visualize and McAfee's Firewall Profiler are great examples of representing data visually. Strong visual analytics will be key to letting analysts quickly make sense of large volumes of data. This matters because the human mind grasps complex relationships in a visualization much more easily than it does in vague, convoluted, and disparate pieces of data viewed on their own.

3. Events: Depending on the security technology, this component usually presents event data tied to security vulnerabilities, categorized by severity as low, medium, or high. These systems also let you generate reports, and some can even respond to events that require interaction with third-party equipment.

Tier 4 Security Information and Event Management

This technology is key to providing infrastructure-wide audit information and security events from a number of different security technologies. A security information and event management (SIEM) system can also correlate events that are harmless on their own. Taken together, however, those events can have an entirely different meaning that requires immediate remediation.
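A SIEM correlation rule can be illustrated with a minimal sketch. Each event below is routine on its own: a failed login, a successful login, and an upload. The rule raises an alert only when, on the same host and within one time window, at least five failures are followed by a success and then a large upload. The sketch rests on several assumptions. The normalized event kinds (`auth_fail`, `auth_success`, `large_upload`), the 30-minute window, and the failure count are all hypothetical. Real SIEM products express such rules in their own rule languages.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Event:
    time: datetime
    host: str
    kind: str  # hypothetical normalized type: "auth_fail", "auth_success", "large_upload"


def correlate(events: List[Event], window: timedelta = timedelta(minutes=30),
              min_failures: int = 5) -> List[str]:
    """Return hosts where >= min_failures auth failures are followed by a successful
    login and then a large upload, all within one window of the first failure."""
    by_host: Dict[str, List[Event]] = {}
    for e in sorted(events, key=lambda ev: ev.time):
        by_host.setdefault(e.host, []).append(e)

    alerts: List[str] = []
    for host, evs in by_host.items():
        failures: List[datetime] = []
        breach_start: Optional[datetime] = None  # first failure of a fail-then-succeed run
        for e in evs:
            failures = [t for t in failures if e.time - t <= window]  # age out old failures
            if e.kind == "auth_fail":
                failures.append(e.time)
            elif e.kind == "auth_success" and len(failures) >= min_failures:
                breach_start = failures[0]
            elif e.kind == "large_upload" and breach_start is not None:
                if e.time - breach_start <= window:
                    alerts.append(host)
                    break
    return alerts


if __name__ == "__main__":
    t0 = datetime(2010, 10, 1, 9, 0)
    events = [Event(t0 + timedelta(minutes=i), "ws-17", "auth_fail") for i in range(5)]
    events += [
        Event(t0 + timedelta(minutes=5), "ws-17", "auth_success"),
        Event(t0 + timedelta(minutes=10), "ws-17", "large_upload"),
        Event(t0 + timedelta(minutes=2), "ws-22", "auth_fail"),     # a single typo: harmless
        Event(t0 + timedelta(minutes=3), "ws-22", "auth_success"),
        Event(t0 + timedelta(minutes=8), "ws-22", "large_upload"),  # a routine upload: harmless
    ]
    print(correlate(events))
```

Both hosts generate the same three kinds of events, yet only ws-17 is reported. The meaning comes from the sequence and its timing, which is exactly the correlation that a single device viewing events in isolation cannot perform.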

We left out many other technologies that fall under the umbrella of security, such as federated identity management, encryption at rest, and virtual private networks (VPNs), to name a few. Our next-generation security framework is geared toward technologies that can identify and remediate attacks in real or near-real time. Our emphasis on tier 2, Advanced Meta-Network Security Analysis (AMNSA), is vital to the entire framework. The technologies in this tier can make the difference between two outcomes. In one, a silent, directed network attack costs you intellectual property and ends up on the front page of The Wall Street Journal. In the other, your executive team receives a report on how the security technology they invested in stopped the attack.

Some of the world's top corporations often ask us what we would do in their shoes with regard to security. What technologies would you recommend, and where would you deploy them? Early in our careers, we would have had a difficult time answering that question. We have since traveled the world many times over, met with nearly every industry vertical, and been privileged to have organizations share their security challenges and budget constraints in detail. As a result, we feel much more confident answering it today. The technologies we described in the next-generation security framework are exactly what we would recommend to any corporation. As for deployment, place them anywhere you have a boundary you do not fully control, such as the perimeter, networks from recent mergers and acquisitions (M&A), and business-to-business (B2B) connections. Also place them anywhere you hold critical intellectual property, client or employee data, or other sensitive data. We are seeing many security deployments move into the data center as security becomes ubiquitous throughout the entire infrastructure. Last, but most importantly, deploy session-based analysis, which falls under tier 2 (AMNSA).

For starters, deploy it at the perimeter. Your corporation should set aside budget for at least a pilot program for this technology. We have seen firsthand the insight and value that NetWitness delivers. The benefits its discoveries can provide to your corporation are unmatched, and it is highly complementary to the core technologies we discussed in tier 1.

Summary

It has been a pleasure to write this book and to present knowledge, techniques, use cases, and other material that we hope will give you the information you need to take on both the current and the next-generation threat landscapes. Remember the three key concepts of information security: people, process, and technology. By addressing each of these diligently, you will reduce your risk and make the Internet much safer for conducting business. You will also be able to adopt new IT business models with confidence and compete globally with much higher security efficacy.

References

1. Pirc J. Common network security misconceptions: Firewalls exposed. SANS Technology Institute Security Laboratory; 2009, May 6. Retrieved October 9, 2010, from www.sans.edu/resources/securitylab/pirc_john_firewalls.php.

2. Roehm E. Meta network. Improving Medical Statistics; 2007, December 1. Retrieved October 10, 2010, from www.improvingmedicalstatistics.com/meta_network.htm.

3. Tung L. Video: Do Mac OS X users need antivirus? ZDNet Australia; 2009, May 29. Retrieved October 9, 2010, from www.zdnet.com.au/video-do-mac-os-x-users-need-antivirus-339296696.htm.

4. Zetter K. Senate panel: 80 percent of cyber attacks preventable. Wired.com; 2009, November 17. Retrieved October 10, 2010, from www.wired.com/threatlevel/2009/11/cyber-attacks-preventable.

1 http://www.wired.com/threatlevel/2009/11/cyber-attacks-preventable

2 Motive. (n.d.). The American Heritage® Stedman's Medical Dictionary. Retrieved October 5, 2010, from Dictionary.com website: http://dictionary.reference.com/browse/motive

3 http://frwebgate.access.gpo.gov/cgi-bin/getdoc.cgi?dbname=109_cong_public_laws&docid=f:publ163.109

4 http://thinkexist.com/quotation/question_everything-learn_something-answer/253510.html

5 http://www.improvingmedicalstatistics.com/meta_network.htm