CHAPTER 8

STICKS AND STONES AND CYBERBONES

or, The End of the Body as We Know It?

In a third-story lab of the Brain Science Institute building, located deep inside the sprawling campus of Japan’s RIKEN Institute near Tokyo, Takezo sits uncomplaining in his monkey chair. His furry little butt rests on a padded platform. At waist height a broad black table stretches before him. His arms and hands are free; in one of them he has a small rake, its handle just a couple of feet long, which he’s using to pull raisins from far points on the table to within reaching distance of his other hand. He’s very focused. Few things are as motivating to a Japanese macaque as fruit—though a near contender might be a hot tub. These monkeys are famous for lounging through long stretches of winter in Japan’s natural volcanic hot springs.

In an ordinary human chair next to Takezo’s steel-and-plastic monkey chair sits a smiling young scientist who is just as active as he is. Every time he rakes in a raisin, she places a new one somewhere else on the table. She also interacts with him. She smiles at him, talks to him in sweet, encouraging tones, and gives him frequent caresses and supportive pats. There is an ulterior motive in all the touching: In somatosensory experiments with animals it is vital to establish a high level of trust and familiarity. When the time comes to implant recording electrodes in Takezo’s brain, so that his body and action-space maps can be probed and charted, he must be willing to permit lots of touch and manipulation of his limbs. It also keeps him calm and happy.

When Dr. Atsushi Iriki and his guest enter the room, Takezo stops and stares; he knows Iriki, the senior scientist whose lab this is, but he sees few unfamiliar faces, and even fewer of them belong to gaijin (foreigners). The younger scientist bows and smiles to let Takezo know everything is fine. She coos gentle words to him in Japanese and coaxes his attention back to the raisins.

“Takezo has mastered the rake now,” says Iriki. “We’re going to start him on the joystick tomorrow.”

Takezo can boast an achievement that very few other monkeys in the world ever will: He can wield a tool, deftly and with full intention. If this fails to strike you as a real achievement, that’s because you are a tool-wielding primate of the highest order. Your body mandala is several stages more sophisticated than Takezo’s, which in evolutionary terms is about thirty million years behind yours. Unless you are disabled by stroke or brain injury, you take a great deal for granted. Simple rake wielding is quite a victory for Takezo, for Iriki’s lab, and for the science of body maps.

The idea of training monkeys to use tools came to Iriki while he was vacationing one summer in Okinawa. He began thinking about rakes as he watched a casino employee dressed in a Playboy bunny outfit who was using a hand rake to gather in chips from the card table. The work he was on vacation from involved probing some of the more sophisticated body maps in the parietal cortex of monkeys. One body map in particular had been puzzling him. Some of the cells in this map responded to images of sticks pointed toward the body, while others responded to sticks oriented crosswise to the body. Inspiration hit him as he watched the bunny wielding her rake. Here was one of the simplest possible tools, and she was using it to augment her body’s capabilities. Iriki imagined what must be happening in her parietal lobe to extend her arms’ dexterity with such ease and grace using a stick fitted with a wedge of plastic.

“I thought: Here’s a stick aligning to the body axis,” says Iriki. “She’s using it to alter her body schema. So why couldn’t we train a monkey to use a rake, and see if that’s what these neurons are involved in?”

And so in 1996 Iriki began a landmark series of experiments to see what happens in the parietal lobes of monkeys who are trained to use tools. He was motivated by the intuition—shared with quite a few other scientists, psychologists, and philosophers—that tool use expands the body schema. Many people have speculated about this, and everybody on earth has seen plenty of examples that seem to corroborate it. Iriki recalls sitting at the counter in a sushi restaurant one night, watching a chef carve up a fish. When the knife unexpectedly bit into the fish’s spine, the chef yanked his hand away and cried “Ouch!”

“There is a story about a master Chinese painter,” says Iriki, “who is said to have told his students: To be a true master of the brush, if someone were to cut the brush while you were writing, the brush must bleed.”

But as a scientist Iriki wanted to find out whether people are being purely metaphorical when they talk like this, or whether they are drawing their metaphors from a deeply, neurally real experience. With this sort of big picture in mind, he went back to his lab, back to his macaques, hoping that a tool-training experiment would shed some light on the neural basis of body schema.

Remember, the posterior parietal lobe is like a major river where tributaries of multiple senses and actions converge and integrate. It was already known that there is a body map (one of several multimodal maps) in the deep fold at the back of the parietal lobe that combines information about vision and touch. You’ll recall that these cells have bimodal receptive fields—that is, they respond equally well when the body part they represent is touched or when the eyes see something in the space near the body part. For example, a cell in the hand region of this map will fire with the same vigor to something touching the hand as it will to the sight of an object close to the hand. This bubble of near-space that (from the parietal neuron’s perspective) enhaloes the hand is the hand’s peripersonal space. This map cell represents both the hand itself and the region of space surrounding it—the region where the hand’s potential to act or be acted upon by nearby objects is high. In contrast, the sight of an object far beyond the hand, in extrapersonal space, doesn’t trigger a response in these cells. The cell deems the object too far away from the hand to be included in peripersonal space, and doesn’t fire in response to it. Other cells in this map respond to objects near the face and head. And surely other cells do the same thing for the knee, foot, tail (in primates who happen to have them), and so on. Together they map the space around your body as belonging to your body.

These cells were certainly interesting, but as Iriki began studying them with his rake-wielding monkeys, no one had a good idea how they contributed to the body schema, to reaching, grasping, and manipulating things, and certainly not what, if anything, they were contributing to your ability to wield a rake, a pen, a pool cue, a cursor, or a joystick.

Monkey See, Monkey Rake

It took a long time to develop a protocol to effectively train a monkey to use a rake. (In fact, Dr. Iriki’s first great challenge was to prove that monkeys, unlike apes, are even capable of learning to use tools, since they demonstrate virtually no natural inclination to do so either in the wild or in captivity.) After many months, Iriki’s team developed and refined a training process that can turn a naïve monkey into a tool user in as little as ten days to two weeks of daily training.

As soon as he had his first brood of fully trained rake-wielding monkeys, Iriki installed tiny hatches in their skulls, implanted dozens of microelectrodes into their posterior parietal neurons, and measured the neurons’ activity while the monkeys raked in the goodies. What he found amazed him. The visual receptive fields of these visual-tactile cells no longer extended to just beyond each monkey’s fingertips. Now they extended to the tip of the rake! The hand’s peripersonal space, which for the monkey’s entire life prior to rake training had never extended to more than a few inches beyond its fingertips, had now billowed out like an amoeba’s pseudopod to include a lifeless foreign object. In other words, the monkey’s parietal lobe was literally incorporating the tool into the animal’s body schema. Here, apparently, was the neural correlate of the body schema’s powerful flexibility.

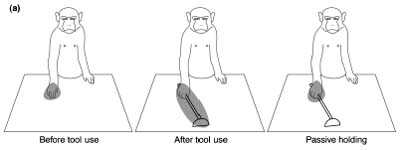

(a) Before learning to use a rake (left) or while passively holding the rake (right) without the intention of using it as a tool, the monkey’s hand-centered visual-tactile receptive fields stay confined to the hand’s immediate vicinity. But while the monkey is actively wielding the rake (center), the cells’ visual receptive fields expand along its length.

(b) The visual-tactile receptive field expansion of one of the monkey’s shoulder-centered neurons.
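For readers who think in code, the three conditions in panel (a) can be caricatured in a few lines of Python. This is only a toy sketch, not a model Iriki's lab used: the class name `BimodalCell`, the helper `points_along`, the 10 cm field radius, and the two-dimensional tabletop coordinates are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


def points_along(start, end, steps=10):
    """Evenly spaced points on the segment from start to end (inclusive)."""
    return [
        tuple(s + (e - s) * i / steps for s, e in zip(start, end))
        for i in range(steps + 1)
    ]


@dataclass
class BimodalCell:
    """Toy hand-centered visual-tactile cell (illustrative only)."""
    reach_cm: float = 10.0  # assumed radius of the visual receptive field

    def responds(self, stimulus, hand, tool_tip=None, actively_using=False):
        # By default the visual field hugs the hand.
        anchors = [hand]
        # Only active tool use stretches the field along the rake;
        # passively holding the rake leaves the field where it was.
        if tool_tip is not None and actively_using:
            anchors = points_along(hand, tool_tip)
        return any(math.dist(stimulus, a) <= self.reach_cm for a in anchors)


cell = BimodalCell()
hand, rake_tip, raisin = (0.0, 0.0), (40.0, 0.0), (45.0, 0.0)

print(cell.responds(raisin, hand))                                  # no rake
print(cell.responds(raisin, hand, rake_tip, actively_using=False))  # rake held passively
print(cell.responds(raisin, hand, rake_tip, actively_using=True))   # rake in active use
```

In this sketch a raisin 45 cm away falls outside the field in the first two cases and inside it only when the rake is being actively wielded, which is the pattern the figure describes.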

What Iriki measured was the morphing of these cells’ visual receptive fields, but as a tool user yourself, you know that the touch aspect of the tool-using experience is equally, if not more, profound. Imagine you lose your sight and need to learn to walk with a cane. At first you are bad at it. You stumble into things, and your flailing cane is as much a menace to your surroundings as it is a sensory aid to you. You are slow to assemble a mental model of the terrain before you, using nothing but the patterns of scraping and resistance the cane’s tip meets as you swing it. As you tap and sweep the cane, at first you are conscious mainly of the sensations in your hand and arm—which, after all, are the true physical locations of the feedback the cane is giving you. But after some practice, a remarkable illusion takes hold: The fact that you’re actually sensing all those forces and vibrations through your hand and arm fades from consciousness, and your awareness of those sensations moves out to the tip of the cane. Appreciate that for a moment. Your perceptual experience literally feels located at the tip of the lifeless stick in your hand.

This amazing flexibility in your body schema relies heavily on the body maps in your parietal lobe (with major cooperation from your frontal motor system, of course). Cells like the ones Iriki studies lie at the crux of your ability to sense the texture of your food through forks and knives or chopsticks. They allow you to pick up a bat or a racket and instantly convert your naked, inadequate primate arms into a formidable ball-slugging force to be reckoned with. They’re behind people’s deft ability with tools like drills, whisks, chain saws, and keys; with bodywear like hats, helmets, knee pads, skirts, and skis; even with vehicles, from skateboards and surfboards and bicycles to cars and boats and airplanes and space shuttles. Again, these cells are also at the heart of why you hunch down your neck when you drive your car into a parking garage with low clearance, and why you have an intimate sense of the road’s texture based on the vibrations in your seat and the handling of the steering wheel, and why a good driver can confidently estimate to within an inch whether the corner of her bumper can clear another car while making a U-turn. Your body mandala incorporates your car, your bat, your racket, your pen, your chopsticks, your clothes—anything you don, wield, or guide—into your body’s personal sense of self.

So Iriki’s sushi chef wasn’t just on hair-trigger alert against slicing his finger off; hands, knife, and fish were, as far as his higher order body maps were concerned, all truly part of him. And the Chinese calligrapher who spoke of the bleeding brush was using a poetic metaphor to describe the very real experience of tool-body unification that a skilled master enjoys. And so it isn’t pure wishy-washy mysticism to strive to “become one with” your putter, your racket, your brush, your video avatar, or whatever tool it is you seek mastery with: It has a solid experiential and neuroscientific underpinning.

Takezo is part of a long line of experiments Iriki has carried out since his original study. Over that time he has performed several variations on basic rake use. He has given the monkeys a short rake, which they had to use to retrieve a longer rake in order to reach the raisins placed out of the short rake’s reach. He has put up vision-blocking mounds (like speed bumps) and surrounded the table with mirrors, forcing the monkeys to map the locations of the raisins in a new way, using their reflections rather than direct-line sight. He has put mirrors on the rakes themselves.

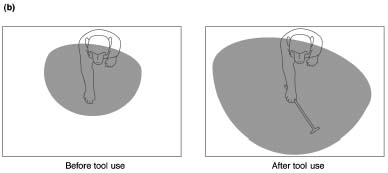

One day while watching his children play a Nintendo go-kart racing game, Iriki realized he should test whether his monkeys could learn to reconstitute their peripersonal space maps correctly using a variety of modified video feeds. Several subsequent experiments involved blocking the monkeys’ view of the table and their own arms using an opaque barrier at neck level, forcing them to watch and guide their own actions on a video monitor. At first he gave them direct, unaltered video feeds, but later he also gave them abstract representations to work with, such as a minimalist version in which a phosphorescent line segment represented the rake tip and a white dot represented the raisin on an otherwise black screen. In another experiment he put backward-facing mini cameras on the underside of the rakes.

In each case, the purpose is to see whether and how the monkeys are able to make the required mental transformations in body and action space to keep successfully raking in the raisins. In all cases so far, the monkeys have learned the skill, and their body schemas have morphed appropriately. It’s all an attempt to figure out how flexible these peripersonal space maps are. By understanding how they adapt with training, scientists like Iriki think they will gain important insights into the even greater tool-using ability of the ape lineage and the almost godlike flexibility of the human body schema.

The monkey must learn to use its body maps in a novel fashion in order to retrieve the food it wants.

Cyborgs of the Stone Age

One fact that intrigued Iriki was the unbreakable training period barrier he ran up against in every monkey he trained: ten to fourteen days. No matter how intensively they drilled, no matter how well they streamlined the training protocol, not even the most gifted macaque could be rake-trained in under ten days. This fact provided a strong clue as to what was going on.

So Iriki rake-trained a new cohort of monkeys and this time removed some of their parietal neurons and did a genetic analysis on them. Specifically, he measured the expression patterns of certain genes involved in brain growth and learning. He found these growth factors concentrated in the arm and elbow region of the parietal body map where the bimodal tool-use neurons were found. Many of the neurons in this map had grown long-distance connections (up to a few millimeters) to forge new synapses in other nearby body and body-centered space maps—connections that don’t normally exist in the Japanese macaque brain. Rake learning, for a monkey, evidently involves more than ordinary learning; it engages full-blown neuroplasticity, which requires a complex cascade of gene expression. This cascade can’t happen overnight, but needs several days to play out—ten to fourteen days, to be exact.

Iriki says there is an evolutionary insight in all this. It seems that this kind of enriched transwiring of parietal body-action maps has been steadily selected for in the primate line over the past twenty-five or so million years as monkeys became apes and then apes became human beings. It exists only as latent potential in monkeys, who don’t face the right combination of evolutionary pressure and opportunity to turn this tool-using circuitry into the developmental default. Still, being primates, their body mandalas are complex enough that it exists as realizable potential: With rigorous artificial training it can be coaxed from mere potential into behavioral reality.

In contrast, our nearest cousins, the subhuman apes, have a genuine instinct for tool use. It may pale beside ours, but it’s distinctly there. The reason is that the maps in their body mandalas are more richly interconnected from birth. The map enrichment Iriki managed to induce painstakingly in monkeys develops as a matter of course in the brains of apes. And so chimps and bonobos routinely fish for termites using peeled twigs, use rocks to crack nuts, prop up fallen branches to use as ladders, and so on. The other great apes, gorillas and orangutans, are generally less toolish, but they are certainly capable. For example, a wading gorilla will systematically poke a stick into a murky riverbed, searching for a ford. Humans have enriched their body mandalas even further, to the extent that we require—and even seek out with insatiable, instinctive hunger—rich sensorimotor interaction with tools starting in infancy.

Let’s take a quick look at human evolution. What web of circumstance led us to develop from a species of chimplike apes into a race of upright, spacefaring philosophers with aspirations to godhood? It didn’t begin with improved brains. For quite some time after our ancestors split with the ancestors of today’s chimpanzees, our brains and theirs remained pretty much the same size. What seems to have really jump-started it was a radical change to the body: our assumption of a permanently upright posture. No other primate does this. The rest of our primate kin are all inveterate knuckle-draggers.

We gave up a lot in the bargain. Our knees and spines are ill suited to bear the full force of our weight, and to this day these remain among the most vulnerable and poorly engineered parts of our anatomy. We also came away with a much higher center of mass, making us slow and topple-prone. If you’ve ever tried to catch a dog who would rather play keep-away than fetch, you’ll appreciate how much speed and maneuverability we sacrificed.

WHEN PLASTICITY MASQUERADES AS EVOLUTION

Penfield never learned this, but the primary touch homunculus is actually four homunculi laid out in parallel. Two deal with touch, and two specialize in proprioception—your felt sense of limb positions and movements. In the best-studied proprioceptive submap (neuroscientists call it Area 3a), the hand maps of different primate species contain a different number of finger maps.

Marmosets, which lack opposable thumbs and tend to use all their fingers together as a unit, don’t have separate finger maps. Their proprioceptive map looks like a mitten. Macaques, which have fully opposable thumbs and a larger repertoire of grips, possess distinct proprioceptive maps for the thumb and index finger. But their middle, ring, and little fingers are mapped together as one. Moving up the evolutionary ladder, the great apes (bonobos, chimpanzees, gorillas, and orangutans) have distinct proprioceptive maps for the thumb, index finger, and middle finger, and only their ring finger and pinky maps are blurred. We humans—known for our penchant for touch typing and pianism—have distinct maps for all five fingers.

How to explain this variation? One possibility is sheer genetics. Such differences in proprioceptive maps may be a product of brain evolution: As primate hands and fingers evolved freer, more mobile joints and new muscle attachments, the primate brain evolved specialized circuits to perceive and control its more versatile hand maps.

But there is another explanation: plasticity masquerading as evolution. Perhaps the genetic program responsible for creating this primary proprioceptive map in the brain changed little over the millions of years that separate monkeys from apes. Maybe the only things that evolved to a significant degree were the arms, hands, and fingers. Each species has its own set of mechanical and structural properties for limbs—the shape of bones and joints, the pattern of muscle and tendon attachments. Maybe the proprioceptive hand maps of monkeys are fingerless not because their genes decree it, but simply because anatomical limitations force the monkeys to use their hands as a unit.

Thus neuroplasticity might play a larger role than is generally appreciated in creating the “species-typical” organization of at least some brain maps. Evolution may craft anatomical improvements in body parts, and then the brain gerrymanders its body maps to capitalize on those improvements. Many unique features of the human mind and brain may be due to hidden, emergent processes like this one.

But the great benefit was hand freedom. Apes’ hands have to do double duty as fine manipulators and part-time forepaws. This subjects them to considerable engineering compromises. But by standing upright we cast off this limitation, and evolution could optimize our hands as hands proper. They became not only more delicate but a lot more nimble, dexterous, and clever. Our arms were also modified, especially at the shoulders. Aside from the gibbons—the so-called “lesser apes,” who hurtle arm-over-arm through the jungle canopy like Spider-Man—we are the only mammals that can pivot our forelimbs upward past our heads. The primate body mandala was already far richer than that of any other mammal, thanks mainly to our heavy reliance on our arms and hands and thumbs. We simply continued the trend.

Permanent two-legged walking coincided with the very first stone tools: sharp-edged shards of intentionally broken larger stones that were selected for their suitability as throwing weapons and butchery tools. Of course these tools were primitive compared with the precisely chipped, symmetrical, fire-hardened stone hand axes that their descendants—our ancestors—would be making several hundred thousand years down the line. But they were certainly tools, and they quickly became central to the bipedal apes’ way of life. (They certainly must have used sticks as well—as spears, clubs, staffs, and prods—but only their stone tools have survived into the present for archaeologists to find. In fact, chimps recently shocked primatologists by sharpening sticks and spearing bush babies for meat.) The craft of stone tool making improved through the ages in parallel with the remarkable ballooning of our brains. Our network of body maps grew richer and richer, and at the same time—and not just coincidentally—our brains ramified out into other realms of advanced mentation such as language, abstract thought, and a degree of moral and emotional sophistication never before seen on this earth.

In this view, the backbone of the story of human evolution has been the story of perfecting our knack for incorporating an increasingly sophisticated assortment of physical tools into our increasingly flexible body schemas. We evolved from apehood to personhood by developing a deep-seated cybernetic nature. Tool use went from a supplementary survival skill to an innate drive—what can be called the cybernetic instinct. We are able to fluidly and creatively reconfigure our body schema with ease; for us, it’s as simple as picking up a stick, or a pair of scissors, or a keychain. The impulse to augment our bodies with artifacts was bred into us over tens of thousands of generations on the African savanna. It turned us into tool-wielding savants who mastered fire, conquered the seven continents of the world, and eventually plunged a stick with a flag on it into the surface of the moon.

GRUNT, TWO, THREE…INFINITY!

Some body maps seem to have veered from their earlier functions. Consider two brain regions that seem to have their origins in ordinary homuncular body maps but bootstrapped their ways into a realm of infinity.

The first is a patch of cortex known as Broca’s area, which is critical for language processing. Damage to this area compromises the ability to apply the rules of grammar. Speech becomes “telegraphic”: It consists mostly of nouns and verbs, and it lacks the complex sequencing and embedding of words and clauses that separates Tarzan-speak from Ciceronian eloquence.

Broca’s area is acknowledged as the brain’s engine of grammatical fluency, but exactly what it does within the larger semantic economy of the human mind remains unclear. Though ensconced solidly in the frontal motor cortex, it doesn’t seem to be a motor area per se. But the location of Broca’s area is intriguing. First, its adjacency to the hand and mouth portion of the primary motor cortex is suggestive, given that the mouth and hands are the only two motor channels people use to spew forth the rat-a-tat patterns of spoken and sign language. Second, Broca’s area lies right next to the frontal mirror neuron system, which serves as a bridge linking your own body awareness and intentionality with those of others through an automatic process of mental action simulation (more on this in chapter 9). This Broca’s area–mirror neuron connection, though circumstantial, suggests a tantalizing fit with the gestural origin of language hypothesis, which holds that our protohuman ancestors began languagelike communication through a kind of sign language, then started augmenting it with vocalizations, and then, as our vocal tracts became more sophisticated, eventually supplanted the gestures like a cast-off scaffold.

The second body-map-derived area to consider is basically a counting region (a math region), and if it is damaged you may suffer acalculia (an inability to manipulate numbers). Located in the parietal cortex, it seems to have connections with the finger regions of the nearby primary touch map. Some scientists propose that this region forms while young children learn to count using their fingers; counting, adding, and subtracting become internalized and automated. If this view is correct, the region’s link to the fingers of the homunculus attests to math’s digital (digit comes from the Latin word for “finger”) origin.

The theme here, then, is body maps that outgrew their bodily origin and became something new. An area specializing in the control of our early grunts and the intention-reading system became one of the main hubs of language, which opened the human mind to an infinite scape of apprehension, from herbology to astrology to theology to Shakespeare. Meanwhile, back in the parietal lobe, the laborious finger-folding arithmeticking of preschoolers lays the foundation for their eventual entry into the timeless realms of Pythagoras, Euclid, and Sir Isaac Newton. Oh, what a body map can do! It’s no exaggeration to say that body maps played a key role in raising our minds from the limited sphere of our bodies into contemplations of infinity.

Not only can the human body mandala learn to incorporate tools—it needs exposure to tools for normal development to occur. A human is not the only animal with culture, just as an elephant is not the only animal that is deft with its nose. Culture is not just an important part of our survival kit; it is the essence of it. We are born in an absurdly immature state and spend many years utterly dependent on the physically and mentally mature adults around us. Our immature brains are culture sponges. We can afford to be born with such a relatively blank slate because our genes take it on faith, as it were, that we will be surrounded by a culture that is worth absorbing. The two main elements of human culture are a spoken language, and at least a minimal set of tools plus a matching set of tool-related skills. Even stone-age technology is a neurally highly advanced ability, requiring an extremely sophisticated body mandala. If it weren’t, other animals would have developed it too.

Cavemen in the Cyber Age

Jaron Lanier smiles as he recalls what it was like to have lobster legs sprouting from his body. He thinks all the way back to the early 1980s, when he and his friends were pioneering the technology of virtual reality. As he had done hundreds of times before, Lanier snugged the virtual headset over his eyes and found himself standing in a virtual room. But this time his avatar (his virtual body, programmed by his colleague Ann Lasko) was new, and not quite human. He held out his arms, and as usual, his avatar’s arms did the same thing. But looking down at his rib cage he could see six segmented legs, twitching gently like reeds being tousled by a breeze. He set about figuring out how to control them.

VIRTUAL REALITY 101

Virtual reality, or VR, uses a special headset connected to a computer to immerse you in an illusory three-dimensional environment. The headset covers each of your eyes with a miniature TV screen. The computer keeps track of where your head is in space and uses this information to calculate what you should see. Each eye is shown a slightly different view of the scene, so you perceive depth. Motion trackers tell the computer whenever your head swivels, pitches, tilts, or moves in any direction, and the viewpoint presented to each eye is updated accordingly. Thus, you have the convincing impression of being somewhere else—in a different room, inside a maze, on top of a mountain…anywhere, provided the computer running the simulation is fast enough.
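The head-tracking step can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the code of any real VR system: it works in a flat, top-down two-dimensional world, tracks only head position and yaw (the left-right swivel), and assumes an eye separation of 6.4 cm. The function name `eye_positions` and its parameters are invented for this example.

```python
import math


def eye_positions(head_xy, yaw_rad, eye_separation_m=0.064):
    """Return (left_eye, right_eye) positions for a tracked head.

    head_xy: (x, y) position of the head, seen from above, in meters.
    yaw_rad: facing direction, counterclockwise from the +x axis.
    eye_separation_m: assumed distance between the eyes.
    """
    # Unit vector pointing to the wearer's right: the facing direction
    # (cos yaw, sin yaw) rotated a quarter turn clockwise.
    right = (math.sin(yaw_rad), -math.cos(yaw_rad))
    half = eye_separation_m / 2.0
    x, y = head_xy
    left_eye = (x - right[0] * half, y - right[1] * half)
    right_eye = (x + right[0] * half, y + right[1] * half)
    return left_eye, right_eye


# Each time the tracker reports a new pose, both viewpoints are recomputed
# and the scene is drawn once per eye from its own position.
for yaw_deg in (90, 45, 0):
    left, right = eye_positions((0.0, 0.0), math.radians(yaw_deg))
    print(yaw_deg, left, right)
```

Because the two viewpoints are always a few centimeters apart, each screen shows a slightly shifted image, and that shift is what the brain reads as depth.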

The digital representation of your body in virtual reality is called an avatar. When two people interact face-to-face in a virtual environment, they are actually interacting avatar-to-avatar. An avatar may resemble the user who is “wearing” it, but it can be anything—a supermodel, a Zulu warrior, a space suit, a stick figure, a giant bowling pin, whatever. Virtual mirrors can be placed in a virtual environment so that users can “see themselves”—view their own avatars.

More advanced virtual reality setups give you some sort of interface device, commonly a cyberglove. The glove fits over your real hand, and just like your headset it sends position and motion information to the computer. The computer then adds the illusion of a hand to the virtual scene. The hand may be attached to your avatar or may seem to float in space, depending on how the simulation is programmed. You can use this cyberhand to interact with virtual objects in the scene. Common examples: pushing around virtual blocks, throwing virtual balls, firing virtual weapons, and pressing virtual buttons.

One of NASA’s VR interface systems. The head-mounted display provides both stereo vision and stereo sound, and two cybergloves give the wearer many options for interacting with the virtual environment. The wearer’s streaming hair was done for humor’s sake, but it illustrates VR’s potential for even deeper immersion using wind tunnels or fans, treadmills, smells, and more.

A cyberglove.

Of course the objects in a virtual reality simulation are weightless, and although they present visual surfaces to the eye, they offer no physical resistance to your hand. This is a major limitation. In the future, virtual reality researchers hope to develop good “haptic instrumentation” for virtual reality, meaning cybergloves or even cyberbodysuits that create artificial touch and force feedback to lend virtual objects qualities of solidity, texture, and heft. Current-generation virtual reality systems are limited to head-mounted displays, cybergloves, and variations such as the cybermouse, for controlling a three-dimensional floating cursor, and the cybergun, for war training simulation or video gaming.

Another challenge: Immersion into a virtual environment will need to emulate natural forces on the body, such as those that occur whenever you rotate your body and reach for an object. If the virtual environment is not extremely true to your normal body maps, you are prone to aftereffects such as reaching errors, motion sickness, and flashbacks. In other words, you could suffer cybersickness.

As Lanier experimentally moved different parts of his body (his real body), the lobster legs bent and waved in ways that seemed random at first. But in fact there was a formula to it. The computer that was rendering his avatar was also tracking the bending of his joints (his real joints) and feeding that information into a composite control signal for the lobster legs. The recipe was complex. A subtle combination of the angles of the left wrist, right knee, and right shoulder, for example, might contribute to the flexion of the “elbow” joint of the lower right lobster leg. It was much too complex and subtle for his rational mind to grasp. But the inherent sensorimotor intelligence of his body map network was able to learn the patterns involved at a gestalt level and acquire the knack for controlling the new appendages. He soon mapped the lobster avatar into his own body schema.
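To make the idea of a composite control signal concrete, here is a minimal Python sketch. The actual recipe used for Lanier's avatar is not given in this account, so everything specific below is an assumption: the simple weighted sum, the weights themselves, the 0 to 90 degree flexion range, and the name `lobster_joint_flexion` are all hypothetical.

```python
def lobster_joint_flexion(joint_angles, weights, max_flexion=90.0):
    """Blend several real joint angles into one virtual joint angle.

    joint_angles: measured angles of the wearer's real joints, in degrees.
    weights: hypothetical contribution of each real joint to this virtual joint.
    max_flexion: assumed upper limit for the virtual joint, in degrees.
    """
    # Weighted sum of the real joints that feed this virtual joint.
    raw = sum(weight * joint_angles[name] for name, weight in weights.items())
    # Clamp so the virtual joint never bends past its assumed limits.
    return max(0.0, min(max_flexion, raw))


# Hypothetical recipe for the "elbow" of the lower right lobster leg.
lower_right_elbow = {"left_wrist": 0.5, "right_knee": 0.3, "right_shoulder": 0.2}

# One snapshot of the wearer's real body; joints not in the recipe are ignored.
body = {"left_wrist": 40.0, "right_knee": 60.0, "right_shoulder": 10.0, "left_elbow": 90.0}

print(lobster_joint_flexion(body, lower_right_elbow))
```

Even in this stripped-down form, no single real joint controls the virtual one, which hints at why the mapping had to be learned by feel rather than reasoned out.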

The system did impose some moderate limitations on Lanier’s movements. So long as he wanted to retain control of the lobster arms, he had to move his real body according to certain patterns and postures that weren’t exactly the ones he would have chosen naturally. (An engineer would say that the lobster arms were robbing some of the “degrees of freedom” from Lanier’s movements.) But he was still able to navigate decently well and use his hands and arms to do other things. And in exchange, he was able to learn to control a new set of limbs.

“After a bit of practice I was able to move around and make the extra arms wag individually and make patterns of motion,” he says. “I was actually controlling them. It was a really interesting feeling.”

This was just one of many small informal experiments Lanier and his team ran in those days. They toyed with a variety of altered avatars to see how much distortion, reformation, augmentation, and general weirdness they could impose and still get the mind to accept the avatars as “my body.” They tried lengthened limbs, they tried shoulders at midtorso, they tried giant hands and stretchy arms like the superhero Plastic Man—and the mind would accept most of it. Just as Heidi’s body mandala had done on the operating table during her electrically induced out-of-body experience, the cybernaut’s body maps negotiated a new set of best-fit interpretations for the unnatural sensory input.

Consider one more of these experiments, which also involved a virtual, alien appendage with no analog on Lanier’s real body. But unlike the lobster arms, in this case the appendage actually seemed to have a sense of touch. Lanier explains:

You can put buzzers on different positions on your body, and if you adjust them within a given range of frequencies and phases you can generate phantom sensations between the buzzers. Now a very interesting variation on this is to put a buzzer in each hand and play with their frequencies and whatnot until you get the feeling of a sensation out there in thin air between your hands. It’s a weird, off-body tactile sensation. It’s a truly strange experience.

What we did was combine that illusion with an avatar that had a visual element that you could control using the lobster arm technique. The avatar had a short tentacle sticking out of your belly button that you could learn to wriggle around. Then with the buzzers going, it felt like the sensation was in the tip of this appendage. When you get the visual and tactile experiences going together, it becomes just astonishingly convincing. When you combine somatic illusions with visual feedback like that, you just get to this whole new level. It’s like your homunculus is maximally stretched at that point.

Lanier has a flair for naming things, most famously the term “virtual reality” itself. More recently he has begun popularizing a new catch-phrase that encapsulates virtual reality’s ability to radically reorganize the body schema: He calls it “homuncular flexibility.”

When you think of homuncular flexibility, think lobster arms. Think umbilical tentacle. Think of the illusions presented in chapter 3—the rubber hand, the shrinking waist, Pinocchio, the rubber neck—and imagine their analogues in virtual reality. When you first read about those illusions, you would have been within your rights to think, “Yes, it’s all very cool…but so what? Are these illusions anything more than just parlor tricks?” It is clear why a neuroscientist would be excited by them, but do they have any potential application outside the lab?

Yes, they do—or at least, they will. Not so much in the physical world, but in the virtual. In virtual reality, where your visual-spatial environment can be totally controlled, these illusions go from mere gee-whizzery to usefully exploitable properties of the body mandala, especially when supplemented by well-chosen touch and auditory inputs. The body sense you grew up with and have taken for granted your whole life is a lot more mutable than you ever suspected. Virtual reality is a powerful way to tap into that flexibility. Drastically altered bodies are going to be useful someday soon in video gaming, psychotherapy, rehabilitation medicine, and computer interface generally.

As for the umbilical tentacle and lobster arm experiments, Lanier says he and his pals were simply messing around with the technology. They were like the pilots of the first biplanes a century ago, doing the first loop-the-loops just for the thrill of it, just to see if they could. They didn’t do controlled experiments; they were just doing proof-of-concept work.

“But what a concept!” Lanier says. “I don’t know how far it can go, but I suspect that if we really play with it we can come up with some quite spectacular applications.”

VIRTUAL REALITY, FROM THEORY INTO PRACTICE

Most people’s intuition about VR’s usefulness is along conventional lines. Imagine immersive versions of video games like “The Sims,” “Second Life,” and “World of Warcraft”; virtual conventions where professionals mill about and wheel and deal face-to-face through their avatars; realistic urban warfare zones where U.S. marines can hone their tactics, including how to interact with civilians. VR is going to make all of these possible. They are obvious and inevitable.

Aside from applications in the training of surgeons, soldiers, and other professionals, virtual reality is a promising tool for certain kinds of learning related to body maps. Lanier offers the example of juggling:

“If you build a particular motor skill in a slowed-down environment, you learn it much faster,” he says. “I did the first experiment on that with juggling. I programmed in extra-slow juggling balls and slowly sped them up. People really responded with accelerated learning. This is now being offered as a rehab tool, by the way. And it works, if not for all motor skills, then at least for a subset of them that involve fast, ballistic types of motion.”
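The slowed-down training idea can be sketched in a few lines of Python. This is an illustration only, not Lanier's program. The function names, the starting speed of 0.3, and the linear ramp are assumptions chosen for clarity.

```python
GRAVITY = 9.81  # meters per second squared


def time_scale(session, total_sessions, start=0.3):
    """Playback speed for a practice session.

    Ramps linearly from `start` (slow motion) in session 0 up to 1.0
    (real time) in the final session. The ramp shape is an assumption.
    """
    if total_sessions <= 1:
        return 1.0
    progress = min(1.0, max(0.0, session / (total_sessions - 1)))
    return start + (1.0 - start) * progress


def ball_height(wall_clock_s, launch_speed, scale):
    """Height of a thrown virtual ball when simulated time runs at
    `scale` times wall-clock time (scale < 1 means slow motion)."""
    t = wall_clock_s * scale
    return max(0.0, launch_speed * t - 0.5 * GRAVITY * t * t)


# Print the playback speed for each of five hypothetical practice sessions.
for session in range(5):
    print(session, round(time_scale(session, 5), 3))
```

At half speed the ball follows the same arc but takes twice as long to trace it, which gives the learner more time to plan each catch before the schedule nudges the simulation back toward real time.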

Virtual reality is also finding increased use in treating phobias. In traditional exposure therapy, patients are exposed in gradually increasing doses to the things they fear (snakes, heights, spiders, and so on) and slowly grow inured to what once drove them to irrational heights of terror. But this kind of therapy can be expensive, dangerous, or impractical. In contrast, virtual exposure therapy has the virtue of immersing the patient in a full three-dimensional, interactive world that’s safe and programmable. Consider the difference between a still photo showing two shoes on the ledge of a skyscraper and the street below and a simulated experience in VR. Immersion is far more powerful than a photograph. VR fully engages a person’s parietal body and space maps, creating a sense of presence within the situation rather than a glimpse of the scene inside a static frame. The same goes for exposure to spiders and other objects of phobia, as well as for re-creating war zones for the treatment of post-traumatic stress disorder.

Finally, the pornography and sex industries are sure to drive a lot of innovation and demand for VR, as they have been doing with new technology for decades (e.g., videotape, pagers, and the Web). The intersection of porn and virtual reality is known as teledildonics. Not only will people be able to change how their partners’ and their own avatars appear, they’ll be able to alter the felt size and configuration of their body parts. If the touch delivered by a sexual interface device moves, say, one inch on your skin while its virtual counterpart moves two inches, your body schema will believe in the doubled dimensions of whatever it is you want to double in size. Without a doubt, this will have great commercial appeal.

Bending the Homunculus Till It Breaks

Ever since those early forays into homuncular flexibility, Lanier says he’s been deeply impressed by the brain’s willingness to let its sense of embodiment be warped by artificial sensory input. Unfortunately, he wasn’t able to pursue it very far due to hardware and software limitations. After an initial spike of interest during the 1980s, the public and much of the business world grew disillusioned with the hype surrounding virtual reality. The budding digerati recognized virtual reality’s revolutionary potential, but the technology of the day was simply inadequate to do more than reveal a rough outline of the future. But today with faster computers, advances in three-dimensional rendering, and cheaper components, there is a revival in virtual reality research, notably in fields relating to psychology. This includes the study of human-machine interfaces, where the power of homuncular flexibility holds great promise.

There’s no question the body schema is incredibly labile. The really interesting question then is, How flexible? Where does it all break down? How far can you stretch the homunculus before it revolts? How far can your body schema bend before the illusion is spoiled?

Enter Dr. Jeremy Bailenson, an assistant professor of communication at Stanford University who recently teamed up with Lanier to repeat and extend some of those early shoestring experiments. Bailenson’s research explores the intersections between virtual reality, body schema, homuncular flexibility, and social psychology.

His lab is a small room in one of Stanford’s old brick buildings crammed full of computer equipment and workstations. Adjoining it is a much larger and completely empty room where people decked out in virtual reality headsets can wander without fear of tripping over any peskily nonvirtual obstacles.

“We have a bunch of projects going,” says Bailenson, looking around the room considering which ones to demonstrate. “Ah, yes, here’s one that’s related to homuncular flexibility.”

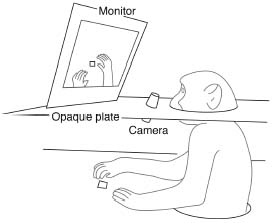

He indicates a computer workstation with a Web camera perched atop the monitor. The camera tracks your facial expressions and transforms them into control signals for a robot hand. The hand, which is wearing a red woolen mitten, juts out from beside the monitor as though offering a handshake. Raising or crinkling your brow opens and closes the hand’s grip. Moving your head up and down controls the hand’s angle in a vertical plane. After a bit of practice you can learn to use your face and neck to deliver a handshake. This prototype works fine, says Bailenson. But his lab will need to acquire a more fully articulated robot hand before he can see how much manual dexterity a person can exert with face muscles.

At a nearby workstation Bailenson indicates another face-tracking camera. This one uses your expressions to alter a shape floating on the screen. A neutral expression elicits a green cube. An angry face transforms it into a red octahedron. A smile turns it into a yellow pyramid. Bailenson says he wants to see if you can learn to use this interface to read emotions as easily as you can from faces.

Bailenson also wants to see how people’s avatars affect their social interactions. For example, in one study he randomly assigned avatars of different heights to volunteers. Imagine you are a subject in the study. Your avatar might be taller or shorter than your real body, or it might be close to your true height. In the first phase of the experiment you are allowed to “see yourself” in a virtual mirror in an otherwise empty virtual room. Bailenson tells you to walk around the room and get used to the headgear. Subconsciously you also register your new height (assuming it has changed).

For phase two you go through a virtual door into another room where you meet face-to-face with a volunteer “wearing” a different avatar. Both of you are then required to play a simple negotiation game in which you must come to a mutual decision about how to split up a sum of money. The game ends and the money is assigned to each player only when both of you agree to the terms you’ve negotiated.

You probably think you wouldn’t be a pushover just because you happened to get a shorter avatar. You probably think the height of your avatar would have no influence on your negotiation skills. And you may be right, but don’t be too sure. Bailenson found a dramatic effect: The majority of players, regardless of whether they are tall, short, or of gender-typical height in real life, will negotiate much less effectively and less aggressively if they receive a shorter avatar. Bailenson says the finding floored him.

“If your avatar is just six inches shorter than your negotiating partner, you’re twice as likely to end up accepting really, really unfair terms at the end of the transaction,” he says. He is running similar experiments that vary race and gender. The interplay between your body schema and body image is as slippery and as complex as ever.

Bailenson is also running experiments in which he measures people’s heart rate and skin conductance—the autonomic signals measured in lie detector tests—and uses that information to modulate certain features of their avatars. For example, he makes people’s heart rates control their avatars’ heights within a certain range. If your heart is beating fast, your avatar is four inches taller than when your heart rate is slow. Or your skin conductance—basically, the sweatiness of your palms—determines your avatar’s translucency. The more uncomfortable or nervous you are, the more you sweat, and the more see-through you become. The more confident you feel, or the better you start to perform, the more solid-looking you are.
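A rough sketch of how such a mapping might work, in Python. The four-inch height span comes from the description above. Everything else is a placeholder: the heart-rate range, the skin-conductance range in microsiemens, the minimum opacity, and the function names are assumptions, not details of Bailenson's software.

```python
def _unit(value, low, high):
    """Rescale `value` from [low, high] to [0, 1], clamping out-of-range input."""
    return max(0.0, min(1.0, (value - low) / (high - low)))


def avatar_height_offset_inches(heart_rate_bpm, slow=60.0, fast=120.0, span=4.0):
    """Extra avatar height: 0 inches at a slow pulse, `span` inches at a fast one.

    The 60 to 120 bpm range is an assumed calibration.
    """
    return span * _unit(heart_rate_bpm, slow, fast)


def avatar_opacity(skin_conductance_us, calm=2.0, nervous=20.0, min_opacity=0.2):
    """Opacity from 1.0 (solid) down to `min_opacity` (nearly see-through).

    Higher skin conductance (sweatier palms) makes the avatar more translucent.
    The conductance range is an assumed calibration.
    """
    return 1.0 - (1.0 - min_opacity) * _unit(skin_conductance_us, calm, nervous)


print(avatar_height_offset_inches(90.0))  # pulse midway through the assumed range
print(avatar_opacity(11.0))               # conductance midway through the assumed range
```

Clamping keeps the avatar inside "a certain range," as the text puts it, so a racing heart cannot make anyone a giant.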

Bailenson expects these methods will inject a new dimension into social psychology—the study of how people interact, negotiate, influence, perceive, and judge one another (and themselves). In real-life interaction people’s body images and schemas are relatively stable. But with virtual reality your body schema and image, which are integral to how you see yourself and treat others, are as flexible as your wardrobe. Results from these studies may impact how people end up using virtual reality as more and more social and business interactions migrate into shared virtual environments.

What will happen in a world where you can strike up friendships from opposite ends of the earth without ever actually seeing other people’s real faces and body language? What will it do to the business world if everyone is hyperconscious of avatars’ heights, races, and genders? How might autonomic changes to your avatar affect communication between you and your online friends, or with your therapist, or in multiplayer games where negotiation and dialogue are important elements of play? Only time and further research will tell.

Through the Looking Glass

Dr. Iriki also has an interest in the body schema’s interface with cyberspace. While he watched his children play their Nintendo road racing game, he was impressed by how absorbed they were. They not only had an emotional investment in winning, they seemed to be embodied in the game world itself. As their insane cartoon go-karts screeched around corners, the kids leaned their weight into the couch as though it would help balance their turns. When their karts jumped or dodged, the kids mimicked or responded to the motions with their whole bodies. The kids’ enthusiastic body movements got Iriki thinking about his monkeys again, and convinced him that he should start them on video displays and joysticks. Physical tools like rakes may be one thing, but can monkeys make the next leap, into the use of virtual tools? Indeed they can.

Takezo is again sitting in his monkey chair, but now he is adept with the joystick. He fiddles with it as intently as any twelve-year-old Nintendo addict fighting his showdown with the end-of-level boss monster. With his right hand, Takezo sweeps his viewfinder around in search of the next raisin. He spots it. His left hand darts out with its rake and pulls in the treat. Just like the rake, the joystick and the camera it controls have been added to his bodily sense of self; they aren’t lifeless foreign objects anymore, but honorary eye and neck muscles. Takezo can use the correlation between his hand movements on the joystick and the shifting view it creates on his monitor to map the table space in front of him. The instant he spots the raisin, his parietal space maps talk to his frontal motor maps to create a precisely targeted rake-reaching motor program. The actual table is invisible, but he is no longer dependent on “real,” unmediated seeing and reaching. Thanks to the plasticity-driven changes in his brain, his interconnected body maps create an intuitive link in his mind between screen space, table space, and reach space.

Looking back, maybe it isn’t too surprising that monkeys are able to use cybertools such as joysticks and shifting camera views. The primate body mandala is formidably flexible. In an earlier experiment, Iriki got curious to see what would happen if he showed the monkeys images of their hands greatly enlarged, like a Mickey Mouse glove. As expected, their parietal hand maps expanded to accommodate the altered visual input. Their body schemas willingly accepted the illusion of a supersized hand.

Your body schema will also readily accept changes in scale and magnitude, of course. Not only in size, but in distance. For example, if your virtual hand moves five times as far and as fast as your actual hand does, you will experience that directly. In fact, if the distance multiplier isn’t insanely large, you won’t even notice!
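In software terms, a distance multiplier is just a gain applied to the tracked hand's displacement from some reference point. Here is a minimal Python sketch. The function name, the choice of reference point, and the coordinates are hypothetical. Only the gain of five comes from the example above.

```python
def virtual_hand_position(origin, real_position, gain=5.0):
    """Scale the real hand's displacement from `origin` by `gain`.

    `origin` and `real_position` are (x, y, z) tuples in meters. The virtual
    hand travels `gain` times as far in the same direction, and, because it
    covers that distance in the same time, `gain` times as fast.
    """
    return tuple(o + gain * (r - o) for o, r in zip(origin, real_position))


shoulder = (0.0, 0.0, 0.0)    # assumed reference point
real_hand = (0.1, 0.0, 0.2)   # tracked hand, 10 cm right and 20 cm forward
print(virtual_hand_position(shoulder, real_hand))
```

The same scaling applied to touch, rather than to hand position, gives the doubled dimensions described earlier for sexual interface devices: a gain of 2.0 makes one inch on the skin correspond to two inches in the virtual world.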

Nor do kids (of all ages) notice the degree to which their own body schemas absorb the characters in the games they love to play. Homuncular flexibility explains why. Their deep captivation occurs not just because of the graphics, the fast pace, the bells and whistles, but also because their body maps are infused with the cybernetic instinct.

It is worth noting that Nintendo’s latest gaming console, the Wii (pronounced “we”), absorbs the player’s body schema more deeply than any other system on the market. It does this through a novel control device—a small wireless wand that keeps track of how it is moved and rotated in space. Every swoosh, twist, and jerk of the wand translates into a related move in the video game. Swords, baseball bats, golf clubs, boat tillers, you name it, faithfully mirror the player’s actions. The wand also emits sound effects and vibrations, deepening the illusion by engaging the body mandala through additional senses.

In the early weeks following the Wii’s commercial release at the end of 2006, Nintendo had to recall and strengthen the wrist straps looped to the control wands because they kept breaking and flying from players’ sweaty hands, shattering windows, cracking television screens, and bruising foreheads. Many players say that after a few minutes of play the illusion can become so convincing that it is hard not to get overenthusiastic. They forget they are not truly wielding a sword. They become heedless of the sofas and coffee tables in the living room. Think of the Wii as an intermediate step between “traditional” console games like the Xbox and future VR-based gaming systems, which seem destined to make the Wii as tame and passé as “Tetris.” Consider what the Canadian educator, philosopher, and scholar Marshall McLuhan wrote about in the 1960s. Television, he argued, represents a technological extension of your ability to see and hear. In a similar vein, video games and VR games on the horizon represent a technological extension of your body schema into a constructed world of pure fantasy.

It is an area of gleeful geek speculation as to what’s going to happen in another generation or two once virtual reality technology gets both cheap enough and high-quality enough to penetrate the consumer electronics market. What, if anything, will it do for (or to) our homuncularly flexible children? Will it benefit them? Might it harm them, socially or neurally, in unforeseeable ways? These questions are wide open.

On the negative side is the litany of problems surrounding today’s video games and online allures. Kids ignore homework and chores, according to critics. They isolate themselves from flesh-and-blood peers and family, entrenching antisocial habits. They sit for hour after hour, day after day, moving nothing but fingers and eyeballs and growing fat and flabby from inactivity.

As video games and virtual communities are scaled up beyond the confines of today’s relatively small, flat-screen monitors into the total-immersion experience of virtual reality, these trends may amplify. Already some hard-core players of massively multiplayer games like “The Sims,” “Second Life,” and “Ultima Online” value their virtual relationships and their avatars’ well-being more than their own. When virtual reality becomes ubiquitous, will such people recede even further from the real world? Will their ranks multiply?

Another issue is whether violent video games increase aggression in chronic gamers. There is abundant controversy over the effects of games such as “Quake,” “Doom,” and the infamous “Grand Theft Auto” on young people’s behavior. Evidence that video games may be harmful is found in a recent study where teenagers were randomly assigned to play either a violent video game or a nonviolent video game for thirty minutes. Then their brains were scanned to see what areas had been engaged by the games. Compared with teens who played nonviolent games, those who were immersed in violence showed less activity in the prefrontal cortex (an area involved in inhibition, concentration, and self-control) and increased activity in the amygdala, which corresponds to heightened emotion. However, as the video game industry and many civil libertarians were quick to point out, the study did not show that these differences were long-term, or even harmful while they lasted. When you read the literature on this controversy it quickly takes on the flavor of a he-said, she-said squabble, with neither side able to soundly refute the other.

Whichever side you take in today’s debate, it is legitimate to ask whether tomorrow’s fully immersive, blood-drenched, morality-free games will be patently harmful. Expect a lot more controversy over these issues through the 2010s and ’20s.

Lanier is in the optimists’ camp. He thinks the “genuine weirdness” of VR-turbocharged homuncular flexibility will have many uses and benefits, but first people will have to go through a less imaginative phase of treating virtual reality as essentially just a three-dimensional interactive movie—that is, you simply “don” an avatar and walk around in it like a Halloween costume. The avatar might be of a different gender, it might be taller or shorter than your real body, it might look like an elven knight or a Confederate soldier, but basically you participate in the virtual world on the terms of your natural body schema. But Lanier sees that as merely the entry point. “The future application of virtual reality, and the real art of it,” he says, “will be to change your sensorimotor loops, to change the nature of your own body perception through the avatar.”

Thus imagine you are a child learning about dinosaurs. Your virtual-reality-assisted lesson will not merely take you back to the late Cretaceous like a time-traveling field zoologist sent to observe Tyrannosaurus rex in its natural habitat; you will actually become the dinosaur. Your T. rex avatar’s arms are foreshortened and two-clawed, and, like Lanier’s lobster arms, are much less dexterous than your real hands. But this dexterity isn’t being lost, it’s just being rerouted and translated to other parts of your body. Some of your hand and arm movements are fed into greatly enhanced mobility for your huge and powerful neck and jaws, and the rest is fed into a control signal for a giant tail you can swoosh to and fro. After a while of playing around in this avatar, your primate-style body schema fades into the background and is supplanted by a saurian one.

Lanier expounds on avatar educational possibilities. “In learning trigonometry, you could become the triangle,” he says. With the right interface, being isosceles or right-angled or congruent could have muscular, sensory, physically intuitive consequences that reinforce many of the important trig concepts that kids struggle to grasp when they are taught solely using chalk-scrawled figures and Greek terminology.

“In learning biology,” he continues, “you become a molecule with a specific shape that only docks onto certain receptors. Suddenly the lessons are not so abstract. From early in life, every child has the experience of being a triangle, a molecule, a T. rex, an octopus, and God knows what. So in addition to learning how to control their own bodies, they learn from early childhood how to control other bodies.”

The interesting question then is, Does that mean anything? How does that affect development, how does that affect cognition, how does that affect culture, how does that affect society?

“It’s going to be a very interesting experiment,” Lanier says. “It won’t be systematic. It will be commercial and it will be driven by a lot of user interest. It will be like the World Wide Web was ten years ago and like MySpace is today. Twenty years from now, give or take, the tools for this will be everywhere, and then a generation of kids will grow up with it.”

Lanier thinks the outcome will be positive overall. He predicts that early, lifelong experience with homuncular flexibility will be similar to growing up multilingual. Kids who grow up speaking more than one language get slight but measurable cognitive advantages that last a lifetime.

“In the same way, being multihomuncular”—he chuckles—“will be a lifelong enhancement.”