CHAPTER 4

The Machine That Made Art

Quite possibly the most famous statement ever made about Hollywood is the one that screenwriter William Goldman laid out in his 1983 memoir, Adventures in the Screen Trade. Combing through his decades in the film industry for something approaching a profundity, all Goldman was able to muster was the idea that, when it comes to the tricky business of moviemaking, “Nobody knows anything. Did you know,” he asks,

that Raiders of the Lost Ark was offered to every single studio in town and they all turned it down? All except Paramount. Why did Paramount say yes? Because nobody knows anything. And why did all the other studios say no? Because nobody knows anything. And why did Universal, the mightiest studio of them all, pass on Star Wars . . . ? Because nobody . . . knows the least goddam [sic] thing about what is or isn’t going to work at the box office.1

Goldman is hardly alone in his protestations. In his autobiography, Mike Medavoy, the legendary film executive who had a hand in Apocalypse Now, One Flew Over the Cuckoo’s Nest and The Silence of the Lambs, opines, “The movie business is probably the most irrational business in the world . . . [It] is governed by a set of rules that are absolutely irrational.”2 Hollywood lore is littered with stories of surefire winners that become flops, and surefire flops that become winners. There are niche films that appeal to everyone, and mainstream films that appeal to no one. Practically no Hollywood decision-maker has an unblemished record and, when one considers the facts, it’s difficult to entirely blame them.

• • •

Take the ballad of the two A-list directors, for example. In the mid-2000s, James Cameron announced that he was busy working on a mysterious new script called “Project 880.” Cameron had previously directed a string of hit movies, including The Terminator, Terminator 2, Aliens, The Abyss, True Lies and Titanic, the latter of which won 11 Academy Awards and became the first film in history to earn more than a billion dollars. For the past ten years, however, he had been sidelined making documentaries. Moreover, his proposed new film didn’t have any major stars, he was planning to shoot it in the experimental 3-D format, and he was asking for $237 million to do so. Perhaps against more rational judgment, the film was nonetheless made, and when it arrived in cinemas it was instantly labeled, in the words of one reviewer, “The Most Expensive American Film Ever . . . And Possibly the Most Anti-American One Too.”3

Would you have given Cameron the money to make it? The correct answer, as many cinema-goers will be aware, is yes. Retitled Avatar, Project 880 proceeded to smash the record set by Titanic, becoming the first film in history to earn more than $2 billion at the box office.

At around the same time that Avatar was gaining momentum, a second project was doing the rounds in Hollywood. This was another science-fiction film, also in 3-D, based upon a classic children’s story, with a script cowritten by a Pulitzer Prize–winning author. It was to be made by Andrew Stanton, a director with an unimpeachable record who had previously helped create the highly successful Pixar films WALL-E and Finding Nemo, along with every entry in the acclaimed Toy Story series. Stanton’s film (let’s call it “Project X”) came with a proposed $250 million price tag, a shade more than Project 880. Project X received the go-ahead too, only it didn’t turn out to be the next Avatar, but rather the first John Carter: a film that lost almost $200 million for its studio and resulted in the resignation of the head of Walt Disney Studios (the company that bankrolled it), despite the fact that he had only taken the job after the project was already in development. As per Goldman’s Law, nobody knows anything.

Patterns Everywhere

This is, of course, exactly the type of conclusion that challenge-seeking technologists love to hear about. The idea that there should be something (the entertainment industry at that) that is entirely unpredictable is catnip to the formulaic mind. As it happens, blockbuster movies and high-tech start-ups do have a fair amount in common. Aside from the fact that most are flops, and investors are therefore reliant on the winners being sufficiently big that they more than offset the losers, there are few other industries where the power of the “elevator pitch” holds more sway. The elevator pitch is the idea that any popular concept should be sufficiently simple that it can be explained over the course of a single elevator ride. Perhaps not coincidentally, this is roughly the time frame of a 30-second commercial: the tool most commonly used for selling movies to a wide audience.

Like almost every popular idea, the elevator pitch (also known in Hollywood as the “high concept” phenomenon) has been attributed to a number of industry players, although the closest thing to an agreed-upon dictionary definition still belongs to Steven Spielberg. “If a person can tell me the idea in twenty-five words or less, it’s going to make a pretty good movie,” the director of Jurassic Park and E.T. the Extra-Terrestrial has said. “I like ideas . . . that you can hold in your hand.”4 Would you be in the least surprised to hear that Spielberg’s father, Arnold Spielberg, was a pioneering computer scientist who designed and patented the first electronic library system that allowed the searching of what was then considered to be vast amounts of data? Steven Spielberg might have been a flop as a science student, but The Formula was in his blood.5

The Formula also runs through the veins of another Hollywood figure, whom Time magazine once praised for his “machine-like drive,” and who originally planned to study engineering at MIT, before instead taking the acting route and going on to star in an almost unblemished run of box office smashes. Early in Will Smith’s career, when he was little more than a fad pop star appearing in his first television show, The Fresh Prince of Bel-Air, the aspiring thespian sat down with his manager and attempted to work out a formula that would transform him from a nobody into “the biggest movie star in the world.” Smith describes himself as a “student of the patterns of the universe.” At a time when he was struggling to be seen by a single casting director, Smith spent his days scrutinizing industry trade papers for trends in what global audiences wanted to see. “We looked at them and said, ‘Okay, what are the patterns?’” he later recalled in an interview with Time magazine.6 “We realized that ten out of ten had special effects. Nine out of ten had special effects with creatures. Eight out of ten had special effects with creatures and a love story . . .” Two decades later, and with his films having grossed in excess of $6.36 billion worldwide, Smith’s methodology hasn’t changed a great deal. “Every Monday morning, we sit down [and say], ‘Okay, what happened this weekend, and what are the things that resemble things that have happened the last ten, twenty, thirty weekends?’” he noted.

Of course, as big a box-office attraction as Smith undoubtedly is, when it comes to universal pattern spotting, he is still strictly small-fry.

The Future of Movies

In the United Kingdom, there is a company called Epagogix that takes the Will Smith approach to movie prediction, only several orders of magnitude greater and without the movie-star good looks. Operating out of a cramped office in Kennington, where a handful of data analysts sit hunched over their computers and the walls are covered with old film posters, Epagogix is the unlikely secret weapon employed by some of the biggest studios in Hollywood. Named after the Greek word for the path that leads from experience to knowledge, Epagogix makes a bold claim for itself: according to CEO and cofounder Nick Meaney, it can accurately forecast how much money a particular film is going to earn at the box office before the film in question is even made.

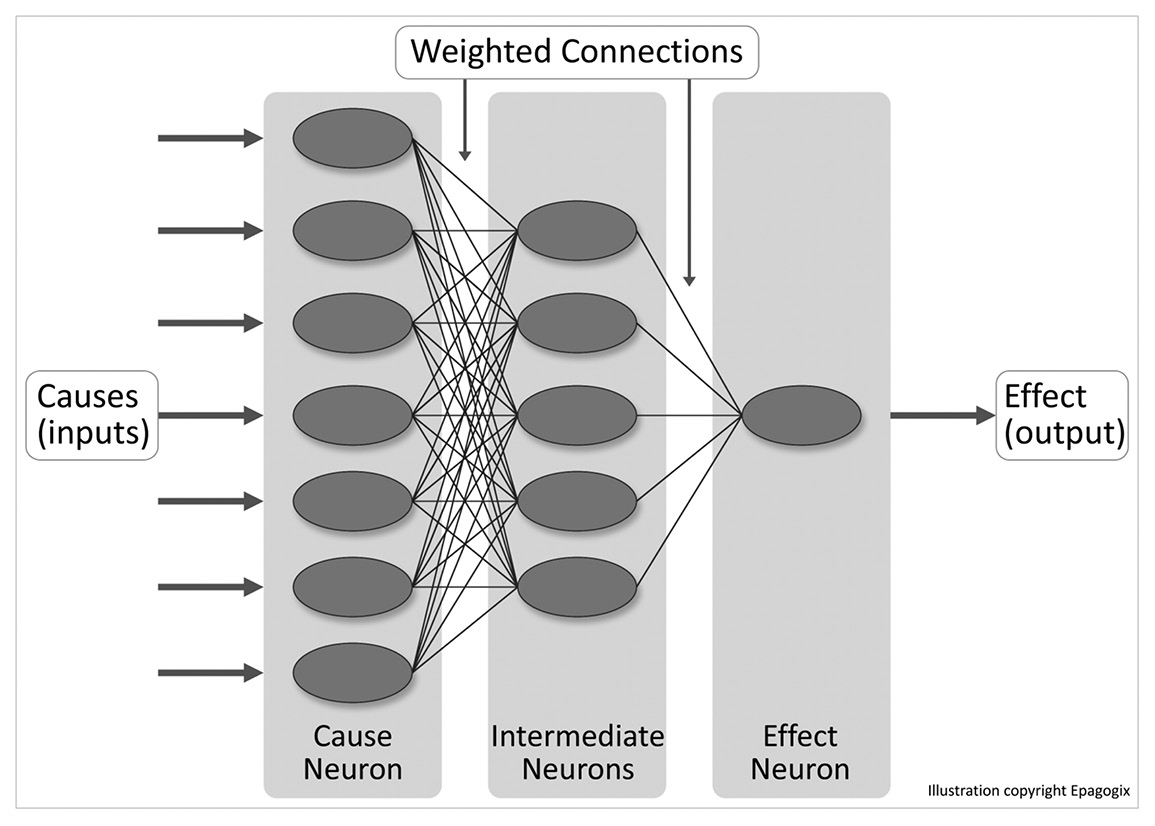

Epagogix’s route to Hollywood was something of an unusual one. Meaney, a fortysomething Brit with a mop of thick black hair and a face faintly reminiscent of a midcareer Orson Welles, had a background in risk management. During his career Meaney was introduced by several mathematician friends to what are called neural networks: vast, artificial brains used for analyzing the link between cause and effect in situations where this relationship is complex, unclear or both. A neural network could be used, for example, to read and analyze the sound recordings taken from the wheels of a train as it moves along railway tracks. Given the right information it could then predict when a particular stretch of track is in need of engineering, rather than waiting for a major crash to occur. Meaney saw that neural networks might be a valuable insurance tool, but the idea was quickly shot down by his bosses. The problem, he realized, is that insurance doesn’t work like this: premiums reflect the actuarial likelihood that a particular event is going to occur. The better you become at stopping a particular problem from happening, the lower the premiums that can be charged to insure against it.

Movies, however, could benefit from that kind of out-of-the-box thinking. With production costs for a big-budget movie running into the tens or even hundreds of millions of dollars, a suitably large flop can all but wipe out a movie studio. This is exactly what happened in 1980, when the infamous turkey Heaven’s Gate (singled out by Guardian critic Joe Queenan as the worst film ever made) came close to destroying United Artists. One studio boss told Meaney that if someone was able to come up with an algorithm capable of stopping a single money-losing film from being made each year, the overall effect on that studio’s bottom line would be “immeasurable.” Intrigued, Meaney set about bringing such a turkey-shooting formula to life. He teamed up with some data-analyst friends who had been working on an algorithm designed to predict the television ratings of hit shows. Between them they developed a system that could analyze a movie script against 30,073,680 unique scoring combinations (ranging from whether there are clearly defined villains to the presence or lack of a sidekick character) before cranking out a projected box office figure.

To test the system, a major Hollywood studio sent Epagogix the scripts for nine completed films ready for release and asked them to use the neural network to generate forecasts for how much each would make. To complicate matters, Meaney and his colleagues weren’t given any information about which stars the films would feature, who they were directed by, or even what the marketing budget was. In three of the nine cases, the algorithm missed by a considerable margin, but in the six others the predictions were eerily accurate. Perhaps the most impressive prediction of all concerned a $50 million film called Lucky You. Lucky You starred the famous actress Drew Barrymore, was directed by Curtis Hanson, the man who had made the hit movies 8 Mile and L.A. Confidential, and was written by Eric Roth, who had previously penned the screenplay for Forrest Gump. It also concerned a popular subject: the world of high-stakes professional poker. The studio was projecting big numbers for Lucky You. Epagogix’s neural network, on the other hand, predicted a paltry $7 million. The film ended up earning just $6 million. From this point on, Epagogix began to get regular work.

Illustration of a simplified neural network. The cat’s cradle of connections in the middle, labeled the “intermediate neurons,” is the proprietary “secret sauce” that makes Epagogix’s system tick.

A fair question, of course, is why it takes a computer to do this. As noted, Will Smith does something not entirely dissimilar from his kitchen table at the start of every week. Could a person not go through the 30,073,680 unique scoring combinations and check off how many of the ingredients a particular script adhered to? The simple answer to this is no. While it would certainly be possible (albeit time-consuming) to plot each factor separately, this would say nothing about how the individual causal variables interact with one another to affect box office takings. To paraphrase a line from George Orwell’s Animal Farm, all numbers might be equal, but some numbers are more equal than others.
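The point about variables interacting can be made concrete with a toy example. What follows is a minimal Python sketch of a tiny neural network with two hypothetical yes-or-no script features, a clearly defined villain and a sidekick (borrowed from the factors mentioned above). The weights are invented purely for illustration and have nothing to do with Epagogix’s proprietary model; the sketch only shows that, once a hidden layer with a nonlinearity is involved, the value of one ingredient depends on which other ingredients are present.

```python
from itertools import product


def relu(x: float) -> float:
    """Rectified linear unit: the nonlinearity that lets features interact."""
    return max(0.0, x)


# Hypothetical, hand-picked weights for a toy network with two binary inputs
# (villain, sidekick). NOT Epagogix's features or weights; illustration only.
HIDDEN_WEIGHTS = [
    ([1.0, 1.0], -1.0),  # hidden neuron 1 fires only when BOTH features are present
    ([1.0, 0.0], 0.0),   # hidden neuron 2 responds to the villain feature alone
]
OUTPUT_WEIGHTS = [20.0, 10.0]
OUTPUT_BIAS = 5.0


def predict_gross(villain: int, sidekick: int) -> float:
    """Return a made-up box-office forecast (in $ millions) for one script."""
    inputs = [villain, sidekick]
    hidden = [relu(sum(w * x for w, x in zip(ws, inputs)) + b) for ws, b in HIDDEN_WEIGHTS]
    return sum(w * h for w, h in zip(OUTPUT_WEIGHTS, hidden)) + OUTPUT_BIAS


def sidekick_effect(villain: int) -> float:
    """Change in the forecast from adding a sidekick, holding the villain fixed."""
    return predict_gross(villain, 1) - predict_gross(villain, 0)


if __name__ == "__main__":
    for villain, sidekick in product([0, 1], repeat=2):
        print(f"villain={villain} sidekick={sidekick} -> ${predict_gross(villain, sidekick):.0f}M")
    print("sidekick effect without villain:", sidekick_effect(0))
    print("sidekick effect with villain:", sidekick_effect(1))
```

In this toy network, adding a sidekick is worth nothing to the forecast when there is no villain and $20 million when there is one. A checklist that scores each factor separately cannot represent that, which is the sense in which the answer “depends.”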

Think about it in terms of a successful movie. In March 2012, The Hunger Games was released at cinemas and rapidly became a huge hit. But did The Hunger Games become a hit because it was based on a series of books, which had also been hits, and therefore had a built-in audience? Did it become a hit because it starred the actress Jennifer Lawrence, whom Rolling Stone magazine once referred to as “the coolest chick in Hollywood”?7 Or did it become a hit because it was released at a time of year when a lot of young people were out of school or college and were therefore free to go to the cinema? The best that anyone can say about any one of these questions is: maybe. The Hunger Games was based on a successful series of books, it did star a hot young actress popular with the film’s key demographic, and it was released during spring break in the United States, when large numbers of young people were off school. But the same could be said for plenty of other films that don’t go on to become massive hits. And although The Hunger Games ultimately took more than $686 million in cinemas, how does anyone know whether all of the factors mentioned resulted in positive gains? Could it be that there was a potential audience out there who stayed home because they had heard that the film was based on a book, or because it starred Jennifer Lawrence, or because they knew that the cinema would be full of rowdy youths fresh out of school? Might the film have earned a further $200 million if only its distributors had known to hold out until later in the year to release it?

These are the types of questions Epagogix seeks to quantify. A studio that employs Meaney and his colleagues will send Epagogix a shooting script, a proposed cast list, and a note about the specific time of year they plan to release their film. In return, they receive a sealed brown envelope containing the neural network’s report. “We used to send reports that were this thick,” Meaney says, creating a gap between his thumb and his forefinger to indicate a dossier the thickness of an average issue of Vogue magazine. Unconvinced that they were being read all the way through, the company now sends just two or three pages, bound by a single staple. “You might think that studios would want more than that, but in fact we spent a lot of time trimming these down,” he continues.

The last page of the report is the most important one: the place where the projected box-office forecast for the film is listed. There is also a second, mysterious number: usually around 10 percent higher than the first figure, but sometimes up to twice its value. This figure is the predicted gross for the film on the condition that certain recommended tweaks are made to the script. Since regression analysis is used to examine each script in forensic detail, Meaney explains, the neural network can be used to single out individual elements where the potential yield is not where it should be, or where one part of the film is dragging down others. Depending upon your disposition and trust in technology, this is the point at which Epagogix takes a turn for either the miraculous or the unnerving. It is not difficult to imagine that some screenwriters would welcome the types of notes that might allow them to create a record-breaking movie, while others will detest the idea that an algorithm is telling them what to do.

It’s not just scriptwriters who have the potential to be confused either. “One of the studio heads that we deal with on a regular basis is a very smart guy,” Meaney says. “Early on in our dialogue with him he used to ask questions like, ‘What would happen if the main character wears a red shirt? What difference does that make in your system?’ He wasn’t trying to catch us out; he was trying to grasp what we do. I was never able to explain to his satisfaction that it all depends. Is this a change that can be made without altering any of the other variables that the neural network ranks upon? Very seldom is there a movie where any significant alteration doesn’t mean changes elsewhere.” To modify a phrase coined by chaos theory pioneer Edward Lorenz, a butterfly that flaps its wings in the first minute of a movie may well cause a hurricane in the middle of the third act.

The studio boss to whom Meaney refers was likely picking a purposely arbitrary detail by mentioning the color of a character’s shirt. After all, who ever formed their opinion about which movie to go and see on a Saturday night, or which film to recommend to friends, on the basis of whether the protagonist wears a blue shirt or a red shirt? But Meaney was nonetheless bothered by the comment: not because the studio boss was wrong, but because he wanted to reassure himself that saying “it depends” wasn’t a cop-out. That evening he phoned one of his Epagogix colleagues back in the UK. “Quick as a flash, they said to me, ‘Of course it depends.’ Think about Schindler’s List,” Meaney recalls. “At the end of the film you get a glimpse of color and it’s an absolutely pivotal moment in the narrative. In terms of our system it suddenly put the question into an historical context. In that particular film it makes the world of difference, while in another it might make no difference at all. Everything’s relative.”8

Parallel Universes

One way to examine whether there really are universal rules to be found in art would be to rewind time and see whether the same things become popular a second time around. If the deterministic formula that underpins companies like Epagogix is correct, then a blockbuster film or a best-selling novel would be successful no matter how many times the experiment was repeated. Avatar would always have grossed in excess of $2 billion, while John Carter would always have performed the exact same belly flop. Wolfgang Amadeus Mozart was always destined for greatness, while Antonio Salieri was always doomed to be an also-ran. This would similarly mean that there is no such thing as an “unlikely hit,” since universal rules would define something as a success or a failure. Adhere to them and you have a hit. Fail to do so and you have a flop.

A few years ago, a group of researchers from Princeton University came up with an ingenious way of testing this theory online. What sparked Matthew Salganik, Peter Dodds and Duncan Watts’s imagination was the way in which they saw success manifest itself in the entertainment industry. Just as some companies start out in a crowded field and go on to monopolize it, they noticed, particular books or films become disproportionate winners. These are what are known as “superstar” markets. In 1997, for instance, Titanic earned nearly 50 times the average U.S. box-office take for a film released that year. Was Titanic really 50 times better than the average film released that year, the trio wondered, or does success depend on more than just the intrinsic qualities of a particular piece of content? “There is tremendous unpredictability of success,” Salganik says. “You would think that superstar hits that go on to become so successful would be somehow different from all of the other things they’re competing against. But yet the people whose job it is to find them are unable to do so on a regular basis.”

To put it another way, if Goldman’s Law that nobody knows anything is right, is this because experts are too stupid to realize a hit when they have one on their hands, or does nobody know anything because nothing is for certain?

In order to test their hypothesis, Salganik, Dodds and Watts created what they referred to as an “Experimental Study of Inequality and Unpredictability in an Artificial Cultural Market.”9 This consisted of an online music market, a bit like iTunes, but featuring unknown songs from unknown bands. The 14,341 participants recruited online were given the chance to listen to the songs and rate them between 1 (“I hate it”) and 5 (“I love it”). They could then download those songs that they liked the most. The most downloaded tracks were listed in a Top 40–style “leader board” that was displayed in a prominent position on the website.

What made this website different from iTunes was that Salganik, Dodds and Watts had not created one online music market, but many. When users logged on to the site, they were randomly redirected to one of eight “parallel universes” that were identical in every way with the exception of the leader board. According to the researchers’ logic, if superstar hits really were orders of magnitude better than average, one would expect the same songs to appear in the same spot in each universe.

What Salganik, Dodds and Watts discovered instead was exactly what they had suspected: that there is an accidental quality to success, in which high-ranking songs take an early lead for reasons that seem inconsequential, based upon the taste-makers who happen to sample them first. Once this lead is established, it is amplified through social feedback. A bookshop, for instance, might notice that one particular book is proving more popular than others, and therefore decide to order more copies. At this stage, the book may be selling 11 copies for every 10 sold by its next most popular rival, a marginal improvement. But when the new copies arrive they are displayed in favorable places around the shop (on a table next to the front door, for example) and soon the book is selling twice as many copies as its closest rival. To sell even more, the bookshop then decides to try to attract new customers by lowering its own profit margins and selling the book at a reduced price. At this point the book is selling four times as many copies as its closest rival. Because customers have the impression that the book is popular (and therefore must be good) they are more likely to buy it, thereby driving sales up even more.

This is what psychologists call the “mere-exposure” effect. At a certain juncture a tipping point is reached, where people will buy copies of the book so as not to be left out of what they see as a growing phenomenon, in much the same way that we might tune in to an episode of a television show that has gained a lot of buzz, just to see what all the fuss is about.

In Salganik, Dodds and Watts’s experiment the songs that were ranked as the least popular in one universe would never prove the most popular in another, while the most popular songs in one universe would never prove the least popular somewhere else. Beyond this, however, any other result was possible.
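The feedback loop behind these results can be imitated with a short simulation. This is a minimal Python sketch, not the researchers’ code: in the real study, human participants made the choices, whereas here each simulated listener picks a song with probability proportional to a hypothetical “appeal” score, multiplied (in the socially influenced worlds) by one plus the downloads the song already has. That multiplication is an assumed cumulative-advantage rule, and the numbers of songs, listeners and worlds are arbitrary parameters.

```python
import random


def simulate_world(appeal, participants, social_influence, rng):
    """Return per-song download counts for one 'world' of a toy music market.

    Each simulated participant downloads exactly one song. Without social
    influence, the chance of picking a song is proportional to its appeal alone;
    with social influence, it is also multiplied by (1 + downloads so far),
    a simple cumulative-advantage rule assumed here for illustration.
    """
    downloads = [0] * len(appeal)
    songs = range(len(appeal))
    for _ in range(participants):
        if social_influence:
            weights = [a * (1 + d) for a, d in zip(appeal, downloads)]
        else:
            weights = appeal
        pick = rng.choices(songs, weights=weights, k=1)[0]
        downloads[pick] += 1
    return downloads


def ranking(downloads):
    """Song indices ordered from most to least downloaded."""
    return sorted(range(len(downloads)), key=lambda i: downloads[i], reverse=True)


def run_experiment(num_songs=48, participants=500, social_worlds=8, seed=2006):
    """Create one set of songs, then replay the market in several worlds."""
    rng = random.Random(seed)
    # Hypothetical intrinsic appeal for each song, drawn at random.
    appeal = [rng.uniform(0.5, 1.5) for _ in range(num_songs)]
    independent = simulate_world(appeal, participants, False, rng)
    social = [simulate_world(appeal, participants, True, rng) for _ in range(social_worlds)]
    return appeal, independent, social


if __name__ == "__main__":
    appeal, independent, social = run_experiment()
    print("independent world, top song:", ranking(independent)[0])
    for n, world in enumerate(social, start=1):
        top = ranking(world)[0]
        print(f"social world {n}: top song {top} with {world[top]} downloads")
```

Comparing the leaders across worlds is the point of the exercise. Because every early download raises a song’s future odds in the social worlds, small random differences at the start get amplified, so there is no reason for each world to crown the same winner.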

The Role of Appeal

As you may have detected, there was a problem with Salganik, Dodds and Watts’s experimental design, one that they acknowledged when it came time to write up their findings. In an experiment designed to determine the relationship between popularity and quality, how could any meaningful conclusions be drawn without first deciding upon a quantifiable definition for quality? “Unfortunately,” as the three researchers gravely noted in their paper, “no generally agreed upon measure of quality exists, in large part because quality is largely, if not completely, a social construction.” To get around this issue (which they referred to as “these conceptual difficulties”) Salganik, Dodds and Watts chose to eschew debate about the artistic “quality” of individual songs altogether, and instead to focus on the more easily measurable characteristic of “appeal.”

A song’s appeal was established through the creation of one more music market, this time with no leader board visible. Because this market lacked any obvious social feedback mechanism, Salganik, Dodds and Watts theorized that whichever song turned out to be the most popular in it would do so wholly on the merits of its objective appeal. What those merits were didn’t matter. All that mattered was that they existed.

This market-driven reading of “appeal” over “quality” is (no pun intended) a popular one. “There are plenty of films that, to me, might be better than Titanic, but in the marketplace it’s Titanic that earns the most,” says Epagogix’s Nick Meaney. If what emanates from his company’s neural network happens to coincide with what Meaney considers a great work of art, that is wonderful. If it doesn’t, it’s better business sense to recommend that studios fund a film that a lot of people will pay money to see and not feel cheated by, rather than one that a few critics might rave about but nobody else will watch. Netflix followed a similar logic when, in 2006, it launched its (now abandoned) $1,000,000 open competition inviting anyone to create a filtering algorithm that markedly improved upon Netflix’s own recommender system. Instead of an “improvement” being an algorithm that directed users toward relatively obscure critical favorites like Yasujirô Ozu’s 1953 masterpiece Tokyo Story or Jean Renoir’s 1939 La Règle du jeu, Netflix judged “better recommendations” to be those that most accurately predicted the score users might give to a film or TV show. To put it another way, this is “best” in the manner of the old adage stating that the best roadside restaurants are those with the most cars parked outside them.
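The Netflix Prize made “better” precise by scoring entries on root-mean-square error (RMSE) against ratings users had actually given, with the grand prize reserved for a 10 percent improvement over Netflix’s own Cinematch system. The minimal Python sketch below shows that arithmetic; the ratings and predictions are invented for illustration and are not Netflix data.

```python
import math


def rmse(predicted, actual):
    """Root-mean-square error between predicted and actual star ratings."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


def improvement(baseline_rmse, candidate_rmse):
    """Fractional reduction in RMSE relative to a baseline (0.10 means 10 percent)."""
    return (baseline_rmse - candidate_rmse) / baseline_rmse


if __name__ == "__main__":
    # Hypothetical ratings for four films, plus two made-up sets of predictions.
    actual = [4, 3, 5, 2]
    baseline = [3.5, 3.5, 3.5, 3.5]  # predicts the same score for everything
    candidate = [4, 3, 4.5, 2.5]     # a fictional, better recommender
    b, c = rmse(baseline, actual), rmse(candidate, actual)
    print(f"baseline RMSE {b:.3f}, candidate RMSE {c:.3f}, improvement {improvement(b, c):.1%}")
```

Nothing in this measure asks whether a recommended film is good in any critical sense. A system scores well simply by guessing the stars a user will award, which is exactly the “most cars in the parking lot” notion of best.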

The advent of mass appeal is a fairly modern concept, belonging to the rise of factory production lines in the late 19th and early 20th centuries. For the first time in history, a true mass market emerged as widespread literacy coincided with the large-scale move of individuals to cities. This was the birth of the packaged formula, requiring the creation of products designed to sell to the largest number of people possible. Mass production also meant standardization, since the production and distribution process demanded that everything be reduced to its simplest possible components. This mantra didn’t just apply to consumer goods, but also to things that didn’t inherently require simplification as part of their production process. As the authors of newspaper history The Popular Press, 1833–1865 observe, for example, the early newspaper tycoons “packaged news as a product [my emphasis] to appeal to a mass audience.”10

In this way, art, literature and entertainment were no different from any other product. In the dream of the utopianists of the age, mass appeal meant that the world would move away from the elitist concept of “art” toward its formalized big brother, “engineering.” Cars, airplanes and even entire houses would roll off the factory conveyor belt en masse, signaling an end to an existence in which inequality was commonplace. How could it, when everyone drove the same Model T Ford and lived in the same homes? Art was elitist, irrational and superficial; engineering was collectivist, functional and hyperrational. Better to serve the democratized objectivity of the masses than the snobbish subjectivity of the few.11

It was cinema that was seized upon as the ideal medium for conveying popular, formulaic storytelling, representing the first example of what computational scholar Lev Manovich refers to as New Media. “Irregularity, nonuniformity, the accident and other traces of the human body, which previously, inevitably accompanied moving image exhibitions, were replaced by the uniformity of machine vision,” Manovich writes.12 In cinema, its pioneers imagined a medium that could apply the engineering formula to the field of entertainment. Writing excitedly about the bold new form, Soviet filmmaker and propagandist Sergei Eisenstein opined, “What we need is science, not art. The word creation is useless. It should be replaced by labor. One does not create a work, one constructs it with finished parts, like a machine.”

Eisenstein was far from alone in expressing the idea that art could be made more scientific. A great many artists of the time were similarly inspired by the notion that stripping art down to its granular components could provide their work with a social function on a previously unimaginable scale, thereby achieving the task of making art “useful.” A large number turned to mechanical forms of creativity such as textile, industrial and graphic design, along with typography, photography and photomontage. In a state of euphoria, the Soviet artists of the Institute for Artistic Culture declared that “the last picture has been painted” and “the ‘sanctity’ of a work of art as a single entity . . . destroyed.” Art scholar Nikolai Punin went one step further still, both calling for and helpfully creating a mathematical formula he claimed to be capable of explaining the creative process in full.13

Unsurprisingly, this mode of techno-mania did not go unchallenged. Reacting to the disruptive arrival of the new technologies, several traditionally minded scholars turned their attention to critiquing what they saw as a seismic shift in the world of culture. For instance, in his essay “The Work of Art in the Age of Mechanical Reproduction,” German philosopher and literary critic Walter Benjamin observed:

With the advent of the first truly revolutionary means of reproduction, photography, . . . art sensed the approaching crisis . . . Art reacted with the doctrine of l’art pour l’art, that is, with a theology of art. This gave rise to . . . “pure” art, which not only denied any social function of art but also any categorizing by subject matter.14

Less than a decade later, in 1944, two German theorists named Theodor Adorno and Max Horkheimer elaborated on Benjamin’s argument in their Dialectic of Enlightenment, in which they attacked what they bitingly termed the newly created “culture industry.” Adorno and Horkheimer’s accusation was simple: that like every other aspect of life, creativity had been taken over by industrialists obsessed with measurement and quantification. In order to work, artists had to conform, kowtowing to a system that “crushes insubordination and makes them subserve the formula.”

Had they been alive today, Adorno and Horkheimer wouldn’t for a moment have doubted that a company like Epagogix could successfully predict the box office of Hollywood movies ahead of production. Forget about specialist reviewers; films planned from a statistical perspective call for nothing more or less than statistical analysis.

Universal Media Machines

In 2012, another major shift was taking place in the culture industry. Although it was barely remarked upon at the time, this was the first year in which U.S. viewers watched more films legally delivered via the Internet than they did using physical formats such as Blu-ray discs and DVDs. Amazon, meanwhile, announced that, less than two years after it first introduced the Kindle, customers were now buying more e-books than they were hardcovers and paperbacks combined.

At first glance, this doesn’t sound like such a drastic alteration. After all, it’s not as if customers stopped watching films or reading books altogether, just that they changed something about the way that they purchased and consumed them. An analogy might be people continuing to shop at Gap, but switching from buying “boot fit” to “skinny” jeans.

However, while this analogy works on the surface, it fails to appreciate the extent of the transition that had taken place. It is not enough to simply say that a Kindle represents a book read on screen as opposed to on paper. Each is its own entity, with its own techniques and materials. In order to appear on our computer screens, tablets and smartphones, films, music, books and paintings must first be rendered in the form of digital code. This can be carried out regardless of whether a particular work was originally created using a computer or not. For the first time in history, any artwork can be described in mathematical terms (literally a formula), thereby making it programmable and subject to manipulation by algorithms.

In the same way that energy can be transferred from movement into heat, so too can information now shift easily between mediums. For instance, an algorithm could be used to identify the presence of shadows in a two-dimensional photograph and then translate these shadows to pixel depth by measuring their position on the grayscale—ultimately outputting a three-dimensional object using a 3-D printer.15 Even more impressively, in recent years Disney’s R&D division has been hard at work on a research project designed to simulate the feeling of touching a real, tactile object when, in reality, users are only touching a flat touch screen. This effect is achieved using a haptic feedback algorithm that “tricks” the brain into thinking it is feeling ridges, bumps or potentially even textures, by re-creating the sensation of friction between a surface and a fingertip.16 “If we can artificially stretch skin on a finger as it slides on the touch screen, the brain will be fooled into thinking an actual physical bump is on a touch screen even though the touch surface is completely smooth,” says Ivan Poupyrev, the director of Disney Research, who describes the technology as a means by which interactions with virtual objects can be made more realistic.17
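The shadow-to-depth step can be sketched in a few lines. This is a minimal sketch, not the method of any particular system: it assumes the photograph has already been reduced to a grid of grayscale values from 0 (black) to 255 (white), treats darker pixels as deeper shadow, and uses a hypothetical `max_depth_mm` parameter for the deepest point of the relief. Turning the resulting heightmap into a printable mesh is left out.

```python
def grayscale_to_depth(image, max_depth_mm=5.0):
    """Convert a grid of grayscale values (0 = black, 255 = white) to relief depths.

    Assumption: darker pixels are read as shadow and pushed deeper into the
    print, so black maps to max_depth_mm and white maps to 0.
    """
    return [[(255 - px) / 255 * max_depth_mm for px in row] for row in image]


# Hypothetical 2x2 photo: a black, a white, a mid-gray and a light-gray pixel.
depth = grayscale_to_depth([[0, 255], [128, 51]])
for row in depth:
    print([round(d, 2) for d in row])
```

The mapping is deliberately linear; a real system would likely need to separate true shadows from dark-colored surfaces, which a plain grayscale lookup cannot do.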

There is also the possibility of combining different mediums in entirely new ways, something increasingly common in a world used to web pages, PowerPoint presentations, and mobile multimedia messages. It is no coincidence that the advent of the programmable computer in the 20th century saw the art world take its first tentative steps away from the concept of media specificity. As the computer became a multipurpose canvas for everything from illustration to composition, so too did modern artists over the past 50 years seek to establish formulas capable of bringing together previously separate entities, such as musical and visual composition.

Scientists and artists alike have long been fascinated by the neurological condition of synesthesia (Greek for “joined perception”), in which affected individuals see words as colors, hear sounds as textures, or register smells as shapes. A similar response is now reproducible on a computer, and this can be seen through the increasing popularity of “info-aesthetics”18 that has mirrored the rise of data analytics. More than just a method of computing, info-aesthetics takes numbers, text, networks, sounds and video as its source materials and re-creates them as images to reveal hidden patterns and relationships in the data.

Past data visualizations by artists include the musical compositions of Bach presented as wave formations, the thought processes of a computer as it plays a game of chess, and the fluctuations of the stock market. In 2013, Bill Gates and former Microsoft chief technology officer Nathan Myhrvold filed a patent for a system capable of taking selected blocks of text and using this information to generate still images and even full-motion video. As they point out, such technology could be of use in a classroom setting—especially for students suffering from dyslexia, attention deficit disorder, or any one of a number of other conditions that might make it difficult to read long passages of text.19

To Thine Own Self Be True/False

Several years ago, as an English graduate student, Stephen Ramsay became interested in what is known as graph theory. Graph theory models the relationships between objects mathematically, representing individual objects as “nodes” and the lines that connect them as “edges.” Looking around for something in literature that was mathematical in structure, Ramsay settled upon the plays of William Shakespeare. “A Shakespearean play will start in one place, then move to a second place, then go back to the first place, then on to the third and fourth place, then back to the second, and so on,” he says. Intrigued, Ramsay set about writing a computer program capable of transforming any Shakespearean play into a graph. He then used data-mining algorithms to analyze the graphs, to see if he could tell, based wholly on their mathematical structure, whether what he was looking at was a comedy, tragedy, history or romance. “And here’s the thing,” he says. “I could. The computer knew that The Winter’s Tale was a romance, it knew that Hamlet was a tragedy, it knew that A Midsummer Night’s Dream was a comedy.” There were just two cases in which the algorithm, in Ramsay’s words, “screwed up.” Both Othello and Romeo and Juliet came back classified as comedies. “But this was the part that was flat-out amazing,” he says. “For a number of years now, literary critics have been starting to notice that both plays have the structure of comedies. When I saw the conclusion the computer had reached, I almost fell off my chair in amazement.”
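Ramsay’s first step, turning a play’s movement from place to place into a graph, can be sketched as follows. This is an illustrative assumption rather than Ramsay’s actual program: the helper names are hypothetical, each play is reduced to an ordered list of scene locations, and the features computed (node count, edge count, density, number of transitions) are stand-ins for whatever structural measures his data-mining stage actually used.

```python
from collections import Counter


def scene_graph(locations):
    """Build a weighted, undirected graph from a play's ordered scene locations.

    Nodes are locations; an edge joins two locations whenever the action moves
    directly from one to the other, weighted by how often that happens.
    """
    edges = Counter()
    for here, there in zip(locations, locations[1:]):
        if here != there:  # consecutive scenes in the same place add no edge
            edges[frozenset((here, there))] += 1
    return set(locations), edges


def structural_features(nodes, edges):
    """Summarize a scene graph as numbers a classifier could learn from."""
    n = len(nodes)
    possible = n * (n - 1) / 2
    return {
        "nodes": n,
        "edges": len(edges),
        "transitions": sum(edges.values()),
        "density": len(edges) / possible if possible else 0.0,
    }


# The movement pattern from Ramsay's description: 1, 2, 1, 3, 4, 2.
nodes, edges = scene_graph(["first", "second", "first", "third", "fourth", "second"])
features = structural_features(nodes, edges)
print(features)
```

Run on the pattern Ramsay describes, the play yields four nodes, four distinct edges and five transitions. A classifier trained on one such feature dictionary per play would then handle the genre prediction.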

The idea that we might practically use algorithms to find the “truths” obscured within particular artistic works is not a new one. In the late 1940s, an Italian Jesuit priest named Roberto Busa used a computer to “codify” the works of influential theologian Thomas Aquinas. “The reader should not simply attach to the words he reads the significance they have in his mind,” Busa explained, “but should try to find out what significance they had in the author’s mind.”20

Despite this early, isolated example, however, the scientific community of the mid-20th century for the most part doubted that computers had anything useful to say about something as unquantifiable as art. An algorithm could never, for example, determine authorship in the case of two painters with similar styles—particularly not in situations in which genuine experts had experienced difficulty doing so. In his classic book Faster Than Thought: A Symposium on Digital Computing Machines, the late English scientist B. V. Bowden offers the view that:

It seems most improbable that a machine will ever be able to give an answer to a general question of the type: “Is this picture likely to have been painted by Vermeer, or could van Meegeren have done it?” It will be recalled that this question was answered confidently (though incorrectly) by the art critics over a period of several years.21

To Bowden, the evidence is clear, straightforward and damning. If Alan Turing suggested that the benchmark of an intelligent computer would be one capable of replicating the intelligent actions of a man, what hope would a machine have of resolving a problem that even man was unable to make an intelligent judgment on? A cooling fan’s chance in hell, surely.

In recent years, however, this view has been challenged. Lior Shamir is a computer scientist who started his career working for the National Institutes of Health, where he used robotic microscopes to analyze the structure of hundreds of thousands of cells at a time. After that he moved on to astronomy, where he created algorithms designed for scouring images of billions of galaxies. Next he began working on his biggest challenge to date: creating the world’s first fully automated, algorithmic art critic, with a rapidly expanding knowledge base and a range of extremely well-researched opinions about what does and does not constitute art. Shamir’s algorithm analyzes each painting it is shown using 4,024 different numerical image-content descriptors, studying everything that a human art critic would examine (an artist’s use of color, or their distribution of geometric shapes), as well as everything that they probably wouldn’t (such as a painting’s description in terms of its Zernike polynomials, Haralick textures and Chebyshev statistics). “The algorithm finds patterns in the numbers that are typical to a certain artist,” Shamir explains.22 Already it has proven adept at spotting forgeries, able to distinguish between genuine and fake Jackson Pollock drip paintings with an astonishing 93 percent accuracy.

Much like Stephen Ramsay’s Shakespearean data-mining algorithm, Shamir’s automated art critic has also produced some fresh insights into the connections that exist between the work of certain artists. “Once you can represent an artist’s work in terms of numbers, you can also visualize the distance between their work and that of other artists,” he says. When analyzing the work of Pollock and Vincent van Gogh—two artists who worked within completely different art movements—Shamir discovered that 19 of the algorithm’s 20 most informative descriptors showed significant similarities, including a shared preference for low-level textures and shapes, along with a similar deployment of lines and edges.23 Again, this might appear to be a meaningless insight were it not for the fact that several influential art critics have recently begun to theorize similar ideas.24
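Shamir’s point about measuring the distance between artists can be made concrete. The sketch below assumes each painting has already been reduced to a numeric descriptor vector (Shamir’s system uses 4,024 descriptors; the three-number vectors here are toy values, not real measurements), averages each artist’s vectors, and compares the averages by Euclidean distance. The function names are hypothetical, and this is one simple way to define such a distance, not necessarily the one Shamir uses.

```python
import math


def centroid(vectors):
    """Average equal-length descriptor vectors component by component."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def artist_distance(paintings_a, paintings_b):
    """Euclidean distance between two artists' mean descriptor vectors."""
    return math.dist(centroid(paintings_a), centroid(paintings_b))


# Toy three-descriptor vectors for three paintings each (illustrative values only).
artist_x = [[0.2, 0.5, 0.1], [0.4, 0.7, 0.3], [0.3, 0.6, 0.2]]
artist_y = [[0.9, 0.1, 0.8], [0.7, 0.3, 0.6], [0.8, 0.2, 0.7]]
print(round(artist_distance(artist_x, artist_y), 3))
```

With thousands of descriptors on very different scales, each descriptor would normally be standardized first; otherwise the largest-valued ones dominate the distance.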

Bring on the Reading Machines

This newfound ability to subject media to algorithmic manipulation has led a number of scholars to call for a so-called algorithmic criticism. It is no secret that the field of literary studies is in trouble. After decades of declining enrollments, the subject has become an ever less significant part of higher education. So how could this trend be reversed? According to some, the answer is a straightforward one: by turning it into the “digital humanities,” of course. In a 2008 editorial for the Boston Globe entitled “Measure for Measure,” literary critic Jonathan Gottschall dismissed the current state of his field as “moribund, aimless, and increasingly irrelevant to the concerns . . . of the ‘outside world.’” Forget about vague terms like the “beauty myth” or Roland Barthes’s concept of the death of the author, Gottschall says. What is needed instead is a productivist approach to media built around correlations, pattern-seeking and objectivity.

As such, Gottschall lays out his Roberto Busa–like beliefs that genuine, verifiable truths both exist in literature and are desirable. In keeping with the discoverable laws of the natural sciences, in Gottschall’s mind there are clear right and wrong answers to a question such as, “Can I interpret [this painting/this book/this film] in such-and-such a way?”

While these comments are likely to shock many of those working within the humanities, Gottschall is not altogether wrong in suggesting that there are elements of computer science that can be usefully integrated into arts criticism. In the world of The Formula, what it is possible to know changes dramatically. For example, algorithms can be used to determine “vocabulary richness” in literature by measuring the number of different words that appear in a 50,000-word block of text. This can produce surprises. Few critics would ever have suspected that a “popular” author like Sinclair Lewis—sometimes derided for his supposed lack of style—regularly demonstrates twice the vocabulary of Nobel laureate William Faulkner, whose work is considered notoriously difficult.
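Because the measure is simply the number of different words in a fixed-size block, it fits in a few lines. This is a minimal sketch: the tokenization rule (lowercased runs of letters and apostrophes) and the function name are assumptions, and it counts “walk” and “walked” as different words, which published studies may or may not do.

```python
import re


def vocabulary_richness(text, block_size=50_000):
    """Count distinct word forms in the first `block_size` words of `text`.

    Comparing equal-sized blocks matters: longer texts naturally contain more
    distinct words, so raw counts over whole books are not comparable.
    """
    words = re.findall(r"[a-z']+", text.lower())
    if len(words) < block_size:
        raise ValueError(f"need at least {block_size} words, got {len(words)}")
    return len(set(words[:block_size]))


# Tiny demonstration with a block of 8 words instead of 50,000.
print(vocabulary_richness("The cat and the hat sat on the mat", block_size=8))
```

Fixing the block size is the design choice that makes a Lewis-versus-Faulkner comparison fair: the count is taken over the same number of words for each author.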

One of the highest-profile uses of algorithms to analyze text took place in 2013 when a new crime fiction novel, The Cuckoo’s Calling, appeared on bookshelves around the world, written by a first-time author called Robert Galbraith. While the book attracted little attention early on, selling just 1,500 printed copies, it became the center of controversy after a British newspaper broke the story that the author might be none other than Harry Potter author J. K. Rowling, writing under a pseudonym. To prove this one way or the other, computer scientists were brought in to verify authorship. By using data-mining techniques to analyze the text on four different variables (average word length, usage of common words, recurrent word pairings, and distribution of “character 4-grams”), algorithms concluded that Rowling was most likely the author of the novel, something she later confirmed.25
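One of the four variables, the distribution of character 4-grams, can be sketched as a simple profile comparison. This is a hedged illustration, not the investigators’ actual method: it builds relative-frequency profiles of overlapping four-character sequences, compares them by cosine similarity, and picks the closest candidate. The function names and the sample texts are hypothetical, and real attribution work uses far longer samples and combines several measures.

```python
import math
from collections import Counter


def char_ngrams(text, n=4):
    """Relative frequencies of overlapping character n-grams in `text`."""
    text = " ".join(text.lower().split())  # normalize case and whitespace
    grams = Counter(text[i:i + n] for i in range(len(text) - n + 1))
    total = sum(grams.values())
    return {gram: count / total for gram, count in grams.items()}


def cosine(p, q):
    """Cosine similarity between two sparse frequency profiles."""
    dot = sum(p[g] * q.get(g, 0.0) for g in p)
    norm = math.sqrt(sum(v * v for v in p.values())) * math.sqrt(sum(v * v for v in q.values()))
    return dot / norm if norm else 0.0


def most_likely_author(disputed, samples):
    """Return the candidate whose 4-gram profile is closest to the disputed text."""
    target = char_ngrams(disputed)
    return max(samples, key=lambda name: cosine(target, char_ngrams(samples[name])))


samples = {
    "candidate_a": "the wizard raised his wand over the castle walls",
    "candidate_b": "quarterly revenue figures exceeded analyst expectations",
}
print(most_likely_author("the wizard waved his wand at the castle gate", samples))
```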

As Stephen Ramsay observes, “The rigid calculus of computation, which knows nothing about the nature of what it’s examining, can shock us out of our preconceived notions on a particular subject. When we read, we do so with all kinds of biases. Algorithms have none of those. Because of that they can take us off our rails and make us say, ‘Aha! I’d never noticed that before.’”

Data-tainment

A quick scan of the best-seller list will be enough to convince us that, for better or worse, book publishers are not the same as literary professors. This doesn’t mean that they are exempt from the allure of using algorithms for analysis, however. Publishers, of course, are less interested in understanding a particular text than they are in understanding their customers. In previous years, the moment that a customer left a bookshop and took a book home with them, there was no quantifiable way a publisher could know whether they read it straight through or put it on a reading pile and promptly forgot about it. Much the same was true of VHS tapes and DVDs. It didn’t matter how many times an owner of Star Wars rewound their copy of the tape to watch a Stormtrooper bump his head, or paused Basic Instinct during the infamous leg-crossing scene: no studio executive was ever going to know about it. All of that is now changing, however, due to the amount of data that can be gathered and fed back to content publishers. For example, Amazon is able to tell how quickly its customers read e-books, whether they scrutinize every word of an introduction or skip over it altogether, and even which sections they choose to highlight. It knows that science fiction, romance and crime novels tend to be read faster than literary fiction, while nonfiction books are less likely to be finished than fiction ones.

These insights can then be used to make creative decisions. In February 2013, Netflix premiered House of Cards, its political drama series starring Kevin Spacey. On the surface, the most notable aspect of House of Cards appeared to be that Netflix—an on-demand streaming-media company—was changing its business model from distribution to production, in an effort to compete with premium television brands like Showtime and HBO. Generating original video content for Internet users is still something of a novel concept, particularly when it is done on a high budget and, at $100 million, House of Cards was absolutely that. What surprised many people, however, was how bold Netflix was in its decisions. Executives at the Los Gatos–based company commissioned a full two seasons, comprising 26 episodes in total, without ever viewing a single scene. Why? The reason was that Netflix had used its algorithms to comb through the data gathered from its 25 million users to discover the trends and correlations in what people watched. What it discovered was that a large number of subscribers enjoyed the BBC’s House of Cards series, evidenced by the fact that they watched episodes multiple times and in rapid succession. Those same users tended to also like films that starred Kevin Spacey, as well as those that were directed by The Social Network’s David Fincher. Netflix rightly figured that a series with all three would therefore have a high probability of succeeding.26
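The kind of correlation described here can be expressed as “lift”: how much more likely fans of one thing are to be fans of another than an average subscriber is. The sketch below illustrates that general technique under stated assumptions; it is not a description of Netflix’s actual algorithms, and the function name and subscriber sets are hypothetical toy data.

```python
def lift(fans_of_a, fans_of_b, all_users):
    """Ratio of P(likes B | likes A) to P(likes B) across all users.

    A lift above 1 means the two tastes co-occur more often than chance.
    """
    p_b = len(fans_of_b) / len(all_users)
    p_b_given_a = len(fans_of_a & fans_of_b) / len(fans_of_a)
    return p_b_given_a / p_b


# Toy data: 1,000 hypothetical subscriber IDs.
everyone = set(range(1000))
rewatched_bbc_series = set(range(0, 100))
liked_spacey_films = set(range(0, 60)) | set(range(500, 600))
print(lift(rewatched_bbc_series, liked_spacey_films, everyone))
```

In this toy data, 60 percent of the people who rewatched the series like the actor’s films, against 16 percent of subscribers overall, a lift of about 3.75.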

The gamble appeared to pay off. Under a review titled “House of Cards Is All Aces,” USA Today praised the show as “money well-spent” and among the “most gorgeous [pieces] of television” people were likely to see all year.27 President Obama admitted to being a fan. Netflix followed up its House of Cards success with three more well-received series: Hemlock Grove, Arrested Development and Orange Is the New Black. At the 2013 Emmy Awards, the company notched up a total of 14 nominations for its efforts.28 “It took HBO 25 years to get its first Emmy nomination,” noted American TV critic and columnist David Bianculli in an article for the New York Times. “It took Netflix six months.”29

Netflix’s success has seen it followed by online retailer Amazon, which also has access to a vast bank of customer information, revealing the kind of detailed “likes” and “dislikes” data that traditional studio bosses could only dream of. “It’s a completely new way of making movies,” Amazon founder Jeff Bezos told Wired magazine. “Some would say our approach is unworkable—we disagree.”30

In Soviet Russia, Films Watch You

In a previous life, Alexis Kirke worked as a quantitative analyst on Wall Street, one of the so-called rocket scientists whose job concerns a heady blend of mathematics, high finance and computer skills. Having completed a PhD in computer science, Kirke should have been on top of the world. “Quants” are highly in demand and can earn upward of $250,000 per year, but Kirke nonetheless found himself feeling surprisingly disenchanted. “After about a year, I decided that this wasn’t what I wanted to do,” he says. What he wanted instead was to pursue an artistic career. Kirke left the United States, moved back home to Plymouth, England, and enrolled in a music degree course. Today, he is a research fellow at Plymouth University’s Interdisciplinary Center for Computer Music Research.

In 2013, Kirke achieved his greatest success to date when he created Many Worlds, a film that changed the direction of its narrative based upon the response of audience members. Many Worlds premiered at that year’s Peninsula Arts Contemporary Music Festival, and its interactivity marked a major break from traditional cinema by transforming audiences from passive consumers into active participants. At screenings, audience members were fitted with special sensors capable of monitoring their brain waves, heart rate, perspiration levels and muscle tension. These indicators of physical arousal were then fed into a computer, where they were averaged and analyzed in real time, with the reactions used to trigger different scenes. A calm audience could conceivably be jolted to attention with a more dramatic sequence, while an already tense or nervous audience could be shown a calmer one. This branching narrative ultimately culminated in one of four different endings.31
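The feedback loop described here, averaging the audience’s readings and using the result to choose what plays next, might be sketched like this. The signal names, the 0-to-1 normalization and the two thresholds are all hypothetical; the source does not say how Many Worlds weighted or combined its sensors.

```python
from statistics import mean


def audience_arousal(readings):
    """Average each viewer's normalized (0-1) signals, then average across viewers."""
    return mean(mean(signals.values()) for signals in readings)


def next_scene(arousal, calm_threshold=0.35, tense_threshold=0.65):
    """Pick the next branch: jolt a calm room, settle a tense one (thresholds assumed)."""
    if arousal < calm_threshold:
        return "dramatic"
    if arousal > tense_threshold:
        return "calm"
    return "continue"


# Two hypothetical viewers with low heart-rate and perspiration readings.
readings = [
    {"heart_rate": 0.2, "perspiration": 0.1},
    {"heart_rate": 0.3, "perspiration": 0.2},
]
print(next_scene(audience_arousal(readings)))
```

Averaging across the room is what makes this a shared, single-screen experience: one branch is chosen for everyone, rather than one per viewer.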

In a sense, companies like Epagogix, which I mentioned at the start of the chapter, offer a new twist on an old idea: that there is such a thing as a work of art that will appeal to everyone. Anyone who has ever read two opposing reviews of the same film—one raving about it and the other panning it—will realize that this is not necessarily true. Our preferences are synthetic constructions, built from inherited ideas as well as from our own previous experiences. My idea of how Macbeth should be performed on the stage is based on those performances I have attended in the past, or what I have read about the play. The same is true of the films I like, the music I enjoy, and the books I read.

“A fixed film appeals to the lowest common denominator,” says Kirke. “What it does is to plot an average path through the audience’s emotional experience, and this has to translate across all audiences in all countries. Too often this can end in compromise.” Kirke isn’t wrong. For every Iron Man—a Hollywood blockbuster that appeals to vast numbers without sacrificing quality—there are dozens of other films from which every ounce of originality has been airbrushed in an effort to appease the widest possible audience. Many Worlds suggests an alternative: that in the digital age, rationalization no longer has to be the same as standardization. Formulas can exist, but these don’t have to ensure that everything looks the same.32

A valid question, of course, concerns the cost of implementing this on a wider level. Alexis Kirke created Many Worlds on what he describes as a “nano-budget” of less than $4,000—along with some lights, tripods and an HD camera borrowed from Plymouth University’s media department for a few days. How would this work when scaled up to Hollywood levels? After all, at a time when blockbuster movies can cost upward of $200 million, can studios really afford the extra expenditure of shooting four different endings in the way that Kirke did? He certainly believes they can. As I described earlier in this chapter, the entertainment industry currently operates on a highly inefficient (some would say unscientific) business model reliant on statistically rare “superstar” hits to offset the cost of more likely losses. The movie studio that makes ten films and has two of these become hits will be reasonably content. But what if that same studio ramped up its spending by shooting alternate scenes and commissioning several possible sound tracks at an additional cost of 50 percent per film, if this in turn meant that each film was more likely to become a hit? If branching films could be all things to all people, studios might only have to make five films to create two sizeable hits.
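The arithmetic behind Kirke’s argument can be made explicit. Assuming every conventional film costs the same amount, C, that a branching film costs 50 percent more, and that both slates produce two hits:

```latex
\begin{aligned}
\text{conventional slate:}\quad & 10 \times C = 10C \quad\Rightarrow\quad \frac{10C}{2} = 5C \text{ per hit} \\
\text{branching slate:}\quad & 5 \times 1.5C = 7.5C \quad\Rightarrow\quad \frac{7.5C}{2} = 3.75C \text{ per hit}
\end{aligned}
```

On those assumptions the branching slate spends 25 percent less for the same two hits. The whole case rests on the premise that branching really does halve the number of films needed per hit.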

Following the debut of Many Worlds, Kirke was approached by several major media companies interested in bringing him on board to work as a consultant. The BBC twice invited him to its headquarters in Manchester to screen the film and discuss his thoughts on the future of interactive media. Manufacturers were particularly interested in how this technology could usefully be integrated into the next generation of television sets. “This is something that’s already starting to happen,” Kirke says. In 2013, Microsoft was awarded a patent for a camera capable of monitoring the behavior of viewers, including movement, eye tracking and heart rate. This behavior can then be compiled into user-specific reports and sent, via the cloud, to a remote device able to determine whether certain goals have been met.33 Advertisers, for instance, will have the option of rewarding viewers who sit through commercial breaks with digital credits (iTunes vouchers, perhaps) or physical prizes. Because Microsoft’s camera sensor has the ability to recognize gestures, advertisers could create dances or actions for viewers to reproduce at home. The more enthusiastic the reproduction, the more iTunes vouchers the viewer could win.

Another company, named Affectiva, is beginning to market facial expression analysis software to consumer product manufacturers, retailers, marketers and movie studios. Its mission is to mine the emotional response of consumers to help improve the designs and marketing campaigns of products.34 Film and television audiences will increasingly be monitored in similar ways, by nonspeech microphones and eye-line sensors, along with social network scanners built into mobile devices, so that whatever they are watching can be adjusted according to their reactions. If it is determined that a person’s eyes are straying from the screen too often, or that they are showing more interest in Facebook than in the entertainment placed in front of them, films will have the option of adjusting editing, sound track or even narrative to ensure that maximum engagement is maintained at all times.

A Moving Target

Traditionally, the moment that a painting was finished, a photograph was printed or a book was published, it was fixed in place. We might even argue that such a quality forms part of our appreciation. With its fixed number of pages bound by a single spine, the physical organization of a book invites the reader to progress through it in a linear, predetermined manner—moving from left to right across the page, then from page to page, and ultimately from chapter to chapter, and cover to cover.35 As a result, a book appeals to our desire for completion, wholeness and closure.

No such permanence or fixedness exists in the world of The Formula, in which electronic books, films and music albums can be skipped through at will.36 This, in turn, represents a flattening of narrative, or a division of it into its most granular elements. As computer scientist Steven DeRose argues in a 1995 paper entitled “Structured Information: Navigation, Access and Control,” this analysis of structured information does not get us close to certain universal truths, “in the sense that a Sherlock Holmes should peer at it and discern hidden truth . . . but rather in the sense that the information is divided into component parts, which in turn have components, and so on.”37

This narrative unwinding was demonstrated to great effect several years ago when the American artist Jason Salavon digitized the hit movie Titanic and broke it up into its separate frames. A computer then averaged each of these frames to a single color, and the frames were reassembled into a unified image that mirrored the narrative sequence of the film. Read from left to right and top to bottom, the artwork lays out the movie’s rhythm in pure color.38
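The averaging-and-reassembly process can be sketched as follows. This is an assumption-laden illustration, not Salavon’s software: it presumes the frames have already been decoded from the film (a library such as OpenCV would typically handle that step, which is not shown) and represented as flat lists of (r, g, b) tuples. The function names and the tiny frames are hypothetical.

```python
def average_color(frame):
    """Mean RGB of one frame, given as a flat list of (r, g, b) pixel tuples."""
    n = len(frame)
    return tuple(round(sum(pixel[c] for pixel in frame) / n) for c in range(3))


def color_grid(frames, columns):
    """Reduce each frame to its average color, then lay the colors out in rows,
    left to right and top to bottom, so the grid preserves the film's order."""
    colors = [average_color(frame) for frame in frames]
    return [colors[i:i + columns] for i in range(0, len(colors), columns)]


# Three tiny hypothetical frames: solid red, half black/half white, solid blue.
frames = [
    [(255, 0, 0), (255, 0, 0)],
    [(0, 0, 0), (255, 255, 255)],
    [(0, 0, 255)],
]
print(color_grid(frames, columns=2))
```

Keeping the frames in their original order is what lets the finished grid be read like the film itself, one color per moment.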

Both Alexis Kirke’s Many Worlds and Salavon’s reimagining of Titanic represent two sides of the same coin. In a post-9/11 age in which our own sense of impermanence is heightened, past and present are flattened in the manner of a Facebook timeline, and the future is an uncertain prospect, what relevance do traditional beginnings, middles and ends have? This is further reflected in the number of artworks that, imbued with the power of code and real-time data streams, exist in a state of constant flux. In the same way that the Internet will never be completed—any more than technology itself can be completed—these algorithmic artworks are able to adapt and mutate as new data inputs are absorbed into the whole.

An example of this was created in Cambridge, Massachusetts, where two members of Google’s Big Picture data visualization group, Fernanda Viégas and Martin Wattenberg, coded an online wind map of the United States, which presents data from the National Digital Forecast Database in the hypnotic form of a swirling, constantly changing animation.39 “On calm days it can be a soothing meditation on the environment,” Wattenberg says. “During hurricanes it can become ominous and frightening.”40 In a previous age of fixedness, a work of art became timeless by containing themes universal enough to span generations. Today “timeless” means changing for each successive audience: a realization of the artist’s dilemma that work is never finished, only abandoned.

In his latest book, Present Shock, cyberpunk media theorist Douglas Rushkoff seizes upon a similar idea to discuss the ways in which today’s popular culture reflects The Formula. Much as the artists of the early 20th century took the techniques and aesthetics of heavy industrial machinery as the model for their art, so too does today’s entertainment industry reflect the flux-like possibilities of code. Unlike the predictable narrative arcs of classic films like The Godfather, today’s most lauded creations are ongoing shows such as Game of Thrones that avoid straightforward, three-act structures and simply continue indefinitely.

Looking at shows like the NBC series Community and Seth MacFarlane’s Family Guy, Rushkoff further demonstrates the technology-induced collapse of narrative structure at work. Community features a group of misfits at Greendale Community College, who constantly refer to the fact that they are characters within a sitcom. What story arcs do exist in the show are executed with the full knowledge that the viewing audience is well versed in the clichés that make up most traditional sitcoms. Family Guy similarly breaks away from traditional narrative storytelling in favor of self-contained “cutaway” gags, which prove equally amusing regardless of the order in which they are played, making it the perfect comedy for the iPod Shuffle generation. Like its obvious forerunner, The Simpsons, rarely does a plot point in Family Guy have any lasting impact—thereby allowing all manner of nonsensical occurrences to take place before the “reset” button is hit at the end of each episode.

A more poignant illustration of this conceit can be found in the more serious drama series on television. Shows like The Wire, Mad Men, The Sopranos and Dexter all follow ostensibly different central characters (ranging from Baltimore police and Madison Avenue admen to New Jersey mobsters and Miami serial killers) whose chief similarity is their inability to change their nature, or the world they inhabit. As Rushkoff writes, these series

don’t work their magic through a linear plot, but instead create contrasts through association, by nesting screens within screens, and by giving viewers the tools to make connections between various forms of media . . . The beginning, the middle, and the end have almost no meaning. The gist is experienced in each moment as new connections are made and false stories are exposed or reframed. In short, these sorts of shows teach pattern recognition, and they do it in real time.41

Even today’s most popular films no longer exist as unitary entities, but as nodes in larger franchises—with sequels regularly announced even before the first film is shown. It’s no accident that in this setting many of the most popular blockbusters are based on comic-book properties: a medium in which, unlike a novel, plot points are ongoing with little expectation of an ultimate resolution.

In this vein, Alexis Kirke’s Many Worlds does not exist as an experimental outlier, but as another step in the unwinding of traditional narrative and a sign of things to come. While stories aren’t going anywhere, Kirke says, in the future audiences are likely to be less concerned with narrative arcs than with emotional ones.

Digital Gatekeepers

A lack of fixedness in art and the humanities can have other, potentially sinister, implications. Because the “master” copy of a particular book that we are reading—whether this be on Kindle or Google Books—is stored online and accessed via “the cloud,” publishers and authors now possess the ability to make changes to works even after they have been purchased and taken home. A poignant illustration of this fact occurred in 2009 when Amazon realized that copies of George Orwell’s classic novel Nineteen Eighty-Four being sold through its Kindle platform were under copyright, rather than existing in the public domain as had been assumed. In a panic, Amazon made the decision to delete the book altogether, resulting in it vanishing from the libraries of all those who had purchased it. The irony, of course, is that Nineteen Eighty-Four concerns a dystopian future in which the ruling superpower manipulates its populace by rewriting the history books on a daily basis. More than 60 years after the novel was first published, such amendments to the grand narrative are now technically possible.

Writing in Wired magazine in July 2013, Harvard computer-science professor Jonathan Zittrain described this as “a worrisome trend” and called for digital books and other texts to be placed under the control of readers and libraries—presumed to have a vested interest in the sanctity of text—rather than with distributors and digital gatekeepers. Most insidious of all, Zittrain noted, was the fact that changes can be made with no evidence that things were ever any other way. “If we’re going to alter or destroy the past,” he wrote, “we should [at least] have to see, hear and smell the paper burning.”42

A Standardized Taste

In the early 1980s, a computer science and electronic engineering graduate from UC Berkeley set out to create a musical synthesizer. What Dave Smith wanted was to establish a standardized protocol for communication between the different electronic musical instruments made by different manufacturers around the world. What he came up with was christened the “Musical Instrument Digital Interface” and, under its better-known name of MIDI, became the entrenched unitary measurement for music. As a musical medium, MIDI is far from perfect. Although it can be used to mimic a wide palette of sounds using a single keyboard, it retains the keyboard’s staccato, mosaic qualities, which means that it cannot emulate the type of curvaceous sounds producible by, say, a talented singer or saxophonist. As virtual-reality innovator (and talented musician) Jaron Lanier observes:

Before MIDI, a musical note was a bottomless idea that transcended absolute definition . . . After MIDI, a musical note [is] no longer just an idea, but a rigid, mandatory structure you couldn’t avoid in the aspects of life that had gone digital.43

This sort of technological “lock-in” is an unavoidable part of measurement. The moment we create a unitary standard, we also create limitations. More than two centuries before Dave Smith created MIDI, the 18th-century Scottish philosopher David Hume wrote an essay entitled “Of the Standard of Taste.” In it, Hume argued that the key component of art (the thing that would come after the equals sign were it formulated as an equation) was the presence of what he termed “agreeableness.” Hume observed, “it is natural for us to seek a Standard of Taste; a rule, by which the various sentiments of men may be reconciled.”44

Unlike many of the figures discussed at the start of this chapter, Hume believed that there were no objective measures of aesthetic value, only subjective judgments. As he phrased it, “to seek the real beauty, or the real deformity, is as fruitless an enquiry, as to seek the real sweet or real bitter.” At the same time, Hume acknowledged that, even within this subjectivity, certain qualities are “calculated to please” or “displease,” and it is these that make up his “standard of taste.”

Hume was ahead of his time in various ways. In recent years, a number of organizations around the world have been investigating what is referred to as “Emotional Optimization.” Emotional Optimization relates to the discovery that certain parts of the brain correspond to different emotions. By asking test subjects to wear electroencephalography (EEG) brain caps, neuroscientists can measure the electrical activity that results from ionic current flows within the neurons of the brain. These readings can then be used to uncover the positive and negative reactions experienced by a person as they listen to a piece of music or watch a scene from a film. With the addition of machine-learning tools, it becomes possible to discover which low-level features in art prompt particular emotional responses.

Looking to the future, the potential of such work is clear. The addition of a feedback loop, for instance, would allow users not simply to have their EEG response to particular works read, but also to dictate the mood they wanted to achieve. Instead of choosing playlists to match our mood, we would have the option of entering our desired emotion into a computer, which would then generate a customized playlist designed to provoke that specific response. This may have particular application in the therapeutic world to help treat those suffering from stress or forms of depression. Runners, meanwhile, could have their pulse rates measured by the headphones they’re wearing, with music selected according to whether heart rate rises or falls. Translated to literature, electronic novels could monitor the electrical activity of neurons in the reader’s brain, with algorithms rewriting sections to match the reactions elicited. In the same way that a stand-up comic or live musician subtly alters their performance to fit a particular audience, so too will media increasingly resemble their consumers. The medium might stay the same, but the message will change depending on who is listening.
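The feedback loop imagined here can be sketched in a few lines. This is a minimal illustration under loud assumptions, not any organization’s actual system: each track is assumed to carry a hypothetical “arousal” score between 0 (calming) and 1 (energizing), of the kind an EEG-trained model might predict, and the listener’s state is assumed to be readable on the same scale after every track. The names `Track`, `next_track`, `build_playlist` and `toy_listener` are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Track:
    title: str
    arousal: float  # hypothetical predicted score: 0.0 (calming) to 1.0 (energizing)


def next_track(tracks, played, current, target, step=0.25):
    """Pick the unplayed track closest to a waypoint part of the way from the
    listener's current state toward the target state (a crude proportional step)."""
    waypoint = current + step * (target - current)
    candidates = [t for t in tracks if t.title not in played]
    if not candidates:
        return None
    return min(candidates, key=lambda t: abs(t.arousal - waypoint))


def build_playlist(tracks, current, target, length, respond):
    """Greedily build a playlist, re-reading the listener's state after each track.
    `respond` stands in for a sensor (an EEG cap or heart-rate headphones)."""
    played = []
    for _ in range(length):
        track = next_track(tracks, played, current, target)
        if track is None:
            break
        played.append(track.title)
        current = respond(current, track)
    return played


def toy_listener(state, track):
    # Hypothetical listener model: mood drifts halfway toward each track's score.
    return state + 0.5 * (track.arousal - state)


library = [Track("A", 0.9), Track("B", 0.7), Track("C", 0.5), Track("D", 0.3), Track("E", 0.1)]
playlist = build_playlist(library, current=0.9, target=0.1, length=4, respond=toy_listener)
print(playlist)  # ['B', 'C', 'D', 'E']
```

Stepping only part of the way toward the target each time, rather than jumping straight to the calmest track, is one simple design choice; it mirrors the way a live performer might ease an audience from one mood to another, and the `step` parameter controls how gently the loop moves.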

In an article published in the New Statesman, journalist Alexandra Coghlan refers to this idea as “aural pill-popping,” in which Emotional Optimization provides “one [music] track to bring us up [and] another to bring us down.”45 This comment demonstrates a belief in functional form: the idea, as I described earlier in this chapter, that art should be “made useful” in some way. Coghlan’s suggestion of “aural pill-popping” raises a number of questions, not least whether the value of art lies simply in its being a creative substitute for mind-altering drugs.

We might feel calm looking at Mark Rothko’s Untitled (Green on Blue) painting, for example, but does this relegate it to the artistic equivalent of Valium? In his book To Save Everything, Click Here, Belarusian technology scholar Evgeny Morozov takes this utilitarian idea to task. Suppose, Morozov says, that Google (to pick one company that has made clear its ambition to quantify everything) knows that we are not at our happiest after receiving a sad phone call from an ex-girlfriend. If art equals pleasure, and the quickest way to achieve pleasure is to look at a great painting, then Google knows that what we need more than anything for a quick pick-me-up is to see a painting by the Impressionist Renoir:

Well, Google doesn’t exactly “know” it; it knows only that you are missing 124 units of “art” and that, according to Google’s own measurement system, Renoir’s paintings happen to average in the 120s. You see the picture and—boom!—your mood stays intact.46

Morozov continues his line of inquiry by asking the pertinent questions that arise with such a proposition. Would keeping our mood levels stabilized by looking at the paintings of Renoir turn us into a society of art lovers? Would it expand our horizons? Or would such attempts to consume art in the manner of self-help literature only serve to demean artistic endeavors? Still more problems not touched on by Morozov surface with efforts to quantify art as unitary measures of pleasure, in the manner of Sergei Eisenstein’s “attractions.” If we accept that Renoir’s work gives us a happiness boost of, say, 122, while Pablo Picasso’s score languishes at a mere 98, why bother with Picasso’s work at all?

Similarly, let’s imagine for a moment that the complexity of Beethoven’s 7th Symphony turns out to produce measurably greater neurological highs than Justin Bieber’s hit song “Baby,” thereby giving us the ability to draw a mathematical distinction between the fields of “high” and “low” art. Should this prove to be the case, could we receive the same dosage of artistic nourishment, albeit in a less efficient time frame, by watching multiple episodes of Friends (assuming the sitcom is classified as “low” art) as we could from reading Leo Tolstoy’s War and Peace (supposing that it is classified as “high” art)? Ultimately, presuming that War and Peace is superior to Friends, or that Beethoven is superior to Justin Bieber, simply because they top up our artistic needs at a greater rate of knots is essentially the same argument as suggesting that James Patterson is a greater novelist than J. M. Coetzee because data gathered by Kindle shows that Patterson’s Kill Alex Cross can be read in a single afternoon, while Coetzee’s Life & Times of Michael K takes several days, or even weeks. It may look mathematically rigorous, but something doesn’t quite add up.

The Dehumanization of Art

All of this brings us ever closer to the inevitable question of whether algorithms will ever be able to generate their own art. Perhaps unsurprisingly, this is a line of inquiry that provokes heated comments on both sides. “It’s only a matter of when it happens—not if,” says Lior Shamir, who built the automated art critic I described earlier. Much as Epagogix’s movie prediction system spots places in a script where the potential yield is not where it should be and then recommends changes, so Shamir is convinced that in the long term his creation will be able to identify the features that great works of art have in common and generate entirely new works from them.

While this might seem a new concept, it is not. In 1787, Mozart anonymously published what is referred to in German as Musikalisches Würfelspiel (“musical dice game”). His idea was simple: to enable readers to compose German waltzes, “without the least knowledge of music . . . by throwing a certain number with two dice.” Mozart provided 176 bars of music, arranged in 16 columns, with 11 bars to each column, one for each possible total (2 through 12) of two dice. To select the first musical bar, readers would throw two dice and then choose the bar in the first column corresponding to their total. The technique was repeated for the second column, then the third, and so on. The total number of possible compositions (11 multiplied by itself 16 times) was an astonishing 46 × 1,000,000,000,000,000 or so, with each generated work sounding Mozartian in style.47
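Mozart’s procedure is essentially a random walk through a lookup table, and its arithmetic can be checked directly. The sketch below is a hypothetical reconstruction, not the original game: it simulates the dice and reports which bar of each column would be chosen, using placeholder indices (0 to 10) rather than the bar numbers printed in Mozart’s tables.

```python
import random

COLUMNS = 16          # one column per bar position in the waltz
BARS_PER_COLUMN = 11  # one bar for each possible two-dice total, 2 through 12


def roll_two_dice(rng):
    return rng.randint(1, 6) + rng.randint(1, 6)


def compose_waltz(rng):
    """Return one waltz as a list of (column, bar) choices. Bar indices 0-10 are
    placeholders standing in for the printed bars, not Mozart's actual numbering."""
    piece = []
    for column in range(COLUMNS):
        total = roll_two_dice(rng)          # a value from 2 to 12
        piece.append((column, total - 2))   # map the total onto the column's 11 bars
    return piece


possible = BARS_PER_COLUMN ** COLUMNS
print(f"{possible:,}")  # 45,949,729,863,572,161

waltz = compose_waltz(random.Random(1787))
print(waltz)
```

One consequence worth noting: two dice land on 7 in six of their 36 combinations but on 2 or 12 in only one, so the middle bar of each column turns up far more often than those at the edges, and the possible waltzes are not all equally likely.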

A similar concept, albeit in a different medium, is the current work of Celestino Soddu, a contemporary Italian architect and designer who uses what are referred to as “genetic algorithms” to generate endless variations on individual themes. A genetic algorithm replicates evolution inside a computer, adopting the idea that living organisms are the consummate problem solvers and using this to optimize specific solutions. By inputting what he considers to be the “rules” that define, say, a chair or a Baroque cathedral, Soddu is able to use his algorithm to conceptualize what a particular object might look like were it a living entity undergoing thousands of years of natural selection. Because there is (realistically speaking) no limit to the number of results the genetic algorithm can generate, Soddu’s “idea-products” mean that a trendy advertising agency could conceivably fill its offices with hundreds of chairs, each one subtly different, while a company engaged in building its new corporate headquarters might generate thousands of separate designs before deciding upon one to go ahead with.
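The evolutionary loop described above (breed, vary, select, repeat) can be illustrated with a minimal genetic algorithm. This is not Soddu’s software: the chair “rules” below, three target dimensions with tolerances, are invented stand-ins, and tournament selection, uniform crossover and Gaussian mutation are simply common textbook choices.

```python
import random

# Hypothetical "rules" for a chair: (ideal value, tolerance). Invented for illustration.
RULES = {
    "seat_height_cm": (45.0, 5.0),
    "seat_depth_cm": (42.0, 6.0),
    "back_angle_deg": (100.0, 10.0),
}
GENES = list(RULES)


def random_design(rng):
    # Start each gene anywhere between zero and twice its ideal value.
    return {g: rng.uniform(0.0, 2.0 * RULES[g][0]) for g in GENES}


def fitness(design):
    """Higher is better: penalize squared deviation from each rule, scaled by its tolerance."""
    return -sum(((design[g] - ideal) / tol) ** 2 for g, (ideal, tol) in RULES.items())


def tournament(population, rng, k=3):
    # Selection: the fittest of k randomly chosen designs becomes a parent.
    return max(rng.sample(population, k), key=fitness)


def crossover(a, b, rng):
    # Uniform crossover: each gene is inherited from one parent or the other.
    return {g: (a[g] if rng.random() < 0.5 else b[g]) for g in GENES}


def mutate(design, rng, rate=0.2, scale=3.0):
    # Each gene has a small chance of a random nudge.
    return {g: (v + rng.gauss(0.0, scale) if rng.random() < rate else v)
            for g, v in design.items()}


def evolve(generations=60, size=40, seed=0):
    rng = random.Random(seed)
    population = [random_design(rng) for _ in range(size)]
    history = [max(map(fitness, population))]
    for _ in range(generations):
        elite = max(population, key=fitness)  # elitism: the best design survives unchanged
        children = [
            mutate(crossover(tournament(population, rng), tournament(population, rng), rng), rng)
            for _ in range(size - 1)
        ]
        population = [elite] + children
        history.append(max(map(fitness, population)))
    population.sort(key=fitness, reverse=True)
    return population, history


population, history = evolve()
print({g: round(v, 1) for g, v in population[0].items()})
```

Because the final population holds many designs that all score well but differ slightly, taking, say, `population[:10]` yields a family of subtly different chairs, which is the property that makes endless variations on a theme possible.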

There are, however, still problems with the concept of creating art by algorithm. Theodor Adorno and Max Horkheimer noted in the 1940s how formulaic art does not offer new experiences, but rather remixed versions of what came before. Instead of the joy of being exposed to something new, Adorno saw mass culture’s reward coming in the form of the smart audience member who “can guess what is coming and feel flattered when it does come.”48 This prescient comment is backed up by algorithms that predict the future by establishing what has worked in the past. An artwork in this sense might achieve a quantifiable perfection, but it will only ever be perfection measured against what has already occurred.

For instance, Nick Meaney acknowledges that Epagogix would have been unable to predict the huge success of a film like Avatar. The reason: there had been no $2 billion films before to measure it against. This doesn’t mean that Epagogix wouldn’t have realized it had a hit on its hands, of course. “Would we have said that it would earn what it did in the United States? Probably not,” Meaney says. “It would have been flagged up as being off the scale, but because it was off the scale there was nothing to measure it against. The next Avatar, on the other hand? Now there’s something to measure it against.”
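Meaney’s point about Avatar reflects a general property of models that predict from precedent. As an illustration only (this is not a description of Epagogix’s system), a k-nearest-neighbour regressor averages the outcomes of the most similar past cases, so its forecast can never exceed the largest outcome it has already seen. The $237 million budget echoes the figure quoted for Avatar; every other number, and the “spectacle” score itself, is invented.

```python
import math

# Invented placeholder data, not real box-office figures:
# (budget in $ millions, hypothetical "spectacle" score 0-10) -> worldwide gross in $ millions.
PAST_FILMS = [
    ((150, 6.0), 700),
    ((200, 7.5), 1100),
    ((200, 8.0), 1800),
    ((100, 4.0), 300),
    ((60, 3.0), 150),
]


def predict_gross(features, history=PAST_FILMS, k=3):
    """k-nearest-neighbour regression: average the grosses of the k most similar
    past films (features are left unscaled for brevity)."""
    nearest = sorted(history, key=lambda film: math.dist(features, film[0]))[:k]
    return sum(gross for _, gross in nearest) / len(nearest)


ceiling = max(gross for _, gross in PAST_FILMS)
off_the_scale = (237, 10.0)  # beyond anything in the history
print(predict_gross(off_the_scale), "vs. ceiling", ceiling)  # 1200.0 vs. ceiling 1800

# Once an off-the-scale hit joins the history, the ceiling moves with it.
updated = PAST_FILMS + [((237, 10.0), 3000)]
print(predict_gross((240, 10.0), history=updated))
```

However extreme the new film’s features, the first prediction is an average of past grosses and so stays under the ceiling; only after the record-breaker is added to the history can the next forecast climb higher, which is Meaney’s “now there’s something to measure it against.”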

The issue becomes more pressing when it relates to the generating of new art, rather than the measurement of existing works. Because Lior Shamir’s automated art critic algorithm measures works based on 4,024 different numerical descriptors, there is a chance that it might be able to quantify what would comprise the best illustration of, say, pop art and generate an artwork that conforms to all of these criteria. But these criteria are themselves based upon human creativity. Would it be possible for algorithms themselves to move art forward in a meaningful way, rather than simply aping the style of previous works? “At first, no,” Shamir says. “Ultimately, I would be very careful in saying there are things that machines can not do.”

A better question might be whether we would accept such works if they did, knowing that a machine rather than a human artist had created them. For those who see creativity as a profoundly human activity (a relatively new idea, as it happens), the question goes beyond technical ability and touches on something close to the essence of humanity.

In 2012, the London Symphony Orchestra took to the stage to perform compositions written entirely by a music-generating algorithm called Iamus.49 Iamus was the project of professor and entrepreneur Francisco Vico, under whose coding it has composed more than one billion songs across a wide range of genres. In the aftermath of Iamus’s concert, a staff writer for the Columbia Spectator named David Ecker put pen to paper (or rather finger to keyboard) to write a polemic taking aim at the new technology. “I use computers for damn near everything, [but] there’s something about this computer that I find deeply troubling,” Ecker wrote.

I’m not a purist by any stretch. I hate overt music categorization, and I hate most debates about “real” versus “fake” art, but that’s not what this is about. This is about the very essence of humanity. Computers can compete and win at Jeopardy!, beat chess masters, and connect us with people on the other side of the world. When it comes to emotion, however, they lack much of the necessary equipment. We live every day under the pretense that what we do carries a certain weight, partly due to the knowledge of our own mortality, and this always comes through in truly great music. Iamus has neither mortality nor the urgency that comes with it. It can create sounds—some of which may be pleasing—but it can never achieve the emotional complexity and creative innovation of a musician or a composer. One could say that Iamus could be an ideal tool for creating meaningless top-40 tracks, but for me, this too would be troubling. Even the most transient and superficial of pop tracks take root in the human experience, and I believe that even those are worth protecting from Iamus.50

Perhaps there is still hope for those who dream of an algorithm creating art. As Iamus’s Francisco Vico points out: “I received one comment from a woman who admitted that Iamus was a milestone in technology. But she also said that she had to stop listening to it, because it was making her feel things. In some senses we see this as creepy, and I can fully understand that. We are not ready for it. Part of us still thinks that computers are Terminators that want to kill us, or else simple tools that are supposed to help us with processing information. The idea that they can be artists, too, is something unexpected. It’s something new.”