THOMAS H. GALLAUDET*

December 10, 1787–September 10, 1851

The public papers have already announced the deaths of several distinguished gentlemen in various parts of the country. Of Hon. Levi Woodbury and the Rev. Stephen Olin, D.D., extended notices have already been published. One or two others, however, have died, of whom adequate obituaries have not yet been given.

Among these was the Rev. Thomas H. Gallaudet, LL.D., well-known as the pioneer of Deaf-Mute Instruction in this country, who died at his residence in Hartford, on Wednesday, the 10th inst., at the age of 64.

At an early period of his life, Mr. Gallaudet became interested in the cause of the Deaf and Dumb, and an accidental circumstance decided his future career. In the autumn of the year 1807, a child of Dr. Mason F. Cogswell, then residing in the city of Hartford, became, through the effects of a malignant fever, first deaf and then dumb. Mr. Gallaudet, a young man of talents, education and benevolence, interested himself in the case of this unfortunate child, and, with a strong desire to alleviate her condition, attempted to converse with and instruct her. His efforts were rewarded with partial success; and through the exertions of Dr. Cogswell, Mr. Gallaudet was commissioned to visit Europe for the purpose of qualifying himself to become a teacher of the Deaf and Dumb in this country. Seven gentlemen of Hartford subscribed a sufficient amount of funds to defray his expenses, and on the 25th of May, 1815, Mr. Gallaudet sailed for Europe.

Meanwhile, the friends of the project employed the interval of time in procuring an act of incorporation from the Legislature of Connecticut, which was accomplished in May, 1816. In May, 1819, the name of “the American Asylum at Hartford for the Education and Instruction of the Deaf and Dumb,” was bestowed by the Legislature on the first institution for Deaf-Mutes established in the United States.

After spending several months in the assiduous prosecution of his studies, under the Abbé Sicard and others, Mr. Gallaudet returned to this country in August, 1816. He was accompanied by Mr. Laurent Clerc, a deaf and dumb Professor in the Institution of Paris, and well-known in Europe as a most intelligent pupil of the Abbé. Mr. Clerc is now living in a vigorous old age, and is still a teacher in the American Asylum at Hartford.

The Asylum was opened on the 15th of April, 1817, and during the first week of its existence numbered seven pupils; it now averages 220 annually. Mr. Gallaudet became the Principal of the Institution at its commencement, and held the office until April, 1830, when he resigned, and had since officiated as Chaplain of the Retreat for the Insane at Hartford.

His interest in the cause of Deaf-Mute Education has always continued unabated, and his memory will be warmly cherished by that unfortunate class of our fellow beings as well as by a large circle of devoted friends. The tree which was planted under his supervision and tended by his care, has borne good fruit.

* This is the first formal obituary to be published in The Times.

SAMUEL F. B. MORSE

April 27, 1791–April 2, 1872

Prof. [Samuel F. B.] Morse died last evening at 8 o’clock, his condition having become very low soon after sunrise. [He was 80 years old.] Though expected, the death of this distinguished man will be received with regret by thousands to whom he was only known by fame.

Few persons have ever lived to whom all departments of industry owe a greater debt than the man whose death we are now called on to record. There has been no national or sectional prejudice in the honor that has been accorded to him, from the fact that the benefit he was the means of bestowing upon mankind has been universal, and on this account the sorrow occasioned by his death will be equally worldwide.

Prof. Morse was born in Charlestown, Mass., April 27, 1791. His father, Dr. Jedidiah I. Morse, was a prominent Congregational minister. At an early age Samuel was sent to Yale College, and was graduated from that institution in 1810. Passionately fond of art, he determined to become a painter, and for this purpose in the following year he sailed for England, in company with Washington Allston, in order to study under the direction of Benjamin West, at that time considered the leading artist in Europe. Within two years he had made such progress that he received the gold medal of the Adelphi Society, for a cast of the “Dying Hercules.”

He returned to this country in 1815, and devoted himself entirely to his profession. While on a visit to Concord, N.H., in order to paint the portraits of several persons there, he formed the acquaintance of Lucretia Walker, who soon after became his wife.

In 1825, in connection with a number of other artists of this City, he organized an Art Association, which, a year later, was reorganized as the National Academy of Design, a name it has ever since borne. In 1829 he made a second trip to Europe, for the purpose of still further pursuing his professional studies. During this trip, which lasted three years, he greatly improved himself in the technical branches of the art.

While abroad he was elected Professor of the Literature of the Arts of Design in the University of the City of New York, and it was on his return to accept the position that the invention that has since made his name illustrious suggested itself to him. While at college he had devoted much of his time to the study of chemistry, and even in after years the phenomena of electricity and of electromagnetism had been to him a source of considerable speculation, and during the present voyage, which was made from Havre to New York on the old packet-ship Sully, the conversation turned accidentally on this subject, in connection with a discovery that had shortly before been made in France, of the correlation of electricity and magnetism.

While one day conversing on this matter with a fellow-passenger, the thought flashed upon Morse’s mind that this chemical relationship might be made practically useful. It would probably have been impossible even for the inventor to have said how the proper means for effecting this purpose suggested themselves to him; it was something almost superhuman, as will readily be seen, when it is said that he then conceived in his mind, not only the idea of the electric telegraph, but of the electromagnetic and chemical recording telegraph, substantially as they now exist.

On reaching home, he devoted the greater part of his time to making experiments on this subject. At first there was great difficulty in obtaining the proper instruments, though in 1835 he had succeeded in constructing an apparatus which enabled him to communicate from one extremity of a circuit half a mile long to the other. Unfortunately, this did not allow him to communicate back from the other extremity, but two years more of persistent research was sufficient to overcome this difficulty, and the invention was now ready for exhibition.

This was done in the Autumn of 1837, the wires being laid on the roof of the University building, opposite Washington Square. A great many hundreds visited the place, and all expressed, as well they might, their unbounded astonishment.

In the Winter of the same year Prof. Morse went to Washington to urge upon Congress the necessity of making some provision to assist him in carrying out his invention. Yet to the minds of Congressmen, the invention seemed altogether too chimerical to be likely ever to prove of any worth; and so, after a futile attempt to induce the Congressional Committee to make a favorable report, Prof. Morse returned to New York, having wasted an entire Winter and accomplished nothing.

In the Spring of 1838, he determined to make an effort in Europe, hoping for better appreciation there than in his own country. It was a mistaken thought, however, for after a sojourn of four years he returned to New York, having succeeded in procuring merely a brevet d’invention [patent] in France, and no aid or security of any kind from the other countries.

A less energetic man would have given up after so many rebuffs, but a firm belief in the inestimable value of his invention prompted Prof. Morse to make still another effort at Washington. So faint were the chances of Congress appropriating anything that on the last night of the session, having become thoroughly wearied and disgusted with the whole matter, Prof. Morse retired to bed; but in the morning he was roused with the information that a few minutes before midnight his bill had come up, had been considered, and that he had been awarded $30,000 with which to make an experimental essay between Baltimore and Washington.

The year following, the work was completed, and proved a complete success. From that time until the present the demands for the telegraph have been constantly increasing; telegraph lines have been spread over every civilized country in the world, and have become, by usage, absolutely necessary for the well-being of society.

Prof. Morse was twice married; and his private life was one of almost unalloyed happiness. He resided during the Summer at Poughkeepsie, in a delightful country house on the banks of the Hudson, surrounded by everything that could minister to comfort or gratify his tastes. In the Winter he generally lived in New York. By those who have examined the question, it is reported that the first idea of a submarine cable to Europe emanated from the brain of Prof. Morse, in which case there is little connected with telegraphy of which he may not be said to be the author.

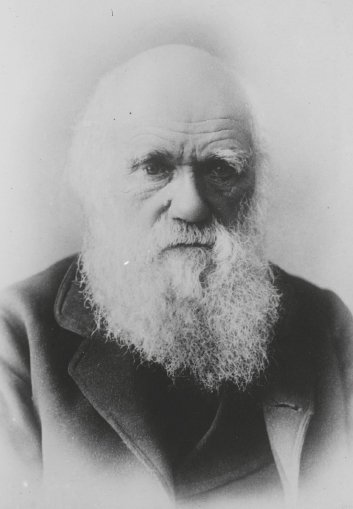

CHARLES DARWIN

February 12, 1809–April 19, 1882

The announcement that Charles Robert Darwin died on Wednesday at his residence, Down House, near Orpington, will be read by very few individuals who have not some degree of acquaintance with the physical theories formulated and taught by this distinguished naturalist, however scanty may be their actual knowledge of his works.

Darwin has been read much, but talked about more. Since the publication of his work “On the Origin of Species” in 1859, and particularly within the 11 years which have elapsed since his “Descent of Man” was given to the world, he has been the most widely known of living thinkers. Doctrines such as he set forth could not long remain the exclusive property of philosophers nor of educated people. They made their way at once into the reading and thought of the masses until the slightest allusion to Darwinism was sure of instant recognition.

It is not to be supposed that every country clergyman became profoundly versed in the doctrines of evolution, or that little laughing schoolgirls joking with each other about a monkey ancestry had followed Mr. Darwin very far in his speculations on differentiation of species, but the ministers somehow all knew that evolution was an abominable heresy, and the schoolchildren intuitively understood that if man is descended from the ape, he cannot be descended from Adam. All that part of the world which had never thought of such things before was aroused by the shock of the new idea.

There had been skeptics and atheists and deists and what not before, but what grave essayists call scientific unbelief sprang primarily from the works of Charles Darwin, and is fed chiefly from the writings of other scientists who are at work extending and completing the framework he erected. Mr. Darwin, therefore, may be called an epoch-making man.

The qualities and natural bent of his clear mind were inherited. His father and grandfather were naturalists, though the latter, Dr. Erasmus Darwin, was a much more famous and productive man than his son, Dr. R. W. Darwin. Erasmus Darwin, a botanist of renown, is best known as the author of a remarkable poem called the “Botanic Garden,” which, though destitute of poetic feeling, shows its author to have been deeply versed in the Linnaean system of botany.

Charles Robert Darwin was born at Shrewsbury, England, Feb. 12, 1809. When he was 16 years old, he entered Edinburgh University, and remained there two years, going then to Christ’s College, Cambridge, where he received the degree of Bachelor of Arts in 1831. In December of the same year he was selected as a naturalist to make a voyage of scientific exploration around the world on board the ship Beagle. Five years were spent in this way.

The opportunities for research, experiment, and study, particularly during his stay in South America, were fruitful in the material and hints out of which his later theories were evolved. Returning from this voyage in 1836, he began the preparation of a “Journal of Researches” into the geology and natural history of the countries visited by the expedition. Between 1844 and 1859 his publications were mostly brief monographs contributed to scientific publications or read before learned societies. But during this long period he occupied himself with untiring zeal and systematic regularity in the study of nature, making a series of observations upon the forms and habits of animals, plants and minerals and slowly accumulating that vast mass of facts and registered phenomena to which he was later on to apply his theory of evolution.

The publication in 1859 of his work, “On the Origin of Species by Means of Natural Selection; or, the Preservation of Favored Races in the Struggle for Life,” was the announcement to his friends that he had at length passed over the sea of hypothesis to the firm ground of scientific assertion and to the world that it must revise or fortify its opinions on biological subjects. In 1871 appeared the best known of all his books, “The Descent of Man, and Selection in Relation to Sex,” in two volumes; the following years saw the publication of half a dozen more. Each of these books has its place in the development of the theory which bears their author’s name. But it is upon the “Origin of Species” and the “Descent of Man” that his fame chiefly rests.

Mr. Darwin made an extremely modest use of his great attainments. He did not construct a theory of the cosmos, and he did not deal with the entire theory of evolution. He was content to leave others to poke about in the original protoplasmic mire, and to extend the evolutionary law to social and political phenomena. For himself, he tried to show how higher organic forms were evolved out of lower. He starts with life already existing, and traces it through its successive forms up to the highest—man. His central principle—his opponents call it a dogma—is “natural selection,” called by Herbert Spencer “the survival of the fittest,” a choice which results inevitably from “the struggle for existence.” It is a law and fact in nature that there shall be the weak and the strong. The strong shall triumph and the weak shall go to the wall.

The law, though involving destruction, is really preservative. If all plants and animals were free to reproduce their kind under like and equally favorable conditions, if all were equally strong and well equipped for obtaining sustenance and making their way in the world, there would soon be no room on the earth for even a single species. The limit of subsistence and the power of reproduction are the bounds between which the conflict rages. In this struggle the multitudes are slain and the few survive. But the survivors do not owe their good luck to chance. Their adaptation to their surroundings is the secret of their exemption from the fate which overtakes those less happily circumstanced. A variety of squirrels, for instance, which is capable of wandering far afield in pursuit of its food, which is cunning and swift enough to evade its enemies, and has a habit of providing a store of nuts for Winter use, will naturally have a better chance of survival than a variety deficient in these qualities.

But Mr. Darwin also discovered that natural selection created special fitness for given circumstances and surroundings. Climate, soil, food supply, and other conditions act in this way, and the result is the differentiation of species. A certain thistle grows in a kind of soil which is rich in the elements which go to produce the tiny hairs upon the surface of the plant. The seeds are thus furnished with downy wings longer than usual, and are wafted further off where they have plenty of space to grow, and they, in turn, reproduce and emphasize the changes to which they owe their existence. Seeds or nuts developing a thick covering for the kernel are thus protected from birds and animals, and live to germinate, producing also hard-shelled seeds, and thus the process goes on.

Varieties which do not develop a high degree of special adaptation to their surroundings fall out of the race. An infinitesimally minute variation of function or structure, repeated and becoming more marked through many successive generations, results ultimately in the production of a variety, or even of a species, quite unlike the parent individual.

Mr. Darwin was by no means the discoverer of the theory of evolution. That is at least as old as Aristotle, who supposed individuals to be produced, not by a simultaneous creation of a minute copy of the adult, with all the different organs, but by epigenesis—that is, by successive acts of generation or growth, in which the rudiment or cell received additions. Other philosophers have adopted and used this theory to a greater or less extent. But it never had a substantial basis of fact or a thoroughly scientific application until Mr. Darwin worked it out.

Within the limits he set for himself Mr. Darwin meets no rival claimant for the honors the scientific and thinking world have accorded him. The dispatch announcing his death says that he had been suffering for some time from weakness of the heart, but continued to work to the last. He was taken ill on Tuesday night with pains in the chest, faintness, and nausea. The nausea lasted more or less during Wednesday and culminated in death in the afternoon. Mr. Darwin remained fully conscious until within a quarter of an hour of his death.

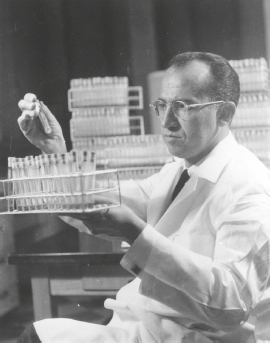

LOUIS PASTEUR

December 27, 1822–September 28, 1895

PARIS—Prof. Louis Pasteur, the distinguished chemist and discoverer of the Pasteur treatment for the cure of rabies, is dead.

Louis Pasteur was pre-eminently a man of his time, of this very moment. His name and the names of the young physicians who became his pupils were synonyms of scientific progress, except to very conservative practitioners, and to these they were synonyms of at least restless research and patient labor.

He had implicit faith in science, and he set an example of incessant investigation from which he knew the future would derive advantage. Even in later years, when adverse criticism that had pained him extremely had ceased, and marks of distinction had come to him from those whose esteem he valued, and a partial paralysis had rendered useless one side of his body, he was at work every day in his laboratory. His head was bent like a ripe sheaf of wheat, his steps were a painful shuffle, but he was always present with the punctuality of one disciplined to answer to a roll call.

His name is inseparable from every stage of the study of micro-organisms; he founded all the modern antiseptic surgical principles; he was the master of bacteriology; but his influence was more profound than these phrases indicate.

Pasteur gave to the world better methods of preventing and healing disease, an incalculable economy of lives, proofs against attractive hypotheses which were resulting in false philosophical forms, a revelation of the concealed operations of myriads of organisms, a link in the chain of evidence respecting the proof of the law of evolution.

Dr. Carpenter said of him, in a reference to the London Medical Congress of 1881: “There was none whose presence was more universally or more cordially welcomed than that of a quiet-looking Frenchman, who is neither a great physician nor a great surgeon, nor even a great physiologist, but who, originally a chemist, has done for medical science more than any savant of his day.”

Pasteur demonstrated against all the savants who had preceded him that fermentation is life without air. He extracted the pure juice from the interior of the grape and proved that without contact with impure air it never fermented. He proved that the ferment of the grape is held for germination in the particles of saccharomyces which cling to its exterior and to the twigs of the vine.

In 1853 the silk culture of France produced a revenue of 130,000,000f.; in 12 years it fell, by disease in silkworms, to 8,000,000f. The chemist Dumas appealed to Pasteur, who had never handled a silkworm. Pasteur proved that independent mobile corpuscles, which caused the silk plague, were present in all stages of the insect. He proved that when present in the egg they reappeared in all the cyclical alterations of the insect’s life. He proved that they could be readily detected only in the moth. He suggested the selection of healthy moths, proved his views by experiments with others, prophesied results, and restored the silk industry.

Lister, inspired by Pasteur’s work, studied lactic fermentation, verified the latter’s researches into the influence of air in causing fermentation, and redemonstrated in his own field of inquiries the different causes operating in both fermentation and putrefaction. His practical application of the knowledge was in the perfect antiseptic dressing of wounds.

After suppressing the silk plague, preserving the vineyards and the vines, successfully vaccinating the cattle, and giving to Lister a new surgical system, Pasteur discovered the vaccine virus against the rabies, or hydrophobia.

In America the malady is comparatively infrequent, but in Europe, and especially in Russia, it has ever been a cause of mortal anguish.

Pasteur proved that the brain substance and the medulla of a rabid animal would cause rabies if injected hypodermically, and that the period of incubation was of about the same duration as that following the bite of a rabid dog. Then he established the fact that the period of incubation could be shortened almost to a definite time, and when he had triumphantly replied to all objections, began his amazing record of cures from hydrophobia.

A writer on the perfection of Pasteur’s experimental methods has said:

“The caution exercised by Pasteur, both in the execution of his experiments and in the reasoning based upon them, is perfectly evident to those who, through the practice of severe experimental inquiry, have rendered themselves competent to judge of good experimental work. He found germs in the mercury used to isolate the air. He was never sure that they did not cling to the instruments he employed, or to his own person. Thus, when he opened his hermetically sealed flasks upon the Mer-de-Glace, he had his eye upon the file used to detach the drawn-out necks of his bottles; and he was careful to stand to leeward when each flask was opened. Using these precautions, he found the glacier air incompetent in 19 cases out of 20 to generate life, while similar flasks opened amid the vegetation of the lowlands were soon crowded with living things.”

He was born at Dole, in the Department of the Jura [in eastern France], Dec. 27, 1822. He became at 18 a member of the university, in the situation of a supernumerary master of studies at the College of Besançon. At 21 he was admitted as a pupil to the Normal School. He was a graduate in the physical sciences in September, 1846, but he remained at the school two years longer as an assistant instructor in chemistry, obtained a doctor’s degree, and received the appointment of Professor of Physics at the Lycée of Dijon. Then his long years of comparative poverty came to an end.

In 1849 he was a substitute in the Chair of Chemistry in the Strasburg Faculty of Sciences; in 1852 he was invested with this chair. At the end of 1854 he organized the newly created Faculty of Sciences at Lille. In 1857 he returned to Paris as science director at the Normal School.

He retained this office for 10 years, adding to his labor in 1863 the tedious, infinitely wearisome Professorship of Geology, Physics, and Chemistry at the Ecole des Beaux-Arts. He was professor at the Sorbonne from 1867 to 1875.

He varied his labors not by recreation, but by changing their nature. He experimented when not teaching, and wrote when not doing either. He was the recipient of numerous honors, including the rank of Grand Officer of the Legion of Honor and the magnificent laboratories of the Pasteur Institute in Paris, built by popular subscription and a liberal contribution from the Czar of Russia.

He was kind to his assistants and pupils. They were profoundly devoted to him. His work is not finished by his death, but wherever there are lovers of science there will be reverently whispered a regretful comment, for it is a very pure and brilliant light that has gone out.

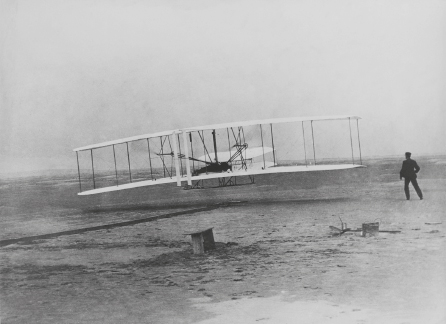

WILBUR WRIGHT

April 16, 1867–May 30, 1912

DAYTON, Ohio—Following a sinking spell that developed soon after midnight, Wilbur Wright, aviator and aeroplane builder, died of typhoid fever at 8:15 A.M. today.

When the patient succumbed at age 45 to the burning fever that had been racking his body for days and nights, he was surrounded by the members of his family, which included his father, Bishop Milton Wright, and brother Orville, the co-inventor of the aeroplane.

Wilbur was born near the town of Millville, Indiana, on April 16, 1867. Four years later, after the family had moved to Ohio, Orville was born in Dayton.

Instead of referring to any achievement as his own, Wilbur invariably referred to it as coming from “the Wright brothers.”

ORVILLE WRIGHT

August 19, 1871–January 30, 1948

DAYTON, Ohio—Orville Wright, who with his brother, the late Wilbur Wright, invented the airplane, died here tonight at 10:40 in Miami Valley Hospital. He was 76 years old and had been suffering from lung congestion and coronary arteriosclerosis.

In the early fall of 1900, fishermen and Coast Guardsmen dwelling on Kitty Hawk, that lonely and desolate spot of sand dividing Albemarle Sound from the Atlantic Ocean on the coast of North Carolina, discovered a new amusement. Trudging through the deep sand and beach grass to the side of the cone-shaped twin dunes known as the Kill Devil Hills, they watched two young men from Ohio try to break their necks.

One of these young men would launch himself from the steep side of one of the hills, lying flat on the lower panel of what appeared to be a huge box-kite. His companion at one wing and a volunteer at the other would run and help the kite into the air. Then the kite would fly while the bird-man turned his body this way and that to maintain balance.

The real fun came when the kite would suddenly nose down and plow into the sand and the operator would hurtle out the front doubled up into a ball and roll in a cloud of sand and dust down the side of the hill.

The onlookers did not know it, but they were witnesses at the birth of aviation, and the two who provided so much entertainment were Orville and Wilbur Wright.

Orville, a son of Bishop Milton Wright, a militant pastor and publicist of the United Brethren Church, was born at Dayton on Aug. 19, 1871, four years after the birth of Wilbur. There were seven children in the family, but only two others, Lorin and a sister, Katharine, were especially concerned with aviation.

As early as 1891, Orville and Wilbur had read of the experiments of Otto Lilienthal and other glider pioneers, but it was not until Lilienthal’s death in 1896 in a glider accident that they took a definite interest in the problems of flight. Orville at that time was recovering from a bout with typhoid fever and Wilbur read aloud to him. From Lilienthal they went to Samuel P. Langley and his experiments. They read Marey on animal mechanism and by 1899 had progressed through the records of Octave Chanute and works obtained through the Smithsonian Institution.

From Lilienthal and Chanute they got the enthusiasm that decided them to attempt gliding. They sent for Langley’s tables and Lilienthal’s and watched the progress of Sir Hiram Maxim’s experiments. Langley and Maxim represented the school of scientific research and Chanute and Lilienthal were the experimenters actually trying their wings. The Wrights were drawn to the fliers rather than the mathematicians and physicists.

Using the tables of Lilienthal and Langley, they constructed a series of gliders. The gliders did not function, and so the Wrights changed them, still following the tables of their predecessors. Finally, they concluded that something was wrong with the tables. For one thing, when air passed across a concave wing surface, it produced a resultant force utterly at variance with the theory of Langley and Lilienthal.

The Wrights had been contented before 1900 with wind currents on the flat Ohio terrain, but they knew that for the best results they must find steady, even winds blowing up over smooth hills. With the aid of the Weather Bureau they discovered Kitty Hawk.

While hundreds of attempts to “mount the machine” were made on the first Kitty Hawk visit, the brothers flew only a few minutes altogether. They returned to their home to study. The second glider they took to Kitty Hawk, in the summer of 1901, was better than the first, but they were not satisfied. Again they returned to Dayton to experiment.

In the autumn of 1902 they were ready with a biplane with the adjustable trailing edges on the wings, the horizontal elevator and the vertical rudder. They connected all these to a single set of controls—the first time anything of the sort had been done.

In Kitty Hawk from late September until December 1903, they worked on the machine in a shed which nearly blew away, airplane and all, during a hurricane. Even after they were ready, bad weather set in again and they had strong winds day after day.

During the enforced idleness they invented and constructed an air-speed indicator and a device for measuring the distance flown in respect to the moving air itself. Finally a good day came. The two brothers flipped a coin to decide who should be the first and Wilbur won. The engine was started and the machine lifted after a short run along the track they had built for its skids.

But immediately one wing dipped. It stalled and was on the ground again after being in the air for three seconds. Pleased with the result, the brothers were still not willing to call it a flight. A skid was broken in the attempt and two days were consumed in fixing it. Finally on the evening of Dec. 16 everything was again ready and the flight was set for next morning.

It was Orville’s turn. Into the teeth of a 27-mile-an-hour wind the plane was pointed. Orville released the wire that connected it to the track and it started so slowly that Wilbur was able to keep pace alongside, clinging to one wing to help steady the craft. Orville, lying flat on the lower wing, opened the engine wide. The plane speeded up and lifted clear.

It rose about 10 feet suddenly and then as suddenly darted toward the ground. A sudden dive 120 feet from the point it rose into the air brought it to earth again and man’s first flight in a powered heavier-than-air machine was over. It had lasted 12 seconds, and allowing for the wind, it had made a forward speed of more than 33 miles an hour. Three more flights were made that day, with Wilbur up twice and Orville once, and on the fourth trial Wilbur flew 59 seconds, covered 852 feet over the ground and made an air distance of more than half a mile.

In July 1909, Orville won a government contract by attaining a speed of 42 miles an hour. His contract called for a plane that could make 40 miles an hour and the Government offered a bonus for speeds above that limit. Manufacturing began at Dayton. This period also was marked by a series of patent fights. Glenn Curtiss was starting his experiments and adapted principles that the courts finally ruled were the property of the Wright brothers.

After Wilbur died in 1912, Orville worked on quietly at Dayton. Although friendly and accessible to visitors he avoided the spotlight. The death of Wilbur had left him very much alone.

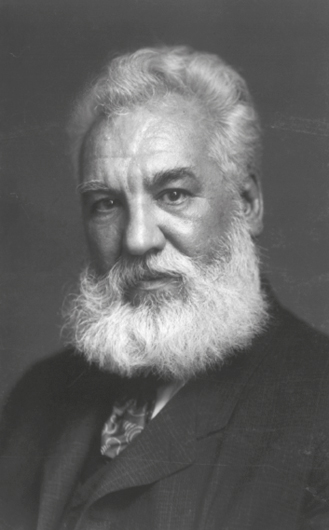

ALEXANDER GRAHAM BELL

March 3, 1847–August 2, 1922

SYDNEY, Nova Scotia—Dr. Alexander Graham Bell, inventor of the telephone, died at 2 o’clock this morning at Beinn Bhreagh, his estate near Baddeck.

Although the inventor, who was in his 76th year, had been in failing health for several months, the end was unexpected. Yesterday afternoon his condition, brought about by progressive anemia, became serious, and he was attended by Dr. Ker of Washington, a cousin of Mrs. Bell who was a house guest, and by a Sydney physician.

With Dr. Bell when he died were Mrs. Bell; a daughter, Mrs. Marion Hubbard Fairchild; and her husband. The inventor leaves another daughter, Mrs. Elsie M. Grosvenor.

At sunset on Friday, on the crest of Beinn Bhreagh Mountain, the body of Dr. Bell will be buried at a spot chosen by the inventor himself. The grave of the venerable scientist, the immensity of whose life work was attested by scores of telegrams which came today to the Bell estate from the world’s prominent figures, is at a point overlooking the town of Baddeck, Cape Breton. The sweeping vista from the mountaintop, so admired by Dr. Bell, stretches far over the Bras d’Or Lakes. Sunset, chosen as the moment when the body will be committed to the sturdy hills, gilds the waters of the lakes until they are really what their name means—“the lakes of the arm of gold.”

Dr. Bell asked to be buried in the countryside where he had spent the major portion of the last 35 years of his life. The inventor came to Cape Breton 40 years ago, and five years later purchased the Beinn Bhreagh estate. His last experiments, dealing with flying boats, were made on Bras d’Or Lakes.

American specialists who were rushing to the bedside of Dr. Bell were told of his death while aboard fast trains bound for Baddeck, and, being too late, turned back.

On learning of his death President Harding sent this telegram to Mrs. Bell:

“The announcement of your eminent husband’s death comes as a great shock to me. In common with all of his countrymen, I have learned to revere him as one of the great benefactors of the race and among the foremost Americans of all generations.”

President Thayer of the American Telephone and Telegraph Company ordered the Bell system throughout the country to half-mast flags on its buildings.

And Thomas A. Edison today paid the following tribute to his fellow-inventor, Alexander Graham Bell: “I am sorry to learn of the death of Alexander Graham Bell, the inventor of the first telephone. I have always regarded him very highly, especially for his extreme modesty.”

Alexander Graham Bell lived to see the telephonic instrument over which he talked a distance of 20 feet in 1876 used, with improvements, for the transmission of speech across the continent, and more than that, for the transmission of speech across the Atlantic and from Washington to Honolulu without wires. The little instrument he patented less than 50 years ago, scorned then as a joke, was when he died the basis for 18,000,000 telephones used in every civilized country in the world. The Bell basic patent, the famed No. 174,465, which he received four days after his 29th birthday and which was sustained in a historic court fight, has been called the most valuable patent ever issued.

Although he invented many contrivances which he regarded with as much tenderness, and to which he attached as much importance, as the telephone, a business world which he confessed he was often unable to understand made certain that he would go down in history as the man who made the telephone. He was one of the inventors of the graphophone, and for nearly 20 years he was engaged in aeronautics. Associated with Glenn H. Curtiss and others whose names are now known wherever airplanes fly, he pinned his faith on the tetrahedral cell, which never achieved the success in aviation that he foresaw for it; but as a by-product of his study he established an important new principle in architecture.

Up to the time of his death Dr. Bell took the deepest interest in aviation. Upon his return from a tour of the European countries in 1909 he reported that the Continental nations were far ahead of America in aviation and urged that steps be taken to keep pace with them. He predicted in 1916 that the great war would be won in the air. It was always a theory of his that flying machines could make much greater speed at great heights, in rarefied atmosphere, and he often said that the transatlantic flight would some day be made in a single day, a prediction which he lived to see fulfilled.

The inventor of the telephone was born in Edinburgh on March 3, 1847. Means of communication had been a hobby in the Bell family long before Alexander was born. His grandfather was the inventor of a device for overcoming stammering, and his father perfected a system of visible speech for deaf mutes. When Alexander was about 15 years old he made an artificial skull of gutta-percha and India rubber that would produce weird tones when blown into by a hand bellows. At the age of 16 he became, like his father, a teacher of elocution and an instructor of deaf mutes.

When young Bell was 22 years old he was threatened with tuberculosis, which had caused the death of his two brothers, and the Bell family migrated to Brantford, Canada.

Soon after he came to America, at a meeting with Sir Charles Wheatstone, the English inventor, Bell got the ambition to perfect a musical or multiple telegraph. His father, in an address in Boston one day, mentioned his son’s success in teaching deaf mutes, which led the Boston Board of Education to offer the younger Bell $100 to introduce his system in the newly opened school for deaf mutes there. He was then 24 years old, and he quickly gained prominence for his teaching methods. He was soon named a professor in Boston University.

But teaching interfered with his inventing and he gave up all but two of his pupils. One of these was Mabel Hubbard of a wealthy family. She had lost her speech and hearing when a baby and Bell took the most acute interest in enabling her to hear. She later became Mrs. Bell.

Bell spent the following three years working, mostly at night, in a cellar in Salem, Mass. Gardiner G. Hubbard, his future father-in-law, and Thomas Sanders helped him financially while he worked on his theory that speech could be reproduced by means of an electrically charged wire. His first success came while he was testing his instruments in new quarters in Boston. Thomas A. Watson, Bell’s assistant, had struck a clock spring at one end of a wire and Bell heard the sound in another room. For 40 weeks he worked on his instruments, and on March 10, 1876, Watson, who was working in another room, was startled to hear Bell’s voice say:

“Mr. Watson, come here. I want you.”

Bell had received his patent four days after his 29th birthday. At the Centennial in Philadelphia he gave the first public demonstration of his instrument. He had not intended to go to the exposition. He was poor and had planned to take up teaching again. In June he went to the railroad station one day to see Miss Hubbard off for Philadelphia. She had believed he was going with her. As he put her on the train and it moved off without him, she burst into tears. Seeing this, Bell rushed ahead and caught the train, without baggage or ticket.

An exhibition on a Sunday afternoon was promised to him. When the hour arrived it was hot, and the judges were tired. It looked as if there would be no demonstration for Bell, when Dom Pedro, the Emperor of Brazil, appeared and shook Mr. Bell by the hand. He had heard some of the young man’s lectures. Bell made ready for the demonstration. A wire had been strung along the room. Bell took the transmitter, and Dom Pedro placed the receiver to his ear.

“My God, it talks!” he exclaimed.

Then Lord Kelvin took the receiver.

“It does speak,” he said. “It is the most wonderful thing I have seen in America.”

The judges then took turns listening, and the demonstration lasted until 10 o’clock that night. The instrument was the center of interest for scientists the rest of the exposition.

The commercial development of the telephone dated from that day in Philadelphia.

While Alexander Graham Bell will be best remembered as the inventor of the telephone, a claim he sustained through many legal contests, he also became noted for other inventions. With Sumner Tainter he invented the graphophone. He invented a new method of lithography, a photophone and an induction balance. He invented the telephone probe, which was used to locate the bullet that killed President Garfield. He spent 15 years and more than $200,000 in testing his tetrahedral kite, which he believed would be the basis for aviation.

The inventor was the recipient of many honors in this country and abroad.

Dr. Bell regarded the summit of his career as reached when in January of 1915 he and his old associate, Mr. Watson, talked to one another over the telephone from San Francisco to New York. It was nearly two years later that by a combination of telephonic and wireless telegraphy instruments the engineers of the American Telephone and Telegraph Company sent speech across the Atlantic.

In 1915 Dr. Bell said that he looked forward to the day when men would communicate their thoughts by wire without the spoken word.

“The possibilities of further achievement by the use of electricity are inconceivable,” he said. “Men can do nearly everything else by electricity already, and I can imagine them with coils of wire about their heads coming together for communication of thought by induction.”

In April of 1916 he declared that land and sea power would become secondary to air power. He expressed then the opinion that the airplane would be more valuable as a fighting machine than the Zeppelin and urged that the United States build a strong aerial fleet.

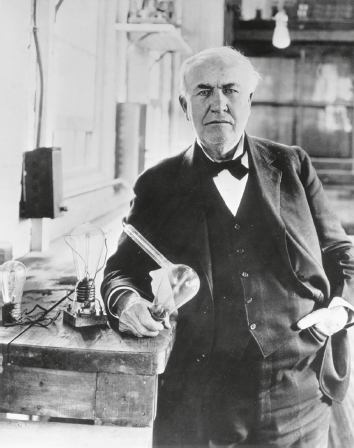

THOMAS EDISON

February 11, 1847–October 18, 1931

WEST ORANGE, N.J.—Thomas Alva Edison died at 3:24 o’clock this morning at his home, Glenmont, in the Llewellyn Park section of this city. The great inventor, the fruits of whose genius so magically transformed the everyday world, was 84 years and 8 months old.

The end came almost imperceptibly as the sick man’s ebbing strength, sapped by long months of struggle against a complication of ailments, gradually receded until his heart ceased beating.

Through the long days when Mr. Edison calmly, cheerfully awaited the inevitable, amazing evidences of the world’s affectionate concern for one of its most useful citizens were plentiful. Pope Pius XI, President Hoover, Henry Ford and a host of others kept in daily touch [about] his condition.

Anxiety for the man whose creative genius gave the world the electric light, the phonograph, the motion picture camera and a thousand other inventions ranging through all the various fields of science had been general since he collapsed in the living room of his home on Aug. 1.

Thomas Alva Edison was born in Milan, Ohio, on Feb. 11, 1847. He came of vigorous and independent-minded stock whose forebears had come to America from the Zuyder Zee. His great-grandfather was Thomas Edison, a New York banker of prominence on the Tory side during the Revolutionary War. So much of a Loyalist was he that when the Colonies won their independence he went to Canada to live under the British flag. There his grandson, Samuel, became a rebel against the King, rose to the rank of Captain in Papineau’s insurgent army in 1837 and fled to the States with a price on his head.

Samuel Edison settled at Milan, Ohio, where his son, Thomas, was born. Edison got most of his schooling there. He was always, as he remembered it later, at the foot of his class. In interviews he recalled his mother’s indignation when one teacher told him that he was “addled,” a fighting adjective in country districts. His mother, who had been a teacher, took him out of school and educated him herself. By the age of nine he had read, or his mother had read to him, “The Penny Encyclopedia,” Hume’s “History of England” and Gibbon’s “Decline and Fall of the Roman Empire.”

But the books which put the backward schoolboy of the tiny canal village on the way to become one of the greatest men of his time were popular works on electricity and chemistry. He and his mother performed some of the simple chemical experiments they found described in the books.

At the age of 12, to earn money for his experiments, he persuaded his parents to allow him to become a railroad newsboy. He set up a laboratory in the baggage car, along with a printing press, on which he published a weekly newspaper that gained as many as 400 paid subscribers.

One day a bottle containing phosphorus fell off a shelf and broke upon the floor. The phosphorus set fire to the car, which was with some difficulty saved from burning up, and the conductor put the boy and his belongings off the train and boxed his ears so soundly as to cause the beginning of the deafness with which he was afflicted for the rest of his life.

The young man found work as a telegraph operator, drifted to New York, and began repairing stock tickers for a market reporting firm. Fortune began to smile on him at the age of about 22, when he worked out improvements in tickers and telegraph appliances and got $50,000 for one invention.

Almost entirely self-educated, Edison was never a profound student of physics, mathematics and theoretical chemistry, but he was richly equipped as an inventor. To an enormous practical grasp of physics and electricity he joined wide general experience, reading and intellectual activity. He read books of every kind. He was a believer in vast and miscellaneous general information, as he demonstrated in 1921, when the Edison questionnaire became famous. He gave his prospective employees the kind of examination which he himself could have passed when he was a youth. He wanted to find men of his own type, men of intellectual curiosity and general knowledge. His practical scientific knowledge, his original, penetrating mind and his invincible industry gave him the greatest output of invention of any man of his time.

When Edison was only 26 years old, in 1873, he made an agreement with the Western Union Telegraph Company to give them an option on all telegraph inventions that came out of his head. He then moved to Newark, N.J., into a bigger shop than his Manhattan working place. He completed an automatic telegraph, making possible the transmission of 1,000 words a minute between points as far distant as Washington and New York.

At the age of 29 Edison had become so famous that curious people crowded his workshop and he could not work satisfactorily. He moved further away from New York, to Menlo Park, N.J.

Before that time he had already experimented on an incandescent electric light. At the time, the arc lamp was already in existence in public squares in this city. Backed by a syndicate with a capital of $300,000, including men such as J. Pierpont Morgan, Edison extended his experiments. Finally, in 1879, he made the discovery which made the incandescent light a success.

One night in his laboratory, idly rolling between his fingers a piece of compressed lampblack, he realized that a slender filament of it might emit incandescent light if burned in a vacuum. So it did, and further experiments demonstrated that a filament of burned cotton thread would provide even greater incandescence. He placed the filament in a globe and connected it with the wires leading to the machine generating electric current. Then he extracted the air from the globe and turned on the electricity.

“Presto! A beautiful light greeted his eyes,” in the words of a New York newspaper article. “He turned on more current, expecting the fragile filament immediately to fuse; but no. The only change was a more brilliant light. He turned on more current and still more, but the delicate thread remained intact. Then, with characteristic impetuosity, and wondering and marveling at the strength of the little filament, he turned on the full power of the machine and eagerly watched the consequences. For a minute or more the tender thread seemed to struggle with the intense heat passing through it—heat that would melt the diamond itself. Then at last it succumbed and all was darkness. The powerful current had broken it in twain, but not before it had emitted a light of several gas jets.”

On Jan. 1, 1880, the public was invited to go to Menlo Park and see the operation of the first lighting plant. Many electricians insisted that there was some trickery in the exhibition, but almost simultaneously the Edison lighting system spread throughout the civilized world.

In 1877, while at work on a machine to improve the transmission of Morse code, Edison noticed that the indentations it made on paper fitted to a rapidly rotating cylinder gave off, when run through the machine at speed, “a musical, rhythmic sound resembling that of human talk heard indistinctly,” as he wrote in the North American Review in 1887. “This led me to try fitting a diaphragm to the machine which would receive the vibrations or sound waves made by my voice when I talked to it and register these vibrations upon an impressible material placed on the cylinder.” He continued:

“The indentations on the cylinder, when rapidly revolved, caused a repetition of the original vibrations to reach the ear through a recorder, just as if the machine itself was speaking.” Thus was born the phonograph.

Edison invented the motion picture machine in 1887. The Zoetrope and other machines were then in existence for throwing pictures from transparencies on a screen one after another and giving the effect of action. It occurred to Edison that pictures could be taken in rapid succession by the camera and later used to synthesize motion. He put his pictures on the market in the form of a peep-show. Put a nickel in the slot and you could see dances, prizefights, fencing matches and other bits of action.

Here Edison, for all his powers of forecasting the future, made his major failure as a prophet. For a long time he opposed the idea of projecting pictures on the screen, believing that it would ruin the nickel-in-the-slot peep-show business. In spite of his creative imagination and his comprehensive genius, he seems to have lacked showmanship.

Edison was married twice, in 1873 to Miss Mary G. Stillwell, by whom he had three children, and in 1886 to Miss Mina Miller, who is the mother of three more.

Mr. Edison relaxed a little in the latter years of his life. He spent his Winters at Fort Myers, Fla., where he experimented with a miniature rubber plantation. In spite of his deafness, he remained sunny and genial till the end of his days. Honors came to him from all sides. Edison’s career was brilliantly summed up by Arthur Williams, Vice President of the New York Edison Company, who said:

“Entering this building [the Hotel Astor] tonight, we passed through that extraordinary area of publicity by light, often called the brightest spot on earth—Times Square. Standing there, thinking of Edison and his work, we may well remember the inscription on the tomb of Sir Christopher Wren in St. Paul’s, London, ‘If you would see his monument, look around.’”

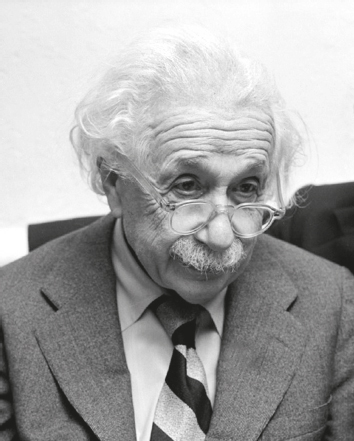

ALBERT EINSTEIN

March 14, 1879–April 18, 1955

PRINCETON, N.J.—Dr. Albert Einstein, one of the great thinkers of the ages, died in his sleep here early today.

A rupture of the aorta brought death to the 76-year-old master physicist and mathematician and practicing humanitarian. He died at 1:15 A.M. in Princeton Hospital.

The shy professor’s exit was as unostentatious as the life he had led for many years in the New Jersey village, where he was attached to the Institute for Advanced Study.

The body was cremated without ceremony at 4:30 P.M. after the removal, for scientific study, of vital organs, among them the brain that had worked out the theory of relativity and made possible the development of nuclear fission.

President [Dwight D.] Eisenhower declared that “no other man contributed so much to the vast expansion of 20th-century knowledge.” Eminent scientists and heads of state sent tributes from many nations, including Israel, whose establishment as a state he had championed, and Germany, which he had left forever in 1932 because of Nazism’s rising threat against individual liberty and Jewish life.

In 1904, Albert Einstein, then an obscure young man of 25, could be seen daily in the late afternoon wheeling a baby carriage on the streets of Bern, Switzerland, halting now and then, unmindful of the traffic around him, to scribble down some mathematical symbols in a notebook that shared the carriage with his infant son, also named Albert.

Out of those symbols came the most explosive ideas in the age-old strivings of man to fathom the mystery of his universe. Out of them, incidentally, came the atomic bomb, which, viewed from the long-range perspective of mankind’s intellectual and spiritual history, may turn out, Einstein fervently hoped, to have been just a minor by-product.

With those symbols Dr. Einstein was building his theory of relativity. In that baby carriage with his infant son was Dr. Einstein’s universe-in-the-making, a vast, finite-infinite four-dimensional universe, in which the conventional universe—existing in absolute three-dimensional space and in absolute three-dimensional time of past, present and future—vanished into a mere subjective shadow.

Dr. Einstein was then building his universe in his spare time, on the completion of his day’s routine work as a humble, $600-a-year examiner in the Government Patent Office in Bern.

A few months later, in 1905, the entries in the notebook were published in four epoch-making scientific papers. In the first he described a method for determining molecular dimensions. In the second he explained the photo-electric effect, the basis of electronics, for which he won the Nobel Prize in 1921. In the third, he presented a molecular kinetic theory of heat. The fourth and last paper that year, entitled “Electrodynamics of Moving Bodies,” a short article of 31 pages, was the first presentation of what became known as the Special Relativity Theory.

Neither Dr. Einstein, nor the world he lived in, nor man’s concept of his material universe was ever the same again.

The scientific fraternity recognized that a new star of the first magnitude had appeared in their firmament. By 1920 the name of Einstein had become synonymous with relativity, a theory universally regarded as so profound that only 12 men in the entire world were believed able to fathom its depths.

Albert Einstein was born at Ulm, Wuerttemberg, Germany, on March 14, 1879. His boyhood was spent in Munich, where his father, who owned electro-technical works, had settled. The family migrated to Italy in 1894, and Albert was sent to a cantonal school at Aarau in Switzerland. In 1902 he was appointed examiner of patents at the Patent Office at Bern where, having become a Swiss citizen, he remained until 1909.

It was in this period that he obtained his Ph.D. degree at the University of Zurich and published his first papers on physical subjects.

These were so highly esteemed that in 1909 he was appointed Extraordinary Professor of Theoretical Physics at the University of Zurich. In 1913 a special position was created for him in Berlin as director of the Kaiser Wilhelm Physical Institute. He was elected a member of the Royal Prussian Academy of Sciences and received a stipend sufficient to enable him to devote all his time to research.

Dr. Einstein married Mileva Marić, a fellow-student, in Switzerland in 1903. They had two sons. The marriage ended in divorce. He married again, in 1917, this time his cousin, Elsa Einstein, a widow with two daughters. She died in Princeton in 1936.

When the Institute for Advanced Study was organized in 1932 at Princeton, Dr. Einstein accepted the post of Professor of Mathematics and Theoretical Physics. He made plans to live there about half of each year.

These plans were changed suddenly. Adolf Hitler rose to power in Germany and essential human liberty, even for Jews with world reputations like Dr. Einstein, became impossible in Germany. In the late spring of 1933 Dr. Einstein learned that his two stepdaughters had been forced to flee Germany.

Not long after that he was ousted from the supervising board of the German Bureau of Standards. His home at Caputh was sacked by Hitler’s Brown Shirts on the allegation that Dr. Einstein, a pacifist, had a vast store of arms hidden there.

The Prussian Academy of Sciences expelled him and also attacked him for having made statements regarding Hitler atrocities. His reply was this:

“I do not want to remain in a state where individuals are not conceded equal rights before the law for freedom of speech and doctrine.”

In September of 1933 he went into seclusion on the coast of England, fearful that the Nazis had designs on his life. Then he journeyed to Princeton and in 1940 became a citizen of the United States.

Paradoxically, the figure of Einstein the man became more and more remote, while that of Einstein the legend came ever nearer to the masses of mankind. They grew to know him not as a universe-maker whose theories they could not hope to understand but as a world citizen, a symbol of the human spirit and its highest aspirations.

“Saintly,” “noble” and “lovable” were the words used to describe him. He radiated humor, warmth and kindliness. He loved jokes and laughed easily.

Princeton residents would see him walk in their midst, a familiar figure, yet a stranger; a close neighbor, yet a visitor from another world. And as he grew older his otherworldliness became more pronounced, yet his human warmth did not diminish.

Outward appearance meant nothing to him. Princetonians soon got used to the long-haired figure in pullover sweater and unpressed slacks, a knitted stocking cap covering his head in winter.

“My passionate interest in social justice and social responsibility,” he wrote, “has always stood in curious contrast to a marked lack of desire for direct association with men and women. I am a horse for single harness, not cut out for tandem or team work. I have never belonged wholeheartedly to country or state, to my circle of friends, or even to my own family.”

It was this independence that on occasion made Dr. Einstein the center of controversy. In January 1953 he urged President Harry S. Truman to commute the death sentences of Julius and Ethel Rosenberg, the two convicted atomic spies who were executed five months later. Later that year he advised a witness not to answer any questions from Senator Joseph R. McCarthy, Republican of Wisconsin.

His political ideal was democracy. “I am convinced,” he wrote in 1931, two years before Hitler came to power, “that degeneracy follows every autocratic system of violence, for violence inevitably attracts moral inferiors.”

His love for the oppressed also led him to become a strong supporter of Zionism. In November, 1952, following the death of Chaim Weizmann, Dr. Einstein was asked if he would accept the Presidency of Israel. He replied that he was deeply touched by the offer but that he was not suited for the position.

On Aug. 6, 1945, when the world was electrified with the news that an atomic bomb had exploded over Japan, the significance of relativity was intuitively grasped by the millions. From then on the destiny of mankind hung on a thin mathematical thread.

Informed of the news by a reporter for The New York Times, Dr. Einstein asked, “Do you mean that, young man?” When the reporter replied “Yes” the physicist was silent for a moment, then shook his head.

“Ach! The world is not ready for it,” he said.

Thereafter Dr. Einstein became the chairman of the Emergency Committee of Atomic Scientists, organized to make the American people aware of the potential horrors of atomic warfare and the necessity for the international control of atomic energy.

He found recreation from his labors in playing the grand piano that stood in the solitary den in the garret of his residence. Much of his leisure time, too, was spent in playing the violin. He was fond of playing trios and quartets with musical friends.

While he did not believe in a formal, dogmatic religion, Dr. Einstein, like all true mystics, was of a deeply religious nature.

“The most beautiful and profound emotion we can experience,” he wrote, “is the mystical. It is the source of all true art and science. He to whom this emotion is a stranger, who can no longer pause to wonder and stand rapt in awe, is as good as dead: his eyes are closed.”

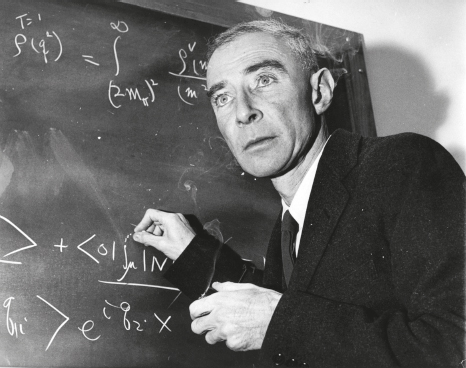

J. ROBERT OPPENHEIMER

April 22, 1904–February 18, 1967

PRINCETON, N.J.—J. Robert Oppenheimer, the nuclear physicist who has been called “the father of the atomic bomb,” died here tonight at the age of 62.

A spokesman for the family said Dr. Oppenheimer died in his home at the Institute for Advanced Study. He had been suffering from cancer of the throat. Dr. Oppenheimer and his wife, Katherine, had a son and daughter.

Starting precisely at 5:30 A.M., Mountain War Time, July 16, 1945, J. Robert Oppenheimer lived his life in the blinding light and the crepusculine shadow of the world’s first manmade atomic explosion.

That sun-like flash illuminated him as a scientific genius. It also led to his disgrace when, in 1954, he was described as a security risk. Publicly rehabilitated in 1963, this bafflingly complex man nonetheless never fully succeeded in dispelling doubts about his conduct.

Dr. Oppenheimer was a cultivated scholar, a humanist, a linguist of eight tongues and a brooding searcher for ultimate spiritual values. And, from the moment that the test bomb exploded at Alamogordo, N.M., he was haunted by the implications for man in the unleashing of the basic forces of the universe.

As he clung to one of the uprights in the desert control room that July morning and saw the mushroom cloud rising, a passage from the Bhagavad-Gita, the Hindu sacred epic, flashed through his mind: “If the radiance of a thousand suns were to burst into the sky, that would be like the splendor of the Mighty One.”

And as the atomic cloud pushed higher above Point Zero, another line came to him from the same scripture: “I am become Death, the shatterer of worlds.” Two years later, he was still beset by the moral consequences of the bomb, which, he told fellow physicists, had “dramatized so mercilessly the inhumanity and evil of modern war.”

With the detonation of the first three atomic bombs and the Allied victory in World War II, Dr. Oppenheimer, at 41, reached the apogee of his career. Acclaimed as “the father of the atomic bomb,” he was credited by the War Department “with achieving the implementation of atomic energy for military purposes.” In 1946, he received a Presidential Citation and a Medal of Merit for his direction of the Los Alamos Laboratory, where the bomb had been developed.

From 1945 to 1952, Dr. Oppenheimer was one of the foremost Government advisers on atomic policy. He was the dominant author of the Acheson-Lilienthal Report [named for Secretary of State Dean Acheson and David Lilienthal, first chairman of the Atomic Energy Commission], which offered a plan for international control of atomic energy.

From 1947 to 1952, Dr. Oppenheimer headed the A.E.C.’s General Advisory Committee of top nuclear scientists, and for the following two years he was its consultant. Then, in December 1953, President Dwight D. Eisenhower ordered that a “blank wall be placed between Dr. Oppenheimer and any secret data,” pending a hearing.

Up to then, Dr. Oppenheimer’s big-brimmed brown porkpie hat was a frequent sight in Washington and the capitals of Western Europe. People were captivated by his charm, eloquence and sharp, subtle humor and awed by the scope of his erudition, the incisiveness of his mind, and his arrogance toward those he thought were slow or shoddy thinkers.

He was an energetic man at parties, where he was usually the center of attention. He was gracious as a host and the maker of fine and potent martinis.

J. Robert Oppenheimer (the “J” stood for nothing) was born in New York on April 22, 1904, the son of Julius and Ella Freedman (or Friedman) Oppenheimer. Julius Oppenheimer was a prosperous textile importer who had emigrated from Germany, and his wife was a Baltimore artist, who died when her elder son was 10. (The younger son, Frank, also became a physicist.)

Robert was a shy, delicate boy who was more concerned with his homework and with poetry and architecture than with mixing with other youngsters. After attending the Ethical Culture School, he entered Harvard College in 1922, intending to become a chemist.

In addition to studying physics and other sciences, he learned Latin and Greek and was graduated summa cum laude in 1925, having completed four years’ work in three.

From Harvard, he went to the University of Cambridge, in England, where he worked in atomics under Lord Rutherford, the eminent physicist. Thence he went to the Georg-August-Universität in Göttingen, Germany. He received his doctorate there in 1927.

In 1929, Dr. Oppenheimer joined the faculties of Caltech at Pasadena and the University of California at Berkeley. Magnetic, lucid, always accessible, he developed hundreds of young physicists. At that time, he recalled later, “I was not interested in and did not read about economics or politics. I was almost wholly divorced from the contemporary scene in this country.”

But beginning in late 1936, his life underwent a change of direction that involved him in Communist, trade union and liberal causes. These commitments and associations ended about 1940, according to the scientist.

One factor in his awakening to the world about him was a love affair, starting in 1936, with a woman who was a Communist, now dead. (In 1940 he married the former Katherine Puening, who had been a Communist during her marriage to Joseph Dallet, a Communist who died fighting for the Spanish Republican Government.)

Dr. Oppenheimer also felt, as he put it, a “smoldering fury about the treatment of Jews in Germany,” and he was deeply dismayed by the Depression. But he consistently denied that he was ever a member of the Communist party.

Dr. Arthur H. Compton, the Nobel Prize–winning scientist, brought Dr. Oppenheimer informally into the atomic project in 1941. Dr. Oppenheimer impressed Maj. Gen. Leslie R. Groves, who was in charge of the $2 billion Manhattan Engineer District (as the bomb project was code-named) and who named him director despite Army Counter-Intelligence qualms over his past associations.

With General Groves, Dr. Oppenheimer selected the Los Alamos site for the laboratory and gathered a top-notch scientific staff, including Dr. Enrico Fermi and Dr. Niels Bohr. Dr. Oppenheimer displayed a special genius for administration and handling the sensitive prima-donna scientific staff. He drove himself at breakneck speed, and at one time his weight dropped to 115 pounds.

Because a security-risk potential was already imputed to him, he was dogged by Army agents. His phone calls were monitored, and his mail was opened. Then an overnight visit with his former fiancee—by then no longer a Communist—on a trip to San Francisco in June 1943 aroused the Counter-Intelligence Corps.

That August, for reasons that remain obscure, Dr. Oppenheimer volunteered to a C.I.C. agent that the Russians had tried to get information about the Los Alamos project. George Eltenton, a Briton and a slight acquaintance of Dr. Oppenheimer, had asked a third party to get in touch with some project scientists.

In three subsequent interrogations Dr. Oppenheimer embroidered this story, but he declined to name the third party or to identify the scientists. (In one interrogation, however, he gave the C.I.C. a long list of persons he said were Communists or Communist sympathizers, and he offered to dig up information on former Communists at Los Alamos.)

Finally, in December, 1943, Dr. Oppenheimer, at General Groves’s order, identified the third party as Prof. Haakon Chevalier, a French teacher at Berkeley and a longtime friend of the Oppenheimer family. At the security hearings in 1954, the scientist recanted his espionage account as a “cock-and-bull story,” saying only that he was “an idiot” to have told it.

Neither Professor Chevalier nor Mr. Eltenton was prosecuted. Later, Professor Chevalier asserted that Dr. Oppenheimer had betrayed him out of ambition.

Another charge against Dr. Oppenheimer involved the hydrogen, or fusion, bomb and his relations with Dr. Edward Teller. At Los Alamos Dr. Teller, a vociferous proponent of the hydrogen bomb, was passed over for Dr. Hans Bethe as head of the important Theoretical Physics Division. Dr. Teller, meantime, worked on problems of fusion.

At the war’s end, hydrogen bomb work was generally suspended. In 1949, however, when the Soviet Union exploded its first fission bomb, the United States considered pressing forward with a fusion device. The matter came to the A.E.C.’s General Advisory Committee, headed by Dr. Oppenheimer.

On the ground that manufacturing a hydrogen bomb was not technically feasible at the moment, the committee unanimously recommended that thermonuclear research be maintained at a theoretical level only.

In 1950 President Harry S. Truman overruled Dr. Oppenheimer’s committee and ordered work pushed on the fusion bomb. Dr. Teller was given his own laboratory and within a few months the hydrogen bomb was perfected.

In late 1953, William L. Borden, former executive director of the Joint Congressional Committee on Atomic Energy, wrote to F.B.I. Director J. Edgar Hoover asserting that, in his opinion, Dr. Oppenheimer had been “a hardened Communist” and had probably “been functioning as an espionage agent.”

President Eisenhower cut Dr. Oppenheimer off from access to secret material. Lewis L. Strauss, then chairman of the A.E.C., gave Dr. Oppenheimer the option of cutting ties with the commission or asking for a hearing. He chose a hearing.

The action against Dr. Oppenheimer dismayed the scientific community and many other Americans. He was widely pictured as a victim of McCarthyism.

It was charged at the security hearings that Dr. Oppenheimer had not been sufficiently diligent in furthering the hydrogen bomb and that he had influenced other scientists against it. Dr. Teller testified that, apart from giving him a list of names, Dr. Oppenheimer had not assisted him “in the slightest” in recruiting scientists. Dr. Teller, moreover, said he was opposed to restoring Dr. Oppenheimer’s security clearance.

Dr. Oppenheimer vigorously denied that he had been dilatory or neglectful in supporting the hydrogen bomb.

The three-member Personnel Security Board of the A.E.C. held hearings in Washington in April and May 1954. By a vote of 2 to 1, the board declined to reinstate Dr. Oppenheimer’s security clearance, even though it called the scientist “a loyal citizen.”

Dr. Oppenheimer returned to Princeton to live quietly until April 1962, when President John F. Kennedy invited him to a White House dinner. In December 1963, President Johnson handed Dr. Oppenheimer the highest award of the A.E.C., the $50,000 tax-free Fermi Award. In accepting it, Dr. Oppenheimer said, “I think it is just possible, Mr. President, that it has taken some charity and some courage for you to make this award today.”

CHARLES A. LINDBERGH

February 4, 1902–August 26, 1974

Maui, Hawaii—Charles A. Lindbergh, the first man to fly the Atlantic solo nonstop, died this morning at his simple seaside home here. He was 72 years old.

The cause was cancer of the lymphatic system, according to Dr. Milton Howell, a longtime friend.

Mr. Lindbergh was buried about three hours later in the cemetery adjoining the tiny Kipahulu church. He was dressed in simple work clothing and his body was placed in a coffin built by cowboys employed on cattle ranches in the nearby town of Hana. Dr. Howell said that the aviator had spent the last weeks of his life planning his funeral.

In a tribute this evening to Mr. Lindbergh, President Ford said the courage and daring of his Atlantic flight would never be forgotten. He said the selfless, sincere man himself would be remembered as one of America’s all-time heroes and a great pioneer of the air age that changed the world.

By Alden Whitman

In Paris at 10:22 P.M. on May 21, 1927, Charles Augustus Lindbergh, a one-time Central Minnesota farm boy, became an international celebrity. A fame enveloped the 25-year-old American that was to last him for the remainder of his life, transforming him in a frenzied instant from an obscure aviator into a historical figure.

The consequences of this fame were to exhilarate him, to involve him in profound grief, to engage him in fierce controversy, to turn him into an embittered fugitive from the public, to accentuate his individualism to the point where he became a loner, to give him a special sense of his own importance, to allow him to play an enormous role in the growth of commercial aviation, as well as to be a figure in missile and space technology, to give him influence in military affairs, and to raise a significant voice for conservation, a concern that marked his older years.

All these things were touched off when a former stunt flier and airmail pilot touched down the wheels of his small and delicate monoplane, the Spirit of St. Louis, on the tarmac of Le Bourget 33 1/2 hours after having lifted the craft off Roosevelt Field on Long Island. Thousands trampled through fences and over guards to surround the silvery plane and to acclaim the first man to fly the Atlantic solo nonstop from the United States to Europe—a feat that was equivalent in the public mind then to the first human step on the moon 42 years later. Icarus had at last succeeded; a daring man alone had attained the unattainable.

What enhanced the feat for many was that Lindbergh was a tall, handsome bachelor with a becoming smile, an errant lock of blond hair over his forehead and a pleasing outward modesty and guilelessness. He was the flawless El Cid, the gleaming Galahad, Frank Merriwell in the flesh.

The delirium that engulfed Paris swirled out over the civilized world. He was gushed over, adulated, worshiped, feted in France, Belgium and Britain. In New York, 4 million people spilled into the streets. Ticker tape and confetti rained on the Broadway parade. Lindbergh, at one point, was “so filled up with listening to this hero guff that I was ready to shout murder.”

What the pandemonium obscured was that Lindbergh’s epic flight was a most minutely planned venture by a professional flier with 2,000 air hours. “Why shouldn’t I fly from New York to Paris?” he had asked himself in September, 1926. “I have more than four years of aviation behind me. I’ve barnstormed over half of the 48 states. I’ve flown my mail through the worst of nights.”

There had been two previous Atlantic flights—both in 1919, the first when one of three Navy craft flew from Newfoundland to the Azores; and the second when John Alcock and Arthur Brown made it from Newfoundland to Ireland. But no one had made the crossing alone, or from continent to continent.

Lindbergh was conceived of as a nice young man, perhaps a little unpolished socially. He attracted new friends who were considerate of his strong individualism.

The conservative views that Lindbergh later articulated, the remarks about Jews that proved so startling when he was opposing American entry into World War II, his adverse opinion of the Soviet Union, his belief in Western civilization—these were all a reflection of a worldview prevalent among his friends, many rich and conservative. An engineer and aviator of genius, he was, however, not an intellectual, nor a consistent reader, nor a social analyst.

Lindbergh did not regard himself as an anti-Semite. Indeed, he was shocked a couple of years ago when this writer put the question to him. “Good God, no,” he responded, citing his fondness for Jews he had known or dealt with. Nor did he condone the Nazi treatment of German Jews. On the other hand, he accepted as fact that American Jewish groups were among those promoting United States involvement in World War II.

He voiced these views in a speech in Des Moines, Iowa, on Sept. 11, 1941. He said of the Jewish people: “Their greatest danger to this country lies in their large ownership and influence in our motion pictures, our press, our radio and our government.”

The speech evoked a nationwide outcry. Lindbergh never withdrew his remarks, which he considered statements of “obvious fact.”

He also declined to repudiate the award to him of the Service Cross of the German Eagle, bestowed in 1938 by Hermann Goering, the Nazi leader, “at the direction” of Hitler. The medal plagued his reputation for the rest of his life.

Lindbergh’s life, like his personality, was full of shadows and enigmas. Born Feb. 4, 1902, he was the son of C. A. Lindbergh, a prosperous Little Falls, Minn., lawyer and land speculator, and his second wife, Evangeline Lodge Land. Charles Augustus Lindbergh Jr. lived in Little Falls with few interruptions until he was 18.

His interest in flying was sparked in 1908 or ’09, when, one day, he heard a buzzing in the sky and climbed onto the roof of his home to witness a frail biplane skimming through the clouds.

“Afterward, I remember lying in the grass and looking up at the clouds and thinking how much fun it would be to fly up there,” he recalled later.

In 1921, he motorcycled to the Nebraska Aircraft Corporation in Lincoln, which was then producing an airplane and giving flying lessons to promote the product.

Lindbergh took his first flight April 9, 1922, and barnstormed over the Midwest. He was billed as “Daredevil Lindbergh” for his stunt feats.

However, he did not solo until April, 1923, when he purchased his first plane, a Jenny. Shortly afterward, he began to take up passengers in various towns at $5 a ride. He then attended an Army flying school and spent some time as an air circus stunt flier before being hired in St. Louis as the chief pilot on a mail run to Chicago.

On a flight in September, 1926, he was musing about the possibilities of long-distance trips, and he “startled” himself by thinking “I could fly nonstop between New York and Paris.”