7.1 Preliminary Discussion

1. Scientific (evidence-based, observational-empirical, double-blind, case-control, the principle of falsifiability and hierarchy of evidence-based) model and method.
2. Philosophical model and method (each of which depends on vast supragroups and positions, as we evidenced several times in this analysis, including thought experiments in experimental philosophy).
3. Artistic model and method (from theories of perception to art movements and philosophies, to the “sheer enjoyment through the senses”, and to the social, political, sense/meaning-making, affirming-affirmative and activating-activist component of performance art).
4. Religious/spiritual/meditative model and method (with special reference to mysticism and mystical experiences, but also related to NDEs, OOBEs, alternate states of consciousness, neurotheology, etc.).

Criticism of traditional (albeit not necessarily traditionalist) religions often identified the perceived delusional-illusional aspects of belief and practice. In this view, revealed religion is an illusion, for its truth is always something to be represented, not conceptualized, not conceptually understood, because the content of religion is correct, but the form is wrong, and that is the perspective of Verene in his analysis of Hegel. This certainly defines the “problematic relation between illusion, representation, perception, conceptualization and revelation of metaphysical, spiritual, and religious, especially messianic and/or prophetic type such as premonitions, apocalyptic visions, clairvoyance etc., is at the center of the debate on the ontology and phenomenology of psychiatric events” (Tomasi 2016). Philosophical, psychological and psychiatric definitions of ‘manifestation’ and ‘epiphany’ are particularly relevant in analyzing the cognitive-rational perspective as the preferred method of investigation of such issues. For example, a rationalization/cognitivization of (the figure and/or imago of) Christ or the Spirit would not make sense according to Verene because of their nature-substance. In the attempt to unify the two elements and moments, the Father and the Son, problems appear on both strictly cognitive levels (because cognition necessarily has an object) as well as from the theological perspective, for instance in the filioque doctrine at the center of the Great Schism. The Holy/Whole Spirit vs. the Ghost (Geist) appears in time as substance, and unsuccessfully tries to unite the elements, which is the same as saying it tries to annihilate (reduce) time. Under this framework, Erinnerung is a denial of time but recollection. 
That is precisely why cognition is ‘less than absolute knowledge’, and our understanding can only move beyond the cognitive stage, through the previously analyzed ‘beautiful soul’ which is both the very intuition of the Divine, and the Divine’s intuition of itself, the self’s own act in contraposition to what was ‘content’ in religion. This is the reason beyond the divine nature of language (learned at the level of das Meinen, since the real cannot be said), of the poetry of the very poetic art of intuition, which ultimately leads to philosophy, to a communion with itself (Tomasi 2016). Now, all these discussions on communication, creativity and intuition are to be understood, in this context, as areas of investigation for neuroscience. More specifically, as this conclusion involves ‘Philosophy as basic approach toward Neuroscience’, we want to spend some time justifying why we should care at all about these ideas. With the ever increasing specialization and subdivision of science into multiple subspecialties, we can observe how very often this process happens not for a genuine interest in the best possible practices or form of scientific inquiry, but simply due to an ever increasing demand for added ‘labeling’ at the scientific level in academia. In other words, modern science, ever since post-enlightenment developments (as we have seen multiple times in this study), has increasingly become distant, even ‘foreign’ or ‘alien’ to disciplines such as natural philosophy. While it is understandable and to some extent justifiable, that, once a solid scientific paradigm, in terms of method and technology, has been found to be effective and accurate in the efforts of new discoveries, science will focus more and more on areas in which results can be found, these efforts are themselves part of (could read: ‘prisoners of’) the general goal of such paradigm. This has a direct impact on funding research studies, especially in the context of grants. 
If the amount of money is limited, and ‘everyone wants the money’, one of the best ways to access these funds is exactly this further subdivision of fields, a subdivision which promotes the idea that it is actually better to know a lot more about a very limited area of investigation (a view which can certainly be defended given the increasing complexity of science in all areas) and ‘not so much’ about the ‘general connections’ between fields. While we do agree that it is humanly very difficult, almost impossible, to be a specialist in one field and at the same time know ‘a lot about everything’ without losing focus on and expertise in that very field, we also see an increasing negative bias toward researchers who are actually trying to bridge these two perspectives. To give a quick example in the form of mere anecdotal evidence (the reader will not find any bibliographical reference in this case), we have observed, personally and professionally, that an expert in diverse fields of science can sometimes be viewed as someone like a ‘popular science author’ but (at times) with not enough knowledge of the complexity and multitude of details in any field. We observed a similar bias in the field of medicine, where terms/titles such as ‘general practitioner’, ‘family physician’ and ‘generalist doctor’ are at times ‘looked down upon’ by the (just as an example) “neurosurgeon-researcher who only specializes in the substantia nigra selectivity, with special reference to the pars compacta in relation to sleeping patterns observable in animal modeling”. Let us restate that we completely understand the incredible difficulty of mastering a lot of knowledge in such a vast area as modern science without sounding (and often truly being) ‘superficial’ or ‘general’, but we want to promote the idea of “Philosophy as basic approach toward Neuroscience” to at least try to be flexible in moving the focus back and forth from the smallest detail to the bigger picture.
Furthermore, as we also mentioned many times, an “expert is someone who experiences”, someone who has or makes an experience, certainly with the methods of science but also via relations with other scientists, and human beings in general, all the way to those experiences that need to be quantified/qualified as personal (at times even subjective), artistic, spiritual and even mystical. Once more, this is the very nature of consciousness as ‘shared knowledge’. If we do not ‘keep this mindset in mind’ the risk is that the aforementioned subdivision of fields will not be motivated by true scientific (and human) curiosity (broadly indeed to include genuine interest for fellow human beings, and the world in general), but by money. There can be in fact very dangerous consequences when science is used to foster not clinical research, but the monetary gain for pharmaceutical companies, not green energy solutions, but the oil industry, not universal wellbeing, but class-divided health coverage. To provide an example in this sense, when analyzing socioeconomic status, healthcare and medicine, education and literacy, science and technology, and equal distribution of wealth in the United States, for instance, this country does not score very high globally (Mujkanović 2016). However, although the general quality and affordability of the US healthcare and medicine in general is still far away from the standards of other countries (especially other American countries like Canada and European countries like Austria, Denmark, Italy, the Netherlands and Norway), the United States were certainly on the right path, and the implementation of the Patient Protection and Affordable Care Act (PPACA) and the Health Care and Education Reconciliation Act have been successful in achieving the goal of quality, affordable healthcare and reduction of uninsured rate for all citizens of or residents in the United States, until the change of powers in policy making. 
In this regard, we can certainly state that not everything was perfect with ‘Obamacare’. First of all, many opponents felt that the centralization of powers in the hands of the Washington federal government might take away some of the liberties and abilities of single states to provide good (health) care to their citizens. Of note, the same fear is still found throughout Europe when discussing the centralizing power of the European Union in terms of financial structuration of society, which many perceive as globalizing in the sense of increasing the privileges of the banks, of corporations over people and of economy over morality. In any case, the vast majority of European countries, EU-members or not, were able to keep a relatively free healthcare system, with paid maternity leave, and very inexpensive higher education tuition rates, especially in the fields of medicine and medical science, again relative to the US-based higher education system.

7.2 The Triple-S Model: Self, Soul, Spirit

7.2.1 Critical Neuroscience

(a) What our mind and soul (both of which, at this level do not make sense at all, anymore) really are (reality=observation=description, following the same schemata).
(b) What (in the absence of a self, intended as connected to mind and soul) our brain should do.

(a) Individual and/or subjectively understood
(b) Transcendental and/or internally felt
(c) Metaphysical and/or divinely inspired

Moral neuroscience is a subfield and a sub-theoretical framework which can be applied to many other fields in neuroscience and to science in general. To provide further explanation of the direct implication of a ‘moralizing neuroscience’ in those areas where medicine intersects and interacts with ethics, morality and end-of-life decisions, we would like to briefly discuss the application of such ethical aspects as found in acute care settings. Medical science, including those areas covered by neuroscience and neurology, is structured on a vast array of clinical, theoretical and ethical standpoints, upon which the combination of medical, patient-centered theory and practice of care is based. In this context, the analysis of advance directives is a fundamental cornerstone of critical neuroscience, because (in research, academia and healthcare) we need to understand those parameters which can guarantee the patient’s informed consent from both the quantitative perspective (patient’s capacity for autonomy), and the qualitative perspective (the patient’s ability for autonomy and voluntariness). These aspects are always present in clinical efforts at every stage of care, from the initial treatment to palliative care. More specifically, end-of-life decisions should be made once patient autonomy is assessed, thus determining a series of considerations including the broader conceptual umbrella of good and evil categories, particularly good life versus good death. In this regard, Schicktanz and Schweda (2009) help us understand the planning of advance directives in juxtaposition and comparison with the way they are interpreted by a third party. Critical neuroscience faces bioethical demands by addressing questions from a multilayered, multifaceted and multicultural perspective. In this context, the focus on diversity and universality is key to determine a structured process which fosters a better understanding of the patient in his/her individuality.
In fact, advance directives indicate a document addressing the specific needs and preferences of care in the partial or total absence of ability and/or capability in health-related decision making, including (self) care, due to illness or incapacity. To be sure, these decision-making abilities are influenced by an interplay between personal and cultural identity (Schicktanz 2009). Certainly, the underpinning ethical considerations are part of the broader theoretical debate in philosophy, most particularly in the philosophy of medicine. Johnson (2009) refers to universalism versus casuistry in addressing the problems arising by considering ethical questions under the lens of the individual case/patient and the context/case-situation/environment, including universalization and generalization (as in the perspectives of Immanuel Kant vs. situation ethics). Furthermore, this analysis helps reframe the problem of the continuity of self also in terms of our connection with the sensory apparatus (Fig. 7.1), which is central to our efforts, as researchers and healthcare providers in general, to better address the needs of each patient, especially when a full understanding, diachronically intended, is compromised due to issues related to the ever changing medical situation. It is certainly the case of degenerative disorders, including comorbidity of causes and effectors, such as neurological disorders and mental health disorders in general, for instance in patients with Alzheimer’s disease or specific psychiatric diagnosis (schizophrenia, schizoaffective disorder, etc.). The conceptual issues regarding autonomy, consent and personhood are addressed by Coleman (2013) when discussing yet another philosophical concept, the harm principle by John Stuart Mill. In fact, utilitarian principles are in place in analyzing physicians’ attitudes toward clinical decisions within advance directives. 
This perspective is certainly a core issue in the patient-provider, especially patient–physician relationship. Scientists and clinicians can and should be able to address this issue by fostering open, positive and competent communication with and between patient and physician. Generations of anthropologists and moral psychologists before had gathered evidence on the development, cognitive-emotional mechanisms, and cultural diversity of morality, but suddenly in 2001 with the publication of the first neuroimaging experiments the situation seemed to have changed. It seems fair to say that of the seven different psychological-neuroscientific theoretical accounts of morality distinguished by Jorge Moll et al., all the evidence gathered hitherto does not unequivocally favor any particular one (Moll et al., 2005). While the science communication accompanying the original study by Greene et al. suggested the philosophical relevance of the research, even putting forward the idea that the new findings could make moral philosophers superfluous (Helmuth, 2001), so far the opposite has been the case: theoreticians of all kinds responded to the prescriptive/normative claims and emphasized how these neuroscientific reports rely on theoretical presumptions and individual interpretation. While theoretical in its scope, moral neuroscience is used to provide the ultimate answers of human right and wrong that Sperry and Gazzaniga called for. More applied/technical implications are promised by the complementary research that might be coined “immoral neuroscience”: the investigation of what makes us behave immorally or criminally.

The concept of continuity of self and the interaction of the individual-subject with the external world of stimuli via sensory perception. As is well known, the olfactory system has the particular feature of almost completely bypassing the thalamic analysis (decoding, amplifying and transmitting) in its paths toward cortical areas.

This is another essential aspect of this study, that is, the promotion of appropriate ideas—thus, appropriate for the target population, for example, our patients—for the amelioration of care. Let us restate this principle once more. To provide better care we need to (a) provide the patient with better tools to foster self-care in the healing process, (b) identify those tools as parts of the mind–body connection, in terms of strategies the patient can think of and use for his/her wellbeing and (c) define the terms ‘mind’ and ‘body’, via the thorough analysis of the mind–body problem and the justification-proof of the existence of these two terms, their interaction, and its modalities.

Beneficence—What is in the best interest for the patient while focusing on safety in healthcare environments.

Justice and Fidelity—Fair access to quality healthcare with a focus on trust and loyalty to the patient.

Self-determination and Autonomy—Ethical obligation to patients; What measures to take on the patient’s behalf.

Full disclosure and Veracity—Full disclosure of truthful information allowing the patient to make an informed decision.

Informed consent—A voluntary choice to accept or refuse treatment.

Privacy and Confidentiality—Exercising trust in health care professionals to disclose private information.

To be able to meet her/his full potential (self-actualization).

To feel competent, with strong self-esteem, self-perception and self-worth (esteem).

To feel psychologically and professionally supported in the system (love and belongingness).

Better understand their own needs, values, role and identity as they apply in a healthcare system, and better relate to the patient’s own needs by clinically addressing them.

Be more prepared and ready to check all the clinical tasks required as part of their clinical interventions, and verify their self-actualization process while performing those tasks.

Be clinically and ethically more competent in addressing issues such as clinical decision-making and advocating for patients’ actions in the case of illness or impaired capacity and autonomy.

Be legally responsible for the application of specific ethical guidelines in advance care decision making (e.g. end-of-life and palliative care).

Be supported by healthcare and higher educational system in which the role and scope of nursing will be better understood and respected by other healthcare providers and team members.

7.2.2 Neurolinguistics

Anatomical view of the ear in relation to auditory transmission (signaling in blue). Highlighted we can observe the ear canal and the eardrum or tympanic membrane (pink), the labyrinth (orange), the middle ear space (purple) and the cochlea (blue)

The philosophical discussion at the center of the aforementioned considerations also provides the framework for the semantic analysis of meaning vs. value judgment, especially in relation to the development of morality, as well as the theoretical basis for mismatch design, subtraction paradigm and violation-based studies. Charles Leslie Stevenson examined the cognitive use of language, thereby presenting the perspective of human self-knowledge through communication with other human beings in a specific context. The first pattern analysis focuses on the two parts of ethical statements, the speaker’s declaration, that is, the declaration of the speaker’s attitude and an imperative to follow it in a specular way: to mimic, copy and mirror it. The intersection of philosophy and neurolinguistics thus helps us frame the way human beings shape their meaning and significance, whether to or through self-reflection or external factors. In the case of Stevenson, the translation of an ethical sentence remains a non-cognitive one, but it raises existential(ist) questions on personal responsibility and action. Since imperatives cannot be proven, they can be supported, and the purpose of this process is to make the listener understand the consequences of the action they are being commanded to do.

Furthermore, cognitive-attentive/attentional task monitoring studies originate from this semantic analysis design, including the probe verification in which a series of sentences are analyzed by presenting the subjects with a ‘probe word’ following each statement. In this type of experimental study, the subject then has to identify the presence of such words in the previously administered sentence. From this perspective, this experimentation is akin to acceptability judgment task-based research studies, and other types of research areas—for instance lexical decision tasks used in priming studies, or research on grammatical acceptability or semantic acceptability—shared by linguistics in general, including lexicology and morphology (the study of the relationships between related words, their independent and codependent structure, their formation and storage/accumulation and access/accessibility, thus related to recall and memory-based processes and LTP), semantics (concerned with encoded meaning, value and significance), syntax (concerned with combinatory patterns), phonetics and phonology (the study of speech sounds and—in neurolinguistics and psycholinguistics—their neural and psychological underpinnings, including the ability of separating language-based sounds from background noise), pragmatics (analyzing the context, and its role and conceptual weight in the interpretation of meaning, also in relation to sociocultural conventions at the base, for instance, of orthography).

Of course, in each of these areas of neurolinguistics, the ‘neuro’ element has to be experimentally understood with the help of evidence-based studies based on a series of tasks. The responses, reactions and/or outcomes following the tasks can then be analyzed both theoretically and with the support of technologies in neuroimaging and hemodynamic techniques such as functional near-infrared spectroscopy (fNIRS), or diffusion tensor imaging (DTI), especially useful to track neural connections between brain areas while the task is performed, and others. This type of research in neurolinguistics also monitors quantitative data in relation to (reaction/decision/acquisition) time and the ordering/processing of linguistic patterns by the subject. More in detail, if we observe (via electrophysiological techniques such as EEG) a certain pattern of neural activity following a specific task, we can identify (again, we are still within the same theoretical framework) discrete values of brain response, on a computational level. In this context, we refer to the common neural responses reflecting semantic and syntactic processing (event-related potentials or ERPs): ELAN, N400 and P600. A less passive-observational and more active-stimulatory approach comes from other techniques such as transcranial magnetic stimulation and direct cortical stimulation. Furthermore, in neurolinguistics, we also find the usage of outliers to the standards for this type of investigation. More in detail, observing grammar-syntax anomalies in the order, disposition and conceptual structure of words within sentences provides evidential relations for, as an example, the N400 effect or the P600 response. Studies by Embick, Hillyard, Kutas and Osterhout were able to shed new light on the neural processes and their location in neuroanatomical and functional terms behind these language-use anomalies.
These studies also provide important information on the processes behind language acquisition, more specifically around the various stages of linguistic development in connection to neural development (including ‘babbling’ stages), in relation to the acquisition of multiple languages and the interactions between them, also in terms of crossing-violation. The use of neuroimaging techniques in this context allows for a deeper comparison between language processing and specific area neural activation at baseline (for instance, in experimental observation of subjects reading complex vs. basic sentences), to monitor not only area-specific developmental stages, but also to incorporate a broader analysis on neuroplasticity and increase in gray and white matter. The so-called subtraction paradigm focuses exactly on such comparison. Electrophysiological techniques and electrocorticography have also been used in the study of language processing, but they are generally limited, due to the nature of these techniques, to the analysis of the mechanisms at the basis of these processes and their time-sequence (often implemented with studies on event-related potentials, which provide detailed information on amplitude, latency and broad scalp/cortical topography) rather than on their exact location.
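To make the logic of the subtraction paradigm more concrete, we offer a minimal sketch in Python. It is only an illustration under stated assumptions: the trial values are hand-made (not recorded data), the function names are hypothetical, and the 300 to 500 ms window is simply a convenient choice around the latency usually associated with the N400. The sketch averages the trials of each condition, subtracts the ‘basic sentence’ average from the ‘complex sentence’ average, and reads the mean amplitude of the difference within the chosen window.

```python
from statistics import mean


def average_epochs(epochs):
    """Average equal-length trials sample by sample (an ERP-style average)."""
    return [mean(samples) for samples in zip(*epochs)]


def subtract(condition, baseline):
    """Subtraction paradigm: task response minus control response, per sample."""
    return [c - b for c, b in zip(condition, baseline)]


def mean_amplitude(wave, times_ms, start_ms, end_ms):
    """Mean amplitude of a waveform inside a latency window (bounds inclusive)."""
    window = [v for v, t in zip(wave, times_ms) if start_ms <= t <= end_ms]
    return mean(window)


# Hypothetical, hand-made values (microvolts) at five latencies, two trials each.
times_ms = [0, 200, 400, 600, 800]
complex_trials = [[0.0, -1.0, -5.0, 1.0, 0.0], [0.0, -1.0, -3.0, 3.0, 0.0]]
basic_trials = [[0.0, -1.0, -1.0, 1.0, 0.0], [0.0, -1.0, -1.0, 1.0, 0.0]]

# Difference wave: what remains once the control condition is subtracted.
difference = subtract(average_epochs(complex_trials), average_epochs(basic_trials))

# Mean amplitude of the difference in an assumed N400-like window.
n400_like = mean_amplitude(difference, times_ms, 300, 500)
print(difference, n400_like)
```

In this toy case the difference is negative around 400 ms and slightly positive around 600 ms. Nothing here locates the effect anatomically, which is consistent with the limitation of electrophysiological techniques noted above.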

Certainly, when combining observation-type and experimental technology-based research with quantitative vs. qualitative theoretical, statistical/epidemiological analysis, we need to avoid, as much as possible, any type of subjective bias, or attempt to isolate this component to monitor possible contributors or distractors to the processes involving a final result. In fact, some researchers will use an artificial, experimental ‘distractor’ to (a) better investigate working memory in language processing and (b) avoid bias originating from the subjects (over) focusing on (in other words, orienting more attention to) the experiment itself and its stimuli. The distraction in this context might come from multiple and non-related stimuli to elicit a mismatch negativity response or MMN, or by asking the subject to engage in multiple tasks at once, as is the case in the double-task experiment.

7.2.3 Neuroheuristics or Neuristics

A strong philosophical approach is presented in the heuristic examination of neural underpinnings. Such an approach is embraced by the field of neuroheuristics, also called neuristics. Therefore, research in this area analyzes the scientific information on neural activity from within, adopting a problem-solving framework including complexity, non-reducibility, deduction-induction-intuition-based debate and (abstract vs. extract) philosophical speculation, especially in relation to the cognitive examination of decision-making procedures. Neuroheuristics combines many transdisciplinary approaches in both ‘hard’ and ‘soft’ sciences; however, the philosophical baseline of such investigation is non-binary in an experimental sense. More specifically, neuroheuristics utilizes the fundamental data and information pieces gathered by neurosciences, neurobiology in particular, to follow a ‘bottom-up’ process to have a deeper understanding of the structure and function of neural areas, especially in the CNS. However, a challenge to this approach is represented by the difficult task of monitoring (and avoiding bias at the same time vs. simultaneously) multiple neuroanatomical areas and activities and relating them to internal/external variables. To compensate for this experimental difficulty, neuroheuristics also relies on theoretical frameworks such as double heuristics, quantum physics debate and black box theory, the latter in particular regard to the understanding of essence vs. existence in terms of (just vs. only) functionality. In fact, the term ‘black box’ is somewhat akin to the concept of ‘camera obscura’ although in this context the narrowing focal process is not mirroring, (re)representing, (re)coding or portraying an image, but is aware of the absence of knowledge of its internal functions, processes and workings; it is ‘aware of being unaware’.
This black box could thus represent the computational or at least quantitative aspect of the (human) brain as a calculator, better understood via algorithms and equations.

Ens Astrorum or Ens Astrale, representing the influx and influence of the stars

Ens Veneni, through the poison absorbed, inhaled by the body

Ens Naturale, or the natural predisposition and constitution

Ens Spirituale, representing the influx and influence of the spirit

Ens Dei, defined as influx and influence of God

7.2.4 Neuroeconomics

What we have discussed so far in relation to cognitive and computational decision-making analysis is used by neuroeconomics to further investigate the relationship between neural activity and behavior involved in the creation-production, distribution-sharing-selling and use-usage-consumption of (primarily material) goods and services. More specifically, behavior is interpreted from the perspective of single vs. multiple decisions, or a single (less-least or more-most) better/worse choice among many options. Given this premise, neuroeconomics is fully part of a much broader philosophical debate, especially in regard to (hard/soft) determinism vs. indeterminism and compatibilism vs. incompatibilism in the free-will debate. However, in mainstream modern neuroeconomics, the main assumptions follow the ones of contemporary economics, especially in regard to expected utility, utility maximization (as in Bernoulli), the logical base of informed and rational agent-based decisions, and standardized/single-currency/system models on overall utility value. A heuristics-based criticism of mainstream economics applies even more strongly to neuroeconomics, given the assumption of the validity of some animal modeling-based investigation of decision-making processes at a neural level. A similar criticism is found in the definition of risk and avoidable or unwanted outcomes, and on whether firing rates of individual neurons can be understood under the lens of ‘better choice’ or ‘decision to avoid’, as in the studies by Padoa-Schioppa and Assad on the orbitofrontal cortex of monkeys. As animals, especially humans, make decisions in a social environment, elements of social neuroscience and psychology or sociology are used in this field to account for the number of social effectors in each decision or series of choices.
Strong moral-ethical and even theological-eschatological elements of discussion are present, as universality of value and judgment, cooperation, prize/praise, retribution, punishment and altruism are the direct outcomes of such analysis. To provide an example, in the so-called prisoner’s dilemma by Flood and Dresher (1951) the concept of trust plays a fundamental role, in that it determines the level of cooperation between/among individuals. On the level of neuroeconomics, the increased outcome in terms of spread/shared benefit within social cooperation is compared with individual/single gain and it is modulated by the presence of the hormone oxytocin and the activation of the reward pathway in the CNS, most specifically the ventral striatum (as well as the tegmental area) in the brain. Therefore, the theoretical assumptions in neuroeconomics are tested using multiple Neuroimaging technologies. These studies rely on the analysis of blood-oxygenation levels as well as on the presence, absence or increase/decrease levels of specific, task-related chemicals during activation-action-function and at baseline, often by comparing any such activity with a control activity or comparing average subjects with subjects affected by neurocognitive damage, especially in the case of behavioral and emotional-related areas such as the limbic system, and especially the amygdala (which appears to play a very important role in loss aversion studies). 
Aside from the effects on trust and risk perception of oxytocin, we will mention serotonin in relation to intertemporal choice (the expected utility assigned by human subjects to events occurring at different times, as opposed to the assumed constancy-consistency of choice found in discounted utility), the presence of dopamine and increased activation of the dopamine reward pathway (especially the nucleus accumbens), as well as the BA8 area of the frontomedian cortex, the frontoparietal cortex and the mesial prefrontal cortex for difficult decision-making processes involving uncertainty. The latter is at the center of investigations regarding normal and abnormal (not necessarily in psychological-psychiatric terms) behavior, as with the generalized tendency to overweight small probabilities and underweight large ones in terms of showing risk-seeking behaviors, as evidenced by the studies by Tversky and Kahneman (1981). Moreover, when the balance between sheer uncertainty and risk appears to show ‘heaviness’ on the latter (as in gaming or gambling), we notice an increased activation of the insular cortex.
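The tendency to overweight small probabilities and underweight large ones can be illustrated with a short numerical sketch. As an assumption on our part (the form is not given in the text above), we use the one-parameter inverse-S weighting function commonly associated with Tversky and Kahneman’s later work on prospect theory, w(p) = p^γ / (p^γ + (1 − p)^γ)^(1/γ), with γ = 0.61 as an illustrative value; the function names and the example gamble are hypothetical.

```python
def weight(p, gamma=0.61):
    """Inverse-S probability weighting (assumed form); gamma = 1 returns p itself."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    num = p ** gamma
    return num / (num + (1.0 - p) ** gamma) ** (1.0 / gamma)


def expected_value(p, payoff):
    """Objective expected value of a gamble paying `payoff` with probability p."""
    return p * payoff


def weighted_value(p, payoff, gamma=0.61):
    """Same gamble, but with the probability replaced by its decision weight."""
    return weight(p, gamma) * payoff


# Hypothetical gamble: a 1% chance of winning 100 versus a sure gain of 1.
sure_gain = 1.0
long_shot = (0.01, 100.0)

# Both options have the same objective expected value, so a purely
# 'rational' agent in the expected-value sense would be indifferent.
objective = expected_value(*long_shot)

# With weighted probabilities the long shot looks better than the sure gain,
# which is one way to read risk-seeking behavior for small probabilities.
subjective = weighted_value(*long_shot)
print(objective, subjective, subjective > sure_gain)
```

For simplicity the sketch treats utility as linear in the payoff; a concave utility function (as in Bernoulli) could be substituted without changing the structure of the comparison.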

Finally, another important element in neuroeconomics is shared with psychology, especially in regard to the studies conducted by Bandura and Mischel on cognition, decision making and the connection (which is choice) between immediate and delayed reward. In such a process, the neural underpinning appears to be the lateral prefrontal cortex, although with a ratio differential. More specifically, research studies suggest that the limbic system is (more) activated in the case of impulsive decisions, while the cortex is (more) activated in general aspects of the intertemporal decision process. In other words, the ratio of limbic to cortex activation decreases as a function of the amount of time (passed) until reward (obtained or perceived as such), and this would also explain the activation of other chemical components, especially hormones and neurotransmitters, as well as the production of cortisol and activation/deactivation of the stress response in individuals with drug addiction.
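The contrast between immediate and delayed reward can be sketched with two discounting rules. The hyperbolic form is not named in the text; it is brought in here as a common formalization of impulsive choice in behavioral economics, set against the exponential form of discounted utility. All amounts, delays and parameters (`delta`, `k`) are illustrative assumptions, and the function names are hypothetical.

```python
def exponential_value(amount, delay, delta=0.9):
    """Discounted utility: value shrinks by a constant factor per unit of delay."""
    return amount * delta ** delay


def hyperbolic_value(amount, delay, k=0.2):
    """Hyperbolic discounting: steep devaluation at short delays, flatter later."""
    return amount / (1.0 + k * delay)


def prefers_later(value_fn, sooner, later):
    """sooner and later are (amount, delay) pairs; True if the later one is valued more."""
    return value_fn(*later) > value_fn(*sooner)


if __name__ == "__main__":
    near = ((100, 0), (110, 1))    # 100 now vs. 110 after one more day
    far = ((100, 30), (110, 31))   # the same pair, pushed 30 days into the future
    for name, fn in (("exponential", exponential_value), ("hyperbolic", hyperbolic_value)):
        print(name, "| near choice prefers later:", prefers_later(fn, *near),
              "| far choice prefers later:", prefers_later(fn, *far))
```

Under these assumptions the exponential rule makes the same choice at both horizons. The hyperbolic rule picks the immediate reward when it is available now but switches to the larger reward when both options are distant, a preference reversal consistent with the impulsive pattern described above.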

7.2.5 Artificial Intelligence

A thorough and critical examination of neuroscience, especially in regard to the mind–body problem, cannot possibly avoid the debate over artificial intelligence, a tremendously important topic in contemporary scientific research. As we did in previous conversations, we will start with a definition of terminologies. First of all, debating artificial intelligence means being aware of a very particular comparison within the dichotomy/distinction (for now assumed to be valid in theoretical terms) between ‘artificial’ and ‘natural’. The first term is pretty straightforward, as it originates in the Lat. artificialis, with the meaning of ‘made with/by/out of art’ (also in an Aristotelian ‘techne’ model, thereby scientific) and thus opposed to/differentiated from nature. In this sense, ‘artificial’ also means “made, created, or produced by (human) beings rather than occurring naturally”. Whether other (types of) animals beyond the human species could account for artificial in this case is for now beyond the scope of this analysis. However, at least in English, the term was often used to define something first created as a copy or imitation of something natural. The second term, ‘intelligence’, is more difficult to define in this context, although the etymology does not present particular difficulties in translation. As a direct derivative of the Lat. intelligĕre, the concept involves a specific way of ‘reading between the lines’, thus including ‘comprehending’ or ‘perceiving’. Focusing for a moment on the first verb, if we follow the translation of ‘understanding’ in terms of “taking (each element, alone, and in combination and/or mutual interaction) together” we don’t see too many complications in relating the term to a computational activity.
This obviously implies that no specific value/structure/meaning is assigned to human intelligence in comparison to an artificial one, given that both deal with aspects such as collection, compilation, comparison, combination and calculation. Leaving aside for now philosophical considerations on utilitarian and mechanistic aspects of such activity, artificial intelligence, such as that provided not only by the most advanced computer systems but also by simple electronic calculators, appears in this area faster and more precise (in other terms, better) than the intelligence of human beings. With some elements possibly shared by both comprehension and perception, such as capacity for logic, learning (also, but not limited to, in a psychological-behavioral sense), planning, creativity and problem solving, the main problem in identifying perception has to do with the connections with ‘the self’, as in self-perception, self-image and self-awareness, all the way to multiple types (which is in itself an assumption, albeit with strong scientific evidence, for instance in the differentiation of cerebral areas and functions) of knowledge, wisdom and (deeper? broader?) understanding, also in the translational sense of ‘foundation’ or ‘principle’, thus connecting with ‘inclination’ (as a translation for the German Neigung), motivation and orientation. Moreover, even from a strictly scientific point of view, a universal and universally accepted definition of intelligence is far from established. Certainly, throughout history there have been multiple attempts to provide an accurate definition. The Board of Scientific Affairs of the American Psychological Association offered the following definition of the term:

Individuals differ from one another in their ability to understand complex ideas, to adapt effectively to the environment, to learn from experience, to engage in various forms of reasoning, to overcome obstacles by taking thought. Although these individual differences can be substantial, they are never entirely consistent: a given person’s intellectual performance will vary on different occasions, in different domains, as judged by different criteria. Concepts of “intelligence” are attempts to clarify and organize this complex set of phenomena. Although considerable clarity has been achieved in some areas, no such conceptualization has yet answered all the important questions, and none commands universal assent. Indeed, when two dozen prominent theorists were recently asked to define intelligence, they gave two dozen, somewhat different, definitions.3

Within the realm of human intelligence, there are also certain parameters which are used to better define the term, including the volume, speed, acceleration (frequency, velocity) and/or structure of the working memory, the capacity for sequential activation and activity forecast, the hierarchy system of information-processing neural activities, including neurogenesis and axonal structuration, and the more complex (philosophically speaking, at least) activities of biographical memory and consciousness. In the United States, a popular definition of intelligence is the one offered in 1994 in Mainstream Science on Intelligence:

[Intelligence is] A very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience. It is not merely book learning, a narrow academic skill, or test-taking smarts. Rather, it reflects a broader and deeper capability for comprehending our surroundings – “catching on”, “making sense” of things, or “figuring out” what to do.4

Among the very interesting skills that human intelligence provides, and that are therefore at the center of the development of artificial intelligence technologies, we find pattern recognition (for instance, the ability to recognize facial expressions on one side and familiar faces on the other), communication, including language understanding and speech production, and concept/idea formation. To be more precise, given current scientific knowledge (at least until new technological advancements reach the same levels and possibly surpass them), much of human intelligence is shared by other animals as well, especially mammals. On a theoretical level, human intelligence can be understood within specific frameworks, for instance the ‘theory of multiple intelligences’ by Gardner, which in turn refers to eight main abilities as bases for related ‘intelligences’: linguistic, logical-mathematical, spatial, musical, bodily-kinesthetic, interpersonal, intrapersonal and naturalistic.5 Other widely known theories include Bandura’s theory of self-efficacy and cognition (with further developments by Walter Mischel), the Intelligence Compensation Theory or ICT by Wood and Englert, the Investment theory based on the Cattell–Horn–Carroll theory, the so-called LI effect or Latent inhibition by Lubow and Moore, the Parieto-frontal integration theory of intelligence, Piaget’s theory and Neo-Piagetian theories, the PASS theory of intelligence rooted in the work of Luria, the Process, Personality, Intelligence & Knowledge theory or PPIK, and the Triarchic theory of intelligence by Sternberg. Aside from the specific content and differences between theories, for the perspective of our analysis we are interested in defining, when and if possible, the main components that could separate, in qualitative and/or quantitative terms, artificial intelligence from human (or more generally, animal) intelligence.
This could also mean ‘simply’ characterizing those differences as stages or levels, although this decision would rest on ascribed assumptions and values/goals that are possibly artificial in themselves, thus drawing a connection, once again, between intelligence, knowledge and awareness/consciousness, also in neurobiological terms (Fig. 7.3). For instance, we could quantify intelligence a (Ia) as ‘less complex’ or ‘slower’ than intelligence b (Ib). Then we would also decide (observe?) whether these intrinsic qualities are essential or existential, in the sense of result or (by)product of (external/internal) environmental factors, to determine whether the outcomes are ‘produced’ or are ‘defining’ the ‘being a’ as distinct from (the)6 ‘being b’.

View of the Reticular Activating System (RAS), with thalamus and corpus subthalamicus, substantia nigra, medial and lateral lemniscus (including the nucleus and the decussation), decussation of the superior peduncle, reticular formation, the internal arcuate fibers, and the olive. The RAS plays a very special role in the processes involved in alertness, arousal, attention, consciousness and habituation.

7.2.6 Sense, Purpose, Meaning

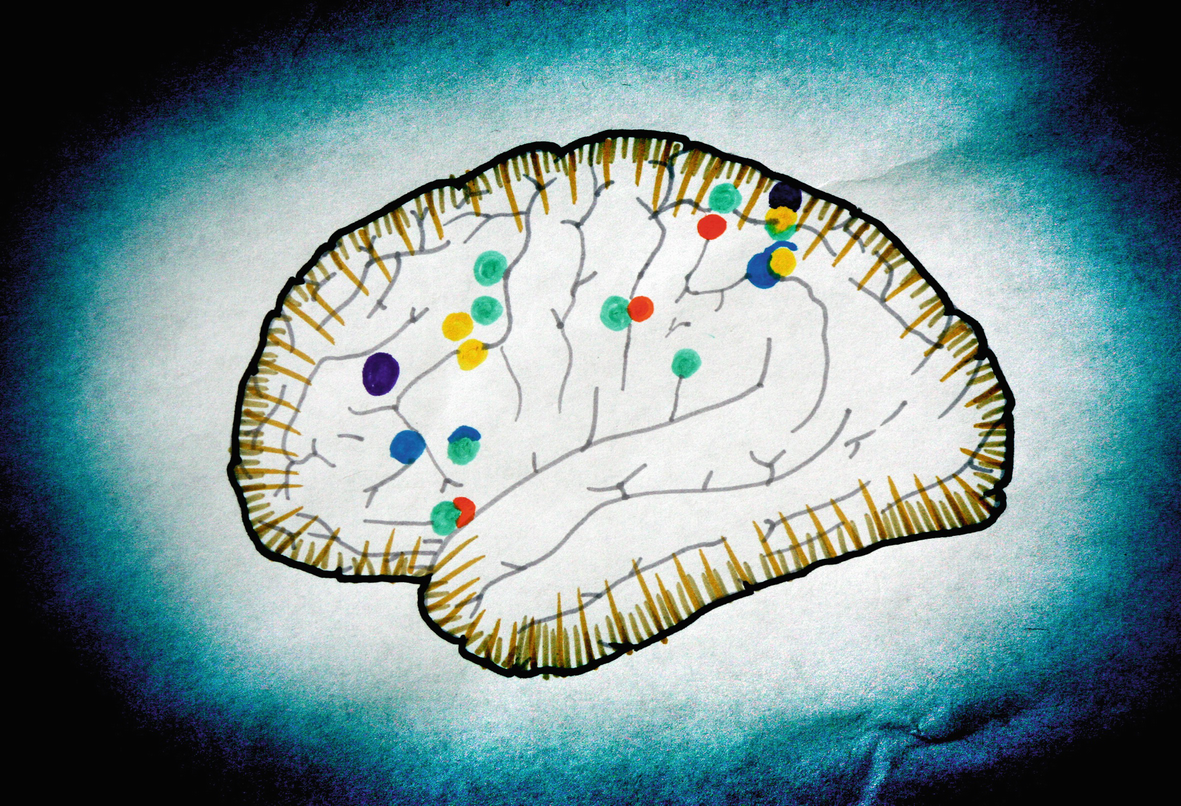

Rendering of the comparative analysis of neural correlates of consciousness in humans according to the meta-analysis by Rees et al. (2002), showing localized areas (in red), Lumer et al. (1998, in aquamarine), Kleinschmidt et al. (1998, in light blue), Portas et al. (2000, in yellow) and Beck et al. (2001, in dark blue)

- (a)

Touching with the eye, reaching with the eye and creating with the eye (based on the Sansc. Root *op as in the Gr. ὄψις, thus [ap]perception, grasping, seeing)

- (b)

Touching with the mind, reaching with the mind and creating with the mind (based on the Sansc. Root *ap as found in Opus/Opera)

How can we then quantify these subjective experiences and judgments as universally valid, when faced with the difficult task of creating a series of guidelines to serve more noble (once this hierarchy of ethical nobility is in place, which in itself warrants further discussion) goals, to protect and serve the general population, or humanity as a whole? A good example to remember in this context is Stanley Milgram’s famous experiment, also referred to as the ‘obedience experiment’, which began in July 1961 at Yale University. In this series of social-behavioral psychology experiments, Dr. Milgram was interested in answering questions on the role of authority and obedience in unethical, immoral and possibly dangerous and hostile behavior in the general population. More specifically, three types of individuals were involved in the study: the teacher, the learner and the experimenter. The subject of the study (always playing the role of the teacher, a role picked a priori without the subject’s knowledge) was required to administer a shock to the learner (an actor) for each wrong answer, in 15-volt increments, up to the maximum 450-volt shock three times in succession, after which the learner would cease to answer or reply in any way to the questions asked (Milgram 1974). The main controversy regarding the ethics of the experiment is related to the ‘inflicted insight’ suffered by the subjects-teachers, which is considered part of the more general methodology within ‘deceptive debriefing’, with potential future psychologically-emotionally harmful consequences (Levine 1988). In more detail, this criticism stems from the ‘Right to full disclosure’, according to which the researcher not only fully describes the specifics of the research to each participant/subject prior to the beginning of the experiment, but also grants the right to withdraw or refuse participation (Polit and Beck 2014, p. 84).

- (a)

Under pressure or coercion

- (b)

Under perceived or real authority

- (c)

If they are made to believe that they are doing the right thing (the combination of a & b)

In conclusion, and paradoxically,8 the ethically wrong procedure of Stanley Milgram’s experiment contributed to the realization of the possibility of ethical wrongdoing within and across perceived opposite sides. Besides considerations proper to ‘the banality of evil’ (Arendt 1963) and ‘the evil of banality’ (Minnich 2016), we should also remember that both (even) Sartre and Camus warned us against the risks of ‘bad philosophies’ which promote the full abandonment of reason/rationality/rational methods, as in the case of (from the existentialist judgment) Heidegger, Husserl, Jaspers, Shestov and especially Kierkegaard, who didn’t just abandon reason, but ‘turned himself to God’, and Husserl, who elevated reason, ultimately arriving at ubiquitous Platonic forms and an abstract god (Tomasi 2016). Under this analysis, embracing the absurd means acknowledging the contradiction between the desire of human reason and the unreasonable world. As is well known, Sartre defended (his) affirmation of the reality of every truth in a naturalistic manner, as the confrontation of human beings with the possibility of choice. Therefore, since man is “responsible for what he is and at the same time he is responsible for other human beings, he always chooses the better for himself and therefore for humanity”.9

The possibility of free will, free won’t and free choice then is to be understood in a higher sense of personal opinion, as discussed above. The ethical-structural-functional ‘goodness’ of the (human) brain as a universally-similar neural element which is both sensor and deliverer of physical and metaphysical experiences helps navigate such notions of good vs. evil. We mentioned that the ‘aboveness’ of ethics in research could start through the legal path of guidelines, rules and regulations, but we now have to admit that there is an ulterior ‘aboveness’ which involves a higher, more profound sphere. An element of transcendental existence, which many have attributed to a divine, spiritual, religious entity. An entity10 which communicates with us through us, more specifically through the complex apparatus of mind–body processes (in our analysis, especially neurological ones), to create (in this context, we agree with the poetic element therein) our own, true, both objective and subjective experience. A mind–body experience. A mind–body evidence. An experience which, to be completely understood, needs to be fully integrated. In conclusion, we hope that our re-examination of the mind–body problem provided experience and evidence, in ontological and practical (in the clinical sense) terms, for the fact that we, human beings, can be ‘out of tune’ with our self, and that this tuning activity, which is also a neurological mechanism, a psychological process and an existential need, can be supported by “(an) appropriate application of (an) appropriate philosophy” to shed light on our true nature and nurture.