Chapter 6

From old world to new

Historians, philosophers, theologians, popularizers, and Nobel Prize committees concerned with 20th-century physics tend to direct their attention to TOEs, and to the microphysics of cosmogony. The emphasis privileges the mind-expanding concepts of relativity theory, quantum physics, and the systematics of elementary particles. A secondary theme stresses the control of nature by physical theory as demonstrated by the release of nuclear energy. After this demonstration, great laboratories, national and international, employing machines as expensive as battleships, multiplied ‘elementary’ particles as well as high-energy physicists and the army of technicians, scanners, computer specialists, engineers, grant officers, bookkeepers, secretaries, and contractors on whom TOE quests now depend. The symbol and acme of this enterprise in the short American century is Fermilab (see Figure 23).

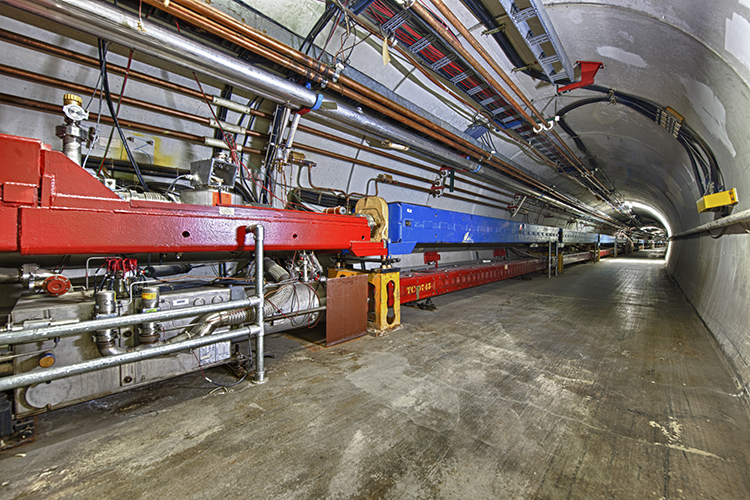

23. A small piece of big physics. A section of the Tevatron tunnel at Fermilab. Protons accelerated in an evacuated circular pipe four miles in circumference attained a velocity 99.99995 per cent that of light. The pipe resides within the lower line of boxed superconducting magnets. The upper line of magnets moved antiproton beams at room temperature.

Nevertheless, the romantic investigation of the smallest and the biggest things in the universe employs less than 20 per cent of today’s physicists if the divisional breakdown of the American Physical Society adequately represents the profession at large. The majority of physicists work on condensed matter, plasmas, computers, optics, lasers, polymers, fluid dynamics, materials, atomic, molecular, and nuclear problems, questions of military interest, and border subjects overlapping chemistry, Earth sciences, biology, medicine, and engineering. They also cultivate the latest sprigs of that old trunk of physica, meteorologica: climatology, plate tectonics, ionospherics, oceanography, and seismology.

Legacies of World War I

While drifting westward towards the US during the 1930s, the centre of gravity of physics experienced tugs from the Soviet Union and Japan. A few numbers will illustrate the vigour of this expansion. The figure of merit is a doubling each decade between 1920 and 1940. That happened in the US for the annual harvest of PhDs in physics (50 to 200) and physicists in industry (400 to 1,600). In Japan, PhDs doubled every seven years, memberships in the Physical and Mathematical Society of Japan every eleven years (reaching 1,100 in 1940), and research laboratories every twenty years (to 325). The rapid growth of physics in the Soviet Union may be indicated by an eight-fold increase in universities and colleges, a six-fold increase in scientific staff, and a twenty-fold increase in employees of the Academy of Sciences.

The feedback mechanism for this explosive growth, and for slower increases in European centres, was the discovery of the importance of physics for national defence, industrial development, and what Italian fascists and Japanese militarists called ‘autarky’. The Great War had shown that determination and technology could make a nation independent of others for products and raw ingredients. Thus it was with nitrogenous fertilizers in Germany and with pharmaceuticals, fine chemicals, electrical appliances, and optical glass in the Entente powers. Among the first research establishments set up in Japan and the Soviet Union were optical institutes charged with perfecting glass for military purposes. In the late 1920s, Italy followed suit with an institute for optics, one of the few successful state-supported high-tech research endeavours during Mussolini’s regime.

Two other industries virtually created during the war, aeronautics and radio, gave employment to physicists in all developed countries after it. Government and industrial laboratories for radio and telephony multiplied, perfecting vacuum tubes, receivers, relays, and transmitters, promoting miniaturization and developing sound recording and playback. Physicists so employed helped to engineer several simultaneous revolutions by providing hardware for large-scale research, radios for domestic leisure, and the loudspeaker for politicians and propagandists. Fascists and Nazis battened on the big lie, the mass rally, and the amplifier.

The science and rhetoric of World War I still confront us in weather reports, which record the vision of Vilhelm Bjerknes (Bergen) and his school of meteorologists. Cut off from meteorological information from the West during the war, they filled the hole with the theory of fronts between struggling air masses. They chose their terminology with an eye to trench warfare. Massive data about the upper atmosphere, collected to guide the flights of planes and shells, stood ready to test and extend their theories after the war.

In addition to its legacy of hardware and practically minded scientists, World War I confronted demobilizing physicists with discoveries made in sheltered niches during it. Einstein had pondered the curious fact that a body’s resistance to acceleration (its inertial mass) has the same measure as its response to gravity (its gravitational mass). It followed that all observers can consider themselves at rest in their reference frames if they attribute any acceleration they feel to gravity. This equivalence permitted Einstein to geometrize gravitation 300 years after Galileo had intuited the possibility. The trick was to use non-Euclidean geometry. A wheel spinning at relativistic velocities has a different geometry than when resting because the circumference suffers a Lorentz contraction and the radius does not. Owing to the equivalence of acceleration (in this case of circular motion) and gravity, Einstein inferred that massive gravitating bodies warp the space around them so as to capture other bodies. Depending on the velocity and direction with which a celestial object approaches the warp, it can be swallowed like an apple, acquired as a planet, or deflected as a comet. Just like a Cartesian solar vortex! Einstein completed his ‘general theory of relativity’ in November 1915, the year in which his friend and colleague Fritz Haber enriched the art of war with poison gas.

According to general relativity, light should swerve when passing through warped space. Einstein suggested testing the prediction by comparing the direction of stellar rays that grazed the Sun during a total solar eclipse with their direction six months earlier. In 1918, American astronomers tried to detect the expected shift. They failed. A year later an English expedition found it. The confirmation aroused immense public interest in a world eager to raise its gaze to the stars.

Bohr’s quantum theory of the atom prospered mightily during the war. In neutral Denmark, he and his Dutch student, Hendrik Kramers, developed the correspondence principle, and in neutral Sweden Manne Siegbahn (Stockholm) and his students made precision measurements of high-frequency spectra that gave access to the deep structure of atoms. These measurements exploited two capital prewar discoveries. In 1911–12, Max von Laue (Munich), William Lawrence Bragg (Cambridge), and William Henry Bragg (Leeds) showed that X-rays can be diffracted and reflected by crystals, which made possible X-ray spectroscopy and tipped the wave–particle duality towards waves; and in 1913–14 Henry Moseley (Manchester, then Oxford) turned the new spectroscopy on the elements, detecting high-frequency line spectra characteristic of the element that emitted them. Moseley found that the frequency of the strongest line from an element of atomic number Z obeyed the simple Balmer-like formula $\nu = K(Z-1)^2\,(1 - 1/2^2)$, where K is a constant. He interpreted the formula as a confirmation of the concept of atomic number and a signpost to the innermost region of the atom.
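Moseley’s formula can be turned into numbers with a few lines of Python. The sketch below assumes that K is the Rydberg frequency (about $3.29 \times 10^{15}$ Hz), the value used in textbook statements of the law; the function name and the choice of iron, cobalt, nickel, and copper are illustrative. It shows the feature Moseley exploited: the square root of the frequency rises by the same step from one element to the next, so the formula counts nuclear charge rather than atomic weight.

```python
import math

# Assumption: K is taken to be the Rydberg frequency R*c (about 3.29e15 Hz),
# as in standard textbook statements of Moseley's law.
RYDBERG_FREQUENCY_HZ = 3.2898e15
PLANCK_EV_S = 4.135667e-15  # Planck constant in eV*s, to convert frequency to photon energy

def moseley_k_alpha_frequency(z: int) -> float:
    """Frequency (Hz) of the strongest line for an element of atomic number z."""
    return RYDBERG_FREQUENCY_HZ * (z - 1) ** 2 * (1 - 1 / 2 ** 2)

for name, z in [("Fe", 26), ("Co", 27), ("Ni", 28), ("Cu", 29)]:
    nu = moseley_k_alpha_frequency(z)
    energy_kev = nu * PLANCK_EV_S / 1000
    # sqrt(nu) grows by a constant step per unit of Z
    print(f"{name} (Z={z}): nu = {nu:.3e} Hz, E = {energy_kev:.2f} keV, sqrt(nu) = {math.sqrt(nu):.4e}")
```

For copper the sketch gives a line near 8 keV, close to the measured value; the constant step in the last column is the regularity that let Moseley place cobalt before nickel.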

Arnold Sommerfeld (Munich), who was too old to fight and impractical in war work, developed Bohr’s theory by introducing additional quantum numbers. With them he accounted for X-ray and complex optical spectra. His systematic presentation of his postulates and results, Atombau und Spektrallinien (1919), gave demobilizing physicists the score of what he called the ‘atomic music of the spheres’. Sommerfeld’s music, though magnificent, was too formal for Bohr’s taste as it introduced quantum numbers and ad hoc limitations on electronic transitions without much physical justification. Bohr preferred his method of intuition and conjecture, which only he, following what Einstein admired as his ‘unfailing tact’, knew how to use.

The third fundamental wartime novelty in basic physics obtruded when Rutherford, snatching time from his defence work, inquired how close to the nucleus Coulomb’s law held. He found a few deviations of a peculiar kind in encounters between hard alpha rays and air molecules: instead of being repelled, the alpha disappeared and a faster, apparently lighter particle emerged. Rutherford supposed that he witnessed a game of marbles in which the alpha entered the nuclear precinct and knocked out a hydrogen nucleus (a ‘proton’), and conjectured that nuclei consisted of alpha particles, protons, and neutral particles each made up of a beta ray and a proton.

From electrons to stars

In the summer of 1922, Bohr gave inspiring, perplexing lectures at Göttingen in which he claimed that the correspondence principle determined how many elements fell into each row of the periodic table. He made do with two quantum numbers. Among his auditors was Sommerfeld’s prize student Wolfgang Pauli, who three years later assigned two more quantum numbers to each electron to derive the lengths of the rows of the periodic table, 2, 8, 18, 32. He required the ‘exclusion principle’ that no two atomic electrons can have the same values of all four quantum numbers. Its implication that antipathy reigned among electrons showed that some concepts of Greek physica remained useful; and its rapid acceptance measured how far atomic physicists had accustomed themselves to the irrationality of the microworld.

In the same year, 1925, another product of Bohr–Sommerfeld rearing, Werner Heisenberg, fresh from a stay with the strict mathematical theorist Max Born (Göttingen), found a calculus relating only observable features of spectral lines. The calculus presented frequencies and intensities in square arrays (‘matrices’) whose entries (the intensities) were located by quantum numbers defining possible electronic transitions. Heisenberg, his former fellow student Pascual Jordan, and their teacher the formalist Born quickly sophisticated Heisenberg’s insight so effectively that very few physicists could understand it. Fortunately for their self-esteem and sanity, Erwin Schrödinger (Zurich), a descendant of Boltzmann’s school, found another calculus without recourse to matrices. Rather, he picked up a hint from the doctoral thesis of Louis de Broglie (Paris), who used relativity theory idiosyncratically to associate a standing wave with an electron moving in a stationary state. Schrödinger devised an equation for this wave (subsequently ‘the ψ-wave’ or ‘wave function’) that depended on the electron’s energy. Calculators soon discovered that they could obtain the numbers in Heisenberg’s matrices by using Schrödinger’s waves.

The almost simultaneous discoveries of wave and matrix mechanics followed two different paths from the same demonstration: that an X-ray can strike an electron much as one billiard ball does another. This ‘Compton effect’ (1922, after Arthur Holly Compton, St Louis) stampeded physicists into taking seriously the concept of the light particle (‘photon’) that Einstein had suggested as a ‘heuristic hypothesis’ in 1905 to account for the photoelectric effect. De Broglie’s coupling of a wave to a particle accepted the photon, whereas Bohr, who had rejected light quanta, tried to elude Compton’s billiard-ball theory. His failed effort helped Heisenberg invent quantum mechanics. After experiments decided for Compton, Bohr gave equal weight to wave and particle properties. Bohr added the qualification that no realizable experiment can require a description employing these contradictory characteristics simultaneously (‘complementarity’, 1927).

Schrödinger tried to interpret the ψ-wave realistically as a measure of the electrical density at every point in the atom. He did not succeed. Physicists reluctantly accepted Born’s elucidation that $|\psi|^2$ at any place gives the probability of finding the entire electron there. Heisenberg then drew from the mathematical formalism the conclusion that the microphysicist can never do better than reckon the probable, that not even a Demiurge could determine the exact position and corresponding momentum of an electron (‘the uncertainty principle’, 1927). To Einstein and other senior physicists this conclusion was a derogation of duty. Several times Einstein constructed thought experiments that garnered more information than quantum mechanics allowed, and every time Bohr escaped by finding an inconsistency or omission in Einstein’s examples. The defeat of his last attempt, made with two colleagues in 1935, persuaded almost all physicists who still took an interest in the argument that quantum mechanics does give a complete though probabilistic atomic and molecular physics.

By 1935, the quantum mechanics of electrons and radiation rested on a relativistic theory invented by Paul Dirac (Cambridge, 1928). Its results had been as surprising and gratifying as the radio waves unexpectedly generated by Maxwell’s equations. Imposing the requirement of relativistic invariance made the electron’s wave function describe particles with a fourth degree of freedom and either positive or negative energy. Dirac interpreted the extra degree as an intrinsic (but non-mechanical) spin, thus rationalizing Pauli’s quantum numbers and the concept of electron spin proposed to improve spectral systematics by Samuel Goudsmit and George Uhlenbeck (Leyden) in 1925. The negative-energy solutions appeared to describe a particle with positive charge. One that fitted the description (the ‘positron’) quickly turned up in a context soon to be described. Meanwhile, several researchers, including J. J. Thomson’s son George Paget Thomson (Aberdeen), detected the ‘de Broglie wave’ of electrons. The subsequent award of a Nobel Prize to Thomson for showing that the electron is wave-like thus oddly complemented the prize his father had won for showing it to be particle-like.

Dirac’s relativistic theory supposed that a high-energy photon could create an electron–positron pair, which, by subsequent mutual annihilation, could make another photon. Incorporating this demiurgic idea, theorists developed quantum electrodynamics, an account of electrons and photons real and virtual that predicted values of many measurable parameters to exquisite accuracy once they had devised rules to remove the infinities that pestered their calculations. That did not happen until after World War II.

The meagre flux of high-energy alpha particles from natural radioactive sources did not score enough hits to explore nuclear architecture effectively. Several physicists proposed to increase the flux by accelerating beams of protons to the many hundreds of keV (1,000 electron volts) deemed necessary to jam one into a light nucleus. Here the subtleties of quantum mechanics, brought to Rutherford’s laboratory at Cambridge by the imaginative Russian physicist and humourist George Gamow, came to the aid of the marble shooters.

Gamow had noticed that the probabilistic ψ-calculus predicted that a proton could sometimes enter (‘tunnel into’) a nucleus with less energy than it would require if it behaved like a marble and the nucleus like a brick wall. Rutherford’s lieutenant, John Cockcroft, in collaboration with Ernest Walton and the major electrical manufacturer Metropolitan Vickers, exploited tunnelling to split lithium nuclei into alpha particles with 300 keV protons. By 1932 they had taught their apparatus to hold 700 kV and had disintegrated lithium in quantity.

Meanwhile, Ernest Lawrence (Berkeley) had devised a way to add energy in small steps to a spiralling charged particle so that the entire accelerating potential never had to be maintained on any part of the apparatus. The key to his ‘cyclotron’ is that at nonrelativistic speeds the frequency with which a charged particle circulates when confined by a magnetic field does not depend on its energy. Lawrence and his students placed two hollow D-shaped electrodes within a squat cylinder with a proton source at its centre, exhausted the space, applied an oscillating electric field across the gap between the ‘dees’, slathered on sealing wax, shimmed the magnet, and, at the end of 1932, had a tiny beam at 1 MeV (million eV) (see Figure 24). That same year James Chadwick (Cambridge) detected Rutherford’s nuclear neutral particle (‘neutron’). The simplest combination containing it, the deuteron, the nucleus of heavy hydrogen (‘deuterium’), was isolated, again in 1932, a wonder-filled year for physicists if for no one else at the depth of the Great Depression.

24. Patent on the cyclotron. The cyclotron principle (that the accelerated particles’ angular frequency, v/r = (e/m)H, is independent of their energy at nonrelativistic speeds) allows the spiral motion to take place entirely under a constant magnetic field H. As shown on the right, the electric and magnetic fields automatically focus the particle beam.
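The cyclotron principle can be checked numerically. This Python sketch works in SI units (a field in tesla rather than the caption’s H), treats a proton nonrelativistically, and uses a 1-tesla field chosen only for illustration; the function name is hypothetical. The orbit radius grows with energy while the revolution frequency, $qB/2\pi m$, stays fixed, which is why a single oscillator across the dees can keep pace with the particle all the way out.

```python
import math

# SI constants for a proton (CODATA values, rounded)
PROTON_CHARGE_C = 1.602176634e-19
PROTON_MASS_KG = 1.67262192e-27
JOULES_PER_MEV = 1.602176634e-13

def proton_orbit(kinetic_energy_mev: float, field_tesla: float) -> tuple[float, float]:
    """Return (orbit radius in m, revolution frequency in Hz), ignoring relativity."""
    energy_j = kinetic_energy_mev * JOULES_PER_MEV
    speed = math.sqrt(2 * energy_j / PROTON_MASS_KG)
    radius = PROTON_MASS_KG * speed / (PROTON_CHARGE_C * field_tesla)
    frequency = speed / (2 * math.pi * radius)  # algebraically equal to qB / (2*pi*m)
    return radius, frequency

# Hypothetical 1-tesla magnet; energies up to the 1 MeV mentioned in the text
for energy in (0.01, 0.1, 1.0):
    r, f = proton_orbit(energy, field_tesla=1.0)
    print(f"{energy:5.2f} MeV: radius {100 * r:6.2f} cm, frequency {f / 1e6:.3f} MHz")
```

The frequency column comes out the same (about 15.2 MHz) for every energy, while the radius grows as the square root of the energy. At higher energies the relativistic mass increase breaks this constancy, which is the limit the caption’s qualifier points to.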

As available energies increased, so did the number of elements transformed or disintegrated. The marble model persisted, however, until 1934, when Marie Curie’s daughter Irène and her husband Frédéric Joliot (Paris) observed a delay between absorption and emission. Soon nuclear physicists and chemists were making ‘activities’ throughout the periodic table by shooting protons, deuterons, alpha particles, and neutrons at every element they could procure. By 1939, the ever-enlarging Berkeley cyclotron was producing prodigious currents of 16 MeV deuterons. It often laboured around the clock to make radioisotopes with desirable properties as tracers (notably carbon-14) and therapeutic agents (phosphorus-32). The cyclotron was preeminently and, with its large attendant staff, capital costs, and operating expenses, symbolically American. Only eight existed outside the US at the outbreak of World War II. A man from Berkeley had to be summoned to get several of them started.

Just as the marble analogy blinded physicists to artificial activities, the expectation that the products of a transformed element would lie close to it in the periodic table blinded them to nuclear fission. Enrico Fermi and his group (Rome) saw and missed it in the course of irradiating uranium with neutrons. Lise Meitner and her chemist colleagues Otto Hahn and Fritz Strassmann (Berlin) persisted for years in identifying the products of uranium irradiation as ‘transuranic’. Eventually, the chemical evidence persuaded Meitner’s colleagues that the uranium nucleus could be split into two elements of moderate atomic weight. Meitner, then an émigrée in Siegbahn’s laboratory, and her nephew Otto Frisch, then working in Bohr’s institute, proposed the mechanism of fission, which Bohr clarified and broadcast to the world in 1939. Everyone knowledgeable realized that the neutrons released by fission in a large sample of uranium might provoke a chain reaction.

After the excitement of 1920, interest in Einstein’s stable, almost flat, closed universe flagged for a decade. However, his equations also allowed expanding, contracting, and oscillating universes. The first to treat expansion as the true state of affairs was Georges Lemaître, a Belgian priest who had studied at Cambridge and Harvard, where he learned about Edwin Hubble’s (Caltech) capital discovery that other ‘island universes’ (galaxies) are receding from ours with speeds proportional to their distances from us. In 1931, Lemaître proposed that the expansion spreading the galaxies began when the universe, encapsulated in a single atom, exploded.

Just after the explosion, everything was radiation and maybe electrons. Where then did Thales’ water come from? The question of the origin of the elements drew George Gamow, his Hungarian colleague Edward Teller (Washington, DC), and the world’s leading nuclear theorist Hans Bethe (Cornell), all immigrants to the US, into the expanding universe. Gamow supposed that stellar interiors provided the ovens that cooked protons into the elements and that his tunnelling mechanism kept down the temperature required. In 1938, Bethe succeeded in defining the nuclear syntheses that power the stars, which did not require or reach a temperature at which nuclei heavier than helium’s could be made. Gamow therefore relocated his ovens (unnecessarily, as it turned out) to the hotter regime of the early expanding universe.

In 1942, in the last of the Washington meetings organized by Gamow and Teller that had helped set the agenda of American theoretical physics in the 1930s, the participants agreed that ‘the elements originated in a process of explosive character, which took place “at the beginning of time” and resulted in the present expansion of the universe’. Thus the physics of the laboratory, where the energies required to add a nucleon to a nucleus could be measured, made contact with speculations about the state of the universe at grossly inaccessible times and places. At this point Gamow broke off speculating about the great explosion at the origin of time to help the US navy improve its gunnery in the here and now.

Hotness and coldness

The discovery of radioactivity breathed new life into the subject of atmospheric electricity created by Franklin’s theory of lightning. Radioactive substances near the Earth’s surface give off radiation that ionizes the lower atmosphere and keeps Earth from cooling at the rate Lord Kelvin had calculated. As expected, the ionizing radiation declined with height above the Earth’s surface. Consequently the discovery that at higher altitudes ionization increases bewildered Victor Hess (Vienna, 1912) and other daredevil physicists who measured radiation from balloons. Aristotle’s problem arose again: was the cause located beneath or above the Moon? Hess judged it to lie outside the solar system since it operated with equal strength day and night. After World War I, Millikan took up the question using war-surplus sounding balloons. In his opinion, incoming ‘cosmic rays’ were photons created in space during the amalgamation of four protons. Absorption measurements and calculations of the energy released (via $E = mc^2$) at first favoured Millikan’s fantasy that cosmic rays were the ‘birth cries’ of atoms. In 1929, Walther Bothe and Werner Kohlhörster (Berlin) found that most of the charged rays at sea level could penetrate 10 inches of gold and so could not be photons.
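The arithmetic behind Millikan’s ‘birth cries’ is a mass-defect calculation with $E = mc^2$. The Python sketch below is an illustration, not Millikan’s own computation: it assumes tabulated atomic masses of hydrogen-1 and helium-4 in unified atomic mass units and the conversion 1 u ≈ 931.494 MeV, and its function name is hypothetical.

```python
# Assumed tabulated atomic masses, in unified atomic mass units (u)
MASS_HYDROGEN_1_U = 1.00782503
MASS_HELIUM_4_U = 4.00260325
MEV_PER_U = 931.494  # energy equivalent of 1 u via E = mc^2

def four_proton_energy_mev() -> float:
    """Energy (MeV) released if four hydrogen atoms amalgamate into one helium-4 atom."""
    mass_defect_u = 4 * MASS_HYDROGEN_1_U - MASS_HELIUM_4_U
    return mass_defect_u * MEV_PER_U

print(f"Mass-defect energy: {four_proton_energy_mev():.1f} MeV")
```

The result, roughly 27 MeV, is the order of energy a single ‘birth cry’ photon would have to carry in this picture.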

The Earth itself provided the instrument needed to decide whether cosmic rays are charged particles. Bruno Rossi (Florence), Lemaître, and Manuel Vallarta (MIT), adapting Carl Størmer’s (Oslo) calculations of the paths of particles causing the aurora borealis, showed that the Earth’s magnetic field would bend the trajectories of incoming charged particles by an amount dependent on latitude. An international team led by Compton, deploying standardized ponderous electroscopes supplied by the Carnegie Institution’s Department of Terrestrial Magnetism, confirmed that the rays obeyed the calculations.

The latitude survey counted all the cosmic rays that penetrated its instruments. Bothe and Kohlhörster had followed the path of one and the same ray through their gold brick. They had considered only events in which Geiger counters placed above and below the brick fired together. Their method excited Rossi and his fellow student in Florence, Giuseppe Occhialini, to do better. Exploiting vacuum tubes available for radios, they detected counter coincidences electronically. Thus they discovered that a cosmic ray can sail through a metre or more of lead, implying energies of several GeV (1,000 MeV).
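The logic of a coincidence circuit is simple to state: record an event only when both counters fire within a short resolving time. The Python function below is a software analogue of that rule, not a model of Rossi’s vacuum-tube circuit; the function name, the pulse times, and the resolving time in the example are all hypothetical.

```python
from bisect import bisect_left

def count_coincidences(upper_pulses, lower_pulses, resolving_time):
    """Count upper-counter pulses matched by a lower-counter pulse within resolving_time (s)."""
    lower = sorted(lower_pulses)
    matches = 0
    for t in upper_pulses:
        # index of the first lower pulse not earlier than t - resolving_time
        i = bisect_left(lower, t - resolving_time)
        if i < len(lower) and lower[i] <= t + resolving_time:
            matches += 1
    return matches

# Hypothetical pulse times (seconds) from counters above and below an absorber
upper = [0.0010, 0.0300, 0.0520, 0.0800]
lower = [0.0010004, 0.0450, 0.0520002, 0.0950]
print(count_coincidences(upper, lower, resolving_time=1e-5))  # 2
```

Only the two near-simultaneous pairs count; the unrelated pulses, which a single counter would have recorded, are rejected. Widening the resolving time admits more accidental coincidences, the trade-off any such circuit has to manage.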

Electronic counters when arranged to trigger a cloud chamber made possible efficient self-portraiture of a cosmic ray. This chamber, invented before the war by C. T. R. Wilson (Cambridge) and improved after it by P. M. S. Blackett (also Cambridge), recorded tracks by condensing water droplets around ions created by the passage of energetic charged particles. The intensity of ionization and the curvature of the tracks in a magnetic field gave information about the particles’ charge, mass, momentum, and energy. Blackett’s group inspected 400,000 tracks in 1924 to find six showing Rutherford’s nuclear marble game. By hooking up Rossi’s coincidence circuitry to the cloud chamber, however, they quickly obtained many fine pictures of the tracks of positive electrons. That was shortly after Carl Anderson (Caltech) chanced to find a beautiful example on one of the 3,000 exposures he made without coincidence circuitry.

The positron put in its appearance in 1932, the year of the neutron, deuteron, cyclotron, and Cockcroft–Walton. Five years later, Anderson and others at Caltech, Harvard, and Tokyo found the tracks of what they called ‘mesotrons’ or ‘mesons’—particles weighing around 200 electron masses. The weight agreed with that of the hypothetical ‘Yukon’, which Hideki Yukawa, the first important domestically trained Japanese theorist, had proposed in 1935 as the mediator of the ‘strong force’ between neutrons and protons. Physicists had already accepted a ‘weak force’ to account for beta decay and an imponderable uncharged ‘neutrino’ to conserve energy in the process. Naturally, they identified Anderson’s mesotron with the Yukon.

In 1943, Rossi, resettled in the US, determined the mesotron’s mean lifetime at rest by measuring the delay between its arrival in his apparatus and the emission of the electron into which it decays. The result, two microseconds, was not long enough to bring mesotrons down through the atmosphere in the numbers observed at the surface. Since, however, they move at relativistic speeds, they live detectably longer in motion than at rest, and so delivered the first direct confirmation of Einstein’s time dilation.
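A back-of-the-envelope calculation shows why the dilation matters. The Python sketch treats the text’s two microseconds as the mesotron’s mean lifetime and assumes, purely for illustration, a 10 km path through the atmosphere at 99.5 per cent of the speed of light; it compares the fraction that would survive with and without Einstein’s time dilation.

```python
import math

SPEED_OF_LIGHT_M_S = 2.998e8
REST_LIFETIME_S = 2.0e-6  # "two microseconds", treated as the mean lifetime

def lorentz_factor(beta: float) -> float:
    """Time-dilation factor for a speed beta = v/c."""
    return 1.0 / math.sqrt(1.0 - beta ** 2)

def surviving_fraction(path_m: float, beta: float, dilated: bool = True) -> float:
    """Fraction left after travelling path_m at beta*c, assuming exponential decay."""
    flight_time = path_m / (beta * SPEED_OF_LIGHT_M_S)  # measured in the Earth's frame
    lifetime = REST_LIFETIME_S * (lorentz_factor(beta) if dilated else 1.0)
    return math.exp(-flight_time / lifetime)

# Hypothetical path length and speed, chosen for illustration
print(f"without dilation: {surviving_fraction(10_000, 0.995, dilated=False):.1e}")
print(f"with dilation:    {surviving_fraction(10_000, 0.995):.2f}")
```

Without dilation only a few mesotrons per hundred million would reach the ground; with it, roughly one in five does.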

Physicists gained the keys to another new kingdom at the same time they discovered cosmic rays. In 1911, Heike Kamerlingh Onnes (Leyden) and his associates found that the electrical resistance of pure metals plummeted suddenly to zero at liquid-helium temperatures. This phenomenon, to which he gave the name ‘superconductivity’, was not his greatest surprise in the kingdom of the cold. Liquid helium had an anomalously low density and would not freeze. These hints stimulated research after the war following a pattern similar to that of nuclear physics. A new-world laboratory challenging the old leader invented new costly apparatus that magnified effects a hundredfold. Stranger phenomena soon came to light. Theorists competed to explain them by applying quantum mechanics to fields founded in the decade before World War I.

The new-world site that challenged the old (in this case Leyden) was again Berkeley. The counterpart to Lawrence, William Francis Giauque, attained temperatures within a degree of absolute zero in 1933 by applying ‘adiabatic demagnetization’. This technique cools a paramagnetic crystal to liquid-helium temperatures where the little thermal energy it retains resides in the vibrations of its lattice and the disordered spins of its atoms. Giauque used a strong magnetic field to line up the spins and liquid helium to carry away the heat thus generated, as in the isothermal compression of a steam engine. Having insulated the specimen thermally, he turned off the field, the spins regained their disorder at the expense of the lattice vibrations, and the temperature fell, as in an engine’s adiabatic expansion, to half a degree absolute. By 1938, he had arrived within 0.004° centigrade of absolute zero. Experimentalists thus acquired a tool for studying superconductivity and other odd phenomena in regions well below the liquefaction point of helium. The odd phenomena, which included a sudden, million-fold jump in heat conductivity and an abrupt vanishing of viscosity in helium, gave rise to the concept of superfluidity (1938).

Effective theories of superconductivity and superfluidity drew on a quantum reformulation of the old electron theory of metals synthesized by Sommerfeld and Bethe in 1933. Building on the insights of Heisenberg’s student Felix Bloch (Leipzig, then Stanford), they treated unbound electrons in crystals at low temperatures as free except for the exclusion principle, which forces them to fill up the lowest quantum states available. Only those near the top of this electron sea can take up additional energy as the temperature rises and so sail freely along or above it. The new model agreed with the old theory where it had worked, and explained its failures (as in specific heats) where it had not. These results, obtained by European theorists, pertained to ideal solids. In 1933, Eugene Wigner and his student Frederick Seitz (Princeton) began the hard calculations of what Pauli called ‘dirty effects’ (caused by impurities), and other American groups, notably John Slater’s at MIT, soon mucked in.

The free electron model did not account for superconductivity. That became more embarrassing as information about it mounted during the 1930s. There were two main centres of research: Oxford, where Franz Simon, Fritz London, and other German-Jewish refugee physicists, descended intellectually from Nernst and, in Simon’s case, Giauque, set up a thriving low-temperature laboratory with the help of British industry; and Moscow, where Peter Kapitza, a former associate of Rutherford, and the theorist Lev Landau worked in the institute given to Kapitza as consolation for his unwilling separation from Cambridge. Although some of these men glimpsed the nature of a workable theory of superconductivity, they kindly allowed Americans to invent it.

Americanization

During World War II, physical science and engineering created radar, which led to microwave technologies and the laser; atomic bombs, which brought nuclear power; the V-2 rocket, which launched the aerospace industry; and the ‘bombe’, the essential device for breaking the Enigma codes, which, with electronic analysers constructed for calculating ballistic trajectories, pioneered the electronic computer. This intrusive device emerged in the late 1940s in materializations of Alan Turing’s conception of a ‘universal machine’, which related to the computer as Carnot’s theory did to the steam engine. The ENIAC, the first postwar electronic computer, was rich in vacuum tubes (17,468 of them), poor in memory, and programmable only by changing its wiring. Developed at the University of Pennsylvania under an army contract to calculate firing tables, it soon switched to working on the hydrogen bomb. Its successor, the MANIAC, built on the architecture created primarily by John von Neumann (Princeton), contained its own instructions and could be reprogrammed without rewiring. Von Neumann architecture has survived throughout the revolutionary advances made possible by transistors, microprocessors, and gigabyte memories.
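The difference between rewiring and reprogramming can be made concrete with a toy stored-program machine, in which instructions and data sit in the same memory and a program counter fetches each instruction in turn. The Python sketch below is hypothetical throughout: its four-instruction set bears no relation to the actual order codes of the ENIAC or the MANIAC and illustrates only the von Neumann principle.

```python
def run(memory):
    """Execute a toy stored-program machine whose code and data share one memory list.

    Hypothetical instruction set:
      ("LOAD", addr)   acc = memory[addr]
      ("ADD", addr)    acc += memory[addr]
      ("STORE", addr)  memory[addr] = acc
      ("HALT", None)   stop and return the memory
    """
    acc = 0  # accumulator
    pc = 0   # program counter: address of the next instruction in the same memory
    while True:
        op, addr = memory[pc]
        pc += 1
        if op == "LOAD":
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction {op!r} at address {pc - 1}")

program = [
    ("LOAD", 4),   # address 0
    ("ADD", 5),    # address 1
    ("STORE", 6),  # address 2
    ("HALT", None),  # address 3
    2,             # address 4: data
    3,             # address 5: data
    0,             # address 6: result
]
print(run(list(program))[6])  # 5
```

Changing what the machine does means editing entries in the memory list, for example replacing `("ADD", 5)` with another instruction, rather than moving cables.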

As the only major belligerent to emerge from the war rich and relatively unscathed, the US was best positioned to capitalize on the computer and other inventions. To augment its head start in exploiting allied wartime inventions, the US sent scavengers behind its advancing armies to seize information about developments in Germany. In the transfer of Wernher von Braun’s rockets and technicians to Alabama (‘Operation Paperclip’), the US picked up men as well as hardware without inquiring zealously about their degrees of Nazism. The French, British, and Russians also took what they could.

Americanization meant not only an emphasis on big machines and large collaborations, and hurried competition fostered by ambition and federal funding agencies, but also a neglect of matters that the generation of Bohr and Einstein had thought important. Just as Newton’s followers in the 18th century dropped his scruples about distance forces and ignored his rules of philosophizing, so postwar Americans did not bother themselves with the enigmas of quantum physics or, in general, with the high culture of the prewar Europeans. The uncomplicated unlearnedness of Lawrence was closer to the norm than the tortured culture of Robert Oppenheimer.

The Americanization of theoretical physics made its postwar debut at a conference held in June 1947 on Shelter Island, a site chosen to insulate the participants from the demands society had placed on ‘brilliant young’ physicists (the only type, apart from Einstein, that it knew). The planners consulted Pauli, then at Princeton, and ignored his suggestion to reconvene the creators of quantum mechanics. They opted for a small specialist meeting featuring up-and-coming Americans: homegrown theorists from Oppenheimer’s school at Berkeley and Caltech and other youths whose science the war had honed. The plan of the meeting, drawn up by John Wheeler (Princeton), reads like the agenda for a fast-paced attack on a wartime problem. The brilliant young things were to begin with the question whether a logical, consistent, and comprehensive quantum theory exists; if yes, to frame it; if no, to define the limitations of existing theory and propose ways to extend it.

Two-thirds of the participants were American by birth and most of the others were émigrés of the 1930s. Bohr’s former collaborator Kramers (Leyden) urged the meeting, in vain, to consider Copenhagen’s old-world view that the troubles of quantum electrodynamics (‘QED’) could not be overcome without a better classical electron theory on which to apply the correspondence principle. The intellectual leader of the meeting was Oppenheimer. Performing as he had when director of Los Alamos, he went straight to current problems like the demoralizing infinities of QED and the puzzling mesotron that lived too long to be a Yukon. Robert Marshak (Rochester) made the generous suggestion, soon confirmed, that the Yukon was not the only meson in the world. As for the infinities, pragmatic ‘renormalization’ of QED by Richard Feynman and Julian Schwinger (and independently by Yukawa’s classmate Sin-Itiro Tomonaga) saved the phenomena to as many decimal places as anyone could measure.

A second conference, held in 1948 with most of the same participants but with Bohr in place of Kramers, inspired an instructive clash between new and old. Feynman (Cornell) sketched his version of QED with diagrams of the sort that soon became indispensable. Bohr did not like them: they violated the uncertainty principle by assigning definite paths to particles and they represented positrons incomprehensibly as electrons moving backward in time. No matter. Feynman and Schwinger (Harvard) were the new world-class theorists.

The US had learned to produce not only theorists of the Jewish-cosmopolitan-extrovert type like Oppenheimer, Feynman, and Schwinger, but also of an introverted, unassuming, gentile type. John Bardeen began neither as a physicist nor as a New Yorker, but as a petroleum engineer in the Midwest. Sickening of oil, he enrolled in graduate school in Princeton and worked on solids under Wigner. He later went to Bell Labs, where, at the end of the war, he joined a small group under William Shockley (a student of Slater’s from MIT) dedicated to making an amplifier using the semiconductors silicon and germanium, known intimately for their use in radar detectors. Bardeen’s mastery of the quantum theory of solids together with the experimental inventiveness of Walter Brattain and the support of Shockley resulted, in 1947, in the invention of the transistor and a revolution in electronics. While Bardeen was in Stockholm in 1956 to collect the Nobel Prize awarded to him, Brattain, and Shockley, his theorist colleagues Robert Schrieffer and Leon Cooper (all then at the University of Illinois) continued work on his idea that interactions between vibrations of the crystal lattice and electrons skimming the surface of Sommerfeld’s electronic sea supplied the mechanism of superconductivity. It was back to Stockholm for Bardeen, the only two-time winner of the Nobel Prize in Physics.

New business

In 1944, drawing up plans for the postwar era, Lawrence reckoned that his laboratory would require around $85,000 annually and gifts in kind from the army’s Manhattan Engineer District to do well in peacetime. A year later, knowing that the bomb project would succeed, he raised his expectations a hundredfold. In the last days before the District became the civilian Atomic Energy Commission (AEC, 1947), it gave Lawrence hundreds of kilo-dollars’ worth of electronics for incorporation into the three major accelerators his laboratory had in hand. One of these, the 184-inch synchrocyclotron, started up just before the AEC did, with the presumed capacity of making mesons. Attempts to capture them on photographic emulsions failed, even after Cecil Powell (Bristol) and his cosmic-ray group had confirmed Marshak’s conjecture by photographing the birth of a Yukon (see Figure 25). Its parent, the ‘pi-meson’ or ‘pion’, is the mediator of the strong force. Giulio Lattes (Brazil), who had worked with Powell, came to Berkeley and showed the cyclotroneers how to photograph pions. As in induced radioactivity and nuclear fission, a Berkeley accelerator had produced the means but not the men to make a discovery realized in Europe with much simpler equipment. But the future belonged to the big machine.

25. Birth and death in the microworld. An emulsion stack exposed to cosmic rays gave the first proof of the two-meson hypothesis. In the example furthest left, a pion on the right strikes a molecule, giving rise to a muon that proceeds upwards until it decays into an electron and a neutrino.

The AEC, uncertain whether better acquaintance with the pion might not be necessary for the next generation of nuclear bombs or power, authorized a machine in the 2–3 GeV range for its laboratory at Brookhaven on Long Island. Berkeley kept ahead with a 6 GeV version expected to produce antiprotons. The huge energies involved required a new design, in which protons could be accelerated to relativistic speeds not by spiralling out under the pole pieces of a large magnet, but by being confined by small fast-acting magnets in a narrow pipe laid out in a circle over 36 metres in diameter.

When completed in 1954, the ‘Bevatron’ was an engineering marvel that could accelerate protons through four million turns (300,000 miles) in 1.85 seconds to 6.2 GeV. The following year, a group led by Fermi’s former student Emilio Segrè (Berkeley) detected the antiproton. The detection was noteworthy not only for confirming the general theory of antimatter but also for revealing serious difficulties in apportioning credit for discoveries in big-machine physics. The physicist-builders of the Bevatron certainly deserved some credit. The designer of the quadrupole focusing magnets Segrè’s group used, Oreste Piccioni, an Italian émigré working at Brookhaven, demanded it. But only Segrè and his senior collaborator Owen Chamberlain received the Nobel Prize. Piccioni’s subsequent suit for a share was thrown out of court not on the merits, but because he had waited too long to bring it. Hundreds of physicists, from graduate students through veteran scientists, may now work on a single ‘experiment’. The feeling of being another cog in a great machine, and the certainty that the project leaders would get the credit for its results, drove many individualists into other sorts of work.
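The Bevatron figures quoted above can be checked against one another with a little arithmetic. The Python sketch below assumes, as the text does, a circular orbit, and treats 300,000 miles and 1.85 seconds as exact; the variable names are illustrative only. It recovers the length of one turn, the diameter that length implies, and the protons’ average speed over the whole acceleration cycle as a fraction of the speed of light.

```python
import math

MILE_M = 1609.344          # metres per statute mile
C_M_PER_S = 299_792_458.0  # speed of light in vacuum, m/s

# Figures quoted in the text (treated as exact for this rough check).
turns = 4_000_000
path_miles = 300_000
ramp_s = 1.85

path_m = path_miles * MILE_M
metres_per_turn = path_m / turns                   # length of one orbit
implied_diameter_m = metres_per_turn / math.pi     # assumes a circular orbit
mean_speed_fraction = (path_m / ramp_s) / C_M_PER_S  # average speed / c

print(f"path per turn:    {metres_per_turn:.1f} m")
print(f"implied diameter: {implied_diameter_m:.1f} m")
print(f"mean speed:       {mean_speed_fraction:.2f} c")
```

The results, roughly 121 m per turn, an implied diameter of about 38 m, and an average of about 0.87 of the speed of light, agree with the ‘over 36 metres’ quoted earlier. The average is necessarily below the speed reached at 6.2 GeV, since the protons begin each cycle much slower; the real ring’s exact geometry is not modelled.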

Nonetheless, accelerator laboratories multiplied. While the Bevatron settled down to making strange particles and its attendants developed the bubble chamber (a detector that rivalled the main machine in cost and complexity and depended on cryogenics developed for the hydrogen bomb), competitors, first national and then international, came to threaten Berkeley’s lead in high-energy physics (HEP). Fermilab, founded near Chicago in 1967, took the lead. Berkeley compensated by diversifying into projects of more immediate public utility and by cooperating more fully with other laboratories. In this way it copied Europeans who, being unable after the war to match US investments in HEP, banded together to form the Conseil Européen pour la Recherche Nucléaire (CERN), which now has the largest accelerator in the world. Its 27-km ring crisscrosses the French-Swiss border near Geneva (see Figure 26).

26. Very big science. A view of CERN indicating the route of the Large Hadron Collider under France and Switzerland. After the LHC began collisions in 2009, its greater capabilities led to the shutdown of the Tevatron in 2011. Other acronyms (ALICE, ATLAS) designate experimental sites; SPS (Super Proton Synchrotron), CERN’s first big instrument, now supplies the main ring with its initial beam.

While pioneering in international scientific collaboration, CERN has been a cornucopia of elementary particles. Most recently, in 2012, it had the highly hyped honour of detecting the heavy particle postulated by Peter Higgs (Edinburgh) in the 1960s to account for the mass of other particles. In the interim, physicists reduced nucleons and other heavy particles to combinations of ‘quarks’ held so firmly together by ‘gluons’ that experiment can never pry them apart. According to this standard model, whose playful American terminology jars pleasantly with Whewell’s classical coinage, the nuclear, weak, and electromagnetic forces were one and the same during the very early universe.

Immediately after World War II, English scientists who had worked on radar copied Galileo and directed their war-surplus equipment towards the heavens. The first important groups grew in Cambridge (J. A. Ratcliffe, Martin Ryle) and Manchester–Jodrell Bank (Bernard Lovell). They mapped radio sources in the solar system and identified many in other galaxies. In one source, Maarten Schmidt (Caltech) found evidence of a red shift implying great distance, which, with its observed brightness, indicated an output of energy some hundreds of times that of our entire galaxy. Many such ‘quasi-stellar objects’ (quasars) then put in an appearance, as did ‘pulsars’ (Jocelyn Bell et al., 1967), which emit staggering bursts of energy at regular short intervals. These objects, and other odd stellar species uncovered by X-ray, infrared, radio, and visual observations, helped rekindle interest in cosmology.

When the US entered radio astronomy around 1950, it possessed the first large telescope built to detect centimetric microwaves. In this region, in 1965, two physicists at Bell Telephone Laboratories discovered an annoying universal background radiation while surveying sources of radio noise. With the help of Robert Dicke and others at Princeton who unknowingly had revived Gamow’s cosmological ideas, the Bell discoverers, Arno Penzias and Robert Wilson, identified the ubiquitous noise with radiation left over from the aboriginal explosion (or ‘Big Bang’) of the universe. According to Gamow and his student Ralph Alpher, radiation had gone its own way, disjoined from matter, shortly after the Bang and subsequently cooled as it expanded with the universe. The noise that bothered Bell Telephone had a temperature not far from that expected from the expansion rate and Planck’s formula. The community of cosmologists was then enjoying a dispute between Big Bangers (Gamow and the Princeton group) and Steady-State Men, who supposed that spontaneous creation of hydrogen atoms compensated for the dilution effected by expansion (Fred Hoyle, Hermann Bondi, and Thomas Gold, all Cambridge). The background radiation settled the question in favour of the Big Bang.

Radar expertise gave birth to a discovery of greater importance to most people than cosmic noise. In 1954, Charles Townes, who had worked at Bell Labs from 1939 to 1947 on centimetric radar, combined war surplus with Einstein’s old idea of ‘stimulated emission’, a quantum process by which incoming light entices an atom in an excited state to radiate. The resulting device, the maser, worked on molecules, although two Nobel laureates at Columbia, the university to which Townes had moved from Bell Labs, had told him it would not work. Townes and others soon found ways to promote the maser to optical frequencies. The uses of the new device, the laser, in physics and everyday life are legion: holography, rock concerts, surgery, light art, CD players, barcode readers, and so on. The Handbook of Laser Technology, published fifty years after Townes’ invention of the maser, required 2,665 pages to describe them all.

Steering missiles, rockets, space probes, and nuclear submarines, testing nuclear explosives above and under ground and water, monitoring test-ban treaties, and searching for uranium and other strategic materials required exact information about Earth, ocean, and atmosphere. The relevant specialties (seismology, ionospherics, meteorology, and oceanography) had received little funding or prestige between the wars in comparison with nuclear physics. After World War II the US government threw money at them and joined enthusiastically in underwriting the multi-billion-dollar International Geophysical Year dedicated to the worldwide collection of pertinent data. In 1957, while the International Geophysical Year was under way, Earth sciences also received a pleasant jolt from the launch of Sputnik. The National Aeronautics and Space Administration (NASA), set up to close the ‘space gap’ and to put a man on the Moon, needed to know what its rockets, satellites, and space vehicles would encounter en route, in orbit, and on arrival. Bits of moon rock, displayed around the US, promoted Earth scientists as the mushroom cloud had promoted nuclear physicists.

Flights of meteors, the solar wind, and the Earth’s magnetosphere have become as predictable as the tides and even the weather, which is now usually forecast more accurately by meteorologists than by astrologers. This improvement was another dividend from military investments. Before World War II, many meteorologists rated the concept of fronts as ‘risky’ and the prospect of a dynamical weather theory as ‘unpromising’. After the war, Jule Charney (Princeton, MIT) and von Neumann adapted geostrophic models (winds blowing along isobars) to computation by electronic computers. Models incorporating baroclinic instabilities (mismatches between the gradients of temperature and pressure) then developed together with computer capability and data collection via radar and satellite. These conceptual and computational advances have helped to identify anthropogenic contributions to climate change.
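The phrase ‘winds blowing along isobars’ can be made concrete with the textbook geostrophic balance between the pressure-gradient force and the Coriolis force. The Python sketch below is not Charney and von Neumann’s model, only the standard geostrophic-wind formula, u = −(1/ρf)∂p/∂y and v = (1/ρf)∂p/∂x, with f = 2Ω sin(latitude). The air density of 1.2 kg/m³, the example pressure gradient, and the function names are assumptions chosen for illustration. The point it shows is that the computed wind is always perpendicular to the pressure gradient, and so runs along the isobars.

```python
import math

OMEGA = 7.2921e-5  # Earth's angular rotation rate, rad/s
RHO_AIR = 1.2      # assumed near-surface air density, kg/m^3 (illustrative)


def coriolis_parameter(latitude_deg: float) -> float:
    """Coriolis parameter f = 2 * Omega * sin(latitude), in 1/s."""
    return 2 * OMEGA * math.sin(math.radians(latitude_deg))


def geostrophic_wind(dp_dx: float, dp_dy: float, latitude_deg: float,
                     rho: float = RHO_AIR) -> tuple[float, float]:
    """Geostrophic wind (u eastward, v northward) in m/s.

    dp_dx and dp_dy are horizontal pressure gradients in Pa/m,
    with x pointing east and y pointing north.
    """
    f = coriolis_parameter(latitude_deg)
    u = -dp_dy / (rho * f)
    v = dp_dx / (rho * f)
    return u, v


# Hypothetical example: pressure falls by 1 hPa (100 Pa) per 100 km
# towards the north, at latitude 45 degrees N.
dp_dx, dp_dy = 0.0, -100.0 / 100_000.0
u, v = geostrophic_wind(dp_dx, dp_dy, 45.0)
print(f"u = {u:.1f} m/s, v = {v:.1f} m/s")

# Dot product of wind and pressure gradient: zero, so the wind follows the isobars.
print("wind . gradient =", u * dp_dx + v * dp_dy)
```

The result, a wind of about 8 m/s blowing from west to east with low pressure on its left, is the familiar Northern Hemisphere pattern. Near the equator f tends to zero and the approximation breaks down.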

The stimulation of interest in Earth sciences by government investment after World War II led to a fundamental discovery. Oceanographers detected that seafloor sediments and high ridges lying in the middle of the great oceans were relatively youthful, and that the foothills of the immense mid-ocean mountain chains enjoyed a surprisingly benign temperature. Further investigation disclosed that the temperate regime arose from melted rocks bubbling up from cracks in the ocean floor. In 1960, Henry Hess (Princeton) conjectured that the warmth and youth of the sediments indicated that molten magma rose from the Earth’s interior at these ridges and spread the seafloor apart. That could not continue for very long unless the spreading floor disappeared somewhere. Obvious loci for such brutal events are fault lines showing volcanic activity.

The developers of this theory had in mind the conjecture made in 1912 by Alfred Wegener (Marburg), who argued from the distribution of species, the shape of continents, and the presence of coastal mountain ranges that the Earth’s entire landmass once fitted together. ‘Pangaea’ subsequently broke apart and the continents drifted to where we find them. In the modern version of Wegener’s theory, it is not the continents, but ‘plates’ on which they and the oceans ride, that migrate. This theory, ‘plate tectonics’, received timely support from an entirely disparate line of inquiry into the residual magnetism of rocks. Geologists studying the meanderings of Earth’s magnetic poles as recorded in ordinary rocks reached the astonishing conclusion that Earth’s field has reversed from time to time, the south pole going north and the north south. The most recent of these reversals took place less than a million years ago.

In 1963, Fred Vine and Drummond Matthews (Cambridge) and Lawrence Morley (Canadian Geological Survey) suggested that the sea floor had recorded the magnetic reversals in stripes parallel to the vents from which the molten rock emerges. And this is what the oceanographic research ship Eltanin, operated by Columbia’s Lamont Geophysical Laboratory, discovered. The travels of the continents, as revealed with the help of sensitive magnetometers, mass spectrometers, potassium-argon radioactive dating, and other instrumentation derived from military technology, take place at the brisk tempo of 4.5 cm per year.
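To put the ‘brisk tempo’ in perspective, a short Python sketch converts the rate into distances over longer spans, assuming (purely for illustration) that a plate keeps a constant 4.5 cm per year. The function name is hypothetical.

```python
CM_PER_KM = 100_000  # centimetres in a kilometre


def distance_km(rate_cm_per_year: float, years: float) -> float:
    """Distance covered at a constant rate, converted from centimetres to kilometres."""
    return rate_cm_per_year * years / CM_PER_KM


rate = 4.5  # cm per year, the figure quoted in the text
for years in (100, 10_000, 1_000_000):
    print(f"{years:>9,} years: {distance_km(rate, years):g} km")
```

At this rate a plate moves about 4.5 m in a century and about 45 km in a million years, so over the less than one million years since the most recent magnetic reversal it would have travelled at most some 45 km.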

The resources of some international facilities (like CERN and the European Space Agency), of high-profile national ones (like Russia’s space programme), and of some industrial research laboratories have long challenged American supremacy in physics. More recently, Japan, China, and India have added their appreciable weight to the competition. Nonetheless, the US still dominates research. This is not merely because of the amount of money that has poured into its universities and national laboratories from federal and state government, the military, and industry; it is also because US research universities train foreign graduate students and postdocs as well as domestic ones, and do so efficiently in the approximation to English that is the international language of science. American dominance will therefore probably continue for a time, even as international pressures progressively reduce it.