‘Life is what the least of us make most of us feel the least of us make the most of.’

Willard Quine1

‘I have opinions of my own – strong opinions – but I don't always agree with them.’

President George W. Bush

Ever since these early realisations that there are properties of the Universe which are necessary for life, there has been growing interest in what has become known as the ‘Anthropic Principle’, and a wide-ranging debate has continued amongst astronomers, physicists and philosophers about its usefulness and ultimate significance. One of the reasons for this degree of interest has been the discovery that there are many ways in which the actual values of the constants of Nature help to make life a possibility in the Universe. Moreover, they sometimes appear to allow it to be possible by only a hair's breadth. We can easily imagine worlds in which the constants of Nature take on slightly different numerical values where living beings like ourselves would not be possible. Make the fine structure constant bigger and there can be no atoms, make the strength of gravity greater and stars exhaust their fuel very quickly, reduce the strength of nuclear forces and there can be no biochemistry, and so on. There are three types of change to consider. First, tiny, infinitesimal changes are possible. If we change the value of the fine structure constant only in the twentieth decimal place there will be no bad consequences for life that we know of. Second, there are small changes. If we change the constant by a small amount, say in the second decimal place, then the consequences become more significant. Properties of atoms are altered and complicated processes like protein folding or DNA replication may be adversely affected. However, new possibilities for chemical complexity may open up. Evaluating the consequences of these changes is difficult because they are not so cut and dried. Third, there are very large changes. These will stop atoms or nuclei existing at all and are much more clear-cut as a barrier to developing complexity based upon the forces of Nature. For many conceivable changes, there could be no imaginable forms of life at all.

First, it is important to be quite clear about the way in which Dicke introduced his anthropic argument since there is considerable confusion2 amongst commentators. A condition, like the existence of stars or certain chemical elements, is identified as a necessary condition for the existence of any form of chemical complexity, of which life is the most impressive known example. This does not mean that, if this condition is met, life must exist, or that it will never die out if it does exist, or that the fact that this condition holds in our Universe means that it was ‘designed’ with life in mind. These are all quite separate matters. If our ‘necessary’ anthropic condition is truly a necessary condition for living observers to exist in the Universe then it is a feature of the Universe that we must find it to possess, no matter how unlikely it might appear a priori.

The error that many people now make is to assume that an anthropic argument of this sort is a new scientific theory about the Universe that is a rival for other more conventional forms of explanation as to why the Universe possesses the ‘necessary’ anthropic condition. In fact, it is nothing of the sort. It is simply a methodological principle which, if ignored or missed, will lead us to draw incorrect conclusions. As we have seen, the story of Dirac and Dicke is a case in point. Dirac did not realise that a Large Number coincidence was a necessary consequence of being an observer who looks at the Universe at a time roughly equal to the time required for stars to make the chemical elements needed for complex life to evolve spontaneously. As a result he drew the wrong conclusion that huge changes needed to be made to the laws of physics – changing the law of gravity to allow G to vary in time. Dicke showed that although such a coincidence might appear unlikely a priori, it was in fact a necessary feature of a universe containing observers like ourselves. It is therefore no more (and no less) surprising a feature of the Universe than our own existence.

There are many interesting examples of observer bias in less cosmic situations than the one considered by Dicke. My favourite concerns our perceptions of traffic flow. A recent survey of Canadian drivers3 showed that they tended to think that the next lane on the highway moves faster than the one they are travelling in. This inspired the authors of the study to propose many complex psychological reasons for this belief amongst drivers, thinking perhaps that a driver is more likely to make comparisons with other traffic when being passed by faster cars than when overtaking them or that being overtaken leaves a bigger impression on a driver than overtaking. These conclusions are by no means unimportant because one of the conclusions of the study was that drivers might be educated about these tendencies so as to help them resist the constant urge to change lanes in search of a faster path, thereby speeding total traffic flow and improving safety. However, while the psychological causes might well be present, there is a simpler explanation for the results of the survey: traffic does go faster in the other lanes! The reason is a form of observer selection. Typically, slower lanes are created by overcrowding.4 So, on the average, there are more cars in congested lanes moving slowly than there are in emptier lanes moving faster.5 If you select a driver at random and ask them if they think the next lane is faster you are more likely to pick a driver in a congested lane because that's where most drivers are. Unfortunately, because of observer bias the driver survey does not tell you anything about whether it is good or bad to change lanes. The grass, maybe, is always greener on the other side.

Figure 8.1 Why do the cars in the other lane seem to be going faster? Because on the average they are!6
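The selection effect in the traffic survey can be made concrete with a little arithmetic. The sketch below is only an illustration: the lane densities are hypothetical numbers chosen for the example, not figures from the survey, and the function name is ours. It assumes two lanes of equal length and simply counts where the drivers are.

```python
from fractions import Fraction


def fraction_in_slow_lane(slow_cars_per_km, fast_cars_per_km):
    """Share of all drivers who sit in the congested (slow) lane.

    Assumes two lanes of equal length, so the number of drivers in
    each lane is proportional to that lane's density of cars.
    """
    total = slow_cars_per_km + fast_cars_per_km
    return Fraction(slow_cars_per_km, total)


# Hypothetical densities: a congested lane and a freely moving one.
share = fraction_in_slow_lane(60, 20)

# A driver picked at random is in the slow lane with this probability,
# and so correctly reports that the next lane is moving faster.
print(share)  # 3/4
```

With these assumed densities three drivers in four are in the slow lane, so a survey of randomly chosen drivers will find a majority who believe, rightly, that the next lane is faster.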

Once we know of a feature of the Universe that is necessary for the existence of chemical complexity, it is often possible to show that other features of the Universe that appear to have nothing to do with life are necessary by-products of the ‘necessary’ condition. For example, Dicke's argument really tells us that the Universe has to be billions of years old in order that there be enough time for the building blocks of life to be manufactured in the stars. But the laws of gravitation tell us that the age of the Universe is directly linked to other properties it displays, like its density, its temperature, and the brightness of the sky. Since the Universe must expand for billions of years it must become billions of light years in visible extent. Since its temperature and density fall as it expands it necessarily becomes cold and sparse. As we have seen, the density of the Universe today is little more than one atom in every cubic metre of space. Translated into a measure of the average distances between stars or galaxies this very low density shows why it is not surprising that other star systems are so far away and contact with extraterrestrials is difficult. If other advanced life-forms exist in the Universe then, like us, they will have evolved unperturbed by beings from other worlds until they reached an advanced technological stage. Moreover, the very low temperature of radiation does more than ensure that space is a cold place. It guarantees the darkness of the night sky. For centuries scientists have wondered about this tantalising feature of the Universe. If there were huge numbers of stars out there in space then you might have thought that looking up into the night sky would be a bit like looking into a dense forest (Figure 8.2).

Figure 8.2 If you look into a deep forest then your line of sight always ends on a tree.7

Every line of sight should end on a star. Their shining surfaces would cover every part of the sky making it look like the surface of the Sun. What saves us from this shining sky is the expansion of the Universe. In order to meet the necessary condition for living complexity to exist there has to be ten billion years of expansion and cooling off. The density of matter has fallen to such a low value that even if all the matter were suddenly changed into radiant energy we would not notice any significant brightening of the night sky. There is just too little radiation to fill too great a space for the sky to appear bright any more. Once, when the universe was much younger, less than a hundred thousand years old, the whole sky was bright, so bright that neither stars nor atoms nor molecules could exist. Observers could not have been there to witness it.

These observations have other by-products of a much more philosophical nature. The large size and gloomy darkness of the Universe appear superficially to be deeply inhospitable to life. The appearance of the night sky is responsible for many religious and aesthetic longings born out of our apparent smallness and insignificance in the light of the grandeur and unchangeability of the distant stars. Many civilisations worshipped the stars or believed that they governed their future while others, like our own, often yearn to visit them.

George Santayana writes in The Sense of Beauty8 of the emotional effect that results from the contemplation of the insignificance of the Earth and the vastness of the star-spangled heavens. For,

‘The idea of the insignificance of our earth and of the incomprehensible multiplicity of worlds is indeed immensely impressive; it may even be intensely disagreeable … Our mathematical imagination is put on the rack by an attempted conception that has all the anguish of a nightmare and probably, could we but awake, all its laughable absurdity … the kinship of the emotion produced by the stars with the emotion proper to certain religious moments makes the stars seem a religious object. They become, like impressive music, a stimulus to worship.

Nothing is objectively impressive; things are impressive only when they succeed in touching the sensibility of the observer, by finding the avenues to his heart and brain. The idea that the universe is a multitude of minute spheres circling like specks of dust, in a dark and boundless void, might leave us cold and indifferent, if not bored and depressed, were it not that we identify this hypothetical scheme with the visible splendour, the poignant intensity, and the baffling number of the stars.

… the sensuous contrast of the dark background, – blacker the clearer the night and the more stars we can see, – with the palpitating fire of the stars themselves, could not be exceeded by any possible device.’

Others have taken a more prosaic view. The heavyweight English mathematician and philosopher Frank Ramsey (the brother of Michael Ramsey, the former Archbishop of Canterbury) responded to Pascal's terror at ‘the silence of the infinite spaces’ around us in a sanguine fashion by remarking that,

‘Where I seem to differ from some of my friends is in attaching little importance to physical size. I don't feel the least humble before the vastness of the heavens. The stars may be large, but they cannot think or love; and these are qualities which impress me far more than size does. I take no credit for weighing nearly seventeen stone. My picture of the world is drawn in perspective, and not like a model drawn to scale. The foreground is occupied by human beings, and the stars are all as small as threepenny bits.’9

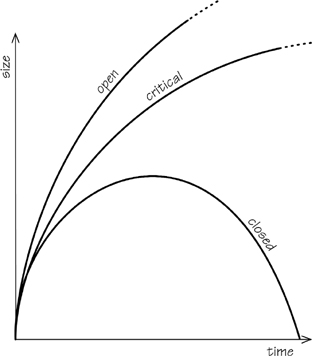

Yet, although size isn't everything, on a cosmic scale it is most certainly something. The link between the time that the expansion of the Universe has apparently been going on for (which we usually call the ‘age’ of the Universe) and other things to do with life was something that cosmologists should have latched on to far more quickly. It could have stopped them pursuing another incorrect cosmological possibility for nearly twenty years. In 1948 Hermann Bondi, Thomas Gold and Fred Hoyle introduced a rival to the expanding Big Bang Universe. The Big Bang theory10 implied that the expansion of the Universe began at a definite past time. Subsequently, the density and temperature of the matter and radiation in the Universe fell steadily as the Universe expanded. This expansion may continue forever or it may one day reverse into a state of contraction, revisiting conditions of ever greater density and temperature until a Big Crunch is encountered at a finite time in our future (see Figure 8.3).

This evolving scenario has the key feature that the physical conditions in the Universe's past were not the same as those that exist today or which will exist in the future. There were epochs when life could not exist because it was too hot for atoms to exist; there were epochs before there were stars and there will be a time when all the stars have died. In this scenario there is a preferred interval of cosmic history during which observers are most likely first to evolve and make their observations of the Universe. It also implied that there was a beginning to the Universe, a past time before which it (and time itself perhaps) did not exist, but it was silent as to the why or the wherefore of this beginning.

The alternative scenario created by Bondi, Gold and Hoyle was motivated in part by a desire to do away with the need for a beginning (or a possible end) to the Universe. Their other aim was to create a cosmological scenario that looked, on the average, always the same so that there were no preferred times in cosmic history (see Figure 8.4). At first this seems impossible to achieve. After all, the Universe is expanding. It is changing, so how can it be rendered changeless? Hoyle's vision was of a steadily flowing river, always moving but always much the same. In order for the Universe to present the same average density of matter and rate of expansion no matter when it was observed, the density would need to be constant. Hoyle proposed that instead of the matter in the Universe coming into being at one past moment it was continuously created at a rate that exactly countered the tendency for the density to be diluted by the expansion. This mechanism of ‘continuous creation’ needed to occur only very slowly to achieve a constant density; only about one atom in every cubic metre every 10 billion years was required and no experiment or astronomical observation would be able to detect an effect so small. This ‘steady state’ theory of the Universe made very definite predictions. The Universe looked the same on the average at all times. There were no special epochs in cosmic history – no ‘beginning’, no ‘end’, no time when stars started to form or when life first became possible in the Universe (see Figure 8.5).
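The balance that continuous creation has to strike can be sketched in one line. This is an illustrative estimate rather than Hoyle's own calculation: the symbols ρ (average density of matter), H (expansion rate) and C (creation rate) are introduced here, and the dilution law used is the standard one for matter in a uniformly expanding volume.

```latex
% Expansion dilutes matter at a rate 3 H \rho; creation adds it at a rate C.
% A steady state needs the two to cancel:
\frac{d\rho}{dt} = -3H\rho + C = 0
\quad\Longrightarrow\quad
C = 3H\rho .
% Taking \rho \approx 1 atom per cubic metre and 1/H \approx 10^{10} years:
C \approx 3 \ \text{atoms per cubic metre per } 10^{10} \ \text{years}.
```

Under these assumptions the required creation rate comes out at a few atoms per cubic metre every ten billion years, the same order of magnitude as the figure quoted above.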

Figure 8.3 The two types of expanding universe: “open” universes expand forever; “closed” universes eventually contract back to an apparent Big Crunch at a finite time in the future. The “critical” universe marks the dividing line between the two and also expands for ever.

Figure 8.4 The expansion of a steady-state universe. The rate of expansion is always the same. There is no beginning and no end, no special epoch when life can first emerge or after which it begins to die out along with the stars. The universe looks the same on the average at all times in its history.

Eventually this theory was ruled out by a sequence of observations that began in the mid 1950s, showing first that the population of galaxies that were profuse emitters of radio waves varied significantly as the Universe aged, and culminated in the discovery in 1965 of the heat radiation left over from the hot beginning predicted by the Big Bang models. This microwave background radiation had no place in the steady state Universe.

For twenty years astronomers tried to find evidence that would tell us whether the Universe was truly in the steady state that Bondi, Gold, and Hoyle proposed. A simple anthropic argument could have shown how unlikely such a state of affairs would be. If you measure the expansion rate of the Universe it tells you a time for which the Universe appears to have been expanding.11 In a Big Bang universe this really is the time since the expansion began – the age of the Universe. In the steady state theory there is no beginning and the expansion rate is just the expansion rate and nothing more. This is illustrated in the picture shown in Figure 8.4.

Figure 8.5 (a) The variation of the average density of matter in an expanding Big Bang universe. (b) The average density of matter in a steady-state universe is always the same.

In a Big Bang theory the fact that the expansion age is just slightly greater than the age of the stars is a natural state of affairs. The stars formed in our past and so we should expect to find ourselves on the cosmic scene after they have formed. But in a steady state universe the ‘age’ is infinite and is not linked to the rate of expansion. In a steady state Universe it is therefore a complete coincidence that the inverse of the expansion rate gives a time that is roughly equal to the time required for stars to produce elements like carbon. Just as surely as the coincidence between the inverse of the expansion rate of the Universe and the time required for stars to produce biochemical elements ruled out the need for Dirac's varying G, it should have cast doubt on the need for a steady state universe.
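The time given by the inverse of the expansion rate is easy to estimate. The sketch below assumes an expansion rate of 70 kilometres per second per megaparsec, a commonly quoted present-day value that does not appear in the text, and rounded conversion factors; the function name is ours.

```python
KM_PER_MEGAPARSEC = 3.0857e19   # kilometres in one megaparsec (rounded)
SECONDS_PER_YEAR = 3.156e7      # seconds in one year (rounded)


def expansion_age_years(h0_km_per_s_per_mpc):
    """Return 1/H0 in years for an expansion rate given in km/s/Mpc."""
    # Convert the expansion rate to units of 1/second.
    h0_per_second = h0_km_per_s_per_mpc / KM_PER_MEGAPARSEC
    # The inverse is a time in seconds; convert it to years.
    return 1.0 / h0_per_second / SECONDS_PER_YEAR


# Assumed value of the expansion rate (not taken from the text).
age = expansion_age_years(70.0)
print(f"{age / 1e9:.1f} billion years")
```

The result is a time of order ten billion years, which is also the order of the time stars need to manufacture elements like carbon. In a Big Bang universe that match is expected; in a steady state universe nothing ties the two numbers together.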

‘A banker is a man who lends you an umbrella when the weather is fair, and takes it away from you when it rains.’

Mark Twain

We have seen that a good deal of time is needed if stars are to manufacture carbon out of inert gases like hydrogen and helium. But time is not enough. The specific nuclear reaction that is needed to make carbon is a rather improbable one. It requires three nuclei of helium to come together to fuse into a single nucleus of carbon. Helium nuclei are called alpha particles and this key carbon-forming reaction has been dubbed the ‘triple-alpha’ process. The American physicist Ed Salpeter first recognised its significance in 1952. However, a few months later, whilst visiting Cal Tech in Pasadena, Fred Hoyle realised that making carbon in stars by this process was doubly difficult. First, it was difficult to get three alpha particles to meet and, even if you did, the fruits of their liaison might be short-lived. For if you looked a little further down the chain of nuclear reactions it seemed that all the carbon could quickly get consumed by interacting with another alpha particle to create oxygen.

Hoyle realised that the only way to explain why there was a significant amount of carbon in the universe was if the production of carbon went much faster and more efficiently than had been envisaged, so that the ensuing burning to oxygen did not have time to destroy it all. There was only one way to achieve this carbon boost. Nuclear reactions occasionally experience special situations where their rates are dramatically increased. They are said to be ‘resonant’ if the sum of the energies of the incoming reacting particles is very close to a natural energy level of a new heavier nucleus. When this happens the nuclear reaction goes especially quickly, often by a huge factor.

Hoyle saw that the presence of a significant amount of carbon in the Universe would be possible only if the carbon nucleus possessed a natural energy level at about 7.65 MeV above the ground level. Only if that was the case could the cosmic carbon abundance be explained, Hoyle reasoned. Unfortunately no energy level was known in the carbon nucleus at the required place.12

Pasadena was a good place to think about the energy levels of nuclei. Willy Fowler led a team of outstanding nuclear physicists and was an extremely affable and enthusiastic individual. Hoyle didn't hesitate to pay him a visit. And soon Fowler had persuaded himself that all the past experiments could indeed have missed the energy level that Hoyle was proposing. Within days, Fowler had pulled in other nuclear physicists from the Kellogg Radiation Lab and an experiment was planned. The result when it came was dramatic.13 There was a new energy level in the carbon nucleus at 7.656 MeV, just where Hoyle had predicted it would be.

The whole sequence of events for the production of carbon by stars then looked so delicately balanced that, as a science-fiction universe, it would have seemed contrived. First, three helium nuclei (alpha particles) have to interact at one place. This they manage to do in a two-step process. First, two helium nuclei combine to create a beryllium nucleus

helium + helium → beryllium

Fortunately, beryllium has a peculiarly long lifetime,12 ten thousand times longer than the time required for two helium nuclei to interact and so it stays around long enough to have a good chance of combining with another helium nucleus to produce a carbon nucleus:

beryllium + helium → carbon

The 7.656 MeV energy level in the carbon nucleus lies just above the energies of the beryllium plus helium (7.3667 MeV), so that when the thermal energy of the inside of the star is added the nuclear reaction becomes resonant and lots of carbon is produced. But that is not the end of the story. The next reaction waiting to burn up all the carbon is

carbon + helium → oxygen.14

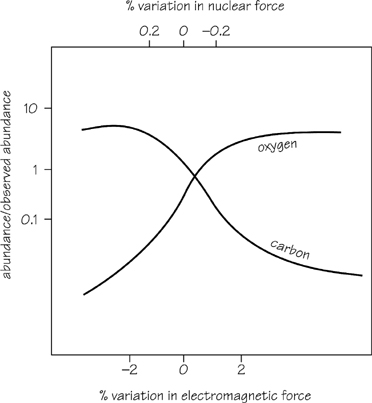

What if this reaction should turn out to be resonant as well? Then all the rapidly produced carbon would disappear and the carbon resonance level would be to no avail. Remarkably, this last reaction just fails to be resonant. The oxygen nucleus has an energy level at 7.1187 MeV that lies just below the total energy of carbon plus helium at 7.1616 MeV. So when the extra thermal energy in the star is added this reaction can never be resonant and the carbon survives (see Figure 8.7). Hoyle recognised that his finely balanced sequence of apparent coincidences was what made carbon-based life a possibility in the Universe.15
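The two near-misses can be checked with simple arithmetic on the energies quoted above. The sketch below is a deliberately crude model, not a nuclear physics calculation: it assumes only that thermal energy can add to the energy of the incoming particles but never subtract from it, so a resonance is reachable only when the level lies at or above the incoming energy. The function and variable names are ours.

```python
# Energies in MeV, as quoted in the text.
CARBON_LEVEL = 7.656          # resonance level in the carbon nucleus
BERYLLIUM_PLUS_HELIUM = 7.3667
OXYGEN_LEVEL = 7.1187         # nearby level in the oxygen nucleus
CARBON_PLUS_HELIUM = 7.1616


def gap(level, incoming):
    """Energy by which the nuclear level lies above the incoming particles."""
    return level - incoming


def can_resonate(level, incoming):
    """Simplified rule: added thermal energy can only raise the incoming
    energy, so the level must lie at or above it to be reached."""
    return gap(level, incoming) >= 0


carbon_gap = gap(CARBON_LEVEL, BERYLLIUM_PLUS_HELIUM)   # about +0.2893 MeV
oxygen_gap = gap(OXYGEN_LEVEL, CARBON_PLUS_HELIUM)      # about -0.0429 MeV

print(can_resonate(CARBON_LEVEL, BERYLLIUM_PLUS_HELIUM))  # True
print(can_resonate(OXYGEN_LEVEL, CARBON_PLUS_HELIUM))     # False
```

The carbon level sits about 0.29 MeV above the incoming energy, within reach once the star's heat is added, while the oxygen level sits about 0.04 MeV below it, where no amount of added heat can help.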

The positioning of the nuclear energy levels in carbon and oxygen is the result of a very complicated interaction between nuclear and electromagnetic forces that could not be calculated easily when the discovery of the carbon resonance level was first made. Today, it is possible to make very good estimates of the contributions of electromagnetic and nuclear forces to the levels concerned. One can see that their positions are a consequence of the fine structure constant and strong nuclear force constant taking the values that they do with high precision. If the fine structure constant, that governs the strength of electromagnetic forces, were changed by more than 4 per cent or the strong force by more than 0.4 of one per cent then the production of carbon or oxygen would be reduced by factors of between 30 and 1000. More detailed calculations of the fate of stars when these constants of Nature are slightly changed have been carried out recently by Heinz Oberhummer, Attila Csótó and Helmut Schlattl.16 Their results can be seen in Figure 8.6.

We see that the carbon and oxygen levels vary systematically as the constants of Nature governing the position of the resonance levels are changed. If they are altered from their actual values we end up with large amounts of carbon or large amounts of oxygen but never of both. A change of more than 0.4 per cent in the constants governing the strength of the strong nuclear force or more than 4 per cent in the fine structure constant would destroy almost all carbon or almost all oxygen in every star.

Figure 8.6 The production of carbon and oxygen by stars if the constants of Nature governing the strengths of the electromagnetic and nuclear forces are changed by the indicated amounts.

Hoyle had been very struck by the carbon resonance-level coincidence and its implications for the constants of physics. Rounding off a discussion of the astrophysical origin of the elements, he wrote,17

‘But I think one must have a modicum of curiosity about the strange dimensionless numbers [constants] that appear in physics, and on which, in the last analysis, the precise positioning of the levels in a nucleus such as C12 or O16 must depend. Are these numbers immutable, like the atoms of the nineteenth-century physicist? Could there be a consistent physics with different values for the numbers?’

Hoyle sees two alternatives on offer: either we must seek to demonstrate that the actual values of the constants of Nature ‘are all entirely necessary to the logical consistency of physics’, or adopt the point of view that ‘some, if not all, the numbers in question are fluctuations; that in other places of the Universe their values would be different.’

At first, Hoyle favoured the second ‘fluctuation’ idea, that the constants of Nature might be varying, possibly randomly, throughout space so that only in some places would the balance between the fine structure constant and the strong force constant come out ‘just right’ to allow an abundance of carbon and oxygen. Thus, if this picture is adopted,18

‘the curious placing of the levels in C12 and O16 need no longer have the appearance of astonishing accidents. It could simply be that since creatures like ourselves depend on a balance between carbon and oxygen, we can exist only in the portions of the universe where these levels happen to be correctly placed. In other places the level in O16 might be a little higher, so that the addition of α-particles to C12 was highly resonant. In such a place … creatures like ourselves could not exist.’

In the years that followed Hoyle gradually took a more deterministic view of the resonance level coincidences, seeing them as evidence for some form of pre-planning of the universe in order to make life possible:19

‘I do not believe that any scientist who examined the evidence would fail to draw the inference that the laws of nuclear physics have been deliberately designed with regard to the consequences they produce inside the stars. If this is so, then my apparently random quirks have become part of a deep-laid scheme. If not then we are back again at a monstrous sequence of accidents.’

Hoyle's successful prediction sparked a resurgence of interest in the old Design Arguments, beloved of eighteenth- and nineteenth-century natural theologians, but with a new twist. Since ancient times there had been strong support for an argument for the existence of God (or ‘the gods’) from the fact that living things seemed to be tailor-made for their function. Animals appeared to be perfectly camouflaged for their environments; parts of our bodies were delicately engineered to provide (most of) us with ease of mobility, good vision, keen hearing and so forth;20 the motions of the planets were favourably arranged to make the terrestrial climate conducive to the continuance of life. Large numbers of apparent coincidences existed between things and persuaded many a philosopher, theologian and scientist of the past that none of this was an accident. The Universe was designed with an end in view. This end involved the existence of life – perhaps even ourselves – and the plainness of the evidence for such design meant that there had to be a Designer.

As it stands this ancient argument was difficult to refute by means of scientific facts. And it was always persuasive for those who were not scientists. After all, there are remarkable adaptations between living things and their environments all over the natural world. It is much easier to undermine by means of logical or philosophical argument. But scientists are never very impressed by such arguments unless they can provide a better explanation. And so it was with the Design Argument. Despite its blinkered attitude to many of the realities of the world, it was only overthrown as a serious explanation of the existence of complexity in Nature when a better explanation came along.21 The better explanation was by means of evolution by natural selection, which showed how living things can become well adapted to their environments over the course of time under a very wide range of circumstances, so long as the environment is not changing too quickly. Complexity could develop from simplicity without direct Divine intervention.

It is important to see what this type of Design Argument was focusing on. It is an argument about the inter-relationships between different outcomes of the laws of Nature. These are only partially determined by the forms of the laws. Their form is also determined by the constants of Nature, the starting conditions, and all manner of other statistical accidents.22

In the late seventeenth century Isaac Newton discovered the laws of motion, gravitation and optics that enabled us to understand the workings of the inanimate world around us and the motions of the celestial bodies in remarkable detail. Newton's success was seized upon by natural theologians and religious apologists who saw the beginnings of another style of Design Argument altogether – one based not upon the outcomes of the laws of Nature but upon the form of the laws themselves. With Newton's encouragement there grew up a Design Argument based upon the evident intelligence, mathematical elegance and effectiveness of Newton's laws of Nature. A typical form of the argument would be to show that the famous inverse-square law of gravitation was optimal for the existence of a solar system. If it had been an inverse-cube or any other inverse power of distance other than two then there could not exist stable planetary periodic orbits. All planets would follow a spiral path into the Sun or escape to infinity. This type of argument is quite different from the teleological form based upon fortuitous outcomes and adaptations. It identifies the most deep-seated basis for ‘order’ in the Universe as the fact that it can be so widely and accurately described by simple mathematical laws. It then presumes that order needs an ‘orderer’.

The contrast between these two forms of the Design Argument – from laws and from outcomes – is clearly displayed by the effects of the discovery that organisms evolve by natural selection. This quickly finished the argument from outcomes as a useful explanation of anything.23 But the Design Argument based on laws was completely unaffected. Natural selection did not act upon laws of motion or forces of Nature nor, as Maxwell liked to stress, could selection alter the properties of atoms and molecules.

In retrospect, it is clear that it is possible to create a further, distinct form of the Design Argument which appeals to the particular values taken by the fundamental constants of Nature. It is this set of numbers that distinguishes our Universe from others, and fixes the resonance levels in carbon and oxygen nuclei. It would be possible for the laws of Nature that we know to take the same form yet for the constants of Nature to change their values. The outcomes would then be very different.

The fact that we can shift the values of constants of Nature in so many of our laws of Nature may be a reflection of our ignorance. Many physicists believe, like Eddington, that ultimately the values of the constants of Nature will be shown to be inevitable and we will be able to calculate them in terms of pure numbers. However, it has become increasingly clear, as we will see in later chapters, that not all of the constants will be determined in this way. Moreover, the nature of the determination for the others may have a significant statistical aspect. What may be predicted is not the value but a probability distribution that the constant take any value. There will no doubt be a most probable value but that may not be the value that we see, if only because it may characterise a universe in which observers cannot exist.

‘I do not feel like an alien in this universe. The more I examine the universe and study the details of its architecture, the more evidence I find that the universe in some sense must have known that we were coming.’

Freeman Dyson24

The general importance of Dicke's approach to understanding the Large Numbers of cosmology was first seized upon by Brandon Carter, then a Cambridge astrophysicist but now working at Meudon in Paris. Carter had learned about the Large Number coincidences by reading Bondi's student textbook of cosmology25 but had not fallen under the spell of the steady state theory that was the centrepiece of Bondi's presentation. Bondi liked to assume that because the laws of Nature must be always the same, all other gross aspects of the Universe should display the same uniformity in space and time.26 The steady state theory was based on precisely this premise – that the gross structure of the Universe is always the same on the average. Bondi confessed to not having been able to follow the calculations of Eddington in his attempts to explain the Large Numbers by means of his Fundamental Theory. By contrast, he is more outspoken about Dirac's scheme to render the gravitation constant a time variable, seeing it as a further denial of the steady state principle:

‘Dirac … is contraposed to the basic arguments of the steady-state theory, since it supposes that not only the universe changes but with it the constants of atomic physics. In some ways it may almost be said to strengthen the steady-state arguments by showing how limitless the variations are that may be imagined to arise in a changing universe.’27

As a result of considering Dicke's explanation for the inevitability of our observation of some of the Large Number coincidences, Carter saw that it was important to stress the limitations of grand philosophical assumptions about the uniformity of the Universe. Ever since Copernicus showed that the Earth should not be placed at the centre of the known astronomical world, astronomers had used the term Copernican Principle to underwrite the assumption that we must not assume anything special about our position in the Universe. Einstein had assumed this implicitly when he first searched for mathematical descriptions of the Universe by seeking solutions of his equations that ensured every place in the Universe was the same: same density, same rate of expansion, and same temperature. The steady-statesmen went one step further by seeking universes that were the same at every time in cosmic history as well. Of course, the real Universe cannot be exactly the same everywhere but to a very good approximation, when one averages over large enough regions of space, it appears to be so, to an accuracy of about one part in one hundred thousand.

Carter rejected the wholesale use of the Copernican Principle in more specific situations because there are clearly restrictions on where and when observers could be present in the Universe:

‘Copernicus taught us the very sound lesson that we must not assume gratuitously that we occupy a privileged central position in the Universe. Unfortunately there has been a strong (not always subconscious) tendency to extend this to a questionable dogma to the effect that our situation cannot be privileged in any sense.’28

Carter's emphasis upon the role of the Copernican Principle was encouraged by the fact that this presentation took the form of a lecture at an international astronomy meeting convened in Cracow to coincide with the 500th anniversary of Copernicus' birth.

Dicke's argument showed that there was good reason to expect life to come on the scene several billion years after the expansion of a Big Bang Universe began. This showed that one of the Large Numbers coincidences was an inevitable observation by such observers. This was an application of what Carter called the weak anthropic principle,

‘that what we can expect to observe must be restricted by the condition necessary for our presence as observers.’29

Later, Carter regretted using the term ‘anthropic principle’. The adjective ‘anthropic’ has been the source of much confusion because it implies there is something in this argument that focuses upon Homo sapiens. This is clearly not the case. It applies to all observers regardless of their form and biochemistry. But if they were not biochemically constructed from the elements that are made in the stars then the specific feature of the Universe that would be inevitable for them might differ from what is inevitable for us. However, the argument is not really changed if beings are possibly based upon silicon chemistry or physics. All the elements heavier than the chemically inert gases of hydrogen, deuterium and helium are made in the stars like carbon and require billions of years to create and distribute. Later, Carter preferred the term ‘self-selection principle’ to stress the way in which the necessary conditions for the existence of observers select, out of all the possible universes, some subset which allows observers to exist. If you are unaware that being an observer in the Universe already limits the type of universe you could expect to observe then you are liable to introduce unnecessary grand principles or unneeded changes to the laws of physics to explain unusual aspects of the Universe. The archetypal examples are Gerald Whitrow's discussion of the age and density of the universe30 and Robert Dicke's explanation of the Large Numbers.

Carter's consideration of the self-selecting influence of our existence upon the sort of astronomical observations we make was inspired by reading about the Large Numbers coincidences in Bondi's book. Not knowing of Dicke's arguments of 1957 and 1961, he also noticed the importance of considering the inevitability of our observing the Universe close to the typical hydrogen-burning lifetime of a typical star. He was struck by the unnecessary way that Dirac had introduced the hypothesis of varying constants to explain these coincidences:31

‘it was completely erroneous for him to have used this coincidence as a motivation for a radical departure from standard theory.

At the time when I originally noticed Dirac's error, I simply supposed that it had been due to an emotionally neutral oversight, easily explicable as being due to the rudimentary state of general understanding of stellar evolution in the pioneering era of the 1930s, and that it was therefore likely to have been already recognised and corrected by its author. My motivation in bothering to formulate something that was (as I thought) so obvious as the anthropic principle in the form of an explicit precept, was partly provided by my later realisation that the source of such (patent) errors as that of Dirac was not limited to chance oversight or lack of information, but that it was also rooted in more deep seated emotional bias comparable with that responsible for early resistance to Darwinian ideas at the time of the “apes or angels” debates in the last century. I became aware of this in Dirac's own case when I learned of his reaction when his attention was explicitly drawn to the “anthropic” line of reasoning [about the Large Number coincidences] … when it was first pointed out by Dicke in 1961. This reaction amounted to a straight refusal to accept the line of reasoning leading to Dicke's (in my opinion unassailable) conclusion that “the statistical support for Dirac's cosmology is found to be missing”. The reason offered by Dirac is rather astonishing in the context of a modern scientific debate: after making an unsubstantiated (and superficially implausible) claim to the effect that in his own theory “life need never end” his argument is summarised by the amazing statement that, in choosing between his own theory and the usual one … “I prefer the one that allows the possibility of endless life”. What I found astonishing here was of course the suggestion that such a preference could be relevant in such an argument … Dirac's error provides a salutary warning that contributes to the motivation for careful formulation of the anthropic and other related principles.’32

The weak anthropic principle applies naturally to help us understand why variable quantities take the range of values that we find them to take in our vicinity of space and time. But there exist ‘coincidences’ between combinations of quantities which are believed to be true constants of Nature. We will not be able to explain these coincidences by the fact that we live when the Universe is several billion years old, in conditions of relatively low density and temperature. Carter's suggested response to this was more speculative. If the constants of Nature can't change and are programmed into the overall structure of the Universe in a unique way then maybe there is some as yet unknown reason why there have to be observers in the Universe at some stage in its history? Carter dubbed this the strong anthropic principle, which states

‘that the Universe (and hence the fundamental parameters on which it depends) must be such as to admit the creation of observers within it at some stage.’

The introduction of such a speculation needs evidence to support it. In this case it is that there are a number of unusual apparent coincidences between superficially unrelated constants of Nature that appear to be crucial for the existence of ourselves or any other conceivable form of life. Hoyle's unusual carbon and oxygen resonance levels are archetypal examples. There are many others. Small changes in the strengths of the different forces of Nature and in the masses of different elementary particles destroy many of the delicate balances that make life possible. By contrast, if the conditions for life to develop and persist had been found to depend only very weakly on all the constants of Nature then there would be no motivation for thinking about an anthropic principle of this stronger sort. In future chapters we shall see how this idea provokes a serious consideration of the idea that there exist other ‘universes’ which possess different properties and different constants of Nature so that we might conclude that we find ourselves inhabiting one of the possible universes in which the constants and cosmic conditions have fallen out in a pattern that permits life to exist and persist – for we could not find it otherwise.

‘Do I dare

Disturb the universe?’

T.S. Eliot33

We have been saying that the values of the constants of Nature are rather fortuitously ‘chosen’ when it comes to allowing life to evolve and persist. Let's take a look at a few more examples. The structure of atoms and molecules is controlled almost completely by two numbers that we encountered in Chapter 5: the ratio of the electron and proton masses, β, which is approximately equal to 1/1836, and the fine structure constant, α, which is approximately equal to 1/137. Suppose that we allow these two constants to change their values independently and we also assume (for simplicity) that no other constants of Nature are changed. What happens to the world if the laws of Nature stay the same?

If we follow up the consequences we soon find there isn't much room to manoeuvre. Increase β too much and there can be no ordered molecular structures because it's the small value of β that ensures that electrons occupy well-defined positions around an atomic nucleus and don't wiggle around too much. If they did then very fine-tuned processes like DNA replication would fail. The number β also plays a role in the energy generation processes that fuel the stars. Here it links up with α to make the centres of stars hot enough to initiate nuclear reactions. If β falls below about 0.005α² then there would be no stars. If modern grand unified gauge theories are on the right track then α must lie in the narrow range between about 1/180 and 1/85, otherwise protons will decay long before the stars can form. The Carter condition is also shown dashed (– – –) on the picture. Its track picks out worlds where stars have convective outer regions which seem to be needed to make some systems of planets. The regions of α and β that are allowed and forbidden are shown in Figure 8.7.
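The two numerical limits quoted above can be checked directly against the observed values. The short sketch below does only that: it is not a model of stellar physics, the function names are invented for illustration, and the stellar limit is read as requiring β to stay at or above about 0.005α², an assumption chosen so that the observed values fall on the star-forming side.

```python
ALPHA_OBSERVED = 1 / 137   # fine structure constant, as quoted in the text
BETA_OBSERVED = 1 / 1836   # electron-to-proton mass ratio, as quoted in the text

def stars_can_exist(alpha, beta):
    """Assumed reading of the stellar limit: beta must be at least about 0.005 * alpha**2."""
    return beta >= 0.005 * alpha ** 2

def protons_outlive_star_formation(alpha):
    """Grand-unified window quoted in the text: alpha between about 1/180 and 1/85."""
    return 1 / 180 < alpha < 1 / 85

def failed_conditions(alpha, beta):
    """Return the list of quoted conditions that the pair (alpha, beta) violates."""
    failures = []
    if not stars_can_exist(alpha, beta):
        failures.append("no stars")
    if not protons_outlive_star_formation(alpha):
        failures.append("protons decay too soon")
    return failures

print(failed_conditions(ALPHA_OBSERVED, BETA_OBSERVED))  # our world
print(failed_conditions(1 / 50, BETA_OBSERVED))          # alpha made much larger
print(failed_conditions(ALPHA_OBSERVED, 1e-8))           # beta made much smaller
```

Only these two limits are given as numbers in the text; the other boundaries of Figure 8.7 (electron localisation, non-relativistic atoms, the Carter condition) are left out because no thresholds are quoted for them.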

If, instead of α versus β, we play the game of changing the strength of the strong nuclear force, αs, together with that of α, then unless αs > 0.3α^(1/2) the biologically vital elements like carbon would not exist and there would not be any organic chemists. They would be unable to hold themselves together. If we increase αs by just 4 per cent there is a potential disaster because a new nucleus, helium-2, made of two protons and no neutrons, can now exist34 and allows very fast direct nuclear reactions proton + proton → helium-2. Stars would rapidly exhaust their fuel and collapse to degenerate states or black holes. In contrast, if αs were decreased by about 10 per cent then the deuterium nucleus would cease to be bound and the nuclear astrophysical pathways to the biological elements would be blocked. Again, we find a rather small region of parameter space in which the basic building blocks of chemical complexity can exist. The inhabitable window is shown in Figure 8.8.
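The three limits on the strong force can be gathered into one small check. This is a sketch under stated assumptions: the text gives no numerical value for αs, so the reference value of 0.1 used below is an illustrative placeholder, and the function name is hypothetical. The 4 per cent and 10 per cent thresholds and the carbon condition are taken from the paragraph above.

```python
import math

ALPHA_OBSERVED = 1 / 137   # fine structure constant, as quoted in the text
ALPHA_S_REFERENCE = 0.1    # illustrative placeholder for today's strong coupling (assumption)

def strong_force_problems(alpha_s, alpha=ALPHA_OBSERVED, alpha_s_ref=ALPHA_S_REFERENCE):
    """List which of the three quoted limits a trial value of alpha_s violates."""
    problems = []
    # Carbon and the other biological elements need alpha_s > 0.3 * sqrt(alpha).
    if alpha_s <= 0.3 * math.sqrt(alpha):
        problems.append("no carbon")
    # An increase of about 4 per cent binds helium-2 (the diproton).
    if alpha_s >= 1.04 * alpha_s_ref:
        problems.append("diproton bound")
    # A decrease of about 10 per cent unbinds deuterium.
    if alpha_s <= 0.90 * alpha_s_ref:
        problems.append("deuterium unbound")
    return problems

print(strong_force_problems(0.100))             # reference value
print(strong_force_problems(0.105))             # 5 per cent stronger
print(strong_force_problems(0.089))             # 11 per cent weaker
print(strong_force_problems(0.100, alpha=0.2))  # much larger alpha
```

With α held at its observed value the deuterium limit is met long before the carbon limit; the carbon condition only becomes the binding one when α is also made much larger.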

The more simultaneous variations of other constants one includes in these considerations, the more restrictive is the region where life, as we know it, can exist. It is very likely that if variations can be made then they are not all independent. Rather, making a small change in one constant might alter one or more of the others as well. This would tend to make the restrictions on most variations become even more tightly constrained.

Figure 8.7 The habitable zone where life-supporting complexity can exist if the values of β and α were permitted to vary independently. In the lower right-hand zone there can be no stars. In the upper right-hand zone there are no non-relativistic atoms. In the top left zone the electrons are insufficiently localised for there to exist highly ordered self-reproducing molecules. The narrow ‘tramlines’ pick out the region which may be necessary for matter to remain stable for long enough for stars and life to evolve.35

Figure 8.8 The habitable zone where life-supporting complexity can exist if the values of αs and α are changed independently. The bottom right-hand zone does not allow essential biochemical elements like carbon, nitrogen and oxygen to exist. The top left-hand zone permits a new nucleus, helium-2, called the diproton, to exist. This provides a route for very fast hydrogen burning in stars and would probably lead them to exhaust their fuel long before conditions were conducive to the formation of planets or the biological evolution of complexity.36

These examples should be regarded as merely indications that the values of the constants of Nature are rather bio-friendly. If they are changed by even a small amount the world becomes lifeless and barren instead of a home for interesting complexity. It was this unusual state of affairs that first provoked Brandon Carter to see what sort of ‘strong anthropic’ explanations might be offered for the values of the constants of Nature.

‘I don't want to achieve immortality through my work. I want to achieve immortality through not dying. I don't want to live on in the hearts of my countrymen. I would rather live on in my apartment.’

Woody Allen37

Other more speculative anthropic principles have been suggested by other researchers. John Wheeler, the Princeton scientist who coined the term ‘black hole’ and played a major role in their investigation, proposed what he called the Participatory Anthropic Principle. This is not especially to do with constants of Nature but is motivated by the fineness of the coincidences that allow life to exist in the cosmos. Perhaps, Wheeler asks, life is in some way essential for the coherence of the Universe? But surely we are of no consequence to the far-flung galaxies and the existence of the Universe in the distant past before life could exist? Wheeler was tempted to ask if the importance of observers in bringing quantum reality into full existence may be trying to tell us that ‘observers’, suitably defined, may be in some sense necessary to bring the Universe into existence. This is very hard to make good sense of because in quantum theory the notion of an observer is not sharply defined. It is anything that registers information. A photographic plate would do just as well as a night watchman.

A fourth Anthropic Principle, introduced by Frank Tipler and myself, is somewhat different. It is just a hypothesis that should be able to be shown to be true or false using the laws of physics and the observed state of the Universe. It is called the Final Anthropic Principle (or conjecture) and proposes that once life emerges in the Universe it will not die out. Once we have come up with a suitably wide definition of life, say as information processing (‘thinking’) with the ability to store information (‘memory’), we can investigate whether this could be true. Note that there is no claim that life has to arise or that it must endure. Clearly, if life is to endure forever it must ultimately change its basis from life as we know it. Our knowledge of astrophysics tells us that the Sun will eventually undergo an irreversible energy crisis, expand, and engulf the Earth and the rest of the inner solar system. We will need to be gone from Earth by then, or to have transmitted the information needed to recreate members of our species (if it can still so be called) elsewhere. Thinking millions of years to the future we might also imagine that life will exist in forms that today would be called ‘artificial’. Such forms might be little more than processors of information with a capacity to store information for future use. Like all forms of life they will be subject to evolution by natural selection.38 Most likely they will be tiny. Already we see a trend in our own technological societies towards the fabrication of smaller and smaller machines that consume less and less energy and produce almost no waste. Taken to its logical conclusion, we expect advanced life-forms to be as small as the laws of physics allow.

In passing we might mention that this could explain why there is no evidence of extraterrestrial life in the Universe. If it is truly advanced, even by our standards, it will most likely be very small, down on the molecular scale. All sorts of advantages then accrue. There is lots of room there – huge populations can be sustained. Powerful, intrinsically quantum computation can be harnessed. Little raw material is required and space travel is easier. You can also avoid being detected by civilisations of clumsy bipeds living on bright planets that beam continuous radio noise into interplanetary space.

We can now ask whether the Universe allows information processing to continue forever. Even if you don't want to equate information processing with life, however futuristic, it should certainly be necessary for it to exist. This turns out to be a question that we can come quite close to answering. If the Universe began to accelerate a few billion years ago, as recent observations indicate, then it is likely that it will continue accelerating forever.39 It will never slow down and contract back to a Big Crunch. If so, then we learn that information processing will come to a halt. Only a finite number of bits of information can be processed in a never-ending future. This is bad news. It occurs because the expansion is so rapid that information quality is very rapidly degraded.40 Worse still, the accelerated expansion is so fast that light signals sent out by any civilisation will have a horizon beyond which they cannot be seen. The Universe will become partitioned into limited regions within which communication is possible.

An interesting observation was made along with the original proposal of the Final Anthropic Principle. We pointed out41 that if the expansion of the Universe were found to be accelerating then information processing must eventually die out. Recently, important observational evidence has been gathered by several research groups to show that the expansion of the Universe began to accelerate just a few billion years ago. But suppose the observational evidence for the present acceleration of the Universe turns out to be incorrect.42 What then? It is most likely that the Universe will keep on expanding forever but continuously decelerate as it does so. Life still faces an uphill battle to survive indefinitely. It needs to find differences in temperature, or density, or expansion in the Universe from which it can extract useful energy by making them uniform. If it relies on mining sources of energy that exist locally – dead stars, evaporating black holes, decaying elementary particles – then eventually it runs into the problem that well-worked coal mines inevitably face: it costs more to extract the energy than can be gained from it. Beings of the far future will find that they need to economise on energy usage – economise on living in fact! They can reduce their free energy consumption by spending long periods hibernating, waking up to process information for a while before returning to their inactive state. There is one potential problem with this Rip van Winkle existence. You need a wake-up call. Some physical process needs to be arranged which will supply an unmissable wake-up call without using so much energy that the whole point of the hibernation period is lost. So far it is not clear whether this can be done forever. Eventually it appears that mining energy gradients that can be used to drive information processing becomes cost ineffective. Life must then begin to die out.

By contrast, if life does not confine its attentions to mining local sources of energy the long-range forecast looks much brighter. The Universe does not expand at exactly the same rate in every direction. There are small differences in speed from one direction to another which are attributable to gravitational waves of very long, probably infinite, wavelength threading space. The challenge for super-advanced life-forms is to find a way of tapping into this potentially unlimited energy supply. The remarkable thing about it is that its density falls off far more slowly than that of all ordinary forms of matter as the Universe expands. By exploiting the temperature differences created by radiation moving along directions that expand at different rates, life could find a way to keep its information processing going.

Lastly, if the Universe does collapse back to a future Big Crunch in a finite time then the prospects at first seem hopeless. Eventually, the collapsing Universe will contract sufficiently for galaxies and stars to merge. Temperatures will grow so high that molecules and atoms will be dismembered. Again, just as in the far future, life has to exist in some abstract disembodied form, perhaps woven into the fabric of space and time. Amazingly, it turns out that its indefinite survival is not ruled out so long as time is suitably defined. If the true time on which the universe ‘ticks’ is a time created by the expansion itself then it is possible for an infinite number of ‘ticks’ of this clock to occur in the finite amount of time that appears to be available on our clocks before the Big Crunch is reached.
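A toy calculation shows how an infinite number of ‘ticks’ can fit into a finite span of ordinary time. It is an illustration only: the particular tick rate chosen below is an assumption made for simplicity and is not derived from any cosmological model. Let t be the time on our clocks, let the Big Crunch occur at t = t_c, and suppose the intrinsic clock τ runs faster and faster as the crunch approaches.

```latex
% Assumed tick rate (illustrative only): the intrinsic clock speeds up as t -> t_c.
\frac{d\tau}{dt} = \frac{t_c}{t_c - t}, \qquad 0 \le t < t_c .

% Total intrinsic time elapsed by ordinary time t:
\tau(t) = \int_0^{t} \frac{t_c}{t_c - t'}\, dt'
        = t_c \ln\!\left(\frac{t_c}{t_c - t}\right).

% As the crunch is approached the intrinsic time grows without bound:
\lim_{t \to t_c} \tau(t) = \infty .
```

Although only a finite interval t_c remains on our clocks, the intrinsic clock in this toy example registers an unlimited number of ticks before the end is reached.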

There is one last trick that super-advanced survivors might have up their sleeves in universes that seem doomed to expand forever. In 1949 the logician Kurt Gödel, Einstein's friend and colleague at Princeton, shocked him by showing that time travel was allowed by Einstein's theory of gravity.43 He even found a solution of Einstein's equations for a universe in which this occurred. Unfortunately, Gödel's universe is nothing like the one that we live in. It spins very rapidly and disagrees with just about all astronomical observations one cares to make. However, there may be other more complicated possibilities that resemble our Universe in all needed respects but which still permit time travel to occur. Physicists have spent quite a lot of effort exploring how it might be possible to create the distortions of space and time needed for time travel to occur. If it is possible to engineer the conditions needed to send information backwards in time then this offers a strategy for escape from a lifeless future for suitably ethereal forms of ‘life’ defined by information processing and storage. Don't invest your efforts in perfecting means of extracting usable energy from an environment that is being driven closer and closer to a lifeless equilibrium. Instead, travel backwards in time to an era where conditions are far more hospitable. Indeed, travel is not strictly necessary, just transmit the instructions needed for re-emergence.

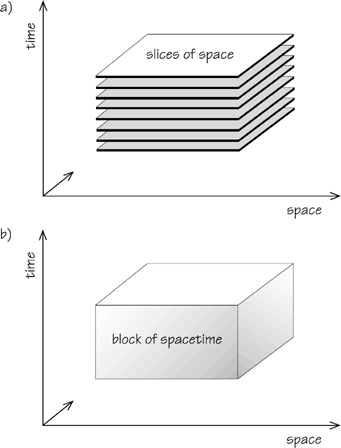

Often, people are worried about apparent factual paradoxes that can emerge from allowing backward time travel. Can't you kill yourself or your parents in infancy so that you cannot exist? All these paradoxes are impossibilities. They arise because you are introducing a physical and logical impossibility by hand. It helps to think of space and time in the way that Einstein taught us: as a single block of spacetime, see Figure 8.9.

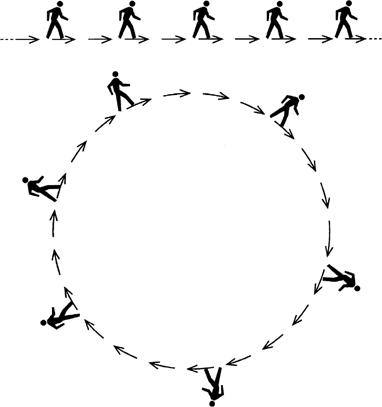

Now step outside spacetime and look in at what happens there. Histories of individuals are paths through the block. If they curve back upon themselves to form closed loops then we would judge time travel to occur. But the paths are what they are. There is no history that is ‘changed’ by doing that. Time travel allows us to be part of the past but not to change the past. The only time-travelling histories that are possible are self-consistent paths. On any closed path there is no well-defined division between the future and the past. It is like having a troop of soldiers marching one behind the other in single file. If they march in a straight line then it is clear who is in front of whom. But make them march in a circle so the previous leader follows the previous back-marker and there is no longer any well-defined sense of order in the line, as pictured in Figure 8.10.

Figure 8.9 (a) A stack of slices of space taken at different times; (b) a block of spacetime made from all the slices of space. This block could be sliced up in many ways that differ from the slicing chosen in (a).

Figure 8.10 March in a straight line and it is clear who is in front of whom. March in a circle and everyone is in front and behind everyone else.

If this type of backward time travel is an escape from the thermodynamic end of the Universe, and our Universe appears to be heading for just such a thermodynamic erasure of all possibilities for processing information, then maybe super-advanced beings in our future are already travelling backwards into the benign cosmic environment that the present-day universe affords. Many arguments have been put forward against the arrival of tourists from the future but they have a rather anthropocentric purpose in mind. It has been argued that the great events in Earth's history (events around Bethlehem in 4 BC, the Crucifixion, the death of Socrates, and so on) would become magnets for backward time travellers, creating a huge cumulative audience that was evidently not present. But there is no reason why escapees from the heat death of the Universe should visit us, let alone cause crowd control problems at critical points in our history.

My favourite argument44 against backward time travel is a financial one. It appeals to the fact that interest rates in the money markets are non-zero to argue that neither forward nor backward time travellers are taking advantage of their position to make a killing on the financial markets. If they were able to invest in the past on the basis of knowing where markets would increase in the future then the long-term result would be to drive interest rates to zero. Again, it is easy to avoid allowing this argument to rule out time travellers escaping the heat death of the Universe. One suspects, however, that financial investments might be the least of their concerns.