Chapter 12. (Artificial Intelligence)

Playing Dice of Doom against other people can be fun, but what if you could play against the program itself? What if the program were intelligent enough to win? We can make that happen with artificial intelligence (AI), that is, techniques that make programs appear as smart as people in narrow domains. In this chapter, we will make our implementation of Dice of Doom lazy and create an AI player.

|#

12.1 An Intelligent Life-form

Chad has finally beaten DrRacket’s Dicetromonom guard. In shock, Dicetromonom explodes, which gives Chad a chance to figure out what made Dicetromonom tick. He dissects Dicetromonom’s head and finds a small hard drive.

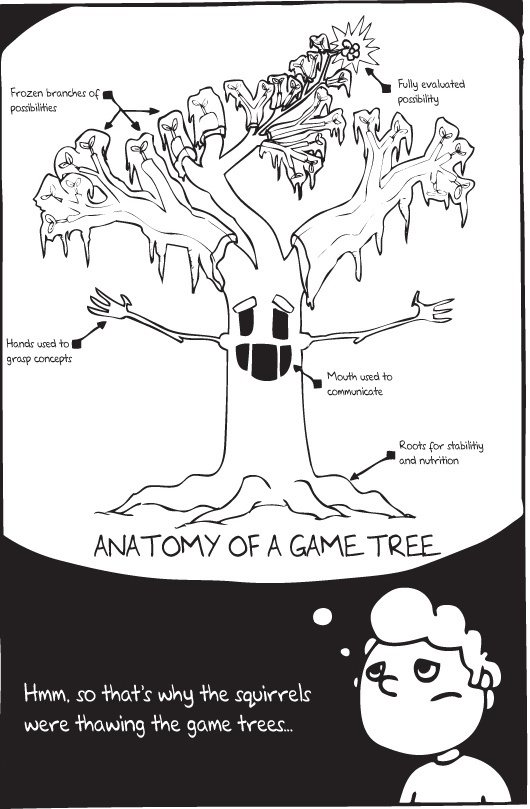

Chad plugs the drive into his computer and begins to examine the robot’s game software. He discovers a number of neat coding tricks. First, he notices that Dicetromonom can play on large game boards because it generates the game tree lazily. Second, he discovers Dicetromonom’s way of planning a game strategy, and it is amazingly simple.

12.2 Lazy Games

Recall that all our discussions of Dice of Doom in Chapter 10 involved 2×2 boards. If you tried to run the program on a larger board than that, it just wouldn’t work, unless you had access to a really great supercomputer. With the lazy evaluation trick from Chapter 11, we can easily improve the performance of our program. Laziness enables our program to play on large boards, and best of all, it saves some time.

Here’s how we use force and delay in our Dice of Doom game. First, we add delay to the game-tree function:

(define (game-tree board p dice)

;; create tree of attacks from this position; add passing move

(define (attacks board)

(for*/list ([src board]

[dst (neighbors (territory-index src))]

#:when (attackable? board p src dst))

(define from (territory-index src))

(define dice (territory-dice src))

(define newb (attack board p from dst dice))

(define gt-attack

(game newb p (delay (cons (passes newb) (attacks newb)))))

(move (list from dst) gt-attack)))

;; create a passing move and the rest of the game tree

(define (passes board)

(define-values (new-dice newb) (distribute board p dice))

(move '() (game-tree newb (switch p) new-dice)))

;; -- START: --

(game board p (delay (attacks board))))Here, we have wrapped the generation of moves in (delay ...) as indicated in two places with bold. These delays postpone the generation of all possible moves in game structs. In particular, the for loop in attacks and all direct and indirect recursive calls become suspended computations.

You may think that all we have to do is find all uses of the game-moves function and wrap them with (force ...) so that we get lists and not just suspended computations. While find-and-insert is indeed one way to apply all the requisite calls to force, in Racket there’s another way, and you will find the second one much more appropriate for this chapter. The second way is made for lazy programmers, that is, programmers who think hard and avoid manual typing on the keyboard until necessary.

The lazy way is to redefine the function game-moves once and for all. Doing so has a huge advantage: we don’t need to change the rest of the code at all. Better still, when we add code to the game, we don’t need to remember that game-moves retrieves a promise and that we need to use force to get the list from this promise. The forcing just happens automatically.

The following is the lazy way to define the game struct so that game-moves forces its field every time it is used:

(define-values (game game? game-board game-player game-moves)

(let ()

(struct game (board player delayed-moves))

(values game

game?

game-board

game-player

(lambda (g) (force (game-delayed-moves g))))))It is a mouthful of code, and it is complicated, but once you understand this trick, you will find that it is extremely useful in many situations. We will use it again in Chapter 14.

The first line in this code snippet sets up a multiple-values definition. Specifically, it defines five functions: game, game?, game-board, game-player, and game-moves. If you squint just a bit, you see that these are precisely the functions introduced by this structure definition:

(struct game (board player moves))

The second line sets up a local scope of definitions, and the third line defines the expected game struct in that scope. The function definitions that it introduces are visible only inside of (let ...). The trick is that the next expression is a values expression that bundles up game, game?, game-board, and game-player, plus one more function so that they match up with the variable names introduced by define-values.

As you can see, the last value in this bundle is a function that accepts a game tree, retrieves the delayed list of moves, and forces the promise to deliver the actual list. This function uses the locally defined game-moves and force. But because the whole bundle of values escapes the scope, this unnamed lambda function becomes the value of the globally defined name game-moves. When this function is used, it has access to the original selector, while the rest of the program accepts this lambda function as the selector. We can have our pie and eat it, too.

In short, we use the power of Racket to change a large program in two small ways to get a large change in behavior. You can mimic some aspects of laziness in other languages, but this isn’t normally one of those ideas you see early on. With Racket, however, it can become a part of your first-year repertoire.

Now you may wonder why the call to force doesn’t create the whole game tree, canceling all the benefits we get from suspending the game tree generation in the first place. To understand why it works, take a second look at game-tree. Once we force the list of moves, the next step is always to call the locally defined attacks function on board. This call will indeed create one complete level of the game—a complete list of moves—with a delay. Furthermore, because of the recursive nature of game-tree, the generation of the tree is suspended in each node attached to a move. Only the next call to force will unfold one more level passing of the game tree, but only for the moves the game needs to execute. And voilà, you have a lazy version of Dice of Doom.

Lazy Dice of Doom has two advantages over our first draft of the game. First, Lazy Dice of Doom no longer needs restrictions on the rules that make Dice of Doom a finite, terminating game. It is okay to play infinite games now, because the game tree is unfolded only as much as needed, and we never need more than a finite portion. Second, Lazy Dice of Doom is good not only for playing infinite games, but also for playing on large game boards.

Try it out. Play on a 3×3 or 5×5 board. But just playing the game by yourself is boring, even with a bigger board. Let’s make the game interesting by adding AI.

12.3 Adding Artificial Intelligence

The strategy of search and evaluate is one idea behind John McCarthy’s notion of AI. For games, this idea works amazingly well and truly invokes the perception of intelligence. Our game’s AI will search the game tree, evaluate all moves, and determine the best one. To complete the evaluations, we will use something called the minimax algorithm.

The principle behind the minimax algorithm is “what is good for me is bad for my opponent,” and vice versa. Hence, the algorithm minimizes the maximum damage an opponent can inflict during a turn. Roughly speaking, the algorithm proceeds in two steps. First, it assigns a value to each move in the tree, up to a certain level. Second, it determines the minimum of the maximal damage at each level of the tree, from the perspective of the current player. At the root of the game tree, the AI picks the move that results in the best possible outcome as far out as the algorithm can see.

To write the AI, we need a depth limit:

(define AI-DEPTH 4)

We do not want the AI to make an evaluation beyond a certain depth in the game tree. Otherwise, the AI forces every single level in the game tree, and we lose all the advantages of going lazy. For this game, we define the depth to be 4, so the AI will evaluate only four moves deep into the tree. The depth limit reduces the AI’s intelligence but without it the program would run too slowly.

Next, we should write a function that limits the evaluation of the tree. This prune-tree function would take a game tree and the desired depth of evaluation. It would then create a game structure and recursively trim each branch via a for loop.

The process could stop when the desired depth reaches 0. But we don’t write a separate tree-pruning function. Instead, we prune as we go. That is, the rating function evaluates the moves as it goes and stops evaluating when it reaches the specified depth.

As it turns out, we actually need a pair of rating functions: one for rating moves in the tree and one for rating positions. Here is the first one:

(define (rate-moves tree depth)

(for/list ([move (game-moves tree)])

(list move (rate-position (move-gt move) (- depth 1)))))The rate-moves function consumes a tree and a depth. With a for/list loop, it produces a list that pairs each move with the rating of the position that results from this move. The latter is obtained via rate-position, the function that assigns a value to each tree:

(define (rate-position tree depth)

(cond [(or (= depth 0) (no-more-moves? tree))

(define-values (best w) (winners (game-board tree)))

(if (member AI w) (/ 1 (length w)) 0)]

[else

(define ratings (rate-moves tree depth))

(apply (if (= (game-player tree) AI) max min)

(map second ratings))]))The function generates a numeric point rating for a given branch of the game tree. If the function has reached the desired depth or the state has no further moves, we’ll need to check who the winner is for the current position. If the current player isn’t among the winners of this position, we can give the position the minimum rating of 0. Otherwise, we’ll divide 1 by the number of winners to determine our rating. By doing this, we also give a meaningful rating for ties. If the player is the sole winner, the rating, according to this formula, will be the maximum value of 1. For a two-player tie, the rational result would be 1/2.

If, however, more moves are available, we’ll need to look at all the subsequent moves to decide how to rate the current position. We accomplish this by calling our rate-moves function. As per the minimax principle, we will then pick either the max or min rating of all the follow-up moves, depending on whether the move being rated is for the AI player or its opponent.

Now we can bring all of these functions together into a single function that acts as an artificially intelligent player. In this role, the-ai-plays takes a game tree and determines the best possible moves at the given tree node:

(define (the-ai-plays tree)

(define ratings (rate-moves tree AI-DEPTH))

(define the-move (first (argmax second ratings)))

(define new-tree (move-gt the-move))

(if (= (game-player new-tree) AI)

(the-ai-plays new-tree)

new-tree))The function starts by rating all possible moves from the root of the given tree to some fixed depth. The best move is determined by Racket’s higher-order argmax function, which finds the element of ratings that maximizes the output of second. So in this case, it returns the move that has the maximum rating, and then the-ai-plays creates a new game tree from it. If the current player after this move is still the AI player, then the-ai-plays continues to pick moves. Otherwise, it returns the new game tree.

All that’s left to do is link the AI into Dice of Doom. To this end, we modify the pass function to allow the AI to take over the role of the players:

(define (pass w)

(define m (find-move (game-moves (dice-world-gt w)) '()))

(cond [(false? m) w]

[(or (no-more-moves? m) (not (= (game-player m) AI)))

(dice-world #f (game-board m) m)]

[else

(define ai (the-ai-plays m))

(dice-world #f (game-board ai) ai)]))This new version calls the AI when it is the AI’s turn; otherwise, the function just passes the turn normally.

We now have a fully functional game that employs an AI. Try it out!

Stop-when—Chapter Checkpoint

In this chapter, we used lazy evaluation to improve Dice of Doom, and then we created an AI player so that you can play against the program:

Lazy evaluation allows us to make the game more efficient so that it can handle larger game boards by ignoring branches of the game tree until they are absolutely needed.

We used a simple minimax algorithm to create an AI player.

Finally, you learned how to define structures that implement more than just the built-in constructors, accessors, and predicates.

Chapter Challenges

Easy. Make the AI step through its moves. Right now, it executes all of its moves at lightning speed, leaving players slightly dazed by the crushing blows that it inflicts. It will be easier for the player to understand if the AI executes its moves step-by-step instead of all at once.

Medium. Create a hint feature. When you press a certain key, a good enemy territory will be highlighted as a suggested attack. Hint: This will require you to use some of the pieces of the AI for the human player.

Difficult. Search the Web for other AI algorithms that an automated Dice of Doom player could employ against you. Then turn the new player loose on your friends.