Maurice Allais was barely three years old when his father was called to military service in August 1914 to fight in World War I. The little French boy would never see him again; captured by the Germans, he died in captivity seven months later. The loss marked the boy not only in his youth, but for his entire life.

Allais was born into a typical French working-class family: his maternal grandfather had been a carpenter, his father a cheesemonger with a little shop in Paris. After his father’s death, his mother raised Maurice while hovering on the brink of poverty. The boy showed early signs of exceptional gifts. He excelled in high school, usually being the first in his class in almost all subjects, be they French, Latin, or mathematics. But it was history that fascinated him. Allais first intended to study that subject upon graduation but was dissuaded by his math teacher, who convinced him that with talents such as his he should turn to science. The French system of higher education is sometimes decried as elitist, but it is a tribute to it that gifted young people need not be well-to-do or have the right contacts to enter the leading institutions. Allais gained entry to the elite and highly competitive École Polytechnique in 1931 and graduated first in his class two years later.

Upon graduation, the young man visited the United States. It was 1933, and that country was in the midst of the Great Depression. Aghast at the devastation he saw, he decided that he needed to understand how such events could occur. It was the sight of “a graveyard of factories” that led him to study economics. “My motivation was an idea of being able to improve the conditions of life, to try to find a remedy to many of the problems facing the world. That’s what led me into economics. I saw it as a way of helping people.”1

Back in France, high-level positions in governmental administration beckoned, but like all graduates of the École Polytechnique, Allais had to complete a year of military service first. He did so in the artillery corps and then completed two further years of study toward an engineering degree at the École Nationale Supérieure des Mines in Paris. In 1937, the newly minted, twenty-six-year-old mining engineer was put in charge of mines and quarries, as well as the railway system, in five of France’s eighty-nine départements. But soon World War II was in full swing, and Allais was called back to military service. As a lieutenant, he was given command of a heavy artillery battery on the Italian front, but after the fall of Paris to the Germans, the war with Italy lasted only two weeks, from June 10 to June 25, 1940. Following the armistice, Allais was demobilized and returned to his former position in German-occupied France.

It was during the last years of the war, and in the three years following it, that Allais formulated his thinking about the economy, all the while carrying out his administrative functions. Largely self-taught, and working eighty hours a week, Allais published his first two fundamental works, À la Recherche d’une Discipline Économique (In quest of an economic discipline, 1943) and Économie et Intérêt (Economy and interest, 1947), as well as three minor pieces and various news articles. He had decided to write and publish all his works in French, his native tongue, a choice that would prove fateful for his career.

In 1948, he was excused from administrative duties and henceforth concentrated exclusively on research, teaching, and scientific publication. In addition to being a professor of economic analysis at the École Nationale Supérieure des Mines from 1944 on, and the director of a research unit at the Centre National de la Recherche Scientifique (CNRS) from 1946 on, he held teaching positions at other institutions, such as the institute of statistics at the University of Paris (1947–1968), the Thomas Jefferson Center at the University of Virginia (1958–1959), the Graduate Institute of International Studies in Geneva (1967–1970), and again the University of Paris (1970–1985).

His many contributions to economic science derived essentially from the epiphany he had had during his visit to the United States as a student. He sought to find solutions to the fundamental problem of any economy: how to maximize economic efficiency while ensuring an acceptable distribution of income. “Thus, my vocation as an economist was not determined by my education, but by circumstances. Its purpose was to endeavour to lay the foundations on which an economic and social policy could be validly built.”2

The numerous honors for his scientific work were crowned with the award of the Nobel Prize in Economics in 1988, for his “pioneering contributions to the theory of markets and efficient utilisation of resources.” Allais was seventy-seven years old, and many observers wondered why it had taken the Nobel committee so long to honor this French economist, especially since other winners—Paul Samuelson in 1970, John Hicks and Kenneth Arrow in 1972, and Robert Solow in 1987—had been awarded the prize for work that Allais had already done, or for which he had laid the groundwork. Even a student of his, Gérard Debreu, had received the Nobel Prize five years before. Why was that? The reason was simple: While the lingua franca in economics was English, as in all sciences, Allais had written his work in French. Had his early publications been available in English, “a generation of economic theory would have taken a different course,” according to Paul Samuelson.3

Allais’s interests also included applied economics (namely, economic management, taxation, the distribution of income, monetary policy, energy, transportation, and mining). Motivated by his studies of the factors of economic development, the liberalization of international trade, and the monetary conditions of international economic relations, he took an active part in various organizations, such as the European Union of Federalists, the European Movement, the Movement for an Atlantic Union, and the European Economic Community. He was a rapporteur at many international conferences aimed at establishing the North Atlantic Treaty Organization (NATO) and the European Community.

He was opposed, however, to the creation of a common European currency—not because he disapproved of the euro as such, but because he thought such a step must be preceded by a full political union of the European nations. And even though he was one of the founding members of the prestigious liberal think tank Société du Mont Pélerin, he refused to sign the constituting document because in his opinion, too much weight was given to private property rights.

Surprisingly, economics was not the only scientific field in which Allais made his mark. Trained as a scientist, he remained fascinated by physics and performed experiments in the early summer of 1954 that produced baffling results. Over a period of thirty days, he recorded the movement of a specially designed “paraconical pendulum.” While the measurements were in progress, a total solar eclipse occurred, and at the very moment that the Moon passed in front of the Sun, the pendulum sped up.

This result, which has since been reproduced during about twenty solar eclipses, was extremely puzzling and remains unexplained to this day. One possible explanation is that the Moon, when it passes in front of the Sun, either absorbs or bends gravitational waves. That would imply that space manifests different properties along different axes due to motion through an ether that is partially influenced by planetary bodies. However, the theory of an ether has been debunked since 1887. Or has it? Did Allais’s experiment revive the discredited theory, thereby also putting into doubt parts of Einstein’s theory of relativity? The latter would have pleased Allais, who was convinced that Einstein had plagiarized the work of Henri Poincaré.4 Had Allais also found a convincing explanation for what would become known as the Allais effect, it would perhaps have warranted another Nobel Prize, this time in physics.5

It is one of Allais’s early works, dating back to 1952, that is of interest here. In previous works, Allais had proved that equilibrium in a market economy is equivalent to maximum efficiency. Now he wanted to extend that theory to an economy with uncertainty.

John von Neumann and Oskar Morgenstern had published the second edition of Theory of Games five years earlier, including the proof that adherence to their four axioms was a necessary and sufficient condition for a person to possess a utility function. And according to them, any rational operator must maximize the mathematical expectation of his or her utility.

To Allais, this stance was unacceptable because it neglected the fact that human decision-makers were…well, human. He concluded that mathematical expectation could not be the central factor when making decisions; the crucial element had to be psychological. It is the probability distribution of psychological values around their mean, not the probability distribution of utilities around their mean, that represents the fundamental psychological element of the theory of risk.

In stipulating a utility function that increases at a decreasing rate, Daniel Bernoulli had already taken into account human psychology to a large degree. Later on, von Neumann and Morgenstern, as well as Milton Friedman, Leonard Savage, and Harry Markowitz, had advanced this theory, and Frank Ramsey and Savage had postulated that even probability—an objective measure if ever there was one—had to be considered from a psychological perspective. But Allais took an immense step further.

To test the underlying assumptions of the theory of expected utility, particularly its axioms, he devised an intricate experiment with about 100 subjects, all of them well versed in the theory of probability. By any reasonable standard, they would be considered to behave rationally. Allais asked them two questions. While reading them, please ask yourself which answers you would give. The first question was:

Which of the following situations do you prefer?6

(a) $1 million for sure,

or

(b) 10 percent chance of receiving $5 million,

89 percent chance of getting $1 million, and a

1 percent chance of getting nothing.

Which of the two situations would you, the reader, prefer?

Most of Allais’s subjects answered that they preferred (a), the $1 million for sure. Then Allais asked the second question:

Which lottery do you prefer:

(c) 11 percent chance of getting $1 million, and

89 percent chance of getting nothing,

or

(d) 10 percent chance of getting $5 million, and

90 percent chance of getting nothing.

Did you ask yourself which you would prefer?

Most of the respondents preferred the slightly lower probability of obtaining a substantially higher prize—they preferred (d) over (c).

Surprise, surprise: the choices preferred by the majority—(a) over (b) and (d) over (c)—contradict the “independence of irrelevant alternatives,” the notorious axiom of von Neumann and Morgenstern, and of Savage…and of anybody else who pretends to think “rationally.”

How could this be so? Since this is not immediately obvious, let us rewrite situation (a) as lottery (a′), noting that “for sure” is equal to 89 percent plus 11 percent. Hence, the decision is between

(a′) 11 percent chance of getting $1 million,

89 percent chance of getting $1 million,

or

(b′) 10 percent chance of receiving $5 million,

89 percent chance of getting $1 million,

1 percent chance of getting nothing.

Because “89 percent chance of getting $1 million” is common to both lotteries, this part of the lottery is irrelevant and, by von Neumann and Morgenstern’s fourth axiom, should be ignored. Hence, the question Allais asked boils down to

(a′′) 11 percent chance of $1 million,

or

(b′′) 10 percent chance of receiving $5 million,

1 percent chance of getting nothing

Allais’s interviewees, and most probably you, the reader, as well, preferred (a′′) to (b′′). Note that this choice does not maximize the monetary outcome, but it can be explained by decreasing marginal utility of wealth (i.e., aversion to risk).

So far so good, so now, let’s turn to (c′) versus (d′). We write these as

(c′) 11 percent chance of getting $1 million, and

89 percent chance of getting nothing,

or

(d′) 10 percent chance of getting $5 million, and

89 percent chance of getting nothing, and

1 percent chance of getting nothing.

This time, it is the “89 percent chance of getting nothing” that is common to both lotteries and is irrelevant. It should be ignored. Hence, Allais’s second question can be reduced to

(c′′) 11 percent chance of getting $1 million,

or

(d′′) 10 percent chance of getting $5 million, and

1 percent chance of getting nothing

Most interviewees preferred (d′′) to (c′′). Have you, perceptive reader, noticed that (a′′) and (b′′) are exactly the same as (c′′) and (d′′)? Nevertheless, very many people prefer (a′′) over (b′′), but at the same time they prefer (d′′) to (c′′). What a paradox! By adding “89 percent chance of getting $1 million” to both lotteries, people prefer (a) over (b). But by adding “89 percent chance of getting nothing” to both lotteries, they prefer (d) over (c).
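The contradiction can also be written out directly. What follows is only a sketch, under the assumption that the subject maximizes expected utility for some utility function u; the shorthand u(0), u(1), and u(5) for the utilities of nothing, $1 million, and $5 million is notation introduced here for illustration, not Allais’s own.

```latex
\begin{align*}
(a) \succ (b) &\iff u(1) > 0.10\,u(5) + 0.89\,u(1) + 0.01\,u(0) \\
              &\iff 0.11\,u(1) > 0.10\,u(5) + 0.01\,u(0), \\[4pt]
(d) \succ (c) &\iff 0.10\,u(5) + 0.90\,u(0) > 0.11\,u(1) + 0.89\,u(0) \\
              &\iff 0.10\,u(5) + 0.01\,u(0) > 0.11\,u(1).
\end{align*}
```

The two final inequalities are exact opposites. Whatever values are assigned to u, and however risk averse the subject may be, no expected-utility maximizer can give both of the majority answers.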

Another way to recognize this paradox is to compute the expected payouts. The expected value of gamble (a) is $1 million, while it is $1.39 million for (b); the expected value of (c) is $110,000, and $500,000 for (d). So someone who simply maximized expected monetary value would prefer (b) over (a), and (d) over (c). In the first gamble, the less risky choice is preferred over a higher expected payout, while in the second gamble, a higher expected payout is preferred over a less risky choice.
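The arithmetic behind these four figures is easy to check. The following is a minimal sketch in Python; the names `LOTTERIES` and `expected_value` are illustrative choices made here, and chances are stored as whole percentages so that the results come out as exact integers.

```python
# Each lottery is a list of (percent chance, prize in dollars) pairs.
# Whole-number percentages keep the arithmetic exact (no float rounding).
LOTTERIES = {
    "a": [(100, 1_000_000)],
    "b": [(10, 5_000_000), (89, 1_000_000), (1, 0)],
    "c": [(11, 1_000_000), (89, 0)],
    "d": [(10, 5_000_000), (90, 0)],
}


def expected_value(lottery):
    """Return the expected payout of a lottery in dollars."""
    # Guard against a mistyped lottery whose chances do not cover 100 percent.
    assert sum(pct for pct, _ in lottery) == 100, "chances must sum to 100"
    return sum(pct * prize for pct, prize in lottery) // 100


for name, lottery in LOTTERIES.items():
    print(f"({name}) expected payout: ${expected_value(lottery):,}")
```

Run as is, the loop prints one line per lottery with its expected payout in dollars.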

One of the people to fall into this trap was Leonard Savage himself. Allais organized a conference in May 1952 in Paris, called “Foundations and Applications of the Theory of Risk Bearing.” The “Who’s Who” of economic theory—everyone who was anyone—attended. Of course, Friedman (Nobel Prize 1976) and Savage were there, as were Ragnar Frisch (Nobel Prize 1969), Paul Samuelson (Nobel Prize 1970), Kenneth Arrow (Nobel Prize 1972), and many other luminaries.

Over lunch, Allais presented Savage with his questionnaire. The statistician, as knowledgeable about rational decision-making as anybody in the world, considered the situations…and promptly chose (a) and (d). Once Allais pointed out his “irrationality,” Savage was deeply disturbed—he had violated his own theory! Allais, on the other hand, was jubilant. By challenging the axiom of “independence of irrelevant alternatives,” his lunchtime quiz had thrown what he somewhat dismissively called the “American School of rational decision theory,” so ably developed by von Neumann, Morgenstern, and Savage, into turmoil.

Allais explained the paradox with the apparent preference for security in the neighborhood of certainty, a profound psychological, if supposedly irrational, reality. As his experiment showed, it superseded the fourth axiom, the independence of irrelevant alternatives.

As there was no room for such lack of rationality in the theory of expected utility, something had to give. “If so many people accept this axiom without giving it much thought,” Allais wrote, “it is because they do not realize all the implications, some of which—far from being rational—may turn out to be quite irrational in certain psychological situations.”7 The rational human, behaving according to expected utility theory when faced with risk, simply does not exist.

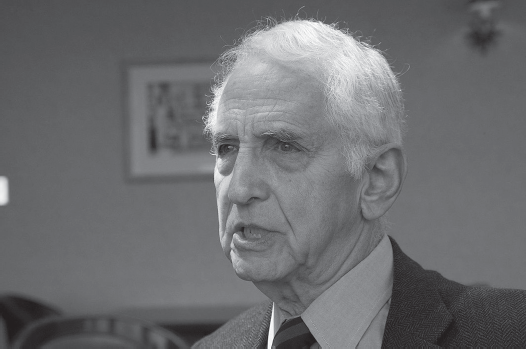

Several years later, expected utility theory was dealt a further blow with another paradox. This part of the story is linked to a historic event in 1971—the notorious leak of the so-called Pentagon Papers to The New York Times, the Washington Post, and more than a dozen other news outlets. The Papers, a study commissioned by the U.S. secretary of defense, Robert McNamara, about the American military involvement in Vietnam, noted—among many other deeply embarrassing details—how the war in Vietnam was expanded to Cambodia and Laos without informing the American people, how several U.S. administrations misled Congress, and how several presidents had actually lied to the public. The study was classified as top-secret, and only fifteen copies were made. Two of the copies were given to the RAND Corporation, the think tank in California where Kenneth Arrow had spent summers in the late 1940s. The content raised the profound suspicion of an associate by the name of Daniel Ellsberg (figure 10.1).

FIGURE 10.1: Daniel Ellsberg.

Source: Wikimedia Commons; photo by Christopher Michel.

Born in 1931 in Chicago, Ellsberg was a highly intelligent boy. His parents were of Jewish descent but had become devout Christian Scientists. His father, an engineer, was unemployed for some time due to the Great Depression but eventually got a job at a renowned engineering firm in Michigan. Family friends remembered the Ellsberg household as cold, with Christian Science front and center. The family prayed every morning, studied a weekly Bible lesson, joined Wednesday night meetings, and attended church every Sunday. The boy excelled at school, but his mother foresaw a career for him as a concert pianist and drove him accordingly. She pushed him to practice six to seven hours a day on weekdays and twelve hours on Saturdays. But when a renowned piano teacher declined to take him on as a student, she was devastated. So great was the maternal pressure that Ellsberg would exclaim later in life, “I’ve done my time in hell.”8

But all plans were cut short after a terrible car accident when he was fifteen years old. On their way from Michigan to a family event in Denver, the father, overtired from hours of driving, fell asleep at the steering wheel and crashed into a concrete bridge. He and his son survived, but the mother died instantly, and Ellsberg’s younger sister succumbed to her injuries several hours later. Daniel himself was in a coma for thirty-six hours. When he came to, he showed little emotion. It was as if the death of his mother had taken pressure off the boy. One of the first thoughts that allegedly came to him when he awoke from his coma was that he would no longer have to practice the piano. No wonder he felt the need to turn to psychoanalysis in later life, a decision that would prove to have unintended consequences.

He had no difficulty getting accepted to the college of his choice, Harvard University, and obtained a Pepsi-Cola scholarship to study economics there. After graduating summa cum laude, he spent a year on a Woodrow Wilson Scholarship at the University of Cambridge. In 1954, he joined the Marine Corps and was discharged as a first lieutenant after serving for three years as a platoon leader and company commander, including six months with the Sixth Fleet during the Suez Crisis. Then it was back to Harvard for three years of independent graduate study as a Junior Fellow. In 1962, he earned a PhD in economics at Harvard with a thesis called Risk, Ambiguity, and Decision.

While writing his doctoral thesis at Harvard, Ellsberg was employed as a strategic analyst at the RAND Corporation. The choice was not fortuitous because the think tank—at the forefront of the emerging field of decision theory—provided an extremely stimulating intellectual environment. More than thirty Nobel Prize winners have been associated with it to date. But even in the heady environment at RAND, Ellsberg stood out. An officer in the Marines with a Harvard PhD and several papers published in prestigious scientific journals, he was the ideal recruit. Working on classified research that required clearances even higher than top secret, he became acquainted with the processes behind high-level decision-making, such as whether to initiate nuclear war. It was no pretty discovery. What he realized, to his shock, was “that the danger of nuclear war did not arise from the likelihood of surprise attack by either side but from possible escalation in a crisis.” This knowledge became “a burden…that has shaped my life and work ever since.”9

Working on an Air Force contract, he became a consultant to the Commander-in-Chief Pacific, then to the Departments of Defense and State, and finally to the White House, specializing in problems of the command and control of nuclear weapons, nuclear war plans, and crisis decision-making. In October 1962, at the onset of the Cuban Missile Crisis, he was called to Washington, and for the next week served on two of the three working groups reporting to the Executive Committee of the National Security Council. A later assignment had him assist on secret plans to escalate the war in Vietnam, although he personally regarded them as wrongheaded and dangerous.

He volunteered to serve in Vietnam, transferring to the State Department in mid-1965. Based at the U.S. embassy in Saigon for two years, Ellsberg’s assignment was to evaluate pacification efforts on the front lines. Relying on his Marine training, he accompanied troops to combat in order to see the hopeless war from up close. In the process, he contracted hepatitis, probably on an operation in the rice paddies.

Back at RAND, he worked on the top-secret McNamara study of “U.S. Decision-making in Vietnam, 1945–68,” which later came to be known as the Pentagon Papers. This study taught him about the continuous record of governmental deception and unwise decision-making, cloaked by secrecy, under four presidents: Harry S. Truman, Dwight D. Eisenhower, John F. Kennedy, and Lyndon B. Johnson. What was worse, he “learned from contacts in the White House that this same process of secret threats of escalation was underway under a fifth president, Richard M. Nixon.” His conclusion was that “only a better-informed Congress and public might act to avert indefinite prolongation and further escalation of the war.” After meeting conscientious objectors who refused to be drafted to fight in what they saw to be a wrongful war, even at the risk of going to prison, Ellsberg asked himself, “what could I do to help shorten this war, now that I’m prepared to go to prison for it?”

In the fall of 1969, he reached a momentous decision: He photocopied the entire 7,000-page McNamara study and handed it to the chairman of the Senate Foreign Relations Committee, Senator William Fulbright. The senator sat on it without publicizing it, fearing executive reprisal, and in February 1971, the exasperated Ellsberg decided to leak it: first to The New York Times, then to the Washington Post, and then to seventeen other newspapers. He was sure that he would go to prison for the rest of his life.

The Nixon administration sued to stop the publication of the Pentagon Papers, and the case soon reached the Supreme Court. Citing the First Amendment, the justices ruled 6‒3 to uphold the newspapers’ right to publish the Papers. Frustrated, the administration tried to cast doubt on the leaker’s credibility or otherwise squelch him. Agents acting on the orders of the Nixon administration—they called themselves “the plumbers” because their job was to “plug leaks”—broke into the office of Ellsberg’s psychoanalyst and tried to steal the doctor’s files on him. Presumably, they hoped either to blackmail him or to smear him so that any future revelations would not be believable. They found nothing because the doctor kept Ellsberg’s file under a fake name. There were even plans to physically incapacitate or kill the whistleblower.

Ellsberg faced prosecution on twelve federal felony counts. The indictments carried a maximum sentence of 115 years in prison. This courageous and brilliant young man, with prospects of rising to the highest rung of academia or administration, was willing to risk his career, freedom, and even his life in order to expose the government’s deception of its people. Charged with stealing and holding secret documents, he was about to give it all up. But he never wavered. “I felt that as an American citizen, as a responsible citizen, I could no longer cooperate in concealing this information from the American public. I did this clearly at my own jeopardy and I am prepared to answer to all the consequences of this decision,” he said in a public statement.10

Ellsberg was lucky. During the trial, the judge revealed that representatives of the government had attempted to bribe him (the judge) by offering him the directorship of the Federal Bureau of Investigation (FBI). When, on top of all this, the prosecution claimed that the records of illegal wiretaps had been lost, the judge had had enough. He declared a mistrial and all charges were dismissed “with prejudice,” which meant that Ellsberg could not be prosecuted again for his alleged crimes.

Several years before he became notorious for the Pentagon Papers, Ellsberg had made a name for himself in a totally different context. While still a Fellow at Harvard, he published a paper that demonstrated problems with the way in which people handle probabilities. “Risk, Ambiguity, and the Savage Axioms,” published in the Quarterly Journal of Economics, is considered a landmark in the theory of decision-making.

Following in Allais’s footsteps, Ellsberg concocted an experiment. It went like this: suppose that you have an urn that contains ninety balls. Thirty are red, and the other sixty are either blue or green. You do not know how many are green or how many are blue; there may be zero green balls and 60 blue balls, one green and 59 blue, or the other way around, or anything in between. If you guess correctly which ball you draw, you win a prize. But before you draw, you must answer a question: Would you prefer to bet on a red ball or a green ball?11

There’s no correct answer, of course, and some people would pick green, others would pick red. Then those who answer “red” are asked a second question: Would you prefer to bet that it will be “red or blue,” or that it will be “green or blue”? Again, there is no correct answer but many choose “green or blue.”

The latter group—be it a majority or just many people—presents a paradox: “If you are in [this group], you are now in trouble with the Savage axioms,” said Ellsberg.12 Let’s see why:

The urn contains thirty reds, and sixty other balls that are either blue or green, in some unknown combination.

Does Bertha prefer to bet on a red or on a green? If she opts for red, as some people surely do, she obviously believes that there are fewer than thirty greens in the urn. Hence, by her own belief, the urn must contain more than thirty blues.

Now, does Bertha prefer to bet on “red or blue” or on “green or blue”? If she opts for “green or blue,” as some people would, she is irrational. Why? Well, she knows that there are thirty reds, and, as she indicated by her answer to the first question, she believes that there are more than thirty blues. Hence, she should have opted for “red or blue,” which, according to her belief, will include more than sixty balls.

Note that when the choice was between red and green, she opted for red. When the choice was between “red or blue” on the one hand and “green or blue” on the other, she opted for the latter. Simply adding “or blue” to the alternatives made Bertha reverse her decision, thus acting against her own belief!
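Bertha’s predicament can be checked by brute force. The sketch below assumes, for simplicity, that she holds a definite belief about the number of green balls; the function names are hypothetical and chosen here only for illustration. It runs through every possible composition of the urn and looks for one under which both of her choices would be the better bet.

```python
RED = 30     # known number of red balls
OTHERS = 60  # blue plus green balls; the split is unknown


def red_beats_green(green):
    """True if a bet on red covers more balls than a bet on green."""
    return RED > green


def green_or_blue_beats_red_or_blue(green):
    """True if 'green or blue' covers more balls than 'red or blue'."""
    blue = OTHERS - green
    return green + blue > RED + blue


# Compositions under which BOTH of Bertha's choices are the better bet.
consistent = [
    green
    for green in range(OTHERS + 1)
    if red_beats_green(green) and green_or_blue_beats_red_or_blue(green)
]
print(consistent)  # → []
```

The list comes back empty: the first choice requires fewer than thirty greens, the second more than thirty. If Bertha’s belief is instead spread over several compositions, the same argument should go through with her expected number of green balls in place of a single count.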

This is quite amazing. Ramsey had already established the underpinnings of a theory of subjective probabilities. But Ellsberg showed that people behave irrationally, even given their own assessment of probabilities.

The underlying reason for such a situation is that Bertha violated the axiom of the independence of irrelevant alternatives. A rational person, no matter what her belief about the number of green balls, should compare “red or blue” to “green or blue” in the same manner as she compares red to green. The phrase “or blue” is an irrelevant alternative and should not influence her choice, just like adding “89 percent chance of getting $1 million” to both alternatives in Allais’s experiment should not influence that decision. It’s déjà-vu all over again.

Ellsberg then took his colleagues to task, many of them more senior than he, either because they had taken part in his experiment and failed it, or because of their theoretical work. “There are those who do not violate the axioms, or say they won’t, even in these situations…. Some violate the axioms cheerfully, even with gusto…others sadly but persistently, having looked into their hearts, found conflicts with the axioms and decided…to satisfy their preferences and let the axioms satisfy themselves. Still others…tend, intuitively, to violate the axioms but feel guilty about it and go back into further analysis…. A number of people who are not only sophisticated but reasonable decide that they wish to persist in their choices.” This last group, Ellsberg notes, includes people who felt committed to the axioms and were surprised (even dismayed) to find that they wished to violate them.

Ramsey and Savage had surmised that probabilities cannot be considered objective, but Ellsberg proved that even subjective probabilities do not add up. Are people who behave in that manner irrational? That depends on the definition of rational. In any case, their behavior is inconsistent with expected utility theory. Ellsberg thought that their choices were motivated by ambiguity aversion: People seem to prefer taking on risk in situations where they know the odds, rather than when the odds are completely ambiguous. When they choose “green or blue,” they know that there are sixty balls; had they opted for “red or blue,” there could have been anywhere between thirty and ninety.13 But call it whatever you want, “ambiguity aversion,” as did Ellsberg, or “preference for security in the neighborhood of certainty,” as did Allais, people’s behavior is irrational according to the axioms of game theory. Allais’s paradox exhibits a shortcoming of subjective utility, while Ellsberg’s paradox demonstrates the inadequacy of subjective probability. Both failings are grounded in the violation of the axiom of the independence of irrelevant alternatives.
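The ambiguity-aversion reading can be made concrete by counting, for each of Ellsberg’s four bets, the smallest and largest number of winning balls the urn might hold. This is an illustrative sketch only; `BETS` and `winning_range` are names invented here.

```python
RED, OTHERS = 30, 60  # 30 red balls; 60 blue or green in an unknown split

# For each bet: number of winning balls, given the number of green balls.
BETS = {
    "red": lambda green: RED,
    "green": lambda green: green,
    "red or blue": lambda green: RED + (OTHERS - green),
    "green or blue": lambda green: OTHERS,
}


def winning_range(bet):
    """Return (fewest, most) winning balls over all possible compositions."""
    counts = [BETS[bet](green) for green in range(OTHERS + 1)]
    return min(counts), max(counts)


for bet in BETS:
    low, high = winning_range(bet)
    print(f"{bet}: between {low} and {high} winning balls")
```

The two bets that many people favor, “red” and “green or blue,” are exactly the ones whose range collapses to a single number (thirty and sixty, respectively), whereas “green” and “red or blue” leave the odds open.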