In our very first chapter, we emphasized that computing is application-specific and is a technique to calculate any measurable entity across multi-disciplinary fields. These calculations are meant to solve actual computational problems in a real-world scenario, which is why our focus is on computational problem solving, catering to the prime objective of computing.

Computing: The answer to every computational problem lies in its computed solution.

Accelerated computing: A computationally intensive problem requires an accelerated solution.

Let's understand how PyCUDA can help us solve a myriad of computational problems based on GPU computations via Python, with or without CUDA-C/C++ code.

Following our first C++ versus CUDA example, we will now look into a very simple Python versus PyCUDA example with a similar approach, hands-on with PyCharm. Following this, we'll shift our focus toward actual GPU-accelerated computations to solve specific computational problems with PyCUDA.

Perform the following steps to create a simple program in Python that will initialize two arrays with values and then calculate a third array whose values will the the product of corresponding elements in the two arrays, using the PyCharm IDE:

- Launch PyCharm as illustrated earlier and create a new empty Python file:

- Now use the following code. We again try to use 500 million elements with double precision:

import numpy as np

from timeit import default_timer as timer

N = 500000000 #500 Million Elements

# CPU Function to multiply two array elements and also update the results on the second array

def multiply(p_cpu, q_cpu):

for i in range(N):

q_cpu[i] = p_cpu[i] * q_cpu[i]

def main():

#Initialize the two arrays of double data type all with 0.0 values upto N

p = np.zeros(N, dtype=np.double)

q = np.zeros(N, dtype=np.double)

#Update all the elements in the two arrays with 23.0 and 12.0 respectively

p.fill(23.0)

q.fill(12.0)

default_timer is used to compute the CPU computation time:

#Time the CPU Function

begin = timer()

multiply(p, q)

numpy_cpu_time = timer() - begin

#Report CPU Computation Time

print("CPU function took %f seconds." % numpy_cpu_time)

#Choose a random integer index value between 0 to N

random = np.random.randint(0, N)

#Verify all values to be 276.0 for second array by random selection

print("New value of second array element with random index", random, "is", q[random])

if __name__ == "__main__":

main()

- Right-click over the code and select Run 'conventional_python_...':

Python does not have built-in array support and that is why we import the numpy library for this. This time, we find that the CPU computation time is many times higher than we previously saw on C++ in the first section of this chapter. It took 114.381630 seconds:

cProfile is a command-line-based Python profiler similar to nvprof in CUDA. With the following command, you can profile your complete Python code inclusive of GPU-based allocations and look up the CPU+GPU computation time:

python -m cProfile -s cumulative your_program.py | less

To exit from the interface, press Q.

For our PyCUDA version of the same code we used in the preceding code, we choose a version that includes minimal C code:

import pycuda.autoinit

import pycuda.driver as cudadrv

import numpy

import pycuda.gpuarray as gpuarray

from pycuda.elementwise import ElementwiseKernel

# Here, we multiply the two values and update all the elements of the second array with the new product

# Note that we pass C syntax into the ElementwiseKernel

multiply = ElementwiseKernel (

"double *a_gpu, double *b_gpu",

"b_gpu[i] = a_gpu[i] * b_gpu[i]",

"multiply")

N = 500000000 # 500 Million Elements

a_gpu = gpuarray.to_gpu(numpy.zeros(N).astype(numpy.double))

b_gpu = gpuarray.to_gpu(numpy.zeros(N).astype(numpy.double))

The following code snippet assigns values to all the elements of the two arrays, calculates and stores their product using the multiply function, and then prints the results and time taken:

a_gpu.fill(23.0)

b_gpu.fill(12.0)

begin = cudadrv.Event()

end = cudadrv.Event()

# Time the GPU function

begin.record()

multiply(a_gpu, b_gpu)

end.record()

end.synchronize()

gpu_multiply_time = begin.time_till(end)

random = numpy.random.randint(0,N)

# Randomly choose index from second array to confirm changes to second array

print("New value of second array element with random index", random, "is", b_gpu[random])

# Report GPU Function time

print("GPU function took %f milliseconds." % gpu_multiply_time)

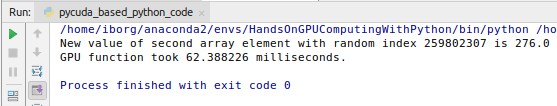

The GPU function now computes the same operation in 62.38826 milliseconds:

First, we import autoinit from PyCUDA, which automatically performs all the steps necessary to get CUDA ready to submit the NVIDIA GPU compute kernels. The driver module is imported from PyCUDA to calculate the GPU computation time. PyCUDA's own gpuarray module is imported to initialize the arrays with the GPU. As per the official PyCUDA documentation, evaluating involved expressions on GPUArray instances can be somewhat inefficient, because a new temporary variable is created for each intermediate result. So, we import the elementwise module from PyCUDA, which contains tools to help generate kernels to evaluate multi-stage expressions on one or several operands in a single pass.

To understand this a bit better, let's remove the elementwise kernel and try a similar operation but with the gpuarray module:

import pycuda.autoinit

import pycuda.gpuarray as gpuarray

import numpy as np

import pycuda.driver as cudrv

N = 500000000

begin = cudrv.Event()

end = cudrv.Event()

begin.record()

a_gpu = gpuarray.to_gpu(np.zeros(N).astype(np.double))

a_gpu.fill(23.0)

b_gpu=a_gpu*12.0

end.record()

end.synchronize()

pycuda_gpu_time = begin.time_till(end)

random = np.random.randint(0,N)

We now check our new multiplication product value by randomly choosing an index and also reporting the computation time:

print("Choosing second array element with index", random, "at random:", b_gpu[random])

print("\nGPU took %f milliseconds." % pycuda_gpu_time)

The result gets computed in around 2.5 seconds on the GPU:

Even though this code appears to be just like a conventional Python program, it took more than two seconds to complete. So, now we know the significance of using the elementwise kernel.

Now let's come back to addressing the core concept of computing: how can this approach be utilized to solve a computational problem? Let's choose a problem scenario, say in the field of biostatistics, which relates to the statistical study of biological data. Suppose we want to correlate one series of such biological big data with another. With PyCUDA and other GPU-accelerated Python modules, we can compute values to understand the relationship between two biological data arrays by using a statistical formula. After we illustrate our first PyCUDA program, we'll further explore this approach later in this chapter through a real-world example.