Part II: Prediction Model and Consciousness—Association of Contents with Consciousness

Empirical Findings Ia: Predicted Input—Unconscious or Conscious?

Can predictive coding account for the association of contents with consciousness? Traditional models of stimulus-induced activity (such as neurosensory models; see chapter 1, part I, and chapter 3, part I), which hold the actual input, that is, the stimulus itself, to be sufficient, cannot properly account for consciousness. Consciousness does not come with the stimulus (the actual input) and therefore cannot be found in such (supposedly) purely sensory-based stimulus-induced activity.

Predictive coding, however, presupposes a different model of stimulus-induced activity. Rather than being determined solely by the actual input, that is, the sensory input, stimulus-induced activity is codetermined by the predicted input of the prestimulus activity levels. The neurosensory model of stimulus-induced activity is thus replaced by a neurocognitive model (part II in chapter 5).

Can the neurocognitive model of stimulus-induced activity, as in predictive coding, account for the association of any given content with consciousness? If so, the cognitive component itself, the predicted input, should allow for associating contents with consciousness: the predicted input, including its content, should then be associated with consciousness rather than unconsciousness. The prediction model of brain and its neurocognitive model of stimulus-induced activity would thus be extended to consciousness, yielding a cognitive model of consciousness (see, e.g., Hohwy, 2013; Mossbridge et al., 2014; Palmer, Seth, & Hohwy, 2015; Seth, Suzuki, & Critchley, 2012; Yoshimi & Vinson, 2015).

In contrast, if predicted input and consciousness can dissociate, that is, if the predicted input can remain unconscious, empirical evidence would not support the supposed relevance of the predicted input for associating contents with consciousness. Even if the prediction model of brain and its cognitive model of stimulus-induced activity held true for the brain's neural processing of contents, they could then no longer be extended to consciousness. The crucial question thus is whether the predicted input and its contents are associated with consciousness by default, that is, automatically, which would make unconscious processing impossible, or whether, alternatively, the predicted input and its contents can also be processed unconsciously, in which case consciousness would not be associated with them automatically. We take up this question in the next section.

Empirical Findings Ib: Predicted Input—Unconscious Processing

Vetter, Sanders, and Muckli (2014) conducted a behavioral study in which they separated the predicted percept in a visual motion paradigm from both the actually presented stimulus and the subsequently perceived content or percept. The authors exploited the fact that conscious perception of apparent motion varies with motion frequency (the frequency at which we perceive the movement or motion of a stimulus), with each subject preferring a specific frequency. Each individual's preferred motion frequency is taken to reflect the predicted input, that is, the predicted motion percept.

To serve as a prediction of a specific actual input, the predicted input must be in time with the motion frequency; if it is out of time with that frequency, it cannot serve as predicted input. Vetter et al. (2014) consequently distinguished between predicted percepts, which are in time with the subsequent actual input, and unpredicted percepts, which are out of time with it.

The authors presented the subjects with three different kinds of actual inputs: intermediate, high, and low motion frequencies. They observed that at intermediate motion frequencies the in-time predicted input worked well, thus predicting the actual input, and was also associated with conscious awareness of the predicted input itself. In contrast, at low motion frequencies the input neither took on the role of predicted input nor was associated with consciousness.

The situation was different at high motion frequencies. Here the in-time predicted input still functioned and operated as a prediction but was no longer associated with conscious illusory motion perception, even though it still predicted the subsequent actual input well. At high frequencies there is thus a dissociation between serving as predicted input and being associated with consciousness: the input takes on the role of predicted input without being associated with consciousness. Hence, predicted input (predictive coding) and consciousness can dissociate from each other; they are not coupled by default, that is, in a necessary way.

The study by Vetter et al. (2014) shows three different scenarios: (1) predicted input with consciousness (as at intermediate motion frequencies); (2) predicted input without consciousness (as at high motion frequencies); (3) no predicted input at all (as at low motion frequencies). Together, these data suggest that predictive coding is not necessarily coupled with consciousness: the presence of predictive coding is compatible with the absence of consciousness.

The assumption of unconscious processing of the predicted input is further supported by other studies (see den Ouden et al., 2009; Kok, Brouwer, van Gerven, & de Lange, 2013; Wacongne et al., 2011). One can consequently infer that empirical evidence does not support the claim that the predicted input is associated with, and thus by itself sufficient for, consciousness. Note that my claim does not contest that there may be conscious elements in the predicted inputs; it concerns only the fact that the predicted input itself can be processed in a completely unconscious way, without entailing consciousness. This speaks against the predicted input being a sufficient neural condition of consciousness.

However, this leaves open whether the predicted input may at least be a necessary (but nonsufficient) neural condition of consciousness. In either case we need to search for a further neuronal mechanism that allows for associating consciousness with contents, whether these are related to the predicted or to the actual input. How can we describe the requirements for such an additional neuronal mechanism in more detail? We consider this question in the section that follows.

Empirical Findings IIa: From the Predicted Input to Consciousness

How does the requirement for an additional neuronal mechanism stand in relation to the cognitive model of consciousness? It means that the neurocognitive model of stimulus-induced activity, based on the predicted input and its modulation of the actual input, cannot sufficiently account for associating any given content with consciousness. The predicted input itself can remain unconscious; that is, it is not necessarily associated with consciousness.

Where, then, does consciousness come from, and how, if not from the predicted input itself? One may now revert to the actual input. However, as stated at the beginning of this part, consciousness does not come with the actual input, that is, the sensory stimulus, either. We thus remain in the dark as to where and how consciousness can be associated with any given content, regardless of whether that content originates in the predicted input or in the actual input.

Where does this leave us? The extension of the neurosensory to a neurocognitive model of stimulus-induced activity, as in predictive coding, concerns only the selection of contents in consciousness. In contrast, the neurocognitive model presupposed by predictive coding remains insufficient by itself to address the question of the association of contents with consciousness. The prediction model of brain and its neurocognitive model of stimulus-induced activity therefore cannot simply be extended to a cognitive model of consciousness. Instead, we require a model of consciousness that is not primarily based on the neurocognitive model of stimulus-induced activity as in the prediction model of brain.

Put differently, we require a noncognitive model of stimulus-induced activity with its ultimate extension into a noncognitive model of consciousness (see Lamme, 2010; Northoff & Huang, in press; Tsuchiya et al., 2015, for first steps in this direction within the context of neuroscience) in order to answer our second two-part question of why and how consciousness can be associated with contents. Such a model is put forth and developed in the second part of this volume (chapters 7–8), where I present a spatiotemporal model of consciousness. Before considering such a spatiotemporal model, however, we should discuss some counterarguments raised by the advocate of predictive coding.

The advocate of predictive coding may now want to argue that the predicted input is only half of the story. The other half consists of the prediction error. Even if the predicted input itself may remain unconscious, the prediction error may nevertheless allow for associating contents with consciousness. In that case the prediction error itself and, more specifically, its degree (whether high or low) may allow for associating contents with consciousness. For example, a high degree of prediction error, as based on strong discrepancy between predicted and actual input, may favor the association of the respective content with consciousness. Conversely, if the prediction error is low, the content may not be associated with consciousness. Is such association between prediction error and consciousness supported on empirical grounds? We investigate this further in the next section.
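The quantity the advocate appeals to can be made concrete. What follows is a minimal sketch, assuming the common simplified formulation in which the prediction error is the actual input minus the predicted input and its "degree" is the absolute size of that difference. The function names and the threshold rule in `hypothesized_conscious` are hypothetical: they merely encode the advocate's proposal that a high prediction error favors consciousness, which is precisely the claim under examination, not an established mechanism.

```python
def prediction_error(predicted: float, actual: float) -> float:
    """Signed prediction error: actual input minus predicted input."""
    return actual - predicted


def error_degree(predicted: float, actual: float) -> float:
    """Degree of prediction error: the size of the predicted/actual discrepancy."""
    return abs(prediction_error(predicted, actual))


def hypothesized_conscious(predicted: float, actual: float,
                           threshold: float = 0.5) -> bool:
    """HYPOTHETICAL rule encoding the advocate's proposal: a content counts as
    conscious when the degree of prediction error exceeds an arbitrary
    threshold. This is the claim under test, not an established mechanism."""
    return error_degree(predicted, actual) > threshold


# Illustrative values only (not data): a strong and a weak discrepancy.
print(prediction_error(0.25, 1.0))        # 0.75
print(hypothesized_conscious(0.25, 1.0))  # True
print(hypothesized_conscious(0.75, 1.0))  # False
```

The arithmetic of the error is uncontroversial; whether any threshold of this kind actually links error degree to consciousness is an empirical question that the computation itself cannot settle.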

Empirical Findings IIb: Interoceptive Sensitivity versus Interoceptive Accuracy and Awareness

The model of predictive coding has been mainly associated with exteroceptive stimulus processing: the processing of inputs that originate from the environment. However, Seth (2013, 2014; Seth, Suzuki, & Critchley, 2012) has recently suggested extending the model of predictive coding from extero- to interoceptive stimulus processing, thus applying it to stimuli generated within the body itself. As with exteroceptive stimulus processing, Seth supposes that the stimulus-induced activity resulting from interoceptive stimuli results from a comparison between predicted and actual interoceptive input.

Seth and Critchley (2013) (see also Hohwy, 2013) argue that the predicted input is closely related to what is described as agency, the multimodal integration between intero- and exteroceptive inputs. Neuronally, agency is related to higher-order regions in the brain such as the lateral prefrontal cortex where statistically based models of the possible causes underlying an actual input are developed.

Seth proposes that the comparison between predicted and actual interoceptive input takes place in the insula, a cortical region hidden within the lateral sulcus of the brain. The anterior insula may be central here because it is where intero- and exteroceptive pathways cross such that intero- and exteroceptive stimuli can be linked and integrated (see Craig, 2003, 2009, 2011); such intero-exteroceptive integration allows the insula to generate interoceptive–exteroceptive predictions of, for instance, pain, reward, and emotions (as suggested by Seth, Suzuki, & Critchley, 2012; Seth & Critchley, 2013; as well as Hohwy, 2013).

Is the processing of the interoceptive–exteroceptive predicted input associated with consciousness? One way to test this is to investigate conscious awareness of the heartbeat. Here we need to distinguish between interoceptive accuracy and awareness, since they may dissociate from each other. One can, for instance, be inaccurate about one's heart rhythm while being highly aware of one's own heartbeat (Garfinkel et al., 2015), as is the case, for instance, in anxiety disorder. Let us start with interoceptive accuracy. We may be more or less accurate in our perception of our own heartbeat (Garfinkel et al., 2015). Such variation in the accuracy of our heartbeat perception leads us to the concept of interoceptive accuracy (which, analogously, may also apply to exteroceptive stimuli as exteroceptive accuracy).

The concept of interoceptive accuracy (or, alternatively, interoceptive inaccuracy) describes the degree to which the subjectively perceived number of heartbeats deviates from the objective number of heartbeats (as measured, for instance, with ECG) or, more generally, "the objective accuracy in detecting internal bodily sensations" (Garfinkel et al., 2015). The smaller the deviation between objective and subjective heartbeat numbers, the higher the degree of interoceptive accuracy; the larger the deviation, the higher the degree of interoceptive inaccuracy.
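In heartbeat-counting tasks, this deviation is commonly turned into a score. The sketch below assumes one widely used form, associated with Schandry's heartbeat perception task: for each counting interval, 1 − |recorded − reported| / recorded, averaged over intervals. This formula is an assumption added for illustration rather than something given in the text above, other normalizations are also in use, and the counts in the example are hypothetical.

```python
from statistics import mean


def trial_accuracy(recorded_beats: int, reported_beats: int) -> float:
    """Per-interval score (assumed Schandry-style form): 1 minus the relative
    deviation of the reported count from the objectively recorded count.
    1.0 means a perfect count; larger deviations give lower scores."""
    if recorded_beats <= 0:
        raise ValueError("recorded_beats must be positive")
    return 1 - abs(recorded_beats - reported_beats) / recorded_beats


def interoceptive_accuracy(trials: list[tuple[int, int]]) -> float:
    """Mean per-interval score over (recorded, reported) pairs."""
    return mean(trial_accuracy(rec, rep) for rec, rep in trials)


# Hypothetical counts for four counting intervals (illustrative, not data).
trials = [(40, 30), (80, 60), (100, 100), (50, 50)]
print(interoceptive_accuracy(trials))  # 0.875
```

The score measures accuracy only: a given value does not by itself tell us how strongly a subject experiences the heartbeat (sensibility) or how well the subject's confidence tracks that accuracy (awareness), which is why these concepts are kept apart in what follows.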

Interoceptive accuracy must be distinguished from interoceptive awareness. Interoceptive awareness describes the consciousness or awareness of one’s own heartbeat. One may well be aware of one’s own heartbeat even if one remains inaccurate about it. In other terms, interoceptive awareness and interoceptive accuracy may dissociate from each other. Recently, Garfinkel et al. (2015) also distinguished between interoceptive sensibility and awareness. Following Garfinkel et al. (2015), interoceptive sensibility concerns the “self-perceived dispositional tendency to be internally self-focused and interoceptively cognizant” (using self-evaluated assessment of subjective interoception).

Taken in this sense, interoceptive sensibility includes the subjective report of the heartbeat (whether accurate or inaccurate), which entails consciousness; interoceptive sensibility can thus be considered an index of consciousness (in an operational sense as measured by the subjective judgment). Interoceptive sensibility must be distinguished from interoceptive awareness, which concerns the metacognitive awareness of interoceptive (in)accuracy of the heartbeat.

Put more simply, interoceptive sensibility can be described as consciousness of the heartbeat itself, whereas interoceptive awareness refers to cognitive reflection on one's own sensibility, thus entailing a form of metaconsciousness: awareness. Accordingly, the question of consciousness with regard to interoceptive stimuli from the heart comes down to interoceptive sensibility, which I will also refer to as interoceptive sensitivity (as distinguished from both interoceptive accuracy and awareness). For that reason, I focus in the following on interoceptive sensitivity as a paradigmatic instance of consciousness.

Prediction Model and Consciousness Ia: Contents—Accurate versus Inaccurate

The example of interoception makes clear that we need to distinguish between the association of contents with consciousness on the one hand and the accuracy or inaccuracy of our detection and awareness of contents on the other. Put somewhat differently, we need to distinguish the cognitive process of detecting or being aware of contents as accurate or inaccurate from the phenomenal or experiential process of consciousness of those contents, irrespective of whether we are aware of them as accurate or inaccurate.

The distinction between consciousness and detection/awareness applies not only to interoceptive stimuli from one's own body, such as one's own heartbeat, but also to exteroceptive stimuli from the environment. We may be accurate or inaccurate in our reporting and judgment of exteroceptive stimuli, as when we misperceive a face as a vase. Moreover, we may remain unaware of the inaccuracy of our judgment, and this unawareness may guide our subsequent behavior. Therefore, both exteroceptive accuracy/inaccuracy and awareness need to be distinguished from exteroceptive sensitivity, that is, consciousness itself: the latter refers to the association of exteroceptive contents with consciousness, independent of whether that very same content is detected. Moreover, the association of exteroceptive contents with consciousness remains independent of whether the subject is aware of its own accuracy or inaccuracy.

The preceding reflections culminate in a useful distinction between two different concepts of content: accurate and inaccurate. The accurate concept of content refers exclusively to the brain's accurate registering and processing of events or objects within the body and/or world. Conceived in an accurate way, content is supposed to reflect objects and events as they are (entailing realism).

In contrast, the inaccurate concept of content covers the brain's improper or inaccurate registering and processing of events or objects in the body and/or world. If content existed only in an accurate way, intero- and exteroceptive inaccuracy would be impossible. However, that premise is not supported by empirical reality since, as we have seen, we can perceive our own heartbeat inaccurately, and we are all familiar with misperceiving elements of our environments.

The virtue of predictive coding is that it can account for the inaccuracies in content far better than can be done with a naïve conception of the brain as a passive receiver and reproducer of inputs. By combining predicted input/output with actual input/output, predictive coding can account for the selection of inaccurate contents in our interoception, perception, attention, and so forth. In a nutshell, predictive coding is about contents, and its virtue lies in the fact that it can account for accurate and inaccurate as well as internally and externally generated contents.

In sum, predictive coding can well account for the selection of contents as well as our awareness of those contents as accurate or inaccurate. In contrast, predictive coding remains insufficient when it comes to explaining how those very same contents can be associated with consciousness in the first place.

Prediction Model and Consciousness Ib: Contents and Consciousness

Can predictive coding also extend beyond contents, both accurate and inaccurate, to their association with consciousness? For his example of interoceptive stimuli, Seth supposes that predictive coding accounts for interoceptive sensitivity, that is, the association of interoceptive stimuli with consciousness. More specifically, Seth (2013; Seth, Suzuki, & Critchley, 2012) infers from the presence of predictive coding of interoceptive stimuli within the insula, in terms of predicted input and prediction error, the presence of consciousness, that is, consciousness of one's own heartbeat, which results in what he describes as "conscious presence" (Seth, Suzuki, & Critchley, 2012).

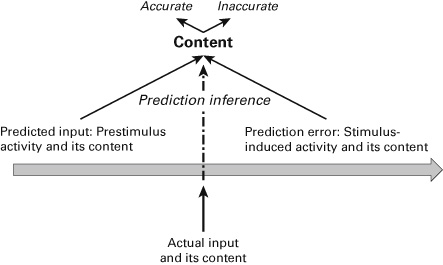

Since he infers the presence of consciousness from the presence of predictive coding, I speak here of a prediction inference. The concept of prediction inference refers to the assumption that one can infer the actual presence of consciousness from the neural processing of contents in terms of predicted and actual inputs. Is the prediction inference justified? I argue that such an inference is justified for contents, including the distinction between accurate and inaccurate contents: we can infer accurate and inaccurate contents from the neural processing of predicted and actual input; I call such an inference a prediction inference.

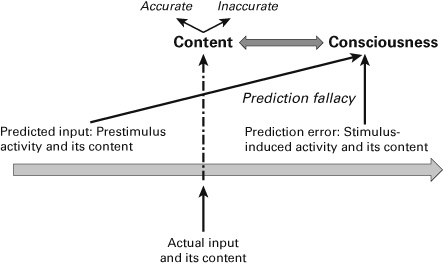

In contrast, that very same inference is not justified when it comes to consciousness: we cannot infer the association of contents (both accurate and inaccurate) with consciousness from the neural processing of predicted and actual input; I call such an inference a prediction fallacy. I therefore argue that Seth can assume the presence of consciousness only by committing such a prediction fallacy. We analyze this fallacy in detail in the next section.

Prediction Model and Consciousness IIa: Prediction Inference versus Prediction Fallacy

Seth infers, from the fact that predictive coding operates in interoceptive stimulus processing, the presence of consciousness in the form of interoceptive sensibility. Specifically, he infers from the presence of interoceptive predicted inputs (as generated in the insula) the presence of conscious interoceptive contents, for example, the heartbeat during subsequent stimulus-induced activity as based on the prediction error. That inference, however, overlooks the fact that interoceptive contents can nevertheless remain within the realm of unconsciousness. The presence of interoceptive predicted input and the subsequent prediction error in stimulus-induced activity may account for the presence of interoceptive contents, for example, our own heartbeat, including our subsequent judgment of these interoceptive contents as either accurate or inaccurate.

In contrast, the interoceptive contents themselves do not yet entail the association of contents with consciousness: they can well remain unconscious (as is most often the case in daily life), irrespective of whether we judge, detect, or become aware of them as accurate or inaccurate. The conceptual distinction between consciousness of contents on the one hand and accurate/inaccurate contents on the other is supported on empirical grounds by data showing that interoceptive accuracy can dissociate from interoceptive sensitivity, that is, consciousness (and also from interoceptive awareness), and can thus remain unconscious rather than conscious (see Garfinkel et al., 2015).

The same holds analogously for exteroceptive contents, which originate in the external world rather than in one's own body. These exteroceptive contents may be accurate or inaccurate with respect to the actual event in the world, and this remains independent of whether they are associated with consciousness. This independence is supported by empirical data (see Faivre et al., 2014; Koch et al., 2016; Lamme, 2010; Northoff, 2014b; Northoff et al., 2017; Tsuchiya et al., 2015; Koch & Tsuchiya, 2012). Therefore, the conceptual distinction between consciousness of contents and the contents themselves, including whether they are accurate or inaccurate, is supported on empirical grounds for both intero- and exteroceptive contents, or, put another way, for contents from the body and from the world.

The distinction between consciousness of contents and contents themselves carries far-reaching implications for the kind of inference we can make from the presence of contents to the presence of consciousness. Seth’s prediction inference accounts well for the interoceptive contents and their accuracy or inaccuracy. In contrast, that very same prediction inference cannot answer the questions of why and how the interoceptive contents, as based on predicted input and prediction error, are associated with consciousness rather than remaining within the unconscious.

Hence, to account for the association of the interoceptive contents with consciousness, Seth (2013) requires an additional step, the step from unconscious interoceptive contents (including both accurate and inaccurate) to conscious interoceptive content (irrespective of whether these contents are detected/judged as accurate or inaccurate). This additional step is neglected, however, when he directly infers from the presence of predictive coding of contents in terms of predicted input and prediction error to their association with consciousness.

What does this mean for the prediction inference? The prediction inference can well account for interoceptive accuracy/inaccuracy and thus, more generally, for the selection of contents. Predictive coding allows us to differentiate between inaccurate and accurate intero-/exteroceptive contents. Thus, the prediction inference remains nonfallacious when it comes to the contents themselves, including their accuracy or inaccuracy. Matters are different for intero-/exteroceptive sensitivity and, more generally, for consciousness: the prediction inference cannot account for the association of intero-/exteroceptive contents, accurate and inaccurate alike, with consciousness. When it comes to consciousness, then, the prediction inference must be considered fallacious, which renders it what I describe as the prediction fallacy (see figures 6.1a and 6.1b).

Figure 6.1a Prediction inference.

Figure 6.1b Prediction fallacy.

Prediction Model and Consciousness IIb: Consciousness Extends beyond Contents and Cognition

What is the take-home message from this discussion? Neither predicted input nor prediction error by itself entails any association of the respective contents with consciousness. The empirical data show that both predicted input and prediction error can remain unconscious. There are contents from the environment that remain unconscious in our perception and cognition; for instance, we plan and execute many of our actions in an unconscious way. Within the framework of predictive coding, planning of action is based on generating a predicted input, while the execution of action is related to prediction error. As both planning and execution of action can remain unconscious, neither predicted input nor prediction error is necessarily associated with consciousness. Hence, the example of action supports the view that predictive coding does not entail anything about whether contents are associated with consciousness.

Let us consider the following example. When we drive our car along familiar routes, much of our perception of the route, including the landscape along it, remains unconscious. That changes once an unexpected obstacle, such as a street blockade due to an accident, occurs. In such a case, we may suddenly perceive the houses along the route in a conscious way and become aware that, for instance, there are some beautiful mansions. Analogous to driving along a familiar route, most of the time the contents from our body, such as our heartbeat, remain unconscious.

The prediction fallacy simply ignores that the contents associated with both predicted input and prediction error can remain unconscious. The fallacy rests on a confusion between the selection of contents (which may be accurate or inaccurate) and consciousness of contents. Predictive coding and the prediction model of brain can sufficiently account for the distinction between accurate and inaccurate contents. In contrast, they cannot sufficiently account for why and how the selected contents (accurate and inaccurate) can be associated with consciousness rather than remaining unconscious. In sum, the prediction inference remains nonfallacious with regard to the selected contents (as accurate or inaccurate), whereas it becomes fallacious when it comes to the association of contents with consciousness.

What does the prediction fallacy entail for the cognitive model of consciousness? The cognitive model of consciousness is based first on contents and second on the processing of selected contents in terms of predictive coding with predicted input and prediction error. The cognitive model tacitly presupposes a hidden inference from the selection of contents (as accurate or inaccurate) to the association of those contents with consciousness.

However, distinguishing the selected contents as accurate or inaccurate on the basis of predictive coding does not entail anything about their association with consciousness. The inference from prediction to consciousness is thus fallacious; it is what I have termed the prediction fallacy. Empirical data show that any selected contents (accurate or inaccurate, as well as predicted input or prediction error) can remain unconscious and therefore do not entail their association with consciousness.

In conclusion, the cognitive model of stimulus-induced activity and the brain in general as based solely on contents as in the prediction model of brain remain insufficient to account for consciousness. For that reason, we need to search for an additional dimension beyond contents and prediction that determines consciousness. Put simply, consciousness extends beyond contents and our cognition of them.