8. Crowning glory

As we tour around your body, experiencing the associated wonders of science, we don’t find a lot that is unique to human beings. There is nothing we have experienced in the body itself that couldn’t be found working similarly in other animals. Your eyes, for example, are fine, but nothing special. Every capability possessed by the parts of your body we have met so far can be bettered by a different creature. But there is one bit of you that is special. Your brain.

What goes on inside your head

That unappetising-looking lump of flesh in your skull, weighing in at around 1.5 kilograms (three pounds), is fiendishly complex. Inside it, there are around 100 billion of the key functional cells, neurons, some with many connections to others, making the number of connections at any one time around 1,000 trillion. And considering that it only amounts to one or two per cent of your bodyweight, your brain is a real drain on resources – of the 100 watts or so of power your body generates (equivalent to a traditional light bulb), the brain hogs around twenty per cent.

Look at a picture of the brain from above and it appears to be a single lump of matter, not unlike an enormous pink walnut, but in fact it is almost entirely divided into two, with the halves of the brain joined at the back by a bundle of nerves called the corpus callosum. Some responsibilities are split between the two halves. The left side is largely responsible for the right side of your body, including the right-hand half of your field of vision, and vice versa.

There is a traditional view that the left side is the one that kicks in when you are being organised and structured. It is largely responsible for numbers, words and rationality. It prefers things sequenced and ordered. There’s nothing it likes better than taking an analytical approach, working through something step by step in a linear fashion. The right side in this conception of the brain is much more touchy-feely. It takes the overview, a holistic approach to the world. It deals with imagery and art, colour and music. If you need to think spatially or deal with aesthetics, it’s time to call on the right side.

At least, that’s the simplistic view. However, when we’re dealing with the brain, things are very rarely simple. In practice, though one side may dominate, both sides are involved to some degree in all these types of thinking. What is certainly true, though, is that the brain has two clear modes of operation that correspond to the attributes traditionally allocated to its two sides (and so labelled left- and right-brain thinking). This is why it can often be a real problem to come up with fresh ideas in a traditional business environment.

People will sit down to have a nice, structured, orderly meeting. Very logical and analytical. Before long, the right sides of their brains have shut down, leaving the participants with limited resources for creativity, as new ideas depend on making fresh linkages, and the ideal is to have both sides of the brain in action. This is why new ideas can often be inspired by music, taking a walk, looking at images, thinking spatially. It’s a way of bringing the right side of the brain into play.

Experiment – Feeling your brain

There is a simple way to experience the two halves of your brain in action. A technique called the Stroop effect allows you to experiment on your own brain (no surgery required) and feel the switch between the sides. Go to www.universeinsideyou.com, click on Experiments and select the experiment Feeling your brain, then follow the instructions.

The Stroop effect uses words and colours, each a responsibility of a different side of the brain. It doesn’t matter how much you are instructed to concentrate on colours, in this experiment your brain sees words – handled primarily by the left side of the brain – and lets the right side, taking care of colours, pretty well shut down. When you suddenly have to make use of the right-hand side again, you can practically feel the gears grinding in your brain as it tries to catch up.

Brains weren’t made for maths

We’ve already seen when looking at sight and hearing that it is easy to fool your brain. The human brain is absolutely great at many things. But it often struggles with tasks that have been added to our repertoire since brains evolved.

A good example of a role your brain just wasn’t evolved to work with is arithmetic. Your computer at home would be hopeless at many things you do easily, but give it a task like finding the square root of 5,181,408,324 and it will have the answer before you’ve even scratched your head. (It’s 71,982, of course.) This just isn’t the kind of thing humans were evolved to do – maths doesn’t come naturally.
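To see how little effort this costs a machine, here is a minimal Python sketch of the square-root example. The variable names are purely illustrative; `math.isqrt` is the standard library's exact integer square root.

```python
import math

n = 5_181_408_324

# math.isqrt returns the largest integer whose square does not exceed n
root = math.isqrt(n)

# Confirm that n is a perfect square, so the root is exact
assert root * root == n

print(root)  # 71982
```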

Nowhere is this more obvious than when dealing with probability and statistics. Probability is involved in many of our everyday activities, and statistics are thrown around in the news and politics all the time, yet our brains, developed to deal with images and patterns, have a huge problem dealing with these manipulations of numbers and the impact of chance.

Let’s take three examples where the nature of your brain’s wiring is such that it gets confused by these incredibly useful numbers.

Open the door

In the 1960s, Canadian-born presenter Monty Hall was in charge of a US TV game show called Let’s Make a Deal. The format of the show resulted in the kind of problem that is excellent at exposing our difficulties with probability.

Let’s imagine you’re through to the final stage of a TV game show like Let’s Make a Deal. The host brings you to part of the set where there are three doors. Behind two of these doors is a goat (don’t ask me why), while behind the third door is a car. You want to win the car but don’t know which door it is behind. Still, you are asked to pick a door, so you do. There’s a one in three chance you have picked the car, and a two in three chance you have picked a goat.

Now the host opens one of the doors you didn’t pick and shows you a goat. He gives you a choice. Would you like to stick with the door you first chose, or switch to the other remaining door? What would you do? Does it matter, in terms of your chance of winning the car? Is it better to stick with the door you first chose, better to switch to the other unopened door, or does it not matter which of the two you choose?

We know that after one door is opened to show a goat there are two doors left, one with a car behind it, one with a goat behind it. So it seems obvious that there’s a 50:50 chance of winning the car whichever door you choose. And yet this is wrong. In fact you would be twice as likely to win the car if you were to switch to the other door as you would if you were to stay with the one you first chose.

If you find that statement ridiculous, you are in good company. Writer Marilyn vos Savant had a column in Parade magazine in which she answered readers’ questions. In 1990, she was presented with this problem and came up with the answer I gave above: you are better off switching, it’s twice as good as sticking. She was deluged with thousands of complaints telling her that she was wrong and that there was an even chance of winning with either of the remaining doors. Some of the letters were from mathematicians and other academics.

You can easily demonstrate that it is better to switch using a computer simulation – it really does work. But that doesn’t get around the frustration of it not seeming logical. The important factor is that the game show host didn’t open a door at random. He knew that there was a goat behind the door he opened. Think back to when you first chose a door. There was a 2/3 chance you had picked a goat – a 2/3 chance that the car was behind one of the other doors. All the host did was show you which of those two doors to pick – there was still a 2/3 chance that the car was there. So with only one alternative, you were better switching to the third door.
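If you would like to try the simulation yourself, the following is one possible sketch in Python rather than a definitive model of the show. The function names `play` and `win_rate`, the number of rounds and the random seed are all assumptions made for illustration. The crucial line is the one where the host, knowing where the car is, only ever opens a goat door.

```python
import random


def play(switch: bool, rng: random.Random) -> bool:
    """Play one round and return True if the contestant wins the car."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # The host knows where the car is, so he never opens the contestant's
    # door and never opens the door hiding the car.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Move to the only door that is neither picked nor opened
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch: bool, rounds: int = 100_000, seed: int = 1) -> float:
    """Estimate the chance of winning over many simulated rounds."""
    rng = random.Random(seed)
    return sum(play(switch, rng) for _ in range(rounds)) / rounds


if __name__ == "__main__":
    print(f"stick:  {win_rate(switch=False):.3f}")
    print(f"switch: {win_rate(switch=True):.3f}")
```

Run over enough rounds, sticking should win about a third of the time and switching about two thirds.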

The two-boy problem

Oddly enough, another of vos Savant’s columns also created a surge of complaints, and this too was as a result of a problem with probability that strains the brain. The problem is simple enough: I have two children. One is a boy born on a Tuesday. What is the probability I have two boys? But to get a grip on this problem we need first to take a step back and look at a more basic problem. I have two children. One is a boy. What is the probability I have two boys?

A knee-jerk reaction to this is to think ‘One’s a boy – the other can either be a boy or a girl, so there’s a 50:50 chance that the other is a boy. The probability that there are two boys is 50 per cent.’

Unfortunately that’s wrong.

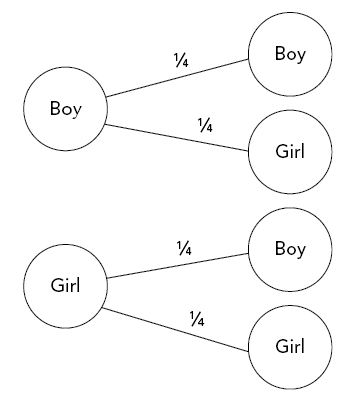

You can see why with this handy diagram. The first column is the older child. It might be a boy or a girl, the chance is 50:50. Then in each case we’ve a 50:50 chance of a boy or girl for the second child. So each of the combinations has a one in four (or 25 per cent) chance of occurring.

All the combinations except girl-girl fit the statement ‘I have two children. One is a boy.’ So we’ve got three equally likely possibilities where one child is a boy, of which only one is two boys. So there’s a one in three chance that there are two boys.

If this sounds surprising, it’s because the statement ‘one is a boy’ doesn’t tell us which of the two children it’s referring to. If we say ‘The eldest one is a boy’, then our ‘common sense’ assessment of probability applies. If the eldest is a boy, there are only two options with equal probability – second child is a boy or second child is a girl. So it’s 50:50.
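The counting argument is small enough to check by brute force. This Python sketch lists the four equally likely families and compares the two statements; the variable names are illustrative, and it leans on the same simplifying assumption as the text, that boys and girls are equally likely.

```python
from fractions import Fraction
from itertools import product

# Each family is (older child, younger child); all four are equally likely
families = list(product("BG", repeat=2))

# Statement 1: "One is a boy" (at least one of the two is a boy)
one_is_boy = [f for f in families if "B" in f]
p_two_boys = Fraction(one_is_boy.count(("B", "B")), len(one_is_boy))

# Statement 2: "The eldest one is a boy"
eldest_is_boy = [f for f in families if f[0] == "B"]
p_given_eldest_boy = Fraction(eldest_is_boy.count(("B", "B")), len(eldest_is_boy))

print(p_two_boys)          # 1/3
print(p_given_eldest_boy)  # 1/2
```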

Now we’re equipped to move on to the full version of the problem. I have two children. One is a boy born on a Tuesday. What is the probability I have two boys? Your gut feeling probably says ‘The extra information provided about the day he’s born on can’t make any difference. It must still be a one in three chance that there are two boys.’ But startlingly, the probability is now 13 in 27 – pretty close to 50:50.

To explain this perhaps I should draw another diagram, but I can’t be bothered – you’ll have to imagine it. In this diagram there are fourteen children in the first column: ‘first child a boy born on a Sunday’, ‘first child a boy born on a Monday’, ‘first child a boy born on a Tuesday’ and so on through the week, then ‘first child a girl born on a Sunday’ etc. all the way through to ‘first child a girl born on a Saturday’.

Each of these fourteen first children has fourteen second children options. Second boy born on a Sunday … and so on.

That’s 196 combinations in all, but luckily we can eliminate most of them. We are only interested in combinations where one of the children is a boy born on a Tuesday. So the combinations we are interested in are the fourteen that spread out from ‘first child a boy born on a Tuesday’ plus the thirteen that start from one of the other first children and are linked to ‘second child a boy born on a Tuesday.’ This makes 27 combinations in all. How many of these involve two boys? Half of the first fourteen do – one for a second boy born on each day of the week. And for the remaining thirteen, six will have a boy as the first child (because we don’t include ‘first boy born on a Tuesday.’) So that’s 7 + 6 = 13, 13 combinations that provide us with two boys. So the chance of there being two boys is 13 in 27.
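Since the diagram has to be imagined, a computer can draw it instead. The sketch below builds all 196 combinations and counts them; it is a minimal illustration, assuming (as the text does) that sexes and days of the week are all equally likely. The day numbering and the helper name `is_tuesday_boy` are arbitrary choices.

```python
from fractions import Fraction
from itertools import product

DAYS = range(7)   # 0 = Sunday ... 6 = Saturday (the labelling is arbitrary)
TUESDAY = 2

# Fourteen possibilities for each child: (sex, day of birth)
children = list(product("BG", DAYS))

# 14 x 14 = 196 equally likely (first child, second child) combinations
families = list(product(children, repeat=2))


def is_tuesday_boy(child) -> bool:
    return child == ("B", TUESDAY)


# Keep only families where at least one child is a boy born on a Tuesday
matching = [f for f in families if any(is_tuesday_boy(c) for c in f)]

# Of those, count the families where both children are boys
both_boys = [f for f in matching if all(sex == "B" for sex, _ in f)]

print(len(families), len(matching), len(both_boys))  # 196 27 13
print(Fraction(len(both_boys), len(matching)))       # 13/27
```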

Common sense really revolts at this. By simply saying what day of the week a boy was born on, we increase the probability of the other child being a boy. But we could have said any day of the week, so how can this possibly work? The only way I can think of to describe what’s happening is to say that by limiting the boy we know about to being born on a certain day of the week, we cut out a lot of the options. We are, in effect, bringing the situation closer to being that ‘the oldest child is a boy’ – we are adding information to the picture.

The probabilities work – you can model this in a computer if you like – and the numbers are correct. But what is going on here mangles the mind. Don’t you just love probability? (I ought to say, by the way, that this isn’t quite realistic. It assumes there is an equal chance that either child is a boy or a girl, and that there are equal chances of children being born on each day of the week. In reality neither of these is quite true, but that doesn’t matter for the purposes of the exercise.)

A test of your understanding

Those last two examples do come up in real life. As well as on Monty Hall’s show, for example, a version of the goats and car problem was used on Mississippi river boats by gambling hustlers who got punters to bet based on the 50:50 assumption and made a killing. But the third example of how bad the brain is at dealing with probability and statistics is one that is much more important for real life, because it’s one that rears its head in the way we understand the results of medical tests – and it’s a difficulty that doctors have just as much of a problem with as the rest of us.

Let’s imagine there’s a test for a particular disease that gets the answer correct 95 per cent of the time, so it’s quite a good test. Let’s say that around one in 1,000 people – which would be around 61,000 people in the UK – have this disease at any one time. And finally a million randomly selected people take the test, including you. If you are told your test came out positive, how likely are you to have the disease?

Bearing in mind that the test is 95 per cent accurate, you may well think that you have a 95 per cent chance of having the disease, but actually the result is much more encouraging. Of those million people tested, around 1,000 will have the disease. Of these, 950 will be told correctly that they have it and 50 won’t, as the test is 95 per cent accurate. 999,000 won’t have the disease. Of these, 949,050 will get a (correct) negative result from the test and 49,950 will get a false positive result.

This means that of the 50,900 positive results, 98 per cent will be false. If you get a positive result, there is only a two per cent chance you have the disease. This example might use extreme numbers, but whenever you have a widely used test for a relatively rare condition, the chances are that the majority of the positive results will be false. This can be both distressing and result in potentially dangerous further testing, so it isn’t a trivial outcome. Once more, the way our brains are made simply doesn’t fit well with understanding probability.
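The arithmetic is easy to get wrong by hand, so here is a short Python sketch that works through it. The function name `screen` and its parameters are hypothetical, and it assumes, as the example does, that the test is 95 per cent accurate for ill and healthy people alike. Exact fractions are used to avoid rounding surprises.

```python
from fractions import Fraction


def screen(population: int, prevalence: Fraction, accuracy: Fraction) -> dict:
    """Split a tested population into the four possible test outcomes."""
    ill = population * prevalence
    healthy = population - ill
    true_positives = ill * accuracy
    false_negatives = ill - true_positives
    false_positives = healthy * (1 - accuracy)
    true_negatives = healthy - false_positives
    positives = true_positives + false_positives
    return {
        "true_positives": true_positives,
        "false_negatives": false_negatives,
        "false_positives": false_positives,
        "true_negatives": true_negatives,
        "positives": positives,
        # Chance that a person with a positive result really is ill
        "chance_ill_if_positive": true_positives / positives,
    }


result = screen(1_000_000, Fraction(1, 1000), Fraction(95, 100))
for name, value in result.items():
    print(f"{name}: {float(value):,.4f}")
```

With these inputs there are 950 true positives against 49,950 false positives, so a positive result means a chance of a little under two per cent of actually having the disease.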

But what does it mean?

Whenever your brain encounters probability and statistics, it’s worth just taking a step back and making sure you understand what’s going on. And make sure also that other people using statistics have got it right. It’s all too common for government departments, newspapers and TV news desks to make just the same mistakes with probability and statistics as the rest of us.

A good way of testing statistics is to explore a little more widely – get some more information before you believe scary-sounding numbers. You might hear, for example, that violent crimes in your neighbourhood have increased by 100 per cent since last year. It sounds like it’s time to move out. But make sure you ask for numbers to put this into context – if the rise is from one crime to two, it is a 100 per cent increase, but the reality isn’t as worrying as the statistics sound.

You also need to be particularly careful to keep your brain on track when you have to deal with multiple sensory inputs. A great example of this was research conducted in the late 1990s where people were stopped in the street and asked to give directions. While they were helping someone with a map, some workmen came along the street, carrying a door. The workmen passed between the test subject and the person asking for help, who was one of the researchers.

While the door blocked the subject’s view, the person asking for help swapped places with one of the door carriers. Around 50 per cent of subjects never noticed that they finished off giving directions to a totally different person. They were too focused on the task. We are much less conscious of what’s going on around us than is often assumed in a court of law.

You must remember this

Memory is equally worryingly faulty. You are, in many ways, your memories; without them you would not be the person you are. Yet a fair number of those memories you cherish are false. Some are constructed a long time after the event to which they refer. It’s not uncommon for what seems to be a memory to be derived from a photograph or video of an event. Others are slanted by our opinion – for example, we tend to remember extremes, so we think a summer was much hotter than it really was because of one hot day. We are also more inclined to give weight to recent experiences, so a wet week at the end of a month of excellent weather will have us moaning about not getting a summer at all.

Another problem with memories is that they are based on your ability to observe and capture information, but as we’ve seen, the image your brain shows you is a very subjective construct. This can easily lead to your seeing things that aren’t there, or not seeing things that are, and these mistakes are subsequently remembered as fact.

A while ago, someone mentioned they had seen me walking the dog while I chatted on my mobile phone – quite a detailed observation. The only problem was, I wasn’t at home that day, and hadn’t taken my dog for a walk. This is where the whole business of observation, perception and memory becomes potentially dangerous. Imagine that the person who thought he saw me then witnessed a murder, committed by the person he saw. He would have been happy to stand up in court and swear that he saw me commit that crime, yet I wasn’t there. Whenever a court case depends solely on witness evidence, particularly evidence depending on memory after a significant period of time has elapsed, it’s quite worrying.

Experiment – Counting the passes

This is a very well-known experiment, but please have a go at it even if you have seen the original version – this is a new version that will still be of interest if you carry it through to the end. Go to www.universeinsideyou.com, click on Experiments and select the Counting the passes experiment. You will be asked to count the number of times someone in white passes the ball. In the fast-moving game it is difficult to keep track (numbers and memory involved here), so you really need to concentrate hard on who is passing the ball.

Although it doesn’t work for everyone, more than 50 per cent of people fail to accurately observe what is going on in this simple video. It’s hardly surprising how often your brain will get things wrong. Often these failings are more entertaining than worrying – optical illusions can be great fun, for example. However, whenever we rely on our ability to recall exactly what happened in confusing circumstances, we need to be aware of the brain’s limitations.

Memory lets us down in surprising ways. We might recognise a face – so clearly it is stored away in our memory – but be unable to put a name to it. It is entirely possible to forget your own phone number, even though it is a sequence of digits that you make use of time and again. Perhaps most frustrating of all is the way that memory can give you half the story – there are times when you know there was something you had to remember, but you can’t remember what it is!

Solid state versus squishy state

One of the reasons it’s easy to misunderstand memory is that we are so familiar with computers, and we assume that there is some similarity between the way computer memory works and the way human memory works. But that’s not the case.

Computer memory consists of a specific value – zero or one – stored in a specific location. Each location has an address. You can go straight to that location and find the value. This makes it great for something like looking up a number – a computer won’t forget a phone number in a hurry. By comparison, your brain does not hold a memory in a single location, nor does it have a direct way of going to a particular value. The way information is held is structured as patterns and images, which is why your brain may have trouble with a phone number, but it finds it a lot easier to recognise a face than a computer does.

Remembering how it’s done

There are also several distinct kinds of memory in the brain. The lowest level is procedural memory, the memory that tells you how to do something. This takes place in the most primitive part of the brain, the part most closely shared with the widest range of animals, specifically the cerebellum and the corpus callosum, the bundle of nerves that links the two halves of the brain.

Procedural memory is accessed significantly more quickly than higher levels of memory, and with no conscious effort. If you are a touch typist like me, it’s easy to demonstrate that procedural memory is different from conscious memory. As I type this, I am not looking at the keyboard and I don’t think about where each key is. I simply think the words and my fingers type it. Procedural memory handles where to put my fingers and when to press.

If I try to remember where a particular letter, an N, say, is on the keyboard, I can’t. I couldn’t tell you. But I can type an N without thinking about it – my procedural memory knows the keyboard, but my higher memory doesn’t. Something similar happens with experienced drivers. When you learn to drive you have to consciously be aware of what to do; how and when to change gear and so on. With experience, this ability becomes tucked away in procedural memory and happens without you having to think about it.

Remembering stuff

The higher level of memory, the conscious level, is processed by a number of areas of the brain. It is broadly divided into short-term, or working, memory and long-term memory. The prefrontal cortex, behind the forehead, administers the short-term memory, while the hippocampus, a central area of the brain that is supposed to look like a seahorse (but doesn’t!), manages long-term memories, though the memories themselves are distributed throughout the brain.

One of the big distinctions between short-term and long-term memory is that we control what is in our short-term memory – you can consciously keep something in those short-term slots – but we have no direct control over long-term memory. You can’t just flag something for memory and it automatically stays – you have to work at it. This is unnerving, when you think about it. You presumably think of yourself as rational, and yet here is one of the most important functions of your brain, probably the aspect that most defines you as an individual, and you have no direct control over it.

The brain is a self-patterning system, a common natural phenomenon. The more you use a particular neural pathway in the brain, the easier it becomes to use that pathway. If you think of the connections between neurons as electrical wiring, the wiring gets thicker as it is used, which makes it easier to use it again. So constantly accessing a particular memory makes that memory easier to recall – the mechanism behind the importance of revision.

Under pressure, your brain depends more than usual on these well-trodden pathways, which is why when you want to be creative it is best to be relaxed and not under pressure to find an instant answer. This gives the brain the chance to make use of thinner, less-frequented connections, where new ideas can spring up.

I know the face

Because our memories don’t work like a computer, it helps to manipulate information to make it more acceptable to our brains and more accessible in memory. If, for example, you want to remember someone’s name, there’s a very simple technique to fix it in your memory: take the name and make a visual image out of it. Make it as colourful, visual, graphic (and even funny) as you can. Then combine the image with a mental picture of that person.

Let me give an example. Twenty-five years ago, when I first came across this technique, I thought I would give it a try. I happened to go into a pharmacy that lunchtime and decided to remember the name of the first person I came across with a name badge on. She was called Ann Hibble, a name I remember to this day. The image I conjured up was a hippopotamus (a big, purple hippopotamus) rearing up out of the floor of the shop and nibbling the woman’s toes. An hippo nibbling – Ann Hibble.

As was pointed out when looking at the left/right split of the brain, things like colour, movement and drama all engage distinctive functions in the brain. So using imagery with colour, movement and drama helps ensure that this aspect of the brain is made use of, as well as the other brain modules more naturally involved with words. Memories are stored across both sides of the brain, so every little helps.

This technique for remembering a name involves fooling the brain. You are pretending that you’re doing something more like the tasks your brain originally evolved to do. Humans evolved to recognise patterns, images and pictures in the world around us, so by superimposing an image on the name we hide the words under the visuals and get our memory to accept them more readily.

I probably wouldn’t still be able to remember the name Ann Hibble if I hadn’t reinforced it regularly by retelling the story. One essential to getting something to stick in memory is rehearsing that memory – digging it out and revisiting it on a regular basis, thereby thickening the neural connections. The ideal is to repeat this process on a gradually lengthening scale; perhaps after an hour, a day, a week, a month, six months, a year … if you do this the chances are the memory will never leave you.

Take down my phone number

At least names can be associated with objects and images, but numbers are even more abstract, and even more alien to the brain. When first faced with a number, the initial problem is that your short-term memory only has a very limited number of slots. You can only think of around seven things at a time without something popping out and being lost. Unfortunately a typical phone number might have eleven digits, which is beyond the capacity of your short-term memory.

Here’s a made-up phone number: 02073035629. Taken as eleven separate digits it is pretty well impossible to remember, which is why phone numbers have traditionally been broken up into chunks. If you can memorise a chunk of numbers as a single item, you can squeeze the whole thing into short-term memory, en route to memorising it fully.
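As a small illustration of chunking, this Python sketch splits the made-up number into groups. The helper name `chunk` and the 3-4-4 grouping are assumptions chosen for the example; any grouping that leaves fewer items than your short-term memory has slots would serve.

```python
def chunk(number: str, sizes: list[int]) -> list[str]:
    """Split a string of digits into consecutive chunks of the given sizes."""
    if sum(sizes) != len(number):
        raise ValueError("chunk sizes must add up to the number of digits")
    chunks = []
    start = 0
    for size in sizes:
        chunks.append(number[start:start + size])
        start += size
    return chunks


# Eleven separate digits become three items to hold in mind
parts = chunk("02073035629", [3, 4, 4])
print(" ".join(parts))  # 020 7303 5629
print(len(parts))       # 3
```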

I remember that tail from somewhere

Memory is, of course, not unique to human beings. Anyone with regular exposure to animals will be aware how much memory features in their behaviour. Even the humble goldfish is perfectly capable of remembering things. This is a shame in a way, because the myth that a goldfish has a three-second memory makes for excellent jokes: ‘Just because I have a three-second memory, they think I won’t get bored with fish food … Oh, wow! Fish food!’ Okay, not always excellent.

However, anyone who has kept goldfish will be aware that they can remember things perfectly well – for example coming to a particular part of the pond or tank in response to a prompt before feeding, and a TV show has managed to get goldfish to learn the route around mazes. The idea that they have a three-second memory is nothing more than urban myth, probably equating intelligence and memory in some way, where in practice there is very little link between the two.

The brain scribble

The human brain is, without doubt, our crowning glory, and one of the most remarkable ways that we extend the functions of our brain is through the use of writing. The amazing thing about writing is that it is a means for one brain to communicate with another – in the case of this book, my brain communicating with yours – where time and space are removed as barriers.

Natural communication is limited in these respects. Mostly animals and plants communicate in the here and now. With a few exceptions in chemical-based communications that linger, a message is produced, consumed and gone, never to return. But writing takes away this limitation. You can take a book off the shelf and read words that were written thousands of miles away or even thousands of years ago. It is quite possible for you to have more communication on your bookshelves from dead people than living, and the chances are that few, if any, of the authors live on your street. When you read these words it will be months or years after the moment (13.32 GMT on Tuesday 4 October 2011) when they were written.

Of course we now have many other ways to communicate that are more instant than writing, but often these messages don’t overcome time the way writing can. Because they are written down, these words will still be here in ten years’ time, maybe even a hundred years or a thousand. The cold call I just received from a stockbroker in New York was instantly consigned to the bin of time – the communication is as dead as a wolf’s howl (thankfully, in this particular instance).

Writing has been crucial to the development of our technological society. Without writing there would be no science, only myth. With no way to build practically on the experience of previous years, we would always be re-inventing the wheel. Computer technology is often seen as something of an enemy of writing – why read a book when you can watch videos on YouTube? – but without writing there could be no computer software, no development of the hardware, and much of the content of the internet remains word-based.

Writing with pictures

Writing in the broadest sense is an extension of our brains. It is a way of taking information from one human brain and storing it so that it can be revisited by another brain elsewhere in time and space. Originally, this was in the form of pictures. The cave paintings showing human beings, animals and patterns of hands dating back 30,000 years or more are not abstract daubing, but a means of communicating. They were fixed in space, slow to produce and difficult to interpret, but no one can doubt their ability to survive through time.

Over many years, straightforward pictures developed into pictograms. These still featured recognisable images, but the pictures were more stylised, making them quicker to execute, and more consistent in appearance. One pictogram would typically represent an object or, more subtly, a concept. It doesn’t take a genius to decode a pictogram message showing fruit lying on the ground, then a pair of arms, then fruit in a basket.

The problem with a system like this is that there are too many symbols to cope with. A simplification would be to have separate symbols for fruit, basket and ground, and by drawing them in a particular relationship – perhaps with a special linking mark to suggest ‘on’ or ‘in’ – to combine those symbols. Now those simple pictograms are evolving into ‘ideograms’ – symbols that can put across an abstract concept like ‘on’.

This is the stage at which ‘proto-writing’, the immediate ancestor of true writing, is thought to have emerged. Somewhere between nine and six millennia ago, symbols were being used with a degree of visual structure to put across a simple message. It is difficult to say when this proto-writing emerged, but many archaeologists believe the best early example currently known is that on the Tărtăria tablets, found in the village of that name in central Romania (once part of Transylvania). The clay tablets, a few inches across, feature just such a combination of stylised drawings, symbols and lines. It’s possible that these were purely decorative, but everything about them suggests a message, a clear attempt to communicate information from one human brain to another.

Did you hear about my mummy?

Egyptian hieroglyphs form the best-known writing system that takes the next step – still using pictographs and ideographs, but in a much more formalised setting. The big advance here is that the pictures sometimes represent words, sometimes parts of words. Although hieroglyphs are the instantly recognisable script of ancient Egypt, they were only for special purposes. They were slow to produce and not well suited to, for instance, keeping accounts. A second system, hieratic, developed alongside hieroglyphs. It was also based on visual symbols, but was significantly more like a modern written script.

The Egyptians weren’t the first to use true writing. Another of the regional superpowers of the time, Sumer, had what was probably the first written language, a cuneiform script: one where the characters are built up from wedge-shaped marks (a bit like the side-on view of a tack) made with the end of a stylus. To look at, these small symbols seem little more than a tally. But they are far more. They are a means of expanding the human brain, spreading information from one person to another.

By around 4,000 years ago, writing was spreading like wildfire. The Chinese system dates back to this time, using a large number of symbols (around 5,000) that represent words or parts of words. Our own alphabet has a ragged history before reaching our current written form. The name ‘alphabet’ shows its Greek origins (alpha and beta being the first two characters of the Greek alphabet), though the characters we use have a more complex history.

Abjads to alphabets

The earliest known predecessor of our script is the proto-Canaanite alphabet. Technically it was an abjad, an alphabet without vowels. The vowels are either implied by position, or marked using small change marks, like accents. This written form was used in the Middle East from around 3,500 years ago and was taken up by the Phoenicians. The symbols were adapted for both Greek and Aramaic lettering. Greek is thought to be the first true alphabet with vowels treated similarly to consonants, developing around 3,000 years ago.

Our own Latin or Roman script was derived over time from the Greek, and just as the US and the internet have spread the use of English today, the Roman Empire spread the use of Latin lettering as their language became a common tongue, one that would outlast the empire itself by over 1,000 years. Isaac Newton’s greatest work, Philosophiae Naturalis Principia Mathematica, was written in Latin as late as 1687, while his Opticks, first published in 1704, though written in English, was translated into Latin for a wider audience.

It sounds capital

The Roman script we are familiar with – our capital letters with a few omissions – was the Roman equivalent of hieroglyphs. It was primarily used for carving in stone and important proclamations. Everyday writing used a different script called Roman cursive that looks part way between the capitals and modern lower case. Initially the letters varied greatly in size and placement, but over time they became more standardised in scale and more like our current lower case letters. Originally, though, capitals and cursive were two distinct schemes, and a writer would use one or the other, but gradually capitals crept in for emphasis in the midst of the cursive.

Exactly how capitals were to be used took a lot of time to settle down. In English, for instance, there was a period when they were only used to emphasise new sections like sentences, then a time when, like modern German, they were used on pretty well every noun, before settling on the current compromise. It wasn’t until printing came along that the two types of lettering would be called upper and lower case, referring to the moveable type that was used in printing until computerised printers became the norm. A page of print was bound together from a collection of individual letters on metal blocks. Capital letters would be kept in higher drawers or cases, while the ‘minuscule’ letters were stored in the lower cases.

Now we can see the true power of writing in enabling human beings to benefit from the power of their unique brains, taking them far beyond the capabilities of any natural creature on the planet. Think what you can do, thanks to writing: you can consult the wisdom of someone long dead, expanding the capabilities of your brain. You can support the working of your body by going online and purchasing something to eat from the other side of the world – or jot down a reminder on a Post-it note to ensure that you remember to do something important. And that’s just your direct use.

Hardly anything around you that makes you different from your ancestors 100,000 years ago would exist were it not for the written word being present to aid its development. The written word makes it practical to have law, science, and literature, to name but three. Of course before the existence of writing there was an oral tradition – there were storytellers, for instance, but the difference in capability brought about by writing and its impact on human beings was immense. Speech can do a vast amount, but when a topic gets too complicated, writing is necessary to back it up.

The written word is immensely powerful, and many of us feel that it has a kind of magic. There is something special about books and bookshops, something very physically satisfying about handling a book. (Equally there’s something special about a web search engine like Google, but that’s a different kind of magic.) Of course, as a writer I would say that books are special, because books are what I do, but still, it’s not an uncommon feeling. When written words are combined with a human’s practical ability to make things happen they are of almost limitless power.

Are you human?

Impressed though you should be with the power of your brain that leaks out into writing, there are some aspects of your mental capability that can be emulated by a computer. As already mentioned, any old PC is much better at arithmetic and probability than any of us will ever be, and computers are also able to beat chess grand masters. In other cases we are just about holding our own – take, for example, the Turing test.

This was a trial devised by code-breaking and computing pioneer Alan Turing as a way of telling whether computers had finally come of age and were rivalling humans for intelligence. If you could sit in one room and communicate down a wire with ‘someone’ and couldn’t tell whether that ‘someone’ was a computer or a person, then you could consider that the computer had achieved a form of artificial intelligence.

Over the years various programs have been written to try to interact convincingly with human beings, with various levels of success.

Experiment – Talking to computers

Go to www.universeinsideyou.com, click on Experiments and select the Talking to computers experiment. First try out Eliza, built into the page. This is one of the oldest computer programs designed to hold a conversation, written in the mid-1960s. It acts like a psychotherapist, echoing your statements back to you. It’s quite easy to mess up, but if you play the game and don’t try to be too clever, it is surprisingly good.

Then scroll down and click the link to take a look at Cleverbot. This is one of the best modern ‘chatbots’, as such programs are called. Even Cleverbot is relatively easy to confuse, but it has many more tricks up its sleeve than Eliza to attempt to look human.
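The echoing trick that Eliza relies on can be sketched in a few lines of Python. This is an illustration only, not Eliza’s actual code: the word list and the function names reflect and respond are invented for the example, and the real program used a far richer script of patterns.

```python
import re

# Hypothetical pronoun swaps, made up for this sketch.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "you": "I",
    "your": "my",
}


def reflect(statement: str) -> str:
    """Swap first- and second-person words so a statement can be echoed back."""
    words = re.findall(r"[a-z']+", statement.lower())
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Turn 'I am X' or 'I feel X' into a question; otherwise ask for more."""
    match = re.match(r"\s*i (?:feel|am) (.+)", statement.lower())
    if match:
        return f"Why do you say you are {reflect(match.group(1))}?"
    return f"Tell me more about why {reflect(statement)}."


print(respond("I am worried about my exams."))
print(respond("My computer hates me"))
```

The program understands nothing about exams or computers. It simply rearranges your own words, which is why it works well if you play along and falls apart as soon as you try to be clever.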

At the Techniche Festival in Guwahati, India in 2011, the Cleverbot chatbot beat the Turing test. Or at least that’s what has been claimed. In the test, 30 volunteers typed conversations, half with a human, half with a chatbot. Then an audience of 1,334 people, including the volunteers, voted on which conversations were with humans. A total of 59 per cent thought Cleverbot was human, making the organisers (and the magazine New Scientist) claim that the software had passed the Turing test.

By comparison 63 per cent of the voters thought that the human participants were human. This process can be a bit embarrassing for human participants who are thought to be a computer. I don’t think, though, that the outcome is really a success under the Turing test. The participants were only allowed a four-minute chat, which gives the chatbot designers an opportunity to use short-term tactics that wouldn’t work in a real extended conversation, the kind of interaction I envisage Turing had in mind.

And then there’s the location of the event – a key piece of information that is missing from the published report is how many of the voters had English as a first language. If, as I suspect, many of those voting did not, or spoke English with distinctly non-Western idioms, their ability to spot which participants were human and which weren’t would inevitably be compromised.

Would you kill to save lives?

Holding a conversation is one thing, but dealing with ethics is another. It’s hard to imagine programming a computer to have a true understanding of ethics. After all, we don’t necessarily even have a clear view of our own ethics. The theory may be straightforward, but when it comes to practice, it’s easy to make decisions that it is then difficult to justify. Here’s a well-known example …

Imagine you are in a railway control centre and you see that a runaway train is headed down the track. It is totally out of control – you can do nothing to stop it. It is heading for a set of points where you can choose which of two lines it will travel down. If you do nothing, it will head down line A and plough into and kill twenty people who are on the track celebrating the opening of a new railway charity. If you throw the switch it will head down line B and will kill a single individual who is clearing the line of rubbish.

Let’s be clear here: if you throw that switch you will directly cause someone to die who otherwise would not, but if you don’t throw the switch, twenty people will die. What do you do? Decide before reading on.

Now let’s slightly change the situation. Now you are standing on a bridge over the track. As before, a runaway train is heading down the track towards twenty innocent people, who will be killed if it isn’t diverted at the points. You can’t get to the controls, but there is a pressure switch beside the track below you that will flip the train onto a safe line, where no one will be killed. The only way to activate that switch is to drop something weighing twice your weight onto it. Sitting precariously on the parapet of the bridge is a very large person …

If you push that person off the bridge, where they will definitely be killed by the train, you will save the other twenty people. If you do nothing, the twenty people will die. What do you do?

The majority of people would throw the switch to divert the train and kill one person rather than twenty. But many could not bring themselves to push the person off the bridge, even though this is apparently making exactly the same sacrifice.

Psychologists will tell you that this is because you are ethically capable of killing someone remotely by throwing a switch to save others, but, apparently entirely illogically, you can’t face making the hands-on gesture. They point out that the same sort of shift has happened in warfare as the technology humans use to kill each other has gone from hand-to-hand combat to bullets and missiles. However, I personally think that, useful though this thought experiment is for getting insights into our ethical systems, it is flawed.

The trouble is that the two different railway scenarios are not equally plausible. The first example genuinely could happen. It would be entirely possible to throw a switch and transfer a train to a different track, killing one person instead of twenty. But it seems very unlikely that you would have a pressure switch that required twice your weight and that you happened to have a person sitting there and knew what they weighed. The original phrasing of the problem, the form which has been actively used in psychological testing, is even more implausible. It suggests that the person on the bridge is so fat that they can stop the train with their weight alone, showing a very poor understanding of physics on the part of the psychologists.

Worse than that, though, the psychologists are forgetting the impact of probability. The first test is not just more plausible as a scenario, but you could be happy that, technical failure excepting, when you throw the switch in the control room, the train will be diverted down the second track. However, even if you have been told that it will work, there is every possibility that pushing someone off a bridge could go wrong; they might fall in the wrong place, for example. The high level of uncertainty in the second test means that it would be much less appealing, even if there were no ethical concerns about taking a direct action to kill someone.
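The effect of that uncertainty can be put into rough numbers. The Python sketch below is an illustration under stated assumptions: the person who is sacrificed always dies, the twenty die only if the action fails, and the chance of success (p_success) is a figure you would have to guess. The function name expected_deaths and the 50:50 figure are invented for the example.

```python
def expected_deaths(p_success: float, at_risk: int = 20, sacrificed: int = 1) -> float:
    """Average number of deaths if you act, given the chance that acting works."""
    if not 0.0 <= p_success <= 1.0:
        raise ValueError("p_success must be between 0 and 1")
    # Assumption: the sacrificed person dies either way; the group dies only on failure.
    return sacrificed + (1.0 - p_success) * at_risk


control_room = expected_deaths(p_success=1.0)  # the switch is assumed to be reliable
bridge = expected_deaths(p_success=0.5)        # a guessed 50:50 chance that the push works

print(control_room, bridge)
```

With these made-up figures, acting in the control room costs one life on average, while acting on the bridge costs eleven. The second option looks far less attractive before any ethical qualms come into it.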

Trusting and ultimatums

Another experiment that you can carry out yourself gives powerful insights into the way we trust and also how we balance logic and emotion in our decision making – something else computers have problems with. We make decisions all the time, and this game really gets to the heart of what’s happening when we make a choice – because it’s not as simple a process as it may seem. The experiment is called the ultimatum game.

Experiment – Ultimatum game

Try this out next time you have some friends around to experiment on (or when you are next down the pub). You need two people and a small amount of money, which you have to be prepared to part with for the sake of the experiment.

Explain to the two people you want to carry out a simple experiment. You are going to ask each of them to make a decision about some money. They must not discuss their decision in any way. Put the money on the table in front of them, so it is clear and real. Explain that you are going to give them this money to share – there are no strings attached, simply a decision to make.

The first person has to decide how the money is split between them. He or she can split it however they like. The money can be split 50:50, the decider can keep it all, or they can split it any other way they like. (It helps if you make the money a nice easy amount to split this way.) The person deciding must not talk about the decision in any way, but merely announce how the money will be split. The second person will then say either ‘Yes’ and the two of them will get the money, split between them that way, or ‘No’ in which case neither of them gets any money.

This game has been undertaken many times in many circumstances. The logical thing for the second person to do is say ‘Yes’, as long as the first person gives them something. Even if they’re only offered a penny, it’s money for nothing. In practice, though, the second person tends to say ‘No’ unless they get what they regard as a fair proportion of the money.

What counts as a fair proportion varies from culture to culture. Some will accept as low as fifteen per cent, others expect a full 50 per cent. In Europe and the US we tend to expect around 30 per cent or more before saying ‘Yes’.
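The rules of the game are simple enough to write down as a short Python sketch. The function name play_ultimatum and the idea of giving the second person a fixed minimum acceptable percentage are assumptions made for illustration; real people are less tidy. Amounts are in pence to keep the arithmetic exact.

```python
def play_ultimatum(total_pence: int, offer_pence: int, min_acceptable_percent: int) -> tuple[int, int]:
    """Return (first person's payout, second person's payout) in pence."""
    if not 0 <= offer_pence <= total_pence:
        raise ValueError("the offer must be between nothing and the whole stake")
    # The second person says 'Yes' only if the offer reaches their threshold.
    if offer_pence * 100 >= min_acceptable_percent * total_pence:
        return total_pence - offer_pence, offer_pence
    # 'No' means neither of them gets anything.
    return 0, 0


# A ten pound stake, with the second person holding out for 30 per cent.
print(play_ultimatum(1000, 500, 30))  # an even split
print(play_ultimatum(1000, 100, 30))  # a mean offer
# A purely 'logical' second person accepts anything above nothing.
print(play_ultimatum(1000, 1, 0))
```

The even split is accepted, the mean offer leaves both players with nothing, and only the purely logical player walks away with the penny.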

What the experiment shows is that we consider trust and fairness worth paying for. We are willing to lose money in exchange for putting things right. If human logic were based purely on economics then this just wouldn’t make sense – you should always take the money. But your brain makes decisions based on a much more complex mix of factors than finance alone.

This is not to say that finance doesn’t have some input into the complex system of weightings that is involved in decision making. If, for example, a billionaire decided to play this game, and offered a total stake of ten million pounds, the chances are you would happily accept being offered just five per cent – £500,000. Unless you are also extremely rich, half a million pounds is just too life-changing an amount to turn down in order to teach someone a lesson and punish their lack of fairness.

It’s an interesting exercise to think to yourself just how little you would accept in such circumstances. Where between £500,000 and £1 (which most people would reject) would you draw the line?

Weighing up the options

This kind of game seems to directly reflect the way the brain’s decision-making capability functions. Different components of the decision are given weightings. The bigger the weighting the more important the factor is to the decision. These weighted values are then added together and whichever option gets the biggest weight wins. In the case of the ultimatum game, factors that are likely to be given weights include:

- How much money is involved?

- How much money do you already have and feel you need? (So, how important is the sum offered to you and your life?)

- How fair is the split that the other person comes up with?

- Is it for real? (Will you really get the money, or is it just hypothetical?)

- What is your relationship to the other person?

If a computer were undertaking this it would literally be multiplying the scores by the weightings, producing a set of numbers to compare. In the brain this kind of scoring is undertaken in an analogue fashion – it’s more about the strength of an electrical impulse or the concentration of a chemical – but the effect is very much the same.
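A computer’s version of this weighing-up might look something like the Python sketch below. Every number in it is invented: the weightings, the scores, and the two scenarios are there only to show the mechanics of multiplying scores by weightings and comparing the totals. The names weighted_total and decide are hypothetical.

```python
# Invented weightings: a bigger number means the factor matters more.
WEIGHTS = {"amount": 2, "need": 3, "fairness": 4, "real": 2, "relationship": 1}


def weighted_total(scores: dict[str, int], weights: dict[str, int] = WEIGHTS) -> int:
    """Multiply each score by its weighting and add up the results."""
    return sum(weight * scores.get(factor, 0) for factor, weight in weights.items())


def decide(options: dict[str, dict[str, int]], weights: dict[str, int] = WEIGHTS) -> str:
    """Pick whichever option has the biggest weighted total."""
    return max(options, key=lambda name: weighted_total(options[name], weights))


# Made-up scores for an unfair split of a small stake in the pub.
pub_game = {
    "accept": {"amount": 1, "need": 0, "fairness": -5, "real": 1, "relationship": 0},
    "reject": {"amount": 0, "need": 0, "fairness": 0, "real": 0, "relationship": 0},
}
# The same unfair split, but of a life-changing sum.
billionaire_game = {
    "accept": {"amount": 10, "need": 10, "fairness": -5, "real": 1, "relationship": 0},
    "reject": {"amount": 0, "need": 0, "fairness": 0, "real": 0, "relationship": 0},
}

print(decide(pub_game), decide(billionaire_game))
```

With these figures the unfairness outweighs a trivial sum, so the pub offer is rejected, but the same unfairness is swamped when the amount and the need are large.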

Allowing for all the factors

We like to think that we make logical decisions. Not the kind of cold logic deployed by Mr Spock, which would go for the money every time in the ultimatum game, but a more human logic that considers relationships, trust and fairness to be important as well as finances. And provided we do take into account all the factors that are coming into play, many human beings probably are logical in this fashion. But it’s easy to miss what’s really influencing a decision. The result can be an outcome that doesn’t make any sense in terms of, say, your long-term good, because your decision-making process gave greater weight to short-term pleasure.

You can see this happening all the time from relatively mild personal decisions – like whether or not to have that tasty but unhealthy junk food or chocolate bar – to truly life-threatening decisions involving taking hard drugs or undertaking a high-risk activity. Human beings are not very good at factoring long-term impacts into our decision making. We can be aware of these factors, we can know very well what the implications are, but short-term gain very often outweighs long-term benefits.

Economists have traditionally been particularly bad at understanding human decision making. They used to expect perfect rational behaviour, where ‘perfect’ and ‘rational’ are defined as being behaviour that optimises the financial benefit to the individual. But such an approach is naïve in the extreme when thinking about real human beings, as is now increasingly being realised.

It could be you

Just take the simple example of playing the lottery. You are extremely unlikely to win a major lottery. The chances are millions to one against (to be precise, 13,983,816 to one in the UK Lotto draw) – similar to your chance of being killed in a plane crash, or being struck by lightning. Yet every week lots of people take part. What’s going on?

In part it reflects our inability to handle probability. Just imagine if one day they drew the lottery results and the balls came out 1, 2, 3, 4, 5, 6. There would be an outcry. At best it would be assumed that the drawing mechanism was faulty and at worst it would be thought that there was fraud. There would probably be questions asked in parliament. And yet that sequence of numbers has exactly the same chances of being drawn as the numbers that popped out of the machine last Saturday. (In practice they were 29, 9, 15, 39, 17, 30.)
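The arithmetic behind this is easy to check. The Python sketch below assumes a draw of six balls from 49, which reproduces the 13,983,816 figure quoted above; the function name chance_of_exact_match is invented for the example.

```python
from fractions import Fraction
from math import comb


def chance_of_exact_match(chosen: set[int], total_balls: int = 49, balls_drawn: int = 6) -> Fraction:
    """Chance that one particular set of numbers is the set drawn."""
    if len(chosen) != balls_drawn or not all(1 <= n <= total_balls for n in chosen):
        raise ValueError("choose six different numbers between 1 and 49")
    # Every possible set of six is equally likely, so the numbers chosen make no difference.
    return Fraction(1, comb(total_balls, balls_drawn))


print(comb(49, 6))
print(chance_of_exact_match({1, 2, 3, 4, 5, 6}))
print(chance_of_exact_match({29, 9, 15, 39, 17, 30}))
```

Both sets come out with exactly the same chance, one in 13,983,816. The function never looks at which numbers were chosen, only at how many possible draws there are.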

It’s only when we see a sequence like 1, 2, 3, 4, 5, 6 coming out that we realise just how unlikely the chances are of winning – those astronomical odds don’t really make a lot of sense to our mathematically challenged minds. However, despite our poor ability to cope with such numbers, the mathematicians, scientists and economists who regularly call people stupid for playing the lottery entirely miss the point as well. They are using a very poor model of human decision making.

I think I understand probability reasonably well, for example, but I still play the lottery. Admittedly in a controlled way with a small set monthly budget, but I do play. So why do I do it? It involves the kind of rewards that conventional economics is not good at reflecting.

If the sum involved in playing is so small that I can consider it negligible (perhaps the equivalent of buying a weekly drink at a coffee shop), then I can easily offset the almost inevitable loss against a very low chance of winning an exciting amount. To add to the benefit side of the equation, with this style of play I get a small win roughly every couple of months. This will inevitably be for between £3 and £10, but there are still a few minutes of delicious anticipation after getting the ‘Check your account’ email from the National Lottery when it could be so much better.

One of the important factors in considering the decision to play to be rational is that I totally forget about my entry unless I do get one of those emails. I don’t anxiously check my numbers. I don’t know what my numbers are. As far as I am concerned, once the payment has been made the money has gone, just as if I had spent it on those coffees. That way, any win is pure pleasure, because it has no cost attached to it. Let’s face it, the only thing I’m likely to get the day after a visit to Starbucks is indigestion. (This is not casting aspersions on Starbucks. It’s just that although I like real coffee, it upsets my stomach.)

Economics gets it wrong

Decision making based on finance alone ignores any enjoyment gained. In fact it ignores any benefits other than hard cash. If you took such an approach in your normal life, you would never spend any money on anything that hadn’t got a clear financial benefit. Okay, you would buy food because you need to stay alive, but obviously you would select the cheapest food to give the necessary nutritional value. You would never go to the cinema, or theatre or to a concert. You would never buy a present or a treat. You would never eat in a restaurant, because you can always make something cheaper at home. The economist’s ‘perfect’ life isn’t worth living.

Did you do that consciously?

We have seen that you make decisions all the time to do things based on this complex mix of benefits, often with a skewed view towards short-term gain. But on the whole you probably think that your decision making is conscious. It’s the ‘you’ inside your head, your conscious mind, that you assume is making the decisions.

When you are thinking about something – this question for example – where does that thought seem to take place? Where do you imagine ‘you’ to be located?

If you are like most people you will locate your conscious mind roughly behind your eyes, as if there were a little person sitting there, steering the much larger automaton that is your body. You know there isn’t really a tiny figure in there, pulling the levers, but your consciousness seems to have a kind of independent existence, telling the rest of your body what to do.

This simple picture of your conscious mind as something inside your head that pulls (imaginary) levers to make your body act faces one big problem. Modern brain studies show that a frightening number of your actions are actually controlled by the unconscious mind. It’s still ‘you’ that makes the decision – but not the conscious you, the active bit you think of as being in charge.

Let’s imagine you are sitting outside with a ball alongside you. You pick up the ball and throw it. What happened in your brain? The natural assumption is that your conscious mind thought ‘Okay, I’m going to throw this ball,’ signals were sent through your nervous system, and your arm did the job. I’m not suggesting you literally had to consciously, if silently, verbalise ‘Okay, I’m going to throw this ball,’ but you made the conscious decision to do it, then it happened.

Brain activity is associated with increased blood flows. By monitoring brain activity using fMRI (functional magnetic resonance imaging) scans detecting blood flows in the brain, it’s possible to see when the decision to perform the action is taken. This typically happens in the unconscious mind about a second before the hand begins to move. The conscious awareness of the decision takes place about one third of a second later. So, before you think ‘I’m going to throw this ball,’ your brain knows it is going to do it and is getting fired up. Only then do you become aware of the decision.

This sounds weird and rather scary. The decision is made before you are conscious of it. It’s almost as if you were a kind of robot, with no true free will. But in reality it’s more complex than this. Firstly, there is time for your conscious mind to abort the action. In the unlikely event you find yourself starting to do something you don’t really want to do, you can stop it. And more significantly, it’s not some alien external force that makes the initial decision, it is still you. You just aren’t conscious of it.

Even so, this unconscious decision making does emphasise how complex our brain activity is, and how it really is very difficult to be definitive about how consciously individuals decide to do things (and perhaps to what extent they should be punished for doing badly or rewarded for doing well).

Mood swings and comfort breaks

A significant difference between the brain and a computer is that the brain is influenced much more by the environment it works in. You might think that your computer occasionally gets into a bad mood, but in reality, software glitches apart, it will make the same decision every time if presented with the same data. Your brain is much more likely to change its assessment due to outside influences.

One obvious example is mood. It’s all too easy to make a bad decision simply because you are in a foul mood and are prepared, as the confusing saying goes, to cut off your nose to spite your face. You will make a decision that is bad for you, simply to irritate someone else or to be difficult. Surprisingly, two pieces of research in 2011 also identified that the state of your bladder has an influence on your decision making.

One paper, describing the rather unfortunately named concept (bearing in mind we’re dealing with bladder control) of ‘inhibitory spillover,’ explains how, when we’re under pressure to urinate, we do better at decisions where self-control is important. It’s as if the fact that you are exerting conscious control over your muscles means that you also have better control of what would otherwise be knee-jerk decisions. These could be anything from making a high-speed identification of someone to taking financial decisions that result in short-term benefit but long-term problems.

The other piece of work shows that a full bladder isn’t always a good thing: it can also make for bad decision making. As anyone who has tried to drive while desperate for the loo can confirm, this study found that we find it harder to pay attention and to keep information in our short-term memory when faced with an overfull bladder. This means an increased risk of having an accident when under such pressure.

It might seem that these two pieces of work are contradictory, but your brain is complex enough for these two results to be complementary in outcome. The fact that you find it harder to concentrate and retain information in memory when you have a full bladder is likely to reinforce a tendency not to jump in and make impulsive decisions, but rather to take a step back and exert more self-control. This is fine when you have plenty of time, but not when making constant, important decisions. It’s probably best, for example, that airline pilots and truck drivers have regular comfort breaks.

The brain’s own painkillers

We also need to consider just how important the brain is when it comes to feeling pain. Although we associate pain with the area where we are hurt, the feeling of pain is generated by the brain, which means the brain can also turn off this feeling. We’ve seen earlier the way that swearing (page 43) and aspirin (page 146) can relieve pain, but another surprisingly effective approach is the use of placebos. These are dummy medicines with no content, usually sugar pills, which are used to test the effectiveness of new drugs. If a drug does no better than a placebo, it isn’t worth using.

However, it has been known for a long time that placebos do themselves have positive effects. If your brain believes that the pill you are taking will have a beneficial effect it often will. This is particularly true of pain relief. The brain has its own natural ways of switching off the pain signals, and these can be encouraged into action with a placebo. In the case of pain relief, what a placebo does is make the brain assume that pain levels will reduce, and the brain makes this a self-fulfilling prophecy by releasing natural painkillers like the morphine-related endorphins.

Homeopathic misdirection

This appears to be the way that many alternative medicines work. Homeopathic treatments, for example, make no sense as an actual medicine. Homeopathy is supposed to work by combining an outdated medical idea that taking a small dose of a poison causes benefit and a magical concept that because something is similar to something else, it will have the same effect. So you take a small dose of a poison that causes symptoms similar to those you are suffering from and the result is to alleviate the suffering.

This makes no medical sense, and in practice homeopathic treatments are diluted so much that there will rarely be a single molecule of the original active substance in the liquid that is then dripped onto a sugar pill. The result is that a homeopathic pill is exactly the same as a placebo, and similarly it can have good effects by encouraging the brain of the person taking it to make things better.
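To get a feel for the scale of the dilution, here is a rough calculation in Python. It rests on assumptions that are not in the text above: that the preparation starts with one mole of the active substance (about 6 × 10^23 molecules) and that each step dilutes it by a factor of 100, as on the homeopathic ‘C’ scale. The function name molecules_remaining is invented for the example.

```python
AVOGADRO = 6.02214076e23  # number of molecules in one mole


def molecules_remaining(steps: int, start_moles: float = 1.0, factor: int = 100) -> float:
    """Expected number of original molecules left after repeated dilution."""
    if steps < 0:
        raise ValueError("steps cannot be negative")
    # Assumption: each step keeps one part in `factor` of the previous mixture.
    return start_moles * AVOGADRO / factor ** steps


for steps in (6, 11, 12, 30):
    print(steps, molecules_remaining(steps))
```

On these assumptions the expected count falls below a single molecule at the twelfth step, and after thirty steps it is vanishingly small.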

Some supporters of homeopathy argue that it can’t be a placebo as it also works with some animal problems, and the animals can hardly be fooling themselves as they have no idea what is going on. There seem to be three factors here. A proportion of the animals would get better whatever was done, but the owner would assume the remedy helped. Other owners fool themselves into thinking that an animal has become more comfortable (you can’t actually tell the level of pain it is feeling, for instance), and finally an owner may well combine giving the treatment with extra care and attention, which itself will have a positive placebo effect on the animal.

The same applies to many other alternative treatments – acupuncture is a good example where there is little evidence of the treatment having any real benefit over and above being a placebo.

The ethics of placebos

The interesting thing here is whether or not this means that these treatments, or treatment using an explicit placebo, should be used. Many scientists have the knee-jerk reaction that they are unethical. To make effective use of a placebo (whether labelled alternative medicine or substituting for conventional medication) the person giving the treatment has to lie to the patient. It involves deception or self-deception.

The difficult ethical question is whether or not it is acceptable to deceive people in order to make them feel better. The placebo effect can be quite powerful, and is less likely to have side effects than many conventional medications. But is it possible to justify using deception to achieve positive results? Do the ends justify the means?

You might feel one answer would be that it ought to be justifiable, as long as it is cheap. After all, a lot of medicines are expensive. Given that a placebo (or a homeopathic treatment) is just a sugar pill, a bottle full should only cost a few pence. This might seem a way to justify what would otherwise be a cruel deception.

Unfortunately, research has also shown that expensive placebos work better than cheap ones, when the people taking them are aware of the cost. When test subjects were given two placebo painkillers, one costing $2.50 per pill and the other $0.10 per pill, then given electric shocks, the subjects on the more expensive sugar pills experienced considerably better pain relief.

What is certainly true, though, is that the deception could only be justified for the use of placebos and alternative medicine if it had a clear benefit and no disadvantage; there have, after all, been examples where such deception has resulted in suffering and death. Where a patient is given a homeopathic remedy or other alternative medicines to prevent malaria or ‘cure’ cancer, HIV and other life-threatening diseases, as has happened all too often, it can produce deadly results as well as raising false hope. If taking these remedies results in avoiding conventional treatment it can have terrible consequences, and deserves to be condemned.

A placebo is a mechanism that misleads the brain, using it to influence the body. Like all the ways your brain and body function, this mechanism has evolved through many generations. It’s time to return to the mirror, to consider your body as a whole, and how it came to be here at all.