CHAPTER 14

ASSESSING DESIGN

Ming-Chien Hsu, Monica E. Cardella, and Şenay Purzer

Purdue University

ABSTRACT

Engineering design, an integral part of engineering education, is a challenging process to describe, teach, and assess. In this chapter, we describe the way we explored design process assessment in the K–12 setting. An instrument that was previously used to assess college students and professional engineers was adapted for elementary students. The students were asked to critique an engineering design process. Their responses were coded for occurrences of design process concepts. We validated the results by comparing the frequency of the occurrences of the responses in the instruction group and the comparison group of students before and after the school year. A version was created for elementary teachers and validated through comparing performances before and after a week-long engineering workshop. While there are areas for continual development and improvement, this method of evaluating design process knowledge has the potential to provide formative assessment of design learning.

INTRODUCTION

Design is a distinguishing feature of engineering (Simon, 1996) and is integral to all engineering disciplines (Atman et al., 2005). Design is also multifaceted: exploratory, rhetorical, emergent, opportunistic, reflective, risky, and an important human endeavor (Cross, 1999). While design is a complex activity, it is also accessible and engaging for a wide range of learners, including children in preschool and elementary schools. For example, design is a central concept within the Engineering is Elementary curriculum currently in use on a national scale. Within this curriculum, design is taught as a process involving five main activities: asking, imagining, planning, creating, and improving (Cunningham & Hester, 2007). Although the process is portrayed in a simplified five-activity cyclical model, the process retains the complexities of design—the iterative nature of design, the challenge of not only solving but framing problems (through the process of “asking” questions), and the need to imagine and plan before creating.

Recent studies have provided evidence that learning through engineering design supports students’ conceptual understanding and skill development (Kolodner et al., 2003; Mehalik, Doppelt, & Schunn, 2008; Sadler, Coyle, & Schwartz, 2000). For example, Kolodner et al. (2003) incorporated a design process into inquiry-oriented middle school classrooms to help students become critical thinkers and informed decision makers. Also, Mehalik et al. (2008) found that middle school students who learned through a design-based approach learned science concepts more effectively and retained them better than those using an inquiry approach. In alignment with these studies, recent reports on engineering education have also indicated that the design process should be an important part of K–12 engineering learning (Committee on K–12 Engineering Education; National Academy of Engineering and National Research Council, 2009) and a key focus for assessment in precollege engineering education (Cardella, Salzman, Purzer, & Strobel, 2014).

As states are beginning to adopt the Next Generation Science Standards (NGSS Lead States, 2013), schools are adding engineering content, including design, to their curricular experiences. Besides putting thought into appropriate methods for teaching design, it is also necessary to clarify how to assess design knowledge and skills. Assessments of design understanding can provide important feedback for learners and instructors to further the learners’ understanding. They can also provide important insights for researchers investigating the impacts of different interventions aimed at increasing understanding of design. In this chapter, we describe existing design assessment approaches, as well as our efforts to develop assessment instruments to measure elementary school students’ and teachers’ understanding of the engineering design process.

THEORETICAL FRAMEWORK

In the development of the assessment instruments to measure students’ and teachers’ understanding of design, we adopted Pellegrino et al.’s (2001) framework describing assessment as “reasoning from evidence” and consisting of three linking elements: cognition, observation, and interpretation. The framework has been used extensively when structuring assessment, both for program assessment and classroom assessment. For example, the framework was used as a framing concept in evaluating young children’s work (Helm, Beneke, & Steinheimer, 2007) and in making sense of complex assessment (Mislevy, Steinberg, Breyer, Almond, & Johnson, 2002).

Cognition refers to beliefs about how people learn (Pellegrino et al., 2001). Previous design research results suggest that the design process that people use might be indicative of the kinds of design thinking that they may or may not use, such as reflective practice (Adams, Turns, & Atman, 2003). Also, research suggests that design language shapes the knowledge that people have about design (Atman, Kilgore, & McKenna, 2008); we believe that design language also reflects the knowledge that people have about design. Thus we expect people with a better understanding of the design process not only to give a more comprehensive view of the process but also to exhibit deeper reasoning abilities.

Observation refers to the task or situation that will prompt learners to demonstrate the knowledge or skills (Pellegrino et al., 2001). We used a design scenario as the type of task that would prompt respondents’ thinking and answering, where the goal of the design scenario was to elicit participants’ understanding of design and ability to notice and discuss different aspects of a design process. The scenario is described in more detail later in the chapter.

Interpretation refers to a method of interpreting the performance to make sense of the observations gathered from the task. We referred to the content and the pedagogical perspectives of design learning when we interpreted the data gathered, and we made a first attempt at developing a set of rubrics for quantitative assessment.

After considering Pellegrino’s framework and the three linking elements of cognition, observation, and interpretation, we asked the question: How can design process learning in elementary school contexts be assessed with alignment to our understanding of design learning?

INSTRUMENT DEVELOPMENT PROCESS

Content development

In order to assess elementary students’ and teachers’ design knowledge, we began our instrument development process by considering the content that the instrument would need to assess. While little research has been conducted to characterize elementary students’ or teachers’ understanding of engineering design or their engineering design skills, the literature contains many examples of expert-novice studies comparing college students at different points in their college studies, as well as comparing college students to practicing engineers (Atman et al., 2007; Atman, Cardella, Turns, & Adams, 2005; Atman, Chimka, Bursic, & Nachtmann, 1999). From this review of the literature, we determined that the instrument would need to capture differences in respondents’ understanding of problem scoping (i.e., problem-definition, information-gathering, and problem-framing activities), idea generation, and iteration. In addition, the instrument would need to capture respondents’ understanding of design as a process, rather than respondents’ understanding of individual activities.

The next step in our instrument development process was a review of existing instruments used to assess engineering design process knowledge. The Museum of Science, Boston, uses knowledge questions to assess elementary students’ understanding of individual activities, but does not assess students’ understanding of design as a process (Lachapelle & Cunningham, 2007). Hirsch and her colleagues have, however, looked at middle school students’ understanding of design process through interviews in which students were presented with scenarios of children engaged in design activities. Students needed to identify the design steps that were being used or the subsequent step that should be used (Hirsch, Berliner-Heyman, Carpinelli, & Kimmel, 2012). Other instruments have been developed to measure college students’ design learning: concept maps (Sims-Knight, Upchurch, & Fortier, 2005a; Turns, Atman, & Adams, 2000; Walker, 2005; Walker & King, 2003), simulation (Sims-Knight, Upchurch, & Fortier, 2005b), a knowledge test (Bailey, 2008; Bailey & Szabo, 2006), verbal protocol analysis (Adams et al., 2003; Atman & Bursic, 1998; Atman et al., 1999), design journals (Seepersad, Schmidt, & Green, 2006; Turns, 1997), surveys (Purzer, Hilpert & Wertz, 2011; Adams & Fralick, 2010; Eastman, Newstetter & McCracken, 2001), and scenarios (McMartin, McKenna, & Youssefi, 2000). We reviewed these previous approaches to assessment to consider the appropriateness of the methodologies for our context, as well as the potential for adapting actual instruments for use with elementary students and/or teachers.

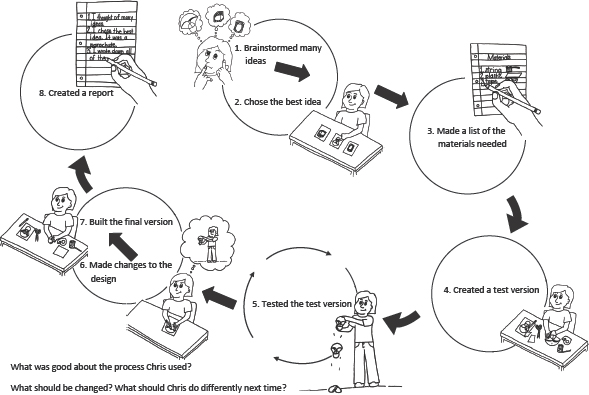

For the elementary student population, we made the following considerations. First, the task should be prompted. Second, the task should be stand-alone for teachers to use in the classroom. Based on these considerations, we identified an existing instrument as suitable to adapt for our purpose. Bailey and his colleagues developed an instrument to prompt students to critique a design task laid out chronologically and presented as a Gantt chart (Bailey & Szabo, 2006). Bailey has used this design process knowledge task to identify differences between freshman and senior engineering students’ understanding of design process. In order to further validate the instrument, Bailey also administered the task to practicing engineers and compared his findings to other published research on expert engineers’ design practices (Bailey, 2008). We changed the initial description in Bailey’s task so that it is developmentally appropriate for elementary students (please refer to Figure 14.1). We also replaced the Gantt chart with alternative pictures depicting a child’s design process. As in Bailey’s task, the main instructions remained the same; the students were asked to (1) comment on what is good about the design process and (2) comment on how the process can be improved. The changes made were reviewed by an external expert in K–12 engineering education research and an external expert who has served as an elementary science specialist. The version of the instrument depicted in Figure 14.1 is our current version of the instrument; it matches the original version in content, but the original drawings have been replaced with more professional versions.

For the elementary teacher version of the task, many of the methodologies and actual instruments used with the college students would have been appropriate. However, to facilitate possible investigations of student design understanding in relation to their teacher’s understanding of design, we decided to modify Bailey’s task for the teachers as well. In our initial version of the task, teachers were asked to critique a Gantt chart (akin to the college student version of the task). The scenario provided for the teachers suggested that the Gantt chart represented a student’s design process: “Imagine that you asked your students to design a container to keep an egg safe during an egg drop contest. Now imagine that we were able to capture one of the students’ design process and create the following table showing the different activities that she/he engaged in, how much time was spent on each activity, as well as the student’s sequence of events.”

As a part of the validation process, we pilot tested the student instrument with 37 elementary students at the beginning and end of a school year in which they had received various degrees of engineering instruction. We refer to the group of students who received engineering instruction as the instruction group. These students were second through fourth graders from 10 different classrooms with teachers who had attended a week-long teacher professional development program on implementing engineering content in K–12 classrooms. The student instrument was also administered at the beginning and end of the school year with an additional 34 students from matching grade levels who did not receive engineering instruction (the comparison group). An interviewer talked with students individually, showed them the illustration of a child’s design process (Figure 14.1), and prompted the students’ responses. We also pilot tested the teacher instrument with the teachers for the instruction group before and after the week-long teacher development program that occurred during the summer, as well as with a second group of additional teachers. A total of 62 teachers participated in the study. Additional details about the teacher professional development program, the classroom activities, recruitment methods, and demographic details are described in other publications (e.g., Hsu, Cardella, & Purzer, 2010).

The audio recordings of the interviews with the students were transcribed and analyzed using content analysis and an open coding method (Strauss & Corbin, 1990) by two independent coders. The initial kappa coefficient was 0.604, and differences were later resolved by consensus. Seven coding categories emerged. We mapped five of the coding categories to the language used in the Engineering is Elementary design process model (Ask, Imagine, Plan, Create, Improve). The additional two coding categories were “Test” and “Document.” Please refer to Table 14.1 for definitions and examples of each coding category.

Table 14.1. Definition and examples of each coding category.

| Category | Explanation: Indicating that design process should include … | Examples of specific terms that children used |
| --- | --- | --- |
| Ask | Asking about the details of the problem and constraints | We asked questions about how is it going to make it more soft or is it going to be like a real egg. |
| Imagine | Brainstorming ideas and picking a good idea | He thought about it. Because if you think about it and drew it, it helps you better to pick which one and helps you do good. He wrote down his ideas and he picked one of them. He’s brainstorming and trying very hard. |
| Plan | Planning ahead, including the materials needed for finishing the design | He said what was going to before he started doing all this. He made a list of the materials he may need like a bucket. |
| Create | Creating and building | He created something. He built it differently. |
| Improve | Making the design even better | If it didn’t work too well, she might want to make a few more changes than she did. He improved it. He was fixing his project he was redoing it to make it not break the egg. |
| Test | Testing out the prototypes built | You don’t know if it works if you don’t test them. He tested the test version… . So he can see what he needs to add. |
| Document | Taking notes of what ideas came up and what was done | He wrote a report about it… . So that ummm everybody else knows. He’s supposed to write what he think. Then if he forget, he can read his list. |
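The inter-coder agreement reported above is a kappa statistic. As a minimal sketch of how Cohen’s kappa can be computed for two coders, the following Python example assumes that each coder assigns exactly one category to each transcript segment; the chapter does not state the unit of coding, so that setup, the function name `cohens_kappa`, and the sample labels are all illustrative assumptions rather than the study’s procedure or data.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who labeled the same segments."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of segments.")
    n = len(coder_a)
    # Proportion of segments on which the two coders agree.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance, from each coder's label frequencies.
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels for ten segments (not data from the study).
coder_a = ["Plan", "Create", "Test", "Create", "Imagine",
           "Plan", "Test", "Create", "Improve", "Document"]
coder_b = ["Plan", "Create", "Test", "Imagine", "Imagine",
           "Create", "Test", "Create", "Improve", "Plan"]

kappa = cohens_kappa(coder_a, coder_b)
print(round(kappa, 3))  # 0.625
```

In this made-up example the coders agree on 7 of 10 segments, chance agreement is 0.2, and kappa is therefore (0.7 − 0.2) / (1 − 0.2) = 0.625.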

We calculated the number of design concepts in each student’s response. The sum of the number of concepts was counted as each student’s total design process knowledge score. Each student had a pre-score and a post-score. The highest possible score for students was 7 points. The teacher written responses were analyzed in much the same way, except that they did not need to be transcribed, and an additional code of “time” was used. Because the teacher version of the instrument included a Gantt chart, teachers were able to comment on the amounts of time that were allocated to the different design activities. The remainder of the codes were the same, although the specific terms that teachers used were different due to differences in the task as well as the teachers’ advanced education and training. We calculated the number of design activities (concepts) included in each teacher’s response. The sum of the number of concepts was counted as each teacher’s total design process score. Each teacher had a pre-score and a post-score. The highest possible score for teachers was 8 points.
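The scoring rule described above can be sketched in a few lines of Python. This sketch assumes that each category counts at most once per response, which is what the 7-point and 8-point maximums imply; the function name `design_process_score` and the sample responses are hypothetical and are not taken from the study.

```python
# Category names follow Table 14.1; "Time" is the additional teacher-only code.
STUDENT_CATEGORIES = ("Ask", "Imagine", "Plan", "Create", "Improve", "Test", "Document")
TEACHER_CATEGORIES = STUDENT_CATEGORIES + ("Time",)


def design_process_score(coded_segments, categories):
    """Count the distinct categories that appear at least once in a response."""
    unknown = set(coded_segments) - set(categories)
    if unknown:
        raise ValueError(f"Unrecognized codes: {sorted(unknown)}")
    return len(set(coded_segments))


# Hypothetical coded responses for one student (not data from the study).
student_pre = ["Create", "Create"]
student_post = ["Plan", "Create", "Test", "Test", "Improve"]

pre_score = design_process_score(student_pre, STUDENT_CATEGORIES)
post_score = design_process_score(student_post, STUDENT_CATEGORIES)
print(pre_score, post_score)  # 1 4
```

Repeated mentions of the same category do not raise the score under this assumption, so the maximum equals the number of categories in the relevant version of the instrument.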

To collect evidence of the validity of the instrument and determine if the student version of the instrument could detect across-group differences, we conducted a Mann–Whitney test (since the data violated the assumptions of parametric tests) using SPSS version 19 to compare the instruction group and the comparison group regarding their total design process knowledge scores. At a significance level of 0.05, the pre-test total score of the instruction group (Mdn = 1) did not differ significantly from that of the comparison group (Mdn = 1) (U = 700, z = 0.845, p = 0.398, r = 0.10); at the beginning of the school year, the two groups of students performed equally well on the task. By the end of the school year, the instruction group scored significantly higher on the post-test than on the pre-test, as revealed by a Wilcoxon signed rank test (U = 293.00, z = 2.09, p = 0.037, r = 0.24). By contrast, the comparison group’s pre-test and post-test scores did not differ significantly (U = 144.00, z = 1.48, p = 0.138). Specifically, we noted that in contrast to all other categories that improved in the post-test, few students commented on the aspect of “ask,” that is, asking in order to understand the problem and the constraints. This was the case for both groups in both the pre-test and the post-test.
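The chapter does not state how the effect sizes r were obtained. A common convention for rank-based tests is r = |z| / √N, where N is the number of observations; the short Python check below assumes that convention, and further assumes N = 37 + 34 for the between-group comparison and N = 2 × 37 (two observations per student) for the paired comparison. Under those assumptions the reported values are reproduced.

```python
import math


def effect_size_r(z, n_observations):
    """Effect size r = |z| / sqrt(N), a common convention for rank-based tests."""
    if n_observations <= 0:
        raise ValueError("n_observations must be positive.")
    return abs(z) / math.sqrt(n_observations)


# Between-group pre-test comparison: 37 + 34 students, z as reported.
r_between = effect_size_r(0.845, 37 + 34)

# Paired pre/post comparison for the instruction group: assumes N counts
# both observations from each of the 37 students.
r_within = effect_size_r(2.09, 2 * 37)

print(round(r_between, 2), round(r_within, 2))  # 0.1 0.24
```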

To examine the teacher data and investigate the teacher instrument’s ability to distinguish between pre- and post-training performance, we performed the Wilcoxon signed rank test and compared total pre-test scores to total post-test scores. The test showed that there was a significant difference between the pre and post total design scores (z = 3.01, p < 0.01, r = 0.28). We further looked into which aspects of the design process improved after the workshop. The McNemar test on the paired two-level data at a significance level of 0.05 showed a significant increase in the number of participants mentioning the aspects of create (25% vs. 43%) and time (48% vs. 80%) in the post-test as compared to the pre-test.
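For readers unfamiliar with the McNemar test, the sketch below shows its exact (binomial) form, which depends only on the discordant pairs: teachers who mentioned a category in the post-test but not the pre-test, and vice versa. The chapter does not say which variant of the test was used and does not report the discordant counts, so the function name `mcnemar_exact` and the counts below are purely hypothetical.

```python
from math import comb


def mcnemar_exact(gained, lost):
    """Two-sided exact McNemar p-value from the two discordant-pair counts.

    gained: participants who mentioned the category only in the post-test.
    lost:   participants who mentioned the category only in the pre-test.
    """
    n = gained + lost
    if n == 0:
        return 1.0  # no discordant pairs, so no evidence of change
    k = min(gained, lost)
    # Probability of k or fewer "successes" under Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical counts (not data from the study): 12 teachers newly mention
# a category after the workshop, 2 stop mentioning it.
p_value = mcnemar_exact(gained=12, lost=2)
print(round(p_value, 4))  # 0.0129
```

Participants whose answer did not change between pre-test and post-test do not enter the calculation, which is why the paired percentages alone are not enough to rerun the test.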

DISCUSSION

We considered validity when developing the instrument. In the content aspect, we considered the relevance of content and task to the target population of elementary students, reviewed existing instruments, and adapted a suitable instrument to suit the cognitive ability of our population. We likewise considered the relevance of content and task for the teacher population, and ultimately decided to structure the task in a way to facilitate comparisons between students and teachers. For the substantive aspect, there is empirical evidence to show that when university students and practitioners engage in this task, they are exhibiting behaviors that are consistent with their design process knowledge and skills (Bailey, 2008). We also know that the patterns exhibited by our participants are consistent with the patterns of expert-novice differences exhibited by other groups, where both the teachers and students in this study exhibited behavior that was more similar to novice behavior. For the generalizability aspect, we provide more details of our studies in our other publications (Hsu et al., 2010) for future studies to interpret across population groups and settings. For the structural aspect, we conducted pilot tests to explore and evaluate how the scoring structure reflects students’ gained understanding of design.

The results of the pilot suggested that the teacher instrument and the rubrics were able to reflect the differences in teachers’ knowledge before and after a week-long workshop and students’ knowledge after the engineering lessons. The teachers participated in extensive engineering design and engineering education activities during the week-long teacher development program; the students partook in at least one engineering lesson during the school year.

The instrument has the potential to provide information on the content and pedagogy aspects of design learning. For example, the instrument can be used to assess students’ naturalistic learning progression of design process, which is crucial for designing developmentally appropriate content for engineering design learning. Also, as a form of formative assessment, the teachers can adjust activities in the next engineering unit to emphasize the aspects of design process that students exhibit more difficulty learning.

Limitations

One limitation of the current instrument is a lack of comparison between survey scores and actual design behavior. As our next step, we plan to further validate the student version of the instrument by conducting concurrent observation of students doing design to address the external aspect of construct validity (Messick, 1995). We also plan to establish rubrics with respect to the complexity of students’ reasoning. While we still have further work to do in terms of refining the instrument and administering it to larger groups of elementary school students, the interview data have already provided some interesting examples of what some students learned about the design process. We believe that after further revisions, this instrument may be useful for capturing what students learn about the engineering design process by participating in design-based instruction or as a form of formative assessment to give teachers feedback on future instruction. This would complement the progress that our community has made in documenting the benefits of design-based instruction for students’ science learning. Similarly, we are continuing to refine and pilot test the teacher version of the instrument in order to provide a useful tool for measuring the impact of various teacher development activities. Finally, we are also working to create versions of the instrument that are appropriate for other groups, including a version for high school students that is currently underway.

Alternate approaches

While we have focused much of this chapter on our instruments and our instrument development process, there are other approaches to assessing design. In this section we describe in more detail some of the approaches used for assessing college students’ understanding of engineering design as well as some of the approaches used for pre-college populations. As noted previously, at the college level, approaches have included concept maps (Sims-Knight et al., 2005a; Turns et al., 2000; Walker, 2005; Walker & King, 2003), simulation (Sims-Knight et al., 2005b), a knowledge test (Bailey, 2008; Bailey & Szabo, 2006), verbal protocol analysis (Adams et al., 2003; Atman & Bursic, 1998; Atman et al., 1999), design journals (Seepersad et al., 2006; Turns, 1997), surveys (Purzer, Hilpert & Wertz, 2011; Adams & Fralick, 2010; Eastman, Newstetter & McCracken, 2001), and scenarios (McMartin, McKenna, & Youssefi, 2000). Each of these approaches could be appropriate for use with teachers, as well as older pre-college students (i.e., high school students). In particular, in recent work, Mentzer and Park (2011) have used verbal protocol studies to investigate high school students’ design processes.

CONCLUSION

Engineering design is a complex process that is challenging to describe, teach, and assess. However, it is also an integral part of engineering, and therefore an integral part of precollege engineering education. Thus it is important to continue to investigate new approaches for teaching engineering design to pre-college students and to continue to develop approaches for assessing pre-college students’ and educators’ design process knowledge and skills. Recent work (including, but not limited to, our own) has yielded some tools for educators to use to provide feedback to learners (whether the learners are teachers participating in a workshop or students in a classroom) as well as to provide insights to researchers investigating the effectiveness of different interventions.

ACKNOWLEDGMENTS

This work was supported by a grant from the National Science Foundation (DRL 0822261). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

We would like to thank Dr. Heidi Diefes-Dux for leading the data collection efforts of the project and the many students at INSPIRE for assisting in data collection and transcription. We would also like to thank DeLean Tolbert, Brittany Mihalec-Adkins, and Bailey Mantha-Nagrant for their help with coding the data.

REFERENCES

Adams, R. S., & Fralick, B. (2010). A conceptions of design instrument as an assessment tool. Proceedings of the IEEE Frontiers in Education Conference. Washington, DC.

Adams, R. S., Turns, J., & Atman, C. (2003). Educating effective engineering designers: The role of reflective practice. Design Studies, 24(3), 275–294.

Atman, C., Adams, R. S., Cardella, M. E., Turns, J., Mosborg, S., & Saleem, J. (2007). Engineering design processes: A comparison of students and expert practitioners. Journal of Engineering Education, 96(4), 359–379.

Atman, C., & Bursic, K. (1998). Verbal protocol analysis as a method to document engineering student design processes. Journal of Engineering Education, 87(2), 121–132.

Atman, C., Cardella, M. E., Turns, J., & Adams, R. S. (2005). A comparison of freshman and senior engineering design processes: an in depth follow-up study. Design Studies, 26(4), 325–357.

Atman, C., Chimka, J., Bursic, K., & Nachtmann, H. (1999). A comparison of freshman and senior engineering design processes. Design Studies, 20(2), 131–152.

Atman, C., Kilgore, D., & McKenna, A. F. (2008). Characterizing design learning through the use of language: A mixed-methods study of engineering designers. Journal of Engineering Education, 97(3), 309–326.

Bailey, R. (2008). Comparative study of undergraduate and practicing engineer knowledge of the roles of problem definition and idea generation in design. International Journal of Engineering Education, 24(2), 226–233.

Bailey, R., & Szabo, Z. (2006). Assessing engineering design process knowledge. International Journal of Engineering Education, 22(3), 508–518.

Cardella, M. E., Salzman, N., Purzer, Ş., & Strobel, J. (2014). Assessing engineering knowledge, attitudes, and behaviors for research and program evaluation purposes. In S. Purzer, J. Strobel, & M. E. Cardella (Eds.), Engineering in pre-college settings: Research into practice. West Lafayette, IN: Purdue University Press.

Cross, N. (1999). Natural intelligence in design. Design Studies, 20(1), 25–39.

Cunningham, C., & Hester, K. (2007). Engineering is elementary: An engineering and technology curriculum for children. Proceedings of the American Society for Engineering Education Annual Conference & Exposition. Honolulu, HI. June 24-27.

Eastman, C., Newstetter, W., & McCracken, M. (2001). Design knowing and learning: Cognition in design education. Oxford, UK: Elsevier.

Helm, J. H., Beneke, S., & Steinheimer, K. (2007). Windows on learning: Documenting young children. New York: Teachers College Press.

Hirsch, L. S., Berliner-Heyman, M. S. L., Carpinelli, J. D., & Kimmel, H. S. (2012). Introducing middle school students to engineering and the engineering design process. Proceedings of the American Society for Engineering Education Annual Conference & Exposition. San Antonio, TX. June 14-17.

Hsu, M.-C., Cardella, M. E., & Purzer, Ş. (2010). Assessing elementary teachers’ design knowledge before and after introduction of a design process model. Proceedings of the 2010 American Society for Engineering Education Annual Conference & Exposition. Louisville, KY.

Katehi, L., Pearson, G., & Feder, M. (Eds.). (2009). Engineering in K–12 education: Understanding the status and improving the prospects. Committee on K-12 Engineering Education. National Academy of Engineering, National Research Council. Washington, DC: National Academy Press.

Kolodner, J. L., Camp, P. J., Crismond, D., Fasse, B., Gray, J., Holbrook, J., … (2003). Problem-based learning meets case-based reasoning in the middle-school science classroom: Putting Learning by Design into practice. Journal of the Learning Sciences, 12(4), 495–547.

Lachapelle, C., & Cunningham, C. (2007). Engineering is elementary: Children’s changing understandings of science and engineering. Proceedings of the American Society for Engineering Education Annual Conference & Exposition. Honolulu, HI. June 24-27.

McMartin, F., McKenna, A., & Youssefi, K. (2000). Scenario assignments as assessment tools for undergraduate engineering education. IEEE Transactions on Education, 43(2), 111–119.

Mehalik, M. M., Doppelt, Y., & Schunn, C. D. (2008). Middle-school science through design-based learning versus scripted inquiry: Better overall science concept learning and equity gap reduction. Journal of Engineering Education, 97(1), 71–85.

Mentzer, N., & Park, K. (2011). High school students as novice designers. Proceedings of the American Society for Engineering Education Annual Conference & Exposition. Vancouver, BC. June 26-29.

Messick, S. (1995). Validity of psychological assessment: Validation of inferences from persons’ responses and performances as scientific inquiry into score meaning. American Psychologist, 50(9), 741–749.

Mislevy, R. J., Steinberg, L. S., Breyer, F. J., Almond, R. G., & Johnson, L. (2002). Making sense of data from complex assessments. Applied Measurement in Education, 15(4), 363–389.

NGSS Lead States. (2013). Next Generation Science Standards: For states, by states. Washington, DC: National Academies Press.

Pellegrino, J. W., Chudowsky, N., & Glaser, R. (2001). Knowing what students know: The science and design of educational assessment. Washington, DC: National Academy Press.

Purzer, Ş., Hilpert, J. C., & Wertz, R. E. H. (2011). Cognitive dissonance during engineering design. Proceedings of the IEEE Frontiers in Education Conference. Rapid City, SD.

Sadler, P. M., Coyle, H. P., & Schwartz, M. (2000). Engineering competitions in the middle school classroom: Key elements in developing effective design challenges. Journal of the Learning Sciences, 9(3), 299–327.

Seepersad, C., Schmidt, K., & Green, M. (2006). Learning journals as a cornerstone for effective experiential learning in undergraduate engineering design courses. Proceedings of the American Society for Engineering Education Annual Conference & Exposition. Chicago, IL. June 18-21.

Simon, H. A. (1996). The sciences of the artificial. Cambridge, MA: MIT Press.

Sims-Knight, J. E., Upchurch, R. L., & Fortier, P. (2005a). Assessing students’ knowledge of design process in a design task. Proceedings of the IEEE Frontiers in Education Conference. Indianapolis, IN.

Sims-Knight, J. E., Upchurch, R. L., & Fortier, P. (2005b). A simulation task to assess students’ design process skill. Proceedings of the IEEE Frontiers in Education Annual Conference. Indianapolis, IN.

Strauss, A. L., & Corbin, J. M. (1990). Basics of qualitative research. Thousand Oaks, CA: Sage Publications.

Turns, J. (1997). Learning essays and the reflective learner: Supporting assessment in engineering design education. Proceedings of the IEEE Frontiers in Education Conference. Pittsburgh, PA.

Turns, J., Atman, C., & Adams, R. (2000). Concept maps for engineering education: A cognitively motivated tool supporting varied assessment functions. IEEE Transactions on Education, 43(2), 164–173.

Walker, J. M. T. (2005). Expert and student conceptions of the design process. International Journal of Engineering Education, 21(3), 467–479.

Walker, J. M. T., & King, P. H. (2003). Concept mapping as a form of student assessment and instruction in the domain of bioengineering. Journal of Engineering Education, 92(2), 167–179.