Solution to Exercise 0.1, page ix

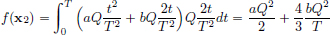

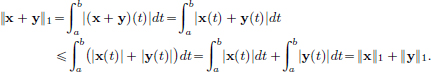

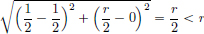

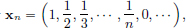

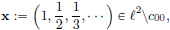

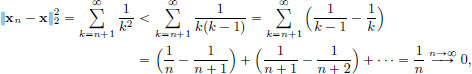

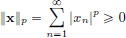

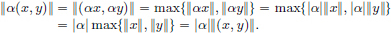

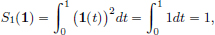

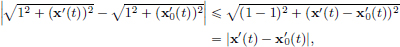

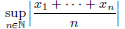

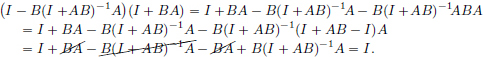

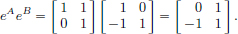

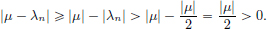

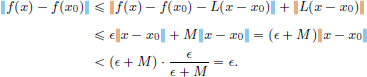

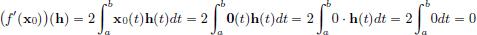

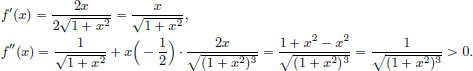

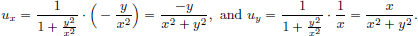

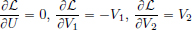

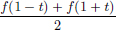

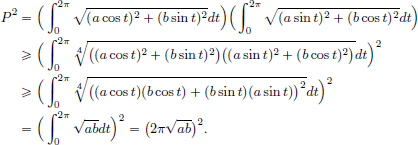

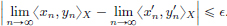

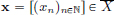

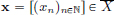

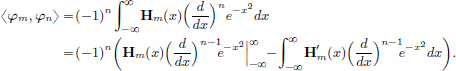

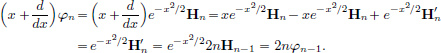

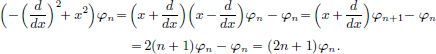

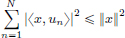

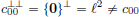

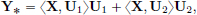

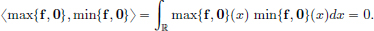

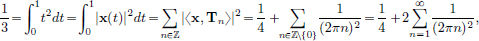

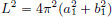

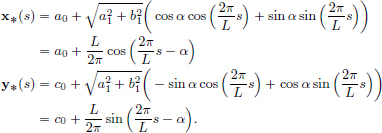

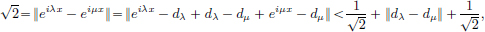

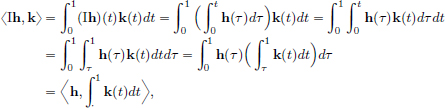

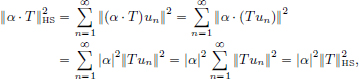

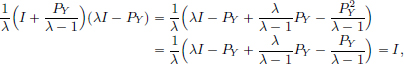

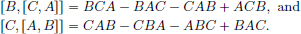

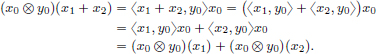

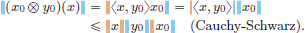

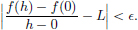

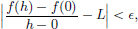

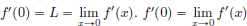

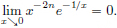

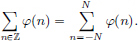

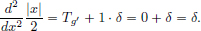

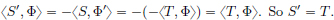

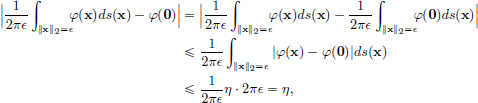

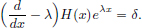

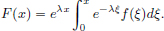

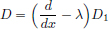

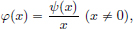

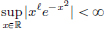

We have  .

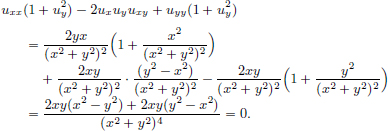


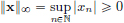

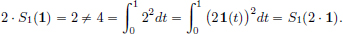

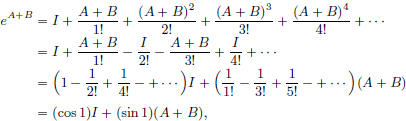

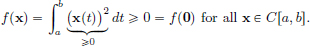

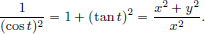

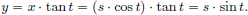

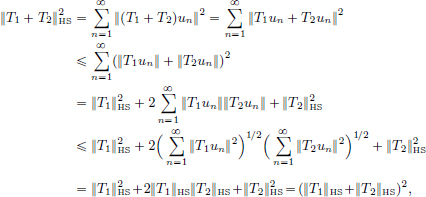

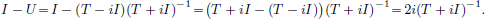

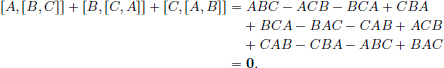

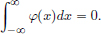

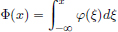

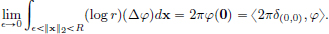

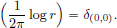

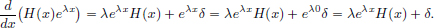

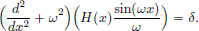

On the other hand,  .


Clearly f(x2) > f(x1), and so the mining operation x1 is preferred to x2 because it incurs a lower cost.

Solutions to the exercises from Chapter 1

Solution to Exercise 1.1, page 7

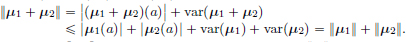

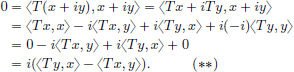

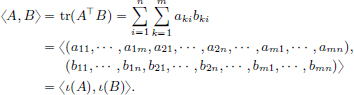

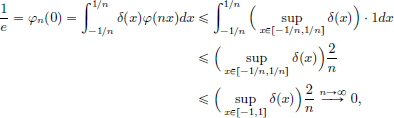

True. Indeed we have:

(V1) For all x, y, z > 0, x ⊕ (y ⊕ z) = x ⊕ (yz) = x(yz) = (xy)z = (xy) ⊕ z = (x ⊕ y) ⊕ z.

(V2) For all x > 0, x ⊕ 1 = x1 = x = 1x = 1 ⊕ x. (So 1 serves as the zero vector in this vector space!)

(V3) If x > 0, then 1/x > 0 too, and x ⊕ (1/x) = x(1/x) = 1 = (1/x)x = (1/x) ⊕ x. (Thus 1/x acts as the inverse of x with respect to the operation ⊕.)

(V4) For all x, y > 0, x ⊕ y = xy = yx = y ⊕ x.

(V5) For all x > 0, $1\cdot x = x^{1} = x$.

(V6) For all x > 0 and all α, β ∈ R, $\alpha\cdot(\beta\cdot x) = \alpha\cdot x^{\beta} = (x^{\beta})^{\alpha} = x^{\alpha\beta} = (\alpha\beta)\cdot x$.

(V7) For all x > 0 and all α, β ∈ R, $(\alpha+\beta)\cdot x = x^{\alpha+\beta} = x^{\alpha}x^{\beta} = (\alpha\cdot x)\oplus(\beta\cdot x)$.

(V8) For all x, y > 0 and all α ∈ R, $\alpha\cdot(x\oplus y) = \alpha\cdot(xy) = (xy)^{\alpha} = x^{\alpha}y^{\alpha} = (\alpha\cdot x)\oplus(\alpha\cdot y)$.

We remark that V is isomorphic to the one-dimensional vector space R (with the usual operations): indeed, it can be checked that the maps log : V → R and exp : R → V are linear transformations, and are inverses of each other.
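The axioms above can also be spot-checked numerically. The following Python sketch is only a sanity check on random samples, not a proof. The helper names `vadd`, `smul` and `check_axioms` are hypothetical, and floating-point comparisons use a small tolerance. The last two checks confirm that log turns ⊕ into ordinary addition and turns the scalar multiplication x ↦ x^α into multiplication by α, which is the isomorphism with R mentioned in the remark.

```python
import math
import random


def vadd(x: float, y: float) -> float:
    """Vector addition on V = (0, inf): x (+) y := x * y."""
    return x * y


def smul(alpha: float, x: float) -> float:
    """Scalar multiplication on V: alpha . x := x ** alpha."""
    return x ** alpha


def close(a: float, b: float, tol: float = 1e-9) -> bool:
    return math.isclose(a, b, rel_tol=tol, abs_tol=tol)


def check_axioms(trials: int = 1000, seed: int = 0) -> bool:
    """Spot-check (V1)-(V8) and the linearity of log on random samples."""
    rng = random.Random(seed)
    for _ in range(trials):
        x, y, z = (rng.uniform(0.1, 10.0) for _ in range(3))
        a, b = rng.uniform(-3.0, 3.0), rng.uniform(-3.0, 3.0)
        checks = [
            close(vadd(x, vadd(y, z)), vadd(vadd(x, y), z)),           # (V1)
            close(vadd(x, 1.0), x),                                    # (V2): 1 is the zero vector
            close(vadd(x, 1.0 / x), 1.0),                              # (V3): 1/x is the inverse
            close(vadd(x, y), vadd(y, x)),                             # (V4)
            close(smul(1.0, x), x),                                    # (V5)
            close(smul(a, smul(b, x)), smul(a * b, x)),                # (V6)
            close(smul(a + b, x), vadd(smul(a, x), smul(b, x))),       # (V7)
            close(smul(a, vadd(x, y)), vadd(smul(a, x), smul(a, y))),  # (V8)
            close(math.log(vadd(x, y)), math.log(x) + math.log(y)),    # log is additive
            close(math.log(smul(a, x)), a * math.log(x)),              # log is homogeneous
        ]
        if not all(checks):
            return False
    return True


print(check_axioms())
```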

Solution to Exercise 1.2, page 7

We prove this by contradiction. Suppose that C[0, 1] has dimension d. Consider the functions $x_n(t) = t^n$, t ∈ [0, 1], n = 1, ···, d. Since polynomials are continuous, we have xn ∈ C[0, 1] for all n = 1, ···, d.

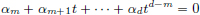

First we prove that xn, n = 1, ··· , d, are linearly independent in C[0, 1]. Suppose not. Then there exist αn ∈ R, n = 1, ··· , d, not all zeros, such that α1 · x1 + ··· + αd · xd = 0. Let m ∈ {1, ··· , d} be the smallest index such that αm ≠ 0. Then for all t ∈ [0, 1], αmtm + ··· + αdtd = 0. In particular, for all t ∈ [0, 1], we have  .

.

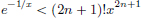

Thus for all n ∈ N we have  .

.

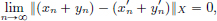

Passing the limit as n → 8, we obtain αm = 0, a contradiction. So the functions xn, n = 1, ··· , d, are linearly independent in C[0, 1].
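A different route to the same independence claim, sketched here only as a cross-check (it is not the limit argument used above): if α1x1 + ··· + αdxd = 0, then the combination vanishes in particular at d distinct points t1, ···, td in (0, 1], so the matrix with rows (ti, ti², ···, ti^d) sends the coefficient vector to 0. If that matrix is nonsingular, all αn must vanish. The Python sketch below picks the illustrative points ti = i/d and computes the determinant exactly with `fractions.Fraction`. The helper names `det` and `monomial_sample_matrix` are hypothetical.

```python
from fractions import Fraction


def det(matrix):
    """Exact determinant via Gaussian elimination over the rationals."""
    m = [row[:] for row in matrix]
    n = len(m)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result  # a row swap flips the sign
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def monomial_sample_matrix(d):
    """Row i holds (t_i, t_i**2, ..., t_i**d) at the points t_i = i/d in (0, 1]."""
    points = [Fraction(i, d) for i in range(1, d + 1)]
    return [[t ** n for n in range(1, d + 1)] for t in points]


for d in range(1, 8):
    print(d, det(monomial_sample_matrix(d)) != 0)
```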

Next, we get the contradiction to C[0, 1] having dimension d. Since any independent set of cardinality d in a d-dimensional vector space is a basis for this vector space, {xn : n = 1, ··· , d} is a basis for C[0, 1]. Since the constant function 1 (taking value 1 everywhere on [0, 1]) belongs to C[0, 1], there exist βn ∈ R, n = 1, ··· , d, such that 1 = β1 · x1 + ··· + βd · xd. In particular, putting t = 0, we obtain the contradiction that 1 = 0: 1 = 1(0) = (β1 · x1 + ··· + βd · xd)(0) = 0.

Solution to Exercise 1.3, page 7

(“If” part.) Suppose that ya = yb = 0. Then we have:

(S1) If x1, x2 ∈ S, then x1 + x2 ∈ S. Indeed, as x1, x2 ∈ C1[a, b], also x1 + x2 ∈ C1[a, b]. Moreover, (x1 + x2)(a) = x1(a) + x2(a) = 0 + 0 = 0 = ya and (x1 + x2)(b) = x1(b) + x2(b) = 0 + 0 = 0 = yb.

(S2) If x ∈ S and α ∈ R, then α · x ∈ S. Indeed, as x ∈ C1[a, b] and α ∈ R, we have α · x ∈ C1[a, b], and (α · x)(a) = α0 = 0 = ya, (α · x)(b) = α0 = 0 = yb.

(S3) 0 ∈ S, since 0 ∈ C1[a, b], 0(a) = 0 = ya and 0(b) = 0 = yb.

Hence, S is a subspace of the vector space C1[a, b].

(“Only if” part.) Suppose that S is a subspace of C1[a, b]. Let x ∈ S. Then 2 · x ∈ S. Therefore, (2 · x)(a) = ya, and so ya = (2 · x)(a) = 2x(a) = 2ya. Thus ya = 0. Moreover, (2 · x)(b) = yb, and so yb = (2 · x)(b) = 2x(b) = 2yb. Hence also yb = 0.

Solution to Exercise 1.4, page 10

Solution to Exercise 1.5, page 14

From the Triangle Inequality, we have that ||x|| = ||y + x − y|| ≤ ||y|| + ||x − y||, for all x, y ∈ X. So for all x, y ∈ X, ||x|| − ||y|| ≤ ||x − y||.

Interchanging x and y, we get ||y|| − ||x|| ≤ ||y − x|| = ||x − y||.

So for all x, y ∈ X, −(||x|| − ||y||) ≤ ||x − y||.

Combining these two inequalities, we obtain | ||x|| − ||y|| | ≤ ||x − y|| for all x, y ∈ X.
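The inequality can be spot-checked on random vectors for concrete norms. The Python sketch below uses the p-norms on R³ from Exercise 1.8 (p = 1, 2, ∞) as illustrative examples. The helper names `norm_p` and `reverse_triangle_holds` are hypothetical, and a slack of 1e-12 absorbs floating-point rounding. This is a numerical illustration, not a proof.

```python
import math
import random


def norm_p(x, p):
    """The p-norm on R^d (p >= 1, or p = math.inf); see Exercise 1.8."""
    if p == math.inf:
        return max(abs(t) for t in x)
    return sum(abs(t) ** p for t in x) ** (1.0 / p)


def reverse_triangle_holds(p, trials=10_000, dim=3, seed=1):
    """Check | ||x|| - ||y|| | <= ||x - y|| on random vectors in R^dim."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-5, 5) for _ in range(dim)]
        y = [rng.uniform(-5, 5) for _ in range(dim)]
        lhs = abs(norm_p(x, p) - norm_p(y, p))
        rhs = norm_p([a - b for a, b in zip(x, y)], p)
        if lhs > rhs + 1e-12:  # small slack for floating-point rounding
            return False
    return True


for p in (1, 2, math.inf):
    print(p, reverse_triangle_holds(p))
```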

Solution to Exercise 1.6, page 14

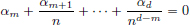

No, since for example (N2) fails if we take x = 1 and α = 2:

Solution to Exercise 1.7, page 15

We verify that (N1), (N2), (N3) are satisfied by || · ||Y:

(N1)For all y ∈ Y, ||y||Y = ||y||X  0.

0.

If y ∈ Y and ||y||Y = 0, then ||y||X = 0, and so y = 0 ∈ X.

But 0 ∈ Y, and so y = 0 ∈ Y.

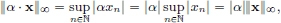

(N2)If y ∈ Y and α ∈ R, then α · y ∈ Y and  .

.

(N3)If y1, y2 ∈ Y, then y1 + y2 ∈ Y.

Also,  .

.

Solution to Exercise 1.8, page 15

(1) We first consider the case 1  p < ∞, and then p = ∞. Let 1

p < ∞, and then p = ∞. Let 1  p < ∞.

p < ∞.

(N1)If x = (x1, ··· , xd) ∈ Rd then  .

.

If x ∈ Rd and ||x||p = 0, then ||x||pp = 0. that is,  .

.

So |xn| = 0 for 1  n

n  d, that is, x = 0.

d, that is, x = 0.

(N2)Let x = (x1 , ··· , xd) ∈ Rd, and α ∈ R.

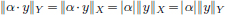

Then  .

.

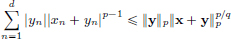

(N3) Let x = (x1, ···, xd) ∈ Rd and y = (y1, ···, yd) ∈ Rd.

If p = 1, then we have |xn + yn| ≤ |xn| + |yn| for 1 ≤ n ≤ d.

By adding these, ||x + y||1 ≤ ||x||1 + ||y||1, establishing (N3) for p = 1.

Now consider the case 1 < p < ∞.

If x + y = 0, then ||x + y||p = ||0||p = 0 ≤ ||x||p + ||y||p trivially.

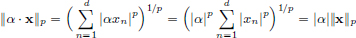

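So we assume that x + y ≠ 0. Let q be such that 1/p + 1/q = 1. By Hölder’s Inequality, we have

$$\sum_{n=1}^{d}|x_n|\,|x_n+y_n|^{p-1} \le \left(\sum_{n=1}^{d}|x_n|^p\right)^{1/p}\left(\sum_{n=1}^{d}|x_n+y_n|^{q(p-1)}\right)^{1/q} = \|x\|_p\,\|x+y\|_p^{p/q},$$

where we used q(p − 1) = p in order to obtain the last equality.

Similarly, $\sum_{n=1}^{d}|y_n|\,|x_n+y_n|^{p-1} \le \|y\|_p\,\|x+y\|_p^{p/q}$. Consequently,

$$\|x+y\|_p^p = \sum_{n=1}^{d}|x_n+y_n|\,|x_n+y_n|^{p-1} \le \sum_{n=1}^{d}\big(|x_n|+|y_n|\big)|x_n+y_n|^{p-1} \le \big(\|x\|_p+\|y\|_p\big)\|x+y\|_p^{p/q}.$$

Dividing throughout by $\|x+y\|_p^{p/q} \neq 0$, and using p − p/q = 1, we obtain ||x + y||p ≤ ||x||p + ||y||p. This completes the proof that (Rd, || · ||p) is a normed space for 1 ≤ p < ∞.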

Now we consider the case p = ∞.

(N1) If x = (x1, ···, xd) ∈ Rd, then ||x||∞ = max{|x1|, ···, |xd|} ≥ 0.

If x ∈ Rd and ||x||∞ = 0, then max{|x1|, ···, |xd|} = 0, and so |xn| = 0 for 1 ≤ n ≤ d, that is, x = 0.

(N2) Let x = (x1, ···, xd) ∈ Rd, and α ∈ R.

Then ||α · x||∞ = max{|αx1|, ···, |αxd|} = |α| max{|x1|, ···, |xd|} = |α| ||x||∞.

(N3) Let x = (x1, ···, xd) ∈ Rd and y = (y1, ···, yd) ∈ Rd.

We have |xn + yn| ≤ |xn| + |yn| ≤ ||x||∞ + ||y||∞ for 1 ≤ n ≤ d.

So it follows that ||x + y||∞ ≤ ||x||∞ + ||y||∞, establishing (N3) for p = ∞.

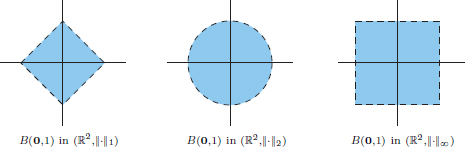

(2) See the following pictures.

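(3) We have for x = (a, b) ∈ R2 that

$$\|x\|_\infty^p = \max\{|a|,|b|\}^p \le |a|^p + |b|^p \le 2\max\{|a|,|b|\}^p = 2\|x\|_\infty^p.$$

So $\|x\|_\infty \le \|x\|_p \le 2^{1/p}\|x\|_\infty$.

We have $\lim_{p\to\infty}2^{1/p} = 1$. (Indeed, write $2^{1/p} = 1 + h_p$ with $h_p \ge 0$. We have

$$2 = (1+h_p)^p \ge 1 + p\,h_p,$$

giving $1/p \ge h_p \ge 0$ for all p, and so $h_p \to 0$ as $p \to \infty$.) So it follows by the Sandwich Theorem¹ that $\lim_{p\to\infty}\|x\|_p = \|x\|_\infty$.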

The balls Bp(0, 1) grow to B∞(0, 1) as p increases.
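A numerical sketch of parts (1) and (3) in Python follows. It checks the sandwich ||x||∞ ≤ ||x||p ≤ 2^{1/p}||x||∞ for the illustrative vector x = (3, −4), shows ||x||p approaching ||x||∞ = 4, and spot-checks Minkowski's inequality (N3) on random vectors. The helper names are hypothetical. `norm_p` rescales by max|xn| so that large p does not overflow; this is a convenience, not part of the exercise.

```python
import math
import random


def norm_p(x, p):
    """The p-norm on R^d; p may be math.inf. Scaled by max|x_n| to avoid overflow."""
    m = max(abs(t) for t in x)
    if p == math.inf or m == 0:
        return m
    return m * sum((abs(t) / m) ** p for t in x) ** (1.0 / p)


def sandwich_holds(x, p, tol=1e-12):
    """Check ||x||_inf <= ||x||_p <= 2**(1/p) ||x||_inf for x in R^2."""
    inf_norm = norm_p(x, math.inf)
    return inf_norm - tol <= norm_p(x, p) <= 2 ** (1.0 / p) * inf_norm + tol


def minkowski_holds(p, trials=2000, dim=5, seed=7):
    """Spot-check ||x + y||_p <= ||x||_p + ||y||_p on random vectors."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-3, 3) for _ in range(dim)]
        y = [rng.uniform(-3, 3) for _ in range(dim)]
        s = [a + b for a, b in zip(x, y)]
        if norm_p(s, p) > norm_p(x, p) + norm_p(y, p) + 1e-12:
            return False
    return True


x = (3.0, -4.0)
for p in (1, 2, 4, 16, 64, 256, 1024):
    print(p, norm_p(x, p))  # decreases towards ||x||_inf = 4
```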

Solution to Exercise 1.9, page 16

(1) If x, y ∈ B(0, 1), then for all α ∈ (0, 1), (1 − α) · x + α · y ∈ B(0, 1) too, since ||(1 − α) · x + α · y|| ≤ (1 − α)||x|| + α||y|| < (1 − α) + α = 1.

(3) B(0, 1) is not convex: taking x = (1, 0), y = (0, 1) and α = 1/2, we obtain  , and so  .

Solution to Exercise 1.10, page 16

We’ll verify that (N1), (N2), (N3) hold.

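(N1) If x ∈ C[a, b], then |x(t)| ≥ 0 for all t ∈ [a, b], and so $\|x\|_1 = \int_a^b |x(t)|\,dt \ge 0$.

Let x ∈ C[a, b] be such that ||x||1 = 0. If x(t) = 0 for all t ∈ (a, b), then by the continuity of x on [a, b], it follows that x(t) = 0 for all t ∈ [a, b] too, and we are done! So suppose that it is not the case that for all t ∈ (a, b), x(t) = 0. Then there exists a t0 ∈ (a, b) such that x(t0) ≠ 0. As x is continuous at t0, there exists a δ > 0 small enough so that a < t0 − δ, t0 + δ < b, and such that for all t ∈ [a, b] with t0 − δ < t < t0 + δ, |x(t) − x(t0)| < |x(t0)|/2. Then for t0 − δ < t < t0 + δ, we have, using the “reverse” Triangle Inequality from Exercise 1.5, page 14, that

$$|x(t)| \ge |x(t_0)| - |x(t) - x(t_0)| > |x(t_0)| - \frac{|x(t_0)|}{2} = \frac{|x(t_0)|}{2}.$$

So $0 = \|x\|_1 \ge \int_{t_0-\delta}^{t_0+\delta}|x(t)|\,dt \ge 2\delta\cdot\frac{|x(t_0)|}{2} = \delta\,|x(t_0)| > 0$.

This is a contradiction. Hence x = 0.

(N2) For x ∈ C[a, b], α ∈ R, $\|\alpha\cdot x\|_1 = \int_a^b |\alpha x(t)|\,dt = |\alpha|\int_a^b |x(t)|\,dt = |\alpha|\,\|x\|_1$.

(N3) Let x, y ∈ C[a, b]. Then $\|x+y\|_1 = \int_a^b |x(t)+y(t)|\,dt \le \int_a^b \big(|x(t)|+|y(t)|\big)\,dt = \|x\|_1 + \|y\|_1$.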

Solution to Exercise 1.11, page 17

(N1)For x ∈ Cn[a, b], clearly  .

.

If x ∈ Cn[a, b] is such that ||x||n,∞ = 0, then ||x||∞ + ··· + ||x(n)||∞ = 0, and since each term in this sum is nonnegative, we have ||x||∞ = 0, and so x = 0.

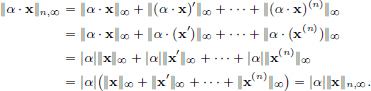

(N2)Let x ∈ Cn[a, b] and α ∈ R. Then

(N3)Let x, y ∈ Cn[a, b]. For all 0  k

k  n, ||x(k) + y(k)||∞

n, ||x(k) + y(k)||∞  ||x(k)||∞ + ||y(k)||∞, by the Triangle Inequality for || · ||∞ Consequently,

||x(k)||∞ + ||y(k)||∞, by the Triangle Inequality for || · ||∞ Consequently,

Solution to Exercise 1.12, page 17

(1) Let k1, k2, m1, m2, n1, n2 ∈ Z, p ∤ m1, m2, n1, n2 and $p^{k_1}\frac{m_1}{n_1} = p^{k_2}\frac{m_2}{n_2}$.

If k1 > k2, then $p^{k_1-k_2}m_1n_2 = m_2n_1$, which implies that p | m2n1, and as p is prime, this would mean p | m2 or p | n1, a contradiction. Hence k1 ≤ k2. Similarly, we also obtain k2 ≤ k1.

Thus k1 = k2. Consequently, $p^{-k_1} = p^{-k_2}$, and so | · |p is well-defined.

(2) If 0 ≠ r ∈ Q, then we can express r as $r = p^k\frac{m}{n}$, with k, m, n ∈ Z, and p ∤ m, n.

We see that $|r|_p = p^{-k} > 0$. If r = 0, then |r|p = |0|p = 0 by definition.

Thus |r|p ≥ 0 for all r ∈ Q. Also if r ≠ 0, then |r|p > 0. Hence |r|p = 0 implies that r = 0.

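(3) The claim is obvious if r1 = 0 or r2 = 0. Suppose that r1 ≠ 0 and r2 ≠ 0.

Let $r_1 = p^{k_1}\frac{m_1}{n_1}$ and $r_2 = p^{k_2}\frac{m_2}{n_2}$, with k1, k2, m1, m2, n1, n2 ∈ Z and p ∤ m1, m2, n1, n2.

So $r_1r_2 = p^{k_1+k_2}\frac{m_1m_2}{n_1n_2}$. As p ∤ m1, p ∤ m2, and p is prime, we have p ∤ m1m2.

Similarly p ∤ n1n2. Thus $|r_1r_2|_p = p^{-(k_1+k_2)} = p^{-k_1}p^{-k_2} = |r_1|_p\,|r_2|_p$.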

(4)The inequality is trivially true if r1 = 0 or r2 = 0 or if r1 + r2 = 0.

Assume r1 ≠ 0, r2 ≠ 0, and r1 + r2 ≠ 0.

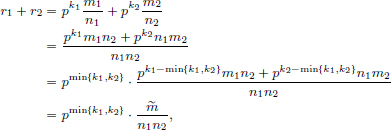

Let  , with k1, k2, m1, m2, n1, n2 ∈ Z, p

, with k1, k2, m1, m2, n1, n2 ∈ Z, p  m1, m2, n1, n2. We have

m1, m2, n1, n2. We have

where  := pk1−min{k1,k2} m1n2 + pk2−min{k1,k2} n1m2 (≠ 0, since r1 + r2 ≠ 0). By the Fundamental Theorem of Arithmetic, there exists a unique integer

:= pk1−min{k1,k2} m1n2 + pk2−min{k1,k2} n1m2 (≠ 0, since r1 + r2 ≠ 0). By the Fundamental Theorem of Arithmetic, there exists a unique integer

0 and an integer m such that

0 and an integer m such that  and p

and p  m. Clearly p

m. Clearly p  n1n2.

n1n2.

Hence r1 + r2 =  , with p

, with p  m, n1n2.

m, n1n2.

So

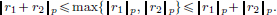

This yields the Triangle Inequality:
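The p-adic absolute value is easy to compute exactly, which allows a spot-check of parts (3) and (4) on random rationals. The Python sketch below uses `fractions.Fraction`, which always stores r in lowest terms, so the exponent k can be read off by stripping factors of p from the numerator and the denominator. The function names `padic_valuation`, `padic_abs` and `check_properties` are hypothetical.

```python
import random
from fractions import Fraction


def padic_valuation(r: Fraction, p: int) -> int:
    """The exponent k in r = p**k * m / n with p not dividing m or n (r != 0)."""
    if r == 0:
        raise ValueError("the valuation of 0 is not defined")
    num, den, k = r.numerator, r.denominator, 0
    while num % p == 0:
        num //= p
        k += 1
    while den % p == 0:
        den //= p
        k -= 1
    return k


def padic_abs(r, p: int) -> Fraction:
    """|r|_p = p**(-k) for r != 0, and |0|_p = 0."""
    r = Fraction(r)
    if r == 0:
        return Fraction(0)
    return Fraction(1, p) ** padic_valuation(r, p)


def check_properties(p: int, trials: int = 2000, seed: int = 5) -> bool:
    """Spot-check parts (3) and (4) on random rationals."""
    rng = random.Random(seed)
    for _ in range(trials):
        r1 = Fraction(rng.randint(-500, 500), rng.randint(1, 500))
        r2 = Fraction(rng.randint(-500, 500), rng.randint(1, 500))
        a1, a2 = padic_abs(r1, p), padic_abs(r2, p)
        if padic_abs(r1 * r2, p) != a1 * a2:    # (3): multiplicativity
            return False
        if padic_abs(r1 + r2, p) > max(a1, a2):  # (4): strong triangle inequality
            return False
    return True


print(padic_abs(Fraction(18), 3), padic_abs(Fraction(2, 27), 3))
```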

Solution to Exercise 1.13, page 17

(N1) Clearly $\|M\|_\infty = \max_{i,j}|m_{ij}| \ge 0$ for all M = [mij] ∈ Rm×n.

If ||M||∞ = 0, then |mij| = 0 for all 1 ≤ i ≤ m, 1 ≤ j ≤ n, that is, M = [mij] = 0, the zero matrix.

(N2) For M = [mij] ∈ Rm×n and α ∈ R, we have $\|\alpha\cdot M\|_\infty = \max_{i,j}|\alpha m_{ij}| = |\alpha|\max_{i,j}|m_{ij}| = |\alpha|\,\|M\|_\infty$.

(N3) For P = [pij], Q = [qij] ∈ Rm×n, |pij + qij| ≤ |pij| + |qij| ≤ ||P||∞ + ||Q||∞.

As this holds for all i, j, $\|P+Q\|_\infty = \max_{i,j}|p_{ij}+q_{ij}| \le \|P\|_\infty + \|Q\|_\infty$.

Solution to Exercise 1.14, page 19

Consider the open ball B(x, r) = {y ∈ X : ||x − y|| < r} in X. If y ∈ B(x, r), then ||x − y|| < r. Define r′ = r − ||x − y|| > 0. We claim that B(y, r′) ⊂ B(x, r). Let z ∈ B(y, r′). Then ||z − y|| < r′ = r − ||x − y||, and so ||x − z|| ≤ ||x − y|| + ||y − z|| < r. Hence z ∈ B(x, r). Thus every point of B(x, r) is the centre of an open ball contained in B(x, r), that is, B(x, r) is open. The following picture illustrates this.
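The choice r′ = r − ||x − y|| can be tested numerically in (R², ||·||2). The Python sketch below samples a centre x, a radius r, a point y ∈ B(x, r), and then points z ∈ B(y, r′), and checks that each z lies in B(x, r). The helper names `point_near` and `ball_inclusion_holds` are hypothetical. Sampled radii are capped at 0.999 of the allowed radius so that the strict inequalities survive rounding.

```python
import math
import random


def point_near(c, radius, rng):
    """A random point at Euclidean distance strictly less than radius from c in R^2."""
    theta = rng.uniform(0, 2 * math.pi)
    rho = radius * rng.uniform(0, 0.999)
    return (c[0] + rho * math.cos(theta), c[1] + rho * math.sin(theta))


def ball_inclusion_holds(trials=10_000, seed=11):
    """Check that B(y, r') is contained in B(x, r) for r' = r - ||x - y||."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = (rng.uniform(-5, 5), rng.uniform(-5, 5))
        r = rng.uniform(0.1, 3.0)
        y = point_near(x, r, rng)
        r_prime = r - math.dist(x, y)
        z = point_near(y, r_prime, rng)
        if not math.dist(x, z) < r:
            return False
    return True


print(ball_inclusion_holds())
```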

Solution to Exercise 1.15, page 19

The point  , but for each r > 0, the point  belongs to the ball B(c, r), but not to I, since ||y − c||2 =  , but  ≠ 0. See the following picture.

Solution to Exercise 1.16, page 19

Using the following picture, it can be seen that the collections O1, O2, O∞ of open sets in the normed spaces (R2, || · ||1), (R2, || · ||2), (R2, || · ||∞), respectively, coincide.

Solution to Exercise 1.17, page 20

If Fi, i ∈ I, is a family of closed sets, then X\Fi, i ∈ I, is a family of open sets. Hence $\bigcup_{i\in I}(X\setminus F_i) = X\setminus\bigcap_{i\in I}F_i$ is open. So $\bigcap_{i\in I}F_i$ is closed.

If F1, ···, Fn are closed, then X\F1, ···, X\Fn are open, and so the intersection $\bigcap_{i=1}^{n}(X\setminus F_i) = X\setminus\bigcup_{i=1}^{n}F_i$ of these finitely many open sets is open as well.

Thus $\bigcup_{i=1}^{n}F_i$ is closed.

For showing that the finiteness condition cannot be dropped, we’ll consider the normed space X = R, and simply rework Example 1.15, page 20, by taking complements.

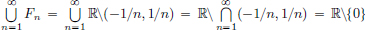

We know that Fn := R\(−1/n, 1/n), n ∈ N, is closed, and the union of these, $\bigcup_{n\in\mathbb{N}}F_n = \mathbb{R}\setminus\{0\}$, is not closed, since if it were, its complement R\(R\{0}) = {0} would be open, which is false.

Solution to Exercise 1.18, page 20

Consider the closed ball B(x, r) = {y ∈ X : ||x − y|| ≤ r} in X. To show that B(x, r) is closed, we’ll show its complement, U := {y ∈ X : ||x − y|| > r}, is open. If y ∈ U, then ||x − y|| > r. Define r′ = ||x − y|| − r > 0. We claim that B(y, r′) ⊂ U. Let z ∈ B(y, r′). Then ||z − y|| < r′ = ||x − y|| − r, and so ||x − z|| ≥ ||x − y|| − ||y − z|| > ||x − y|| − (||x − y|| − r) = r. Hence z ∈ U.

Solution to Exercise 1.19, page 20

(1) False.

For example, in the normed space R, consider the set [0, 1). Then [0, 1) is not open, since every open ball B with centre 0 contains at least one negative real number, and so B has points not belonging to [0, 1).

On the other hand, this set [0, 1) is not closed either, as its complement is C := (−∞, 0) ∪ [1, ∞), which is not open, since every open ball B′ with centre 1 contains at least one positive real number strictly less than one, and so B′ contains points that do not belong to C.

(2) False. R is open in R, and it is also closed.

(3) True. Ø and X are both open and closed in any normed space X.

(4) True. [0, 1) is neither open nor closed in R.

(5) False.

0 ∈ Q, but every open ball centred at 0 contains irrational numbers; just consider √2/n, with a sufficiently large n.

(6) False.

Consider the sequence (an)n∈N given by a1 = 2, and for n ≥ 1, $a_{n+1} = \frac{a_n}{2} + \frac{1}{a_n}$. Then it can be shown, using induction on n, that (an)n∈N is bounded below by √2, and that (an)n∈N is monotone decreasing. (Example 1.19, page 31.) So (an)n∈N is convergent with a limit L satisfying $L = \frac{L}{2} + \frac{1}{L}$, and so L² = 2.

As L must be positive (the sequence is bounded below by √2), it follows that L = √2. So every ball with centre √2 and a positive radius contains elements from Q (terms an for large n), showing that R\Q is not open, and hence Q is not closed.

(Alternatively, let c ∈ R have the decimal expansion c = 0.101001000100001 ···. The number c is irrational because² it has a nonterminating and nonrepeating decimal expansion. The sequence of rational numbers obtained by truncation, namely 0.1, 0.101, 0.101001, 0.1010010001, 0.101001000100001, ··· converges with limit c, and so every ball with centre c and a positive radius contains elements from Q, showing again that R \ Q is not open, and hence Q is not closed.)

(7) True. $\mathbb{R}\setminus\mathbb{Z} = \bigcup_{n\in\mathbb{Z}}(n, n+1)$. As each (n, n + 1) is open, so is their union.

Hence Z = R\(R\Z) is closed.

We have already seen in Exercise 1.14, page 19, that the interior of S, namely the open ball B(0, 1) = {x ∈ X : ||x|| < 1} is open. Also, it follows from Exercise 1.18, page 20, that the exterior of the closed ball B(0, 1), namely the set U = {x ∈ X : ||x|| > 1} is open as well. Thus, the complement of S, being the union of the two open sets B(0, 1) and U, is open. Consequently, S is closed.

If X = {0}, then {0} is clearly closed, since X\{0} = Ø is open.

Now suppose that X ≠ {0}, and let x ∈ X. We want to show that U := X\{x} is open. Let y ∈ U, and set r := ||x – y|| > 0. We claim that the open ball B(y, r) is contained in U. If z ∈ B(y, r), then ||y – z|| < r, and so ||z – x|| ≥ ||x – y|| – ||y – z|| = r – ||y – z|| > r – r = 0. Hence z ≠ x, and so z ∈ X\{x} = U. Consequently U is open, and so {x} = X\U is closed.

If F is empty, then it is closed.

If F is not empty, then $F = \{x_1, \cdots, x_n\} = \bigcup_{i=1}^{n}\{x_i\}$, for some x1, ···, xn ∈ X.

As F is the finite union of the closed sets {x1}, ···, {xn}, F is closed too.

Let x, y ∈ R and x < y. By the Archimedean property of R, there is a positive integer n such that n > 1/(y – x), that is, n(y – x) > 1. Also, there are positive integers m1, m2 such that m1 > nx and m2 > –nx, so that –m2 < nx < m1. Thus we have nx ∈ [–m2, –m2 + 1) ∪ [–m2 + 1, –m2 + 2) ∪ ··· ∪ [m1 – 1, m1). Hence there is an integer m such that m – 1 ≤ nx < m. We have nx < m ≤ 1 + nx < ny, and so dividing by n, we have x < q := m/n < y. Consequently, between any two real numbers, there is a rational number.
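The argument above is constructive, so it can be run directly. The Python sketch below follows it step by step: choose n with n(y − x) > 1, then the integer m with m − 1 ≤ nx < m, and return q = m/n. Converting the inputs to exact `fractions.Fraction` values (an implementation choice made here) ensures that floating-point rounding cannot break the strict inequalities. The function name `rational_between` is hypothetical.

```python
import math
from fractions import Fraction


def rational_between(x, y) -> Fraction:
    """Return m/n with x < m/n < y, following the Archimedean argument.

    Inputs are converted to exact Fractions, so every comparison is exact."""
    x, y = Fraction(x), Fraction(y)
    if not x < y:
        raise ValueError("need x < y")
    n = math.floor(1 / (y - x)) + 1   # positive integer with n(y - x) > 1
    m = math.floor(n * x) + 1         # integer with m - 1 <= n x < m
    return Fraction(m, n)


print(rational_between(math.sqrt(2), 1.5))
print(rational_between(-1, 1))
```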

Let x ∈ R and let ε > 0. Then there is a rational number y such that x – ε < y < x + ε, that is, |x – y| < ε. Hence Q is dense in R.

Let x ∈ R and let ε > 0. If x ∈ R\Q, then taking y = x, we have |x – y| = 0 < ε. If, on the other hand, x ∈ Q, then let n ∈ N be such that n > √2/ε, so that with y := x + √2/n, we have y ∈ R\Q (since x ∈ Q and √2/n ∉ Q), and |x – y| = √2/n < ε. So R\Q is dense in R.

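Let x = (xn)n∈N ∈ ℓ2, and ε > 0. Since the series $\sum_{n=1}^{\infty}|x_n|^2$ converges, we can let N ∈ N be such that $\sum_{n=N+1}^{\infty}|x_n|^2 < \varepsilon^2$. Then y := (x1, ···, xN, 0, ···) ∈ c00, and

$$\|x - y\|_2^2 = \sum_{n=N+1}^{\infty}|x_n|^2 < \varepsilon^2.$$

Thus ||x – y||2 < ε. Consequently, c00 is dense in ℓ2.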
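A concrete instance of the truncation argument follows, using the illustrative sequence x = (2⁻ⁿ)n∈N ∈ ℓ2 (chosen here, not taken from the exercise). For this sequence the squared tail is a geometric series, Σ_{n>N} 4⁻ⁿ = 4⁻ᴺ/3, so ||x − y||2 is known in closed form. The Python sketch below finds the smallest N that makes the truncation error less than ε. The helper names `tail_norm` and `truncation_index` are hypothetical.

```python
import math


def tail_norm(N: int) -> float:
    """||x - y||_2 for x = (2**-n)_{n>=1} and y = (x_1, ..., x_N, 0, 0, ...).

    The squared tail is a geometric series: sum_{n>N} 4**-n = 4**-N / 3."""
    return math.sqrt(4.0 ** -N / 3)


def truncation_index(eps: float) -> int:
    """Smallest N with ||x - y||_2 < eps."""
    N = 0
    while tail_norm(N) >= eps:
        N += 1
    return N


for eps in (0.5, 1e-3, 1e-9):
    N = truncation_index(eps)
    print(eps, N, tail_norm(N))
```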

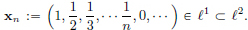

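Consider the set D of all finitely supported sequences with rational terms. Then D is a countable set since it is a countable union of countable sets. We now show that D is dense in ℓ1. Let x := (xn)n∈N ∈ ℓ1 and let r > 0.

Let N ∈ N be large enough so that $\sum_{n=N+1}^{\infty}|x_n| < \frac{r}{2}$.

As Q is dense in R, there exist q1, ···, qN ∈ Q such that $|x_n - q_n| < \frac{r}{2N}$ for n = 1, ···, N.

With x′ := (q1, ···, qN, 0, ···) ∈ D,

$$\|x - x'\|_1 = \sum_{n=1}^{N}|x_n - q_n| + \sum_{n=N+1}^{\infty}|x_n| < N\cdot\frac{r}{2N} + \frac{r}{2} = r.$$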

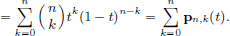

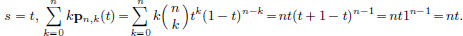

By the Binomial Theorem, we have

Putting s = t, we get 1 = (t + (1 – t))n

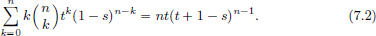

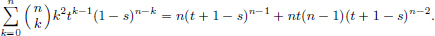

Keeping s fixed, and differentiating (7.1) with respect to t yields

Multiplying throughout by t gives

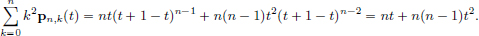

With

Differentiating (7.2) with respect to t yields

Multiplying throughout by t yields

Setting s = t now gives

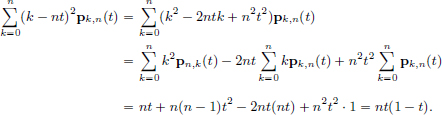

Hence
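The three identities just derived can be verified exactly for small n and rational t: the weights sum to 1, the first moment is nt, and the second central moment is nt(1 − t). The Python sketch below uses `fractions.Fraction` for exact arithmetic and `math.comb` for the binomial coefficients. The function name `bernstein_moments` is hypothetical.

```python
from fractions import Fraction
from math import comb


def bernstein_moments(n: int, t: Fraction):
    """Return (sum w_k, sum k w_k, sum (k - n t)^2 w_k), where w_k = C(n,k) t^k (1-t)^(n-k)."""
    w = [comb(n, k) * t ** k * (1 - t) ** (n - k) for k in range(n + 1)]
    s0 = sum(w)
    s1 = sum(k * wk for k, wk in enumerate(w))
    s2 = sum((k - n * t) ** 2 * wk for k, wk in enumerate(w))
    return s0, s1, s2


t = Fraction(1, 3)
for n in (1, 5, 10):
    s0, s1, s2 = bernstein_moments(n, t)
    print(n, s0 == 1, s1 == n * t, s2 == n * t * (1 - t))
```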

(1) We check that the relation ~ is reflexive, symmetric and transitive.

(ER1) (Reflexivity) If ||·|| is a norm on X, then for all x ∈ X, we have that 1 · ||x|| = ||x|| = 1 · ||x||, and so ||·|| ~ ||·||.

(ER2) (Symmetry) If ||·||a ~ ||·||b, then there exist positive m, M such that for all x ∈ X, m||x||b ≤ ||x||a ≤ M||x||b. A rearrangement of this gives (1/M)||x||a ≤ ||x||b ≤ (1/m)||x||a, x ∈ X, and so ||·||b ~ ||·||a.

(ER3) (Transitivity) If ||·||a ~ ||·||b and ||·||b ~ ||·||c, then there exist positive constants Mab, Mbc, mab, mbc such that for all x ∈ X, we have that mab||x||b ≤ ||x||a ≤ Mab||x||b and mbc||x||c ≤ ||x||b ≤ Mbc||x||c.

Thus mab mbc||x||c ≤ mab||x||b ≤ ||x||a ≤ Mab||x||b ≤ Mab Mbc||x||c, and so ||·||a ~ ||·||c.

(2) Suppose that ||·||a ~ ||·||b. Because ~ is an equivalence relation, it is enough to prove that if U is open in (X, ||·||b), then U is open in (X, ||·||a) too, and similarly, that if (xn)n∈N is Cauchy (respectively convergent) in (X, ||·||b), then it is Cauchy (respectively convergent) in (X, ||·||a) as well. Let m, M > 0 be such that for all x ∈ X, m||x||b ≤ ||x||a ≤ M||x||b.

Let U be open in (X, ||·||b), and x ∈ U. Then as U is open in (X, ||·||b), there exists an r > 0 such that Bb(x, r) := {y ∈ X : ||y – x||b < r} ⊂ U. But if y ∈ X satisfies ||y – x||a < mr, then ||y – x||b ≤ (1/m)||y – x||a < (1/m)mr = r, and so y ∈ Bb(x, r) ⊂ U. Hence Ba(x, mr) := {y ∈ X : ||y – x||a < mr} ⊂ U. So it follows that U is open in (X, ||·||a) too.

Now suppose that (xn)n∈N is a Cauchy sequence in (X, ||·||b). Let ε > 0. Then there exists an N ∈ N such that for all m, n > N, ||xn – xm||b < ε/M. Hence for all m, n > N, ||xn – xm||a ≤ M||xn – xm||b < M · (ε/M) = ε.

Consequently, (xn)n∈N is a Cauchy sequence in (X, ||·||a) as well.

If (xn)n∈N is a convergent sequence in (X, ||·||b) with limit L, then for ε > 0, there exists an N ∈ N such that for all n > N, ||xn – L||b < ε/M. Thus for all n > N, ||xn – L||a ≤ M||xn – L||b < M · (ε/M) = ε. So (xn)n∈N is convergent with limit L in (X, ||·||a) too.
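For a concrete pair of equivalent norms, on Rd one has ||x||∞ ≤ ||x||1 ≤ d||x||∞, so m = 1 and M = d work in the definition of ~. This specific pair is an illustration chosen here, not part of the exercise. The Python sketch below spot-checks both that inequality and the rearranged form from (ER2) on random vectors. The helper names are hypothetical.

```python
import random


def norm1(x):
    return sum(abs(t) for t in x)


def norm_inf(x):
    return max(abs(t) for t in x)


def equivalence_holds(d, trials=5000, seed=3):
    """Check m||x||_inf <= ||x||_1 <= M||x||_inf on R^d with m = 1, M = d,
    and the rearranged form (1/M)||x||_1 <= ||x||_inf <= (1/m)||x||_1 from (ER2)."""
    rng = random.Random(seed)
    m, M = 1, d
    for _ in range(trials):
        x = [rng.uniform(-10, 10) for _ in range(d)]
        a, b = norm1(x), norm_inf(x)
        if not (m * b <= a <= M * b + 1e-9):
            return False
        if not (a / M <= b + 1e-9 and b <= a / m):
            return False
    return True


print([equivalence_holds(d) for d in range(1, 6)])
```

The constants cannot be improved: x = (1, ···, 1) attains ||x||1 = d||x||∞, and a vector with a single nonzero entry attains ||x||1 = ||x||∞.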

(1) Let L > 0 be such that for all x, y ∈ R, $|f(x) - f(y)| = \left|\sqrt{|x|} - \sqrt{|y|}\right| \le L|x - y|$.

Then in particular, with $x = 1/n^2$, n ∈ N, and y = 0, we obtain

$$\frac{1}{n} = \left|\sqrt{1/n^2} - 0\right| \le \frac{L}{n^2}.$$

Thus n ≤ L for all n ∈ N, which is absurd. So f is not Lipschitz.

(2) x1(0) = 0 and x2(0) = 0²/4 = 0, and so x1, x2 satisfy the initial condition.

For all t ≥ 0,

$$x_1'(t) = 0 = \sqrt{|x_1(t)|} = f(x_1(t)), \qquad x_2'(t) = \frac{t}{2} = \sqrt{\frac{t^2}{4}} = \sqrt{|x_2(t)|} = f(x_2(t)).$$

So x1, x2 are both solutions to the given Initial Value Problem.
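Both parts can be illustrated numerically under the assumption that f(x) = √|x|, which is consistent with the computations above (the n ≤ L bound and the solution x2(t) = t²/4). The Python sketch below shows the difference quotient at x = 1/n² growing like n. It also checks, with a central-difference derivative, that x1 ≡ 0 and x2(t) = t²/4 both satisfy x′ = f(x) for t > 0. All function names are hypothetical.

```python
import math


def f(x: float) -> float:
    # Assumption: the right-hand side is f(x) = sqrt(|x|), as reconstructed above.
    return math.sqrt(abs(x))


def lipschitz_quotient(n: int) -> float:
    """|f(x) - f(0)| / |x - 0| at x = 1/n**2; this equals n, so it is unbounded."""
    x = 1.0 / n ** 2
    return abs(f(x) - f(0.0)) / abs(x - 0.0)


def x1(t: float) -> float:
    return 0.0


def x2(t: float) -> float:
    return t * t / 4


def numerical_derivative(g, t: float, h: float = 1e-5) -> float:
    """Central difference approximation of g'(t)."""
    return (g(t + h) - g(t - h)) / (2 * h)


for n in (1, 10, 100, 1000):
    print(n, lipschitz_quotient(n))
for t in (0.5, 1.0, 2.0):
    print(t, numerical_derivative(x2, t), f(x2(t)))
```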

Let F be closed, and (xn)n∈N be a sequence in F which converges to x. Suppose that x ∉ F. Since F is closed, X\F is open, and so there is an open ball B(x, r) := {y ∈ X : ||y – x|| < r} with r > 0, which is contained in X\F. But with ε := r > 0, there exists an N ∈ N such that for all n > N, ||xn – x|| < r. In particular, ||xN+1 – x|| < r, so that F ∋ xN+1 ∈ B(x, r) ⊂ X\F, a contradiction. Hence x ∈ F.

Now suppose that for every sequence (xn)n∈N in F, convergent in X with a limit x ∈ X, we have that the limit x ∈ F. We want to show that X\F is open. Suppose it isn’t. Then³ ¬[∀x ∈ X\F, ∃r > 0 such that B(x, r) ⊂ X\F]. In other words, ∃x ∈ X\F such that ∀r > 0, B(x, r) ∩ F ≠ Ø. So with r = 1/n, n ∈ N, we can find an xn ∈ B(x, 1/n) ∩ F. Then we obtain a sequence (xn)n∈N in F satisfying ||xn – x|| < 1/n for all n ∈ N. Thus (xn)n∈N converges to x. But x ∉ F, contradicting the hypothesis. Hence X\F is open, that is, F is closed.

Let (xn)n∈N in c00 be given by  n ∈ N.

Then with  we have

showing that c00 is not closed.

(1) Suppose that F is a closed set containing S. Let L be a limit point of S.

Then there exists a sequence (xn)n∈N in S\{L} which converges to L.

As each xn ∈ S\{L} ⊂ S ⊂ F, and since F is closed, it follows that L ∈ F.

So all the limit points of S belong to F. Hence S̄ ⊂ F.

S̄ is closed. Suppose that (xn)n∈N is a sequence in S̄ that converges to L.

We would like to prove that L ∈ S̄. If L ∈ S, then L ∈ S̄, and we are done.

So suppose that L ∉ S. Now for each n, we define the new term x′n as follows:

1° If xn ∈ S, then x′n := xn.

2° If xn ∉ S, then xn must be a limit point of S, and so B(xn, 1/n) must contain some element, say x′n, of S.

Hence we have ||x′n − L|| ≤ ||x′n − xn|| + ||xn − L|| < 1/n + ||xn − L|| for all n ∈ N, and the right-hand side tends to 0 as n → ∞.

Thus (x′n)n∈N is a sequence in S, and hence in S\{L} (as L ∉ S), which converges to L, and so L is a limit point of S, that is, L ∈ S̄. Consequently S̄ is closed.

(2) We first note that if y ∈ Ȳ, then there exists a sequence (yn)n∈N in Y that converges to y. Indeed, this is obvious if y is a limit point of Y, and if y ∈ Y, then we may just take (yn)n∈N as the constant sequence with all terms equal to y. We have:

(S1) Let x, y ∈ Ȳ. Let (xn)n∈N, (yn)n∈N be sequences in Y that converge to x, y, respectively. Then xn + yn ∈ Y ⊂ Ȳ for each n ∈ N, and (xn + yn)n∈N converges to x + y. But as Ȳ is closed, it follows that x + y ∈ Ȳ too.

(S2) Let α ∈ K, y ∈ Ȳ. Let (yn)n∈N be a sequence in Y that converges to y. Then α · yn ∈ Y ⊂ Ȳ for each n ∈ N, and (α · yn)n∈N converges to α · y.

But as Ȳ is closed, it follows that α · y ∈ Ȳ too.

(S3) 0 ∈ Y ⊂ Ȳ.

Hence Ȳ is a closed subspace.

(3) The proof is similar to part (2). Let x, y ∈ C̄. Then there exist sequences (xn)n∈N and (yn)n∈N in C that converge to x, y, respectively. If α ∈ (0, 1), then

$$(1-\alpha)x + \alpha y = (1-\alpha)\lim_{n\to\infty}x_n + \alpha\lim_{n\to\infty}y_n = \lim_{n\to\infty}\big((1-\alpha)x_n + \alpha y_n\big).$$

As (1 – α)xn + αyn ∈ C ⊂ C̄ for all n ∈ N, and since C̄ is closed, it follows that (1 – α)x + αy ∈ C̄ too.

(4) Suppose that D is dense in X. Let x ∈ X\D. If n ∈ N, then the ball B(x, 1/n) must contain an element dn ∈ D. The sequence (dn)n∈N converges to x because ||x – dn|| < 1/n, n ∈ N, and dn ≠ x for each n, since x ∉ D. Hence x is a limit point of D, that is, x ∈ D̄.

So X\D ⊂ D̄. Also D ⊂ D̄. Thus X = D ∪ (X\D) ⊂ D̄ ⊂ X, and so X = D̄. Now suppose that X = D̄. If x ∈ X\D = D̄\D, then x is a limit point of D, and so there is a sequence (dn)n∈N in D that converges to x. Thus given an ε > 0, there is an N such that ||x – dN|| < ε, that is, dN ∈ D ∩ B(x, ε).

On the other hand, if x ∈ D and ε > 0, then x ∈ B(x, ε) ∩ D.

Hence D is dense in X.

Let (xn)n∈N ∈ ℓ1. Then $\sum_{n=1}^{\infty}|x_n| < \infty$, and so $\lim_{n\to\infty}|x_n| = 0$.

Thus there exists an N ∈ N such that |xn| ≤ 1 for all n ≥ N. For all n ≥ N, |xn|² = |xn| · |xn| ≤ |xn| · 1 = |xn|. By the Comparison Test⁴, $\sum_{n=1}^{\infty}|x_n|^2 < \infty$.

Hence (xn)n∈N ∈ ℓ2.

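The inclusion ℓ1 ⊂ ℓ2 is strict: (1/n)n∈N ∈ ℓ2 \ ℓ1, since $\sum_{n=1}^{\infty}\frac{1}{n^2}$ converges, while the Harmonic Series $\sum_{n=1}^{\infty}\frac{1}{n}$ diverges.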

(ℓ1, ||·||2) is not a Banach space: Let us suppose, on the contrary, that it is a Banach space; we will arrive at a contradiction by exhibiting a Cauchy sequence which is not convergent in (ℓ1, ||·||2).

Consider for n ∈ N, $x_n := \left(1, \tfrac{1}{2}, \tfrac{1}{3}, \cdots, \tfrac{1}{n}, 0, 0, \cdots\right) \in \ell^1$. Then (xn)n∈N converges in ℓ2 to $x := \left(1, \tfrac{1}{2}, \tfrac{1}{3}, \cdots\right)$ because

$$\|x_n - x\|_2^2 = \sum_{k=n+1}^{\infty}\frac{1}{k^2} \to 0 \text{ as } n \to \infty.$$

So (xn)n∈N is a Cauchy sequence in (ℓ2, ||·||2), and so it is also Cauchy in (ℓ1, ||·||2). As we have assumed that (ℓ1, ||·||2) is a Banach space, it follows that the Cauchy sequence (xn)n∈N must be convergent to some element x′ ∈ ℓ1 ⊂ ℓ2. But by the uniqueness of limits (when we consider (xn)n∈N as a sequence in ℓ2), we must have x = x′ ∈ ℓ1, which is false, since we know that the Harmonic Series diverges. This contradiction proves that (ℓ1, ||·||2) is not a Banach space.
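The two competing behaviours in this argument can be seen numerically: ||xn − x||2 shrinks to 0, while ||xn||1 = 1 + 1/2 + ··· + 1/n grows without bound (roughly like log n). The Python sketch below computes both quantities. It uses the known value Σ 1/k² = π²/6 to evaluate the ℓ2 tail, and `math.fsum` for accurate summation. The function names are hypothetical.

```python
import math


def l2_distance_to_limit(n: int) -> float:
    """||x_n - x||_2, where x = (1/k)_k and x_n keeps the first n terms of x.

    Uses sum_k 1/k^2 = pi^2/6, so the squared distance is the tail of that series."""
    head = math.fsum(1.0 / k ** 2 for k in range(1, n + 1))
    return math.sqrt(max(math.pi ** 2 / 6 - head, 0.0))


def l1_norm(n: int) -> float:
    """||x_n||_1 = 1 + 1/2 + ... + 1/n, the n-th harmonic number."""
    return math.fsum(1.0 / k for k in range(1, n + 1))


for n in (10, 100, 1000, 10_000):
    print(n, l2_distance_to_limit(n), l1_norm(n))
```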

Let (an)n∈N be a Cauchy sequence in c0. Then this is also a Cauchy sequence in ℓ∞, and hence convergent to a sequence in ℓ∞, say a. We’ll show that a ∈ c0. We write $a_n = (a_{n,m})_{m\in\mathbb{N}}$ and $a = (\alpha_m)_{m\in\mathbb{N}}$. Let ε > 0. Then there exists an N ∈ N such that ||aN – a||∞ < ε/2. In particular, for all m ∈ N, $|a_{N,m} - \alpha_m| < \varepsilon/2$. But as aN ∈ c0, we can find an M such that for all m > M, $|a_{N,m}| < \varepsilon/2$. Consequently, for m > M, we have from the above that $|\alpha_m| \le |\alpha_m - a_{N,m}| + |a_{N,m}| < \varepsilon/2 + \varepsilon/2 = \varepsilon$. Thus a ∈ c0 too.

Given ε > 0, let N ∈ N be large enough so that for all n > N, ||xn – x|| < ε. Then for all n > N, we have, by Exercise 1.5, | ||xn|| – ||x|| | ≤ ||xn – x|| < ε, and so it follows that the sequence (||xn||)n∈N in R is convergent, with limit ||x||.

First consider the case 1 ≤ p < ∞.

(N1) $\|x\|_p = \left(\sum_{n=1}^{\infty}|x_n|^p\right)^{1/p} \ge 0$ for all x = (x1, x2, x3, ···) ∈ ℓp.

If ||x||p = 0, then |xn| = 0 for all n, and so x = 0.

(N2) $\|\alpha\cdot x\|_p = \left(\sum_{n=1}^{\infty}|\alpha x_n|^p\right)^{1/p} = |\alpha|\,\|x\|_p$, for x ∈ ℓp, α ∈ K.

(N3) Let x = (x1, x2, ···) and y = (y1, y2, ···) belong to ℓp. Let d ∈ N.

By the Triangle Inequality for the ||·||p-norm on Rd,

$$\left(\sum_{n=1}^{d}|x_n+y_n|^p\right)^{1/p} \le \left(\sum_{n=1}^{d}|x_n|^p\right)^{1/p} + \left(\sum_{n=1}^{d}|y_n|^p\right)^{1/p} \le \|x\|_p + \|y\|_p.$$

Passing to the limit as d tends to ∞ yields x + y ∈ ℓp and ||x + y||p ≤ ||x||p + ||y||p.

Now consider the case p = ∞.

(N1) $\|x\|_\infty = \sup_{n\in\mathbb{N}}|x_n| \ge 0$ for all x = (x1, x2, x3, ···) ∈ ℓ∞.

If ||x||∞ = 0, then |xn| = 0 for all n, that is, x = 0.

(N2) $\|\alpha\cdot x\|_\infty = \sup_{n\in\mathbb{N}}|\alpha x_n| = |\alpha|\sup_{n\in\mathbb{N}}|x_n| = |\alpha|\,\|x\|_\infty$ for x ∈ ℓ∞, α ∈ K.

(N3) Let x = (x1, x2, ···) and y = (y1, y2, ···) belong to ℓ∞.

Then for all k, |xk + yk| ≤ |xk| + |yk| ≤ ||x||∞ + ||y||∞, and so $\|x+y\|_\infty = \sup_{k\in\mathbb{N}}|x_k+y_k| \le \|x\|_\infty + \|y\|_\infty$.

From Exercise 1.11, page 17, taking n = 1, (C1[a, b], ||·||1,∞) is a normed space. We show that (C1[a, b], ||·||1,∞) is complete. Let (xn)n∈N be a Cauchy sequence in C1[a, b]. Then ||xn – xm||∞ ≤ ||xn – xm||∞ + ||x′n – x′m||∞ = ||xn – xm||1,∞, and so (xn)n∈N is a Cauchy sequence in (C[a, b], ||·||∞), and hence convergent to, say, x ∈ C[a, b]. Also, ||x′n – x′m||∞ ≤ ||xn – xm||∞ + ||x′n – x′m||∞ = ||xn – xm||1,∞ shows that (x′n)n∈N is a Cauchy sequence in (C[a, b], ||·||∞), and hence convergent to, say, y ∈ C[a, b]. We will now show that x ∈ C1[a, b], and x′ = y. Let t ∈ [a, b]. By the Fundamental Theorem of Calculus,
$$x_n(t) - x_n(a) = \int_a^t x_n'(\tau)\, d\tau,$$
and so
$$\Big| x_n(t) - x_n(a) - \int_a^t y(\tau)\, d\tau \Big| \leq \int_a^t |x_n'(\tau) - y(\tau)|\, d\tau \leq (b - a)\, \|x_n' - y\|_\infty.$$
Passing to the limit as n goes to ∞ gives, for all t ∈ [a, b],
$$x(t) - x(a) = \int_a^t y(\tau)\, d\tau.$$

By the Fundamental Theorem of Calculus, x′ = y ∈ C[a, b]. So x ∈ C1[a, b]. Finally, we’ll show that (xn)n∈N converges to x in C1[a, b]. Let ε > 0, and let N be such that for all m, n > N, ||xn – xm||1,∞ < ε. Then for all t ∈ [a, b], we have |xn(t) – xm(t)| + |x′n(t) – x′m(t)| ≤ ||xn – xm||∞ + ||x′n – x′m||∞ = ||xn – xm||1,∞ < ε. Letting m go to ∞, it follows that for all n > N, |xn(t) – x(t)| + |x′n(t) – x′(t)| ≤ ε.

As the choice of t ∈ [a, b] was arbitrary, it follows that for all n > N,
$$\|x_n - x\|_\infty \leq \varepsilon \quad\text{and}\quad \|x_n' - x'\|_\infty \leq \varepsilon,$$
that is, ||xn – x||1,∞ ≤ 2ε. Hence (xn)n∈N converges to x in (C1[a, b], ||·||1,∞), which is therefore a Banach space.

Let (xn)n∈N be any Cauchy sequence in X. We construct a subsequence (xnk)k∈N inductively, possessing the property that if n > nk, then ||xn – xnk|| < 1/2ᵏ, k ∈ N. Choose n1 large enough so that if n, m ≥ n1, then ||xn – xm|| < 1/2. Suppose xn1, ···, xnk have been constructed. Choose nk+1 > nk such that if n, m ≥ nk+1, then ||xn – xm|| < 1/2ᵏ⁺¹. In particular for n ≥ nk+1, ||xn – xnk+1|| < 1/2ᵏ⁺¹.

Now define u1 = xn1, uk+1 = xnk+1 – xnk, k ∈ N.

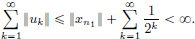

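We have
$$\sum_{k=1}^{\infty} \|u_k\| = \|x_{n_1}\| + \sum_{k=1}^{\infty} \|x_{n_{k+1}} - x_{n_k}\| \leq \|x_{n_1}\| + \sum_{k=1}^{\infty} \frac{1}{2^k} = \|x_{n_1}\| + 1 < \infty.$$
Thus the absolutely convergent series Σ uk converges in X.

But the partial sums of Σ uk are
$$u_1 + u_2 + \cdots + u_K = x_{n_1} + (x_{n_2} - x_{n_1}) + \cdots + (x_{n_K} - x_{n_{K-1}}) = x_{n_K}, \quad K \in \mathbb{N}.$$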

So (xnk)k∈N converges in X, to, say, x ∈ X. As (xnk)k∈N is a convergent subsequence of the Cauchy sequence (xn)n∈N, it now follows that (xn)n∈N is itself convergent with the same limit x. Indeed, given ε > 0, first let N be such that for all n, m > N, ||xn – xm|| < ε/2, and next let nK > N be such that ||xnK – x|| < ε/2, which yields that for all n > N,
$$\|x_n - x\| \leq \|x_n - x_{n_K}\| + \|x_{n_K} - x\| < \frac{\varepsilon}{2} + \frac{\varepsilon}{2} = \varepsilon.$$

(N1) For (x, y) ∈ X × Y, ||(x, y)|| = max{||x||, ||y||} ≥ 0.

If ||(x, y)|| = 0, then 0 ≤ ||x|| ≤ max{||x||, ||y||} = ||(x, y)|| = 0, and so ||x|| = 0, giving x = 0. Similarly, y = 0 too, and so (x, y) = 0X×Y.

(N2)For α ∈ K, and (x, y) ∈ X × Y,

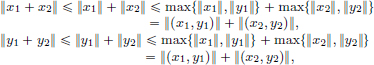

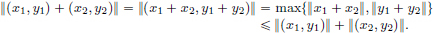

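(N3) Let (x1, y1), (x2, y2) ∈ X × Y. Then
$$\|x_1 + x_2\| \leq \|x_1\| + \|x_2\| \leq \|(x_1, y_1)\| + \|(x_2, y_2)\|, \qquad \|y_1 + y_2\| \leq \|y_1\| + \|y_2\| \leq \|(x_1, y_1)\| + \|(x_2, y_2)\|,$$
and so max{||x1 + x2||, ||y1 + y2||} ≤ ||(x1, y1)|| + ||(x2, y2)||. Thus
$$\|(x_1, y_1) + (x_2, y_2)\| = \|(x_1 + x_2, y_1 + y_2)\| = \max\{\|x_1 + x_2\|, \|y_1 + y_2\|\} \leq \|(x_1, y_1)\| + \|(x_2, y_2)\|.$$
Hence (x, y) ↦ max{||x||, ||y||}, (x, y) ∈ X × Y, defines a norm on X × Y.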

Let ((xn, yn))n∈N be Cauchy in X × Y. As ||xn – xm|| ≤ max{||xn – xm||, ||yn – ym||} = ||(xn, yn) – (xm, ym)||, (xn)n∈N is Cauchy in X. As X is Banach, (xn)n∈N converges to some x ∈ X. Similarly (yn)n∈N converges to some y ∈ Y. Let ε > 0. Then there exists an Nx such that for all n > Nx, ||xn – x|| < ε, and there is an Ny such that for all n > Ny, ||yn – y|| < ε. So with N := max{Nx, Ny}, for all n > N, we have ||xn – x|| < ε and ||yn – y|| < ε. Thus ||(xn, yn) – (x, y)|| = ||(xn – x, yn – y)|| = max{||xn – x||, ||yn – y||} < ε, showing that ((xn, yn))n∈N converges to (x, y) in X × Y. So X × Y is Banach.

Since K is compact in Rd, it is closed and bounded. Let R > 0 be such that for all x ∈ K, ||x||2 ≤ R. In particular, for every x ∈ K ∩ F, we have ||x||2 ≤ R. Thus K ∩ F is bounded. Also, since both K and F are closed, their intersection K ∩ F is closed too. Hence K ∩ F is closed and bounded, and so by Theorem 1.10, page 45, we conclude that K ∩ F is compact.

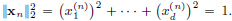

Clearly Sd–1 is bounded. It is also closed, and we prove this below. Let (xn)n∈N be a sequence in Sd–1 which converges to L in Rd. Let L = (L1, ···, Ld) and xn = (xn(1), ···, xn(d)) for n ∈ N. Then
$$\lim_{n\to\infty} x_n(k) = L_k \quad (k = 1, \dots, d).$$
Since xn ∈ Sd–1 for each n ∈ N, we have (xn(1))² + ··· + (xn(d))² = 1. Passing to the limit as n → ∞, we obtain L1² + ··· + Ld² = 1. Hence L ∈ Sd–1. So Sd–1 is closed. As Sd–1 is closed and bounded, it follows from Theorem 1.10, page 45, that it is compact.

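(1) Let (Rn)n∈N be a sequence in O(2), and write
$$R_n = \begin{bmatrix} a_n & b_n \\ c_n & d_n \end{bmatrix}, \quad n \in \mathbb{N}.$$
Using Rnᵀ Rn = I, we get an² + cn² = 1 and bn² + dn² = 1, and so |an|, |bn|, |cn|, |dn| ≤ 1 for all n ∈ N.

So each of the sequences (an)n∈N, (bn)n∈N, (cn)n∈N, (dn)n∈N is bounded.

By successively refining subsequences of these sequences, we can choose a sequence of indices n1 < n2 < n3 < ···, such that the sequences (ank)k∈N, (bnk)k∈N, (cnk)k∈N, (dnk)k∈N are convergent, to, say, a, b, c, d, respectively.

Hence (Rnk)k∈N is convergent with the limit
$$R := \begin{bmatrix} a & b \\ c & d \end{bmatrix}.$$
From (Rnk)ᵀ Rnk = I (k ∈ N), by the continuity of transposition and of matrix multiplication, it follows that also RᵀR = I, that is, R ∈ O(2). Thus every sequence in O(2) has a subsequence that converges in O(2), and so O(2) is compact.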

(2) The hyperbolic rotations
$$R(t) = \begin{bmatrix} \cosh t & \sinh t \\ \sinh t & \cosh t \end{bmatrix}, \quad t \in \mathbb{R},$$
belong to O(1, 1) because cosh² t − sinh² t = 1 gives
$$R(t)^\top \begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix} R(t) = \begin{bmatrix} \cosh^2 t - \sinh^2 t & 0 \\ 0 & \sinh^2 t - \cosh^2 t \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix}.$$
But ||R(t)||∞ ≥ |cosh t| = cosh t → ∞ as t → ∞, showing that O(1, 1) is not bounded. Hence O(1, 1) can’t be compact (as every compact set is necessarily bounded).
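The two facts used above, that R(t) = [cosh t, sinh t; sinh t, cosh t] preserves J = [1 0; 0 −1] (that is, R(t)ᵀ J R(t) = J) and that its largest entry cosh t is unbounded, can be checked numerically. The sketch below assumes the sign convention J = diag(1, −1). With the opposite convention the computation is identical. All helper names are our own.

```python
import math

J = [[1.0, 0.0], [0.0, -1.0]]  # assumed indefinite "metric" matrix


def matmul(A, B):
    """Product of two 2 x 2 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def transpose(A):
    return [[A[j][i] for j in range(2)] for i in range(2)]


def hyperbolic_rotation(t):
    c, s = math.cosh(t), math.sinh(t)
    return [[c, s], [s, c]]


def preserves_J(M, tol=1e-8):
    """True if M^T J M equals J up to the tolerance tol."""
    P = matmul(transpose(M), matmul(J, M))
    return all(abs(P[i][j] - J[i][j]) <= tol for i in range(2) for j in range(2))


def max_entry(M):
    """Entrywise max-norm of M."""
    return max(abs(x) for row in M for x in row)


if __name__ == "__main__":
    for t in (0.0, 1.0, 3.0, 5.0):
        R = hyperbolic_rotation(t)
        print(t, preserves_J(R), max_entry(R))
```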

Since K ⊂ [0, 1], clearly K is bounded.

Moreover,

Thus R\K, being the union of open intervals, is open, that is, K is closed. Since K is closed and bounded, it is compact.

If 1 ∈ C[0, 1] denotes the constant function taking value 1 everywhere, then

and so

So (L2) is violated, showing that S1 is not a linear transformation.

On the other hand, S2 is a linear transformation. For all x1, x2 ∈ C[0, 1],

and so (L1) holds. Moreover, for all α ∈ R and x ∈ C[0, 1] we have

and so (L2) holds as well.

(1)Let α1, α2 ∈ R be such that α1f1 + α2f2 = 0, that is,

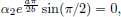

In particular, with t = 0, we obtain α1 = 0. Thus α2 eᵃᵗ sin(bt) = 0 for all t ∈ R. With t = π/(2b), we see that
$$\alpha_2\, e^{a\pi/(2b)} \sin\frac{\pi}{2} = \alpha_2\, e^{a\pi/(2b)} = 0,$$
and so α2 = 0. Consequently, f1, f2 are linearly independent.

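(2) First of all, D is a well-defined map from Sf1,f2 to itself, since for all α1, α2 ∈ R (using the derivatives Df1 = af1 – bf2 and Df2 = bf1 + af2 computed in part (3)),
$$D(\alpha_1 f_1 + \alpha_2 f_2) = \alpha_1 (a f_1 - b f_2) + \alpha_2 (b f_1 + a f_2) = (a\alpha_1 + b\alpha_2) f_1 + (a\alpha_2 - b\alpha_1) f_2.$$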

Thus DSf1,f2 ⊂ Sf1,f2.

Furthermore, it is clear that D(g1 + g2) = D(g1) + D(g2) for all g1, g2 ∈ C1(R) (and in particular for g1, g2 ∈ Sf1,f2 ⊂ C1(R)), and also D(α · g) = α · D(g) for all α ∈ R and all g ∈ C1(R) (and in particular, for all g ∈ Sf1,f2).

Hence D is a linear transformation from Sf1,f2 to itself.

(3) We have Df1 = a eᵃᵗ cos(bt) – b eᵃᵗ sin(bt) = af1 – bf2, and

Df2 = a eᵃᵗ sin(bt) + b eᵃᵗ cos(bt) = bf1 + af2.

So the matrix of D with respect to the basis B = (f1, f2) is

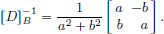

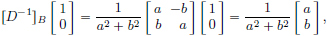

(4) As det[D]B = a² + b² ≠ 0, [D]B is invertible, and
$$([D]_B)^{-1} = \frac{1}{a^2 + b^2}\begin{bmatrix} a & -b \\ b & a \end{bmatrix}.$$
Hence D is invertible, and the inverse D–1 : Sf1,f2 → Sf1,f2 has the matrix [D–1]B (with respect to B) given by [D–1]B = ([D]B)–1 found above.

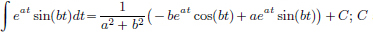

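(5) We note that, by the matrix [D–1]B found in part (4),
$$D^{-1} f_1 = \frac{a f_1 + b f_2}{a^2 + b^2}, \quad\text{and so}\quad D\Big(\frac{a f_1 + b f_2}{a^2 + b^2}\Big) = f_1.$$
By the definition of D,
$$\frac{d}{dt}\Big(\frac{e^{at}(a\cos(bt) + b\sin(bt))}{a^2 + b^2}\Big) = e^{at}\cos(bt).$$
So
$$\int e^{at}\cos(bt)\, dt = \frac{e^{at}(a\cos(bt) + b\sin(bt))}{a^2 + b^2} + C, \quad C \text{ any constant}.$$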

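Similarly, as
$$D^{-1} f_2 = \frac{-b f_1 + a f_2}{a^2 + b^2},$$
we have
$$D\Big(\frac{-b f_1 + a f_2}{a^2 + b^2}\Big) = f_2,$$
and so
$$\frac{d}{dt}\Big(\frac{e^{at}(a\sin(bt) - b\cos(bt))}{a^2 + b^2}\Big) = e^{at}\sin(bt).$$
So
$$\int e^{at}\sin(bt)\, dt = \frac{e^{at}(a\sin(bt) - b\cos(bt))}{a^2 + b^2} + C, \quad C \text{ any constant}.$$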
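The recipe above can be sketched in Python: invert the 2 × 2 matrix [D]B = [a b; −b a], read off the coordinates of D–1f1 and D–1f2 in the basis (f1, f2), and compare a central-difference derivative of the result with f1 = eᵃᵗcos(bt) and f2 = eᵃᵗsin(bt). The function names are illustrative, and the numerical derivative is only a spot check.

```python
import math


def d_matrix(a, b):
    """[D]_B: columns are the B-coordinates of D f1 = a f1 - b f2 and D f2 = b f1 + a f2."""
    return [[a, b], [-b, a]]


def inverse_2x2(m):
    (p, q), (r, s) = m
    det = p * s - q * r  # equals a^2 + b^2 for [D]_B
    return [[s / det, -q / det], [-r / det, p / det]]


def antiderivative(a, b, which):
    """Return F with F' = f_which, where f1 = e^{at}cos(bt) and f2 = e^{at}sin(bt).

    The B-coordinates of F are column `which` of ([D]_B)^{-1}.
    """
    inv = inverse_2x2(d_matrix(a, b))
    col = 0 if which == 1 else 1
    c1, c2 = inv[0][col], inv[1][col]
    return lambda t: math.exp(a * t) * (c1 * math.cos(b * t) + c2 * math.sin(b * t))


def central_difference(F, t, h=1e-5):
    return (F(t + h) - F(t - h)) / (2 * h)


if __name__ == "__main__":
    a, b, t = 0.5, 2.0, 0.7
    F1, F2 = antiderivative(a, b, 1), antiderivative(a, b, 2)
    print(central_difference(F1, t), math.exp(a * t) * math.cos(b * t))
    print(central_difference(F2, t), math.exp(a * t) * math.sin(b * t))
```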

(1)We have

(As expected, the arc length is simply the length of the line segment [0, 1].)

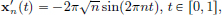

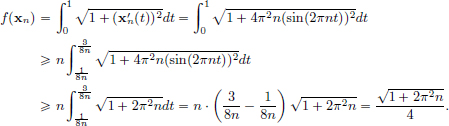

(2)We have  and so


(3) Suppose that f is continuous at 0. Then with ε := 1 > 0, there exists a δ > 0 such that whenever x ∈ C1[0, 1] and ||x – 0|| < δ, we have |f(x) – f(0)| < 1.

We have  for all


Hence for such n there must hold that |f(xn) – f(0)| = |f(xn) – 1| < 1.

So for all such n we have

|f(xn)| ≤ |f(xn) – 1| + 1 < 1 + 1 = 2,

which is a contradiction. Hence f is not continuous at 0.

Let x0, x ∈ C1[0, 1]. Using the triangle inequality in (R2, ||·||2), we obtain, for all t ∈ [0, 1],
$$\Big|\sqrt{1 + (x'(t))^2} - \sqrt{1 + (x_0'(t))^2}\Big| = \big|\, \|(1, x'(t))\|_2 - \|(1, x_0'(t))\|_2 \,\big| \leq \|(0, x'(t) - x_0'(t))\|_2 = |x'(t) - x_0'(t)|,$$
and so
$$|f(x) - f(x_0)| \leq \int_0^1 |x'(t) - x_0'(t)|\, dt \leq \|x' - x_0'\|_\infty \leq \|x - x_0\|_{1,\infty}.$$
Thus given ε > 0, if we set δ := ε, then we have for all x ∈ C1[0, 1] satisfying ||x – x0||1,∞ < δ that |f(x) – f(x0)| ≤ ||x – x0||1,∞ < δ = ε.

So f is continuous at x0. As the choice of x0 was arbitrary, f is continuous.

Let x0 ∈ X. Given ε > 0, set δ := ε. Then for all x ∈ X satisfying ||x – x0|| < δ, we have | ||x|| – ||x0|| | ≤ ||x – x0|| < δ = ε. Thus ||·|| is continuous at x0. As x0 ∈ X was arbitrary, it follows that ||·|| is continuous on X.

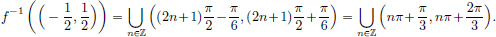

f–1({–1, 1}) = {nπ : n ∈ Z}, f–1({1}) = {2nπ : n ∈ Z}, f–1([–1, 1]) = R, and

Since cos is periodic with period 2π (that is, f(x) = f(x + 2π) for all x ∈ R), we have f(R) = f([0, 2π]) = f([δ, δ + 2π]) = [–1, 1].

(“If” part) Suppose that for every closed F in Y, f–1(F) is closed in X.

Now let V be open in Y. Then Y\V is closed in Y.

Thus f–1(Y\V) = f–1(Y)\f–1(V) = X\f–1(V) is closed in X.

Hence f–1(V) = X\(X\f–1(V)) is open in X.

So for every open V in Y, f–1(V) is open in X.

By Theorem 2.1, page 63, f is continuous on X.

(“Only if” part) Suppose that f is continuous.

Let F be closed in Y, that is, Y\F is open in Y.

Hence f–1(Y\F) = f–1(Y)\f–1(F) = X\f–1(F) is open in X.

Consequently, we have that f–1(F) is closed in X.

If x ∈ (g ∘ f)–1(W), then (g ∘ f)(x) ∈ W, that is, g(f(x)) ∈ W. So f(x) ∈ g–1(W), that is, x ∈ f–1(g–1(W)). Thus (g ∘ f)–1(W) ⊂ f–1(g–1(W)).

If x ∈ f–1(g–1(W)), then f(x) ∈ g–1(W), that is, (g ∘ f)(x) = g(f(x)) ∈ W. Hence x ∈ (g ∘ f)–1(W). So we have f–1(g–1(W)) ⊂ (g ∘ f)–1(W).

Consequently, (g ∘ f)–1(W) = f–1(g–1(W)).

(1)True.

Since (–∞, 1) is open in R and f : X → R is continuous, it follows that {x ∈ X : f(x) < 1} = f–1(–∞, 1) is open in X by Theorem 2.1, page 63.

(2)True.

Because (1, ∞) is open in R, and f : X → R is continuous, it follows by Theorem 2.1, page 63, that {x ∈ X : f(x) > 1} = f–1 (1, ∞) is open in X.

(3)False.

Take for example X = R with the usual Euclidean norm, and consider the continuous function f(x) = x for all x ∈ R. Then {x ∈ X : f(x) = 1} = {1}, which is not open in R.

(4)True.

(–∞, 1] is closed in R because its complement is (1, ∞), which is open in R. As f : X → R is continuous, {x ∈ X : f(x) ≤ 1} = f–1(–∞, 1] is closed in X by Corollary 2.1, page 64.

(5)True.

Since {1} is closed in R and since f : X → R is continuous, it follows by Corollary 2.1, page 64, that {x ∈ X : f(x) = 1} = f–1{1} is closed in X.

(6)True.

Each of the sets f–1{1} and f–1{2} is closed, and so their finite union, namely {x ∈ X : f(x) = 1 or 2}, is closed as well.

(7)False.

Take for example X = R with the usual Euclidean norm, and consider the continuous function f(x) = 1 (x ∈ R). Then {x ∈ X : f(x) = 1} = R, which is not bounded, and hence can’t be compact.

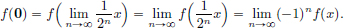

For all x ∈ X, we have f(2x) = –f(x), and so, applying this to x/2, x/4, ···,
$$f(x) = -f\Big(\frac{x}{2}\Big) = (-1)^2 f\Big(\frac{x}{2^2}\Big) = \cdots = (-1)^n f\Big(\frac{x}{2^n}\Big), \quad n \in \mathbb{N}.$$
Since the sequence (x/2ⁿ)n∈N converges to 0, it follows from the continuity of f that
$$\lim_{n\to\infty} (-1)^n f(x) = \lim_{n\to\infty} f\Big(\frac{x}{2^n}\Big) = f(0).$$
So we obtain that ((–1)ⁿf(x))n∈N is convergent with limit f(0). Thus the subsequence (f(x))n∈N = ((–1)²ⁿf(x))n∈N of ((–1)ⁿf(x))n∈N is also convergent with limit f(0). Hence f(x) = f(0) for all x ∈ X. As f(0) = f(2 · 0) = –f(0), it follows that f(0) = 0. Hence f(x) = 0 for all x ∈ X. So if f is continuous and it satisfies the given identity, then it must be the constant function x ↦ 0 : X → Y.

Conversely, the constant function x ↦ 0 : X → Y is indeed continuous and also f(2x) + f(x) = 0 + 0 = 0 for all x ∈ X.

The determinant of M = [mij] is given by the sum of expressions of the type
$$\operatorname{sign}(p)\, m_{1p(1)}\, m_{2p(2)}\, m_{3p(3)} \cdots m_{np(n)},$$
where p : {1, 2, 3, ···, n} → {1, 2, 3, ···, n} is a permutation and sign(p) ∈ {–1, 1} is its sign. Since each of the maps M ↦ m1p(1) m2p(2) m3p(3) ··· mnp(n) is easily seen to be continuous using the characterisation of continuous functions provided by Theorem 2.3, page 64, it follows that their linear combination det is also continuous.

{0} is closed in R, and so its inverse image det–1{0} = {M ∈ Rn×n : det M = 0} under the continuous map det is also closed. Thus its complement, namely the set {M ∈ Rn×n : det M ≠ 0}, is open. But this is precisely the set of invertible matrices, since M ∈ Rn×n is invertible if and only if det M ≠ 0.
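The permutation formula makes the continuity of det transparent: det M is a polynomial in the entries of M. A direct Python transcription is sketched below. It takes n! steps, so it is meant only as an illustration. Computing the sign by counting inversions is our own choice.

```python
from itertools import permutations


def permutation_sign(p):
    """Sign of a permutation of 0..n-1, computed from its number of inversions."""
    inversions = sum(
        1 for i in range(len(p)) for j in range(i + 1, len(p)) if p[i] > p[j]
    )
    return -1 if inversions % 2 else 1


def det_leibniz(M):
    """det M as the sum over permutations p of sign(p) * m_{1p(1)} * ... * m_{np(n)}."""
    n = len(M)
    total = 0
    for p in permutations(range(n)):
        term = permutation_sign(p)
        for i in range(n):
            term *= M[i][p[i]]
        total += term
    return total


if __name__ == "__main__":
    print(det_leibniz([[1, 2], [3, 4]]))  # -2
```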

We’d seen in Exercise 1.21, page 21, that a singleton set in any normed space is closed. So {0} is closed in Rm. As the linear transformation TA : Rn → Rm is continuous, the inverse image of {0} under TA, namely TA–1({0}) = {x ∈ Rn : Ax = 0} = ker A, is closed in Rn.

Let V be a subspace of Rn, and let {v1, ···, vk} be a basis for V. Extend this to a basis {v1, ···, vk, vk+1, ···, vn} for Rn. By using the Gram-Schmidt orthogonalisation procedure, we can find an orthonormal set of vectors {u1, ···, un} such that for each j ∈ {1, ···, n}, the span of the vectors v1, ···, vj coincides with the span of u1, ···, uj. Now define A ∈ R(n–k)×n as the matrix whose rows are uk+1ᵀ, ···, unᵀ:
$$A := \begin{bmatrix} u_{k+1}^\top \\ \vdots \\ u_n^\top \end{bmatrix}.$$

It is clear from the orthonormality of the ujs that Au1 = · · · = Auk = 0, and so it follows that also any linear combination of u1, · · ·, uk lies in the kernel of A. In other words, V ⊂ ker A.

On the other hand, if x = α1u1 + ··· + αnun, where α1, ···, αn are scalars, and if Ax = 0, then it follows that
$$0 = Ax = \begin{bmatrix} \alpha_{k+1} \\ \vdots \\ \alpha_n \end{bmatrix}.$$

So x = α1u1 + · · · + αkuk ∈ V. Hence ker A ⊂ V.

Consequently V = ker A, and by the result of the previous exercise, it now follows that V is closed.
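The construction of A can be sketched numerically. Run (modified) Gram-Schmidt on v1, ···, vk followed by the standard basis e1, ···, en, drop the vectors that become numerically zero, and keep the last n – k orthonormal vectors as the rows of A. The function names and the tolerance are our own choices, and floating-point arithmetic only gives Ax ≈ 0 for x ∈ V.

```python
def dot(u, v):
    return sum(s * t for s, t in zip(u, v))


def gram_schmidt(vectors, tol=1e-10):
    """Modified Gram-Schmidt; vectors (numerically) dependent on earlier ones are dropped."""
    basis = []
    for v in vectors:
        w = [float(t) for t in v]
        for u in basis:
            c = dot(w, u)
            w = [wi - c * ui for wi, ui in zip(w, u)]
        norm = dot(w, w) ** 0.5
        if norm > tol:
            basis.append([wi / norm for wi in w])
    return basis


def kernel_matrix(v_basis, n):
    """Rows u_{k+1}, ..., u_n of an orthonormal basis of R^n whose first k vectors span V.

    v_basis must be a linearly independent list of k vectors spanning V; it is extended
    by e_1, ..., e_n, and Gram-Schmidt discards the n - k redundant vectors.
    """
    k = len(v_basis)
    standard = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    u = gram_schmidt(list(v_basis) + standard)
    return u[k:]


def apply(A, x):
    return [dot(row, x) for row in A]


if __name__ == "__main__":
    A = kernel_matrix([[1, 1, 0]], 3)  # V = span{(1, 1, 0)} in R^3
    print(len(A), apply(A, [2, 2, 0]), apply(A, [1, 0, 0]))
```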

(1)The linearity of T follows immediately from the properties of the Riemann integral. Continuity follows from the straightforward estimate

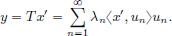

(2)The partial sums sn of the series converge to f. Thus, since the continuous map T preserves convergent sequences, it follows that

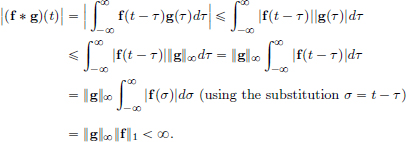

We have for all t ∈ R that
$$|(f * g)(t)| = \Big|\int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau\Big| \leq \int_{-\infty}^{\infty} |f(\tau)|\, |g(t - \tau)|\, d\tau \leq \|g\|_\infty \|f\|_1.$$
Thus ||f ∗ g||∞ ≤ ||g||∞||f||1 for all g ∈ L∞(R). So f∗ is well-defined. Linearity is easy to see. From the above estimate, it follows that the linear transformation f∗ is continuous as well, with ||f∗|| ≤ ||f||1.

Consider the reflection map R : L2(R) → L2(R) given by (Rf)(x) := f(–x), x ∈ R. Then it is straightforward to check that R ∈ L(L2(R)), and moreover it is continuous since ||Rf||2 = ||f||2 for all f ∈ L2(R). Clearly Y = ker(I – R), and so, being the inverse image of the closed set {0} under the continuous map I – R, it follows that Y is closed.

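For x = (xn)n∈N ∈ ℓ2,
$$\|\Lambda x\|_2^2 = \sum_{n=1}^{\infty} |\lambda_n x_n|^2 \leq \Big(\sup_{n \in \mathbb{N}} |\lambda_n|\Big)^2 \sum_{n=1}^{\infty} |x_n|^2 = \Big(\sup_{n \in \mathbb{N}} |\lambda_n|\Big)^2 \|x\|_2^2,$$
and so Λ ∈ CL(ℓ2) and ||Λ|| ≤ sup{|λn| : n ∈ N}.

Moreover, for en := (0, ···, 0, 1, 0, ···) ∈ ℓ2 (sequence with all terms equal to 0 and nth term equal to 1), we have
$$|\lambda_n| = \|\Lambda e_n\|_2 \leq \|\Lambda\|\, \|e_n\|_2 = \|\Lambda\|$$
for all n, and so ||Λ|| is an upper bound for {|λn| : n ∈ N}. Hence ||Λ|| ≥ sup{|λn| : n ∈ N}.

From the above, it now follows that ||Λ|| = sup{|λn| : n ∈ N}.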

If λn = 1 – 1/n, n ∈ N, then
$$\|\Lambda\| = \sup_{n \in \mathbb{N}} \Big(1 - \frac{1}{n}\Big) = 1.$$
Suppose that x = (an)n∈N ∈ ℓ2 is such that ||x||2 ≤ 1 and ||Λx||2 = ||Λ|| = 1.

If 0 = a2 = a3 = · · ·, then Λx = 0, and this contradicts the fact that ||Λx||2 = 1.

So at least one of the terms a2, a3, · · · must be nonzero.

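Since λ1 = 0 and 0 < 1 – 1/n < 1 for n ≥ 2, we then obtain
$$1 = \|\Lambda x\|_2^2 = \sum_{n=2}^{\infty} \Big(1 - \frac{1}{n}\Big)^2 |a_n|^2 < \sum_{n=2}^{\infty} |a_n|^2 \leq \|x\|_2^2 \leq 1,$$
a contradiction. So the operator norm is not attained for this particular Λ.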
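For the diagonal operator with λn = 1 – 1/n, the ratio ||Λx||2/||x||2 equals |λn| at x = en. It creeps up to 1 as n grows, yet stays strictly below 1 for every nonzero x. The sketch below checks this on finitely supported vectors only, which is a simplification of ℓ2. The helper names are our own.

```python
def lam(n):
    """lambda_n = 1 - 1/n for n = 1, 2, 3, ..."""
    return 1.0 - 1.0 / n


def norm_ratio(a):
    """||Lambda x||_2 / ||x||_2 for a nonzero, finitely supported x = (a_1, ..., a_N, 0, ...)."""
    num = sum((lam(n) * a_n) ** 2 for n, a_n in enumerate(a, start=1))
    den = sum(a_n ** 2 for a_n in a)
    return (num / den) ** 0.5


def unit_vector(n):
    """e_n, truncated after its nth entry."""
    return [0.0] * (n - 1) + [1.0]


if __name__ == "__main__":
    for n in (1, 2, 10, 1000):
        print(n, norm_ratio(unit_vector(n)))  # equals |lambda_n|
```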

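Let x = (xn)n∈N ∈ ℓp (where 1 ≤ p < ∞), and let ε > 0.

Then there exists an N such that
$$\sum_{k=N+1}^{\infty} |x_k|^p < \varepsilon^p.$$
Let
$$s_n := \sum_{k=1}^{n} x_k e_k.$$
Then for n > N, x – sn = (0, ···, 0, xn+1, xn+2, xn+3, ···).

So
$$\|x - s_n\|_p^p = \sum_{k=n+1}^{\infty} |x_k|^p \leq \sum_{k=N+1}^{\infty} |x_k|^p < \varepsilon^p,$$
giving ||x – sn||p < ε.

So (sn)n∈N converges in ℓp to x, that is,
$$x = \sum_{n=1}^{\infty} x_n e_n.$$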

The map φn : x = (x1, x2, x3, ···) ↦ xn : ℓp → K is easily seen to be linear.

It’s continuous as for all x ∈ ℓp,
$$|\varphi_n(x)| = |x_n| = (|x_n|^p)^{1/p} \leq \Big(\sum_{k=1}^{\infty} |x_k|^p\Big)^{1/p} = \|x\|_p.$$

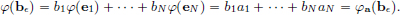

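If
$$x = \sum_{n=1}^{\infty} \xi_n e_n = \sum_{n=1}^{\infty} \eta_n e_n,$$
where the ξns and ηns are scalars, then applying the continuous linear map φn,
$$\xi_n = \varphi_n\Big(\sum_{k=1}^{\infty} \xi_k e_k\Big) = \varphi_n(x) = \varphi_n\Big(\sum_{k=1}^{\infty} \eta_k e_k\Big) = \eta_n.$$
As the choice of n was arbitrary, ξn = ηn for all n.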

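(1) Let x = (xn)n∈N ∈ ℓ∞. Then for all n ∈ N, |xn| ≤ ||x||∞.

Thus
$$\sup_{n \in \mathbb{N}} \Big|\frac{x_1 + \cdots + x_n}{n}\Big| \leq \sup_{n \in \mathbb{N}} \frac{|x_1| + \cdots + |x_n|}{n} \leq \sup_{n \in \mathbb{N}} \frac{n\|x\|_\infty}{n} = \|x\|_\infty.$$

Consequently Ax ∈ ℓ∞. So A is a well-defined map.

The linearity is easy to check.

Also, we see that for all x ∈ ℓ∞,
$$\|Ax\|_\infty = \sup_{n \in \mathbb{N}} \Big|\frac{x_1 + \cdots + x_n}{n}\Big| \leq \|x\|_\infty.$$

So A ∈ CL(ℓ∞), and ||A|| ≤ 1. Also, with 1 := (1, 1, 1, ···) ∈ ℓ∞, we have A1 = 1, and so
$$1 = \|A\mathbf{1}\|_\infty \leq \|A\|\, \|\mathbf{1}\|_\infty = \|A\|.$$

Consequently, ||A|| = 1.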

(2)Let x = (xn)n∈N ∈ c, and let its limit be denoted by L.

We’ll show that Ax ∈ c as well.

We will prove that Ax is convergent with the same limit L! (Intuitively, this makes sense since for large n, all xns look alike, ≈ L, and the average of these is approximately L, since the first few terms do not “contribute much” when we average over a large collection.)

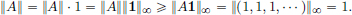

Let ε > 0. Then there exists an N1 ∈ N such that for all n > N1, |xn – L| < ε/2. Since (xn)n∈N is convergent, it is bounded, and so there exists an M > 0 such that for all n ∈ N, |xn| ≤ M.

Choose N ∈ N such that
$$N > \max\Big\{N_1, \frac{2N_1(M + |L|)}{\varepsilon}\Big\}.$$
(This ghastly choice of N is arrived at by working backwards. Since we wish to make |(x1 + ··· + xn)/n – L| less than ε for n > N, we manipulate this, as shown in the chain of inequalities below, and then choose N large enough to achieve this.)

So N > N1 and N1(M + |L|)/N < ε/2. Then for all n > N, we have:
$$\Big|\frac{x_1 + \cdots + x_n}{n} - L\Big| \leq \frac{1}{n}\sum_{k=1}^{N_1} |x_k - L| + \frac{1}{n}\sum_{k=N_1+1}^{n} |x_k - L| \leq \frac{N_1(M + |L|)}{n} + \frac{n - N_1}{n}\cdot\frac{\varepsilon}{2} < \frac{N_1(M + |L|)}{N} + \frac{\varepsilon}{2} < \frac{\varepsilon}{2} + \frac{\varepsilon}{2} = \varepsilon.$$
So ((x1 + ··· + xn)/n)n∈N is a convergent sequence with limit L.

Hence Ax ∈ c. Consequently Ac ⊂ c, and c is an invariant subspace of A.
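The two properties of A can be seen numerically: the averages of a convergent sequence approach its limit, and the averages of a bounded sequence stay within its sup-norm. The sketch below assumes, as the averaging argument above indicates, that (Ax)n = (x1 + ··· + xn)/n. It only looks at finitely many terms.

```python
def cesaro_means(x):
    """Return the averages (x_1 + ... + x_n)/n for n = 1, ..., len(x), i.e. the first terms of Ax."""
    means, total = [], 0.0
    for n, term in enumerate(x, start=1):
        total += term
        means.append(total / n)
    return means


if __name__ == "__main__":
    x = [3 + 1 / n for n in range(1, 10001)]  # a sequence in c with limit L = 3
    print(cesaro_means(x)[-1])  # close to 3
    y = [(-1) ** n for n in range(1, 10001)]  # bounded, but not convergent
    print(max(abs(m) for m in cesaro_means(y)))  # at most ||y||_inf = 1
```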

(If part:) Since inf{|λn| : n ∈ N} > 0, we have |λk| ≥ inf{|λn| : n ∈ N} > 0, and so λk ≠ 0 for all k.

Moreover, sup{1/|λn| : n ∈ N} = 1/inf{|λn| : n ∈ N} < ∞, and so V : ℓ2 → ℓ2 given by
$$V(a_1, a_2, a_3, \cdots) := \Big(\frac{a_1}{\lambda_1}, \frac{a_2}{\lambda_2}, \frac{a_3}{\lambda_3}, \cdots\Big)$$
belongs to CL(ℓ2). Moreover for all (an)n∈N ∈ ℓ2 we have

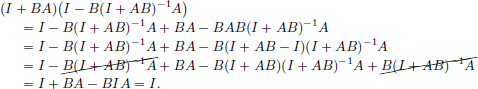

and so VΛ = I = ΛV. Hence Λ is invertible in CL(ℓ2), with Λ–1 = V.

(Only if part:) Let Λ be invertible in CL(ℓ2). Then there exists a Λ–1 ∈ CL(ℓ2) such that Λ–1Λ = I = ΛΛ–1. So ||x||2 = ||Λ–1Λx||2 ≤ ||Λ–1|| ||Λx||2, for all x ∈ ℓ2.

Hence (as ||Λ–1|| ≠ 0)
$$\|\Lambda x\|_2 \geq \frac{\|x\|_2}{\|\Lambda^{-1}\|}$$
for all x ∈ ℓ2. So with x := ek (kth term 1, others 0),
$$|\lambda_k| = \|\Lambda e_k\|_2 \geq \frac{1}{\|\Lambda^{-1}\|}, \quad k \in \mathbb{N}.$$
Thus
$$\inf\{|\lambda_n| : n \in \mathbb{N}\} \geq \frac{1}{\|\Lambda^{-1}\|} > 0.$$

We have

Similarly,

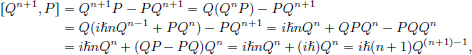

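(1) If there exist d × d matrices A, B such that AB – BA = I, then, taking traces and using tr(AB) = tr(BA),
$$d = \operatorname{tr}(I) = \operatorname{tr}(AB - BA) = \operatorname{tr}(AB) - \operatorname{tr}(BA) = 0,$$
a contradiction.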
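The key fact in part (1), tr(AB) = tr(BA) for square matrices, is easy to confirm on examples. The Python sketch below uses integer matrices so that the arithmetic is exact. The matrices are arbitrary examples of our own.

```python
def matmul(A, B):
    """Product of matrices given as nested lists."""
    return [
        [sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
        for i in range(len(A))
    ]


def trace(M):
    return sum(M[i][i] for i in range(len(M)))


def trace_of_commutator(A, B):
    """tr(AB - BA), which is always 0 for square matrices."""
    return trace(matmul(A, B)) - trace(matmul(B, A))


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[0, 1], [5, 6]]
    print(trace_of_commutator(A, B))  # 0, whereas tr(I) = 2
```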

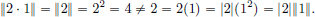

(2) If n = 1, then ABⁿ – BⁿA = AB – BA = I = 1 · B⁰ = nBⁿ⁻¹.

If for some n ∈ N, we have ABⁿ – BⁿA = nBⁿ⁻¹, then
$$AB^{n+1} - B^{n+1}A = (AB^n - B^nA)B + B^n(AB - BA) = nB^{n-1}B + B^n I = (n+1)B^n,$$
and so the result follows by induction.

Suppose that AB – BA = I. Then for all n ∈ N, ABⁿ – BⁿA = nBⁿ⁻¹. Taking operator norm on both sides yields
$$n\|B^{n-1}\| = \|AB^n - B^nA\| \leq 2\|A\|\,\|B\|\,\|B^{n-1}\|. \tag{7.3}$$
We claim that Bⁿ⁻¹ ≠ 0 for all n ∈ N. Indeed, if n = 1, then B⁰ := I ≠ 0. If Bⁿ⁻¹ ≠ 0 for some n ∈ N, then Bⁿ = 0 gives the contradiction that
$$nB^{n-1} = AB^n - B^nA = 0, \quad\text{that is,}\quad B^{n-1} = 0,$$
and so we must have Bⁿ ≠ 0 too. By induction, our claim is proved. Thus in (7.3), we may cancel ||Bⁿ⁻¹|| > 0 on both sides of the inequality, obtaining n ≤ 2||A|| ||B|| for all n ∈ N, which is absurd. Consequently, our original assumption that AB − BA = I must be false.
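Unbounded operators can satisfy AB − BA = I. Assume, as is standard, that A = d/dx and B is multiplication by x, both acting on polynomials. This is an assumption: the operators of part (3) are not legible in this copy. Under it, the identity ABⁿ − BⁿA = nBⁿ⁻¹ from part (2) can be checked exactly on coefficient lists, where c[i] is the coefficient of xⁱ. All helper names are our own.

```python
def D(c):
    """Derivative of the polynomial sum c[i] x^i."""
    return [i * c[i] for i in range(1, len(c))]


def X(c):
    """Multiplication by x."""
    return [0] + list(c)


def X_pow(c, n):
    for _ in range(n):
        c = X(c)
    return c


def trim(c):
    """Drop trailing zero coefficients (the zero polynomial becomes [])."""
    c = list(c)
    while c and c[-1] == 0:
        c.pop()
    return c


def sub(p, q):
    m = max(len(p), len(q))
    p = list(p) + [0] * (m - len(p))
    q = list(q) + [0] * (m - len(q))
    return [s - t for s, t in zip(p, q)]


def lhs(c, n):
    """(A B^n - B^n A) c with A = d/dx and B = multiplication by x."""
    return trim(sub(D(X_pow(c, n)), X_pow(D(c), n)))


def rhs(c, n):
    """n B^(n-1) c."""
    return trim([n * t for t in X_pow(c, n - 1)])


if __name__ == "__main__":
    c = [2, 0, 3]  # 2 + 3x^2
    print(lhs(c, 3), rhs(c, 3))  # both are the coefficients of 6x^2 + 9x^4
```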

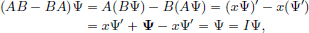

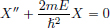

(3)If Ψ ∈ C∞(R), then

and so AB − BA = I.

Solution to Exercise 2.23, page 87

(1)For x = (x1, x2) ∈ R2, we have, using the Cauchy-Schwarz inequality, that

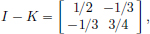

So

By the Neumann Series Theorem, (I − K)−1 exists in CL(R2).

So there is a unique solution x ∈ R2 to (I − K)x = y, given by x = (I − K)−1y.
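The Neumann series also suggests how to compute the solution: the partial sums of y + Ky + K²y + ··· converge to (I − K)–1y. The 2 × 2 matrix K below is a hypothetical example with operator norm less than 1. The exercise’s K and y are not reproduced in this copy. The sketch checks the residual (I − K)x − y.

```python
def mat_vec(K, x):
    return [sum(K[i][j] * x[j] for j in range(len(x))) for i in range(len(K))]


def neumann_solve(K, y, terms=200):
    """Approximate x = (I - K)^{-1} y by the partial sum y + Ky + K^2 y + ... (terms summands)."""
    x = [0.0] * len(y)
    term = [float(t) for t in y]
    for _ in range(terms):
        x = [s + t for s, t in zip(x, term)]
        term = mat_vec(K, term)
    return x


def residual(K, x, y):
    """Components of (I - K)x - y."""
    Kx = mat_vec(K, x)
    return [xi - kxi - yi for xi, kxi, yi in zip(x, Kx, y)]


if __name__ == "__main__":
    K = [[0.2, 0.1], [0.0, 0.3]]  # hypothetical K with operator norm < 1
    y = [1.0, 2.0]
    x = neumann_solve(K, y)
    print(x, residual(K, x, y))
```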

(2)We have  and so


Thus

(3)A computer program yielded the following numerical values:

Solution to Exercise 2.24, page 88

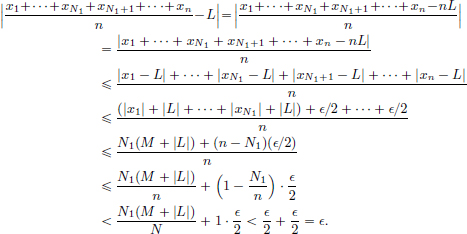

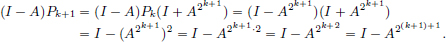

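If n = 1, then
$$(I - A)P_1 = (I - A)(I + A)(I + A^2) = (I - A^2)(I + A^2) = I - A^4 = I - A^{2^{1+1}}.$$
If the claim is true for some k ∈ N, then
$$(I - A)P_{k+1} = (I - A)P_k\,(I + A^{2^{k+1}}) = (I - A^{2^{k+1}})(I + A^{2^{k+1}}) = I - A^{2^{k+2}}.$$
So the claim follows by induction for all n ∈ N.

The sequence (I − A^(2^(n+1)))n∈N converges to I in CL(X) since ||A|| < 1 and
$$\|A^{2^{n+1}}\| \leq \|A\|^{2^{n+1}} \xrightarrow{n \to \infty} 0.$$
Also, since ||A|| < 1, I − A is invertible in CL(X). We have
$$(I - A)^{-1}(I - A^{2^{n+1}}) \xrightarrow{n \to \infty} (I - A)^{-1}(I - 0) = (I - A)^{-1},$$
and so ((I − A)−1(I − A^(2^(n+1))))n∈N = ((I − A)−1(I − A)Pn)n∈N = (Pn)n∈N is convergent with limit (I − A)−1.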
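The product formula can be checked numerically. Here Pn = (I + A)(I + A²)(I + A⁴)···(I + A^(2^n)), as in the case n = 1 above. The sketch verifies (I − A)P1 = I − A⁴ and shows that P5 is already very close to (I − A)–1. The matrix A is a hypothetical example with ||A|| < 1, for which (I − A)–1 = [2 1/3; 0 5/3].

```python
def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def P(A, n):
    """P_n = (I + A)(I + A^2)(I + A^4) ... (I + A^(2^n))."""
    size = len(A)
    result, power = identity(size), A  # power runs through A^(2^j), j = 0, ..., n
    for _ in range(n + 1):
        result = matmul(result, add(identity(size), power))
        power = matmul(power, power)
    return result


if __name__ == "__main__":
    A = [[0.5, 0.1], [0.0, 0.4]]  # hypothetical A with ||A|| < 1
    print(P(A, 5))  # close to (I - A)^{-1} = [[2, 1/3], [0, 5/3]]
```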

Solution to Exercise 2.25, page 88

(1)Let T0 ∈ GL(X). Then T0−1 ∈ CL(X), and also r := ||T0−1|| ≠ 0.

If T ∈ CL(X) is such that ||T − T0|| < 1/r, then in particular,
$$\|(T - T_0)T_0^{-1}\| \leq \|T - T_0\|\, \|T_0^{-1}\| < \frac{1}{r}\cdot r = 1,$$

and so by the Neumann Series Theorem, I + (T − T0)T0−1 belongs to GL(X).

But as T0 ∈ GL(X) too, it now follows that
$$T = \big(I + (T - T_0)T_0^{-1}\big)T_0 \in GL(X).$$

This completes the proof that GL(X) is an open subset of CL(X).

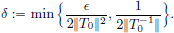

(2) Let T0 ∈ GL(X) and ε > 0. Set

Let T ∈ CL(X) be such that ||T − T0|| < δ.

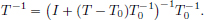

Then in particular ||T − T0|| < 1/||T0−1||, and so by part (1), T ∈ GL(X), with

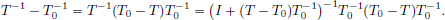

Moreover, we have

Thus using the estimate from the Neumann Series Theorem,

Solution to Exercise 2.26, page 92

A2 = B2 = 0, and so A, B are nilpotent.

Hence  and


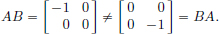

We note that

Also,

We have  and

and  Thus


and so
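The matrices A and B of this exercise are not legible in this copy. As an illustration, consider the hypothetical pair A = [0 1; 0 0] and B = [0 0; 1 0], which satisfy A² = B² = 0. A truncated exponential series confirms e^A = I + A and e^B = I + B. It also shows that e^(A+B) = [cosh 1, sinh 1; sinh 1, cosh 1] differs from e^A e^B = [2 1; 1 1], because A and B do not commute. Truncating the series after 30 terms is our own choice.

```python
import math


def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def expm_series(M, terms=30):
    """Truncated exponential series I + M + M^2/2! + ... + M^(terms-1)/(terms-1)!."""
    n = len(M)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, M)]
        result = add(result, term)
    return result


A = [[0.0, 1.0], [0.0, 0.0]]  # hypothetical: A^2 = 0
B = [[0.0, 0.0], [1.0, 0.0]]  # hypothetical: B^2 = 0

if __name__ == "__main__":
    print(expm_series(A))                          # I + A
    print(matmul(expm_series(A), expm_series(B)))  # [[2, 1], [1, 1]]
    print(expm_series(add(A, B)))                  # approx [[cosh 1, sinh 1], [sinh 1, cosh 1]]
```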

Solution to Exercise 2.27, page 94

Suppose that a Banach space X has a countably infinite Hamel basis {x1, x2, x3, ··· }. By normalising, we can ensure that ||xn|| = 1 for all n ∈ N. Let Fn := span{x1, x2, ···, xn}. Then each Fn is a finite dimensional normed space (with the norm induced from X), and so it is a Banach space. It follows that Fn is a closed subspace of X. Since {x1, x2, x3, ··· } is a Hamel basis, X = F1 ∪ F2 ∪ F3 ∪ ···. By the Baire Lemma, there is an n ∈ N such that Fn contains an open set U, and in particular, an open ball B(x, 2r) for some x ∈ X and some r > 0. The vector y := rxn+1 + x belongs to B(x, 2r) since ||y − x|| = ||rxn+1|| = r < 2r. Since y, x ∈ B(x, 2r) ⊂ Fn, and as Fn is a subspace, we conclude that (y − x)/r ∈ Fn too, that is, xn+1 ∈ Fn = span{x1, ···, xn}, a contradiction.

Solution to Exercise 2.28, page 96

In light of the Open Mapping Theorem, such a function must necessarily be nonlinear. If the function is constant on an open interval I, then the image f(I) will be a singleton, which is not open. The following function does the job:

If I := (−1, 1), then f(I) = {0}, which is not open. f is surjective and continuous, and its graph is depicted in the following picture.

Solution to Exercise 2.29, page 96

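From Exercise 1.38, page 44, X × Y is a Banach space. Since G(T) is a closed subspace of the Banach space X × Y, it is a Banach space too. Let us now consider the map p : G(T) → X defined by p(x, Tx) = x for x ∈ X. Then p is a linear transformation:
$$p\big((x_1, Tx_1) + (x_2, Tx_2)\big) = p\big(x_1 + x_2, T(x_1 + x_2)\big) = x_1 + x_2 = p(x_1, Tx_1) + p(x_2, Tx_2), \qquad p\big(\alpha (x, Tx)\big) = p\big(\alpha x, T(\alpha x)\big) = \alpha x = \alpha\, p(x, Tx),$$
for α ∈ K, x, x1, x2 ∈ X. Moreover, p is continuous because
$$\|p(x, Tx)\| = \|x\| \leq \max\{\|x\|, \|Tx\|\} = \|(x, Tx)\|, \quad x \in X.$$
p is also injective since if p(x, Tx) = 0, then x = 0, and so (x, Tx) = (0, 0).

Furthermore, if x ∈ X, then x = p(x, Tx), showing that p is surjective too.

Thus, p ∈ CL(G(T), X) is bijective, and hence (by the Open Mapping Theorem, as G(T) and X are Banach spaces) invertible in CL(G(T), X), with inverse p−1 ∈ CL(X, G(T)). Hence for all x ∈ X,
$$\|Tx\| \leq \max\{\|x\|, \|Tx\|\} = \|(x, Tx)\| = \|p^{-1}x\| \leq \|p^{-1}\|\, \|x\|,$$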

showing that T ∈ CL(X, Y).

Solution to Exercise 2.30, page 102

We have

Solution to Exercise 2.31, page 102

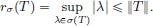

(1) We know that σ(T) ⊂ {λ ∈ C : |λ| ≤ ||T||}, and so ||T|| is an upper bound for {|λ| : λ ∈ σ(T)}. Thus
$$r_\sigma(T) = \sup\{|\lambda| : \lambda \in \sigma(T)\} \leq \|T\|.$$

(2)We have σ(TA) = {eigenvalues of A} = {1}, and so rσ(TA) = 1.

On the other hand, with  we have ||x1||2 = 1, and so

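The gap between rσ(TA) and ||TA|| closes asymptotically. By Gelfand’s spectral radius formula, rσ(T) = lim ||Tⁿ||^(1/n). The sketch below uses A = [1 1; 0 1] as a hypothetical stand-in (the exercise’s A is not legible here), whose only eigenvalue is 1. It uses the largest-absolute-row-sum norm, which is the operator norm induced by the max-norm on R². The formula holds for any operator norm. Since Aⁿ = [1 n; 0 1], we get ||Aⁿ|| = 1 + n and (1 + n)^(1/n) → 1.

```python
def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def row_sum_norm(M):
    """Operator norm of M induced by the max-norm on R^n (largest absolute row sum)."""
    return max(sum(abs(x) for x in row) for row in M)


def gelfand_estimates(A, ns):
    """Return {n: ||A^n||^(1/n)} for each n in ns."""
    wanted = set(ns)
    estimates, power = {}, A
    for n in range(1, max(wanted) + 1):
        if n > 1:
            power = matmul(power, A)
        if n in wanted:
            estimates[n] = row_sum_norm(power) ** (1.0 / n)
    return estimates


if __name__ == "__main__":
    A = [[1, 1], [0, 1]]  # hypothetical; only eigenvalue 1, but ||A|| = 2
    print(gelfand_estimates(A, [1, 10, 100, 1000]))  # decreases towards 1
```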

Solution to Exercise 2.32, page 103

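Suppose that λ² ∉ σ(T²). Then λ² ∈ ρ(T²), that is, λ²I − T² is invertible in CL(X). From the identity λ²I − T² = (λI − T)(λI + T) = (λI + T)(λI − T), we then obtain
$$(\lambda I - T)P = I \quad\text{and}\quad Q(\lambda I - T) = I, \quad\text{where } P := (\lambda I + T)(\lambda^2 I - T^2)^{-1},\ Q := (\lambda^2 I - T^2)^{-1}(\lambda I + T).$$
But then Q = QI = Q(λI − T)P = IP = P, and so P = Q ∈ CL(X) is the inverse of λI − T, a contradiction to the fact that λ ∈ σ(T).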

Solution to Exercise 2.33, page 103

If en ∈ ℓ2 denotes the sequence with the nth term equal to 1, and all others equal to 0, then Λen = λnen, and so each λn is an eigenvalue of Λ with eigenvector en ≠ 0. Thus {λn : n ∈ N} ⊂ σp(Λ).

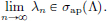

Next we will show that σ(Λ) ⊂ {λn : n ∈ N} ∪ {0}. To this end, suppose that μ ∉ {λn : n ∈ N} ∪ {0}. Then we claim that μI − Λ is invertible in CL(ℓ2). By a previous exercise, we know that in order to show the invertibility of the diagonal operator
$$\mu I - \Lambda : (a_1, a_2, a_3, \cdots) \mapsto \big((\mu - \lambda_1)a_1, (\mu - \lambda_2)a_2, (\mu - \lambda_3)a_3, \cdots\big),$$
it is enough to show that |μ − λn| is bounded away from 0. To see this, note that since λn → 0 as n → ∞, there is an N large enough such that |λn| < |μ|/2 for all n > N, and so
$$|\mu - \lambda_n| \geq |\mu| - |\lambda_n| > \frac{|\mu|}{2} \quad (n > N).$$
But also |μ − λ1|, ···, |μ − λN| are all positive, so that we do have
$$\inf_{n \in \mathbb{N}} |\mu - \lambda_n| \geq \min\Big\{|\mu - \lambda_1|, \cdots, |\mu - \lambda_N|, \frac{|\mu|}{2}\Big\} > 0.$$
Hence μI − Λ is invertible in CL(ℓ2), that is, μ ∈ ρ(Λ).

Thus σ(Λ) ⊂ {λn : n ∈ N} ∪ {0}.

But the spectrum σ(Λ) is closed, and since it contains σp(Λ) ⊃ {λn : n ∈ N}, it must contain the limit of (λn)n∈N, which is 0.

So we also obtain {λn : n ∈ N} ∪ {0} ⊂ σp(Λ) ∪ {0} ⊂ σ(Λ).

Thus σ(Λ) = {λn : n ∈ N} ∪ {0}.

Consequently, {λn : n ∈ N} ⊂ σp(Λ) ⊂ {λn : n ∈ N} ∪ {0} = σ(Λ).

Solution to Exercise 2.34, page 103

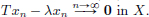

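(1) Suppose that λ ∈ σap(T). Then there exists a sequence (xn)n∈N of vectors in X such that ||xn|| = 1 for all n ∈ N, and
$$\lim_{n\to\infty} \|(T - \lambda I)x_n\| = 0.$$
We will just prove that λ ∉ ρ(T), and so by definition it will follow that λ ∈ σ(T). Suppose, on the contrary, that λ ∈ ρ(T). Then T − λI is invertible in CL(X). Thus
$$1 = \|x_n\| = \|(T - \lambda I)^{-1}(T - \lambda I)x_n\| \leq \|(T - \lambda I)^{-1}\|\, \|(T - \lambda I)x_n\| \xrightarrow{n\to\infty} 0,$$
a contradiction. Consequently, λ ∉ ρ(T), that is, λ ∈ σ(T).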

(2) For k ∈ N, let ek denote the sequence in ℓ2 whose kth term is 1 and all other terms are zeros. Then ||ek||2 = 1, and Λek = λkek, so that
$$\|(\Lambda - \lambda_k I)e_k\|_2 = 0,$$
that is, taking the constant sequence (ek)n∈N, λk ∈ σap(Λ) for each k ∈ N. Consequently,

Solution to Exercise 2.35, page 103

Let λ ∈ C and Ψ ∈ DQ be such that xΨ(x) = λΨ(x) for almost all x ∈ R, that is, (x − λ)Ψ(x) = 0 for almost all x ∈ R. Now x − λ ≠ 0 for all x ∈ R\{λ}. Hence for almost all x ∈ R, Ψ(x) = 0, that is, Ψ = 0 in L2(R). Consequently, λ can’t be an eigenvalue of Q, and so σp(Q) = ∅.

Solution to Exercise 2.36, page 105

For simplicity we’ll assume K = R. If a = (an)n∈N ∈ ℓ1, then define the functional φa ∈ CL(c0, R) = (c0)′ by
$$\varphi_a(b) := \sum_{n=1}^{\infty} a_n b_n, \quad b = (b_n)_{n\in\mathbb{N}} \in c_0.$$
Then a ↦ φa : ℓ1 → (c0)′ is an injective linear transformation, and it is also continuous because |φa(b)| ≤ ||b||∞ ||a||1 for all b ∈ c0, and so ||φa|| ≤ ||a||1. To see the surjectivity of this map, we need to show that given φ ∈ (c0)′, there exists an a ∈ ℓ1 such that φ = φa. Let en ∈ c0 be the sequence with nth term 1 and all others 0. Set a = (φ(e1), φ(e2), φ(e3), ···). We’ll show that a ∈ ℓ1, and that φ = φa.

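Define the scalars αn, n ∈ N, by
$$\alpha_n := \begin{cases} \dfrac{|\varphi(e_n)|}{\varphi(e_n)} & \text{if } \varphi(e_n) \neq 0, \\ 0 & \text{if } \varphi(e_n) = 0. \end{cases}$$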

Then for all n we have αnφ(en) = |φ(en)|.

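We have ||(α1, ···, αn, 0, ···)||∞ ≤ 1, and so
$$\sum_{k=1}^{n} |\varphi(e_k)| = \sum_{k=1}^{n} \alpha_k \varphi(e_k) = \varphi(\alpha_1 e_1 + \cdots + \alpha_n e_n) \leq \|\varphi\|\, \|(\alpha_1, \cdots, \alpha_n, 0, \cdots)\|_\infty \leq \|\varphi\|$$
for all n ∈ N. Hence a ∈ ℓ1.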

Finally, we need to show φ = φa. Let b = (bn)n∈N ∈ c0 and ε > 0. Then there exists an N such that for all n > N, |bn| < ε. Set bε := (b1, ···, bN, 0, ···) ∈ c0. Then ||b − bε||∞ = ||(0, ··· , 0, bN+1, ···)||∞ ≤ ε. Moreover, we have that
$$\varphi(b_\varepsilon) = \sum_{n=1}^{N} b_n \varphi(e_n) = \varphi_a(b_\varepsilon).$$
Hence
$$|\varphi(b) - \varphi_a(b)| \leq |\varphi(b - b_\varepsilon)| + |\varphi_a(b_\varepsilon - b)| \leq (\|\varphi\| + \|a\|_1)\, \varepsilon.$$
As the choice of ε > 0 was arbitrary, it follows that φ(b) = φa(b) for all b ∈ c0, that is, φ = φa.

Solution to Exercise 2.37, page 105

(1)BV [a, b] is a vector space: We prove that BV [a, b] is a subspace of the vector space R[a,b] of all real valued functions on [a, b] with pointwise operations.

(S1)The zero function 0 belongs to BV [a, b].

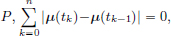

Indeed, for any partition P : a = t0 < t1 < ··· < tn = b,
$$\sum_{k=1}^{n} |0(t_k) - 0(t_{k-1})| = 0,$$
and so var(0) = 0 < ∞.

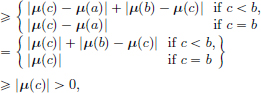

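(S2) Let μ1, μ2 ∈ BV [a, b]. Then for any partition P : a = t0 < t1 < ··· < tn = b, we have
$$\sum_{k=1}^{n} |(\mu_1 + \mu_2)(t_k) - (\mu_1 + \mu_2)(t_{k-1})| \leq \sum_{k=1}^{n} |\mu_1(t_k) - \mu_1(t_{k-1})| + \sum_{k=1}^{n} |\mu_2(t_k) - \mu_2(t_{k-1})| \leq \operatorname{var}(\mu_1) + \operatorname{var}(\mu_2),$$
so that var(μ1 + μ2) ≤ var(μ1) + var(μ2) < ∞, and so μ1 + μ2 ∈ BV [a, b].

(S3) Let α ∈ R and μ ∈ BV [a, b]. Then for any partition P as above,
$$\sum_{k=1}^{n} |(\alpha\mu)(t_k) - (\alpha\mu)(t_{k-1})| = |\alpha| \sum_{k=1}^{n} |\mu(t_k) - \mu(t_{k-1})|,$$
so that var(αμ) = |α| var(μ) < ∞, and so αμ ∈ BV [a, b].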

(2) We show that μ ↦ ||μ|| := |μ(a)| + var(μ) defines a norm on BV [a, b].

(N1) If μ ∈ BV [a, b], then ||μ|| = |μ(a)| + var(μ) ≥ 0.

Let μ ∈ BV [a, b] be such that ||μ|| = 0. Then var(μ) = 0, and |μ(a)| = 0.

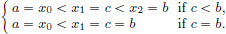

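Hence μ(a) = 0. Suppose that μ ≠ 0. Then there exists a c ∈ [a, b] such that μ(c) ≠ 0. Clearly c ≠ a, since μ(a) = 0. Now consider the partition P given by a < c < b if c < b (or simply a < b if c = b).

Then
$$\operatorname{var}(\mu) \geq |\mu(c) - \mu(a)| = |\mu(c)| > 0,$$
a contradiction. Hence μ = 0.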

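(N2) Let α ∈ R and μ ∈ BV [a, b]. Then αμ ∈ BV [a, b], and we have seen earlier that var(αμ) = |α| var(μ). Hence
$$\|\alpha\mu\| = |\alpha\mu(a)| + \operatorname{var}(\alpha\mu) = |\alpha|\big(|\mu(a)| + \operatorname{var}(\mu)\big) = |\alpha|\, \|\mu\|.$$
(N3) Let μ1, μ2 ∈ BV [a, b]. Then μ1 + μ2 ∈ BV [a, b], and we’ve seen above that var(μ1 + μ2) ≤ var(μ1) + var(μ2). Thus
$$\|\mu_1 + \mu_2\| = |\mu_1(a) + \mu_2(a)| + \operatorname{var}(\mu_1 + \mu_2) \leq |\mu_1(a)| + \operatorname{var}(\mu_1) + |\mu_2(a)| + \operatorname{var}(\mu_2) = \|\mu_1\| + \|\mu_2\|.$$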

Consequently BV [a, b] is a normed space with the norm ||·||.

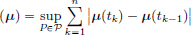

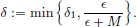

(3) Let x ∈ C[a, b] and μ ∈ BV [a, b]. Given ε > 0, let δ > 0 be such that for every partition P satisfying δP < δ, we have

Then

As the choice of ε > 0 was arbitrary, it follows that

(4) For all x ∈ C[a, b], |φμ(x)| ≤ ||x||∞ var(μ).

From the linearity of the Riemann-Stieltjes integral, it follows that φμ is a linear transformation from C[a, b] to R. From the above estimate, we also see that φμ is continuous. Consequently φμ ∈ CL(C[a, b], R) = (C[a, b])′.

Moreover ||φμ|| ≤ var(μ).

(5) We will show that (x ↦ x(a)) = φμ, where

First of all, μ ∈ BV [a, b], since var(μ) = 1 < ∞.

Let x ∈ C[a, b] and ε > 0. Since x is continuous at a, let δ > 0 be such that for all t ∈ [a, b] with t − a < δ, we have |x(t) − x(a)| < ε.

Then for all partitions P with δP < δ, we have

where the last inequality follows from the fact that |a − t1| ≤ δP < δ.

So φμ(x) = x(a) for all x ∈ C[a, b], that is, (x ↦ x(a)) = φμ. (μ is not unique: for any c ∈ R, μ + c also works!)
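The definition of μ in part (5) is not shown in this excerpt. The Python sketch below therefore assumes a hypothetical choice consistent with var(μ) = 1: μ(a) = 0 and μ(t) = 1 for a < t ≤ b. With this μ, only the first increment μ(t1) − μ(t0) = 1 of a Riemann–Stieltjes sum is nonzero, so the sum reduces to x(ξ1) with ξ1 ∈ [a, t1]. This tends to x(a) as the mesh shrinks. Replacing μ by μ + c leaves every increment, and hence every sum, unchanged, which matches the remark on non-uniqueness.

```python
import math
from typing import Callable, Sequence

Func = Callable[[float], float]


def uniform_partition(a: float, b: float, n: int) -> list[float]:
    return [a + (b - a) * k / n for k in range(n + 1)]


def rs_sum(x: Func, mu: Func, partition: Sequence[float], tags: Sequence[float]) -> float:
    """Riemann-Stieltjes sum: sum_k x(xi_k) * (mu(t_k) - mu(t_{k-1}))."""
    return sum(x(xi) * (mu(t1) - mu(t0)) for xi, t0, t1 in zip(tags, partition, partition[1:]))


def step_at(a: float, c: float = 0.0) -> Func:
    """Hypothetical mu: mu(a) = c and mu(t) = c + 1 for t > a, so var(mu) = 1."""
    return lambda t: c if t == a else c + 1.0


if __name__ == "__main__":
    a, b = 0.0, 1.0
    for n in (10, 100, 10_000):
        P = uniform_partition(a, b, n)
        # With right-endpoint tags xi_k = t_k, the sum equals x(t_1), and x(t_1) -> x(a).
        print(n, rs_sum(math.exp, step_at(a), P, P[1:]), "->", math.exp(a))
```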

Solution to Exercise 2.38, page 109

On the one-dimensional subspace Y := span{x∗} ⊂ X, we have a continuous linear map φ : Y → C. (Simply define φ(αx∗) = α. Then |φ(αx∗)| = |α| = ||αx∗||/||x∗||, and so ||φ|| = 1/||x∗|| < ∞.) By the Hahn-Banach Theorem, there exists an extension φ∗ ∈ CL(X, C) of φ, and so φ∗(x∗) = φ(x∗) = 1 ≠ 0. (Alternatively, one could just use Corollary 2.7, page 109, with x = x∗ and y = 0: there exists a functional φ∗ ∈ CL(X, C) such that φ∗(x∗) ≠ φ∗(0) = 0.)

Solution to Exercise 2.39, page 115

Consider the collection P of all linearly independent subsets S ⊂ X. Consider the partial order which is simply set inclusion ⊂. Then every chain in P has an upper bound, as explained below.

If C is a chain in P, then $U := \bigcup_{S \in C} S$ is an upper bound of C.

We just need to show the linear independence of this set U. To this end, let v1, ···, vn be any set of vectors from U for which there exist scalars α1, ···, αn in F such that α1v1 + ··· + αnvn = 0. Let the sets S1, ···, Sn ∈ C be such that v1 ∈ S1, ···, vn ∈ Sn. As C is a chain, we can arrange the finitely many Sks in “ascending order”, and there exists a k∗ ∈ {1, ···, n} such that S1, ···, Sn ⊂ Sk∗. Then v1, ···, vn ∈ Sk∗. But by the linear independence of Sk∗, we conclude that α1 = ··· = αn = 0. Thus U is linearly independent, showing that every chain in P has an upper bound.

By Zorn’s Lemma, P has a maximal element B. We claim that span B = X. For if not, then there exists an x ∈ X \ span B. We will show that B′ := B ∪ {x} is linearly independent. Suppose that α1, ···, αn, α ∈ F and v1, ···, vn ∈ B are such that αx + α1v1 + ··· + αnvn = 0. First we note that α = 0, since otherwise
$$x = -\frac{1}{\alpha}\left(\alpha_1 v_1 + \cdots + \alpha_n v_n\right) \in \operatorname{span} B,$$
which is false. As α = 0, the equality αx + α1v1 + ··· + αnvn = 0 now becomes α1v1 + ··· + αnvn = 0. But by the independence of the set B, we conclude that α1 = ··· = αn = 0 too. Hence B′ is linearly independent, and so B′ belongs to P. As B′ = B ∪ {x} ⊋ B (note that x ∉ B, since x ∉ span B), we obtain a contradiction to the maximality of B. Consequently, span B = X, and as B ∈ P, B is also linearly independent.

Solution to Exercise 2.40, page 115

Let B = {vi : i ∈ I}. Every x ∈ X has a unique decomposition
$$x = \alpha_1 v_{i_1} + \cdots + \alpha_n v_{i_n}$$

for some finite number of indices i1, ···, in ∈ I and scalars α1, ···, αn in F. Define F(x) = α1f(vi1) + ··· + αnf(vin). It is clear that F(vi) = f(vi), i ∈ I. Let us check that F : X → Y is linear.

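(L1) Given x1, x2 ∈ X, there exist scalars α1, ···, αn and β1, ···, βn (possibly several of them equal to zero) and indices i1, ···, in ∈ I, such that
$$x_1 = \alpha_1 v_{i_1} + \cdots + \alpha_n v_{i_n} \quad\text{and}\quad x_2 = \beta_1 v_{i_1} + \cdots + \beta_n v_{i_n}.$$
Then x1 + x2 = (α1 + β1)vi1 + ··· + (αn + βn)vin, and so
$$F(x_1 + x_2) = \sum_{k=1}^{n} (\alpha_k + \beta_k) f(v_{i_k}) = \sum_{k=1}^{n} \alpha_k f(v_{i_k}) + \sum_{k=1}^{n} \beta_k f(v_{i_k}) = F(x_1) + F(x_2).$$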

(L2) Let α ∈ F. Given x ∈ X, there exist β1, ···, βn ∈ F and i1, ···, in ∈ I such that x = β1vi1 + ··· + βnvin. Then αx = (αβ1)vi1 + ··· + (αβn)vin, and so F(αx) = (αβ1)f(vi1) + ··· + (αβn)f(vin) = αF(x).

Solution to Exercise 2.41, page 115

Let B be a Hamel basis for X. As X is infinite dimensional, B is an infinite set. Let {vn : n ∈ N} be a countable subset of B. Let y∗ ∈ Y be any nonzero vector.

Let f : B → Y be defined by

By the previous exercise, this f extends to a linear transformation F from X to Y. We claim that F ∉ CL(X, Y). Suppose, on the contrary, that F ∈ CL(X, Y). Then there exists an M > 0 such that for all x ∈ X, ||F(x)|| ≤ M||x||. But if we put x = vn, n ∈ N, this yields
$$n\|v_n\|\,\|y_*\| = \|f(v_n)\| = \|F(v_n)\| \le M\|v_n\|,$$
and so, since ||vn|| > 0 (basis vectors are nonzero), n ≤ M/||y∗|| for all n ∈ N, which is absurd. Thus F is a linear transformation from X to Y, but is not continuous.

Solution to Exercise 2.42, page 115

If R were finite dimensional, say d-dimensional over Q, then there would exist a one-to-one correspondence between R and Qd. But Qd is countable, while R isn’t, a contradiction. So R is an infinite dimensional vector space over Q.

Suppose that R has a countable basis B = {vn : n ∈ N} over Q.

We will define an injective map $f : \mathbb{R} \to \bigcup_{n \in \mathbb{N}} \mathbb{Q}^n$, yielding a contradiction.

Set f(0) := 0 ∈ Q^1. If x ≠ 0, then x has a unique decomposition x = q1v1 + ··· + qnvn, where q1, ···, qn ∈ Q and qn ≠ 0. In this case, set f(x) = (q1, ···, qn) ∈ Q^n. If f(x) = f(y) = (q1, ···, qn) for some x, y ∈ R, then x = q1v1 + ··· + qnvn = y. So f is injective.

As $\bigcup_{n \in \mathbb{N}} \mathbb{Q}^n$ is countable (a countable union of countable sets) and f is injective, it follows that R is countable too, a contradiction.

Hence B can’t be countable.

Solution to Exercise 2.43, page 115

The set R is an infinite dimensional vector space over Q. Let B = {vi : i ∈ I} be a Hamel basis for this vector space. Fix any i∗ ∈ I.

We define a function f : B → R on the basis elements:

Let F be an extension of f from B to R, as provided by Exercise 2.40, page 115. Then F is linear over Q, and in particular additive. So F(x + y) = F(x) + F(y) for all x, y ∈ R.

We now show that F is not continuous on R: for otherwise, for any vi ≠ vi∗, if (qn)n∈N is a sequence in Q converging to the real number vi/vi∗ (vi∗ ≠ 0 since it is a basis vector), then we would have

a contradiction!

Solution to Exercise 2.44, page 116

(1)By the Algebra of Limits, the map l is linear.

Let (xn)n∈N ∈ c. For all n ∈ N, |xn| ≤ ||(xn)n∈N||∞.

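Passing to the limit as n → ∞, we obtain $|l((x_n)_{n\in\mathbb{N}})| = \lim_{n\to\infty} |x_n| \le \|(x_n)_{n\in\mathbb{N}}\|_\infty$.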

Thus l ∈ CL(c, K).

(2)Y is a subspace of ℓ∞. Indeed we have:

(S1) Clearly (0)n∈N ∈ Y, since $\lim_{n\to\infty} \frac{0 + \cdots + 0}{n} = 0$ exists.

(S2)Let (xn)n∈N, (yn)n∈N ∈ Y.

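Then $\lim_{n\to\infty} \frac{x_1 + \cdots + x_n}{n}$ and $\lim_{n\to\infty} \frac{y_1 + \cdots + y_n}{n}$ exist.

As
$$\frac{(x_1 + y_1) + \cdots + (x_n + y_n)}{n} = \frac{x_1 + \cdots + x_n}{n} + \frac{y_1 + \cdots + y_n}{n},$$
we conclude that $\lim_{n\to\infty} \frac{(x_1 + y_1) + \cdots + (x_n + y_n)}{n}$ exists as well.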

Thus (xn)n∈N + (yn)n∈N ∈ Y too.

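(S3) Let (xn)n∈N ∈ Y and α ∈ K. Then $\lim_{n\to\infty} \frac{x_1 + \cdots + x_n}{n}$ exists.

As $\frac{\alpha x_1 + \cdots + \alpha x_n}{n} = \alpha \cdot \frac{x_1 + \cdots + x_n}{n}$, it follows that
$$\lim_{n\to\infty} \frac{\alpha x_1 + \cdots + \alpha x_n}{n}$$
exists, and so α · (xn)n∈N ∈ Y.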

Consequently, Y is a subspace of ℓ∞.

(3)For all x ∈ ℓ∞, x − Sx ∈ Y : Let x = (xn)n∈N ∈ ℓ∞. Then we have

We have

As x ∈ ℓ∞, it follows that
$$\lim_{n\to\infty} \frac{(x - Sx)_1 + \cdots + (x - Sx)_n}{n} = 0,$$
and so x − Sx ∈ Y.

(4)If x = (xn)n∈N ∈ c, then Ax ∈ c, where A denotes the averaging operator (Exercise 2.19, page 77).

Hence $\lim_{n\to\infty} \frac{x_1 + \cdots + x_n}{n} = l(Ax)$ exists, and so x ∈ Y. Consequently, c ⊂ Y.

(5) Define L0 : Y → K by $L_0 (x_n)_{n\in\mathbb{N}} = \lim_{n\to\infty} \frac{x_1 + \cdots + x_n}{n}$.

Then it is easy to check that L0 : Y → K is a linear transformation.

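Moreover, if x ∈ Y, then
$$|L_0 x| = \lim_{n\to\infty} \left| \frac{x_1 + \cdots + x_n}{n} \right|.$$
But for every n ∈ N,
$$\left| \frac{x_1 + \cdots + x_n}{n} \right| \le \frac{|x_1| + \cdots + |x_n|}{n} \le \|x\|_\infty.$$
Hence |L0x| ≤ ||x||∞. Consequently, L0 ∈ CL(Y, K).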

We had seen that if x ∈ c, then Ax ∈ c, and that l(Ax) = l(x).

Hence for all x ∈ c, L0(x) = l(Ax) = l(x), that is, L0|c = l.

Using the Hahn-Banach Theorem, there exists an L ∈ CL(ℓ∞, K) such that L|Y = L0 (and ||L|| = ||L0||).

In particular, if x ∈ c, then x ∈ Y and so Lx = L0x = lx. Thus L|c = l.

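Also, if x = (xn)n∈N ∈ ℓ∞, then x − Sx ∈ Y, and by part (3) the averages of the first n terms of x − Sx tend to 0, so that L(x − Sx) = L0(x − Sx) = 0.

Thus Lx = LSx for all x ∈ ℓ∞, that is, L = LS.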
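The Python sketch below is not from the original solution. It illustrates, with exact rational arithmetic, the three facts used in parts (2)–(5):

- For x ∈ c, the averages (x1 + ··· + xn)/n approach l(x).
- The bounded, non-convergent sequence (1, 0, 1, 0, ...) lies in Y, and L0 assigns it the value 1/2.
- The averages of x − Sx tend to 0.

The shift S is not defined in this excerpt. The sketch assumes the left shift (x1, x2, ...) ↦ (x2, x3, ...), for which the sum of the first n terms of x − Sx telescopes to x1 − xn+1. All function names are hypothetical.

```python
from fractions import Fraction
from typing import Callable

Seq = Callable[[int], Fraction]  # a sequence given as n -> x_n, with n = 1, 2, 3, ...


def cesaro_mean(x: Seq, n: int) -> Fraction:
    """(x_1 + ... + x_n) / n, i.e. the n-th term of the averaging operator A applied to x."""
    return sum((x(k) for k in range(1, n + 1)), Fraction(0)) / n


def left_shift(x: Seq) -> Seq:
    """Assumed shift operator S: (x_1, x_2, x_3, ...) -> (x_2, x_3, ...)."""
    return lambda n: x(n + 1)


def difference(x: Seq, y: Seq) -> Seq:
    return lambda n: x(n) - y(n)


def alternating(n: int) -> Fraction:
    """(1, 0, 1, 0, ...): bounded and not convergent, but its averages converge to 1/2."""
    return Fraction(n % 2)


def convergent(n: int) -> Fraction:
    """x_n = 1 + 1/n, an element of c with limit 1."""
    return 1 + Fraction(1, n)


if __name__ == "__main__":
    print(cesaro_mean(alternating, 1000))  # 1/2
    d = difference(alternating, left_shift(alternating))
    # The partial sums of x - Sx telescope to x_1 - x_{n+1}, so the averages are O(1/n).
    print([str(cesaro_mean(d, n)) for n in (9, 10, 99, 100)])  # ['1/9', '0', '1/99', '0']
    print(float(cesaro_mean(convergent, 1000)))  # close to l(x) = 1
```

The bound |(x1 + ··· + xn)/n| ≤ ||x||∞ from part (5) is visible here too: every average of the alternating sequence lies in [0, 1].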

(6)We have

Consequently,

Solutions to the exercises from Chapter 3

Solution to Exercise 3.1, page 124

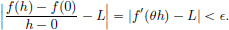

f is a continuous linear transformation. Thus it follows that f′(x0) = f for all x0, and in particular also for x0 = 0.

Solution to Exercise 3.2, page 125

Suppose that f′(x0) = L ∈ CL(X, Y). Let M > 0 be such that ||Lh|| ≤ M||h|| for all h ∈ X. Let ε > 0. Then there exists a δ1 > 0 such that whenever x ∈ X satisfies 0 < ||x − x0|| < δ1, we have
$$\frac{\|f(x) - f(x_0) - L(x - x_0)\|}{\|x - x_0\|} < \varepsilon.$$
So if x ∈ X satisfies ||x − x0|| < δ1, then ||f(x) − f(x0) − L(x − x0)|| ≤ ε||x − x0||.

Let $\delta := \min\left\{\delta_1, \frac{\varepsilon}{\varepsilon + M}\right\}$. Then for all x ∈ X satisfying ||x − x0|| < δ, we have
$$\|f(x) - f(x_0)\| \le \|f(x) - f(x_0) - L(x - x_0)\| + \|L(x - x_0)\| \le (\varepsilon + M)\|x - x_0\| < \varepsilon.$$

Hence f is continuous at x0.

Solution to Exercise 3.3, page 125

(Rough work: We have for x ∈ C1[0, 1] that

where L : C1[0, 1] → R is the map given by Lh = 2x′0(1)h′(1), h ∈ C1[0, 1]. So we make the guess that f′(x0) = L.)

Let us first check that L is a continuous linear transformation. L is linear because:

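(L1) For all h1, h2 ∈ C1[0, 1], we have $L(h_1 + h_2) = 2x_0'(1)\big(h_1'(1) + h_2'(1)\big) = 2x_0'(1)h_1'(1) + 2x_0'(1)h_2'(1) = Lh_1 + Lh_2$.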

(L2) For all h ∈ C1[0, 1] and α ∈ R, we have $L(\alpha h) = 2x_0'(1)\,\alpha h'(1) = \alpha \cdot 2x_0'(1)h'(1) = \alpha Lh$.

Also, L is continuous since for all h ∈ C1[0, 1], we have $|Lh| = 2|x_0'(1)|\,|h'(1)| \le 2|x_0'(1)|\,\|h'\|_\infty \le 2|x_0'(1)|\,\|h\|_{1,\infty}$.

So L is a continuous linear transformation. Moreover, for all x ∈ C1[0, 1],

so that

Given ε > 0, set δ =  . Then if x ∈ C1[0, 1] satisfies 0 < ||x − x0||1,∞ < δ, we have

Solution to Exercise 3.4, page 125

Given ε > 0, let ε′ > 0 be such that ε′||x2 − x1|| < ε. Let δ′ > 0 be such that whenever 0 < ||x − γ(t0)|| < δ′, we have

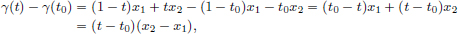

Let δ > 0 be such that δ||x2 − x1|| < δ′. For all t ∈ R satisfying 0 < |t − t0| < δ,

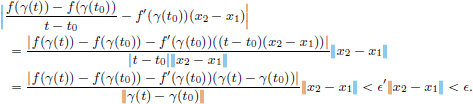

and so ||γ(t) − γ(t0)|| = |t − t0| ||x2 − x1|| ≤ δ||x2 − x1|| < δ′. Thus for all t ∈ R satisfying 0 < |t − t0| < δ, we have

Thus f ∘ γ is differentiable at t0 and

Suppose that g is not constant, and let x1, x2 ∈ X be such that g(x1) ≠ g(x2). With γ the same as above, we have for all t ∈ R that

So g ∘ γ is constant. Thus g(x2) = (g ∘ γ)(1) = (g ∘ γ)(0) = g(x1), a contradiction. Consequently, g is constant.

Solution to Exercise 3.5, page 128

Suppose that f′(x0) = 0. Then for every

In particular, setting h = x0, we have  giving x0 = 0 ∈ C[a, b].


Vice versa, if x0 = 0, then

for all h ∈ C[a, b], that is, f′(0) = 0.

Consequently, f′(x0) = 0 if and only if x0 = 0.

So we see that if x∗ is a minimiser, then f′(x∗) = 0, and so from the above x∗ = 0. We remark that 0 is easily seen to be the minimiser because

Solution to Exercise 3.6, page 129

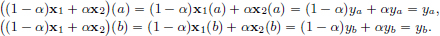

If x1, x2 ∈ S and α ∈ (0, 1), then x1, x2 ∈ C1[a, b], and so (1 − α)x1 + αx2 ∈ C1[a, b]. Moreover, as x1(a) = x2(a) = ya and x1(b) = x2(b) = yb, we also have that

Thus (1 − α)x1 + αx2 ∈ S. Consequently, S is convex.

Solution to Exercise 3.7, page 129

For x1, x2 ∈ X and α ∈ (0, 1) we have by the triangle inequality that

Thus || · || is convex.

Solution to Exercise 3.8, page 129

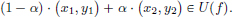

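(If part:) Let x1, x2 ∈ C and α ∈ (0, 1). Then (x1, f(x1)) ∈ U(f) and (x2, f(x2)) ∈ U(f). Since U(f) is convex,
$$(x, y) := (1 - \alpha)\cdot(x_1, f(x_1)) + \alpha \cdot (x_2, f(x_2)) = \big((1-\alpha)x_1 + \alpha x_2,\ (1-\alpha)f(x_1) + \alpha f(x_2)\big) \in U(f).$$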

Consequently, (1 − α)f(x1) + αf(x2) = y ≥ f(x) = f((1 − α) · x1 + α · x2). Hence f is convex.

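(Only if part:) Let (x1, y1), (x2, y2) ∈ U(f) and α ∈ (0, 1). Then we know that y1 ≥ f(x1) and y2 ≥ f(x2), and so, by the convexity of f,
$$(1-\alpha)y_1 + \alpha y_2 \ge (1-\alpha)f(x_1) + \alpha f(x_2) \ge f\big((1-\alpha)x_1 + \alpha x_2\big).$$
Consequently, $\big((1-\alpha)x_1 + \alpha x_2,\ (1-\alpha)y_1 + \alpha y_2\big) \in U(f)$, that is,
$$(1-\alpha)\cdot(x_1, y_1) + \alpha\cdot(x_2, y_2) \in U(f).$$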

So U(f) is convex.

Solution to Exercise 3.9, page 129

We prove this using induction on n. The result is trivially true when n = 1, and in fact we have equality in this case. Suppose the inequality has been established for some n ∈ N. If x1, ···, xn, xn+1 are n + 1 vectors, and  then


and so the claim follows for all n.

Solution to Exercise 3.10, page 130

We have for all x ∈ R

Thus f is convex.

(Alternatively, one could note that $(x, y) \mapsto \sqrt{x^2 + y^2}$ is a norm on R², and so it is convex. Now fixing y = 1 and keeping x variable (the map x ↦ (x, 1) is affine), we get convexity of $x \mapsto \sqrt{x^2 + 1}$.)
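The short Python sketch below is a numerical sanity check, not a proof, and is not part of the original solution. It evaluates the convexity gap (1 − α)f(x1) + αf(x2) − f((1 − α)x1 + αx2) for f(x) = √(1 + x²) on a grid of points. It computes f via `math.hypot(x, 1.0)`, which is exactly the Euclidean norm of (x, 1) used in the alternative argument above. The grid and the names `f` and `convexity_gap` are our choices. A calculus check gives the same conclusion: f″(x) = (1 + x²)^(−3/2) > 0.

```python
import math


def f(x: float) -> float:
    """f(x) = sqrt(1 + x^2), computed as the Euclidean norm of the vector (x, 1)."""
    return math.hypot(x, 1.0)


def convexity_gap(x1: float, x2: float, alpha: float) -> float:
    """(1 - alpha) f(x1) + alpha f(x2) - f((1 - alpha) x1 + alpha x2); nonnegative for convex f."""
    return (1 - alpha) * f(x1) + alpha * f(x2) - f((1 - alpha) * x1 + alpha * x2)


if __name__ == "__main__":
    grid = [k / 4 for k in range(-20, 21)]   # points in [-5, 5]
    alphas = [k / 10 for k in range(1, 10)]  # alpha in (0, 1)
    worst = min(convexity_gap(x1, x2, al) for x1 in grid for x2 in grid for al in alphas)
    print(worst)  # zero up to rounding (attained when x1 == x2)
    print(convexity_gap(-1.0, 1.0, 0.5))  # sqrt(2) - 1 > 0
```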

Solution to Exercise 3.11, page 132

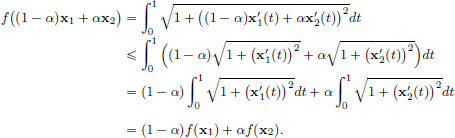

For x1, x2 ∈ C1[0, 1] and α ∈ (0, 1), we have, using the convexity of the function $\eta \mapsto \sqrt{1 + \eta^2}$ (Exercise 3.10, page 130), that

Solution to Exercise 3.12, page 133

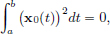

(If:) Suppose that x0(t) = 0 for all t ∈ [0, 1]. Then we have that for all h ∈ C[0, 1],

and so f′(x0) = 0.

(Only if:) Now suppose that f′(x0) = 0. Thus for every h ∈ C[0, 1], we have

In particular, taking h := x0 ∈ C[0, 1], we obtain

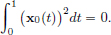

So  As x0 is continuous on [0, 1], it follows that x0 = 0.


By the necessary condition for x0 to be a minimiser, we have that f′(x0) = 0 and so x0 must be the zero function 0 on [0, 1]. Furthermore, as f is convex and f′(0) = 0, it follows that the zero function is a minimiser. Consequently, there exists a unique solution to the optimisation problem, namely the zero function 0 ∈ C[0, 1]. The conclusion is also obvious from the fact that for all x ∈ C[0, 1],

Solution to Exercise 3.13, page 141

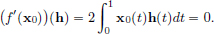

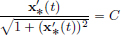

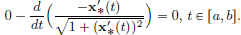

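We have $F(x, x', t) = \sqrt{1 + (x')^2}$. Then $\frac{\partial F}{\partial x} = 0$ and
$$\frac{\partial F}{\partial x'} = \frac{x'}{\sqrt{1 + (x')^2}}.$$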

The Euler-Lagrange equation is
$$\frac{d}{dt}\left( \frac{x_*'(t)}{\sqrt{1 + (x_*'(t))^2}} \right) = 0, \quad t \in [a, b].$$

Upon integrating, we obtain $\frac{x_*'(t)}{\sqrt{1 + (x_*'(t))^2}} = C$ on [a, b] for some constant C.

Thus $(x_*'(t))^2 = C^2\big(1 + (x_*'(t))^2\big)$ for all t ∈ [a, b]. Since $C^2 = \frac{(x_*'(t))^2}{1 + (x_*'(t))^2} < 1$, this gives $(x_*'(t))^2 = \frac{C^2}{1 - C^2} =: A$ for all t ∈ [a, b].

So A ≥ 0, and $x_*'(t) \in \{\sqrt{A}, -\sqrt{A}\}$ for each t ∈ [a, b]. As $x_*'$ is continuous, we can conclude that $x_*'$ must be either everywhere equal to $\sqrt{A}$, or everywhere equal to $-\sqrt{A}$. In either case, $x_*'$ is constant, and so x∗ is given by x∗(t) = αt + β, t ∈ [a, b]. Since x∗(a) = xa and x∗(b) = xb, we have
$$\alpha = \frac{x_b - x_a}{b - a}, \qquad \beta = \frac{b\,x_a - a\,x_b}{b - a},$$
and
$$x_*(t) = x_a + \frac{x_b - x_a}{b - a}\,(t - a) \quad \text{for all } t \in [a, b].$$

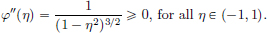

That this x∗ ∈ S is indeed a minimiser can be concluded by noticing that the map x ↦ L(γx) : S → R is convex, thanks to the convexity of the function $\eta \mapsto \sqrt{1 + \eta^2}$ on R (Exercise 3.10, page 130).

(The fact that x∗ is a minimiser is, of course, expected geometrically, since the straight line is the curve of shortest length between two points in the Euclidean plane.)
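The geometric remark can be checked numerically. The Python sketch below is our illustration and is not from the original text. It approximates arc length by the length of an inscribed polygon, comparing the straight line x∗(t) = xa + (xb − xa)(t − a)/(b − a) with perturbed curves x∗ + ε sin(π(t − a)/(b − a)). These curves vanish at the endpoints and so stay in S. The perturbation, the endpoint data (a, b, xa, xb) = (0, 1, 0, 2) and the function names are hypothetical choices.

```python
import math
from typing import Callable


def polygonal_length(x: Callable[[float], float], a: float, b: float, n: int = 10_000) -> float:
    """Length of the polygon through (t_k, x(t_k)) on a uniform partition; approximates arc length."""
    ts = [a + (b - a) * k / n for k in range(n + 1)]
    return sum(math.hypot(t1 - t0, x(t1) - x(t0)) for t0, t1 in zip(ts, ts[1:]))


def straight_line(a: float, b: float, xa: float, xb: float) -> Callable[[float], float]:
    """x*(t) = xa + (xb - xa)(t - a)/(b - a), the minimiser found above."""
    return lambda t: xa + (xb - xa) * (t - a) / (b - a)


if __name__ == "__main__":
    a, b, xa, xb = 0.0, 1.0, 0.0, 2.0
    x_star = straight_line(a, b, xa, xb)
    print(polygonal_length(x_star, a, b), math.hypot(b - a, xb - xa))  # both about sqrt(5)
    for eps in (0.3, 0.1, -0.1):
        # The perturbation vanishes at t = a and t = b, so x_eps satisfies the boundary conditions.
        x_eps = lambda t, e=eps: x_star(t) + e * math.sin(math.pi * (t - a) / (b - a))
        print(eps, polygonal_length(x_eps, a, b))  # strictly longer than the straight line
```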

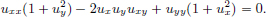

Solution to Exercise 3.14, page 141

We have

Solution to Exercise 3.15, page 141

With  we have


Then  and


The Euler-Lagrange equation is