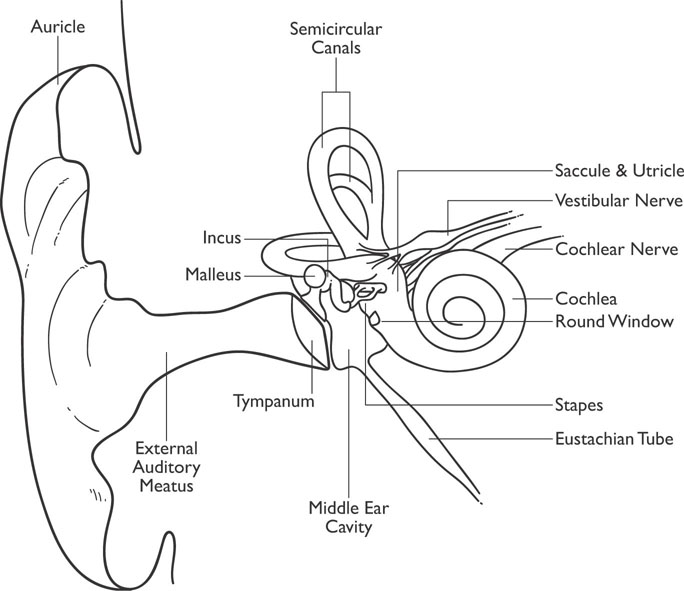

Figure 4-1: Diagram of the Ear

Audition and language play an extremely important part in your life. These senses and functions are involved in every facet of daily life, from communication to survival. Do your ears hear and does your mouth speak? Hopefully by now you are thinking differently about your senses. So the old Zen question, “If a tree falls in the forest and no one is there to hear it, does it make a noise?” should be easy to answer at this point. If the answer is not clear now, it will be after this chapter. Read on, grasshopper.

Three elderly gentlemen are out walking. The first one says, “Windy, isn’t it?” The second one says, “No, it’s Thursday!” The third one says, “So am I. Let’s go get a beer.”

Sound is an extremely important perception and plays a major role in directing behavior. Sounds are in reality vibrations of the molecules in the air that stimulate the auditory system. A person with “normal hearing” can detect sound waves between 20 and 20,000 Hz (hertz, or cycles per second), but humans are typically most sensitive to vibrations between 1,000 and 4,000 Hz. The frequency of the vibration determines its pitch (high or low). Dogs can hear pitches as high as 45,000 Hz, and some other animals can hear much higher frequencies. Figure 4-1 displays the anatomy of the ear for you to follow during the discussion of how these vibrations are interpreted as sound by the brain.

Sound waves travel through the air, reach the outer ear, and then travel down the auditory canal, where they stimulate the tympanic membrane (eardrum). The signal is then transmitted by means of three small bones (ossicles) known as the malleus (hammer), incus (anvil), and stapes (stirrup) to the cochlea and other inner ear organs. The hammer vibrations lead to vibrations of the anvil and ultimately to vibrations of the stirrup. The vibrations of the stapes then lead to vibrations of a membrane known as the oval window. The vibrations of the oval window transfer into the fluid contained in the snail-shaped cochlea. The fluid-filled cochlea is a coiled tube (hence the name cochlea, which means “land snail”) that has an internal membrane running through it. The internal membrane is the auditory receptor organ known as the organ of Corti. The vibrations from the oval window move through the organ of Corti as a wave. This organ contains two membranes: the basilar membrane and the tectorial membrane. Hair cells, which act as auditory receptors, are situated on the basilar membrane, and the tectorial membrane rests on them (sort of like a hair sandwich). The vibrations from the oval window stimulate these hair cells, and this stimulation makes the axons in the auditory nerve fire (the auditory nerve is a branch of cranial nerve VIII). As the cochlear fluid vibrates, the pressure is eventually dissipated by the round window, which is an elastic membrane in the cochlear wall. Figure 4-1 also displays the semicircular canals, which are part of the vestibular system.

The human cochlea is extremely sensitive and can detect tones that differ in frequency by only two-tenths of a percent. Different frequencies produce their maximum stimulation at different points along the basilar membrane, with higher frequencies stimulating the area closer to the oval window and lower frequencies stimulating areas farther along the membrane. This allows a person to be quite sensitive to differences in tone and pitch.
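To make the two-tenths of a percent figure concrete, here is a minimal arithmetic sketch. It assumes, purely for illustration, that the same percentage applies at every frequency; the function name and the example tones are hypothetical and not taken from the chapter.

```python
# Smallest detectable frequency step, assuming the chapter's figure of
# two-tenths of a percent holds uniformly (a simplification for illustration).
JND_FRACTION = 0.002  # two-tenths of a percent


def smallest_detectable_step(frequency_hz: float) -> float:
    """Return the frequency difference (in Hz) that is 0.2 percent of the tone."""
    return frequency_hz * JND_FRACTION


# Two tones from the range where human hearing is most sensitive.
for tone_hz in (1_000, 4_000):
    step = smallest_detectable_step(tone_hz)
    print(f"{tone_hz} Hz tone: a change of about {step:.0f} Hz is detectable")
```

Under that assumption, a listener could tell a 1,000 Hz tone from one only about 2 Hz higher or lower.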

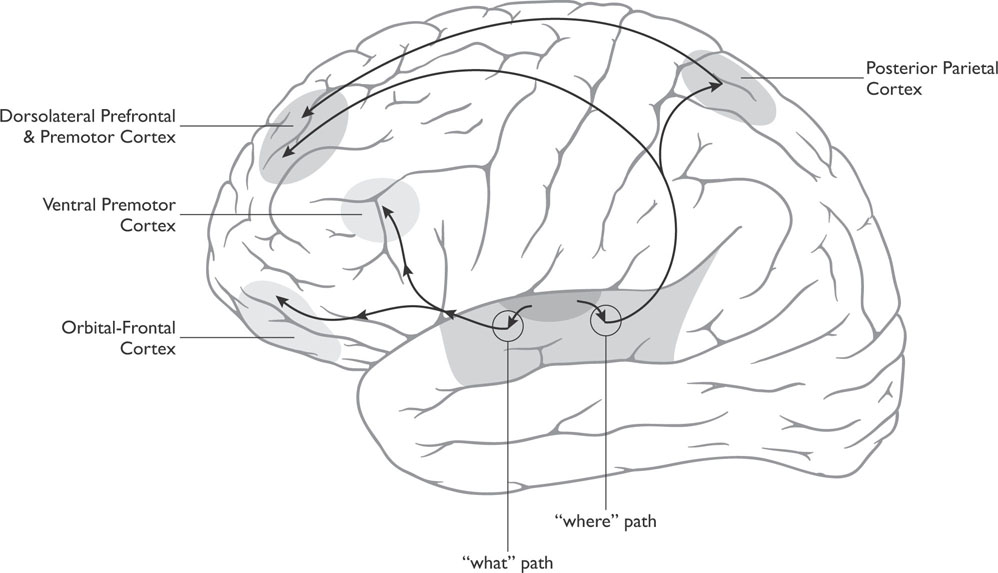

There is a network of auditory pathways from the inner ear to the auditory cortex located in the temporal lobe of the brain. The functions of the auditory cortex in humans are still not well understood, but projections from the cochlea lead to the superior olivary nuclei (also called the superior olives), located on both sides of the brain stem in the pons. These projections are largely lateralized such that sounds from the left ear are projected to the right superior olive and vice versa. Axons then project to the midbrain (to structures called the inferior colliculi, where the information is integrated) and then to the thalamus (to a thalamic area called the medial geniculate nucleus). The information is analyzed in the thalamus, and if deemed important, it is then sent on to the primary auditory cortex, which is located in the superior temporal lobe. Routing sound impulses through two stops before going to the thalamus assists you in locating the source of the sound.

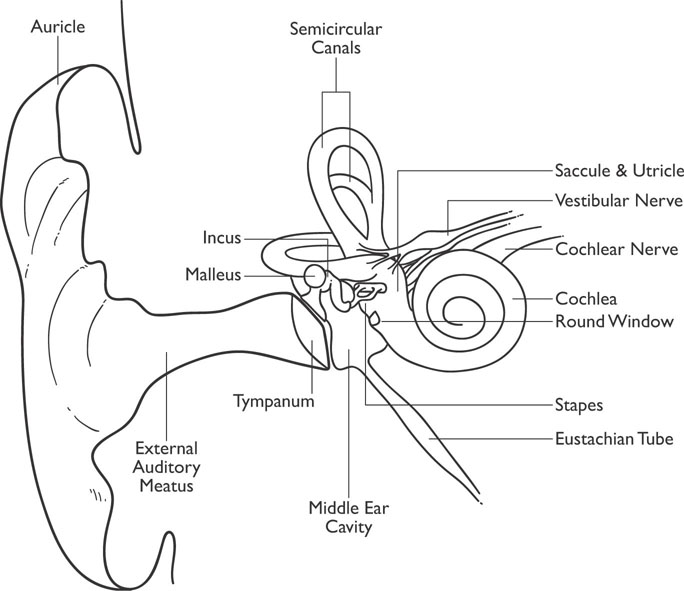

The primary auditory cortex (also called Heschl’s gyrus) is slightly below the central sulcus in the upper-middle portion of the temporal lobe (see Figure 4-2). The neurons in the primary auditory cortex respond to different frequencies of sound depending on their location in the primary auditory cortex in much the same way that different neurons in different areas of the visual system respond to different shapes. Neuroanatomists refer to this frequency map as a tonotopic map, as different areas of the primary auditory cortex respond to specific tones. When one locates sounds coming from different locations, differences in the time of arrival of the signal from the two ears lead to differential stimulation in the superior olives, and this allows one to locate the direction of the sound.
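The size of the arrival-time difference between the two ears can be estimated with a little geometry. The sketch below is only an illustration: the ear separation, the speed of sound, and the straight-line path-difference formula are all assumptions introduced here, not values or methods given in this chapter.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: air at roughly room temperature
EAR_SEPARATION_M = 0.18         # assumed: illustrative distance between the ears


def interaural_time_difference(azimuth_deg: float) -> float:
    """Estimate the arrival-time difference (seconds) between the two ears.

    azimuth_deg is the direction of a distant sound source:
    0 means straight ahead, 90 means directly to one side.
    Uses a simple path-difference model that ignores the curve of the head.
    """
    path_difference_m = EAR_SEPARATION_M * math.sin(math.radians(azimuth_deg))
    return path_difference_m / SPEED_OF_SOUND_M_PER_S


for angle in (0, 30, 90):
    delay_us = interaural_time_difference(angle) * 1e6
    print(f"{angle:>2} degrees: about {delay_us:.0f} microseconds")
```

With these assumed values, a sound straight ahead reaches both ears at the same moment, and even a sound directly to one side arrives only about half a millisecond earlier at the nearer ear. The auditory system must therefore resolve very small timing differences to judge direction.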

Figure 4-2: The Auditory Cortex

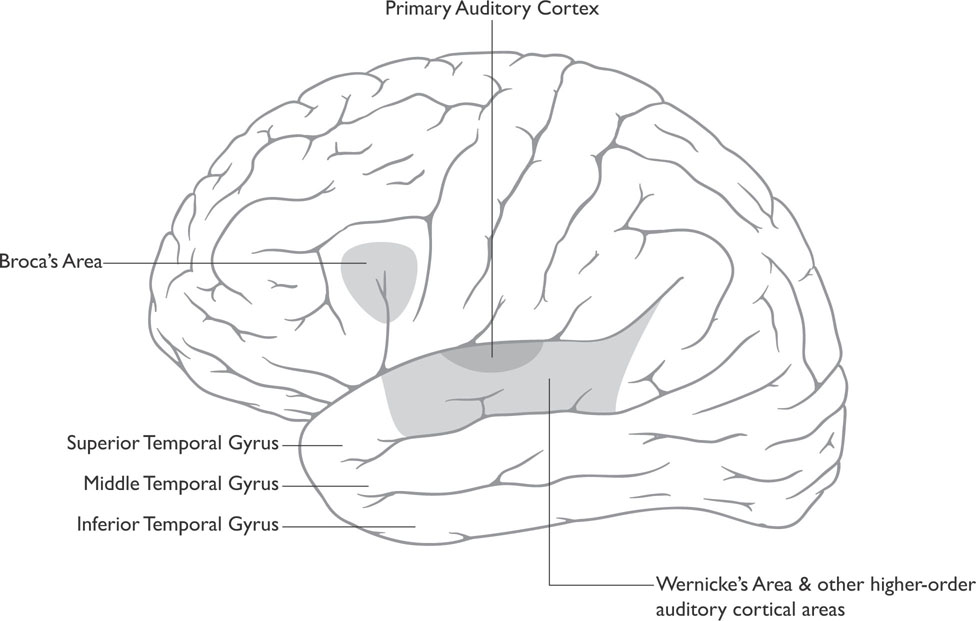

Once the sound is processed by the auditory cortex, researchers believe it is sent via two streams of auditory analysis to two different areas of association cortices: an anterior auditory pathway located toward the frontal lobe that identifies the sound (the “what pathway”) and a posterior auditory pathway more toward the parietal lobe that locates sounds (the “where pathway”); see Figure 4-3. Every sound is analyzed along both of these pathways. (Remember that any association cortex is an area of the cortex where sensory systems interact and associations are made. Areas of an association cortex have fields that react to visual information, fields that react to auditory information, and fields receptive to both visual and auditory information.)

Figure 4-3: “What” and “Where” Paths of Audition

Slightly behind or posterior to the primary auditory cortex is an area known as Wernicke’s area (see Figure 4-2). Wernicke’s area is important in the comprehension of language, both written and spoken. People with damage to this area develop difficulties understanding verbal language, a condition known as a receptive aphasia or Wernicke’s aphasia (aphasia is a term used for language disorders).

Wernicke’s area has extensive connections with another language area known as Broca’s area, located in the frontal lobe just anterior to (in front of) the primary motor strip (see Figure 4-2). Broca’s area is important in the production of speech. Both Broca’s and Wernicke’s areas are located in the left hemisphere in nearly all right-handed people and most left-handed people (how handedness relates to language is discussed later in this chapter). There is some evidence to suggest that the area in the right hemisphere that corresponds to Wernicke’s area is important in processing prosody, the changes in tone and rhythm of speech that convey meaning.

The common types of deafness are conductive deafness, associated with damage to the ossicles, and nerve deafness, associated with damage to the cochlea or to the auditory nerve. It appears that the major cause of nerve deafness is a loss of hair cell receptors on the basilar membrane of the cochlea. If only certain hair cell receptors are damaged, the person may be deaf to some frequencies but not to others, something typically seen in age-related hearing loss. Typically this type of hearing loss occurs at higher frequencies. Some people with nerve deafness can benefit from cochlear implants that convert sounds picked up by a microphone on the patient’s ear to electrical signals that are then carried to the cochlea. The signals then excite the auditory nerve. Deafness that results from damage to the ossicles is typically treated with a hearing aid that magnifies the amplitude of the sound waves.

Tinnitus, a chronic ringing in the ears, can result from a number of different causes, such as infection, exposure to loud noise, side effects from certain drugs, or even an allergic reaction. Tinnitus can also occur as a result of aging when people begin to lose their ability to hear at high frequencies.

It is certainly true that animals can communicate with each other by making vocal calls. However, human language is not simply a type of communication similar to what other animals use when they make such calls. Human language differs from the vocalizations of animals on a number of different levels, the most important of which is a structured grammar that allows for complex ideas and abstractions to be communicated. Human language consists of rules of grammar, nouns, verbs, modifiers, etc., that allow for the transmission of ideas in a nearly infinite number of ways compared to the finite number of single words available for use. Attempts to teach nonhuman primates language indicate that their messages are most often random with respect to the order of the words and that they are not capable of following or using the rules of grammar.

For most people, language functions are dependent on structures in the left hemisphere of the brain. Language researchers still do not understand why this is the case or why most people are right-handed, although there are many theories. Man’s closest evolutionary relative, the chimpanzee, also demonstrates a particular hand preference; however, unlike humans, this preference appears to be a 50-50 split between left and right hands.

Despite what is depicted in science-fiction movies, nonhuman primates can express only what they have learned a word or sign is for, such as signing for food; at best, they can express a very limited number of novel ideas consisting of two words. They do not learn sign language from humans and then sign to one another.

Wernicke’s area of the brain—discussed earlier in this chapter—is important in the decoding of receptive language. A classic theory describing the brain structures involved in expressive language, receptive language, and reading is the Wernicke-Geschwind Model. According to this model, a person having a conversation will experience activation of the primary auditory cortex when hearing another person speak. This leads to activation of Wernicke’s area, where spoken language (and written language) is processed. When one wishes to respond to the other person, Wernicke’s area generates the neural representation of the thought or reply and sends it to Broca’s area by way of a nerve tract known as the arcuate fasciculus that connects Wernicke’s area to Broca’s area. Broca’s area activates the appropriate articulation for the response and sends it to the neurons that drive the appropriate muscles of speech in the primary motor cortex, the area of the brain that controls movement. Then the person responds.

When a person is reading, the signal received by the primary visual cortex is transmitted to the left angular gyrus in the parietal lobe. Here the visual form of the word is translated into its auditory code and then transmitted to Wernicke’s area for comprehension. If you were reading aloud, this information would be transmitted back to Broca’s area, and the previously described process would continue.

The Wernicke-Geschwind Model of language is based on studies of patients who had cortical damage and resulting language deficits. For example, Broca’s area is named after the physician who discovered it, based on two patients with a severe expressive aphasia that resulted from damage to a specific area of the brain (the particular form of aphasia associated with damage to this area is often termed Broca’s aphasia). Likewise, Wernicke’s area is named after the physician who studied patients with a severe receptive aphasia who had damage to that particular area (the resulting aphasia is sometimes termed Wernicke’s aphasia). However, it is rare to find patients who have circumscribed damage to one particular area as a result of a stroke or traumatic brain injury (in fact, recent re-evaluations of both Broca’s and Wernicke’s seminal patients indicated that the brain damage was extensive in both cases and not circumscribed to those particular areas). Moreover, newer technologies such as functional brain imaging have yielded three important observations regarding language in humans that cast doubt on the Wernicke-Geschwind Model:

Language expression and language reception are mediated by many areas of the brain that also participate in the cognition (thinking) that is involved in language-related behavior.

The areas of the brain involved in language are not solely dedicated to language production or reception.

Because many of the areas of the brain that perform language functions are also parts of other systems, these areas are likely to be small, specialized, and extensively distributed throughout the brain.

Nonetheless, patients with left frontal brain damage near or around Broca’s area will tend to demonstrate expressive language problems, whereas those who sustain damage around or near Wernicke’s area are more likely to display difficulties with receptive language. Yet expressive or receptive aphasias are also seen in patients with damage to other areas of the brain.

A disruption of language abilities due to brain insult is known as aphasia. Problems with the articulation of speech that are due to nerve damage, and that are not impairments in language abilities, are termed dysarthria. Dysarthria results from an insult to the motor component of speaking, such as the facial muscles, and is not due to damage to the language centers of the brain.

The vast majority of right-handed people have language lateralized to the left side of the brain (studies indicate that approximately 95 percent of these people have left-brain language). However, the situation is not quite the same with left-handed people. Left-handed people tend to be more ambidextrous than right-handed people as a group. The incidence of right hemisphere–dominant language in left-handers appears to be related to the degree of left-handedness in the person; the more left-dominant the person is, the more likely that person is to demonstrate a right hemisphere dominance for language. There are no significant differences in the normal language abilities of people with right-brained language. One study found the incidence of right hemisphere language dominance to be about 4 percent in strong right-handers (people who are predominantly right-handed), 15 percent in ambidextrous people, and 27 percent in strong left-handers.

Reading is a complex process that occurs in the brain and may involve two different pathways: a word-meaning-sound pathway and a word-sound-meaning pathway. This model is referred to as the dual-pathway model of reading.

The word-meaning-sound pathway involves recognizing a written word pattern (e.g., the word “dog”) as being the same pattern as one stored in memory. If the written word pattern is linked to a particular meaning in the association cortex, one becomes conscious of the meaning of the word. This pathway is alternatively referred to as the whole-word route. This route takes some time to develop because when one is first exposed to words, one has not made the connection between the word pattern and the meaning of the word. However, once these connections are formed, they work efficiently and quickly.

Reading using the word-sound-meaning pathway involves breaking up the written word pattern into segments (e.g., “d-o-g”) and combining the segments to form a mental representation of the sound of the word. The sound of the word is then compared to sounds in the storehouse of word sounds in the brain and recognized as the same sound as the one in memory. Because the stored word sound is linked to a meaning, you then become aware of the meaning. Some refer to this pathway as the sound-of-letters pathway.

Modular models claim language comprehension is executed in independent brain modules working in a one-way direction so that higher levels cannot influence lower levels that have completed their functions. Interactive models state that all types of information contribute to word recognition, including the context; words are recognized as the result of nodes in a network that are activated together. Hybrid models attempt to combine both.

Supporters of the dual-pathway model of reading believe that in most adults the two pathways complement each other and work in parallel, allowing for the quickest and most accurate reaction. This model also explains mistakes people sometimes make when reading, such as when a person speaks a different word from what is written, but the word still conveys the same meaning. For example, while reading out loud one might say “they went to sleep” while reading the phrase “they went to bed.” Such errors indicate that the person is extracting the meaning before decoding the word, thus using the word-meaning-sound pathway.

The term dyslexia refers to a group of disorders that involves impaired reading. There are two types of dyslexia: developmental dyslexia, where a person has difficulty reading despite normal intelligence, normal development, and no history of brain damage (true dyslexia); and acquired dyslexia, which occurs in an individual who previously had no significant difficulty reading but due to some type of brain injury has developed problems reading (sometimes called alexia).

Research on dyslexia indicates that there are probably a number of different brain mechanisms that, when disrupted, result in dyslexic behaviors. For example, one view of developmental dyslexia is that the dyslexic person cannot properly process incoming visual information. This disruption is believed to occur in one of the thalamic visual system pathways, the magnocellular pathway. However, other researchers suggest that this pathway is not impaired in all dyslexics and that the cerebellum, a structure in the posterior portion of the brain, is impaired instead. Based on neuroimaging studies of individuals with dyslexia, these researchers suggest that dysfunction in the cerebellum interferes with the performance of many automatic and over-learned skills (which the cerebellum moderates), and reading is one of these. Imaging studies have sometimes shown that some dyslexics demonstrate abnormalities in the cerebellum and not in a thalamic pathway.

The effects of damage to the auditory cortex have been difficult to determine because of the location of the auditory cortex. Most of the human auditory cortex is located in the lateral fissure of the brain. It is rarely entirely destroyed, but there is often extensive damage to the surrounding tissue. Studies of the effects of damage to one side of the auditory cortex indicate this results in difficulty localizing sounds coming into the opposite ear (e.g., left auditory cortex damage would result in difficulty localizing sounds coming from the right side).

A small number of cases with damage to both sides of the auditory cortex have been studied, and initially there appeared to be a total loss of hearing in these cases; however, hearing often recovered weeks later. It may be that the total hearing loss occurred as a result of the initial shock of the damage, and as the neurons recovered, hearing was restored. Over the long run, the major effects of bilateral auditory cortex damage appear to be disruptions of the ability to localize sounds and the ability to discriminate between different frequencies.

As discussed earlier, aphasia can be associated with brain damage that can occur in many different parts of the brain. The production of speech in aphasia is classified as either fluent or nonfluent.

Four language-related factors are generally considered when assessing aphasia: expressive language (length of phrase, articulation, and other factors), language comprehension (usually for simple or complex commands), naming ability (typically naming pictures of objects), and repetition (the ability to repeat single words or phrases).

Fluent aphasias are characterized by verbal output that is plentiful, well articulated, and of relatively normal lengths and prosody (prosody refers to variations in pitch, rhythm, and loudness). Nonfluent aphasias are characterized by verbal output that has short phrase lengths, is effortful, and has disrupted prosody. Fluent aphasias are typically associated with more posterior brain damage (e.g., Wernicke’s aphasia), whereas nonfluent aphasias are associated with more anterior brain damage in the motor regions of the brain that involve speech production.

Broca’s aphasia typically occurs when there is brain damage to the posterior portion of the frontal lobe of the brain. Verbal output is described as telegraphic in that it is effortful, slow, and halting. Articulation is impaired and utterances are typically fewer than four words in length. These patients can repeat single words and very short phrases but have difficulty naming objects. Comprehension of speech is relatively good except for complex commands or passive sentences.

Transcortical motor aphasia involves lesions or other areas of damage in the frontal lobe (but not at Broca’s area). People with this type of aphasia make little attempt to speak spontaneously, and when they do speak, their utterances are typically fewer than four words, but articulation may be good. Comprehension is generally good; naming objects is good; and repetition is generally preserved.

Mixed transcortical aphasia results from diffuse lesions surrounding cortical language areas. Expressive language is severely limited or absent altogether; naming is significantly impaired; and comprehension is impaired. Repetition, despite impaired comprehension of language, remains generally preserved.

Global aphasia results from massive damage to the language-dominant hemisphere and leads to markedly impaired expressive and receptive language abilities.

Aphasia is not typically an isolated consequence of brain damage. The specific areas of the brain that contribute to language production and reception are rarely damaged in isolation. Often there are serious motor deficits, such as paralysis of the opposite side of the body, as well as sensory deficits, other neurological deficits, and other cognitive deficits associated with these aphasias.

Wernicke’s aphasia occurs with lesions or damage to the posterior portion of the temporal lobe. Expressive language is fluent with normal utterance length but with an abundance of semantic and phonemic paraphasias, such as word substitutions or neologisms (made-up words). Because these patients cannot comprehend what they say or mean to say, their expressive language, while fluent, is often meaningless and incomprehensible (often referred to as “word salad” due to many words being spoken, but without any coherence). Comprehension is impaired; naming is severely disrupted; and repetition is typically severely impaired.

Conduction aphasia occurs with damage to the supramarginal gyrus in the parietal lobe or to the arcuate fasciculus. Conversational speech is relatively normal in length but marred by paraphasias. Comprehension is relatively spared, but the person may have difficulty with complex verbal statements or following multistep commands. Repetition is poor and naming is always impaired.

Anomic aphasia can occur when there is damage in the angular gyrus. Conversational speech is typically good but with word-finding difficulties. Repetition is generally good, and auditory comprehension is typically good, except during instances where the person must divide her attention between tasks. Naming is impaired in these patients in the absence of other significant language problems.

Transcortical sensory aphasia most often involves damage at the junction between the temporal, parietal, and occipital lobes of the brain. Conversational speech is fluent but with word-finding difficulties and semantic paraphasias (word substitutions that are often totally inappropriate or do not resemble the intended word in sound or meaning). Comprehension is typically impaired, but repetition is surprisingly good given the poor comprehension. Naming is typically impaired.
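The four assessment factors described earlier (fluency, comprehension, naming, and repetition) can be lined up side by side for the aphasia types covered in this section. The sketch below is a rough study aid rather than a clinical tool. The coarse labels ("good", "limited", "impaired") are simplified readings of the descriptions above, and the names `AphasiaProfile`, `PROFILES`, and `matching_types` are hypothetical, introduced only for this illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class AphasiaProfile:
    """Coarse summary of one aphasia type as described in this chapter."""
    name: str
    fluent: bool
    comprehension: str         # "good" or "impaired"
    naming: Optional[str]      # "good", "impaired", or None if not stated
    repetition: Optional[str]  # "good", "limited", "impaired", or None


# Simplified labels drawn from the descriptions above, not clinical criteria.
PROFILES = [
    AphasiaProfile("Broca's aphasia", False, "good", "impaired", "limited"),
    AphasiaProfile("Transcortical motor aphasia", False, "good", "good", "good"),
    AphasiaProfile("Mixed transcortical aphasia", False, "impaired", "impaired", "good"),
    # The text gives no separate naming or repetition description for global aphasia.
    AphasiaProfile("Global aphasia", False, "impaired", None, None),
    AphasiaProfile("Wernicke's aphasia", True, "impaired", "impaired", "impaired"),
    AphasiaProfile("Conduction aphasia", True, "good", "impaired", "impaired"),
    AphasiaProfile("Anomic aphasia", True, "good", "impaired", "good"),
    AphasiaProfile("Transcortical sensory aphasia", True, "impaired", "impaired", "good"),
]


def matching_types(fluent: bool, comprehension: str,
                   naming: str, repetition: str) -> List[str]:
    """Return the names of all profiles that match the four observations."""
    observed = (fluent, comprehension, naming, repetition)
    return [
        p.name for p in PROFILES
        if (p.fluent, p.comprehension, p.naming, p.repetition) == observed
    ]


# Fluent speech with poor comprehension and naming, but good repetition.
print(matching_types(True, "impaired", "impaired", "good"))
```

The example query picks out transcortical sensory aphasia, which differs from Wernicke’s aphasia in this table only in that repetition is preserved. Real patients rarely fit one row so neatly, because the language areas are seldom damaged in isolation.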