11 Random Forests

Random forests are a modification of bagged decision trees that build a large collection of de-correlated trees to further improve predictive performance. They have become a very popular “out-of-the-box” or “off-the-shelf” learning algorithm that enjoys good predictive performance with relatively little hyperparameter tuning. Many modern implementations of random forests exist; however, Leo Breiman’s algorithm (Breiman, 2001) has largely become the authoritative procedure. This chapter will cover the fundamentals of random forests.

11.1 Prerequisites

This chapter leverages the following packages. Some of these packages play a supporting role; however, the emphasis is on how to implement random forests with the ranger (Wright and Ziegler, 2017) and h2o packages.

# Helper packages

library(dplyr) # for data wrangling

library(ggplot2) # for awesome graphics

# Modeling packages

library(ranger) # a C++ implementation of random forest

library(h2o) # a Java-based implementation of random forest

We’ll continue working with the ames_train data set created in Section 2.7 to illustrate the main concepts.

11.2 Extending bagging

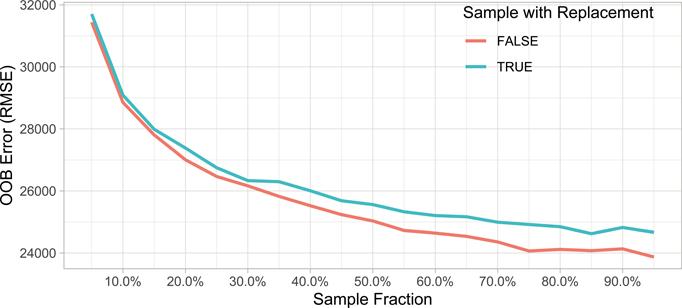

Random forests are built using the same fundamental principles as decision trees (Chapter 9) and bagging (Chapter 10). Bagging trees introduces a random component into the tree building process by building many trees on bootstrapped copies of the training data. Bagging then aggregates the predictions across all the trees; this aggregation reduces the variance of the overall procedure and results in improved predictive performance. However, as we saw in Section 10.6, simply bagging trees results in tree correlation that limits the effect of variance reduction.
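To make the limit on variance reduction concrete, the short sketch below uses a standard result: if the individual tree predictions are identically distributed with variance sigma2 and pairwise correlation rho, the variance of their average is rho * sigma2 + (1 - rho) / n_trees * sigma2. The function name `ensemble_variance()` and the values of rho are illustrative assumptions, not quantities estimated from the Ames data.

```r
# Variance of the average of n_trees identically distributed predictions,
# each with variance sigma2 and pairwise correlation rho.
# (Hypothetical helper for illustration only.)
ensemble_variance <- function(rho, sigma2, n_trees) {
  rho * sigma2 + (1 - rho) / n_trees * sigma2
}

n_trees <- c(1, 10, 100, 1000)

# Uncorrelated trees: the variance keeps shrinking as trees are added
ensemble_variance(rho = 0, sigma2 = 1, n_trees = n_trees)
# 1, 0.1, 0.01, 0.001

# Correlated trees: the variance levels off near rho * sigma2
ensemble_variance(rho = 0.6, sigma2 = 1, n_trees = n_trees)
# 1, 0.64, 0.604, 0.6004
```

Adding more trees only shrinks the second term, so the first term, which depends on the correlation between trees, sets a floor on the ensemble variance. Lowering that correlation is the motivation for the extra randomization that random forests add.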

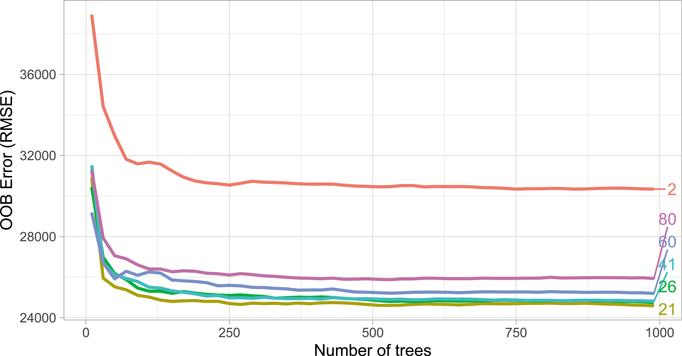

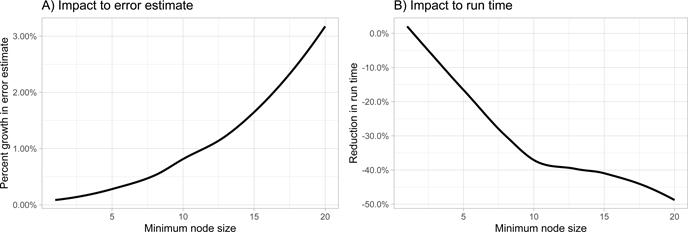

Random forests help to reduce tree correlation by injecting more randomness into the tree-growing process. More specifically, while growing a decision tree during the bagging process, random forests perform split-variable randomization: each time a split is to be performed, the search for the split variable is limited to a random subset of mtry of the original p features. Typical default values are mtry = p/3 (regression) and mtry = √p (classification), but this should be considered a tuning parameter.
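As a rough illustration of split-variable randomization, the sketch below computes the commonly cited defaults of p/3 features for regression and √p for classification, then draws the candidate features for a single split. The helper `default_mtry()`, the rounding down, the value p = 80, and the feature names are assumptions made for this example; individual packages choose their own defaults, so check the documentation of the implementation you use.

```r
# Illustrative helper (not part of ranger or h2o): commonly cited mtry defaults,
# rounded down and never smaller than 1
default_mtry <- function(p, type = c("regression", "classification")) {
  type <- match.arg(type)
  if (type == "regression") max(floor(p / 3), 1) else max(floor(sqrt(p)), 1)
}

p <- 80                              # assumed number of features
default_mtry(p, "regression")        # 26
default_mtry(p, "classification")    # 8

# At a single split, only a random subset of the features is searched
set.seed(123)
features   <- paste0("x", seq_len(p))            # hypothetical feature names
candidates <- sample(features, size = default_mtry(p, "regression"))
length(candidates)                   # 26
```

A fresh subset is drawn at every split, so a strong predictor that would dominate every bagged tree is unavailable at many splits, which gives other predictors a chance to be used.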

The basic algorithm for a regression or classification random forest can be generalized as follows:

1. Given a training data set

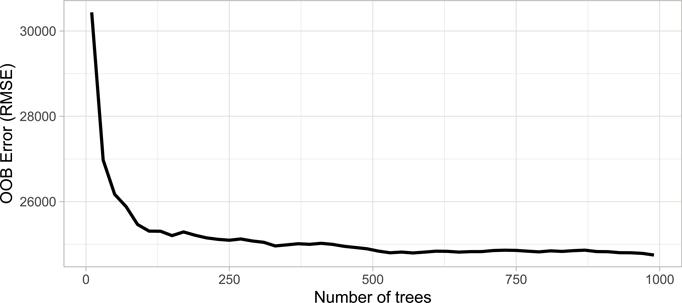

2. Select number of trees to build (n_trees)

3. for i = 1 to n_trees do

4. | Generate a bootstrap sample of the original data

5. | Grow a regression/classification tree to the bootstrapped data

6. | for each split do

7. | | Select mtry variables at random from all p variables

8. | | Pick the best variable/split-point among the mtry

9. | | Split the node into two child nodes

10. | end

11. | Use typical tree model stopping criteria to determine when a tree is complete (but do not prune)

12. end

13. Output ensemble of trees

When mtry = p, the algorithm is equivalent to bagging decision trees.
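The algorithm above can be sketched in base R for the regression case. This is a minimal teaching sketch under stated assumptions, not the implementation used by ranger or h2o: the function names (`best_split()`, `grow_tree()`, `predict_tree()`, `random_forest()`, `predict_forest()`), the sum-of-squared-errors split criterion, the `min_node_size` stopping rule, numeric-only predictors, and the use of the built-in mtcars data as a stand-in are all choices made for illustration.

```r
# Search a random subset of mtry features for the split that minimizes SSE
best_split <- function(x, y, mtry) {
  best <- NULL
  for (v in sample(names(x), mtry)) {
    vals <- sort(unique(x[[v]]))
    if (length(vals) < 2) next
    for (cut in (head(vals, -1) + tail(vals, -1)) / 2) {  # midpoints
      left <- x[[v]] <= cut
      sse <- sum((y[left] - mean(y[left]))^2) +
        sum((y[!left] - mean(y[!left]))^2)
      if (is.null(best) || sse < best$sse) {
        best <- list(var = v, cut = cut, sse = sse)
      }
    }
  }
  best
}

# Grow an unpruned tree; stop when a node is small or the response is constant
grow_tree <- function(x, y, mtry, min_node_size = 5) {
  if (length(y) < 2 * min_node_size || var(y) == 0) {
    return(list(leaf = TRUE, pred = mean(y)))
  }
  s <- best_split(x, y, mtry)
  if (is.null(s)) return(list(leaf = TRUE, pred = mean(y)))
  left <- x[[s$var]] <= s$cut
  list(
    leaf = FALSE, var = s$var, cut = s$cut,
    left  = grow_tree(x[left, , drop = FALSE], y[left], mtry, min_node_size),
    right = grow_tree(x[!left, , drop = FALSE], y[!left], mtry, min_node_size)
  )
}

# Send each row of newdata down the tree to a leaf and return the leaf means
predict_tree <- function(tree, newdata) {
  if (tree$leaf) return(rep(tree$pred, nrow(newdata)))
  left <- newdata[[tree$var]] <= tree$cut
  out <- numeric(nrow(newdata))
  out[left]  <- predict_tree(tree$left,  newdata[left, , drop = FALSE])
  out[!left] <- predict_tree(tree$right, newdata[!left, , drop = FALSE])
  out
}

# Fit one tree per bootstrap sample and return the ensemble as a list
random_forest <- function(x, y, n_trees = 50,
                          mtry = max(floor(ncol(x) / 3), 1)) {
  lapply(seq_len(n_trees), function(i) {
    idx <- sample(nrow(x), replace = TRUE)  # bootstrap sample
    grow_tree(x[idx, , drop = FALSE], y[idx], mtry)
  })
}

# Average the predictions of all trees
predict_forest <- function(forest, newdata) {
  Reduce(`+`, lapply(forest, predict_tree, newdata = newdata)) / length(forest)
}

set.seed(123)
x <- mtcars[, -1]  # 10 numeric predictors (stand-in data)
y <- mtcars$mpg

rf  <- random_forest(x, y, n_trees = 50, mtry = 3)
bag <- random_forest(x, y, n_trees = 50, mtry = ncol(x))  # mtry = p: bagging

rf_pred  <- predict_forest(rf, x)
bag_pred <- predict_forest(bag, x)
head(rf_pred)
```

Setting `mtry = ncol(x)` makes every feature a candidate at every split, so the second call reduces to bagged trees. In practice a tuned package such as ranger is the better choice; the sketch only shows where the two sources of randomness enter.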

Since the algorithm randomly selects a bootstrap sample to train on and a random sample of features to use at each split, it produces a more diverse set of trees. This tends to lessen tree correlation beyond that of bagged trees and often dramatically increases predictive power.