CHAPTER 6

The Crisis of Abundance

Can Our Ancient Genes Cope with Our Modern Diet?

Avoidable human misery is more often caused not so much by stupidity as by ignorance, particularly our ignorance about ourselves.

—Carl Sagan

When it comes to managing junior surgical residents, there is a sort of unwritten rule that Hippocrates might have stated as follows: First, let them do no harm. That rule was in full effect during my early months at Johns Hopkins, in 2001, on the surgical oncology service. We were removing part of a patient’s cancerous ascending colon, and one of my jobs was to “pre-op” him, which was basically like a briefing/semi-interrogation the day before surgery to be sure we knew everything that we needed to know about his medical history.

I met with this patient and outlined the procedure he was about to undergo, reminded him not to eat anything after 8 p.m., and asked him a series of routine questions, including whether or not he smoked and how much alcohol he drank. I had practiced asking this last one in a disarming, seemingly offhand way, but I knew it was among the most important items on my checklist. If we believed that a patient consumed significant amounts of alcohol (typically more than four or five drinks per day), we had to make sure that the anesthesiologists knew this, so they could administer specific drugs during recovery, typically benzodiazepines such as Valium, in order to ward off alcohol withdrawal. Otherwise, the patient could be at risk for delirium tremens, or the DTs, a potentially fatal condition.

I was relieved when he told me that he drank minimally. One less thing to worry about. The next day, I wheeled the patient into the OR and ran my checklist of mundane intern-level stuff. It would take a few minutes for the anesthesiologists to put him to sleep, after which I could place the Foley catheter into his bladder, swab his skin with Betadine, place the surgical drapes, and then step aside while the chief resident and attending surgeon made the first incision. If I was lucky, I would get to assist with the opening and closing of the abdomen. Otherwise, I was there to retract the liver, holding it out of the way so the senior surgeons could have an unobstructed view of the organ they needed to remove, which was sort of tucked in underneath the liver.

As the surgery got under way, nothing seemed out of the ordinary. The surgeons had to make their way through a bit of abdominal fat before they could get to the peritoneal cavity, but nothing we didn’t see most days. There is an incredible rush of anticipation one feels just before cutting through the last of several membranes separating the outside world from the inner abdominal cavity. One of the first things you see, as the incision grows, is the tip of the liver, which I’ve always considered to be a really underappreciated organ. The “cool kids” in medicine specialize in the brain or the heart, but the liver is the body’s true workhorse—and also, it’s simply breathtaking to behold. Normally, a healthy liver is a deep, dark purple color, with a gorgeous silky-smooth texture. Hannibal Lecter was not too far off: it really does look as if it might be delicious with some fava beans and a nice Chianti.

This patient’s liver appeared rather less appetizing as it emerged from beneath the omental fat. Instead of a healthy, rich purple, it was mottled and sort of orangish, with protruding nodules of yellow fat. It looked like foie gras gone bad. The attending looked up at me sharply. “You said this guy was not a drinker!” he barked.

Clearly, this man was a very heavy drinker; his liver showed all the signs of it. And because I had failed to elicit that information, I had potentially placed his life in danger.

But it turned out that I hadn’t made a mistake. When the patient awoke after surgery, he confirmed that he rarely, if ever, drank alcohol. In my experience, patients confronting cancer surgery rarely lied about drinking or anything else, especially when fessing up meant getting some Valium or, even better, a couple of beers with their hospital dinner. But he definitely had the liver of an alcoholic, which struck everyone as odd.

This would happen numerous times during my residency. Every time, we would scratch our heads. Little did we know that we were witnessing the beginning, or perhaps the flowering, of a silent epidemic.

Five decades earlier, a surgeon in Topeka, Kansas, named Samuel Zelman had encountered a similar situation: he was operating on a patient whom he knew personally, because the man was an aide in the hospital where he worked. He knew for a fact that the man did not drink any alcohol, so he was surprised to find out that his liver was packed with fat, just like that of my patient, decades later.

This man did, in fact, drink a lot—of Coca-Cola. Zelman knew that he consumed a staggering quantity of soda, as many as twenty bottles (or more) in a single day. These were the older, smaller Coke bottles, not the supersizes we have now, but still, Zelman estimated that his patient was taking in an extra 1,600 calories per day on top of his already ample meals. Among his colleagues, Zelman noted, he was “distinguished for his appetite.”

His curiosity piqued, Zelman recruited nineteen other obese but nonalcoholic subjects for a clinical study. He tested their blood and urine and conducted liver biopsies on them, a serious procedure performed with a serious needle. All of the subjects bore some sign or signs of impaired liver function, in a way eerily similar to the well-known stages of liver damage seen in alcoholics.

This syndrome was often noticed but little understood. It was typically attributed to alcoholism or hepatitis. When it began to be seen in teenagers, in the 1970s and 1980s, worried doctors warned of a hidden epidemic of teenage binge drinking. But alcohol was not to blame. In 1980, a team at the Mayo Clinic dubbed this “hitherto unnamed disease” nonalcoholic steatohepatitis, or NASH. Since then, it has blossomed into a global plague. More than one in four people on this planet have some degree of NASH or its precursor, known as nonalcoholic fatty liver disease, or NAFLD, which is what we had observed in our patient that day in the operating room.

NAFLD is highly correlated with both obesity and hyperlipidemia (elevated blood lipids such as cholesterol and triglycerides), yet it often flies under the radar, especially in its early stages. Most patients are unaware that they have it, and so are their doctors, because NAFLD and NASH have no obvious symptoms. The first signs generally show up only on a blood test for the liver enzyme alanine aminotransferase (ALT for short). Rising levels of ALT are often the first clue that something is wrong with the liver, although they can also signal something else, such as a recent viral infection or a reaction to a medication. But there are many people walking around whose physicians have no idea that they are in the early stages of this disease, because their ALT levels are still “normal.”

Next question: What is normal? According to Labcorp, a leading testing company, the acceptable range for ALT is below 33 IU/L for women and below 45 IU/L for men (although the ranges can vary from lab to lab). But “normal” is not the same as “healthy.” The reference ranges for these tests are based on current percentiles,[*1] but as the population in general becomes less healthy, the average may diverge from optimal levels. It’s similar to what has happened with weight. In the late 1970s, the average American adult male weighed 173 pounds. Now the average American man tips the scale at nearly 200 pounds. In the 1970s, a 200-pound man would have been considered very overweight; today he is merely average. So you can see how in the twenty-first century, “average” is not necessarily optimal.

With regard to ALT liver values, the American College of Gastroenterology recently revised its guidelines to recommend clinical evaluation for liver disease in men with ALT above 33 and women with ALT above 25—significantly below the current “normal” ranges. Even that may not be low enough: a 2002 study that excluded people who already had fatty liver suggested upper limits of 30 for men, and 19 for women. So even if your liver function tests land within the reference range, that does not imply that your liver is actually healthy.
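To make the gap between “normal” and “healthy” concrete, here is a minimal Python sketch that checks a single ALT reading against the three sets of upper limits quoted above. The function name `alt_flags` and the dictionary layout are illustrative assumptions, not any lab's software, and treating every limit as a simple “above this number” cutoff glosses over how individual labs word their ranges.

```python
# Upper limits for ALT (IU/L) as quoted in the text. Labs differ, and treating
# every limit as a plain "above this value" cutoff is a simplification.
ALT_UPPER_LIMITS = {
    "lab reference range (Labcorp)": {"male": 45, "female": 33},
    "ACG evaluation threshold": {"male": 33, "female": 25},
    "2002 study, fatty liver excluded": {"male": 30, "female": 19},
}


def alt_flags(alt_iu_per_l: float, sex: str) -> dict[str, bool]:
    """Hypothetical helper: for each cutoff, is the ALT value above it?"""
    if sex not in ("male", "female"):
        raise ValueError("sex must be 'male' or 'female'")
    return {
        source: alt_iu_per_l > limits[sex]
        for source, limits in ALT_UPPER_LIMITS.items()
    }


# A man with an ALT of 40 IU/L.
print(alt_flags(40, "male"))
```

For that reading, the lab range flag comes back `False` while both stricter flags come back `True`: the same number is “normal” on the report and worth a closer look by the tighter standards.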

NAFLD and NASH are basically two stages of the same disease. NAFLD is the first stage, caused by (in short) more fat entering the liver or being produced there than exiting it. The next step down the metabolic gangplank is NASH, which is basically NAFLD plus inflammation, similar to hepatitis but without a viral infection. This inflammation causes scarring in the liver, but again, there are no obvious symptoms. This may sound scary, but all is not yet lost. Both NAFLD and NASH are still reversible. If you can somehow remove the fat from the liver (most commonly via weight loss), the inflammation will resolve, and liver function will return to normal. The liver is a highly resilient organ, almost miraculously so. It may be the most regenerative organ in the human body. When a healthy person donates a portion of their liver, both donor and recipient end up with an almost full-sized, fully functional liver within about eight weeks of the surgery, and the majority of that growth takes place in just the first two weeks.

In other words, your liver can recover from fairly extensive damage, up to and including partial removal. But if NASH is not kept in check or reversed, the damage and the scarring may progress into cirrhosis. This happens in about 11 percent of patients with NASH and is obviously far more serious. It now begins to affect the cellular architecture of the organ, making it much more difficult to reverse. A patient with cirrhosis is likely to die from various complications of their failing liver unless they receive a liver transplant. In 2001, when we did the operation on the man with the fatty liver, NASH officially accounted for just over 1 percent of liver transplants in the United States; by 2025, NASH with cirrhosis is expected to be the leading indication for liver transplantation.

As devastating as it is, cirrhosis is not the only end point I’m worried about here. I care about NAFLD and NASH—and you should too—because they represent the tip of the iceberg of a global epidemic of metabolic disorders, ranging from insulin resistance to type 2 diabetes. Type 2 diabetes is technically a distinct disease, defined very clearly by glucose metrics, but I view it as simply the last stop on a railway line passing through several other stations, including hyperinsulinemia, prediabetes, and NAFLD/NASH. If you find yourself anywhere on this train line, even in the early stages of NAFLD, you are likely also en route to one or more of the other three Horsemen diseases (cardiovascular disease, cancer, and Alzheimer’s disease). As we will see in the next few chapters, metabolic dysfunction vastly increases your risk for all of these. So you can’t fight the Horsemen without taking on metabolic dysfunction first.

Notice that I said “metabolic dysfunction” and not “obesity,” everybody’s favorite public health bogeyman. It’s an important distinction. According to the Centers for Disease Control and Prevention (CDC), more than 40 percent of the US population is obese (defined as having a BMI[*2] greater than 30), while roughly another third is overweight (BMI of 25 to 30). Statistically, being obese means someone is at greater risk of chronic disease, so a lot of attention is focused on the “obesity problem,” but I take a broader view: obesity is merely one symptom of an underlying metabolic derangement, such as hyperinsulinemia, that also happens to cause us to gain weight. But not everyone who is obese is metabolically unhealthy, and not everyone who is metabolically unhealthy is obese. There’s more to metabolic health than meets the eye.
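BMI itself is a simple ratio: weight in kilograms divided by the square of height in meters. The sketch below computes it and applies the two cutoffs named in the text (overweight from 25, obese from 30); following the CDC convention, a BMI of exactly 30.0 is counted as obese. The function names are hypothetical. Notice that nothing in this calculation touches blood pressure, triglycerides, or glucose.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight (kg) divided by height (m) squared."""
    return weight_kg / height_m ** 2


def bmi_category(value: float) -> str:
    """Coarse adult categories used in this chapter (CDC puts 30.0 in 'obese')."""
    if value >= 30:
        return "obese"
    if value >= 25:
        return "overweight"
    return "below 25"


# A 200-pound man who is 5 feet 10 inches tall, converted to metric units.
weight_kg = 200 * 0.45359237   # pounds to kilograms
height_m = 70 * 0.0254         # inches to meters
value = bmi(weight_kg, height_m)
print(f"BMI {value:.1f}: {bmi_category(value)}")  # prints: BMI 28.7: overweight
```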

As far back as the 1960s, before obesity had become a widespread problem, a Stanford endocrinologist named Gerald Reaven had observed that excess weight often traveled in company with certain other markers of poor health. He and his colleagues noticed that heart attack patients often had both high fasting glucose levels and high triglycerides, as well as elevated blood pressure and abdominal obesity. The more of these boxes a patient checked, the greater their risk of cardiovascular disease.

In the 1980s, Reaven labeled this collection of related disorders “Syndrome X”—where the X factor, he eventually determined, was insulin resistance. Today we call this cluster of problems “metabolic syndrome” (or MetSyn), and it is defined in terms of the following five criteria:

- high blood pressure (>130/85 mmHg)
- high triglycerides (>150 mg/dL)
- low HDL cholesterol (<40 mg/dL in men or <50 mg/dL in women)
- central adiposity (waist circumference >40 inches in men or >35 in women)
- elevated fasting glucose (>110 mg/dL)

If you meet three or more of these criteria, then you have the metabolic syndrome—along with as many as 120 million other Americans, according to a 2020 article in JAMA. About 90 percent of the US population ticks at least one of these boxes. But notice that obesity is merely one of the criteria; it is not required for the metabolic syndrome to be diagnosed. Clearly the problem runs deeper than simply unwanted weight gain. This tends to support my view that obesity itself is not the issue but is merely a symptom of other problems.
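The three-of-five rule is mechanical enough to write down directly. Here is a minimal Python sketch using the thresholds exactly as listed above. It reads the blood pressure criterion as systolic above 130 or diastolic above 85, which is an assumption, and published versions of these criteria differ on details such as whether the boundaries are inclusive. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Measurements:
    sex: str                # "male" or "female"
    systolic_bp: float      # mmHg
    diastolic_bp: float     # mmHg
    triglycerides: float    # mg/dL
    hdl: float              # mg/dL
    waist_inches: float
    fasting_glucose: float  # mg/dL


def metsyn_criteria(m: Measurements) -> list[str]:
    """Return which of the five criteria (thresholds as listed in the text) are met."""
    male = m.sex == "male"
    checks = {
        "high blood pressure": m.systolic_bp > 130 or m.diastolic_bp > 85,
        "high triglycerides": m.triglycerides > 150,
        "low HDL": m.hdl < (40 if male else 50),
        "central adiposity": m.waist_inches > (40 if male else 35),
        "elevated fasting glucose": m.fasting_glucose > 110,
    }
    return [name for name, met in checks.items() if met]


def has_metabolic_syndrome(m: Measurements) -> bool:
    # Three or more of the five; central adiposity is not required.
    return len(metsyn_criteria(m)) >= 3


# Hypothetical example: a woman whose waist measurement is normal.
patient = Measurements(sex="female", systolic_bp=135, diastolic_bp=80,
                       triglycerides=180, hdl=45, waist_inches=32,
                       fasting_glucose=95)
print(metsyn_criteria(patient))         # ['high blood pressure', 'high triglycerides', 'low HDL']
print(has_metabolic_syndrome(patient))  # True
```

The example meets the definition without any central adiposity, which is the point made above: obesity is not required for the diagnosis.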

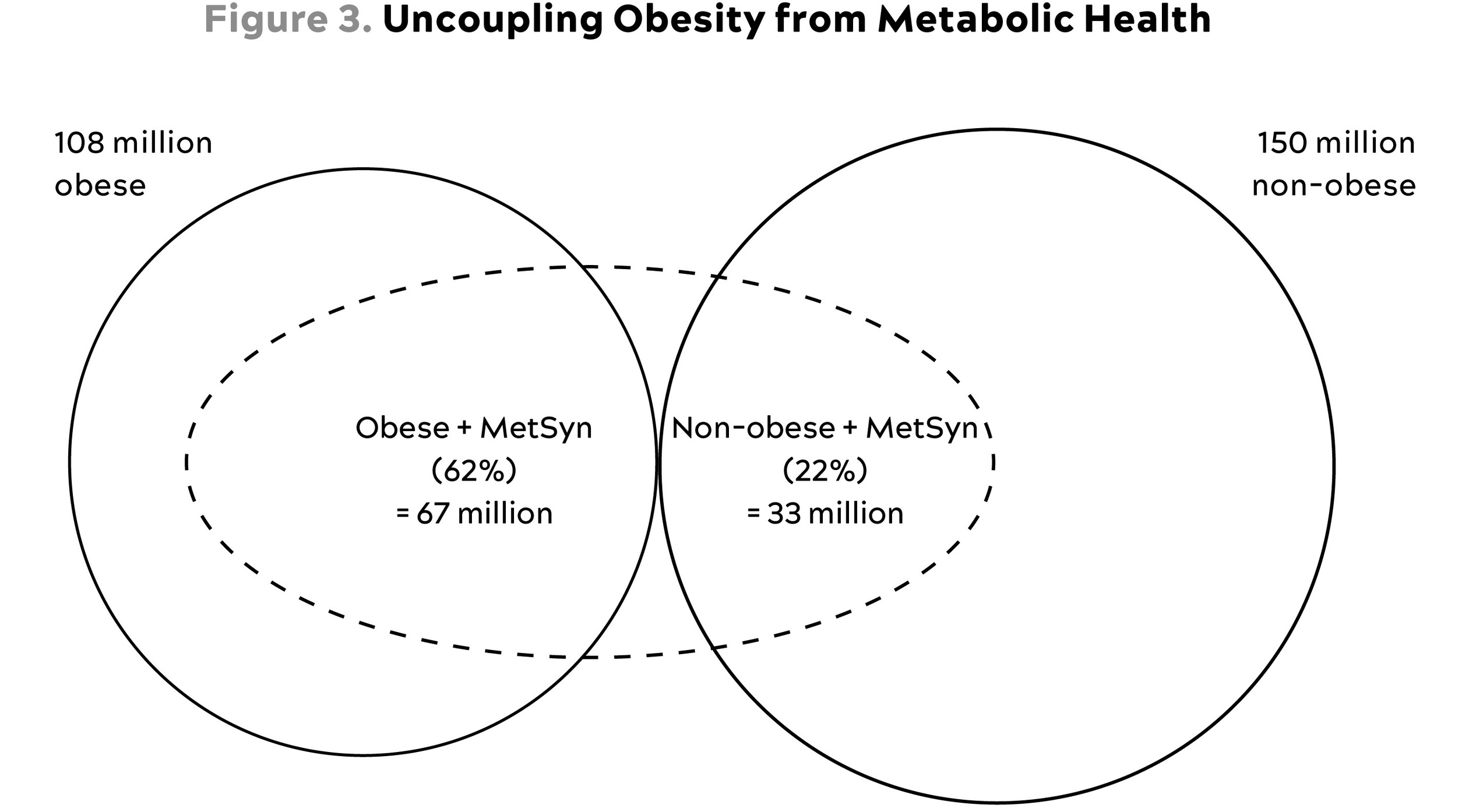

Studies have found that approximately one-third of those folks who are obese by BMI are actually metabolically healthy, by many of the same parameters used to define the metabolic syndrome (blood pressure, triglycerides, cholesterol, and fasting glucose, among others). At the same time, some studies have found that between 20 and 40 percent of nonobese adults may be metabolically unhealthy, by those same measures. A high percentage of obese people are also metabolically sick, of course—but as figure 3 illustrates, many normal-weight folks are in the same boat, which should be a wake-up call to all. This is not about how much you weigh. Even if you happen to be thin, you still need to read this chapter.

Figure 3: Relative prevalence of metabolic dysfunction (“MetSyn”) across the obese and nonobese segments of the population.

Source: Internal analysis based on data from the National Institute of Diabetes and Digestive and Kidney Diseases (2021).

This figure (based on NIH data and not the JAMA article just mentioned) shows quite dramatically how obesity and metabolic dysfunction are not the same thing—far from it, in fact. Some 42 percent of the US population is obese (BMI>30). Out of a conservatively estimated 100 million Americans who meet the criteria for the metabolic syndrome (i.e., metabolically unhealthy), almost exactly one-third are not obese. Many of these folks are overweight by BMI (25-29.9), but nearly 10 million Americans are normal weight (BMI 19-24.9) but metabolically unhealthy.

Some research suggests that these people might be in the most serious danger. A large meta-analysis of studies with a mean follow-up time of 11.5 years showed that people in this category have more than three times the risk of all-cause mortality and/or cardiovascular events as metabolically healthy normal-weight individuals. Meanwhile, the metabolically healthy but obese subjects in these studies were not at significantly increased risk. The upshot is that it’s not only obesity that drives bad health outcomes; it’s metabolic dysfunction. That’s what we’re concerned with here.

Metabolism is the process by which we take in nutrients and break them down for use in the body. In someone who is metabolically healthy, those nutrients are processed and sent to their proper destinations. But when someone is metabolically unhealthy, many of the calories they consume end up in places where they are unneeded at best and outright harmful at worst.

If you eat a doughnut, for example, the body has to decide what to do with the calories in that doughnut. At the risk of oversimplifying a bit, the carbohydrate from our doughnut has two possible fates. First, it can be converted into glycogen, the storage form of glucose, suitable for use in the near term. About 75 percent of this glycogen ends up in skeletal muscle and the other 25 percent goes to the liver, although this ratio can vary. An adult male can typically store a total of about 1,600 calories’ worth of glycogen between these two sites, or about enough energy for two hours of vigorous endurance exercise. This is why if you are running a marathon or doing a long bike ride, and do not replenish your fuel stores in some way, you are likely to “bonk,” or run out of energy, which is not a pleasant experience.
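The split described above works out roughly as follows, assuming (as a round figure not given in the text) about 4 kcal of energy per gram of glycogen:

$$
\begin{aligned}
\text{muscle:}\quad 0.75 \times 1600\ \text{kcal} &= 1200\ \text{kcal} \approx 300\ \text{g of glycogen} \\
\text{liver:}\quad 0.25 \times 1600\ \text{kcal} &= 400\ \text{kcal} \approx 100\ \text{g of glycogen} \\
\text{burn rate:}\quad \frac{1600\ \text{kcal}}{2\ \text{h}} &= 800\ \text{kcal per hour}
\end{aligned}
$$

The last line is simply the rate implied by “about two hours”; actual rates vary with body size and intensity.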

One of the liver’s many important jobs is to convert this stored glycogen back to glucose and then to release it as needed to maintain blood glucose levels at a steady state, known as glucose homeostasis. This is an incredibly delicate task: an average adult male will have about five grams of glucose circulating in his bloodstream at any given time, or about a teaspoon. That teaspoon won’t last more than a few minutes, as glucose is taken up by the muscles and especially the brain, so the liver has to continually feed in more, titrating it precisely to maintain a more or less constant level. Consider that five grams of glucose, spread out across one’s entire circulatory system, is normal, while seven grams—a teaspoon and a half—means you have diabetes. As I said, the liver is an amazing organ.
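The teaspoon figures can be translated into the units of a blood test. This sketch assumes a blood volume of about 5 liters (50 deciliters), a typical round number for an adult man that the text does not state:

$$
\frac{5\ \text{g}}{50\ \text{dL}} = \frac{5000\ \text{mg}}{50\ \text{dL}} = 100\ \text{mg/dL},
\qquad
\frac{7000\ \text{mg}}{50\ \text{dL}} = 140\ \text{mg/dL}
$$

On that assumption, two extra grams carry a reading from the edge of normal to well above 126 mg/dL, the fasting glucose level commonly used as the diagnostic cutoff for diabetes.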

We have a far greater capacity, almost unlimited, for storing energy as fat—the second possible destination for the calories in that doughnut. Even a relatively lean adult may carry ten kilograms of fat in their body, representing a whopping ninety thousand calories of stored energy.
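The ninety-thousand figure follows from the roughly 9 kcal per gram that fat yields (treating all ten kilograms as stored triglyceride, a simplification), and it dwarfs the glycogen reserve described earlier:

$$
10\ \text{kg} \times 1000\ \tfrac{\text{g}}{\text{kg}} \times 9\ \tfrac{\text{kcal}}{\text{g}} = 90{,}000\ \text{kcal},
\qquad
\frac{90{,}000\ \text{kcal}}{1{,}600\ \text{kcal}} \approx 56
$$

In other words, even a lean body holds more than fifty times as much energy in fat as in glycogen.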

That decision—where to put the energy from the doughnut—is made via hormones, chief among them insulin, which is secreted by the pancreas when the body senses the presence of glucose, the final breakdown product of most carbohydrates (such as those in the doughnut). Insulin helps shuttle the glucose to where it’s needed, while maintaining glucose homeostasis. If you happen to be riding a stage of the Tour de France while you eat the doughnut, or are engaged in other intense exercise, those calories will be consumed almost instantly in the muscles. But in a typical sedentary person, who is not depleting muscle glycogen rapidly, the excess energy from the doughnut will largely end up in fat cells (or more specifically, as triglycerides contained within fat cells).

The twist here is that fat (that is, subcutaneous fat, the layer of fat just beneath our skin) is actually the safest place to store excess energy. Fat in and of itself is not bad. It’s where we should put surplus calories. That’s how we evolved. While fat might not be culturally or aesthetically desirable in our modern world, subcutaneous fat actually plays an important role in maintaining metabolic health. The Yale University endocrinologist Gerald Shulman, one of the leading researchers in diabetes, once published an elegant experiment demonstrating the necessity of fat: when he surgically implanted fat tissue into insulin-resistant mice, thereby making them fatter, he found that their metabolic dysfunction was cured almost instantly. Their new fat cells sucked up their excess blood glucose and stored it safely.

Think of fat as acting like a kind of metabolic buffer zone, absorbing excess energy and storing it safely until it is needed. If we eat extra doughnuts, those calories are stored in our subcutaneous fat; when we go on, say, a long hike or swim, some of that fat is then released for use by the muscles. This fat flux goes on continually, and as long as you haven’t exceeded your own fat storage capacity, things are pretty much fine.

But if you continue to consume energy in excess of your needs, those subcutaneous fat cells will slowly fill up, particularly if little of that stored energy is being utilized. When someone reaches the limit of their capacity to store energy in their subcutaneous fat, yet they continue to take on excess calories, all that energy still has to go somewhere. The doughnuts or whatever they might be eating are probably still getting converted into fat, but now the body has to find other places to store it.

It’s almost as if you have a bathtub, and you’re filling it up from the faucet. If you keep the faucet running even after the tub is full and the drain is closed (i.e., you’re sedentary), water begins spilling over the rim of the tub, flowing into places where it’s not wanted or needed, like onto the bathroom floor, into the heating vents or down the stairs. It’s the same with excess fat. As more calories flood into your subcutaneous fat tissue, it eventually reaches capacity and the surplus begins spilling over into other areas of your body: into your blood, as excess triglycerides; into your liver, contributing to NAFLD; into your muscle tissue, contributing directly to insulin resistance in the muscle (as we’ll see); and even around your heart and your pancreas (figure 4). None of these, obviously, are ideal places for fat to collect; NAFLD is just one of many undesirable consequences of this fat spillover.

Source: Tchernof and Després (2013).

Fat also begins to infiltrate your abdomen, accumulating in between your organs. Whereas subcutaneous fat is thought to be relatively harmless, this “visceral fat” is anything but. These fat cells secrete inflammatory cytokines such as TNF-alpha and IL-6, key markers and drivers of inflammation, in close proximity to your most important bodily organs. This may be why visceral fat is linked to increased risk of both cancer and cardiovascular disease.

Fat storage capacity varies widely among individuals. Going back to our tub analogy, some people have subcutaneous fat-storage capacity equivalent to a regular bathtub, while others may be closer to a full-sized Jacuzzi or hot tub. Still others may have only the equivalent of a five-gallon bucket. It also matters, obviously, how much “water” is flowing into the tub via the faucet (as calories in food) and how much is flowing out via the drain (or being consumed via exercise or other means).
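The bathtub analogy can be turned into a toy model. This sketch is purely illustrative: the capacities and surpluses are arbitrary calorie numbers, the function name is hypothetical, and real physiology does not fill or overflow this cleanly. Each day's net surplus (intake minus expenditure, so a negative number is a deficit) fills the subcutaneous “tub,” and anything beyond capacity is tallied as spillover into other tissues.

```python
def simulate_spillover(daily_surplus_kcal: list[float], capacity_kcal: float,
                       start_kcal: float = 0.0) -> tuple[float, float]:
    """Toy bathtub model of subcutaneous fat storage.

    Returns (stored, spillover): energy held in the subcutaneous "tub" at the
    end, and the cumulative energy that overflowed it. For simplicity, a
    deficit drains only the tub; spilled-over energy is never drawn back down.
    """
    stored = start_kcal
    spillover = 0.0
    for surplus in daily_surplus_kcal:
        stored = max(stored + surplus, 0.0)      # the tub cannot go below empty
        if stored > capacity_kcal:
            spillover += stored - capacity_kcal  # overflow goes elsewhere
            stored = capacity_kcal
    return stored, spillover


# The same ten days of a 500 kcal daily surplus, poured into two different "tubs".
days = [500.0] * 10
print(simulate_spillover(days, capacity_kcal=3_000.0))   # (3000.0, 2000.0)
print(simulate_spillover(days, capacity_kcal=10_000.0))  # (5000.0, 0.0)
```

Identical eating and identical activity: only the size of the tub decides whether any energy ends up where it should not be.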

Individual fat-storage capacity seems to be influenced by genetic factors. This is a generalization, but people of Asian descent, for example, tend to have much lower capacity to store fat, on average, than Caucasians. There are other factors at play here as well, but this explains in part why some people can be obese but metabolically healthy, while others can appear “skinny” while still walking around with three or more markers of metabolic syndrome. It’s these people who are most at risk, according to research by Mitch Lazar at the University of Pennsylvania, because a “thin” person may simply have a much lower capacity to safely store fat. All other things being equal, someone who carries a bit of body fat may also have greater fat-storage capacity, and thus more metabolic leeway, than someone who appears to be leaner.

It doesn’t take much visceral fat to cause problems. Let’s say you are a forty-year-old man who weighs two hundred pounds. If you have 20 percent body fat, making you more or less average (50th percentile) for your age and sex, that means you are carrying 40 pounds of fat throughout your body. Even if just 4.5 pounds of that is visceral fat, you would be considered at exceptionally high risk for cardiovascular disease and type 2 diabetes, in the top 5 percent of risk for your age and sex. This is why I insist my patients undergo a DEXA scan annually—and I am far more interested in their visceral fat than their total body fat.
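The arithmetic in that example is worth spelling out, because the visceral share that puts this man in the top 5 percent of risk is so small:

$$
200\ \text{lb} \times 0.20 = 40\ \text{lb of fat},
\qquad
\frac{4.5\ \text{lb}}{40\ \text{lb}} \approx 11\%\ \text{of total fat},
\qquad
\frac{4.5\ \text{lb}}{200\ \text{lb}} = 2.25\%\ \text{of body weight}
$$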

It may have taken you a long time to get there, but now you are in trouble—even if you, and your doctor, may not yet realize it. You have fat accumulating in many places where it should not be, such as in your liver, between your abdominal organs, even around your heart—regardless of your actual weight. But one of the first places where this overflowing fat will cause problems is in your muscle, as it worms its way in between your muscle fibers, like marbling on a steak. As this continues, microscopic fat droplets even appear inside your muscle cells.

This is where insulin resistance likely begins, Gerald Shulman concludes from three decades’ worth of investigation. These fat droplets may be among the first destinations of excess energy/fat spillover, and as they accumulate they begin to disrupt the complex network of insulin-dependent transport mechanisms that normally bring glucose in to fuel the muscle cell. When these mechanisms lose their function, the cell becomes “deaf” to insulin’s signals. Eventually, this insulin resistance will progress to other tissues, such as the liver, but Shulman believes that it originates in muscle. It’s worth noting that one key ingredient in this process seems to be inactivity. If a person is not physically active, and they are not consuming energy via their muscles, then this fat-spillover-driven insulin resistance develops much more quickly. (This is why Shulman requires his study subjects, mostly young college students, to refrain from physical activity, in order to push them towards insulin resistance.)

Insulin resistance is a term that we hear a lot, but what does it really mean? Technically, it means that cells, initially muscle cells, have stopped listening to insulin’s signals, but another way to visualize it is to imagine the cell as a balloon being blown up with air. Eventually, the balloon expands to the point where it gets more difficult to force more air inside. You have to blow harder and harder. This is where insulin comes in, to help facilitate the process of blowing air into the balloon. The pancreas begins to secrete even more insulin, to try to remove excess glucose from the bloodstream and cram it into cells. For the time being it works, and blood glucose levels remain normal, but eventually you reach a limit where the “balloon” (cells) cannot accept any more “air” (glucose).

This is when the trouble shows up on a standard blood test, as fasting blood glucose begins to rise. This means you have high insulin levels and high blood glucose, and your cells are shutting the gates to glucose entry. If things continue in this way, then the pancreas becomes fatigued and less able to mount an insulin response. This is made worse by, you guessed it, the fat now residing in the pancreas itself. You can see the vicious spiral forming here: fat spillover helps initiate insulin resistance, which results in the accumulation of still more fat, eventually impairing our ability to store calories as anything other than fat. There are many other hormones involved in the production and distribution of fat, including testosterone, estrogen, hormone-sensitive lipase[*3] and cortisol. Cortisol is especially potent, with a double-edged effect of depleting subcutaneous fat (which is generally beneficial) and replacing it with more harmful visceral fat. This is one reason why stress levels and sleep, both of which affect cortisol release, are pertinent to metabolism. But insulin seems to be the most potent when it comes to promoting fat accumulation, because it acts as a kind of one-way gate, allowing fat to enter the cell while impairing the release of energy from fat cells (via a process called lipolysis). Insulin is all about fat storage, not fat utilization.

When insulin is chronically elevated, more problems arise. Fat gain and ultimately obesity are merely one symptom of this condition, known as hyperinsulinemia. I would argue that they are hardly even the most serious symptoms: as we’ll see in the coming chapters, insulin is also a potent growth-signaling hormone that helps foster both atherosclerosis and cancer. And when insulin resistance begins to develop, the train is already well down the track toward type 2 diabetes, which brings a multitude of unpleasant consequences.

Our slowly dawning awareness of NAFLD and NASH mirrors the emergence of the global epidemic of type 2 diabetes a century ago. Like cancer, Alzheimer’s, and heart disease, type 2 diabetes is known as a “disease of civilization,” meaning it has only come to prominence in the modern era. Among primitive tribes and in prior times, it was largely unknown. Its symptoms had been recognized for thousands of years, going back to ancient Egypt (as well as ancient India), but it was the Greek physician Aretaeus of Cappadocia who named it diabetes, describing it as “a melting down of the flesh and limbs into urine.”

Back then, it was vanishingly rare, observed only occasionally. As type 2 diabetes emerged, beginning in the early 1700s, it was at first largely a disease of the superelite, popes and artists and wealthy merchants and nobles who could afford this newly fashionable luxury food known as sugar. The composer Johann Sebastian Bach is thought to have been afflicted, among other notable personages. It also overlapped with gout, a more commonly recognized complaint of the decadent upper classes. This, as we’ll soon see, was not a coincidence.

By the early twentieth century, diabetes was becoming a disease of the masses. In 1940 the famed diabetologist Elliott Joslin estimated that about one person in every three to four hundred was diabetic, representing an enormous increase from just a few decades earlier, but it was still relatively uncommon. By 1970, around the time I was born, its prevalence was up to one in every fifty people. Today over 11 percent of the US adult population, one in nine, has clinical type 2 diabetes, according to a 2022 CDC report, including more than 29 percent of adults over age sixty-five. Another 38 percent of US adults—more than one in three—meet at least one of the criteria for prediabetes. That means that nearly half of the population is either on the road to type 2 diabetes or already there.

One quick note: diabetes ranks as only the seventh or eighth leading cause of death in the United States, behind things like stroke, accidents, and Alzheimer’s disease. In 2020, a little more than one hundred thousand deaths were attributed to type 2 diabetes, a fraction of the number due to either cardiovascular disease or cancer. By the numbers, it barely qualifies as a Horseman. But I believe that the actual death toll due to type 2 diabetes is much greater and that we undercount its true impact. Patients with diabetes have a much greater risk of cardiovascular disease, as well as cancer and Alzheimer’s disease and other dementias; one could argue that diabetes, along with related metabolic dysfunction, is one thing that all these conditions have in common. This is why I place such emphasis on metabolic health, and why I have long been concerned about the epidemic of metabolic disease not only in the United States but around the world.

Why is this epidemic happening now?

The simplest explanation is likely that our metabolism, as it has evolved over millennia, is not equipped to cope with our ultramodern diet, which has appeared only within the last century or so. Evolution is no longer our friend, because our environment has changed much faster than our genome ever could. Evolution wants us to get fat when nutrients are abundant: the more energy we could store, in our ancestral past, the greater our chances of survival and successful reproduction. We needed to be able to endure periods of time without much food, and natural selection obliged, endowing us with genes that helped us conserve and store energy in the form of fat. That enabled our distant ancestors to survive periods of famine, cold climates, and physiologic stressors such as illness and pregnancy. But these genes have proved less advantageous in our present environment, where many people in the developed world have access to almost unlimited calories.

Another problem is that not all of these calories are created equal, and not all of them are metabolized in the same way. One abundant source of calories in our present diet, fructose, also turns out to be a very powerful driver of metabolic dysfunction if consumed to excess. Fructose is not a novel nutrient, obviously. It’s the form of sugar found in nearly all fruits, and as such it is essential in the diets of many species, from bats and hummingbirds up to bears and monkeys and humans. But as it turns out, we humans have a unique capacity for turning calories from fructose into fat.

Lots of people like to demonize fructose, especially in the form of high-fructose corn syrup, without really understanding why it’s supposed to be so harmful. The story is complicated but fascinating. The key factor here is that fructose is metabolized in a manner different from other sugars. Metabolizing fructose, like metabolizing certain other types of foods, produces large amounts of uric acid, which is best known as a cause of gout but which has also been associated with elevated blood pressure.

More than two decades ago, a University of Colorado nephrologist named Rick Johnson noticed that fructose consumption appeared to be an especially powerful driver not only of high blood pressure but also of fat gain. “We realized fructose was having effects that could not be explained by its calorie content,” Johnson says. The culprit seemed to be uric acid. Other mammals, and even some other primates, possess an enzyme called uricase, which helps them clear uric acid. But we humans lack this important and apparently beneficial enzyme, so uric acid builds up, with all its negative consequences.

Johnson and his team began investigating our evolutionary history, in collaboration with a British anthropologist named Peter Andrews, a retired researcher at the Natural History Museum in London and an expert on primate evolution. Others had observed that our species had lost this uricase enzyme because of some sort of random genetic mutation, far back in our evolutionary past, but the reason why had remained mysterious. Johnson and Andrews scoured the evolutionary and fossil record and came up with an intriguing theory: that this mutation may have been essential to the very emergence of the human species.

The story they uncovered was that, millions of years ago, our primate ancestors migrated north from Africa into what is now Europe. Back then, Europe was lush and semitropical, but as the climate slowly cooled, the forest changed. Deciduous trees and open meadows replaced the tropical forest, and the fruit trees on which the apes depended for food began to disappear, especially the fig trees, a staple of their diets. Even worse, the apes now had to endure a new and uncomfortably cold season, which we know as “winter.” In order to survive, these apes now needed to be able to store some of the calories they did eat as fat. But storing fat did not come naturally to them because they had evolved in Africa, where food was always available. Thus, their metabolism did not prioritize fat storage.

At some point, our primate ancestors acquired a random genetic mutation that effectively switched on their ability to turn fructose into fat: the gene for the uricase enzyme was “silenced,” or lost. Now, when these apes consumed fructose, they generated lots of uric acid, which caused them to store many more of those fructose calories as fat. This newfound ability to store fat enabled them to survive in the colder climate. They could spend the summer gorging themselves on fruit, fattening up for the winter.

These same ape species, or their evolutionary successors, migrated back down into Africa, where over time they evolved into hominids and then Homo sapiens—while also passing their uricase-silencing mutation down to us humans. This, in turn, helped enable humans to spread far and wide across the globe, because we could store energy to help us survive cold weather and seasons without abundant food.

But in our modern world, this fat-storage mechanism has outlived its usefulness. We no longer need to worry about foraging for fruit or putting on fat to survive a cold winter. Thanks to the miracles of modern food technology, we are almost literally swimming in a sea of fructose, especially in the form of soft drinks, but also hidden in more innocent-seeming foods like bottled salad dressing and yogurt cups.[*4]

Fructose itself does not pose a problem when consumed the way that our ancestors did, before sugar became a ubiquitous commodity: mostly in the form of actual fruit. It is very difficult to get fat from eating too many apples, for example, because the fructose in the apple enters our system relatively slowly, mixed with fiber and water, and our gut and our metabolism can handle it normally. But if we are drinking quarts of apple juice, it’s a different story, as I’ll explain in a moment.

Fructose isn’t the only thing that creates uric acid; foods high in chemicals called purines, such as certain meats, cheeses, anchovies, and beer, also generate uric acid. This is why gout, a condition of excess uric acid, was so common among gluttonous aristocrats in the olden days (and still today). I test my patients’ levels of uric acid, not only because high levels may promote fat storage but also because they are linked to high blood pressure. High uric acid is an early warning sign that we need to address a patient’s metabolic health, their diet, or both.

Another issue is that glucose and fructose are metabolized very differently at the cellular level. When a brain cell, muscle cell, gut cell, or any other type of cell breaks down glucose, it will almost instantly have more ATP (adenosine triphosphate), the cellular energy “currency,” at its disposal. But this energy is not free: the cell must expend a small amount of ATP in order to make more ATP, in the same way that you sometimes have to spend money to make money. In glucose metabolism, this energy expenditure is regulated by a specific enzyme that prevents the cell from “spending” too much of its ATP on metabolism.

But when we metabolize fructose in large quantities, a different enzyme takes over, and this enzyme does not put the brakes on ATP “spending.” Instead, energy (ATP) levels inside the cell drop rapidly and dramatically. This rapid drop in energy levels makes the cell think that we are still hungry. The mechanisms are a bit complicated, but the bottom line is that even though it is rich in energy, fructose basically tricks our metabolism into thinking that we are depleting energy—and need to take in still more food and store more energy as fat.[*5]

On a more macro level, consuming large quantities of liquid fructose simply overwhelms the ability of the gut to handle it; the excess is shunted to the liver, where many of those calories are likely to end up as fat. I’ve seen patients work themselves into NAFLD by drinking too many “healthy” fruit smoothies, for the same reason: they are taking in too much fructose, too quickly. Thus, the almost infinite availability of liquid fructose in our already high-calorie modern diet sets us up for metabolic failure if we’re not careful (and especially if we are not physically active).

I sometimes think back to that patient who first introduced me to fatty liver disease. He and Samuel Zelman’s Patient Zero, the man who drank a dozen Cokes a day, had the same problem: they consumed many more calories than they needed. In the end, I still think excess calories matter the most.

Of course, my patient was in the hospital not because of his NAFLD, but because of his colon cancer. His operation turned out beautifully: we removed the cancerous part of his colon and sent him off to a speedy recovery. His colon cancer was well established, but it had not metastasized or spread. I remember the attending surgeon feeling pretty good about the operation, as we had caught the cancer in time. This man was maybe forty or forty-five, with a long life still ahead of him.

But what became of him? He was obviously also in the early stages of metabolic disease. I keep wondering whether his fatty liver and his cancer might have been connected in some way. What did he look like, metabolically, ten years before he came in for surgery that day? As we’ll see in chapter 8, obesity and metabolic dysfunction are both powerful risk factors for cancer. Could this man’s underlying metabolic issues have been fueling his cancer somehow? What would have happened if his underlying issues, which his fatty liver made plain as day, had been recognized a decade or more earlier? Would we have ever even met?

It seems unlikely that Medicine 2.0 would have addressed his situation at all. The standard playbook, as we touched on in chapter 1, is to wait until someone’s HbA1c rises above the magic threshold of 6.5 percent before diagnosing them with type 2 diabetes. But by then, as we’ve seen in this chapter, the person may already be in a state of elevated risk. To address this rampant epidemic of metabolic disorders, of which NAFLD is merely a harbinger, we need to get a handle on the situation much earlier.

One reason I find value in the concept of metabolic syndrome is that it helps us see these disorders as part of a continuum and not a single, binary condition. Its five relatively simple criteria are useful for predicting risk at the population level. But I still feel that reliance on it means waiting too long to declare that there is a problem. Why wait until someone has three of the five markers? Any one of them is generally a bad sign. A Medicine 3.0 approach would be to look for the warning signs years earlier. We want to intervene before a patient actually develops metabolic syndrome.
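To make the “three of five” logic concrete, here is a minimal Python sketch that counts how many metabolic syndrome criteria a patient meets and reports any single marker as an early warning rather than staying silent until three are present. The cutoffs are an assumption: they are the widely used NCEP ATP III-style thresholds (waist over 40 inches for men or 35 for women, triglycerides of 150 mg/dL or more, HDL below 40 mg/dL for men or 50 for women, blood pressure of 130/85 mmHg or more, fasting glucose of 100 mg/dL or more). The `Patient` record and function names are hypothetical, and this is an illustration of the counting logic, not a diagnostic tool.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    """Hypothetical record. Units assumed: inches, mg/dL, mmHg."""
    male: bool
    waist_in: float
    triglycerides: float
    hdl: float
    systolic: float
    diastolic: float
    fasting_glucose: float


def metabolic_syndrome_markers(p: Patient) -> list[str]:
    """Return the criteria met, using assumed ATP III-style cutoffs."""
    markers = []
    if p.waist_in > (40 if p.male else 35):
        markers.append("waist")
    if p.triglycerides >= 150:
        markers.append("triglycerides")
    if p.hdl < (40 if p.male else 50):
        markers.append("hdl")
    if p.systolic >= 130 or p.diastolic >= 85:
        markers.append("blood_pressure")
    if p.fasting_glucose >= 100:
        markers.append("fasting_glucose")
    return markers


def assess(p: Patient) -> str:
    markers = metabolic_syndrome_markers(p)
    if len(markers) >= 3:
        return "meets the textbook definition (3 or more of 5)"
    if markers:
        # Even one marker is treated as a signal worth acting on.
        return f"early warning: {', '.join(markers)}"
    return "no criteria met"


# Illustrative values only, not patient data.
patient = Patient(male=True, waist_in=38, triglycerides=180, hdl=45,
                  systolic=125, diastolic=80, fasting_glucose=95)
print(assess(patient))
```

Under the binary definition this man would simply be “negative”; the sketch instead surfaces his single abnormal marker, which is the point of looking earlier.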

This means keeping watch for the earliest signs of trouble. In my patients, I monitor several biomarkers related to metabolism, keeping a watchful eye for things like elevated uric acid, elevated homocysteine, chronic inflammation, and even mildly elevated ALT liver enzymes. Lipoproteins, which we will discuss in detail in the next chapter, are also important, as are triglycerides: I watch the ratio of triglycerides to HDL cholesterol (it should be less than 2:1 or, better yet, less than 1:1), as well as levels of VLDL, a lipoprotein that carries triglycerides. Abnormalities in any of these may appear many years before a patient would meet the textbook definition of metabolic syndrome. These biomarkers give us a clearer picture of a patient’s overall metabolic health than HbA1c, which is not very specific by itself.
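The triglyceride-to-HDL ratio is simple arithmetic, and a few lines of Python show how the 2:1 and 1:1 targets apply. This is a minimal sketch that assumes both values are in mg/dL, the units used on US lab reports; because triglycerides and HDL convert to mmol/L by different factors, the same targets do not carry over directly to mmol/L results. The function names are hypothetical.

```python
def tg_hdl_ratio(triglycerides_mg_dl: float, hdl_mg_dl: float) -> float:
    """Ratio of triglycerides to HDL cholesterol, both assumed in mg/dL."""
    if hdl_mg_dl <= 0:
        raise ValueError("HDL must be positive")
    return triglycerides_mg_dl / hdl_mg_dl


def interpret_tg_hdl(ratio: float) -> str:
    # Targets from the text: below 2:1, and better yet below 1:1.
    if ratio < 1:
        return "ideal (below 1:1)"
    if ratio < 2:
        return "acceptable (below 2:1)"
    return "elevated (2:1 or higher)"


# Illustrative values: triglycerides 150 mg/dL, HDL 50 mg/dL.
ratio = tg_hdl_ratio(150, 50)
print(ratio, interpret_tg_hdl(ratio))
```

With triglycerides of 150 and HDL of 50, the ratio is 3.0, well above target, even though neither number on its own might raise much concern.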

But the first thing I look for, the canary in the coal mine of metabolic disorder, is elevated insulin. As we’ve seen, the body’s first response to incipient insulin resistance is to produce more insulin. Think back to our analogy with the balloon: as it gets harder to get air (glucose) into the balloon (the cell), we have to blow harder and harder (i.e., produce more insulin). At first, this appears to be successful: the body is still able to maintain glucose homeostasis, a steady blood glucose level. But insulin, especially postprandial insulin, is already on the rise.

One test that I like to give patients is the oral glucose tolerance test, or OGTT, where the patient swallows ten ounces of a sickly-sweet, almost undrinkable beverage called Glucola that contains seventy-five grams of pure glucose, or about twice as much sugar as in a twelve-ounce can of regular Coca-Cola.[*6] We then measure the patient’s glucose and insulin every thirty minutes over the next two hours. Typically, their blood glucose levels will rise, followed by a peak in insulin, but then the glucose will steadily decrease as insulin does its job and removes it from circulation.

On the surface, this is fine: insulin has brought glucose under control. But in someone at the early stages of insulin resistance, insulin will rise very dramatically in the first thirty minutes and then remain elevated, or even rise further, over the next hour. This postprandial insulin spike is one of the biggest early warning signs that all is not well.
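The curve shape described above, insulin that spikes within thirty minutes and then stays high or keeps climbing, can be expressed as a simple check on the readings taken every thirty minutes. This is a minimal sketch assuming draws at 0, 30, 60, 90, and 120 minutes. The rule it uses (flag the curve if insulin at 60 or 90 minutes is at least as high as at 30 minutes) is a hypothetical stand-in for clinical judgment, not a validated cutoff, and the function name is illustrative.

```python
def delayed_insulin_pattern(insulin: dict[int, float]) -> bool:
    """Flag an OGTT insulin curve that fails to come down after 30 minutes.

    `insulin` maps minutes after the glucose drink to insulin readings in
    consistent units. Hypothetical rule: flag if the 60- or 90-minute value
    is at least as high as the 30-minute value, i.e., insulin stayed
    elevated or kept rising instead of falling back.
    """
    for t in (30, 60, 90):
        if t not in insulin:
            raise ValueError(f"missing reading at {t} minutes")
    early = insulin[30]
    return insulin[60] >= early or insulin[90] >= early


# Illustrative curves only, not patient data.
falls_back = {0: 5, 30: 40, 60: 30, 90: 20, 120: 10}
stays_high = {0: 12, 30: 90, 60: 110, 90: 100, 120: 70}
print(delayed_insulin_pattern(falls_back), delayed_insulin_pattern(stays_high))
```

Note that glucose is deliberately ignored here: both curves could come with perfectly normal glucose readings, which is exactly why measuring insulin alongside glucose matters.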

Gerald Reaven, who died in 2018 at the age of eighty-nine, would have agreed. He had to fight for decades for insulin resistance to be recognized as a primary cause of type 2 diabetes, an idea that is now well accepted. Yet diabetes is only one danger: studies have found that insulin resistance itself is associated with huge increases in one’s risk of cancer (up to twelvefold), Alzheimer’s disease (fivefold), and death from cardiovascular disease (almost sixfold), all of which underscores why addressing, and ideally preventing, metabolic dysfunction is a cornerstone of my approach to longevity.

It seems at least plausible that my patient with the fatty liver had developed elevated insulin at some point, well before his surgery. But it is also extremely unlikely that Medicine 2.0 would have even considered treating him, which boggles my mind. If any other hormone got out of balance like this, such as thyroid hormone or even cortisol, doctors would act swiftly to rectify the situation. The latter might be a symptom of Cushing’s disease, while the former could be a sign of Graves’ disease or some other form of hyperthyroidism. Both of these endocrine (read: hormone) conditions require and receive treatment as soon as they are diagnosed. To do nothing would constitute malpractice. But with hyperinsulinemia, for some reason, we wait and do nothing. Only when type 2 diabetes has been diagnosed do we take any serious action. This is like waiting until Graves’ disease has caused exophthalmos, the signature bulging eyeballs in people with untreated hyperthyroidism, before stepping in with treatment.

It is beyond backwards that we do not treat hyperinsulinemia like a bona fide endocrine disorder of its own. I would argue that doing so might have a greater impact on human health and longevity than any other target of therapy. In the next three chapters, we will explore the three other major diseases of aging—cardiovascular disease, cancer, and neurodegenerative diseases—all of which are fueled in some way by metabolic dysfunction. It will hopefully become clear to you, as it is to me, that the logical first step in our quest to delay death is to get our metabolic house in order.

The good news is that we have tremendous agency over this. Changing how we exercise, what we eat, and how we sleep (see Part III) can completely turn the tables in our favor. The bad news is that these things require effort to escape the default modern environment that has conspired against our ancient (and formerly helpful) fat-storing genes, by overfeeding, undermoving, and undersleeping us all.