“You look at where you’re going and where you are and it never makes sense, but then you look back at where you’ve been and a pattern seems to emerge. And if you project forward from that pattern, then sometimes you can come up with something.”

Robert M. Pirsig

This chapter presents five patterns that address various types of concurrency architecture and design issues for components, subsystems, and applications: Active Object, Monitor Object, Half-Sync/Half-Async, Leader/Followers, and Thread-Specific Storage.

The choice of concurrency architecture has a significant impact on the design and performance of multi-threaded networking middleware and applications. No single concurrency architecture is suitable for all workload conditions and hardware and software platforms. The patterns in this chapter therefore collectively provide solutions to a variety of concurrency problems.

The first two patterns in this chapter specify designs for sharing resources among multiple threads or processes:

Both patterns can synchronize and schedule methods invoked concurrently on objects. The main difference is that an active object executes its methods in a different thread than its clients, whereas a monitor object executes its methods by borrowing the thread of its clients. As a result active objects can perform more sophisticated—albeit expensive—scheduling to determine the order in which their methods execute.

The next two patterns in this chapter define higher-level concurrency architectures:

Implementors of the Half-Sync/Half-Async and Leader/Followers patterns can use the Active Object and Monitor Object patterns to coordinate access to shared objects efficiently.

The final pattern in this chapter offers a different strategy for addressing certain inherent complexities of concurrency:

Implementations of all patterns in this chapter can use the patterns from Chapter 4, Synchronization Patterns, to protect critical regions from concurrent access.

Other patterns in the literature that address concurrency-related issues include Master-Slave [POSA1], Producer-Consumer [Grand98], Scheduler [Lea99a], and Two-phase Termination [Grand98].

The Active Object design pattern decouples method execution from method invocation to enhance concurrency and simplify synchronized access to objects that reside in their own threads of control.

Concurrent Object

Consider the design of a communication gateway,1 which decouples cooperating components and allows them to interact without having direct dependencies on each other. As shown below, the gateway may route messages from one or more supplier processes to one or more consumer processes in a distributed system.

The suppliers, consumers, and gateway communicate using TCP [Ste93], which is a connection-oriented protocol. The gateway may therefore encounter flow control from the TCP transport layer when it tries to send data to a remote consumer. TCP uses flow control to ensure that fast suppliers or gateways do not produce data more rapidly than slow consumers or congested networks can buffer and process the data. To improve end-to-end quality of service (QoS) for all suppliers and consumers, the entire gateway process must not block while waiting for flow control to abate over any one connection to a consumer. In addition the gateway must scale up efficiently as the number of suppliers and consumers increase.

An effective way to prevent blocking and improve performance is to introduce concurrency into the gateway design, for example by associating a different thread of control for each TCP connection. This design enables threads whose TCP connections are flow controlled to block without impeding the progress of threads whose connections are not flow controlled. We thus need to determine how to program the gateway threads and how these threads interact with supplier and consumer handlers.

Clients that access objects running in separate threads of control.

Many applications benefit from using concurrent objects to improve their quality of service, for example by allowing an application to handle multiple client requests simultaneously. Instead of using a single-threaded passive object, which executes its methods in the thread of control of the client that invoked the methods, a concurrent object resides in its own thread of control. If objects run concurrently, however, we must synchronize access to their methods and data if these objects are shared and modified by multiple client threads, in which case three forces arise:

For example, if one outgoing TCP connection in our gateway example is blocked due to flow control, the gateway process still should be able to run other threads that can queue new messages while waiting for flow control to abate. Similarly, if other outgoing TCP connections are not flow controlled, it should be possible for other threads in the gateway to send messages to their consumers independently of any blocked connections.

For example, if one outgoing TCP connection in our gateway example is blocked due to flow control, the gateway process still should be able to run other threads that can queue new messages while waiting for flow control to abate. Similarly, if other outgoing TCP connections are not flow controlled, it should be possible for other threads in the gateway to send messages to their consumers independently of any blocked connections.

Applications like our gateway can be hard to program if developers use low-level synchronization mechanisms, such as acquiring and releasing mutual exclusion (mutex) locks explicitly. Methods that are subject to synchronization constraints, such as enqueueing and dequeueing messages from TCP connections, should be serialized transparently when objects are accessed by multiple threads.

Applications like our gateway can be hard to program if developers use low-level synchronization mechanisms, such as acquiring and releasing mutual exclusion (mutex) locks explicitly. Methods that are subject to synchronization constraints, such as enqueueing and dequeueing messages from TCP connections, should be serialized transparently when objects are accessed by multiple threads.

In our gateway example, messages destined for different consumers should be sent concurrently by a gateway over different TCP connections. If the entire gateway is programmed to only run in a single thread of control, however, performance bottlenecks cannot be alleviated transparently by running the gateway on a multi-processor platform.

In our gateway example, messages destined for different consumers should be sent concurrently by a gateway over different TCP connections. If the entire gateway is programmed to only run in a single thread of control, however, performance bottlenecks cannot be alleviated transparently by running the gateway on a multi-processor platform.

For each object exposed to the forces above, decouple method invocation on the object from method execution. Method invocation should occur in the client’s thread of control, whereas method execution should occur in a separate thread. Moreover, design the decoupling so the client thread appears to invoke an ordinary method.

In detail: A proxy [POSA1] [GoF95] represents the interface of an active object and a servant [OMG98a] provides the active object’s implementation. Both the proxy and the servant run in separate threads so that method invocations and method executions can run concurrently. The proxy runs in the client thread, while the servant runs in a different thread.

At run-time the proxy transforms the client’s method invocations into method requests, which are stored in an activation list by a scheduler. The scheduler’s event loop runs continuously in the same thread as the servant, dequeueing method requests from the activation list and dispatching them on the servant. Clients can obtain the result of a method’s execution via a future returned by the proxy.

An active object consists of six components:

A proxy [POSA1] [GoF95] provides an interface that allows clients to invoke publicly-accessible methods on an active object. The use of a proxy permits applications to program using standard strongly-typed language features, rather than passing loosely-typed messages between threads. The proxy resides in the client’s thread.

When a client invokes a method defined by the proxy it triggers the construction of a method request object. A method request contains the context information, such as a method’s parameters, necessary to execute a specific method invocation and return any result to the client. A method request class defines an interface for executing the methods of an active object. This interface also contains guard methods that can be used to determine when a method request can be executed. For every public method offered by a proxy that requires synchronized access in the active object, the method request class is subclassed to create a concrete method request class.

A proxy inserts the concrete method request it creates into an activation list. This list maintains a bounded buffer of pending method requests created by the proxy and keeps track of which method requests can execute. The activation list decouples the client thread where the proxy resides from the thread where the servant method is executed, so the two threads can run concurrently. The internal state of the activation list must therefore be serialized to protect it from concurrent access.

A scheduler runs in a different thread than its client proxies, namely in the active object’s thread. It decides which method request to execute next on an active object. This scheduling decision is based on various criteria, such as ordering—the order in which methods are called on the active object—or certain properties of an active object, such as its state. A scheduler can evaluate these properties using the method requests’ guards, which determine when it is possible to execute the method request [Lea99a]. A scheduler uses an activation list to manage method requests that are pending execution. Method requests are inserted in an activation list by a proxy when clients invoke one of its methods.

A servant defines the behavior and state that is modeled as an active object. The methods a servant implements correspond to the interface of the proxy and method requests the proxy creates. It may also contain other predicate methods that method requests can use to implement their guards. A servant method is invoked when its associated method request is executed by a scheduler. Thus, it executes in its scheduler’s thread.

When a client invokes a method on a proxy it receives a future [Hal85] [LS88]. This future allows the client to obtain the result of the method invocation after the servant finishes executing the method. Each future reserves space for the invoked method to store its result. When a client wants to obtain this result, it can rendezvous with the future, either blocking or polling until the result is computed and stored into the future.

The class diagram for the Active Object pattern is shown below:

The behavior of the Active Object pattern can be divided into three phases:

Five activities show how to implement the Active Object pattern.

For each remote consumer in our gateway example there is a consumer handler containing a TCP connection to a consumer process running on a remote machine. Each consumer handler contains a message queue modeled as an active object and implemented with an MQ_Servant. This active object stores messages passed from suppliers to the gateway while they are waiting to be sent to the remote consumer.2 The following C++ class illustrates the MQ_Servant class:

For each remote consumer in our gateway example there is a consumer handler containing a TCP connection to a consumer process running on a remote machine. Each consumer handler contains a message queue modeled as an active object and implemented with an MQ_Servant. This active object stores messages passed from suppliers to the gateway while they are waiting to be sent to the remote consumer.2 The following C++ class illustrates the MQ_Servant class:

class MQ_Servant {

public:

// Constructor and destructor.

MQ_Servant (size_t mq_size);

~MQ_Servant ();

// Message queue implementation operations.

void put (const Message &msg);

Message get ();

// Predicates.

bool empty () const;

bool full () const;

private:

// Internal queue representation, e.g., a circular

// array or a linked list, that does not use any

// internal synchronization mechanism.

};

The put() and get() methods implement the message insertion and removal operations on the queue, respectively. The servant defines two predicates, empty() and full(), that distinguish three internal states: empty, full, and neither empty nor full. These predicates are used to determine when put() and get() methods can be called on the servant.

In general, the synchronization mechanisms that protect a servant’s critical sections from concurrent access should not be tightly coupled with the servant, which should just implement application functionality. Instead, the synchronization mechanisms should be associated with the method requests. This design avoids the inheritance anomaly problem [MWY91], which inhibits the reuse of servant implementations if subclasses require different synchronization policies than base classes. Thus, a change to the synchronization constraints of the active object need not affect its servant implementation.

The MQ_Servant class is designed to omit synchronization mechanisms from a servant. The method implementations in the MQ_Servant class, which are omitted for brevity, therefore need not contain any synchronization mechanisms.

The MQ_Servant class is designed to omit synchronization mechanisms from a servant. The method implementations in the MQ_Servant class, which are omitted for brevity, therefore need not contain any synchronization mechanisms.

In our gateway the MQ_Proxy provides the following interface to the MQ_Servant defined in implementation activity 1 (375):

In our gateway the MQ_Proxy provides the following interface to the MQ_Servant defined in implementation activity 1 (375):

class MQ_Proxy {

public:

// Bound the message queue size.

enum { MQ_MAX_SIZE = /* … */ };

MQ_Proxy (size_t size = MQ_MAX_SIZE):

scheduler_ (size), servant_ (size) { }

// Schedule <put> to execute on the active object.

void put (const Message &msg) {

Method_Request *mr = new Put (servant_, msg);

scheduler_.insert (mr);

}

// Return a <Message_Future> as the “future” result of

// an asynchronous <get> method on the active object.

Message_Future get () {

Message_Future result;

Method_Request *mr = new Get (servant_, result);

scheduler_.insert (mr);

return result;

}

// empty() and full() predicate implementations …

private:

// The servant that implements the active object

// methods and a scheduler for the message queue.

MQ_Servant servant_;

MQ_Scheduler scheduler_;

};

The MP_Proxy is a factory [GoF95] that constructs instances of method requests and passes them to a scheduler, which queues them for subsequent execution in a separate thread.

Multiple client threads in a process can share the same proxy. A proxy method need not be serialized because it does not change state after it is created. Its scheduler and activation list are responsible for any necessary internal serialization.

Our gateway example contains many supplier handlers that receive and route messages to peers via many consumer handlers. Several supplier handlers can invoke methods using the proxy that belongs to a single consumer handler without the need for any explicit synchronization.

Our gateway example contains many supplier handlers that receive and route messages to peers via many consumer handlers. Several supplier handlers can invoke methods using the proxy that belongs to a single consumer handler without the need for any explicit synchronization.

The methods in a method request class must be defined by subclasses. There should be one concrete method request class for each method defined in the proxy. The can_run() method is often implemented with the help of the servant’s predicates.

In our gateway example a Method_Request base class defines two virtual hook methods, which we call can_run() and call():

In our gateway example a Method_Request base class defines two virtual hook methods, which we call can_run() and call():

class Method_Request {

public:

// Evaluate the synchronization constraint.

virtual bool can_run () const = 0

// Execute the method.

virtual void call () = 0;

};

We then define two subclasses of Method_Request: class Put corresponds to the put() method call on a proxy and class Get corresponds to the get() method call. Both classes contain a pointer to the MQ_Servant. The Get class can be implemented as follows:

class Get : public Method_Request {

public:

Get (MQ_Servant *rep, const Message_Future &f)

: servant_ (rep), result_ (f) { }

virtual bool can_run () const {

// Synchronization constraint: cannot call a

// <get> method until queue is not empty.

return !servant_->empty ();

}

virtual void call () {

// Bind dequeued message to the future result.

result_ = servant_->get ();

}

private:

MQ_Servant *servant_;

Message_Future result_;

};

Note how the can_run() method uses the MQ_Servant’s empty() predicate to allow a scheduler to determine when the Get method request can execute. When the method request does execute, the active object’s scheduler invokes its call() hook method. This call() hook uses the Get method request’s run-time binding to MQ_Servant to invoke the servant’s get() method, which is executed in the context of that servant. It does not require any explicit serialization mechanisms, however, because the active object’s scheduler enforces all the necessary synchronization constraints via the method request can_run() methods.

The proxy passes a future to the constructors of the corresponding method request classes for each of its public two-way methods in the proxy that returns a value, such as the get() method in our gateway example. This future is returned to the client thread that calls the method, as discussed in implementation activity 5 (384).

The activation list is often designed using concurrency control patterns, such as Monitor Object (399), that use common synchronization mechanisms like condition variables and mutexes [Ste98]. When these are used in conjunction with a timer mechanism, a scheduler thread can determine how long to wait for certain operations to complete. For example, timed waits can be used to bound the time spent trying to remove a method request from an empty activation list or to insert into a full activation list.3 If the timeout expires, control returns to the calling thread and the method request is not executed.

For our gateway example we specify a class Activation_List as follows:

For our gateway example we specify a class Activation_List as follows:

class Activation_List {

public:

// Block for an “infinite” amount of time waiting

// for <insert> and <remove> methods to complete.

enum { INFINITE = -1 };

// Define a “trait”.

typedef Activation_List_Iterator iterator;

// Constructor creates the list with the specified

// high water mark that determines its capacity.

Activation_List (size_t high_water_mark);

// Insert <method_request> into the list, waiting up

// to <timeout> amount of time for space to become

// available in the queue. Throws the <System_Ex>

// exception if <timeout> expires.

void insert (Method_Request *method_request,

Time_Value *timeout = 0);

// Remove <method_request> from the list, waiting up

// to <timeout> amount of time for a <method_request>

// to be inserted into the list. Throws the

// <System_Ex> exception if <timeout> expires.

void remove (Method_Request *&method_request,

Time_Value *timeout = 0);

private:

// Synchronization mechanisms, e.g., condition

// variables and mutexes, and the queue implemen-

// tation, e.g., an array or a linked list, go here.

};

The insert() and remove() methods provide a ‘bounded-buffer’ producer/consumer [Grand98] synchronization model. This design allows a scheduler thread and multiple client threads to remove and insert Method_Requests simultaneously without corrupting the internal state of an Activation_List. Client threads play the role of producers and insert Method_Requests via a proxy. A scheduler thread plays the role of a consumer. It removes Method_Requests from the Activation_List when their guards evaluate to ‘true’. It then invokes their call() hooks to execute servant methods.

We define the following MQ_Scheduler class for our gateway:

We define the following MQ_Scheduler class for our gateway:

class MQ_Scheduler {

public:

// Initialize the <Activation_List> to have

// the specified capacity and make <MQ_Scheduler>

// run in its own thread of control.

MQ_Scheduler (size_t high_water_mark);

// … Other constructors/destructors, etc.

// Put <Method_Request> into <Activation_List>. This

// method runs in the thread of its client, i.e.

// in the proxy’s thread.

void insert (Method_Request *mr) {

act_list_.insert (mr);

}

// Dispatch the method requests on their servant

// in its scheduler’s thread of control.

virtual void dispatch ();

private:

// List of pending Method_Requests.

Activation_List act_list_;

// Entry point into the new thread.

static void *svc_run (void *arg);

};

A scheduler executes its dispatch() method in a different thread of control than its client threads. Each client thread uses a proxy to insert method requests in an active object scheduler’s activation list. This scheduler monitors the activation list in its own thread, selecting a method request whose guard evaluates to ‘true,’ that is, whose synchronization constraints are met. This method request is then removed from the activation list and executed by invoking its call() hook method.

In our gateway example the constructor of MQ_Scheduler initializes the Activation_List and uses the Thread_Manager wrapper facade (47) to spawn a new thread of control:

In our gateway example the constructor of MQ_Scheduler initializes the Activation_List and uses the Thread_Manager wrapper facade (47) to spawn a new thread of control:

MQ_Scheduler::MQ_Scheduler (size_t high_water_mark):

act_queue_ (high_water_mark) {

// Spawn separate thread to dispatch method requests.

Thread_Manager::instance ()->spawn (&svc_run, this);

}

The Thread_Manager::spawn() method is passed a pointer to a static MQ_Scheduler::svc_run() method and a pointer to the MQ_Scheduler object. The svc_run() static method is the entry point into a newly created thread of control, which runs the svc_run() method. This method is simply an adapter [GoF95] that calls the MQ_Scheduler::dispatch() method on the this parameter:

void *MQ_Scheduler::svc_run (void *args) {

MQ_Scheduler *this_obj =

static_cast<MQ_Scheduler *> (args);

this_obj->dispatch ();

}

The dispatch() method determines the order in which Put and Get method requests are processed based on the underlying MQ_Servant predicates empty() and full(). These predicates reflect the state of the servant, such as whether the message queue is empty, full, or neither.

By evaluating these predicate constraints via the method request can_run() methods, a scheduler can ensure fair access to the MQ_Servant:

virtual void MQ_Scheduler::dispatch () {

// Iterate continuously in a separate thread.

for (;;) {

Activation_List::iterator request;

// The iterator’s <begin> method blocks

// when the <Activation_List> is empty.

for (request = act_list_.begin ();

request != act_list_.end ();

++request) {

// Select a method request whose

// guard evaluates to true.

if ((*request).can_run ()) {

// Take <request> off the list.

act_list_.remove (*request);

(*request).call ();

delete *request;

}

// Other scheduling activities can go here,

// e.g., to handle when no <Method_Request>s

// in the <Activation_List> have <can_run>

// methods that evaluate to true.

}

}

}

In our example the MQ_Scheduler::dispatch() implementation iterates continuously, executing the next method request whose can_run() method evaluates to true. Scheduler implementations can be more sophisticated, however, and may contain variables that represent the servant’s synchronization state.

For example, to implement a multiple-readers/single-writer synchronization policy a prospective writer will call ‘write’ on the proxy, passing the data to write. Similarly, readers will call ‘read’ and obtain a future as their return value. The active object’s scheduler maintains several counter variables that keep track of the synchronization state, such as the number of read and write requests. The scheduler also maintains knowledge about the identity of the prospective writers.

The active object’s scheduler can use these synchronization state counters to determine when a single writer can proceed, that is, when the current number of readers is zero and no write request from a different writer is currently pending execution. When such a write request arrives, a scheduler may choose to dispatch the writer to ensure fairness. In contrast, when read requests arrive and the servant can satisfy them because it is not empty, its scheduler can block all writing activity and dispatch read requests first.

The synchronization state counter variable values described above are independent of the servant’s state because they are only used by its scheduler to enforce the correct synchronization policy on behalf of the servant. The servant focuses solely on its task to temporarily store client-specific application data. In contrast, its scheduler focuses on coordinating multiple readers and writers. This design enhances modularity and reusability.

A scheduler can support multiple synchronization policies by using the Strategy pattern [GoF95]. Each synchronization policy is encapsulated in a separate strategy class. The scheduler, which plays the context role in the Strategy pattern, is then configured with a particular synchronization strategy it uses to execute all subsequent scheduling decisions.

The future construct allows two-way asynchronous invocations [ARSK00] that return a value to the client. When a servant completes the method execution, it acquires a write lock on the future and updates the future with its result. Any client threads that are blocked waiting for the result are awakened and can access the result concurrently. A future can be garbage-collected after the writer and all readers threads no longer reference it. In languages like C++, which do not support garbage collection, futures can be reclaimed when they are no longer in use via idioms like Counted Pointer [POSA1].

In our gateway example the get() method invoked on the MQ_Proxy ultimately results in the Get::call() method being dispatched by the MQ_Scheduler, as shown in implementation activity 2 (378). The MQ_Proxy::get() method returns a value, therefore a Message_Future is returned to the client that calls it:

In our gateway example the get() method invoked on the MQ_Proxy ultimately results in the Get::call() method being dispatched by the MQ_Scheduler, as shown in implementation activity 2 (378). The MQ_Proxy::get() method returns a value, therefore a Message_Future is returned to the client that calls it:

class Message_Future {

public:

// Binds <this> and <pre> to the same <Msg._Future_Imp.>

Message_Future (const Message_Future &f);

// Initializes <Message_Future_Implementation> to

// point to <message> m immediately.

Message_Future (const Message &message);

// Creates a <Msg._Future_Imp.>

Message_Future ();

// Binds <this> and <pre> to the same

// <Msg._Future_Imp.>, which is created if necessary.

void operator= (const Message_Future &f);

// Block upto <timeout> time waiting to obtain result

// of an asynchronous method invocation. Throws

// <System_Ex> exception if <timeout> expires.

Message result (Time_Value *timeout = 0) const;

private:

// <Message_Future_Implementation> uses the Counted

// Pointer idiom.

Message_Future_Implementation *future_impl_;

};

The Message_Future is implemented using the Counted Pointer idiom [POSA1]. This idiom simplifies memory management for dynamically allocated C++ objects by using a reference counted Message_Future_Implementation body that is accessed solely through the Message_Future handle.

In general a client may choose to evaluate the result value from a future immediately, in which case the client blocks until the scheduler executes the method request. Conversely, the evaluation of a return result from a method invocation on an active object can be deferred. In this case the client thread and the thread executing the method can both proceed asynchronously.

In our gateway example a consumer handler running in a separate thread may choose to block until new messages arrive from suppliers:

In our gateway example a consumer handler running in a separate thread may choose to block until new messages arrive from suppliers:

MQ_Proxy message_queue; // Obtain future and block thread until message arrives. Message_Future future = message_queue.get (); Message msg = future.result (); // Transmit message to the consumer. send (msg);

Conversely, if messages are not available immediately, a consumer handler can store the Message_Future return value from message_queue and perform other ‘book-keeping’ tasks, such as exchanging keep-alive messages to ensure its consumer is still active. When the consumer handler is finished with these tasks, it can block until a message arrives from suppliers:

// Obtain a future (does not block the client). Message_Future future = message_queue.get (); // Do something else here… // Evaluate future and block if result is not available. Message msg = future.result (); send (msg);

In our gateway example, the gateway’s supplier and consumer handlers are local proxies [POSA1] [GoF95] for remote suppliers and consumers, respectively. Supplier handlers receive messages from remote suppliers and inspect address fields in the messages. The address is used as a key into a routing table that identifies which remote consumer will receive the message.

The routing table maintains a map of consumer handlers, each of which is responsible for delivering messages to its remote consumer over a separate TCP connection. To handle flow control over various TCP connections, each consumer handler contains a message queue implemented using the Active Object pattern. This design decouples supplier and consumer handlers so that they can run concurrently and block independently.

The Consumer_Handler class is defined as follows:

class Consumer_Handler {

public:

// Constructor spawns the active object’s thread.

Consumer_Handler ();

// Put the message into the queue.

void put (const Message &msg) { msg_q_.put (msg); }

private:

MQ_Proxy msg_q_; // Proxy to the Active Object.

SOCK_Stream connection_; // Connection to consumer.

// Entry point into the new thread.

static void *svc_run (void *arg);

};

Supplier_Handlers running in their own threads can put messages in the appropriate Consumer_Handler’s message queue active object:

void Supplier_Handler::route_message (const Message &msg)

{

// Locate the appropriate consumer based on the

// address information in <Message>.

Consumer_Handler *consumer_handler =

routing_table_.find (msg.address ());

// Put the Message into the Consumer Handler’s queue.

consumer_handler->put (msg);

}

To process the messages inserted into its queue, each Consumer_Handler uses the Thread_Manager wrapper facade (47) to spawn a separate thread of control in its constructor:

Consumer_Handler::Consumer_Handler () {

// Spawn a separate thread to get messages from the

// message queue and send them to the consumer.

Thread_Manager::instance ()->spawn (&svc_run, this);

// …

}

This new thread executes the svc_run() method entry point, which gets the messages placed into the queue by supplier handler threads, and sends them to the consumer over the TCP connection:

void *Consumer_Handler::svc_run (void *args) {

Consumer_Handler *this_obj =

static_cast<Consumer_Handler *> (args);

for (;;) {

// Block thread until a <Message> is available.

Message msg = this_obj->msg_q_.get ().result ();

// Transmit <Message> to the consumer over the

// TCP connection.

this_obj->connection_.send (msg, msg.length ());

}

}

Every Consumer_Handler object uses the message queue that is implemented as an active object and runs in its own thread. Therefore its send() operation can block without affecting the quality of service of other Consumer_Handler objects.

Multiple Roles. If an active object implements multiple roles, each used by particular types of client, a separate proxy can be introduced for each role. By using the Extension Interface pattern (141), clients can obtain the proxies they need. This design helps separate concerns because a client only sees the particular methods of an active object it needs for its own operation, which further simplifies an active object’s evolution. For example, new services can be added to the active object by providing new extension interface proxies without changing existing ones. Clients that do not need access to the new services are unaffected by the extension and need not even be recompiled.

Integrated Scheduler. To reduce the number of components needed to implement the Active Object pattern, the roles of the proxy and servant can be integrated into its scheduler component. Likewise, the transformation of a method call on a proxy into a method request can also be integrated into the scheduler. However, servants still execute in a different thread than proxies.

Here is an implementation of the message queue using an integrated scheduler:

Here is an implementation of the message queue using an integrated scheduler:

class MQ_Scheduler {

public:

MQ_Scheduler (size_t size)

: servant_ (size), act_list_ (size) { }

// … other constructors/destructors, etc.

void put (const Message m) {

Method_Request *mr = new Put (&servant_, m);

act_list_.insert (mr);

}

Message_Future get () {

Message_Future result;

Method_Request *mr = new Get (&servant_, result);

act_list_.insert (mr);

return result;

}

// Other methods …

private:

MQ_Servant servant_;

Activation_List act_list_;

// …

};

By centralizing the point at which method requests are generated, the Active Object pattern implementation can be simplified because it has fewer components. The drawback, of course, is that a scheduler must know the type of the servant and proxy, which makes it hard to reuse the same scheduler for different types of active objects.

Message Passing. A further refinement of the integrated scheduler variant is to remove the proxy and servant altogether and use direct message passing between the client thread and the active object’s scheduler thread.

For example, consider the following scheduler implementation:

For example, consider the following scheduler implementation:

class Scheduler {

public:

Scheduler (size_t size): act_list_ (size) { }

// … other constructors/destructors, etc.

void insert (Message_Request *message_request) {

act_list_.insert (message_request);

}

virtual void dispatch () {

for (;;) {

Message_Request *mr;

// Block waiting for next request to arrive.

act_list_.remove (mr);

// Process the message request <mr>…

}

}

// …

private:

Activation_List act_list_;

// …

};

In this variant, there is no proxy, so clients create an appropriate type of message request directly and call insert() themselves, which enqueues the request into the activation list. Likewise, there is no servant, so the dispatch() method running in a scheduler’s thread simply dequeues the next message request and processes the request according to its type.

In general it is easier to develop a message-passing mechanism than it is to develop an active object because there are fewer components. Message passing can be more tedious and error-prone, however, because application developers, not active object developers, must program the proxy and servant logic. As a result, message passing implementations are less type-safe than active object implementations because their interfaces are implicit rather than explicit. In addition, it is harder for application developers to distribute clients and servers via message passing because there is no proxy to encapsulate the marshaling and demarshaling of data.

Polymorphic Futures [LK95]. A polymorphic future allows parameterization of the eventual result type represented by the future and enforces the necessary synchronization. In particular, a polymorphic future describes a typed future that client threads can use to retrieve a method request’s result. Whether a client blocks on a future depends on whether or not a result has been computed.

The following class is a polymorphic future template for C++:

The following class is a polymorphic future template for C++:

template <class TYPE>

class Future {

// This class can be used to return results from

// two-way asynchronous method invocations.

public:

// Constructor and copy constructor that binds <this>

// and <r> to the same <Future> representation.

Future ();

Future (const Future<TYPE> &r);

// Destructor.

~Future ();

// Assignment operator that binds <this> and <r> to

// the same <Future> representation.

void operator = (const Future<TYPE> &r);

// Cancel a <Future> and reinitialize it.

void cancel ();

// Block upto <timeout> time waiting to obtain result

// of an asynchronous method invocation. Throws

// <System_Ex> exception if <timeout> expires.

TYPE result (Time_Value *timeout = 0) const;

private:

// …

};

A client can use a polymorphic future as follows:

try {

// Obtain a future (does not block the client).

Future<Message> future = message_queue.get ();

// Do something else here…

// Evaluate future and block for up to 1 second

// waiting for the result to become available.

Time_Value timeout (1);

Message msg = future.result (&timeout);

// Do something with the result …

} catch (System_Ex &ex) {

if (ex.status () == ETIMEDOUT) /* handle timeout */

}

Timed method invocations. The activation list illustrated in implementation activity 3 (379) defines a mechanism that can bound the amount of time a scheduler waits to insert or remove a method request. Although the examples we showed earlier in the pattern do not use this feature, many applications can benefit from timed method invocations. To implement this feature we can simply export the timeout mechanism via schedulers and proxies.

In our gateway example, the MQ_Proxy can be modified so that its methods allow clients to bound the amount of time they are willing to wait to execute:

In our gateway example, the MQ_Proxy can be modified so that its methods allow clients to bound the amount of time they are willing to wait to execute:

class MQ_Proxy {

public:

// Schedule <put> to execute, but do not block longer

// than <timeout> time. Throws <System_Ex>

// exception if <timeout> expires.

void put (const Message &msg,

Time_Value *timeout = 0);

// Return a <Message_Future> as the “future” result of

// an asynchronous <get> method on the active object,

// but do not block longer than <timeout> amount of

// time. Throws the <System_Ex> exception if

// <timeout> expires.

Message_Future get (Time_Value *timeout = 0);

};

If timeout is 0 both get() and put() will block indefinitely until Message is either removed from or inserted into the scheduler’s activation list, respectively. If timeout expires, the System_Ex exception defined in the Wrapper Facade pattern (47) is thrown with a status() value of ETIMEDOUT and the client must catch it.

To complete our support for timed method invocations, we also must add timeout support to the MQ_Scheduler:

class MQ_Scheduler {

public:

// Insert a method request into the <Activation_List>

// This method runs in the thread of its client, i.e.

// in the proxy’s thread, but does not block longer

// than <timeout> amount of time. Throws the

// <System_Ex> exception if the <timeout> expires.

void insert (Method_Request *method_request,

Time_Value *timeout) {

act_list_.insert (method_request, timeout);

}

}

Distributed Active Object. In this variant a distribution boundary exists between a proxy and a scheduler, rather than just a threading boundary. This pattern variant introduces two new participants:

The Distributed Active Object pattern variant is therefore similar to the Broker pattern [POSA1]. The primary difference is that a Broker usually coordinates the processing of many objects, whereas a distributed active object just handles a single object.

Thread Pool Active Object. This generalization of the Active Object pattern supports multiple servant threads per active object to increase throughput and responsiveness. When not processing requests, each servant thread in a thread pool active object blocks on a single activation list. The active object scheduler assigns a new method request to an available servant thread in the pool as soon as one is ready to be executed.

A single servant implementation is shared by all the servant threads in the pool. This design cannot therefore be used if the servant methods do not protect their internal state via some type of synchronization mechanism, such as a mutex.

Additional variants of active objects can be found in [Lea99a], Chapter 5: Concurrency Control and Chapter 6: Services in Threads.

ACE Framework [Sch97]. Reusable implementations of the method request, activation list, and future components in the Active Object pattern are provided in the ACE framework. The corresponding classes in ACE are called ACE_Method_Request, ACE_Activation_Queue, and ACE_Future. These components have been used to implement many production concurrent and networked systems [Sch96].

Siemens MedCom. The Active Object pattern is used in the Siemens MedCom framework, which provides a black-box component-based framework for electronic medical imaging systems. MedCom employs the Active Object pattern in conjunction with the Command Processor pattern [POSA1] to simplify client windowing applications that access patient information on various medical servers [JWS98].

Siemens FlexRouting - Automatic Call Distribution [Flex98]. This call center management system uses the Thread Pool variant of the Active Object pattern. Services that a call center offers are implemented as applications of their own. For example, there may be a hot-line application, an ordering application, and a product information application, depending on the types of service offered. These applications support operator personnel that serve various customer requests. Each instance of these applications is a separate servant component. A ‘FlexRouter’ component, which corresponds to the scheduler, dispatches incoming customer requests automatically to operator applications that can service these requests.

Java JDK 1.3 introduced a mechanism for executing timer-based tasks concurrently in the classes java.util.Timer and java.util.TimerTask. Whenever the scheduled execution time of a task occurs it is executed. Specifically, Timer offers different scheduling functions to clients that allow them to specify when and how often a task should be executed. One-shot tasks are straightforward and recurring tasks can be scheduled at periodic intervals. The scheduling calls are executed in the client’s thread, while the tasks themselves are executed in a thread owned by the Timer object. A Timer internal task queue is protected by locks because the two threads outlined above operate on it concurrently.

The task queue is implemented as a priority queue so that the next TimerTask to expire can be identified efficiently. The timer thread simply waits until this expiration. There are no explicit guard methods and predicates because determining when a task is ‘ready for execution’ simply depends on the arrival of the scheduled time.

Tasks are implemented as subclasses of TimerTask that override its run() hook method. The TimerTask subclasses unify the concepts behind method requests and servants by offering just one class and one interface method via TimerTask.run().

The scheme described above simplifies the Active Object machinery for the purpose of timed execution. There is no proxy and clients call the scheduler—the Timer object—directly. Clients do not invoke an ordinary method and therefore the concurrency is not transparent. Moreover, there are no return value or future objects linked to the run() method. An application can employ several active objects by constructing several Timer objects, each with its own thread and task queue.

Chef in a restaurant. A real-life example of the Active Object pattern is found in restaurants. Waiters and waitresses drop off customer food requests with the chef and continue to service requests from other customers asynchronously while the food is being prepared. The chef keeps track of the customer food requests via some type of worklist. However, the chef may cook the food requests in a different order than they arrived to use available resources, such as stove tops, pots, or pans, most efficiently. When the food is cooked, the chef places the results on top of a counter along with the original request so the waiters and waitresses can rendezvous to pick up the food and serve their customers.

The Active Object pattern provides the following benefits:

Enhances application concurrency and simplifies synchronization complexity. Concurrency is enhanced by allowing client threads and asynchronous method executions to run simultaneously. Synchronization complexity is simplified by using a scheduler that evaluates synchronization constraints to guarantee serialized access to servants, in accordance with their state.

Transparently leverages available parallelism. If the hardware and software platforms support multiple CPUs efficiently, this pattern can allow multiple active objects to execute in parallel, subject only to their synchronization constraints.

Method execution order can differ from method invocation order. Methods invoked asynchronously are executed according to the synchronization constraints defined by their guards and by scheduling policies. Thus, the order of method execution can differ from the order of method invocation order. This decoupling can help improve application performance and flexibility.

However, the Active Object pattern encounters several liabilities:

Performance overhead. Depending on how an active object’s scheduler is implemented—for example in user-space versus kernel-space [SchSu95]—context switching, synchronization, and data movement overhead may occur when scheduling and executing active object method invocations. In general the Active Object pattern is most applicable for relatively coarse-grained objects. In contrast, if the objects are fine-grained, the performance overhead of active objects can be excessive, compared with related concurrency patterns, such as Monitor Object (399).

Complicated debugging. It is hard to debug programs that use the Active Object pattern due to the concurrency and non-determinism of the various active object schedulers and the underlying operating system thread scheduler. In particular, method request guards determine the order of execution. However, the behavior of these guards may be hard to understand and debug. Improperly defined guards can cause starvation, which is a condition where certain method requests never execute. In addition, program debuggers may not support multi-threaded applications adequately.

The Monitor Object pattern (399) ensures that only one method at a time executes within a thread-safe passive object, regardless of the number of threads that invoke the object’s methods concurrently. In general, monitor objects are more efficient than active objects because they incur less context switching and data movement overhead. However, it is harder to add a distribution boundary between client and server threads using the Monitor Object pattern.

It is instructive to compare the Active Object pattern solution in the Example Resolved section with the solution presented in the Monitor Object pattern. Both solutions have similar overall application architectures. In particular, the Supplier_Handler and Consumer_Handler implementations are almost identical.

The primary difference is that the Message_Queue in the Active Object pattern supports sophisticated method request queueing and scheduling strategies. Similarly, because active objects execute in different threads than their clients, there are situations where active objects can improve overall application concurrency by executing multiple operations asynchronously. When these operations complete, clients can obtain their results via futures [Hal85] [LS88].

On the other hand, the Message_Queue itself is easier to program and often more efficient when implemented using the Monitor Object pattern than the Active Object pattern.

The Reactor pattern (179) is responsible for demultiplexing and dispatching multiple event handlers that are triggered when it is possible to initiate an operation without blocking. This pattern is often used in lieu of the Active Object pattern to schedule callback operations to passive objects. Active Object also can be used in conjunction with the Reactor pattern to form the Half-Sync/Half-Async pattern (423).

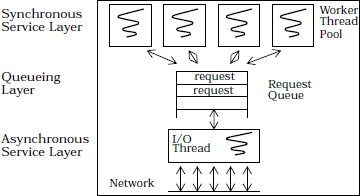

The Half-Sync/Half-Async pattern (423) decouples synchronous I/O from asynchronous I/O in a system to simplify concurrent programming effort without degrading execution efficiency. Variants of this pattern use the Active Object pattern to implement its synchronous task layer, the Reactor pattern (179) to implement the asynchronous task layer, and a Producer-Consumer pattern [Lea99a], such as a variant of the Pipes and Filters pattern [POSA1] or the Monitor Object pattern (399), to implement the queueing layer.

The Command Processor pattern [POSA1] separates issuing requests from their execution. A command processor, which corresponds to the Active Object pattern’s scheduler, maintains pending service requests that are implemented as commands [GoF95]. Commands are executed on suppliers, which correspond to servants. The Command Processor pattern does not focus on concurrency, however. In fact, clients, the command processor, and suppliers often reside in the same thread of control. Likewise, there are no proxies that represent the servants to clients. Clients create commands and pass them directly to the command processor.

The Broker pattern [POSA1] defines many of the same components as the Active Object pattern. In particular, clients access brokers via proxies and servers implement remote objects via servants. One difference between Broker and Active Object is that there is a distribution boundary between proxies and servants in the Broker pattern, as opposed to a threading boundary between proxies and servants in the Active Object pattern. Another difference is that active objects typically have just one servant, whereas a broker can have many servants.

The genesis for documenting Active Object as a pattern originated with Greg Lavender [PLoPD2]. Ward Cunningham helped shape this version of the Active Object pattern. Bob Laferriere and Rainer Blome provided useful suggestions that improved the clarity of the pattern’s Implementation section. Thanks to Doug Lea for providing many additional insights in [Lea99a].

The Monitor Object design pattern synchronizes concurrent method execution to ensure that only one method at a time runs within an object. It also allows an object’s methods to cooperatively schedule their execution sequences.

Thread-safe Passive Object

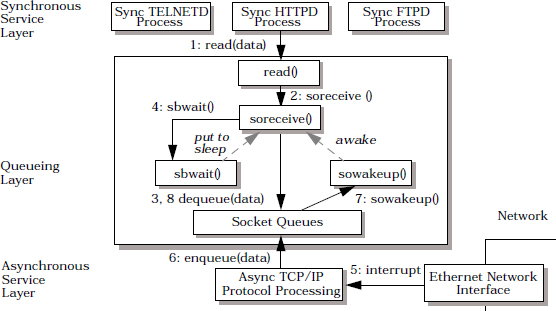

Let us reconsider the design of the communication gateway described in the Active Object pattern (369).4

The gateway process is a mediator [GoF95] that contains multiple supplier and consumer handler objects. These objects run in separate threads and route messages from one or more remote suppliers to one or more remote consumers. When a supplier handler thread receives a message from a remote supplier, it uses an address field in the message to determine the corresponding consumer handler. The handler’s thread then delivers the message to its remote consumer.

When suppliers and consumers reside on separate hosts, the gateway uses a connection-oriented protocol, such as TCP [Ste93], to provide reliable message delivery and end-to-end flow control. Flow control is a protocol mechanism that blocks senders when they produce messages more rapidly than receivers can process them. The entire gateway should not block while waiting for flow control to abate on outgoing TCP connections, however. In particular, incoming TCP connections should continue to be processed and messages should continue to be sent over any non-flow-controlled TCP connections.

To minimize blocking, each consumer handler can contain a thread-safe message queue. Each queue buffers new routing messages it receives from its supplier handler threads. This design decouples supplier handler threads in the gateway process from consumer handler threads, so that all threads can run concurrently and block independently when flow control occurs on various TCP connections.

One way to implement a thread-safe message queue is to apply the Active Object pattern (369) to decouple the thread used to invoke a method from the thread used to execute the method. Active Object may be inappropriate, however, if the entire infrastructure introduced by this pattern is unnecessary. For example, a message queue’s enqueue and dequeue methods may not require sophisticated scheduling strategies. In this case, implementing the Active Object pattern’s method request, scheduler and activation list participants incurs unnecessary performance overhead, and programming effort.

Instead, the implementation of the thread-safe message queue must be efficient to avoid degrading performance unnecessarily. To avoid tight coupling of supplier and consumer handler implementations, the mechanism should also be transparent to implementors of supplier handlers. Varying either implementation independently would otherwise become prohibitively complex.

Multiple threads of control accessing the same object concurrently.

Many applications contain objects whose methods are invoked concurrently by multiple client threads. These methods often modify the state of their objects. For such concurrent applications to execute correctly, therefore, it is necessary to synchronize and schedule access to the objects.

In the presence of this problem four forces must be addressed:

Synchronize the access to an object’s methods so that only one method can execute at any one time.

In detail: for each object accessed concurrently by multiple client threads, define it as a monitor object. Clients can access the functions defined by a monitor object only through its synchronized methods. To prevent race conditions on its internal state, only one synchronized method at a time can run within a monitor object. To serialize concurrent access to an object’s state, each monitor object contains a monitor lock. Synchronized methods can determine the circumstances under which they suspend and resume their execution, based on one or more monitor conditions associated with a monitor object.

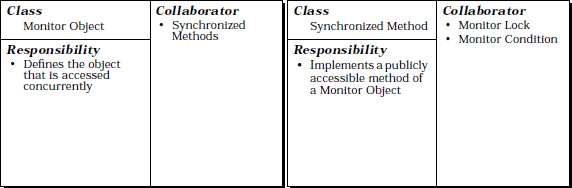

There are four participants in the Monitor Object pattern:

A monitor object exports one or more methods. To protect the internal state of the monitor object from uncontrolled changes and race conditions, all clients must access the monitor object only through these methods. Each method executes in the thread of the client that invokes it, because a monitor object does not have its own thread of control.5

Synchronized methods implement the thread-safe functions exported by a monitor object. To prevent race conditions, only one synchronized method can execute within a monitor object at any one time. This rule applies regardless of the number of threads that invoke the object’s synchronized methods concurrently, or the number of synchronized methods in the object’s class.

A consumer handler’s message queue in the gateway application can be implemented as a monitor object by converting its put() and get() operations into synchronized methods. This design ensures that routing messages can be inserted and removed concurrently by multiple threads without corrupting the queue’s internal state.

A consumer handler’s message queue in the gateway application can be implemented as a monitor object by converting its put() and get() operations into synchronized methods. This design ensures that routing messages can be inserted and removed concurrently by multiple threads without corrupting the queue’s internal state.

Each monitor object contains its own monitor lock. Synchronized methods use this lock to serialize method invocations on a per-object basis. Each synchronized method must acquire and release an object’s monitor lock when entering or exiting the object. This protocol ensures the monitor lock is held whenever a synchronized method performs operations that access or modify the state of its object.

Monitor condition. Multiple synchronized methods running in separate threads can schedule their execution sequences cooperatively by waiting for and notifying each other via monitor conditions associated with their monitor object. Synchronized methods use their monitor lock in conjunction with their monitor condition(s) to determine the circumstances under which they should suspend or resume their processing.

In the gateway application a POSIX mutex [IEEE96] can be used to implement the message queue’s monitor lock. A pair of POSIX condition variables can be used to implement the message queue’s not-empty and not-full monitor conditions:

In the gateway application a POSIX mutex [IEEE96] can be used to implement the message queue’s monitor lock. A pair of POSIX condition variables can be used to implement the message queue’s not-empty and not-full monitor conditions:

Note that the not-empty and not-full monitor conditions both share the same monitor lock.

The structure of the Monitor Object pattern is illustrated in the following class diagram:

The collaborations between participants in the Monitor Object pattern divide into four phases:

Four activities illustrate how to implement the Monitor Object pattern.

In our gateway example, each consumer handler contains a message queue and a TCP connection. The message queue can be implemented as a monitor object that buffers messages it receives from supplier handler threads. This buffering helps prevent the entire gateway process from blocking whenever consumer handler threads encounter flow control on TCP connections to their remote consumers. The following C++ class defines the interface for our message queue monitor object:

In our gateway example, each consumer handler contains a message queue and a TCP connection. The message queue can be implemented as a monitor object that buffers messages it receives from supplier handler threads. This buffering helps prevent the entire gateway process from blocking whenever consumer handler threads encounter flow control on TCP connections to their remote consumers. The following C++ class defines the interface for our message queue monitor object:

class Message_Queue {

public:

enum { MAX_MESSAGES = /* … */; };

// The constructor defines the maximum number

// of messages in the queue. This determines

// when the queue is ‘full.’

Message_Queue (size_t max_messages = MAX_MESSAGES);

// Put the <Message> at the tail of the queue.

// If the queue is full, block until the queue

// is not full.

/* synchronized */ void put (const Message &msg);

// Get the <Message> from the head of the queue

// and remove it. If the queue is empty,

// block until the queue is not empty.

/* synchronized */ Message get ();

// True if the queue is empty, else false.

/* synchronized */ bool empty () const;

// True if the queue is full, else false.

/* synchronized */ bool full () const;

private:

// … described later …

};

The Message_Queue monitor object interface exports four synchronized methods. The empty() and full() methods are predicates that clients can use to distinguish three internal queue states: empty, full, and neither empty nor full. The put() and get() methods enqueue and dequeue messages into and from the queue, respectively, and will block if the queue is full or empty.

Two conventions, based on the Thread-Safe Interface pattern (345), can be used to structure the separation of concerns between interface and implementation methods in a monitor object:

Similarly, in accordance with the Thread-Safe Interface pattern, implementation methods should not call any synchronized methods defined in the class interface. This restriction helps to avoid intra-object method deadlock or unnecessary synchronization overhead.

In our gateway, the Message_Queue class defines four implementation methods: put_i(), get_i(), empty_i(), and full_ i():

In our gateway, the Message_Queue class defines four implementation methods: put_i(), get_i(), empty_i(), and full_ i():

class Message_Queue {

public:

// … See above …

private:

// Put the <Message> at the tail of the queue, and

// get the <Message> at its head, respectively.

void put_i (const Message &msg);

Message get_i ();

// True if the queue is empty, else false.

bool empty_i () const;

// True if the queue is full, else false.

bool full_i () const;

};

Implementation methods are often non-synchronized. They must be careful when invoking blocking calls, because the interface method that called the implementation method may have acquired the monitor lock. A blocking thread that owned a lock could therefore delay overall program progress indefinitely.

A monitor object method implementation is responsible for ensuring that it is in a stable state before releasing its lock. Stable states can be described by invariants, such as the need for all elements in a message queue to be linked together via valid pointers. The invariant must hold whenever a monitor object method waits on the corresponding condition variable.

Similarly, when the monitor object is notified and the operating system thread scheduler decides to resume its thread, the monitor object method implementation is responsible for ensuring that the invariant is indeed satisfied before proceeding. This check is necessary because other threads may have changed the state of the object between the notification and the resumption. A a result, the monitor object must ensure that the invariant is satisfied before allowing a synchronized method to resume its execution.

A monitor lock can be implemented using a mutex. A mutex makes collaborating threads wait while the thread holding the mutex executes code in a critical section. Monitor conditions can be implemented using condition variables [IEEE96]. A condition variable can be used by a thread to make itself wait until a particular event occurs or an arbitrarily complex condition expression attains a particular stable state. Condition expressions typically access objects or state variables shared between threads. They can be used to implement the Guarded Suspension pattern [Lea99a].

In our gateway example, the Message_Queue defines its internal state, as illustrated below:

In our gateway example, the Message_Queue defines its internal state, as illustrated below:

class Message_Queue {

// … See above ….

private:

// … See above …

// Internal Queue representation omitted, could be a

// circular array or a linked list, etc.. …

// Current number of <Message>s in the queue.

size_t message_count_;

// The maximum number <Message>s that can be

// in a queue before it’s considered ‘full.’

size_t max_messages_;

// Mutex wrapper facade that protects the queue’s

// internal state from race conditions during

// concurrent access.

mutable Thread_Mutex monitor_lock_;

// Condition variable wrapper facade used in

// conjunction with <monitor_lock_> to make

// synchronized method threads wait until the queue

// is no longer empty.

Thread_Condition not_empty_;

// Condition variable wrapper facade used in

// conjunction with <monitor_lock_> to make

// synchronized method threads wait until the queue

// is no longer full.

Thread_Condition not_full_;

};

A Message_Queue monitor object defines three types of internal state:

The constructor of Message_Queue creates an empty queue and initializes the monitor conditions not_empty_ and not_full_:

The constructor of Message_Queue creates an empty queue and initializes the monitor conditions not_empty_ and not_full_:

Message_Queue::Message_Queue (size_t max_messages)

: not_full_ (monitor_lock_),

not_empty_ (monitor_lock_),

max_messages_ (max_messages),

message_count_ (0) { /* … */ }

In this example, both monitor conditions share the same monitor_lock_. This design ensures that Message_Queue state, such as the message_count_, is serialized properly to prevent race conditions from violating invariants when multiple threads try to put() and get() messages on a queue simultaneously.

In our Message_Queue implementation two pairs of interface and implementation methods check if a queue is empty, which means it contains no messages, or full, which means it contains max_messages_. We show the interface methods first:

In our Message_Queue implementation two pairs of interface and implementation methods check if a queue is empty, which means it contains no messages, or full, which means it contains max_messages_. We show the interface methods first:

bool Message_Queue::empty () const {

Guard<Thread_Mutex> guard (monitor_lock_);

return empty_i ();

}

bool Message_Queue::full () const {

Guard<Thread_Mutex> guard (monitor_lock_);

return full_i ();

}

These methods illustrate a simple example of the Thread-Safe Interface pattern (345). They use the Scoped Locking idiom (325) to acquire and release the monitor lock, then forward immediately to their corresponding implementation methods:

bool Message_Queue::empty_i () const {

return message_count_ == 0;

}

bool Message_Queue::full_i () const {

return message_count_ == max_messages_;

}

In accordance with the Thread-Safe Interface pattern, these implementation methods assume the monitor_lock_ is held, so they just check for the boundary conditions in the queue.

The put() method inserts a new Message, which is a class defined in the Active Object pattern (369), at the tail of a queue. It is a synchronized method that illustrates a more sophisticated use of the Thread-Safe Interface pattern (345):

void Message_Queue::put (const Message &msg) {

// Use the Scoped Locking idiom to

// acquire/release the <monitor_lock_> upon

// entry/exit to the synchronized method.

Guard<Thread_Mutex> guard (monitor_lock_);

// Wait while the queue is full.

while (full_i ()) {

// Release <monitor_lock_> and suspend the

// calling thread waiting for space in the queue.

// The <monitor_lock_> is reacquired

// automatically when <wait> returns.

not_full_.wait ();

}

// Enqueue the <Message> at the tail.

put_i (msg);

// Notify any thread waiting in <get> that

// the queue has at least one <Message>.

not_empty_.notify ();

} // Destructor of <guard> releases <monitor_lock_>.

Note how this public synchronized put() method only performs the synchronization and scheduling logic needed to serialize access to the monitor object and wait while the queue is full. Once there is room in the queue, put() forwards to the put_i() implementation method. This inserts the message into the queue and updates its book-keeping information. Moreover, the put_i() is not synchronized because the put() method never calls it without first acquiring the monitor_lock_. Likewise, the put_i() method need not check to see if the queue is full because it is not called as long as full_i() returns true.

The get() method removes the message at the front of the queue and returns it to the caller:

Message Message_Queue::get () {

// Use the Scoped Locking idiom to

// acquire/release the <monitor_lock_> upon

// entry/exit to the synchronized method.

Guard<Thread_Mutex> guard (monitor_lock_);

// Wait while the queue is empty.

while (empty_i ()) {

// Release <monitor_lock_> and suspend the

// calling thread waiting for a new <Message> to

// be put into the queue. The <monitor_lock_> is

// reacquired automatically when <wait> returns.

not_empty_.wait ();

}

// Dequeue the first <Message> in the queue

// and update the <message_count_>.

Message m = get_i ();

// Notify any thread waiting in <put> that the

// queue has room for at least one <Message>.

not_full_.notify ();

return m;

// Destructor of <guard> releases <monitor_lock_>.

}

As before, note how the synchronized get() interface method performs the synchronization and scheduling logic, while forwarding the dequeueing functionality to the get_i() implementation method.

Internally, our gateway contains instances of two classes, Supplier_Handler and Consumer_Handler. These act as local proxies [GoF95] [POSA1] for remote suppliers and consumers, respectively. Each Consumer_Handler contains a thread-safe Message_Queue object implemented using the Monitor Object pattern. This design decouples supplier handler and consumer handler threads so that they run concurrently and block independently. Moreover, by embedding and automating synchronization inside message queue monitor objects, we can protect their internal state from corruption, maintain invariants, and shield clients from low-level synchronization concerns.

The Consumer_Handler is defined below:

class Consumer_Handler {

public:

// Constructor spawns a thread and calls <svc_run>.

Consumer_Handler ();

// Put <Message> into the queue monitor object,

// blocking until there’s room in the queue.

void put (const Message &msg) {

message_queue_.put (msg);

}

private:

// Message queue implemented as a monitor object.

Message_Queue message_queue_;

// Connection to the remote consumer.

SOCK_Stream connection_;

// Entry point to a distinct consumer handler thread.

static void *svc_run (void *arg);

};

Each Supplier_Handler runs in its own thread, receives messages from its remote supplier and routes the messages to the designated remote consumers. Routing is performed by inspecting an address field in each message, which is used as a key into a routing table that maps keys to Consumer_Handlers.

Each Consumer_Handler is responsible for receiving messages from suppliers via its put() method and storing each message in its Message_Queue monitor object:

void Supplier_Handler::route_message (const Message &msg)

{

// Locate the appropriate <Consumer_Handler> based

// on address information in the <Message>.

Consumer_Handler *consumer_handler =

routing_table_.find (msg.address ());

// Put <Message> into the <Consumer Handler>, which

// stores it in its <Message Queue> monitor object.

consumer_handler->put (msg);

}

To process the messages placed into its message queue by Supplier_Handlers, each Consumer_Handler spawns a separate thread of control in its constructor using the Thread_Manager class defined in the Wrapper Facade pattern (47), as follows:

Consumer_Handler::Consumer_Handler () {

// Spawn a separate thread to get messages from the

// message queue and send them to the remote consumer.

Thread_Manager::instance ()->spawn (&svc_run, this);

}

This new Consumer_Handler thread executes the svc_run() entry point. This is a static method that retrieves routing messages placed into its message queue by Supplier_Handler threads and sends them over its TCP connection to the remote consumer:

void *Consumer_Handler::svc_run (void *args) {

Consumer_Handler *this_obj =

static_cast<Consumer_Handler *> (args);

for (;;) {

// Blocks on <get> until next <Message> arrives.

Message msg = this_obj->message_queue_.get ();

// Transmit message to the consumer.

this_obj->connection_.send (msg, msg.length ());

}

}

The SOCK_Stream’s send() method can block in a Consumer_Handler thread. It will not affect the quality of service of other Consumer_Handler or Supplier_Handler threads, because it does not share any data with the other threads. Similarly, Message_Queue::get() can block without affecting the quality of service of other threads, because the Message_Queue is a monitor object.Supplier_Handlers can thus insert new messages into the Consumer_Handler’s Message_Queue via its put() method without blocking indefinitely.

Timed Synchronized Method Invocations. Certain applications require ‘timed’ synchronized method invocations. This feature allows them to set bounds on the time they are willing to wait for a synchronized method to enter its monitor object’s critical section. The Balking pattern described in [Lea99a] can be implemented using timed synchronized method invocations.

The Message_Queue monitor object interface defined earlier can be modified to support timed synchronized method invocations:

The Message_Queue monitor object interface defined earlier can be modified to support timed synchronized method invocations:

class Message_Queue {

public:

// Wait up to the <timeout> period to put <Message>

// at the tail of the queue.

void put

(const Message &msg, Time_Value *timeout = 0);

// Wait up to the <timeout> period to get <Message>

// from the head of the queue.

Message get (Time_Value *timeout = 0);

};

If timeout is 0 then both get() and put() will block indefinitely until a message is either inserted into or removed from a Message_Queue monitor object. If the time-out period is non-zero and it expires, the Timedout exception is thrown. The client must be prepared to handle this exception.

The following illustrates how the put() method can be implemented using the timed wait feature of the Thread_Condition condition variable wrapper outlined in implementation activity 3 (408):

void Message_Queue::put

(const Message &msg, Time_Value *timeout)

/* throw (Timedout) */ {

// … Same as before …

while (full_i ())

not_full_.wait (timeout);

// … Same as before …

}

While the queue is full this ‘timed’ put() method releases monitor_lock_ and suspends the calling thread, to wait for space to become available in the queue or for the timeout period to elapse. The monitor_lock_ will be re-acquired automatically when wait() returns, regardless of whether a time-out occurred or not.

Strategized Locking. The Strategized Locking pattern (333) can be applied to make a monitor object implementation more flexible, efficient, reusable, and robust. Strategized Locking can be used, for example, to configure a monitor object with various types of monitor locks and monitor conditions.

The following template class uses generic programming techniques [Aus98] to parameterize the synchronization aspects of a Message_Queue:

The following template class uses generic programming techniques [Aus98] to parameterize the synchronization aspects of a Message_Queue:

template <class SYNCH_STRATEGY> class Message_Queue {

private:

typename SYNCH_STRATEGY::Mutex monitor_lock_;

typename SYNCH_STRATEGY::Condition not_empty_;

typename SYNCH_STRATEGY::Condition not_full_;

// …

};

Each synchronized method is then modified as shown by the following empty() method:

template <class SYNCH_STRATEGY>

bool Message_Queue<SYNCH_STRATEGY>::empty () const {

Guard<SYNCH_STRATEGY::Mutex> guard (monitor_lock_);

return empty_i ();

}

To parameterize the synchronization aspects associated with a Message_Queue, we can define a pair of classes, MT_Synch and NULL_SYNCH that typedef the appropriate C++ traits:

class MT_Synch {

public:

// Synchronization traits.

typedef Thread_Mutex Mutex;

typedef Thread_Condition Condition;

};

class Null_Synch {

public:

// Synchronization traits.

typedef Null_Mutex Mutex;

typedef Null_Thread_Condition Condition;

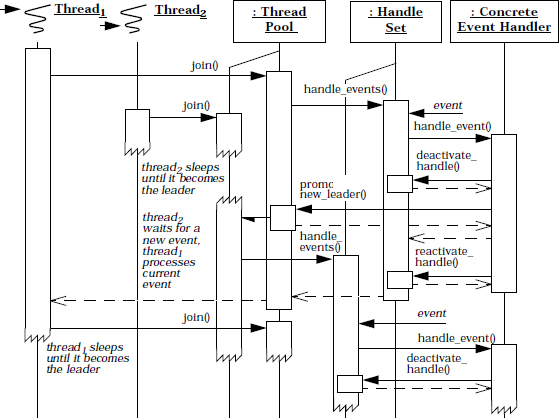

};