A defining characteristic of encrypted information, one that helps distinguish it from cleartext or encoded data, is the randomness of the data at the character (byte) level. Entropy is a statistical measure of the randomness of a dataset.

In network communications and file storage, which are based on bytes of eight bits, the maximum entropy is eight bits per character. This maximum is reached only when all 256 possible byte values occur with the same frequency in the sample. An entropy lower than six may indicate that the sample is not encrypted but obfuscated or encoded, or that the encryption algorithm used may be vulnerable to cryptanalysis.
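For reference, the figure discussed here is the Shannon entropy of the byte-value frequencies. If $p_i$ is the fraction of bytes in the sample that have value $i$ (terms with $p_i = 0$ contribute nothing):

$$
H = -\sum_{i=0}^{255} p_i \log_2 p_i \quad \text{bits per byte}
$$

The maximum is reached when every byte value is equally frequent, that is, $p_i = 1/256$:

$$
H_{\max} = -\sum_{i=0}^{255} \frac{1}{256} \log_2 \frac{1}{256} = \log_2 256 = 8
$$

More generally, data that uses only $k$ distinct symbols can never exceed $\log_2 k$ bits per character. This is one reason why values around or below six point toward encoding: Base64 uses 64 symbols and printable ASCII uses 95:

$$
H \le \log_2 k, \qquad \log_2 64 = 6, \qquad \log_2 95 \approx 6.57
$$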

In Kali Linux, you can use ent to calculate the entropy of a file. It is not installed by default, but it is available in the apt repositories:

    apt-get update
    apt-get install ent

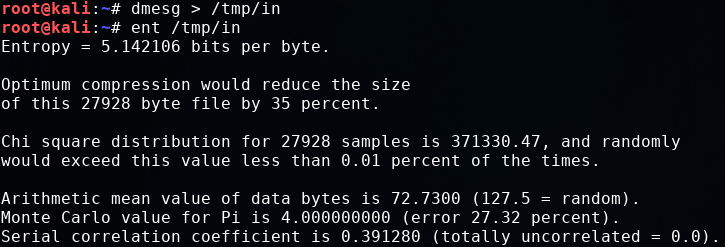
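To make clear what the Entropy line of ent measures, here is a minimal sketch of the same Shannon calculation using only the POSIX od and awk utilities. The function name file_entropy is hypothetical and is not part of ent or Kali. It reports only entropy, none of ent's other statistics (chi-square, arithmetic mean, Monte Carlo value for Pi, serial correlation).

```shell
#!/bin/sh
# Hypothetical helper (not part of ent): prints the Shannon entropy of a
# file in bits per byte, the quantity ent reports on its "Entropy" line.
file_entropy() {
    # od -An -v -tu1: dump every byte as an unsigned decimal (0-255),
    # without offsets (-An) and without collapsing repeated lines (-v).
    od -An -v -tu1 "$1" | awk '
    {
        # Count how often each byte value occurs.
        for (i = 1; i <= NF; i++) { count[$i]++; total++ }
    }
    END {
        # An empty file has no randomness to measure.
        if (total == 0) { printf "%.6f\n", 0; exit }
        h = 0
        for (b in count) {
            p = count[b] / total
            # awk log() is the natural log; divide by log(2) for bits.
            h -= p * log(p) / log(2)
        }
        printf "%.6f\n", h
    }'
}
```

For example, file_entropy /tmp/out on 1 MB read from /dev/urandom should print a value just below 8, and its result for any file should agree closely with the Entropy value ent prints for that file.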

As a proof of concept (PoC), let's run ent on a cleartext sample: the output of dmesg (the kernel message buffer), which contains a large amount of text, including numbers and symbols:

    dmesg > /tmp/in
    ent /tmp/in

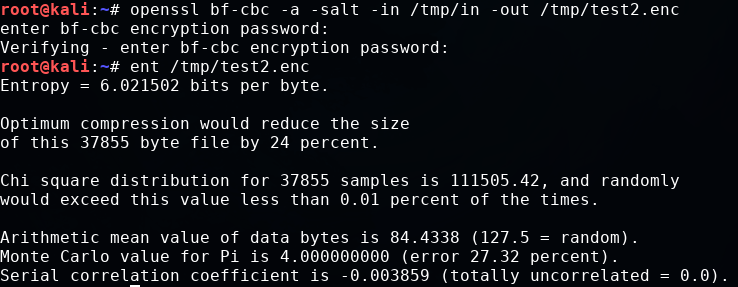

Next, let's encrypt the same information and calculate its entropy. In this example, we'll use Blowfish in CBC mode:

    openssl bf-cbc -a -salt -in /tmp/in -out /tmp/test2.enc
    ent /tmp/test2.enc

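The entropy increases, but it is not as high as we would expect from encrypted data. This is because the -a option Base64-encodes the ciphertext, so the output contains only printable ASCII characters (the 64 Base64 symbols plus line breaks and padding), which limits its entropy to roughly six bits per character. As a final test, let's use Linux's built-in random number generator: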

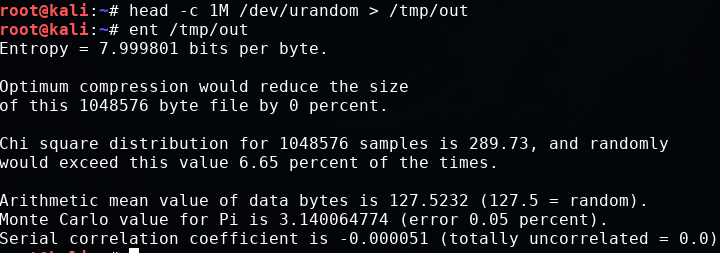

    head -c 1M /dev/urandom > /tmp/out
    ent /tmp/out

Ideally, the output of a strong encryption algorithm should have an entropy very close to eight bits per byte, making it indistinguishable from random data.