CHAPTER 11

MAKE DEPENDABLE DECISIONS

Jeremy Garritano, Purdue University

Learning Objectives

So that you can guide students to think critically about information they locate to support a design project, upon reading this chapter you should be able to

• Outline the major challenges student design teams face in determining the quality of information from various sources

• List six criteria for determining the trustworthiness of information and describe the significance of each

• Explain how to apply three techniques for evaluating the quality of potential or proposed solutions in order to make dependable decisions

INTRODUCTION

Having synthesized knowledge of the specific needs of the stakeholders (Chapter 7), the context of the design task (Chapter 8), professional requirements and best practices for performance (Chapter 9), and the universe of previously developed solutions (Chapter 10), student teams will then systematically choose the solution that best fits their situation. This is an important step in the design process because

• designers can drive further efficiency or economy in implementation by comparing their ideas and solutions to those of others;

• designers will spend less time in testing or deployment since they will have eliminated less promising solutions and false leads early on in the process;

• aligning solutions with stakeholder needs will improve stakeholder satisfaction and acceptance of the final design solution.

The selection of potential solutions relies on evaluating the solutions on both nontechnical and technical bases. A number of evaluation and comparison activities, in order of increasing complexity, are discussed in this chapter.

COMMON CHALLENGES FOR STUDENTS

Students are aware that information found on a freely available website differs from information found in a library database. A study by Head and Eisenberg (2010) confirms that students scrutinize public websites more than library materials, applying seven or more evaluation standards to public websites and four or fewer to library materials. However, students' justification of the quality of an information resource can still be very shallow, sometimes amounting to nothing more than “I know good information when I see it.” While various criteria for examining the trustworthiness of a source might seem obvious (e.g., who wrote it, what their credentials are, how old the information is), students may not slow down long enough to consider each criterion. A recent study indicates that undergraduate students do “not necessarily apply the selection criteria that they claimed to be important” (Kim & Sin, 2011, p. 184) when evaluating information resources. Also, in the digital age it can be difficult to find the details (such as the author or the date) needed to apply each criterion to a particular source.

Databases that offer easily identifiable fields, such as the author, the author's organization, and the date of publication, are a great help here compared with searching the open Web through a search engine.

When comparing potential solutions, students may also have difficulty extracting the technical information needed to compare the solutions on an equal footing. Students are not experts in the field, and reading technical literature can be daunting. Additionally, the needed information is rarely found in a single source, so students often need to piece together information from multiple sources in order to conduct a thorough analysis. There will also be gaps in knowledge, and students become frustrated when they find information on one aspect of a solution (say, monetary cost or environmental impact) but cannot find the same information for another solution. Finally, while not the same as a gap in knowledge, distinguishing latent (implicit) information from the explicit data described in a solution can also present a challenge. Not every condition can be investigated during an experiment, so even if a solution or piece of equipment seems viable given favorable results in an article or report, it may not withstand the particular environmental conditions of the new application, for example, if the team is designing for an extremely cold environment or one exposed to high levels of moisture. When evaluating potential solutions, it is therefore important to read between the lines and identify assumptions that may have been made, even unintentionally. For example, materials tested outdoors in the Southern United States might rarely experience below-freezing temperatures and could prove problematic when installed in the Northeastern United States.

EVALUATING THE TRUSTWORTHINESS OF INFORMATION

Potential solutions gathered from various sources often vary widely in quality. Any information used to evaluate potential design solutions must be vetted for its trustworthiness and authority. Six basic criteria for doing so (authority, accuracy, objectivity, currency, scope/depth/breadth, and intended audience/level of information) are discussed below. These criteria have been adapted and expanded from a list of five criteria for evaluating Internet resources suggested by Metzger (2007).

Authority

Students must consider the author/creator of the source, including credentials, qualifications, how closely they are associated with the original research, and whether they have been sponsored or endorsed by an institution or organization.

In finding research articles related to current technologies for distillation columns, how accepting of the claims of column efficiency should a student be if the author were a process engineer working at a petroleum company? A salesperson working at a company that manufactures the columns being described? A chemical engineering professor at a university that has a lengthy history of publishing on column efficiencies?

A student finds a potential solution for increasing solar cell efficiency in a trade magazine. Is the author of the article a journalist reporting on the solution, or is the author the originator of the solution? The student should follow the path back to the original research to read about it firsthand.

Accuracy

Students must consider whether the conclusions are appropriate and consistent given the wider body of knowledge and whether the claims made are supported by the evidence provided.

For many research publications, students should pay attention to sections such as the introduction, literature review, background, and conclusion, to see how authors are characterizing their work compared to that previously reported. Claims of breakthroughs or results inconsistent with past research may need to be verified by additional sources that confirm the initial claims. Bibliographies or works cited lists can be consulted for additional verification.

Objectivity (of Both the Author/Creator and the Publisher)

Students must consider whether the author/creator/publisher has a mission/agenda/bias that would raise doubts as to the credibility of the information and determine whether there are any conflicts of interest, such as those arising from funding sources, sponsoring organizations, or membership in special interest groups.

In researching existing technologies and safety issues related to hydraulic fracturing, a student finds reports from the EPA (Environmental Protection Agency), Chevron, and a website called The True Cost of Chevron. How would knowing that the EPA is a government organization charged with investigating and reporting on environmental issues, that Chevron is a company that conducts hydraulic fracturing, and that the final website is supported by a variety of nonprofit organizations protesting hydraulic fracturing impact the student’s view of the objectivity of each report? How might the student reconcile contradictory information?

Currency

Students must consider not only the date when the information was published but also the date when the data was actually collected. Would an older solution continue to meet standards, laws, and regulations enacted since its publication? Should older solutions be reexamined in the context that these solutions may have been initially overlooked or are now considered viable given current technologies or social/economic/political trends?

Review articles, while useful, may cover a wide range of research published over a decade or more. When referencing tables or figures that are published in these articles, students must be careful to note when the actual data was published if the author is reprinting or collecting previously published data.

Students require guidance on what is considered current in their discipline. Knowing how quickly the electronics field makes advances, would a report on semiconductors that is 5 years old be considered current? What if the report were 10 years old? What about in other rapidly advancing fields such as nanotechnology or biotechnology?

Scope/Depth/Breadth

Students must consider how specific the solution is compared to the desired application and under what variety of conditions the solution has been tested or implemented in order to extrapolate its applicability.

A student may find a report of new jet fighter wing designs in a conference proceeding. The student should be careful in judging whether the solution applies to their own design, because some conference presentations are intended to share preliminary results with the engineering community; these results may not have been fully tested, especially across the wider range of variables (such as speed, temperature, or altitude) that may matter for the student's artifact.

Intended Audience/Level of Information

Students must consider the intended audience of the information source, which may be written for the general public, an organization of professionals, or government officials. How do different audiences affect the presentation of the solution?

A solution a student may find described in a popular science and technology publication such as Scientific American may be oversimplified since its audience is meant to be the general public. The description may be incomplete, especially regarding specific details that would be required to truly compare the solution against others gathered.

Having students search in quality databases, such as those provided by libraries through institutional subscriptions, can often reduce the amount of time students must spend evaluating potential solutions. Results from searches on the Internet through general search engines, on the other hand, deserve enhanced scrutiny using the previously mentioned evaluation criteria. In situations where students may not have as much technical background to truly evaluate potential solutions, evaluating some of these nontechnical aspects can be just as useful in narrowing down a lengthy list of results. (See Hjørland [2012] for a concise summary of 12 ways in which information sources can be evaluated.)
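Because students may not slow down long enough to consider each criterion, one way to make all six criteria explicit is to keep a per-source annotation record that flags any criterion left blank. The Python sketch below is one possible format, not a prescribed one; the class name, its methods, and the example notes are hypothetical.

```python
from dataclasses import dataclass, field

# The six criteria discussed in this section.
CRITERIA = (
    "authority",
    "accuracy",
    "objectivity",
    "currency",
    "scope/depth/breadth",
    "intended audience/level of information",
)


@dataclass
class SourceAnnotation:
    """Notes on one information source, one entry per evaluation criterion."""
    citation: str
    notes: dict[str, str] = field(default_factory=dict)

    def annotate(self, criterion: str, note: str) -> None:
        """Record a note, rejecting anything that is not one of the six criteria."""
        if criterion not in CRITERIA:
            raise ValueError(f"unknown criterion: {criterion!r}")
        self.notes[criterion] = note

    def unexamined(self) -> list[str]:
        """Criteria the student has not yet written anything about."""
        return [c for c in CRITERIA if not self.notes.get(c, "").strip()]


# Hypothetical trade-magazine article on solar cell efficiency
article = SourceAnnotation("Trade magazine article on solar cell efficiency (hypothetical)")
article.annotate("authority", "Written by a journalist, not the originator; trace the original research.")
article.annotate("intended audience/level of information", "Industry readers; few technical details.")
print(article.unexamined())
```

The remaining criteria (accuracy, objectivity, currency, scope/depth/breadth) are listed as still needing attention before the source is relied on.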

ASSESSING THE CONTEXTUAL APPLICABILITY OF DESIGN INFORMATION

To be useful, information must have technical relevance in the particular design context. The types of questions that get at the technical relevance of information include the following:

• Is this the appropriate technical information for the design decision at hand?

• Has this technology (concept, material, component, etc.) been used successfully in a comparable context? Or is this a new, untested technology?

• Does this technology address the needs of the client and other stakeholders?

• Are there negative social or environmental aspects to this technology?

• What are the life cycle costs associated with this technology or design solution?

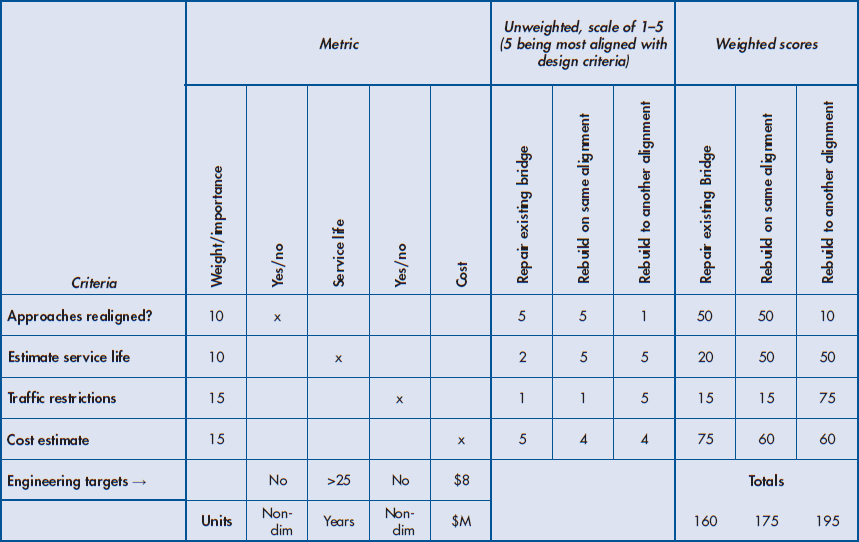

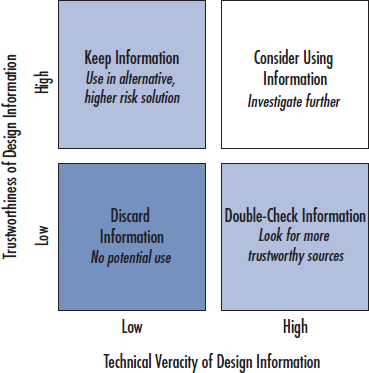

Broadly stated, a student can plot the potential value of a piece of design information along a continuum of how trustworthy it is and how relevant it is to the particular design problem. The essential design decision about whether or not to use particular information is depicted in Figure 11.1.

FIGURE 11.1 Design information decision grid.

The course of action students should take depends on which of the four quadrants a particular piece of design information falls in. For example, if the design idea or technology found is based on untrustworthy information and is deemed to have low relevance to the design task at hand, it can be deemed not viable and discarded from further consideration. Conversely, information from trustworthy sources that offers highly relevant solutions deserves further consideration, and additional information may need to be sought.

If the idea or technology is highly relevant and shows high technical potential but comes from an untrustworthy source (say, a blog), the student should proceed cautiously and seek confirmation of its technical potential from additional, trustworthy information sources. For example, the blog post might mention published research, or its author might be a reputable researcher or a designer with a proven track record. In that case, the student could track down the original research using an author search in a library database.

Conversely, if the information comes from a trustworthy source but is not particularly relevant to the context, the student should keep it for further consideration, possibly for use in an unconventional approach that, while unproven (and thus riskier), might lead to a more innovative, game-changing design solution. Consider a student investigating recycling efforts on college campuses. A peer institution might have a successful recycling program but no print student newspaper, so, unlike the student's campus, it does not need to recycle newsprint. Although the information comes from a high-quality source, the peer institution's solution does not handle every situation the student is investigating. Particular aspects of the peer's program may be worth investigating, but as an overall program it is not the best match.
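The four quadrants of the design information decision grid (Figure 11.1) can be summarized as a small decision rule. This is a minimal Python sketch under stated assumptions: trustworthiness and relevance are given hypothetical scores between 0 and 1, and a hypothetical cutoff of 0.5 separates "low" from "high." In practice, placing information on the grid is a qualitative judgment rather than a computed score.

```python
from enum import Enum


class Action(Enum):
    """Course of action for each quadrant of the decision grid."""
    PURSUE = "consider further; seek additional detail"
    VERIFY = "proceed cautiously; confirm with trustworthy sources"
    KEEP = "keep for a possible unconventional approach"
    DISCARD = "not viable; discard"


def grid_action(trust: float, relevance: float, cutoff: float = 0.5) -> Action:
    """Map hypothetical 0-1 trust and relevance scores to a grid quadrant.

    The 0-1 scale and the 0.5 cutoff are illustrative assumptions.
    """
    if not (0.0 <= trust <= 1.0 and 0.0 <= relevance <= 1.0):
        raise ValueError("scores must lie between 0 and 1")
    high_trust = trust >= cutoff
    high_relevance = relevance >= cutoff
    if high_trust and high_relevance:
        return Action.PURSUE
    if high_relevance:  # relevant, but from an untrustworthy source such as a blog
        return Action.VERIFY
    if high_trust:      # trustworthy, but not particularly relevant to the context
        return Action.KEEP
    return Action.DISCARD


# Hypothetical examples echoing the text
print(grid_action(trust=0.2, relevance=0.9).value)  # blog post describing a promising idea
print(grid_action(trust=0.9, relevance=0.3).value)  # peer campus recycling program
```

Keeping the cutoff as a parameter makes it easy to see how sensitive a classification is to where "high" begins, which is itself a prompt for discussion rather than a rule.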

Potential solutions gathered from various sources often vary widely in their overall quality, defined here as the combination of the trustworthiness of the information and its applicability. Any information used to evaluate potential design solutions must be well documented and recorded so that appropriate comparisons can be made. What follows are three methods for comparing the quality of various solutions in order to narrow down the solutions to be considered. Each method is more sophisticated than the one before it and therefore requires correspondingly more accurate, detailed, and trustworthy information about each potential solution.

Method 1: Pro/Con Evaluation

In Method 1, potential solutions are listed in a table with separate columns related to the pros and cons of each solution (Pahl & Beitz, 1996). An example is the rehabilitation or replacement of an aging bridge across a river. If there are actually two bridges, one for traffic in each direction, there are a variety of ways the bridges can be rehabilitated or replaced (see Table 11.1).

TABLE 11.1 Pros and Cons Evaluation of Rehabilitating or Replacing an Existing Bridge

| Design/Solution | Pros | Cons |
|---|---|---|
| Rehabilitate existing bridge | Cheapest option; least disturbance to local geography | Lowest estimated service life; existing bridge would need to be thoroughly analyzed before repair; traffic diverted to other bridge during rehabilitation |
| Remove existing bridge; rebuild on same alignment | Longest estimated service life | Traffic diverted to other bridge during construction |
| Remove existing bridge; build to another alignment | Longest estimated service life; no traffic restrictions during construction | Highest cost option; greatest disturbance to local geography |

Only minimal and not necessarily complete information is needed for each possible solution. This method provides a very simple way to compare potential solutions on a rough scale and can reveal some general trends of the strengths and weaknesses of alternatives, but it does not offer a more data-driven or objective analysis.

Method 2: Pugh Analysis

Method 2, a Pugh Analysis (Pugh, 1991), can take information in a format similar to that of Method 1 but compares each potential solution either with the current situation or with a proposed solution that the student wants to use as the baseline for all other solutions. More specific information is needed about each solution, because the student rates each criterion of a new solution against the baseline (the existing solution or an initial proposed solution): a “+” for better than the baseline, a “-” for worse than the baseline, or an “s” for the same as the baseline. These ratings are then summed to give a final score, and the results are reflected upon. In the bridge example, if the alternatives are compared against simply rehabilitating the existing bridge, a Pugh Analysis might look like the one shown in Table 11.2.

TABLE 11.2 Pugh Analysis of Rehabilitating or Replacing Existing Bridge

| Criterion | Proposed Solution: Repair Existing Bridge | Alternative 1: Rebuild on Same Alignment | Alternative 2: Rebuild to Another Alignment |
|---|---|---|---|
| Approaches realigned? | No | s | - |
| Estimated service life | 10 years | + | + |
| Traffic restrictions during construction | 1 lane, northbound and southbound | s | + |
| Cost estimate | $8 M | - | - |
| Sum (+) | | 1 | 2 |
| Sum (-) | | 1 | 2 |
| Sum | | 0 | 0 |

NOTE: “+” means the criterion is better than for the proposed solution; “-” means the criterion is worse than for the proposed solution; “s” means the criterion is the same as for the proposed solution. These ratings are then summed: “+” = 1, “-” = -1, and “s” = 0.

To create this table, the student would need detailed information on costs and service life, for example, in order to determine whether each criterion of an alternative is better or worse than that of the proposed solution. The summations give a more objective picture than the pro/con analysis of how the alternatives compare with the proposed solution.
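The Pugh summation can be reproduced in a few lines. This Python sketch encodes the ratings from Table 11.2 relative to the baseline (repairing the existing bridge), assuming that the “Approaches realigned?” row reads “s” for Alternative 1 and “-” for Alternative 2, which is the reading consistent with the table's Sum rows. The function name `pugh_summary` is hypothetical.

```python
# "+" = better than baseline, "-" = worse, "s" = same (see the note to Table 11.2).
SCORE = {"+": 1, "-": -1, "s": 0}

ratings = {
    "Alternative 1: Rebuild on same alignment": {
        "Approaches realigned?": "s",
        "Estimated service life": "+",
        "Traffic restrictions during construction": "s",
        "Cost estimate": "-",
    },
    "Alternative 2: Rebuild to another alignment": {
        "Approaches realigned?": "-",
        "Estimated service life": "+",
        "Traffic restrictions during construction": "+",
        "Cost estimate": "-",
    },
}


def pugh_summary(marks: dict[str, str]) -> tuple[int, int, int]:
    """Return (count of +, count of -, net score) for one alternative."""
    plus = sum(1 for m in marks.values() if m == "+")
    minus = sum(1 for m in marks.values() if m == "-")
    net = sum(SCORE[m] for m in marks.values())
    return plus, minus, net


for name, marks in ratings.items():
    plus, minus, net = pugh_summary(marks)
    print(f"{name}: sum(+)={plus}, sum(-)={minus}, net={net}")
```

Both alternatives net to 0, which is exactly the "no clear winner" situation that motivates weighting the criteria.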

Method 3: Weighted Decision Making

Method 3 builds on the Pugh Analysis but adds the dimension of weighting the criteria to better align the proposed solutions with the needs of the stakeholders (Cross, 2008; Pahl & Beitz, 1996). This is especially helpful when a Pugh Analysis produces no clear winner. (For example, in Table 11.2 the two alternatives differ, but both have a net score of 0, so it could be argued that neither is clearly better than the other.) There are eight steps to constructing a weighted decision matrix:

1. List criteria (based on stakeholder needs).

2. Weight these criteria.

3. Determine metrics: What will be measured to determine whether each criterion has been met?

4. Determine targets: Is there an optimal value for some of the metrics? What is the optimal value? (For some metrics, there will not be a target value.)

5. Determine relationships between criteria/needs and metrics: There might be one metric for each criterion, one metric that addresses multiple criteria, or several metrics that measure different dimensions of a single criterion. Use an “x” to denote that a metric is related to a particular criterion. If no metric is related to a particular criterion, add one.

6. Give scores to the alternatives based on actual data, whether gathered from existing research or determined by experiment/prototype.

7. Calculate the weighted total for each alternative: First calculate the weighted score for each criterion for each alternative, then sum these to obtain the weighted total for each alternative.

8. Reflect on the results: Do they make sense?
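Steps 6 and 7 amount to a weighted sum. The Python sketch below applies them to the bridge example, but the weights (with traffic restrictions and cost weighted most heavily, in line with the stakeholder priorities described for this example) and the 1-5 scores are hypothetical illustrations, not the values in Figure 11.2. The criterion "disturbance to local geography" is taken from Table 11.1.

```python
# Hypothetical weights (step 2); they sum to 1.
WEIGHTS = {
    "service life": 0.20,
    "traffic restrictions during construction": 0.35,
    "cost": 0.30,
    "disturbance to local geography": 0.15,
}

# Hypothetical 1-5 scores (step 6); 5 is always the most favorable,
# so a cheaper option scores higher on "cost".
SCORES = {
    "Repair existing bridge": {
        "service life": 1,
        "traffic restrictions during construction": 2,
        "cost": 5,
        "disturbance to local geography": 5,
    },
    "Rebuild on same alignment": {
        "service life": 5,
        "traffic restrictions during construction": 2,
        "cost": 3,
        "disturbance to local geography": 3,
    },
    "Rebuild to another alignment": {
        "service life": 5,
        "traffic restrictions during construction": 5,
        "cost": 2,
        "disturbance to local geography": 1,
    },
}


def weighted_total(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Step 7: weight each criterion score, then sum across criteria."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"no score for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9, "weights should sum to 1"

totals = {alt: weighted_total(s, WEIGHTS) for alt, s in SCORES.items()}
for alt, total in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{alt:30s} {total:.2f}")
```

With these made-up numbers, rebuilding to another alignment ranks first (3.50), ahead of repair (3.15) and rebuilding on the same alignment (3.05). Step 8 still applies: the ranking is only as sound as the weights and scores behind it.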

This approach offers the potential for objectivity if the weights are determined without any particular solution in mind, ideally using information gathered from stakeholders to determine the criteria and weights (see Chapters 7 and 8). In the bridge example, perhaps it is determined that, because of other construction projects under way in the city, traffic disruptions must be minimized. The criterion “traffic restrictions during construction” (see Tables 11.1 and 11.2) will therefore carry more weight than others. Costs are often a factor as well, so that criterion may also carry greater weight. Following the eight steps as described results in a weighted decision matrix (see Figure 11.2). In the bridge example shown in Figure 11.2, the weights given to the criteria cause the solution “rebuild to another alignment” to end up with the highest score. Students then need to reflect on what the scores really mean and whether it makes sense that this appears to be the best solution to pursue. If it does not, the weights might be reviewed and/or additional information and metrics added to the analysis if gaps are identified. Students must be careful not to make modifications simply to raise the score of the solution preferred by either the designer or the stakeholders; the purpose of the matrix is to maintain as much objectivity as possible.

FIGURE 11.2 Weighted decision matrix for rehabilitating or replacing existing bridge.

EVALUATING WHEN THERE ARE GAPS IN KNOWLEDGE

The three methods discussed in the previous section might suggest that once data has been placed into a carefully ordered grid or table, a student can quickly read the table and decide on the best solution, even when no weighted decision making is involved. In reality, an analysis often contains gaps, and it is in these gaps that students can struggle. One of the main questions for students to answer is, Do I have sufficient trustworthy information to make a design decision that I can stake my reputation on? For example, one of the criteria for comparing solutions might be their environmental impact. From the data gathered, it might be extremely difficult to obtain this information for every solution, since some solutions might still be in development, have confidential test results, or not yet be fully implemented, especially in the context being considered. To help students in these gray areas, it is important to emphasize using existing knowledge and stakeholder needs to decide whether

• the particular gap in knowledge must be filled in order to continue. This might involve further searching for evidence or even contacting the people or company responsible for the solution in order to obtain the necessary information.

• assumptions can be made. Knowing how similar solutions behave, can an assumption be made about how one particular solution will behave compared with another known solution?

• the gap in knowledge can be ignored. Is the gap ultimately unimportant, or would the missing information not affect the desirability of the solution, so that it is not needed?

• stakeholders must be consulted. Is there enough uncertainty in the gap in knowledge that the stakeholders must review the importance or weight of the criterion in question?
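Before any totals are computed, the gaps themselves can be made visible so that one of the four decisions above is recorded for each. In the Python sketch below, unknown values are marked as `None`; the alternatives "Solution A/B/C," their scores, and the helper names are hypothetical.

```python
from enum import Enum
from typing import Optional

# alternative -> criterion -> score, where None marks a gap in knowledge
Matrix = dict[str, dict[str, Optional[float]]]


class GapDecision(Enum):
    """The four ways of handling a gap described in the text."""
    FILL = "fill the gap: search further or contact the originator"
    ASSUME = "make an assumption based on similar solutions"
    IGNORE = "ignore: the gap does not affect desirability"
    CONSULT = "consult stakeholders about the criterion's weight"


def find_gaps(matrix: Matrix) -> list[tuple[str, str]]:
    """List (alternative, criterion) cells whose value is unknown."""
    return [
        (alt, crit)
        for alt, row in matrix.items()
        for crit, value in row.items()
        if value is None
    ]


def unresolved(matrix: Matrix,
               decisions: dict[tuple[str, str], GapDecision]) -> list[tuple[str, str]]:
    """Gaps for which the team has not yet recorded a decision."""
    return [gap for gap in find_gaps(matrix) if gap not in decisions]


# Hypothetical 1-5 scores; Solution B is still in development,
# so its environmental impact is unknown.
matrix: Matrix = {
    "Solution A": {"cost": 4, "environmental impact": 3},
    "Solution B": {"cost": 5, "environmental impact": None},
    "Solution C": {"cost": 2, "environmental impact": 4},
}

print(find_gaps(matrix))
decisions = {("Solution B", "environmental impact"): GapDecision.CONSULT}
print(unresolved(matrix, decisions))
```

The code only tracks the decisions; choosing among them still depends on existing knowledge and stakeholder needs.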

Kirkwood and Parker-Gibson (2013) have detailed two comprehensive case studies for researching engineering information related to ecologically friendly plastics and biofuels, including evaluating information resources as a search progresses.

ACKNOWLEDGING SOURCES OF IDEAS

Once a set of potential solutions has been identified for further exploration, it is important to acknowledge the sources of those ideas throughout the design process. Stakeholders should be informed of the sources so that they can provide feedback or reveal any additional knowledge or conflicts of interest related to the selected solutions. If the solution is to be commercialized or intellectual property protection is to be pursued, it is important to document the prior art in order to determine what is original and what is already known. Intellectual property concerns may also prove to be obstacles to implementing or modifying existing solutions if those solutions are still protected and would require licensing from the patent assignees.

Also, when the quality of proposed solutions is evaluated through an analysis of criteria, such as a Pugh Analysis or a weighted decision matrix, it will be necessary to document the source from which the information for each criterion was derived. In the bridge example, information on the life of a new bridge may have come from a different source than the one that detailed its costs, and information on the life spans of the rehabilitated and new bridges may have come from different sources that used different methods for calculating anticipated lifetimes. In these cases, it is important to annotate or cite the source for each criterion in case the original source needs to be consulted again. Citation management tools were discussed in Chapter 6. When it comes to acknowledgment, the emphasis should not be on mastering any one citation style but on using citations and presenting them consistently, regardless of the style used.
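One lightweight way to keep a source attached to every criterion is to store each matrix entry together with its citation and flag entries that lack one. In this hypothetical Python sketch, the `Entry` class and `uncited` helper are illustrative; the values come from Table 11.2, and the citation string points to the report named in the Acknowledgments, from which the bridge data were adapted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    """One cell of a comparison matrix, with the source it came from."""
    value: str
    source: str  # citation or annotation; an empty string means "not yet documented"


def uncited(matrix: dict[str, dict[str, Entry]]) -> list[tuple[str, str]]:
    """Return (alternative, criterion) cells that lack a documented source."""
    return [
        (alt, crit)
        for alt, row in matrix.items()
        for crit, entry in row.items()
        if not entry.source.strip()
    ]


PARSONS = "Parsons report for INDOT, US 52-Wabash River Bridge Project (see Acknowledgments)"

matrix = {
    "Repair existing bridge": {
        "Estimated service life": Entry("10 years", PARSONS),
        "Cost estimate": Entry("$8 M", PARSONS),
        "Approaches realigned?": Entry("No", ""),  # source not yet recorded
    },
}

print(uncited(matrix))
```

Running such a check before the matrix is shared makes it easy to return to the original source for any value that is later questioned.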

SUMMARY

In this chapter we considered how information such as stakeholder needs, the context of the design task, and prior published work addressing similar problems can be used as inputs in order to select the most promising potential solutions for further consideration, as well as to compare these solutions to a current or proposed solution. We reviewed a list of criteria for evaluating the trustworthiness of a source as well as several techniques for comparing solutions based on their technical details. Once students identify the most appropriate approach, they can start to work on embodying their solution—that is, determining how they will actually implement their solution. This will involve gathering more detail-oriented information, such as selecting materials and components that will meet the design requirements, as discussed in the following chapter.

SELECTED EXERCISES

Exercise 11.1

As pre-work for a class, have students research a particular topic, such as efficiency of wind turbines or biodegradability of particular polymers, and collect what they feel are five highly relevant information sources. Have students annotate the resources using the six criteria discussed (authority, accuracy, objectivity, currency, scope/depth/breadth, and intended audience/level of information) to justify their relevance. In class, have students discuss in small groups their top source, their rationale for picking it, and the aspect of its quality they are most uncertain about.

Exercise 11.2

For a particular design problem, have students independently research potential solutions, each creating their own pros and cons list. Then in class, within design groups, have the students analyze the potential solutions, creating a Pugh Analysis or weighted decision matrix (depending on the complexity of the assignment and level of detail you require) to turn in by the end of class. Students will need to work together to agree on which solutions are better than the current model, as well as potentially create different weights, metrics, and targets based on existing knowledge, including information gathered from clients.

ACKNOWLEDGMENTS

The author acknowledges Monica Cardella, Purdue University, for the use of her instructions for constructing the weighted decision matrix. Data for the bridge repair/rehabilitation analyses was adapted from a report made by Parsons for the Indiana Department of Transportation, US 52-Wabash River Bridge Project, Des. No. 0400774, http://www.jconline.com/assets/PDF/BY1656611019.PDF, accessed July 3, 2013.

REFERENCES

Cross, N. (2008). Engineering design methods: Strategies for product design (4th ed.). Chichester, West Sussex, England: John Wiley & Sons Ltd.

Head, A. J., & Eisenberg, M. B. (2010). Truth be told: How college students evaluate and use information in the digital age. Project Information Literacy Progress Report, The Information School, University of Washington. Retrieved from http://projectinfolit.org/pdfs/PIL_Fall2010_Survey_FullReport1.pdf

Hjørland, B. (2012). Methods for evaluating information sources: An annotated catalogue. Journal of Information Science, 38(3), 258–268. http://dx.doi.org/10.1177/0165551512439178

Kim, K.-S., & Sin, S.-C. J. (2011). Selecting quality sources: Bridging the gap between the perception and use of information sources. Journal of Information Science, 37(2), 178–188. http://dx.doi.org/10.1177/0165551511400958

Kirkwood, P. E., & Parker-Gibson, N. T. (2013). Informing chemical engineering decisions with data, research, and government resources. In R. Beitle Jr. (Ed.), Synthesis lectures on chemical engineering and biochemical engineering. San Rafael, CA: Morgan & Claypool Publishers. http://www.morganclaypool.com/doi/abs/10.2200/S00482ED1V01Y201302CHE001

Metzger, M. J. (2007). Making sense of credibility on the Web: Models for evaluating online information and recommendations for future research. Journal of the American Society for Information Science and Technology, 58(13), 2078–2091. http://dx.doi.org/10.1002/asi.20672

Pahl, G., & Beitz, W. (1996). Engineering design: A systematic approach (K. Wallace, L. Blessing, & F. Bauert, Trans., 2nd ed.). K. Wallace (Ed.). London: Springer-Verlag.

Pugh, S. (1991). Total design: Integrated methods for successful product engineering. Wokingham, England: Addison-Wesley.