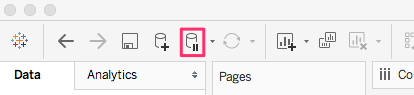

- We are using a live connection, which means that each interaction with a report will generate an SQL query and we should wait. To avoid this, we will pause Tableau Auto Updates, as shown in the following screenshot:

- Now, we can craft our report by dragging and dropping Dimensions and Measures to the canvas, as follows:

- You should add the following filters:

- C City: UNITED KI1 or UNITED KI5

- S City: UNITED KI1 or UNITED KI5

- D YEAR: Dec1997

Moreover, we should convert D Year into discrete and sort by Revenue.

- We should unpause auto updates and run our query. It takes ~18 seconds for my cluster. If we missed the time, we can log in to the AWS Console and navigate to the Redshift cluster | Queries Tab and see all executed queries, their time, and plan. You might see that, in the case of Tableau, we don't have actual queries; instead, we have something like fetch 10000 in SQL_CUR7. Tableau is using cursors and we can run the following query to see queries for currently active cursors:

SELECT

usr.usename AS username

, min(cur.starttime) AS start_time

, DATEDIFF(second, min(cur.starttime), getdate()) AS run_time

, min(cur.row_count) AS row_count

, min(cur.fetched_rows) AS fetched_rows

, listagg(util_text.text)

WITHIN GROUP (ORDER BY sequence) AS query

FROM STV_ACTIVE_CURSORS cur

JOIN stl_utilitytext util_text

ON cur.pid = util_text.pid AND cur.xid = util_text.xid

JOIN pg_user usr

ON usr.usesysid = cur.userid

GROUP BY usr.usename, util_text.xid;

- Let's run one more system query that will help us to identify the size of tables we are using in our Tableau data source, as follows:

select stv_tbl_perm.name as table, count(*) as mb from stv_blocklist, stv_tbl_perm

where stv_blocklist.tbl = stv_tbl_perm.id

and stv_blocklist.slice = stv_tbl_perm.slice and stv_tbl_perm.name in ('lineorder','part','dwdate','supplier')

group by stv_tbl_perm.name

order by 1 asc;

We can notice the size of the tables:

- lineorder=34311mb

- part=92mb

- dwdate=80mb

- supplier=76mb

Finally, we can tune our cluster to improve the performance of the queries.